B. F. Skinner

| |

|---|---|

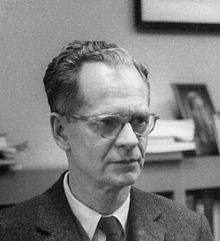

Skinner at the Harvard Psychology Department, c. 1950

| |

| Born |

Burrhus Frederic Skinner

March 20, 1904 |

| Died | August 18, 1990 (aged 86)

Cambridge, Massachusetts, U.S.

|

| Nationality | American |

| Alma mater | Hamilton College Harvard University |

| Known for | Operant conditioning Radical behaviorism Behavior analysis Verbal behavior |

| Spouse(s) | Yvonne (Eve) Blue (1936–1990) |

| Awards | National Medal of Science (1968) |

| Scientific career | |

| Fields | Psychology, linguistics, philosophy |

| Institutions | University of Minnesota Indiana University Harvard University |

| Influences | Charles Darwin Ivan Pavlov Ernst Mach Jacques Loeb Edward Thorndike William James Jean-Jacques Rousseau Henry David Thoreau |

| Influenced | Maxie Clarence Maultsby Jr. |

| Signature | |

| |

Burrhus Frederic Skinner (March 20, 1904 – August 18, 1990), commonly known as B. F. Skinner, was an American psychologist, behaviorist, author, inventor, and social philosopher. He was the Edgar Pierce Professor of Psychology at Harvard University from 1958 until his retirement in 1974.

Skinner considered free will an illusion and held that human action depends on the consequences of previous actions. If the consequences are bad, there is a high chance the action will not be repeated; if the consequences are good, the probability of the action being repeated increases. Skinner called this the principle of reinforcement.

To strengthen behavior, Skinner used operant conditioning, and he considered the rate of response to be the most effective measure of response strength. To study operant conditioning, he invented the operant conditioning chamber, also known as the Skinner Box, and to measure rate he invented the cumulative recorder. Using these tools, he and C. B. Ferster produced his most influential experimental work, which appeared in their book Schedules of Reinforcement (1957).

Skinner developed behavior analysis, called the philosophy of that science radical behaviorism, and founded a school of experimental research psychology, the experimental analysis of behavior. He imagined the application of his ideas to the design of a human community in his utopian novel, Walden Two, and his analysis of human behavior culminated in his work, Verbal Behavior. Skinner was a prolific author who published 21 books and 180 articles. Contemporary academia considers Skinner a pioneer of modern behaviorism, along with John B. Watson and Ivan Pavlov. A June 2002 survey listed Skinner as the most influential psychologist of the 20th century.

Biography

The gravestone of B.F. Skinner and his wife Eve at Mount Auburn Cemetery

Skinner was born in Susquehanna, Pennsylvania, to Grace and William Skinner. His father was a lawyer. He became an atheist after a Christian teacher tried to assuage his fear of the hell that his grandmother described. His brother Edward, two and a half years younger, died at age sixteen of a cerebral hemorrhage.

Skinner's closest friend as a young boy was Raphael Miller, whom he called Doc because his father was a doctor. Doc and Skinner became friends because of their parents’ religiousness and a shared interest in contraptions and gadgets. They set up a telegraph line between their houses to send messages to each other, although they had to call each other on the telephone because the messages sent back and forth were so confusing. During one summer, Doc and Skinner started an elderberry business, gathering berries and selling them door to door. They found that when they picked the ripe berries, the unripe ones came off the branches too, so they built a device to separate them. The device was a piece of metal bent to form a trough. They would pour water down the trough into a bucket; the ripe berries would sink into the bucket and the unripe ones would be pushed over the edge to be thrown away.

He attended Hamilton College in New York with the intention of becoming a writer. He found himself at a social disadvantage at Hamilton because of his intellectual attitude. While attending, he joined the Lambda Chi Alpha fraternity. Hamilton was known for being a strong fraternity college. Skinner thought that his fraternity brothers were respectful and did not haze or mistreat the newcomers; instead, they helped the other boys with courses or other activities. Freshmen were called "slimers" and had to wear small green knit hats and greet everyone that they passed for punishment. The year before Skinner entered Hamilton, a hazing accident caused the death of a student. The freshman was asleep in his bed when he was pushed onto the floor, where he smashed his head, resulting in his death. Skinner had a similar incident in which two freshmen captured him and tied him to a pole, where he was meant to stay all night, but he had a razor blade in his shoe for emergencies and managed to cut himself free.

He wrote for the school paper, but, as an atheist, he was critical of the traditional mores of his college. After receiving his Bachelor of Arts in English literature in 1926, he attended Harvard University, where he would later research, teach, and eventually become a prestigious board member. While he was at Harvard, a fellow student, Fred Keller, convinced Skinner that he could make an experimental science from the study of behavior. This led Skinner to invent his prototype for the Skinner Box and to join Keller in the creation of other tools for small experiments.

After graduation, he unsuccessfully tried to write a great novel while he lived with his parents, a period that he later called the Dark Years. He became disillusioned with his literary skills despite encouragement from the renowned poet Robert Frost, concluding that he had little world experience and no strong personal perspective from which to write. His encounter with John B. Watson's Behaviorism led him into graduate study in psychology and to the development of his own version of behaviorism.

Skinner received a PhD from Harvard in 1931, and remained there as a researcher until 1936. He then taught at the University of Minnesota at Minneapolis and later at Indiana University, where he was chair of the psychology department from 1946 to 1947, before returning to Harvard as a tenured professor in 1948. He remained at Harvard for the rest of his life. In 1973, Skinner was one of the signers of the Humanist Manifesto II.

In 1936, Skinner married Yvonne (Eve) Blue. The couple had two daughters, Julie (m. Vargas) and Deborah (m. Buzan). Yvonne Skinner died in 1997, and is buried in Mount Auburn Cemetery, Cambridge, Massachusetts.

Skinner's public exposure increased in the 1970s, and he remained active after his retirement in 1974 until his death. In 1989, Skinner was diagnosed with leukemia, and he died on August 18, 1990, in Cambridge, Massachusetts. Ten days before his death, he was given the lifetime achievement award by the American Psychological Association and gave a talk in an auditorium concerning his work.

A controversial figure, Skinner has been depicted in many different ways. He has been widely revered for bringing a much-needed scientific approach to the study of human behavior; he has also been vilified for attempting to apply findings based largely on animal experiments to human behavior in real-life settings.

Contributions to psychological theory

Behaviorism

Skinner called his approach to the study of behavior radical behaviorism. This philosophy of behavioral science assumes that behavior is a consequence of environmental histories of reinforcement. In his words:

The position can be stated as follows: what is felt or introspectively observed is not some nonphysical world of consciousness, mind, or mental life but the observer's own body. This does not mean, as I shall show later, that introspection is a kind of psychological research, nor does it mean (and this is the heart of the argument) that what are felt or introspectively observed are the causes of the behavior. An organism behaves as it does because of its current structure, but most of this is out of reach of introspection. At the moment we must content ourselves, as the methodological behaviorist insists, with a person's genetic and environment histories. What are introspectively observed are certain collateral products of those histories.

...

In this way we repair the major damage wrought by mentalism. When what a person does [is] attributed to what is going on inside him, investigation is brought to an end. Why explain the explanation? For twenty five hundred years people have been preoccupied with feelings and mental life, but only recently has any interest been shown in a more precise analysis of the role of the environment. Ignorance of that role led in the first place to mental fictions, and it has been perpetuated by the explanatory practices to which they gave rise.

Theoretical structure

Skinner's behavioral theory was largely set forth in his first book, The Behavior of Organisms (1938). Here he gave a systematic description of the manner in which environmental variables control behavior. He distinguished two sorts of behavior, respondent and operant, which are controlled in different ways. Respondent behaviors are elicited by stimuli, and may be modified through respondent conditioning, which is often called "Pavlovian conditioning" or "classical conditioning", in which a neutral stimulus is paired with an eliciting stimulus. Operant behaviors, in contrast, are "emitted", meaning that initially they are not induced by any particular stimulus. They are strengthened through operant conditioning, sometimes called "instrumental conditioning", in which the occurrence of a response yields a reinforcer. Respondent behaviors might be measured by their latency or strength, operant behaviors by their rate. Both of these sorts of behavior had already been studied experimentally: respondents by Pavlov, for example, and operants by Thorndike. Skinner's account differed in some ways from earlier ones, and was one of the first accounts to bring them under one roof.

The idea that behavior is strengthened or weakened by its consequences raises several questions. Among the most important are these: (1) Operant responses are strengthened by reinforcement, but where do they come from in the first place? (2) Once it is in the organism's repertoire, how is a response directed or controlled? (3) How can very complex and seemingly novel behaviors be explained?

Origin of operant behavior

Skinner's answer to the first question was very much like Darwin's answer to the question of the origin of a "new" bodily structure, namely, variation and selection. Similarly, the behavior of an individual varies from moment to moment; a variation that is followed by reinforcement is strengthened and becomes prominent in that individual's behavioral repertoire. "Shaping" was Skinner's term for the gradual modification of behavior by the reinforcement of desired variations. As discussed later in this article, Skinner believed that "superstitious" behavior can arise when a response happens to be followed by reinforcement to which it is actually unrelated.

Control of operant behavior

The second question, "how is operant behavior controlled?", arises because, to begin with, the behavior is "emitted" without reference to any particular stimulus. Skinner answered this question by saying that a stimulus comes to control an operant if it is present when the response is reinforced and absent when it is not. For example, if lever-pressing only brings food when a light is on, a rat, or a child, will learn to press the lever only when the light is on. Skinner summarized this relationship by saying that a discriminative stimulus (e.g. light) sets the occasion for the reinforcement (food) of the operant (lever-press). This three-term contingency (stimulus-response-reinforcer) is one of Skinner's most important concepts, and sets his theory apart from theories that use only pair-wise associations.
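The three-term contingency can be illustrated with a toy simulation. The sketch below is only an illustration: the linear update rule, the starting strength of 0.5, the step size, and the names `DiscriminatedOperant`, `light_on`, and `light_off` are assumptions made for this example, not Skinner's own formalism. It assumes a lever press occurs on every trial and is followed by food only when the light is on.

```python
from dataclasses import dataclass, field


@dataclass
class DiscriminatedOperant:
    """Toy response strength for one operant, tracked per stimulus condition.

    Hypothetical model: strength moves a fixed fraction toward 1.0 after a
    reinforced response and toward 0.0 after an unreinforced one.
    """
    step: float = 0.1
    strength: dict = field(
        default_factory=lambda: {"light_on": 0.5, "light_off": 0.5}
    )

    def respond(self, stimulus: str, reinforced: bool) -> None:
        target = 1.0 if reinforced else 0.0
        current = self.strength[stimulus]
        self.strength[stimulus] = current + self.step * (target - current)


def run_discrimination_training(trials: int = 50) -> DiscriminatedOperant:
    lever_press = DiscriminatedOperant()
    for trial in range(trials):
        # Alternate light-on and light-off trials.
        stimulus = "light_on" if trial % 2 == 0 else "light_off"
        # The contingency: food follows the press only when the light is on.
        lever_press.respond(stimulus, reinforced=(stimulus == "light_on"))
    return lever_press


trained = run_discrimination_training()
```

Under these assumptions the strength of lever-pressing ends up high in the presence of the light and low in its absence, which is the sense in which the light "sets the occasion" for the response.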

Explaining complex behavior

Most behavior of humans cannot easily be described in terms of individual responses reinforced one by one, and Skinner devoted a great deal of effort to the problem of behavioral complexity. Some complex behavior can be seen as a sequence of relatively simple responses, and here Skinner invoked the idea of "chaining". Chaining is based on the fact, experimentally demonstrated, that a discriminative stimulus not only sets the occasion for subsequent behavior, but it can also reinforce a behavior that precedes it. That is, a discriminative stimulus is also a "conditioned reinforcer". For example, the light that sets the occasion for lever pressing may also be used to reinforce "turning around" in the presence of a noise. This results in the sequence "noise - turn-around - light - press lever - food". Much longer chains can be built by adding more stimuli and responses.

However, Skinner recognized that a great deal of behavior, especially human behavior, cannot be accounted for by gradual shaping or the construction of response sequences. Complex behavior often appears suddenly in its final form, as when a person first finds his way to the elevator by following instructions given at the front desk. To account for such behavior, Skinner introduced the concept of rule-governed behavior. First, relatively simple behaviors come under the control of verbal stimuli: the child learns to "jump", "open the book", and so on. After a large number of responses come under such verbal control, a sequence of verbal stimuli can evoke an almost unlimited variety of complex responses.

Reinforcement

Reinforcement, a key concept of behaviorism, is the primary process that shapes and controls behavior, and occurs in two ways, "positive" and "negative". In The Behavior of Organisms (1938), Skinner defined "negative reinforcement" to be synonymous with punishment, that is, the presentation of an aversive stimulus. Subsequently, in Science and Human Behavior (1953), Skinner redefined negative reinforcement. In what has now become the standard set of definitions, positive reinforcement is the strengthening of behavior by the occurrence of some event (e.g., praise after some behavior is performed), whereas negative reinforcement is the strengthening of behavior by the removal or avoidance of some aversive event (e.g., opening and raising an umbrella over your head on a rainy day is reinforced by the cessation of rain falling on you).

Both types of reinforcement strengthen behavior, that is, increase the probability of the behavior recurring; the difference is in whether the reinforcing event is something applied (positive reinforcement) or something removed or avoided (negative reinforcement).

Punishment is the application of an aversive stimulus or event (positive punishment, or punishment by contingent stimulation) or the removal of a desirable stimulus (negative punishment, or punishment by contingent withdrawal). Though punishment is often used to suppress behavior, Skinner argued that this suppression is temporary and has a number of other, often unwanted, consequences. Extinction is the withholding of a reinforcer that previously followed a behavior, which weakens that behavior.
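The four terms above form a two-by-two grid: a stimulus is either added or removed, and the behavior is either strengthened or weakened. A minimal sketch of that grid follows; the function name `classify_consequence` and the string labels are hypothetical, chosen only for this illustration.

```python
def classify_consequence(stimulus_change: str, effect_on_behavior: str) -> str:
    """Name a consequence using the standard positive/negative terminology.

    stimulus_change:    "added" or "removed" (what happens after the response)
    effect_on_behavior: "strengthened" or "weakened"
    """
    if stimulus_change not in ("added", "removed"):
        raise ValueError("stimulus_change must be 'added' or 'removed'")
    if effect_on_behavior not in ("strengthened", "weakened"):
        raise ValueError("effect_on_behavior must be 'strengthened' or 'weakened'")

    # "Positive"/"negative" describe the stimulus change, not good or bad.
    sign = "positive" if stimulus_change == "added" else "negative"
    # Reinforcement strengthens behavior; punishment weakens it.
    kind = "reinforcement" if effect_on_behavior == "strengthened" else "punishment"
    return f"{sign} {kind}"


# Praise delivered after a behavior, which then becomes more frequent.
praise = classify_consequence("added", "strengthened")
# Raising an umbrella stops rain falling on you, so umbrella-raising is strengthened.
umbrella = classify_consequence("removed", "strengthened")
```

Note that "negative" here refers only to the removal of a stimulus, which is why negative reinforcement is not a synonym for punishment under the later definitions.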

Writing in 1981, Skinner pointed out that Darwinian natural selection is, like reinforced behavior, "selection by consequences". Though, as he said, natural selection has now "made its case", he regretted that essentially the same process, "reinforcement", was less widely accepted as underlying human behavior.

Schedules of reinforcement

Skinner recognized that behavior is typically reinforced more than once, and, together with C. B. Ferster, he did an extensive analysis of the various ways in which reinforcements could be arranged over time, which he called "schedules of reinforcement".

The most notable schedules of reinforcement studied by Skinner were continuous, interval (fixed or variable), and ratio (fixed or variable). All are methods used in operant conditioning.

- Continuous reinforcement (CRF): each time a specific action is performed the subject receives a reinforcement. This method is effective when teaching a new behavior because it quickly establishes an association between the target behavior and the reinforcer.

- Interval schedules: based on the time intervals between reinforcements.
  - Fixed interval schedule (FI): A procedure in which reinforcements are presented at fixed time periods, provided that the appropriate response is made. This schedule yields a response rate that is low just after reinforcement and becomes rapid just before the next reinforcement is scheduled.
  - Variable interval schedule (VI): A procedure in which behavior is reinforced after random time durations following the last reinforcement. This schedule yields steady responding at a rate that varies with the average frequency of reinforcement.

- Ratio schedules: based on the ratio of responses to reinforcements. Ratio schedules tend to produce very rapid responding, often with breaks of no responding just after reinforcement if a large number of responses is required for reinforcement.
  - Fixed ratio schedule (FR): A procedure in which reinforcement is delivered after a specific number of responses have been made.
  - Variable ratio schedule (VR): A procedure in which reinforcement comes after a number of responses that is randomized from one reinforcement to the next (e.g., slot machines). The lower the number of responses required, the higher the response rate tends to be.
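The schedules listed above can be expressed as small decision rules that answer one question: is this response reinforced? The following is a rough sketch, not a reconstruction of Ferster and Skinner's apparatus. The class names, the `respond(t)` interface, and the uniform distributions used for the variable schedules are all assumptions made for illustration. Continuous reinforcement is the special case `FixedRatio(1)`.

```python
import random


class FixedRatio:
    """FR n: reinforce every n-th response."""

    def __init__(self, n: int):
        self.n = n
        self.count = 0

    def respond(self, t: float) -> bool:
        self.count += 1
        if self.count >= self.n:
            self.count = 0
            return True
        return False


class VariableRatio:
    """VR n: reinforce after a random number of responses averaging n."""

    def __init__(self, mean_n: int, rng: random.Random):
        self.mean_n = mean_n
        self.rng = rng
        self.count = 0
        self.required = self._draw()

    def _draw(self) -> int:
        # Assumption: uniform on 1..(2n - 1), which has mean n.
        return self.rng.randint(1, 2 * self.mean_n - 1)

    def respond(self, t: float) -> bool:
        self.count += 1
        if self.count >= self.required:
            self.count = 0
            self.required = self._draw()
            return True
        return False


class FixedInterval:
    """FI s: reinforce the first response at least s time units
    after the previous reinforcement (timing starts at t = 0)."""

    def __init__(self, interval: float):
        self.interval = interval
        self.last_reinforced = 0.0

    def respond(self, t: float) -> bool:
        if t - self.last_reinforced >= self.interval:
            self.last_reinforced = t
            return True
        return False


class VariableInterval:
    """VI s: like FI, but the required wait is redrawn after each reinforcement."""

    def __init__(self, mean_interval: float, rng: random.Random):
        self.mean_interval = mean_interval
        self.rng = rng
        self.last_reinforced = 0.0
        self.wait = self._draw()

    def _draw(self) -> float:
        # Assumption: uniform on [0, 2s], which has mean s.
        return self.rng.uniform(0.0, 2.0 * self.mean_interval)

    def respond(self, t: float) -> bool:
        if t - self.last_reinforced >= self.wait:
            self.last_reinforced = t
            self.wait = self._draw()
            return True
        return False


# Nine responses on FR 3: every third response is reinforced.
fr3 = FixedRatio(3)
fr_outcomes = [fr3.respond(t) for t in range(1, 10)]

# Responses at irregular times on FI 10.
fi10 = FixedInterval(10)
fi_outcomes = [fi10.respond(t) for t in (1, 5, 10, 12, 19, 20, 31)]
```

On the interval schedules, responses made before the interval has elapsed are simply not reinforced, which is consistent with the low response rate just after reinforcement described for FI. The sketch models only the schedule, not the animal's behavior.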

Scientific inventions

Operant conditioning chamber

An operant conditioning chamber (also known as a Skinner Box) is a laboratory apparatus used in the experimental analysis of animal behavior. It was invented by Skinner while he was a graduate student at Harvard University. As used by Skinner, the box had a lever (for rats), or a disk in one wall (for pigeons). A press on this "manipulandum" could deliver food to the animal through an opening in the wall, and responses reinforced in this way increased in frequency. By controlling this reinforcement together with discriminative stimuli such as lights and tones, or punishments such as electric shocks, experimenters have used the operant box to study a wide variety of topics, including schedules of reinforcement, discriminative control, delayed response ("memory"), punishment, and so on. By channeling research in these directions, the operant conditioning chamber has had a huge influence on the course of research in animal learning and its applications. It enabled great progress on problems that could be studied by measuring the rate, probability, or force of a simple, repeatable response. However, it discouraged the study of behavioral processes not easily conceptualized in such terms, spatial learning in particular, which is now studied in quite different ways, for example, by the use of the water maze.

Cumulative recorder

The cumulative recorder makes a pen-and-ink record of simple repeated responses. Skinner designed it for use with the operant chamber as a convenient way to record and view the rate of responses such as a lever press or a key peck. In this device, a sheet of paper gradually unrolls over a cylinder. Each response steps a small pen across the paper, starting at one edge; when the pen reaches the other edge, it quickly resets to the initial side. The slope of the resulting ink line graphically displays the rate of the response; for example, rapid responding yields a steeply sloping line on the paper, while slow responding yields a line of low slope. The cumulative recorder was a key tool used by Skinner in his analysis of behavior, and it was very widely adopted by other experimenters, gradually falling out of use with the advent of the laboratory computer. Skinner's major experimental exploration of response rates, presented in his book with C. B. Ferster, Schedules of Reinforcement, is full of cumulative records produced by this device.
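The recorder's behavior is simple enough to mimic in software. The sketch below assumes responses are given as a list of timestamps and that the pen's travel is measured in whole steps; the function names `cumulative_record` and `response_rate` and the `pen_range` parameter are hypothetical, introduced only for this illustration.

```python
def cumulative_record(response_times, pen_range):
    """Return (time, pen_position) points traced by an idealized recorder.

    The paper advances with time; each response moves the pen one step.
    When the pen reaches the far edge (pen_range steps) it snaps back to 0.
    """
    points = [(0.0, 0)]
    position = 0
    for t in sorted(response_times):
        position += 1
        points.append((t, position))
        if position == pen_range:
            # Pen has reached the far edge: reset to the starting side.
            position = 0
            points.append((t, position))
    return points


def response_rate(response_times, start, end):
    """Responses per unit time in [start, end): the average slope of the record."""
    count = sum(1 for t in response_times if start <= t < end)
    return count / (end - start)


record = cumulative_record([1, 2, 3, 4], pen_range=3)
rate = response_rate([1, 2, 3, 4, 10], start=0, end=5)
```

Plotting `pen_position` against `time` would give the familiar staircase whose steepness reflects the response rate, with a vertical drop at each pen reset.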

Air crib

The air crib is an easily cleaned, temperature- and humidity-controlled enclosure intended to replace the standard infant crib. Skinner invented the device to help his wife cope with the day-to-day tasks of child rearing. It was designed to make early childcare simpler (by reducing laundry, diaper rash, cradle cap, etc.), while allowing the baby to be more mobile and comfortable, and less prone to cry. Reportedly it had some success in these goals.

The air crib was a controversial invention. It was popularly mischaracterized as a cruel pen, and it was often compared to Skinner's operant conditioning chamber, commonly called the "Skinner Box". This association with laboratory animal experimentation discouraged its commercial success, though several companies attempted production.

A 2004 book by Lauren Slater, entitled Opening Skinner's Box: Great Psychology Experiments of the Twentieth Century, caused a stir by mentioning the rumors that Skinner had used his baby daughter, Deborah, in some of his experiments, and that she had subsequently committed suicide. Although Slater's book stated that the rumors were false, a reviewer in The Observer in March 2004 misquoted Slater's book as supporting the rumors. This review was read by Deborah Skinner (now Deborah Buzan, an artist and writer living in London), who wrote a vehement riposte in The Guardian.

Teaching machine

The teaching machine, a mechanical invention to automate the task of programmed learning

The teaching machine was a mechanical device whose purpose was to administer a curriculum of programmed learning. The machine embodied key elements of Skinner's theory of learning and had important implications for education in general and classroom instruction in particular.

In one incarnation, the machine was a box that housed a list of questions that could be viewed one at a time through a small window. (See picture.) There was also a mechanism through which the learner could respond to each question. Upon delivering a correct answer, the learner would be rewarded.

Skinner advocated the use of teaching machines for a broad range of students (e.g., preschool aged to adult) and instructional purposes (e.g., reading and music). For example, one machine that he envisioned could teach rhythm. He wrote:

A relatively simple device supplies the necessary contingencies. The student taps a rhythmic pattern in unison with the device. "Unison" is specified very loosely at first (the student can be a little early or late at each tap) but the specifications are slowly sharpened. The process is repeated for various speeds and patterns. In another arrangement, the student echoes rhythmic patterns sounded by the machine, though not in unison, and again the specifications for an accurate reproduction are progressively sharpened. Rhythmic patterns can also be brought under the control of a printed score.

The instructional potential of the teaching machine stemmed from several factors: it provided automatic, immediate and regular reinforcement without the use of aversive control; the material presented was coherent, yet varied and novel; the pace of learning could be adjusted to suit the individual. As a result, students were interested, attentive, and learned efficiently by producing the desired behavior, "learning by doing".

Teaching machines, though perhaps rudimentary, were not rigid instruments of instruction. They could be adjusted and improved based upon the students' performance. For example, if a student made many incorrect responses, the machine could be reprogrammed to provide less advanced prompts or questions, the idea being that students acquire behaviors most efficiently if they make few errors. Multiple-choice formats were not well-suited for teaching machines because they tended to increase student mistakes, and the contingencies of reinforcement were relatively uncontrolled.

Not only useful in teaching explicit skills, machines could also promote the development of a repertoire of behaviors that Skinner called self-management. Effective self-management means attending to stimuli appropriate to a task, avoiding distractions, reducing the opportunity of reward for competing behaviors, and so on. For example, machines encourage students to pay attention before receiving a reward. Skinner contrasted this with the common classroom practice of initially capturing students’ attention (e.g., with a lively video) and delivering a reward (e.g., entertainment) before the students have actually performed any relevant behavior. This practice fails to reinforce correct behavior and actually counters the development of self-management.

Skinner pioneered the use of teaching machines in the classroom, especially at the primary level. Today computers run software that performs similar teaching tasks, and there has been a resurgence of interest in the topic related to the development of adaptive learning systems.

Pigeon-guided missile

During World War II, the US Navy required a weapon effective against surface ships, such as the German Bismarck-class battleships. Although missile and TV technology existed, the size of the primitive guidance systems available rendered automatic guidance impractical. To solve this problem, Skinner initiated Project Pigeon, which was intended to provide a simple and effective guidance system. This system divided the nose cone of a missile into three compartments, with a pigeon placed in each. Lenses projected an image of distant objects onto a screen in front of each bird. Thus, when the missile was launched from an aircraft within sight of an enemy ship, an image of the ship would appear on the screen. The screen was hinged, such that pecks at the image of the ship would guide the missile toward the ship.

Despite an effective demonstration, the project was abandoned, and eventually more conventional solutions, such as those based on radar, became available. Skinner complained that "our problem was no one would take us seriously." It seemed that few people would trust pigeons to guide a missile, no matter how reliable the system appeared to be.

Verbal summator

Early in his career Skinner became interested in "latent speech" and experimented with a device he called the "verbal summator". This device can be thought of as an auditory version of the Rorschach inkblots. When using the device, human participants listened to incomprehensible auditory "garbage" but often read meaning into what they heard. Thus, as with the Rorschach blots, the device was intended to yield overt behavior that projected subconscious thoughts. Skinner's interest in projective testing was brief, but he later used observations with the summator in creating his theory of verbal behavior. The device also led other researchers to invent new tests such as the tautophone test, the auditory apperception test, and the Azzageddi test.

Verbal Behavior

Challenged by Alfred North Whitehead during a casual discussion while at Harvard to provide an account of a randomly provided piece of verbal behavior, Skinner set about attempting to extend his then-new functional, inductive approach to the complexity of human verbal behavior. Developed over two decades, his work appeared in the book Verbal Behavior. Although Noam Chomsky was highly critical of Verbal Behavior, he conceded that Skinner's "S-R psychology" was worth a review. (Behavior analysts reject the "S-R" characterization: operant conditioning involves the emission of a response which then becomes more or less likely depending upon its consequence; see above.)

Verbal Behavior had an uncharacteristically cool reception, partly as a result of Chomsky's review and partly because of Skinner's failure to address or rebut any of Chomsky's criticisms. Skinner's peers may have been slow to adopt the ideas presented in Verbal Behavior because of the absence of experimental evidence, in contrast to the empirical density that marked Skinner's experimental work. However, in applied settings there has been a resurgence of interest in Skinner's functional analysis of verbal behavior.

Influence on education

Skinner's views influenced education as well as psychology. Skinner argued that education has two major purposes: (1) to teach repertoires of both verbal and nonverbal behavior; and (2) to interest students in learning. He recommended bringing students’ behavior under appropriate control by providing reinforcement only in the presence of stimuli relevant to the learning task. Because he believed that human behavior can be affected by small consequences, something as simple as "the opportunity to move forward after completing one stage of an activity" can be an effective reinforcer. Skinner was convinced that, to learn, a student must engage in behavior, and not just passively receive information (Skinner, 1961, p. 389).

Skinner believed that effective teaching must be based on positive reinforcement, which is, he argued, more effective at changing and establishing behavior than punishment. He suggested that the main thing people learn from being punished is how to avoid punishment. For example, if a child is forced to practice playing an instrument, the child comes to associate practicing with punishment and thus learns to hate and avoid practicing the instrument. This view had obvious implications for the then widespread practice of rote learning and punitive discipline in education. The use of educational activities as punishment may induce rebellious behavior such as vandalism or absence.

Because teachers are primarily responsible for modifying student behavior, Skinner argued that teachers must learn effective ways of teaching. In The Technology of Teaching, Skinner has a chapter on why teachers fail (pages 93–113). He says that teachers have not been given an in-depth understanding of teaching and learning. Without knowing the science underpinning teaching, teachers fall back on procedures that work poorly or not at all, such as:

- using aversive techniques (which produce escape and avoidance and undesirable emotional effects);

- relying on telling and explaining ("Unfortunately, a student does not learn simply when he is shown or told." p. 103);

- failing to adapt learning tasks to the student's current level;

- failing to provide positive reinforcement frequently enough.

Skinner suggests that any age-appropriate skill can be taught. The steps are:

- Clearly specify the action or performance the student is to learn.

- Break down the task into small achievable steps, going from simple to complex.

- Let the student perform each step, reinforcing correct actions.

- Adjust so that the student is always successful until finally the goal is reached.

- Shift to intermittent reinforcement to maintain the student's performance.

Skinner's views on education are extensively presented in his book The Technology of Teaching. They are also reflected in Fred S. Keller's Personalized System of Instruction and Ogden R. Lindsley's Precision Teaching.

Walden Two and Beyond Freedom and Dignity

Skinner is popularly known mainly for his books Walden Two and Beyond Freedom and Dignity (for which he made the cover of TIME magazine). The former describes a fictional "experimental community" in the 1940s United States. The productivity and happiness of citizens in this community are far greater than in the outside world because the residents practice scientific social planning and use operant conditioning in raising their children.

Walden Two, like Thoreau's Walden, champions a lifestyle that does not support war or foster competition and social strife. It encourages a lifestyle of minimal consumption, rich social relationships, personal happiness, satisfying work, and leisure. In 1967, Kat Kinkade and others founded the Twin Oaks Community, using Walden Two as a blueprint. The community still exists and continues to use the Planner-Manager system and other aspects of the community described in Skinner's book, though behavior modification is not a community practice.

In Beyond Freedom and Dignity, Skinner suggests that a technology of behavior could help to make a better society. We would, however, have to accept that an autonomous agent is not the driving force of our actions. Skinner offers alternatives to punishment, and challenges his readers to use science and modern technology to construct a better society.

Political views

Skinner's political writings emphasized his hopes that an effective and human science of behavioral control – a technology of human behavior – could help with problems as yet unsolved and often aggravated by advances in technology such as the atomic bomb. Indeed, one of Skinner's goals was to prevent humanity from destroying itself. He saw political activity as the use of aversive or non-aversive means to control a population. Skinner favored the use of positive reinforcement as a means of control, citing Jean-Jacques Rousseau's novel Emile: or, On Education as an example of literature that "did not fear the power of positive reinforcement".

Skinner's book Walden Two presents a vision of a decentralized, localized society, which applies a practical, scientific approach and behavioral expertise to deal peacefully with social problems. (For example, his views led him to oppose corporal punishment in schools, and he wrote a letter to the California Senate that helped lead it to a ban on spanking.) Skinner's utopia is both a thought experiment and a rhetorical piece. In Walden Two, Skinner answers the question posed in many utopian novels – "What is the Good Life?" The book's answer is a life of friendship, health, art, a healthy balance between work and leisure, a minimum of unpleasantness, and a feeling that one has made worthwhile contributions to a society in which resources are ensured, in part, by minimizing consumption.

If the world is to save any part of its resources for the future, it must reduce not only consumption but the number of consumers.

— B. F. Skinner, Walden Two, p. xi.

When Milton's Satan falls from heaven, he ends in hell. And what does he say to reassure himself? 'Here, at least, we shall be free.' And that, I think, is the fate of the old-fashioned liberal. He's going to be free, but he's going to find himself in hell.

— B. F. Skinner, from William F. Buckley Jr, On the Firing Line, p. 87.

Superstition in the pigeon

One of Skinner's experiments examined the formation of superstition in one of his favorite experimental animals, the pigeon. Skinner placed a series of hungry pigeons in a cage attached to an automatic mechanism that delivered food to the pigeon "at regular intervals with no reference whatsoever to the bird's behavior". He discovered that the pigeons associated the delivery of the food with whatever chance actions they had been performing as it was delivered, and that they subsequently continued to perform these same actions.

One bird was conditioned to turn counter-clockwise about the cage, making two or three turns between reinforcements. Another repeatedly thrust its head into one of the upper corners of the cage. A third developed a 'tossing' response, as if placing its head beneath an invisible bar and lifting it repeatedly. Two birds developed a pendulum motion of the head and body, in which the head was extended forward and swung from right to left with a sharp movement followed by a somewhat slower return.

Skinner suggested that the pigeons behaved as if they were influencing the automatic mechanism with their "rituals", and that this experiment shed light on human behavior:

The experiment might be said to demonstrate a sort of superstition. The bird behaves as if there were a causal relation between its behavior and the presentation of food, although such a relation is lacking. There are many analogies in human behavior. Rituals for changing one's fortune at cards are good examples. A few accidental connections between a ritual and favorable consequences suffice to set up and maintain the behavior in spite of many unreinforced instances. The bowler who has released a ball down the alley but continues to behave as if she were controlling it by twisting and turning her arm and shoulder is another case in point. These behaviors have, of course, no real effect upon one's luck or upon a ball half way down an alley, just as in the present case the food would appear as often if the pigeon did nothing—or, more strictly speaking, did something else.
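Skinner's proposed mechanism can be illustrated with a toy simulation. This is a minimal sketch under stated assumptions, not a validated model of pigeon behavior: the function `superstition_session`, the 15-second interval, the one-action-per-second timing, the starting weights, and the `boost` size are all hypothetical. Food arrives on a clock alone (a fixed-time schedule), and whatever action happens to coincide with it is strengthened.

```python
import random


def superstition_session(actions, interval=15, seconds=600, boost=1.0, seed=0):
    """Simulate food delivered on a fixed-time schedule, independent of behavior.

    Returns (number of food deliveries, final action weights).
    """
    rng = random.Random(seed)
    weights = {action: 1.0 for action in actions}   # all actions start equally likely
    deliveries = 0
    for t in range(1, seconds + 1):
        # The bird emits one action per second, chosen in proportion to its weight.
        current = rng.choices(list(weights), weights=list(weights.values()))[0]
        if t % interval == 0:           # the clock alone decides when food arrives
            deliveries += 1
            weights[current] += boost   # the coinciding action is strengthened
    return deliveries, weights


deliveries, weights = superstition_session(["turn", "head thrust", "pendulum"])
print(deliveries, weights)
```

The delivery count depends only on `seconds` and `interval`, so the food appears just as often whatever the simulated bird does. Which action ends up dominant varies with the seed, since an early chance pairing makes that action more likely to be paired again.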

Modern behavioral psychologists have disputed Skinner's "superstition" explanation for the behaviors he recorded. Subsequent research (e.g. Staddon and Simmelhag, 1971), while finding similar behavior, failed to find support for Skinner's "adventitious reinforcement" explanation for it. By looking at the timing of different behaviors within the interval, Staddon and Simmelhag were able to distinguish two classes of behavior: the terminal response, which occurred in anticipation of food, and interim responses, which occurred earlier in the interfood interval and were rarely contiguous with food. Terminal responses seem to reflect classical (as opposed to operant) conditioning, rather than adventitious reinforcement, guided by a process like that observed in 1968 by Brown and Jenkins in their "autoshaping" procedures. The causation of interim activities (such as the schedule-induced polydipsia seen in a similar situation with rats) also cannot be traced to adventitious reinforcement and its details are still obscure (Staddon, 1977).

Criticism

Noam Chomsky

Noam Chomsky, a prominent critic of Skinner, published a review of Skinner's Verbal Behavior two years after the book appeared. Chomsky argued that Skinner's attempt to use behaviorism to explain human language amounted to little more than word games. Conditioned responses could not account for a child's ability to create or understand an infinite variety of novel sentences. Chomsky's review has been credited with launching the cognitive revolution in psychology and other disciplines. Skinner, who rarely responded directly to critics, never formally replied to Chomsky's critique. Many years later, Skinner endorsed Kenneth MacCorquodale's reply.

Chomsky also reviewed Skinner's Beyond Freedom and Dignity, with the same basic motives as his Verbal Behavior review. Among Chomsky's criticisms were that Skinner's laboratory work could not be extended to humans, that when it was extended to humans it represented 'scientistic' behavior attempting to emulate science but which was not scientific, that Skinner was not a scientist because he rejected the hypothetico-deductive model of theory testing, and that Skinner had no science of behavior.

Psychodynamic psychology

Skinner has been repeatedly criticized for his supposed animosity towards Sigmund Freud, psychoanalysis, and psychodynamic psychology. Some have argued, however, that Skinner shared several of Freud's assumptions, and that he was influenced by Freudian points of view in more than one field, among them the analysis of defense mechanisms, such as repression. To study such phenomena, Skinner even designed his own projective test, the "verbal summator" described above.

J. E. R. Staddon

As understood by Skinner, ascribing dignity to individuals involves giving them credit for their actions. To say "Skinner is brilliant" means that Skinner is an originating force. If Skinner's determinist theory is right, he is merely the focus of his environment. He is not an originating force and he had no choice in saying the things he said or doing the things he did. Skinner's environment and genetics both allowed and compelled him to write his book. Similarly, the environment and genetic potentials of the advocates of freedom and dignity cause them to resist the reality that their own activities are deterministically grounded. J. E. R. Staddon (The New Behaviorism, 2nd Edition, 2014) has argued the compatibilist position: Skinner's determinism does not in any way contradict traditional notions of reward and punishment, as Skinner believed it did.

List of awards and positions

- 1926 AB, Hamilton College

- 1930 MA, Harvard University

- 1930−1931 Thayer Fellowship

- 1931 PhD, Harvard University

- 1931−1932 Walker Fellowship

- 1931−1933 National Research Council Fellowship

- 1933−1936 Junior Fellowship, Harvard Society of Fellows

- 1936−1937 Instructor, University of Minnesota

- 1937−1939 Assistant Professor, University of Minnesota

- 1939−1945 Associate Professor, University of Minnesota

- 1942 Guggenheim Fellowship (postponed until 1944–1945)

- 1942 Howard Crosby Warren Medal, Society of Experimental Psychologists

- 1945−1948 Professor and Chair, Indiana University

- 1947−1948 William James Lecturer, Harvard University

- 1948−1958 Professor, Harvard University

- 1949−1950 President of the Midwestern Psychological Association

- 1954−1955 President of the Eastern Psychological Association

- 1958 Distinguished Scientific Contribution Award, American Psychological Association

- 1958−1974 Edgar Pierce Professor of Psychology, Harvard University

- 1964−1974 Career Award, National Institute of Mental Health

- 1966 Edward Lee Thorndike Award, American Psychological Association

- 1966−1967 President of the Pavlovian Society of North America

- 1968 National Medal of Science, National Science Foundation

- 1969 Overseas Fellow in Churchill College, Cambridge

- 1971 Gold Medal Award, American Psychological Foundation

- 1971 Joseph P. Kennedy, Jr., Foundation for Mental Retardation International award

- 1972 Humanist of the Year, American Humanist Association

- 1972 Creative Leadership in Education Award, New York University

- 1972 Career Contribution Award, Massachusetts Psychological Association

- 1974−1990 Professor of Psychology and Social Relations Emeritus, Harvard University

- 1978 Distinguished Contributions to Educational Research and Development Award, American Educational Research Association

- 1978 National Association for Retarded Citizens Award

- 1985 Award for Excellence in Psychiatry, Albert Einstein School of Medicine

- 1985 President's Award, New York Academy of Sciences

- 1990 William James Fellow Award, American Psychological Society

- 1990 Lifetime Achievement Award, American Psychological Association

- 1991 Outstanding Member and Distinguished Professional Achievement Award, Society for Performance Improvement

- 1997 Scholar Hall of Fame Award, Academy of Resource and Development

- 2011 Committee for Skeptical Inquiry Pantheon of Skeptics—Inducted

Honorary degrees

Skinner received honorary degrees from:

- Alfred University

- Ball State University

- Dickinson College

- Hamilton College

- Harvard University

- Hobart and William Smith Colleges

- Johns Hopkins University

- Keio University

- Long Island University C. W. Post Campus

- McGill University

- North Carolina State University

- Ohio Wesleyan University

- Ripon College

- Rockford College

- Tufts University

- University of Chicago

- University of Exeter

- University of Missouri

- University of North Texas

- Western Michigan University

- University of Maryland, Baltimore County

In popular culture

Jon Vitti, a writer for The Simpsons, named the character Principal Skinner after the behavioral psychologist B. F. Skinner.

Bibliography

- The Behavior of Organisms: An Experimental Analysis, 1938. ISBN 1-58390-007-1, ISBN 0-87411-487-X.

- Walden Two, 1948. ISBN 0-87220-779-X (revised 1976 edition).

- Science and Human Behavior, 1953. ISBN 0-02-929040-6. A free copy of this book (in a 1.6 MB .pdf file) may be downloaded at the B. F. Skinner Foundation web site BFSkinner.org.

- Schedules of Reinforcement, with C. B. Ferster, 1957. ISBN 0-13-792309-0.

- Verbal Behavior, 1957. ISBN 1-58390-021-7.

- The Analysis of Behavior: A Program for Self Instruction, with James G. Holland, 1961. ISBN 0-07-029565-4.

- The Technology of Teaching, 1968. New York: Appleton-Century-Crofts. Library of Congress Card Number 68-12340 E 81290. ISBN 0-13-902163-9.

- Contingencies of Reinforcement: A Theoretical Analysis, 1969. ISBN 0-390-81280-3.

- Beyond Freedom and Dignity, 1971. ISBN 0-394-42555-3.

- About Behaviorism, 1974. ISBN 0-394-49201-3, ISBN 0-394-71618-3.

- Particulars of My Life: Part One of an Autobiography, 1976. ISBN 0-394-40071-2.

- Reflections on Behaviorism and Society, 1978. ISBN 0-13-770057-1.

- The Shaping of a Behaviorist: Part Two of an Autobiography, 1979. ISBN 0-394-50581-6.

- Notebooks, edited by Robert Epstein, 1980. ISBN 0-13-624106-9.

- Skinner for the Classroom, edited by R. Epstein, 1982. ISBN 0-87822-261-8.

- Enjoy Old Age: A Program of Self-Management, with M. E. Vaughan, 1983. ISBN 0-393-01805-9.

- A Matter of Consequences: Part Three of an Autobiography, 1983. ISBN 0-394-53226-0, ISBN 0-8147-7845-3.

- Upon Further Reflection, 1987. ISBN 0-13-938986-5.

- Recent Issues in the Analysis of Behavior, 1989. ISBN 0-675-20674-X.

- Cumulative Record: A Selection of Papers, 1959, 1961, 1972 and 1999 as Cumulative Record: Definitive Edition. This book includes a reprint of Skinner's October 1945 Ladies' Home Journal article, "Baby in a Box", Skinner's original, personal account of the much-misrepresented "Baby in a box" device. ISBN 0-87411-969-3 (paperback)