December 2, 2010 by Judith Curry

Original link: https://judithcurry.com/2010/12/02/best-of-the-greenhouse/

On this thread, I try to synthesize the main issues and arguments that were made and pull some of what I regard to be the highlights from the comments.

The problem with explaining the atmospheric greenhouse effect is eloquently described by Nullius in Verba:

A great deal of confusion is caused in this debate by the fact

that there are two distinct explanations for the greenhouse effect: one

based on that developed by Fourier, Tyndall, etc. which works for purely

radiative atmospheres (i.e. no convection), and the

radiative-convective explanation developed by Manabe and Wetherald

around the 1970s, I think. (It may be earlier, but I don’t know of any

other references.)

Climate scientists do know how the basic greenhouse physics

works, and they model it using the Manabe and Wetherald approach. But

almost universally, when they try to explain it, they all use the purely

radiative approach, which is incorrect, misleading, contrary to

observation, and results in a variety of inconsistencies when people try

to plug real atmospheric physics into a bad model. It is actually

internally consistent, and it would happen like that if convection could

somehow be prevented, but it isn’t how the real atmosphere works.

This leads to a tremendous amount of wasted effort and confusion.

The G&T paper in particular got led down the garden path by picking up several ‘popular’ explanations of the greenhouse effect and pursuing them ad absurdum. A tremendous amount of debate is expended on questions of the second law of thermodynamics, and whether back radiation from a cold sky can warm the surface.

The Tyndall gas effect

John Nielsen-Gammon focuses in on the radiative explanation, which he refers to as the “Tyndall gas effect,” in a concurrent post on his blog Climate Abyss.

Vaughan Pratt succinctly describes the Tyndall gas effect:

The proof of infrared absorption by CO2 was found by John Tyndall

in the 1860s and measured at 972 times the absorptivity of air. Since

then we have learned how to measure not only the strength of its

absorption but also how the strength depends on the absorbed wavelength.

The physics of infrared absorption by CO2 is understood in great

detail, certainly enough to predict what will happen to thermal

radiation passed through any given quantity of CO2, regardless of

whether that quantity is in a lab or overhead in the atmosphere.

In a second post,

John Nielsen-Gammon describes the Tyndall gas effect from the

perspective of weather satellites that measure infrared radiation at

different wavelengths.

In a slightly more technical treatment, Chris Colose explains the

physics behind what the weather satellites are seeing in terms of

infrared radiative transfer:

An interesting question to ask is to take a beam of energy going from the surface to space, and ask how much of it is received by a sensor in space. The answer is obviously the intensity of the upwelling beam multiplied by that fractional portion of the beam which is transmitted to space, where the transmissivity is given as 1-absorptivity (neglecting scattering) or exp(-τ), where τ is the optical depth. This relation is known as Beer’s Law, and works for wavelengths where the medium itself (the atmosphere) is not emitting (such as in the visible wavelengths). In the real atmosphere of course, you have longwave contribution from the outgoing flux not only from the surface, but integrated over the depth of the atmosphere, with various contributions from different layers, which in turn radiate locally in accord with the Planck function for a given temperature. The combination of these terms gives the so-called Schwarzschild equation of radiative transfer.
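The two ingredients in the paragraph above, Beer's Law attenuation plus local emission, can be sketched numerically for a single layer. This is only an illustrative sketch: the function names and the intensity values are assumptions rather than anything from the original comment, and the layer is assumed to be isothermal and non-scattering, for which the Schwarzschild equation has this simple closed-form solution.

```python
import math

def transmissivity(tau):
    """Beer's Law: fraction of a beam that survives optical depth tau."""
    return math.exp(-tau)

def intensity_at_sensor(i_surface, b_layer, tau):
    """Schwarzschild equation solved for one isothermal, non-scattering layer.

    i_surface: upwelling intensity leaving the surface
    b_layer:   Planck emission of the layer at its own temperature
    tau:       optical depth of the layer at this wavelength
    """
    t = transmissivity(tau)
    # Attenuated surface beam plus the layer's own emission.
    return i_surface * t + b_layer * (1.0 - t)

# Hypothetical intensities in arbitrary units: warm surface, colder layer.
I_SURFACE, B_LAYER = 1.0, 0.5
for tau in (0.0, 0.1, 1.0, 5.0):
    print(tau, round(intensity_at_sensor(I_SURFACE, B_LAYER, tau), 3))
```

With tau = 0 the sensor sees the surface beam unchanged; as tau grows, the signal tends toward the layer's own (colder) emission. If the layer emits at the same intensity as the surface, the result does not depend on tau at all.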

In the optically thin limit (of low infrared opacity), a sensor from space will see the bulk of radiation emanating from the relatively warm surface. This is the case in desert regions or Antarctica for example, where opacity from water vapor is feeble. As you gradually add more opacity to the atmosphere, the sensor in space will see less upwelling surface radiation, which will be essentially “replaced” by emission from colder, higher levels of the atmosphere. This is all wavelength dependent in the real world, since some regions in the spectrum are pretty transparent, and some are very strongly absorbing. In the 15 micron band of CO2, an observer looking down is seeing emission from the stratosphere, while outward toward ~10 microns, the emission is from much lower down.

These “lines” that form in the spectrum, as seen from space, require some vertical temperature gradient to exist, otherwise the flux from all levels would be the same, even if you have opacity. The net result is to take a “bite” out of an Earth spectrum (viewed from space), see e.g., this image.

This reduces the total area under the curve of the outgoing emission, which means the Earth’s outgoing energy is no longer balancing the absorbed incoming stellar energy. It is therefore mandated to warm up until the whole area under the spectrum is sufficiently increased to allow a restoration of radiative equilibrium. Note that there are some exotic cases such as on Venus or perhaps ancient Mars where you can get a substantial greenhouse effect from infrared scattering, as opposed to absorption/emission, to which the above lapse rate issues are no longer as relevant…but this physics is not really at play on Modern Earth.

A molecular perspective

Maxwell writes:

As a molecular physicist, I think it’s imperative to make sure that the dynamics of each molecule come through in these mechanistic explanations. A CO2 molecule absorbs an IR photon given off by the thermally excited surface of the earth (earthlight). The energy in that photon gets redistributed by non-radiative relaxation processes (collisions with other molecules mostly) and the molecule then emits a lower energy IR photon in a random direction. A collection of excited CO2 molecules will act like a point source, emitting IR radiation in all directions. Some of that light is directed back at the surface of the earth where it is absorbed and the whole thing happens over again.

All of this is very well understood, though in the context of the

CO2 laser. If you’re interested in these dynamics, there is a great

literature on the relaxation processes (radiative and otherwise) that

occur in an atmosphere-like gas.

Vaughan Pratt describes the underlying physics of the greenhouse effect from a molecular point of view:

The Sun heats the surface of the Earth with little interference

from Earth’s atmosphere except when there are clouds, or when the albedo

(reflectivity) is high. In the absence of greenhouse gases

like water vapor and CO2, Earth’s atmosphere allows all thermal

radiation from the Earth’s surface to escape into the void of outer

space.

The greenhouse gases, let’s say CO2 for definiteness, capture the

occasional escaping photon. This happens probabilistically: the

escaping photons are vibrating, and the shared electrons comprising the

bonds of a CO2 molecule are also vibrating. When a passing photon is in

close phase with a vibrating bond there is a higher-than-usual chance

that the photon will be absorbed by the bond and excite it into a higher

energy level.

This extra energy in the bond acts as though it were increasing

the spring constant, making for a stronger spring. The energy of the

captured photon now turns into vibrational energy in the CO2 molecule,

which it registers as an increase in its temperature.

This energy now bounces around between the various degrees of

freedom of the CO2 molecule. And when it collides with another

atmospheric molecule some transfer of energy takes place there too. In

equilibrium all the molecules of the atmosphere share the energy of the

photons being captured by the greenhouse gases.

By the same token the greenhouse gases radiate this energy. They do so isotropically, that is, in all directions.

The upshot is that the energy of photons escaping from Earth’s

surface is diverted to energy being radiated in all directions from

every point of the Earth’s atmosphere.

The higher the cooler, with a lapse rate of 5 °C per km for moist

air and 9 °C per km for dry air (the so-called dry adiabatic lapse rate

or DALR). (“Adiabatic” means changing temperature in response to a

pressure change so quickly that there is no time for the resulting heat

to leak elsewhere.)
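As a rough check on the dry figure, the dry adiabatic lapse rate can be computed as g / c_p. The sketch below assumes standard textbook values for gravitational acceleration and the specific heat of dry air; neither number appears in the original comment, and the function name is illustrative.

```python
G = 9.81         # gravitational acceleration in m/s^2 (assumed standard value)
CP_DRY = 1004.0  # specific heat of dry air at constant pressure, J/(kg K) (assumed)

def dry_adiabatic_lapse_rate(g=G, cp=CP_DRY):
    """Return the dry adiabatic lapse rate g / c_p, converted to K per km."""
    return g / cp * 1000.0

print(round(dry_adiabatic_lapse_rate(), 2), "K per km")
```

Under these assumptions the result comes out a little under 10 °C per km, which sits between the rounded figures of 9 and 10 °C per km quoted in this discussion.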

Because of this lapse rate, every point in the atmosphere is

receiving slightly more photons from below than from above. There is

therefore a net flux of photonic energy from below to above. But because

the difference is slight, this flux is less than it would be if there

were no greenhouse gases. As a result greenhouse gases have the effect

of creating thermal resistance, slowing down the rate at which photons

can carry energy from the Earth’s surface to outer space.

This is not the usual explanation of what’s going on in the

atmosphere, which instead is described in terms of so-called “back

radiation.” While this is equivalent to what I wrote, it is harder to

see how it is consistent with the 2nd law of thermodynamics. Not that it

isn’t, but when described my way it is obviously thermodynamically

sound.

Radiative-convective perspective

In what was arguably the most lauded comment on the two threads, Nullius in Verba provides this eloquent explanation:

The greenhouse effect requires the understanding of two effects:

first, the temperature of a heated object in a vacuum, and second, the

adiabatic lapse rate in a convective atmosphere.

For the first, you need to know that the hotter the surface of an

object is, the faster it radiates heat. This acts as a sort of feedback

control, so that if the temperature falls below the equilibrium level

it radiates less heat than it absorbs and hence heats up, and if the

temperature rises above the equilibrium it radiates more heat than it is

absorbing and hence cools down. The average radiative temperature for

the Earth is easily calculated to be about -20 C, which is close enough

although a proper calculation taking non-uniformities into account would

be more complicated.
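The "easily calculated" radiative temperature can be reproduced with the usual zero-dimensional energy balance, in which absorbed sunlight averaged over the sphere equals blackbody emission. The solar constant and albedo below are assumed round values, not numbers given in the comment, and the function name is illustrative.

```python
SIGMA = 5.670374e-8      # Stefan-Boltzmann constant, W m^-2 K^-4
SOLAR_CONSTANT = 1361.0  # W m^-2 at Earth's orbit (assumed)
ALBEDO = 0.30            # planetary reflectivity (assumed)

def effective_temperature(solar_constant=SOLAR_CONSTANT, albedo=ALBEDO):
    """Temperature (K) at which blackbody emission balances absorbed sunlight."""
    # Divide by 4: sunlight is intercepted by a disc but emitted by a sphere.
    absorbed = solar_constant * (1.0 - albedo) / 4.0
    return (absorbed / SIGMA) ** 0.25

t_eff = effective_temperature()
print(round(t_eff, 1), "K =", round(t_eff - 273.15, 1), "C")
```

With these inputs the result is close to 255 K, that is, roughly -19 C, in line with the "about -20 C" quoted above.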

However, the critical point of the above is the question of what

“surface” we are talking about. The surface that radiates heat to space

is not the solid surface of the Earth. If you could see in infra-red,

the atmosphere would be a fuzzy opaque mist, and the surface you could

see would actually be high up in the atmosphere. It is this surface that

approaches the equilibrium temperature by radiation to space. Emission

occurs from all altitudes from the ground up to about 10 km, but the

average is at about 5 km.

The second thing you need to know doesn’t involve radiation or greenhouse gases at all. It is simply a physical property of gases, that if you compress them they get hot, and if you allow them to expand they cool down. As air rises in the atmosphere due to convection the pressure drops and hence so does its temperature. As it descends again it is compressed and its temperature rises. The temperature changes are not due to the flow of heat in to or out of the air; they are due to the conversion of potential energy as air rises and falls in a gravitational field.

This sets up a constant temperature gradient in the atmosphere.

The surface is at about 15 C on average, and as you climb the

temperature drops at a constant rate until you reach the top of the

troposphere where it has dropped to a chilly -54 C. Anyone who flies

planes will know this as the standard atmosphere.

Basic properties of gases would mean that dry air would change

temperature by about 10 C/km change in altitude. This is modified

somewhat by the latent heat of water vapour, which reduces it to about 6

C/km.

And if you multiply 6 C/km by 5 km between the layer at

equilibrium temperature and the surface, you get the 30 C greenhouse

effect.
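The arithmetic in the last step is simple enough to write down directly. The sketch below uses the lapse rate and emission height quoted above; the function name and the 0.15 km rise in emission height are purely hypothetical, chosen only to show how the estimate scales.

```python
def greenhouse_warming(lapse_rate_c_per_km, emission_height_km):
    """Surface warming relative to the emitting layer: lapse rate times height."""
    return lapse_rate_c_per_km * emission_height_km

# Values quoted in the comment: 6 C/km and an average emission height of 5 km.
print(greenhouse_warming(6.0, 5.0))  # 30.0

# Hypothetical illustration: raise the emitting layer by 0.15 km.
extra = greenhouse_warming(6.0, 5.15) - greenhouse_warming(6.0, 5.0)
print(round(extra, 2), "C of additional surface warming")
```

In this picture, any change in the surface temperature comes from a change in either the emission height or the lapse rate.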

It really is that simple, and this really is what the

peer-reviewed technical literature actually uses for calculation. (See

for example Soden and Held 2000, the discussion just below figure 1.)

It’s just that when it comes to explaining what’s going on, this other

version with back radiation getting “trapped” gets dragged out again and

set up in its place.

If an increase in back radiation tried to exceed this temperature

gradient near the surface, convection would simply increase until the

constant gradient was achieved again. Back radiation exists, and is very

large compared to other heat flows, but it does not control the surface

temperature.

Increasing CO2 in the atmosphere makes the fuzzy layer thicker,

increases the altitude of the emitting layer, and hence its distance

from the ground. The surface temperature is controlled by this height

and the gradient, and the gradient (called the adiabatic lapse rate) is

affected only by humidity.

I should mention for completeness that there are a couple of

complications. One is that if convection stops, as happens on windless

nights, and during the polar winters, you can get a temperature

inversion and the back radiation can once again become important. The

other is that the above calculation uses averages as being

representative, and that’s not valid when the physics is non-linear. The

heat input varies by latitude and time of day. The water vapour content

varies widely. There are clouds. There are great convection cycles in

air and ocean that carry heat horizontally. I don’t claim this to be the

entire story. But it’s a better place to start from.

Andy Lacis describes in general terms how this is determined in climate models:

While we speak of the greenhouse effect primarily in radiative

transfer terms, the key component is the temperature profile that has to

be defined in order to perform the radiative transfer calculations. So,

it is the Manabe-Moller concept that is being used. In 1-D model

calculations, such as those by Manabe-Moller, the temperature profile is

prescribed with the imposition of a “critical” lapse rate that

represents convective energy transport in the troposphere when the

radiative lapse rate becomes too steep to be stable. In 3-D climate GCMs

no such assumption is made. The temperature profile is determined

directly as the result of numerically solving the atmospheric

hydrodynamic and thermodynamic behavior. Radiative transfer calculations

are then performed for each (instantaneous) temperature profile at each

grid box.

It is these radiative transfer calculations that give the 33 K

(or 150 W/m2) measure of the terrestrial greenhouse effect. If radiative

equilibrium was calculated without the convective/advective temperature

profile input (radiative energy transport only), the radiative only

greenhouse effect would be about 66 K (for the same atmospheric

composition), instead of the current climate value of 33 K.
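The correspondence between the 33 K and 150 W/m2 figures can be checked with the Stefan-Boltzmann law. This is a sketch under stated assumptions: a mean surface temperature of 288 K is assumed (it is not given in the comment), the surface is treated as a blackbody, and the function name is illustrative.

```python
SIGMA = 5.670374e-8  # Stefan-Boltzmann constant, W m^-2 K^-4

def blackbody_flux(t_kelvin):
    """Blackbody emission in W m^-2 at the given temperature."""
    return SIGMA * t_kelvin ** 4

T_SURFACE = 288.0                # assumed global mean surface temperature, K
T_EFFECTIVE = T_SURFACE - 33.0   # temperature implied by the 33 K figure

# Difference between what the surface emits and what leaves to space.
greenhouse_flux = blackbody_flux(T_SURFACE) - blackbody_flux(T_EFFECTIVE)
print(round(greenhouse_flux), "W/m2")
```

Under these assumptions the difference comes out at about 150 W/m2, matching the flux measure quoted above.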

Skeptical perspectives

The skeptical perspectives on the greenhouse effect that were most

widely discussed were papers by Gerlich and Tscheuschner, Claes Johnson,

and (particularly) Miskolczi. The defenses put forward of these papers

did not stand up at all to the examinations by the radiative transfer

experts that participated in this discussion. Andy Lacis summarizes the

main concerns with the skeptical arguments:

Actually, the Gerlich and Tscheuschner, Claes Johnson, and

Miskolczi papers are a good test to evaluate one’s understanding of

radiative transfer. If you looked through these papers and did not

immediately realize that they were nonsense, then it is very likely that

you are simply not up to speed on radiative transfer. You should then

go and check the Georgia Tech’s radiative transfer course that was

recommended by Judy, or check the discussion of the greenhouse effect on

Real Climate or Chris Colose science blogs.

The notion by Gerlich and Tscheuschner that the second law of

thermodynamics forbids the operation of a greenhouse effect is nonsense.

The notion by Claes Johnson that “backradiation is unphysical because

it is unstable and serves no role” is beyond bizarre. A versatile LW

spectrometer used at the DoE ARM site in Oklahoma sees downwelling

“backradiation” (water vapor lines in emission) when pointed upward.

When looking downward from an airplane it sees upwelling thermal

radiation (water vapor lines in absorption). When looking horizontally

it sees a continuum spectrum since the water vapor and background light

source are both at the same temperature. Miskolczi, on the other hand,

acknowledges and includes downwelling backradiation in his calculations,

but he then goes and imposes an unphysical constraint to maintain a

constant atmospheric optical depth such that if CO2 increases water

vapor must decrease, a constraint that is not supported by observations.

Summary

While there is much uncertainty about the magnitude of the climate

sensitivity to doubling CO2 and the magnitude and nature of the various

feedback processes, the fundamental underlying physics of the

atmospheric greenhouse effect (radiative plus convective heat transfer)

is well understood.

That said, the explanation of the atmospheric greenhouse effect is

often confusing, and the terminology “greenhouse effect” is arguably

part of the confusion. We need better ways to communicate this. I

think the basic methods of explaining the greenhouse effect that have

emerged from this discussion are right on target; now we need some good

visuals/animations, and translations of this for an audience that is

less sophisticated in terms of understanding science. Your thoughts on

how to proceed with this?

And finally, I want to emphasize again that our basic understanding of the underlying physics of the atmospheric greenhouse effect does not directly translate into quantitative understanding of the sensitivity of the Earth’s energy balance to doubling CO2, which remains a topic of substantial debate and ongoing research. And it does not say anything about other processes that cause climate change, such as solar and the internal ocean oscillations.

So that is my take home message from all this. I am curious to hear

the reactions from the commenters that were asking questions or others

lurking on these threads. Did the dialogue clarify things for you or

confuse you? Do the explanations that I’ve highlighted make sense to

you? What do you see as the outstanding issues in terms of public

understanding of the basic mechanism behind the greenhouse effect?

Wednesday, December 27, 2017

Sunday, December 24, 2017

Stratospheric Cooling and Tropospheric Warming

Posted on 18 December 2010 by Bob Guercio

This is a revised version of Stratospheric Cooling and Tropospheric Warming posted on December 1, 2010.

Increased levels of carbon dioxide (CO2) in the atmosphere have resulted in the warming of the troposphere and cooling of the stratosphere, which is caused by two mechanisms. One mechanism involves the conversion of translational kinetic energy (KE) into infrared (IR) radiation, and the other involves the absorption of IR energy by CO2 in the troposphere such that it is no longer available to the stratosphere. The former dominates and will be discussed first. For simplicity, both mechanisms will be explained by considering a model of a fictitious planet with an atmosphere consisting of CO2 and an inert gas such as nitrogen (N2) at pressures equivalent to those on earth. This atmosphere will have a troposphere and a stratosphere with the tropopause at 10 km. The initial concentration of CO2 will be 100 parts per million (ppm) and will be increased to 1000 ppm. These parameters were chosen in order to generate graphs which enable the reader to easily understand the mechanisms discussed herein. Furthermore, in keeping with the concept of simplicity, the heating of the earth and atmosphere by insolation will not be discussed. A short digression into the nature of radiation and its interaction with CO2 in the gaseous state follows.

Temperature is a measure of the energy content of matter and is indicated by the translational KE of the particles. A gas of fast particles is at a higher temperature than one of slow particles. Energy also causes CO2 molecules to vibrate but although this vibration is related to the energy content of CO2, it is not related to the temperature of the gaseous mixture. Molecules undergoing this vibration are in an excited state.

IR radiation contains energy and in the absence of matter, this radiation will continue to travel indefinitely. In this situation, there is no temperature because there is no matter.

The energy content of IR radiation can be indicated by its IR spectrum which is a graph of power density as a function of frequency. Climatologists use wavenumbers instead of frequencies for convenience and a wavenumber is defined as the number of cycles per centimeter. Figure 1 is such a graph where the x axis indicates the wavenumber and the y axis indicates the power per square meter per wavenumber. The area under the curve represents the total power per square meter in the radiation.

Figure 1. IR Spectrum - No Atmosphere
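The statement that the area under the spectrum gives the total power per square meter can be illustrated for an ideal blackbody. The sketch below assumes a blackbody surface at 288 K (an assumed temperature, not one stated in the article), integrates the Planck function over wavenumber with a simple trapezoid rule, and compares the result with the Stefan-Boltzmann law. The function names are illustrative.

```python
import math

H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K
SIGMA = 5.670374e-8  # Stefan-Boltzmann constant, W m^-2 K^-4

def planck_flux_per_wavenumber(wavenumber_cm, t_kelvin):
    """Blackbody flux density in W m^-2 per cm^-1 at a wavenumber given in cm^-1."""
    nu = wavenumber_cm * 100.0  # convert cm^-1 to m^-1
    radiance = 2.0 * H * C**2 * nu**3 / math.expm1(H * C * nu / (K_B * t_kelvin))
    # pi converts radiance to flux; 100 converts "per m^-1" to "per cm^-1".
    return math.pi * radiance * 100.0

def total_flux(t_kelvin, lo_cm=1, hi_cm=5000):
    """Area under the spectrum (trapezoid rule, 1 cm^-1 steps), in W m^-2."""
    values = [planck_flux_per_wavenumber(n, t_kelvin) for n in range(lo_cm, hi_cm + 1)]
    return sum(values) - 0.5 * (values[0] + values[-1])

T = 288.0  # assumed surface temperature, K
print(round(total_flux(T), 1), "vs", round(SIGMA * T**4, 1), "W/m2")
```

The two numbers should agree closely, since the integration range of 1 to 5000 cm^-1 captures essentially all of the emission at this temperature.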

The interaction of IR radiation with CO2 is a two way street in that IR radiation can interact with unexcited CO2 molecules and cause them to vibrate and become excited and excited CO2 molecules can become unexcited by releasing IR radiation.

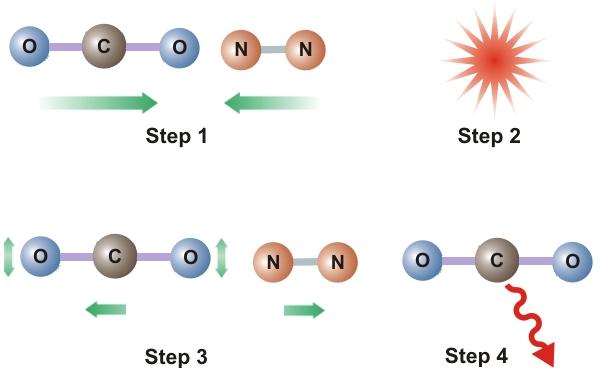

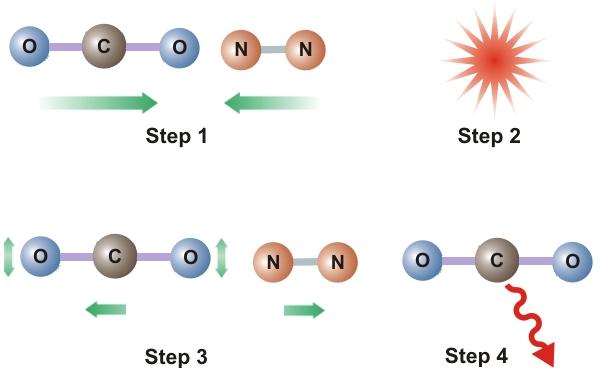

Consider now the atmosphere of our fictitious model. As depicted in Step 1 of Figure 2, N2 and CO2 molecules are in motion and the average speed of these molecules is related to the temperature of the stratosphere. Now imagine that CO2 molecules are injected into the atmosphere causing the concentration of CO2 to increase. These molecules will then collide with other molecules of either N2 or CO2 (Step 2) and some of the KE of these particles will be transferred to the CO2 resulting in excited CO2 molecules (Step 3) and a lowered stratospheric temperature. All entities, including atoms and molecules, prefer the unexcited state to the excited state. Therefore, these excited CO2 molecules will deexcite and emit IR radiation (Step 4) which, in the rarefied stratosphere, will simply be radiated out of the stratosphere. The net result is a lower stratospheric temperature. This does not happen in the troposphere because, due to higher pressures and shorter distances between particles, any emitted radiation gets absorbed by another nearby CO2 molecule.

Figure 2. Kinetic To IR Energy Transfer

In order to discuss the second and less dominant mechanism, consider Figure 1, which shows the IR spectrum from a planet with no atmosphere, and Figure 3, which shows the IR spectra from the same planet with CO2 levels of 100 ppm and 1000 ppm. These graphs were generated from a model simulator at the website of Dr. David Archer, a professor in the Department of the Geophysical Sciences at the University of Chicago, and edited to contain only the curves of interest to this discussion. As previously stated, these parameters were chosen in order to generate graphs which enable the reader to easily understand the mechanism discussed herein.

The curves of Figure 3 approximately follow the intensity curve of Figure 1 except for the missing band of energy centered at 667 cm^-1. This band is called the absorption band and is so named because it represents the IR energy that is absorbed by CO2. IR radiation at all other wavenumbers does not interact with CO2, and thus the IR intensity at these wavenumbers is the same as that of Figure 1. These wavenumbers represent the atmospheric window, which is so named because the IR energy radiates through the atmosphere unaffected by the CO2.

Figure 3. CO2 IR Spectrum - 100/1000 ppm
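For readers more used to wavelengths or frequencies, the wavenumber of the band centre quoted above converts as follows. This is a small sketch using the definition of wavenumber as cycles per centimeter; the function names are illustrative.

```python
C = 2.99792458e8  # speed of light, m/s

def wavenumber_to_wavelength_um(wavenumber_cm):
    """Wavelength in microns for a wavenumber in cm^-1 (1 cm = 10,000 microns)."""
    return 1.0e4 / wavenumber_cm

def wavenumber_to_frequency_hz(wavenumber_cm):
    """Frequency in Hz: speed of light times cycles per meter."""
    return C * wavenumber_cm * 100.0

band_centre = 667.0  # cm^-1, from the text
print(round(wavenumber_to_wavelength_um(band_centre), 2), "microns")
print(f"{wavenumber_to_frequency_hz(band_centre):.3e} Hz")
```

The band centre corresponds to a wavelength of about 15 microns, which is the CO2 band commonly referred to by wavelength.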

A comparison of the curves in Figure 3 shows that the absorption band at 1000 ppm is wider than that at 100 ppm because more energy has been absorbed from the IR radiation by the troposphere at a CO2 concentration of 1000 ppm than at a concentration of 100 ppm. The energy that remains in the absorption band after the IR radiation has traveled through the troposphere is the only energy that is available to interact with the CO2 of the stratosphere. At a CO2 level of 100 ppm there is more energy available for this than at a level of 1000 ppm. Therefore, the stratosphere is cooler because of the higher level of CO2 in the troposphere. Additionally, the troposphere has warmed because it has absorbed the energy that is no longer available to the stratosphere.

In concluding, this paper has explained the mechanisms which cause the troposphere to warm and the stratosphere to cool when the atmospheric level of CO2 increases. The dominant mechanism involves the conversion of the energy of motion of the particles in the atmosphere to IR radiation which escapes to space and the second method involves the absorption of IR energy by CO2 in the troposphere such that it is no longer available to the stratosphere. Both methods act to reduce the temperature of the stratosphere.

*It is recognized that a fictitious planet as described herein is a physical impossibility. The simplicity of this model serves to explain a concept that would otherwise be more difficult using a more complex and realistic model.

Robert J. Guercio - December 18, 2010

Friday, December 15, 2017

Shape of the universe

From Wikipedia, the free encyclopedia

Cosmologists distinguish between the observable universe and the global universe. The observable universe consists of the part of the universe that can, in principle, be observed by light reaching Earth within the age of the universe. It encompasses a region of space which currently forms a ball centered at Earth of estimated radius 46 billion light-years (4.4×10^26 m). This does not mean the universe is 46 billion years old; in fact the universe is believed to be 13.799 billion years old, but space itself has also expanded, causing the size of the observable universe to be as stated. (However, it is possible to observe these distant areas only in their very distant past, when the distance light had to travel was much less.) Assuming an isotropic nature, the observable universe is similar for all contemporary vantage points.
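As a quick sanity check on the figures quoted above, the radius in light-years can be converted to metres. This is only a sketch: the metres-per-light-year constant and the function name are assumptions introduced here, not part of the article.

```python
# Assumed conversion constant (not from the article): metres in one light-year.
LIGHT_YEAR_M = 9.4607e15


def light_years_to_metres(light_years):
    """Convert a distance in light-years to metres."""
    return light_years * LIGHT_YEAR_M


# Radius of the observable universe quoted in the text: 46 billion light-years.
radius_m = light_years_to_metres(46e9)

# Ratio of that radius to the distance light could cover in 13.799 billion years
# if space were static; a ratio above 1 reflects the expansion of space.
expansion_ratio = 46e9 / 13.799e9

print(f"radius = {radius_m:.2e} m")
print(f"radius / (c * age) = {expansion_ratio:.2f}")
```

The result lands close to the 4.4×10^26 m stated in the text, and the ratio makes the point that the radius exceeds the light-travel distance because space itself has expanded.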

According to the book Our Mathematical Universe, the shape of the global universe can be explained with three categories:[1]

- Finite or infinite

- Flat (no curvature), open (negative curvature), or closed (positive curvature)

- Connectivity, how the universe is put together, i.e., simply connected space or multiply connected.

The exact shape is still a matter of debate in physical cosmology, but experimental data from various, independent sources (WMAP, BOOMERanG, and Planck for example) confirm that the observable universe is flat with only a 0.4% margin of error.[3][4][5] Theorists have been trying to construct a formal mathematical model of the shape of the universe. In formal terms, this is a 3-manifold model corresponding to the spatial section (in comoving coordinates) of the 4-dimensional space-time of the universe. The model most theorists currently use is the Friedmann–Lemaître–Robertson–Walker (FLRW) model. Arguments have been put forward that the observational data best fit with the conclusion that the shape of the global universe is infinite and flat,[6] but the data are also consistent with other possible shapes, such as the so-called Poincaré dodecahedral space[7][8] and the Sokolov-Starobinskii space (quotient of the upper half-space model of hyperbolic space by 2-dimensional lattice).[9]

Shape of the observable universe

As stated in the introduction, there are two aspects to consider:

- its local geometry, which predominantly concerns the curvature of the universe, particularly the observable universe, and

- its global geometry, which concerns the topology of the universe as a whole.

If the observable universe encompasses the entire universe, we may be able to determine the global structure of the entire universe by observation. However, if the observable universe is smaller than the entire universe, our observations will be limited to only a part of the whole, and we may not be able to determine its global geometry through measurement. From experiments, it is possible to construct different mathematical models of the global geometry of the entire universe all of which are consistent with current observational data and so it is currently unknown whether the observable universe is identical to the global universe or it is instead many orders of magnitude smaller than it. The universe may be small in some dimensions and not in others (analogous to the way a cuboid is longer in the dimension of length than it is in the dimensions of width and depth). To test whether a given mathematical model describes the universe accurately, scientists look for the model's novel implications—what are some phenomena in the universe that we have not yet observed, but that must exist if the model is correct—and they devise experiments to test whether those phenomena occur or not. For example, if the universe is a small closed loop, one would expect to see multiple images of an object in the sky, although not necessarily images of the same age.

Cosmologists normally work with a given space-like slice of spacetime called the comoving coordinates, the existence of a preferred set of which is possible and widely accepted in present-day physical cosmology. The section of spacetime that can be observed is the backward light cone (all points within the cosmic light horizon, given time to reach a given observer), while the related term Hubble volume can be used to describe either the past light cone or comoving space up to the surface of last scattering. To speak of "the shape of the universe (at a point in time)" is ontologically naive from the point of view of special relativity alone: due to the relativity of simultaneity we cannot speak of different points in space as being "at the same point in time" nor, therefore, of "the shape of the universe at a point in time". However, the comoving coordinates (if well-defined) provide a strict sense to those by using the time since the Big Bang (measured in the reference of CMB) as a distinguished universal time.

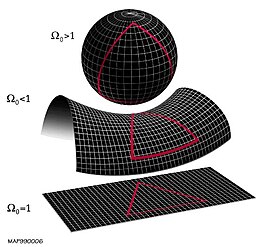

Curvature of the universe

The curvature is a quantity describing how the geometry of a space differs locally from that of flat space. The curvature of any locally isotropic space (and hence of a locally isotropic universe) falls into one of the three following cases:

- Zero curvature (flat); a drawn triangle's angles add up to 180° and the Pythagorean theorem holds; such 3-dimensional space is locally modeled by Euclidean space E^3.

- Positive curvature; a drawn triangle's angles add up to more than 180°; such 3-dimensional space is locally modeled by a region of a 3-sphere S^3.

- Negative curvature; a drawn triangle's angles add up to less than 180°; such 3-dimensional space is locally modeled by a region of a hyperbolic space H^3.
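The triangle criterion in the list above can be illustrated numerically for the positively curved case. The following is a minimal sketch, assuming a unit 2-sphere as a lower-dimensional stand-in for the 3-sphere; the helper names are hypothetical and chosen only for this illustration.

```python
import math


def _dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def unit(v):
    """Scale a 3-vector to unit length so it lies on the unit sphere."""
    n = math.sqrt(_dot(v, v))
    return tuple(x / n for x in v)


def vertex_angle(a, b, c):
    """Angle in degrees at vertex a of the geodesic triangle abc on the unit sphere."""
    # Tangent vectors at a pointing along the great circles toward b and c.
    tb = [bi - _dot(a, b) * ai for ai, bi in zip(a, b)]
    tc = [ci - _dot(a, c) * ai for ai, ci in zip(a, c)]
    cos_angle = _dot(tb, tc) / math.sqrt(_dot(tb, tb) * _dot(tc, tc))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))


def angle_sum(a, b, c):
    """Sum of the three interior angles of the geodesic triangle abc."""
    return vertex_angle(a, b, c) + vertex_angle(b, c, a) + vertex_angle(c, a, b)


# A large triangle: the north pole and two equator points 90 degrees apart.
large = angle_sum((0, 0, 1), (1, 0, 0), (0, 1, 0))

# A tiny triangle near the pole, where the sphere looks almost flat.
small = angle_sum(unit((0.01, 0, 1)), unit((0, 0.01, 1)), (0, 0, 1))

print(f"large triangle: {large:.3f} degrees")
print(f"small triangle: {small:.5f} degrees")
```

The large triangle has three right angles, so its sum is far above 180°, while the tiny triangle exceeds 180° only marginally. That is why curvature has to be probed on very large scales: locally, a curved space is nearly indistinguishable from a flat one.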

The local geometry of the universe is determined by whether the density parameter Ω is greater than, less than, or equal to 1.

From top to bottom: a spherical universe with Ω > 1, a hyperbolic universe with Ω < 1, and a flat universe with Ω = 1. These depictions of two-dimensional surfaces are merely easily visualizable analogs to the 3-dimensional structure of (local) space.

General relativity explains that mass and energy bend the curvature of spacetime and is used to determine what curvature the universe has by using a value called the density parameter, represented with Omega (Ω). The density parameter is the average density of the universe divided by the critical energy density, that is, the mass energy needed for a universe to be flat. Put another way,

- If Ω = 1, the universe is flat

- If Ω > 1, there is positive curvature

- If Ω < 1, there is negative curvature

Ω_mass ≈ 0.315 ± 0.018

Ω_relativistic ≈ 9.24×10^−5

Ω_Λ ≈ 0.6817 ± 0.0018

Ω_total = Ω_mass + Ω_relativistic + Ω_Λ = 1.00 ± 0.02

The critical density is measured as ρ_critical = 9.47×10^−27 kg m^−3. From these values, within experimental error, the universe seems to be flat.
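The arithmetic behind these statements can be sketched in a few lines. The density values are the ones quoted above; the critical density formula ρ_c = 3H0²/(8πG) is the standard Friedmann expression, and the Hubble constant of 71 km/s/Mpc is an assumption made here (the text does not state one), chosen because it reproduces the quoted critical density. The function names are hypothetical.

```python
import math

G = 6.67430e-11        # gravitational constant, m^3 kg^-1 s^-2
MPC_IN_M = 3.0857e22   # metres in one megaparsec


def critical_density(h0_km_s_mpc):
    """Critical density rho_c = 3 H0^2 / (8 pi G), in kg per cubic metre."""
    h0 = h0_km_s_mpc * 1.0e3 / MPC_IN_M  # convert to s^-1
    return 3.0 * h0 ** 2 / (8.0 * math.pi * G)


def classify(omega_total, uncertainty):
    """Classify the curvature implied by a total density parameter."""
    if abs(omega_total - 1.0) <= uncertainty:
        return "flat within error"
    if omega_total > 1.0:
        return "closed (positive curvature)"
    return "open (negative curvature)"


# Component values as quoted in the text.
omega_total = 0.315 + 9.24e-5 + 0.6817

# ASSUMPTION: H0 = 71 km/s/Mpc is not given in the article.
rho_c = critical_density(71.0)

print(f"Omega_total = {omega_total:.4f} -> {classify(omega_total, 0.02)}")
print(f"rho_critical = {rho_c:.2e} kg/m^3")
```

With the quoted uncertainty of ±0.02, the sum of the components cannot be told apart from 1, which is the sense in which the universe "seems to be flat".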

Another way to measure Ω is geometrically, by measuring an angle across the observable universe. We can do this by using the CMB and measuring the power spectrum and temperature anisotropy. For intuition, one can imagine finding a gas cloud that is not in thermal equilibrium because it is so large that thermal information, even at light speed, cannot propagate across it. Knowing this propagation speed, we then know the size of the gas cloud as well as the distance to it; we then have two sides of a triangle and can determine the angles. Using a method similar to this, the BOOMERanG experiment has determined that the sum of the angles is 180° within experimental error, corresponding to Ω_total ≈ 1.00±0.12.[12]

These and other astronomical measurements constrain the spatial curvature to be very close to zero, although they do not constrain its sign. This means that although the local geometries of spacetime are generated by the theory of relativity based on spacetime intervals, we can approximate 3-space by the familiar Euclidean geometry.

The Friedmann–Lemaître–Robertson–Walker (FLRW) model using Friedmann equations is commonly used to model the universe. The FLRW model provides a curvature of the universe based on the mathematics of fluid dynamics, that is, modeling the matter within the universe as a perfect fluid. Although stars and structures of mass can be introduced into an "almost FLRW" model, a strictly FLRW model is used to approximate the local geometry of the observable universe. Another way of saying this is that if all forms of dark energy are ignored, then the curvature of the universe can be determined by measuring the average density of matter within it, assuming that all matter is evenly distributed (rather than the distortions caused by 'dense' objects such as galaxies). This assumption is justified by the observations that, while the universe is "weakly" inhomogeneous and anisotropic (see the large-scale structure of the cosmos), it is on average homogeneous and isotropic.

Global universe structure

As stated in the introduction, investigations within the study of the global structure of the universe include:

- Whether the universe is infinite or finite in extent

- Whether the geometry of the global universe is flat, positively curved, or negatively curved

- Whether the topology is simply connected like a sphere or multiply connected, like a torus[13]

Infinite or finite

One of the presently unanswered questions about the universe is whether it is infinite or finite in extent. For intuition, it can be understood that a finite universe has a finite volume that, for example, could be in theory filled up with a finite amount of material, while an infinite universe is unbounded and no numerical volume could possibly fill it. Mathematically, the question of whether the universe is infinite or finite is referred to as boundedness. An infinite universe (unbounded metric space) means that there are points arbitrarily far apart: for any distance d, there are points that are of a distance at least d apart. A finite universe is a bounded metric space, where there is some distance d such that all points are within distance d of each other. The smallest such d is called the diameter of the universe, in which case the universe has a well-defined "volume" or "scale."

With or without boundary

Assuming a finite universe, the universe can either have an edge or no edge. Many finite mathematical spaces, e.g., a disc, have an edge or boundary. Spaces that have an edge are difficult to treat, both conceptually and mathematically. Namely, it is very difficult to state what would happen at the edge of such a universe. For this reason, spaces that have an edge are typically excluded from consideration.

However, there exist many finite spaces, such as the 3-sphere and 3-torus, which have no edges. Mathematically, these spaces are referred to as being compact without boundary. The term compact basically means that it is finite in extent ("bounded") and complete. The term "without boundary" means that the space has no edges. Moreover, so that calculus can be applied, the universe is typically assumed to be a differentiable manifold. A mathematical object that possesses all these properties, compact without boundary and differentiable, is termed a closed manifold. The 3-sphere and 3-torus are both closed manifolds.

Curvature

The curvature of the universe places constraints on the topology. If the spatial geometry is spherical, i.e., possesses positive curvature, the topology is compact. For a flat (zero curvature) or a hyperbolic (negative curvature) spatial geometry, the topology can be either compact or infinite.[14] Many textbooks erroneously state that a flat universe implies an infinite universe; however, the correct statement is that a flat universe that is also simply connected implies an infinite universe.[14] For example, Euclidean space is flat, simply connected, and infinite, but the torus is flat, multiply connected, finite, and compact.

In general, local to global theorems in Riemannian geometry relate the local geometry to the global geometry. If the local geometry has constant curvature, the global geometry is very constrained, as described in Thurston geometries.
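To make the torus example concrete, here is a minimal sketch of distances in a flat 3-torus, modeled as a cube whose opposite faces are identified. The cube side length of 1 and the function name are assumptions made for illustration. Distances are locally Euclidean (the space is flat), yet no two points can be farther apart than half the cube's diagonal, so the space is bounded.

```python
import math


def torus_distance(p, q, side=1.0):
    """Shortest distance between points p and q in a flat 3-torus of the given side."""
    total = 0.0
    for pi, qi in zip(p, q):
        d = abs(pi - qi) % side
        # Going "around" the periodic direction may be shorter than going across.
        d = min(d, side - d)
        total += d * d
    return math.sqrt(total)


# Two points that look 0.8 apart are only 0.2 apart through the identified faces.
wrapped = torus_distance((0.1, 0.0, 0.0), (0.9, 0.0, 0.0))

# The largest possible separation: half the cube diagonal, sqrt(3)/2 times the side.
diameter = torus_distance((0.0, 0.0, 0.0), (0.5, 0.5, 0.5))

print(f"wrapped distance = {wrapped:.3f}")
print(f"diameter = {diameter:.4f}")
```

The finite diameter corresponds to the "smallest such d" in the definition of a bounded metric space, and the wrap-around is also what would produce multiple images of the same object in a small, multiply connected universe.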

The latest research shows that even the most powerful future experiments (such as SKA and Planck) will not be able to distinguish between flat, open, and closed universes if the true value of the cosmological curvature parameter is smaller than 10^−4. If the true value of the cosmological curvature parameter is larger than 10^−3, we will be able to distinguish between these three models even now.[15]

Results of the Planck mission released in 2015 show the cosmological curvature parameter, Ω_K, to be 0.000±0.005, consistent with a flat universe.[16]

Universe with zero curvature

In a universe with zero curvature, the local geometry is flat. The most obvious global structure is that of Euclidean space, which is infinite in extent. Flat universes that are finite in extent include the torus and Klein bottle. Moreover, in three dimensions, there are 10 finite closed flat 3-manifolds, of which 6 are orientable and 4 are non-orientable. These are the Bieberbach manifolds. The most familiar is the aforementioned 3-torus universe.

In the absence of dark energy, a flat universe expands forever but at a continually decelerating rate, with expansion asymptotically approaching zero. With dark energy, the expansion rate of the universe initially slows down, due to the effect of gravity, but eventually increases. The ultimate fate of the universe is the same as that of an open universe.

A flat universe can have zero total energy.

Universe with positive curvature

A positively curved universe is described by elliptic geometry, and can be thought of as a three-dimensional hypersphere, or some other spherical 3-manifold (such as the Poincaré dodecahedral space), all of which are quotients of the 3-sphere.

The Poincaré dodecahedral space is a positively curved space, colloquially described as "soccerball-shaped", as it is the quotient of the 3-sphere by the binary icosahedral group, which is very close to icosahedral symmetry, the symmetry of a soccer ball. This was proposed by Jean-Pierre Luminet and colleagues in 2003[7][17] and an optimal orientation on the sky for the model was estimated in 2008.[8]

Universe with negative curvature

Universe in an expanding sphere. The galaxies farthest away are moving fastest and hence experience length contraction and so become smaller to an observer in the centre.

A hyperbolic universe, one of a negative spatial curvature, is described by hyperbolic geometry, and can be thought of locally as a three-dimensional analog of an infinitely extended saddle shape. There are a great variety of hyperbolic 3-manifolds, and their classification is not completely understood. Those of finite volume can be understood via the Mostow rigidity theorem. For hyperbolic local geometry, many of the possible three-dimensional spaces are informally called "horn topologies", so called because of the shape of the pseudosphere, a canonical model of hyperbolic geometry. An example is the Picard horn, a negatively curved space, colloquially described as "funnel-shaped".[9]

Curvature: open or closed

When cosmologists speak of the universe as being "open" or "closed", they most commonly are referring to whether the curvature is negative or positive. These meanings of open and closed are different from the mathematical meaning of open and closed used for sets in topological spaces and for the mathematical meaning of open and closed manifolds, which gives rise to ambiguity and confusion. In mathematics, there are definitions for a closed manifold (i.e., compact without boundary) and open manifold (i.e., one that is not compact and without boundary). A "closed universe" is necessarily a closed manifold. An "open universe" can be either a closed or open manifold. For example, in the Friedmann–Lemaître–Robertson–Walker (FLRW) model the universe is considered to be without boundaries, in which case "compact universe" could describe a universe that is a closed manifold.

Milne model ("spherical" expanding)

If one applies Minkowski space-based special relativity to expansion of the universe, without resorting to the concept of a curved spacetime, then one obtains the Milne model. Any spatial section of the universe of a constant age (the proper time elapsed from the Big Bang) will have a negative curvature; this is merely a pseudo-Euclidean geometric fact analogous to one that concentric spheres in the flat Euclidean space are nevertheless curved. Spatial geometry of this model is an unbounded hyperbolic space. The entire universe is contained within a light cone, namely the future cone of the Big Bang. For any given moment t > 0 of coordinate time (assuming the Big Bang has t = 0), the entire universe is bounded by a sphere of radius exactly ct. The apparent paradox of an infinite universe contained within a sphere is explained with length contraction: the galaxies farther away, which are travelling away from the observer the fastest, will appear thinner.

This model is essentially a degenerate FLRW for Ω = 0. It is incompatible with observations that definitely rule out such a large negative spatial curvature. However, as a background in which gravitational fields (or gravitons) can operate, due to diffeomorphism invariance, the space on the macroscopic scale, is equivalent to any other (open) solution of Einstein's field equations.
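The length-contraction argument can be sketched numerically. This is a simplified illustration, assuming that in the Milne picture a galaxy at coordinate distance r at coordinate time t has been moving at constant speed v = r/t since the Big Bang; the function name is hypothetical.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def contraction_factor(r, t):
    """Length-contraction factor sqrt(1 - v^2/c^2) for a galaxy with v = r / t."""
    v = r / t
    if not 0.0 <= v < C:
        raise ValueError("the galaxy must lie strictly inside the sphere of radius c*t")
    return math.sqrt(1.0 - (v / C) ** 2)


t = 1.0  # any positive coordinate time, in seconds; only the ratio r / (c t) matters
for fraction in (0.0, 0.6, 0.9, 0.999):
    factor = contraction_factor(fraction * C * t, t)
    print(f"r = {fraction:5.3f} ct  ->  apparent thickness factor {factor:.4f}")
```

As r approaches ct the factor tends to zero, so ever more galaxies can be packed into the thin shell just inside the bounding sphere, which is how an unbounded hyperbolic space fits inside a sphere of finite radius.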
