An intelligent tutoring system (ITS) is a computer system that imitates human tutors and aims to provide immediate and customized instruction or feedback to learners, usually without requiring intervention from a human teacher. ITSs share the goal of enabling meaningful and effective learning through a variety of computing technologies. There are many examples of ITSs used in both formal education and professional settings, where they have demonstrated both their capabilities and their limitations. Intelligent tutoring is closely related to cognitive learning theories and design, and ongoing research aims to improve the effectiveness of ITSs. An ITS typically aims to replicate the demonstrated benefits of one-to-one, personalized tutoring in contexts where students would otherwise have access only to one-to-many instruction from a single teacher (e.g., classroom lectures) or to no teacher at all (e.g., online homework). ITSs are often designed with the goal of giving every student access to high-quality education.

History

Early mechanical systems

The possibility of intelligent machines has been discussed for centuries. In the 17th century, Blaise Pascal built one of the earliest mechanical calculators, known simply as Pascal's calculator. Later in the same century, the mathematician and philosopher Gottfried Wilhelm Leibniz envisioned machines capable of reasoning and of applying rules of logic to settle disputes. These early works inspired later developments.

The concept of intelligent machines for instructional use dates back as early as 1924, when Sidney Pressey of Ohio State University created a mechanical teaching machine to instruct students without a human teacher. His machine closely resembled a typewriter, with several keys and a window that presented questions to the learner. The Pressey Machine accepted user input and provided immediate feedback by recording the learner's score on a counter.

Pressey was influenced by Edward L. Thorndike, a learning theorist and educational psychologist at Teachers College, Columbia University, in the late 19th and early 20th centuries. Thorndike posited laws for maximizing learning, including the law of effect, the law of exercise, and the law of recency. By later standards, Pressey's teaching and testing machine would not be considered intelligent, as it was mechanically operated and presented one question and answer at a time, but it set an early precedent for future projects.

By the 1950s and 1960s, new perspectives on learning were emerging. Burrhus Frederic "B.F." Skinner at Harvard University disagreed with Thorndike's learning theory of connectionism and with Pressey's teaching machine. A behaviorist, Skinner believed that learners should construct their answers rather than rely on recognition. He, too, built a teaching machine, with an incremental mechanical system that rewarded students for correct responses to questions.

Early electronic systems

In the period following the Second World War, mechanical binary systems gave way to binary-based electronic machines. These machines were considered intelligent compared with their mechanical counterparts, as they had the capacity to make logical decisions. However, the study of defining and recognizing machine intelligence was still in its infancy.

Alan Turing, a mathematician, logician and computer scientist, linked computing systems to thinking. One of his most notable papers outlined a hypothetical test of machine intelligence that came to be known as the Turing test. In essence, a person poses questions to two other agents, a human and a computer; the computer passes the test if it responds in such a way that the questioner cannot tell the human and the computer apart. The essence of the Turing test has served for more than two decades as a model for ITS development. The central ideal of an ITS is effective communication. Programs displaying intelligent features were emerging as early as the 1950s. Following Turing's work, later projects by researchers such as Allen Newell, Clifford Shaw, and Herbert Simon showed programs capable of creating logical proofs and theorems. Their program, the Logic Theorist, exhibited complex symbol manipulation and even generated new information without direct human control; it is considered by some to be the first AI program. Such breakthroughs inspired the new field of artificial intelligence, officially named in 1956 by John McCarthy at the Dartmouth Conference, the first conference devoted to scientists and research in the field of AI.

The latter part of the 1960s and the 1970s saw many new computer-assisted instruction (CAI) projects that built on advances in computer science. The creation of the ALGOL programming language in 1958 enabled many schools and universities to begin developing CAI programs. Major computer vendors and federal agencies in the US, such as IBM, HP, and the National Science Foundation, funded the development of these projects. Early implementations in education focused on programmed instruction (PI), a structure based on a computerized input-output system. Although many supported this form of instruction, there was limited evidence of its effectiveness. The programming language LOGO was created in 1967 by Wally Feurzeig, Cynthia Solomon, and Seymour Papert as a language streamlined for education. PLATO, an educational terminal featuring displays, animations, and touch controls that could store and deliver large amounts of course material, was developed by Donald Bitzer at the University of Illinois in the early 1970s. Along with these, many other CAI projects were initiated in countries including the US, the UK, and Canada.

At the same time that CAI was gaining interest, Jaime Carbonell suggested that computers could act as a teacher rather than just a tool (Carbonell, 1970). A new perspective emerged that focused on using computers to coach students intelligently, called Intelligent Computer-Assisted Instruction or Intelligent Tutoring Systems (ITS). Whereas CAI took a behaviourist perspective on learning based on Skinner's theories (Dede & Swigger, 1988), ITS drew from work in cognitive psychology, computer science, and especially artificial intelligence. AI research shifted at this time from the logic focus of the previous decade to knowledge-based systems, which could make intelligent decisions based on prior knowledge (Buchanan, 2006). One such program was Dendral, developed at Stanford University by Edward Feigenbaum, Bruce Buchanan, Joshua Lederberg and colleagues, which predicted possible chemical structures from existing data. Further work began to showcase analogical reasoning and language processing. This new focus on knowledge had major implications for how computers could be used in instruction. The technical requirements of ITS, however, proved higher and more complex than those of CAI, and ITSs found only limited success at this time.

Towards the latter part of the 1970s interest in CAI technologies began to wane. Computers were still expensive and not as available as expected. Developers and instructors were reacting negatively to the high cost of developing CAI programs, the inadequate provision for instructor training, and the lack of resources.

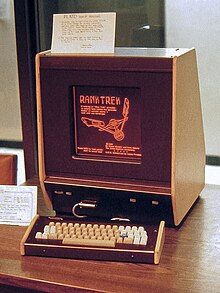

Microcomputers and intelligent systems

The microcomputer revolution of the late 1970s and early 1980s helped to revive CAI development and jump-start the development of ITSs. Personal computers such as the Apple II, Commodore PET, and TRS-80 reduced the resources required to own computers, and by 1981, 50% of US schools were using computers (Chambers & Sprecher, 1983). Several CAI projects, including the British Columbia Project and the California State University Project in 1981, used the Apple II to deliver CAI programs in high schools and universities.

The early 1980s also saw the goals of Intelligent Computer-Assisted Instruction (ICAI) and ITS diverge from their roots in CAI. As CAI became increasingly focused on deeper interactions with content created for a specific area of interest, ITS sought to create systems that focused on knowledge of the task and the ability to generalize that knowledge in non-specific ways (Larkin & Chabay, 1992). The key goals set out for ITS were to be able to teach a task as well as perform it, adapting dynamically to its situation. In the transition from CAI to ICAI systems, the computer would have to distinguish not only between correct and incorrect responses but also between types of incorrect response, in order to adjust the type of instruction. Research in artificial intelligence and cognitive psychology fueled the new principles of ITS. Psychologists considered how a computer could solve problems and perform 'intelligent' activities. An ITS program would have to be able to represent, store and retrieve knowledge, and even search its own database to derive new knowledge in response to learners' questions. In short, early specifications for ITS (or ICAI) required it to "diagnose errors and tailor remediation based on the diagnosis" (Shute & Psotka, 1994, p. 9). The idea of diagnosis and remediation remains central to ITS design today.
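The diagnose-then-remediate idea can be sketched with a small "bug library": each entry predicts the answer a student holding a particular misconception would give, and a wrong answer is classified by which prediction it matches. The fraction-addition misconceptions, the remediation messages and the `diagnose` function below are hypothetical illustrations, not taken from any system named in this article.

```python
from fractions import Fraction
from typing import Callable, Dict, Optional, Tuple

# Hypothetical bug library: each entry predicts the answer a student holding
# that misconception would give for the problem a/b + c/d.
BUG_LIBRARY: Dict[str, Callable[[int, int, int, int], Fraction]] = {
    "adds numerators and denominators": lambda a, b, c, d: Fraction(a + c, b + d),
    "adds numerators, keeps first denominator": lambda a, b, c, d: Fraction(a + c, b),
}

# Hypothetical remediation messages keyed by diagnosis.
REMEDIATION: Dict[str, str] = {
    "adds numerators and denominators": "Denominators are not added; find a common denominator first.",
    "adds numerators, keeps first denominator": "Rewrite both fractions over the same denominator first.",
    "unknown error": "Let's work through this step by step.",
}

def diagnose(a: int, b: int, c: int, d: int, answer: Fraction) -> Tuple[str, Optional[str]]:
    """Classify an answer to a/b + c/d as correct, a known bug, or unknown."""
    if answer == Fraction(a, b) + Fraction(c, d):
        return "correct", None
    for bug, predict in BUG_LIBRARY.items():
        if answer == predict(a, b, c, d):
            return bug, REMEDIATION[bug]  # the type of error selects the remediation
    return "unknown error", REMEDIATION["unknown error"]
```

For 1/2 + 1/3, an answer of 2/5 is diagnosed as adding numerators and denominators, while an unmatched answer such as 3/7 falls back to generic help.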

A key breakthrough in ITS research was the LISP Tutor, a program that implemented ITS principles in a practical way and showed promising gains in student performance. The LISP Tutor was developed and researched in 1983 as an ITS for teaching students the LISP programming language (Corbett & Anderson, 1992). It could identify mistakes and give students constructive feedback while they were performing an exercise. The system was found to decrease the time required to complete the exercises while improving student test scores (Corbett & Anderson, 1992). Other ITSs that began to appear around this time include TUTOR, created by Logica in 1984 as a general instructional tool, and PARNASSUS, created at Carnegie Mellon University in 1989 for language instruction.

Modern ITS

After the first ITSs were implemented, researchers created a number of ITSs for different groups of students. In the late 20th century, Intelligent Tutoring Tools (ITTs) were developed by the Byzantium project, which involved six universities. The ITTs were general-purpose tutoring system builders, and many institutions reported positive feedback while using them (Kinshuk, 1996). This builder would produce an Intelligent Tutoring Applet (ITA) for different subject areas. Different teachers created ITAs and built up a large inventory of knowledge that others could access through the Internet. Once an ITS was created, teachers could copy it and modify it for future use. This system was efficient and flexible. However, Kinshuk and Patel believed that the ITS was not designed from an educational point of view and was not developed based on the actual needs of students and teachers (Kinshuk and Patel, 1997). Recent work has employed ethnographic and design research methods to examine how ITSs are actually used by students and teachers across a range of contexts, often revealing unanticipated needs that these systems meet, fail to meet, or in some cases even create.

Modern-day ITSs typically try to replicate the role of a teacher or a teaching assistant, and increasingly automate pedagogical functions such as problem generation, problem selection, and feedback generation. However, given the current shift towards blended learning models, recent work on ITSs has begun to focus on how these systems can effectively leverage the complementary strengths of human-led instruction from a teacher or peer when used in co-located classrooms or other social contexts.

Three ITS projects functioned on the basis of conversational dialogue: AutoTutor, Atlas (Freedman, 1999), and Why2. The idea behind these projects was that, since students learn best by constructing knowledge themselves, the programs would begin with leading questions and give out answers only as a last resort. AutoTutor's students focused on answering questions about computer technology, Atlas's students on solving quantitative problems, and Why2's students on explaining physical systems qualitatively (Graesser, VanLehn, and others, 2001). Other tutoring systems, such as Andes (Gertner, Conati, and VanLehn, 1998), tend instead to provide hints and immediate feedback when students have trouble answering questions, so students could guess their way to correct answers without a deep understanding of the concepts. A study with a small group of students compared Atlas and Andes, and the results showed that students using Atlas made significant improvements compared with students who used Andes. However, because these systems must analyze students' dialogue, further improvement is needed before they can manage more complicated dialogues.
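The "leading questions first, answer as a last resort" strategy amounts to an escalating hint sequence. The minimal sketch below assumes a fixed list of prompts per question; the `HintLadder` class and its physics prompts are hypothetical and do not reflect how AutoTutor, Atlas or Why2 actually choose dialogue moves, which depends on analyzing the student's free-text replies.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class HintLadder:
    """Escalating help for one question: leading prompts first, answer last.

    Hypothetical illustration only: the prompts are fixed strings, whereas
    dialogue-based tutors pick their next move from the student's replies.
    """
    prompts: List[str]
    answer: str
    level: int = 0  # number of prompts already given

    def next_move(self) -> str:
        """Return the next leading prompt, or the answer once prompts run out."""
        if self.level < len(self.prompts):
            move = self.prompts[self.level]
            self.level += 1
            return move
        return "Answer: " + self.answer  # bottom-out hint, given only as a last resort
```

A tutor would call `next_move()` each time the student's reply is still incomplete, so the answer is revealed only after every leading question has been tried.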

Structure

Intelligent tutoring systems (ITSs) consist of four basic components based on a general consensus amongst researchers (Nwana, 1990; Freedman, 2000; Nkambou et al., 2010):

- The Domain model

- The Student model

- The Tutoring model, and

- The User interface model

The domain model (also known as the cognitive model or expert knowledge model) is built on a theory of learning, such as ACT-R, which tries to take into account all the possible steps required to solve a problem. More specifically, this model "contains the concepts, rules, and problem-solving strategies of the domain to be learned. It can fulfill several roles: as a source of expert knowledge, a standard for evaluating the student's performance or for detecting errors, etc." (Nkambou et al., 2010, p. 4). Another approach to developing domain models is based on Stellan Ohlsson's theory of learning from performance errors, known as constraint-based modelling (CBM). In this case, the domain model is represented as a set of constraints on correct solutions.
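In Ohlsson's formulation, a constraint pairs a relevance condition with a satisfaction condition: whenever the relevance condition holds for a solution, the satisfaction condition must hold too. The sketch below assumes a student's solution can be summarized as a flat dictionary; the two fraction-addition constraints, the dictionary keys and the `evaluate` function are hypothetical, and real constraint-based tutors such as SQL-Tutor use much richer solution representations.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Tuple

Solution = Dict[str, Any]  # hypothetical flat summary of a student's solution

@dataclass(frozen=True)
class Constraint:
    """If `relevance` holds for a solution, `satisfaction` must hold as well."""
    name: str
    relevance: Callable[[Solution], bool]
    satisfaction: Callable[[Solution], bool]
    feedback: str

# Hypothetical constraints for adding two fractions.
CONSTRAINTS: List[Constraint] = [
    Constraint(
        name="common-denominator",
        relevance=lambda s: s.get("operation") == "add",
        satisfaction=lambda s: len(set(s.get("denominators_used", []))) == 1,
        feedback="Rewrite both fractions over the same denominator before adding.",
    ),
    Constraint(
        name="keep-denominator",
        relevance=lambda s: s.get("operation") == "add" and "result_denominator" in s,
        satisfaction=lambda s: s["result_denominator"] in s.get("denominators_used", []),
        feedback="Keep the common denominator in the result; do not add denominators.",
    ),
]

def evaluate(solution: Solution, constraints: List[Constraint]) -> Tuple[List[str], List[str]]:
    """Split the relevant constraints into satisfied and violated ones."""
    satisfied: List[str] = []
    violated: List[str] = []
    for c in constraints:
        if not c.relevance(solution):
            continue  # an irrelevant constraint says nothing about this solution
        (satisfied if c.satisfaction(solution) else violated).append(c.name)
    return satisfied, violated
```

Irrelevant constraints are skipped rather than counted as satisfied, which is what lets a single constraint set cover many kinds of problems; the `feedback` strings of violated constraints are what a tutor would show.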

The student model can be thought of as an overlay on the domain model. It is considered the core component of an ITS, paying special attention to students' cognitive and affective states and their evolution as the learning process advances. As the student works step by step through the problem-solving process, an ITS engages in a process called model tracing: whenever the student's actions deviate from the domain model, the system identifies, or flags, that an error has occurred. In constraint-based tutors, on the other hand, the student model is represented as an overlay on the constraint set. Constraint-based tutors evaluate the student's solution against the constraint set and identify satisfied and violated constraints. If any constraints are violated, the student's solution is incorrect, and the ITS provides feedback on those constraints. Constraint-based tutors provide both negative feedback (i.e., feedback on errors) and positive feedback.
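Model tracing can be illustrated by running every applicable production rule on the current problem state and checking which prediction, if any, matches the student's next step. The sketch assumes linear equations of the form a*x + b = c encoded as tuples, and includes one "buggy" rule to show how a known misconception can be recognized rather than merely flagged; the rule set, `Rule` and `trace_step` are hypothetical.

```python
from fractions import Fraction
from typing import Callable, List, NamedTuple, Optional, Tuple

State = Tuple[Fraction, Fraction, Fraction]  # (a, b, c) encodes a*x + b = c

class Rule(NamedTuple):
    name: str
    buggy: bool                        # True for a known misconception
    applies: Callable[[State], bool]   # condition side of the production
    predict: Callable[[State], State]  # the step the rule would produce

# Hypothetical production rules for solving a*x + b = c.
RULES: List[Rule] = [
    Rule("subtract-constant", False,
         lambda s: s[1] != 0,
         lambda s: (s[0], Fraction(0), s[2] - s[1])),
    Rule("divide-by-coefficient", False,
         lambda s: s[1] == 0 and s[0] not in (0, 1),
         lambda s: (Fraction(1), Fraction(0), s[2] / s[0])),
    Rule("add-constant-instead", True,  # buggy: moves b across without changing its sign
         lambda s: s[1] != 0,
         lambda s: (s[0], Fraction(0), s[2] + s[1])),
]

def trace_step(state: State, student_next: State) -> Tuple[str, Optional[str]]:
    """Compare the student's step with the step predicted by each applicable rule."""
    for rule in RULES:
        if rule.applies(state) and rule.predict(state) == student_next:
            return ("bug" if rule.buggy else "ok"), rule.name
    return "error", None  # off the model: flag the step without a specific diagnosis
```

For 3x + 4 = 10, a student who writes 3x = 6 is traced to `subtract-constant`, one who writes 3x = 14 matches the buggy rule, and any other step is simply flagged as an error.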

The tutor model accepts information from the domain and student models and makes choices about tutoring strategies and actions. At any point in the problem-solving process, the learner may request guidance on what to do next, relative to their current location in the model. In addition, the system recognizes when the learner has deviated from the production rules of the model and provides timely feedback, resulting in a shorter time to reach proficiency with the targeted skills. The tutor model may contain several hundred production rules, each of which can be said to exist in one of two states, learned or unlearned. Every time a student successfully applies a rule to a problem, the system updates its estimate of the probability that the student has learned the rule. The system continues to drill students on exercises that require effective application of a rule until the probability that the rule has been learned reaches at least 95%.
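The probability update described above is commonly implemented with Bayesian Knowledge Tracing (BKT), which treats each rule as learned or unlearned and uses four parameters: the initial probability of knowing the rule, a learning (transition) probability, a guess probability and a slip probability. The sketch below uses made-up parameter values (a real tutor fits them per rule from student data), and it is not necessarily the exact model used by any particular tutor. Note that BKT also updates the estimate after errors, not only after successes.

```python
from dataclasses import dataclass

@dataclass
class SkillEstimate:
    """Bayesian Knowledge Tracing estimate for one production rule.

    Parameter values are illustrative assumptions, not fitted values.
    """
    p_known: float = 0.3    # prior P(L0) that the rule is already learned
    p_transit: float = 0.1  # P(T): chance of learning the rule at each opportunity
    p_guess: float = 0.2    # P(G): correct answer although the rule is unlearned
    p_slip: float = 0.1     # P(S): wrong answer although the rule is learned

    def update(self, correct: bool) -> float:
        """Condition on one observed attempt, then apply the learning transition."""
        k, g, s = self.p_known, self.p_guess, self.p_slip
        if correct:
            posterior = k * (1 - s) / (k * (1 - s) + (1 - k) * g)
        else:
            posterior = k * s / (k * s + (1 - k) * (1 - g))
        self.p_known = posterior + (1 - posterior) * self.p_transit
        return self.p_known

    def mastered(self, threshold: float = 0.95) -> bool:
        """True once the estimate reaches the mastery threshold."""
        return self.p_known >= threshold
```

With these illustrative parameters, three consecutive correct applications raise the estimate from 0.30 to about 0.69, 0.92 and then 0.98, crossing the 0.95 threshold on the third attempt, at which point the tutor would stop drilling that rule.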

Knowledge tracing tracks the learner's progress from problem to problem and builds a profile of strengths and weaknesses relative to the production rules. The cognitive tutoring system developed by John Anderson at Carnegie Mellon University presents information from knowledge tracing as a skillometer, a visual graph of the learner's success in each of the monitored skills related to solving algebra problems. When a learner requests a hint, or an error is flagged, the knowledge tracing data and the skillometer are updated in real-time.
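A skillometer is essentially a per-skill view of the knowledge-tracing estimates. The text-only rendering below is a hypothetical stand-in for the graphical display; the `skillometer` function, the skill names, the bar width and the 0.95 mastery marker are assumptions, the last chosen to match the mastery threshold described for the tutor model.

```python
from typing import Dict

def skillometer(estimates: Dict[str, float], width: int = 20, threshold: float = 0.95) -> str:
    """Render per-skill mastery probabilities as text bars (stand-in for a GUI)."""
    lines = []
    for skill, p in estimates.items():
        filled = round(p * width)                    # bar length proportional to P(learned)
        bar = "#" * filled + "-" * (width - filled)
        mark = " mastered" if p >= threshold else ""
        lines.append(f"{skill:<24}[{bar}] {p:4.2f}{mark}")
    return "\n".join(lines)
```

Re-rendering after every hint request or flagged error gives the real-time behaviour described above.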

The user interface component "integrates three types of information that are needed in carrying out a dialogue: knowledge about patterns of interpretation (to understand a speaker) and action (to generate utterances) within dialogues; domain knowledge needed for communicating content; and knowledge needed for communicating intent" (Padayachee, 2002, p. 3).

Nkambou et al. (2010) cite Nwana's (1990) review of different architectures, which underlines a strong link between architecture and paradigm (or philosophy). Nwana (1990) declares, "[I]t is almost a rarity to find two ITSs based on the same architecture [which] results from the experimental nature of the work in the area" (p. 258). He further explains that differing tutoring philosophies emphasize different components of the learning process (i.e., domain, student or tutor). The architectural design of an ITS reflects this emphasis, and this leads to a variety of architectures, none of which, individually, can support all tutoring strategies (Nwana, 1990, as cited in Nkambou et al., 2010). Moreover, ITS projects may vary according to the relative level of intelligence of their components. For example, a project highlighting intelligence in the domain model may generate solutions to complex and novel problems so that students always have new problems to work on, but it might have only simple methods for teaching those problems, while a system that concentrates on multiple or novel ways of teaching a particular topic might find a less sophisticated representation of that content sufficient.

Design and development methods

Apart from the differences among ITS architectures, each emphasizing different elements, the development of an ITS is much the same as any instructional design process. Corbett et al. (1997) summarized ITS design and development as consisting of four iterative stages: (1) needs assessment, (2) cognitive task analysis, (3) initial tutor implementation and (4) evaluation.

The first stage, needs assessment, is common to any instructional design process, especially software development. It involves a learner analysis and consultation with subject-matter experts and/or the instructor(s). This first step is part of the development of the expert/knowledge and student domains. The goal is to specify learning goals and to outline a general plan for the curriculum. It is imperative not merely to computerize traditional concepts but to develop a new curriculum structure by defining the task in general and understanding learners' possible behaviours in dealing with the task and, to a lesser degree, the tutor's behaviour. In doing so, three crucial dimensions need to be addressed: (1) the probability that a student is able to solve problems; (2) the time it takes to reach this performance level; and (3) the probability that the student will actively use this knowledge in the future. Another important aspect that requires analysis is the cost-effectiveness of the interface. Moreover, teacher and student entry characteristics, such as prior knowledge, must be assessed, since both groups are going to be system users.

The second stage, cognitive task analysis, is a detailed approach to expert systems programming with the goal of developing a valid computational model of the required problem-solving knowledge. Chief methods for developing a domain model include: (1) interviewing domain experts, (2) conducting "think aloud" protocol studies with domain experts, (3) conducting "think aloud" studies with novices, and (4) observing teaching and learning behavior. Although the first method is the most commonly used, experts are usually unable to report the cognitive components of their skill. The "think aloud" methods, in which experts are asked to report aloud what they are thinking while solving typical problems, can avoid this problem. Observation of actual online interactions between tutors and students provides information about the processes used in problem solving, which is useful for building dialogue or interactivity into tutoring systems.

The third stage, initial tutor implementation, involves setting up a problem-solving environment to enable and support an authentic learning process. This stage is followed by the final stage, a series of evaluation activities, which again resembles any software development project.

The fourth stage, evaluation, includes (1) pilot studies to confirm basic usability and educational impact; (2) formative evaluations of the system under development, including (3) parametric studies that examine the effectiveness of system features; and finally (4) summative evaluations of the final tutor's effect on learning rate and asymptotic achievement levels.

A variety of authoring tools have been developed to support this process and create intelligent tutors, including ASPIRE, the Cognitive Tutor Authoring Tools (CTAT), GIFT, ASSISTments Builder and AutoTutor tools. The goal of most of these authoring tools is to simplify the tutor development process, making it possible for people with less expertise than professional AI programmers to develop Intelligent Tutoring Systems.

Eight principles of ITS design and development

Anderson et al. (1987) outlined eight principles for intelligent tutor design, and Corbett et al. (1997) later elaborated on those principles, highlighting an all-embracing principle that they believed governed intelligent tutor design. They referred to this principle as:

Principle 0: An intelligent tutor system should enable the student to work to the successful conclusion of problem solving.

- Represent student competence as a production set.

- Communicate the goal structure underlying the problem solving.

- Provide instruction in the problem solving context.

- Promote an abstract understanding of the problem-solving knowledge.

- Minimize working memory load.

- Provide immediate feedback on errors.

- Adjust the grain size of instruction with learning.

- Facilitate successive approximations to the target skill.

Use in practice

All this is a substantial amount of work, even though authoring tools have become available to ease the task. This means that building an ITS is an option only in situations in which, despite its relatively high development costs, it still reduces overall costs by reducing the need for human instructors or by sufficiently boosting overall productivity. Such situations occur when large groups need to be tutored simultaneously or many replicated tutoring efforts are needed. Cases in point are technical training situations, such as the training of military recruits, and high school mathematics. One specific type of intelligent tutoring system, the Cognitive Tutor, has been incorporated into mathematics curricula in a substantial number of United States high schools, producing improved student learning outcomes on final exams and standardized tests. Intelligent tutoring systems have been constructed to help students learn geography, circuits, medical diagnosis, computer programming, mathematics, physics, genetics, chemistry, and other subjects. Intelligent Language Tutoring Systems (ILTSs) teach natural language to first or second language learners. ILTSs require specialized natural language processing tools, such as large dictionaries and morphological and grammatical analyzers with acceptable coverage.

Applications

During the rapid expansion of the web, new computer-aided instruction paradigms, such as e-learning and distributed learning, provided an excellent platform for ITS ideas. Fields connected with ITS include natural language processing, machine learning, planning, multi-agent systems, ontologies, the Semantic Web, and social and emotional computing. In addition, other technologies such as multimedia, object-oriented systems, modeling, simulation, and statistics have also been connected to or combined with ITS. Historically non-technological areas such as the educational sciences and psychology have also been influenced by the success of ITS.

In recent years, ITS has begun to move beyond research settings into a range of practical applications. ITSs have expanded across many critical and complex cognitive domains, with far-reaching results. They have cemented a place within formal education and have also found homes in corporate training and organizational learning. ITSs offer learners several affordances, such as individualized learning, just-in-time feedback, and flexibility in time and space.

While intelligent tutoring systems evolved from research in cognitive psychology and artificial intelligence, there are now many applications in education and in organizations. Intelligent tutoring systems can be found in online environments or in a traditional classroom computer lab, and are used in K-12 classrooms as well as in universities. A number of programs target mathematics, but applications can also be found in health sciences, language acquisition, and other areas of formalized learning.

Reports of improvements in student comprehension, engagement, attitude, motivation, and academic results have all contributed to ongoing investment in and research on these systems. The personalized nature of intelligent tutoring systems affords educators the opportunity to create individualized programs. Education has a plethora of intelligent tutoring systems; no exhaustive list exists, but several of the more influential programs are listed below.

Education

As of May 2024, AI tutors make up five of the top 20 education apps in Apple's App Store, and two of the leaders are from Chinese developers.

- Algebra Tutor

- PAT (PUMP Algebra Tutor or Practical Algebra Tutor), developed by the Pittsburgh Advanced Cognitive Tutor Center at Carnegie Mellon University, engages students in anchored learning problems and uses modern algebraic tools to engage students in problem solving and in sharing their results. The aim of PAT is to tap into students' prior knowledge and everyday experiences with mathematics to promote growth. The success of PAT is well documented (e.g., by the Miami-Dade County Public Schools Office of Evaluation and Research) from both a statistical (student results) and an emotional (student and instructor feedback) perspective.

- SQL-Tutor

- SQL-Tutor is the first constraint-based tutor, developed by the Intelligent Computer Tutoring Group (ICTG) at the University of Canterbury, New Zealand. SQL-Tutor teaches students how to retrieve data from databases using the SQL SELECT statement.

- EER-Tutor

- EER-Tutor is a constraint-based tutor (developed by ICTG) that teaches conceptual database design using the Entity Relationship model. An earlier version of EER-Tutor was KERMIT, a stand-alone tutor for ER modelling, which produced a significant improvement in students' knowledge after one hour of learning (with an effect size of 0.6).

- COLLECT-UML

- COLLECT-UML is a constraint-based tutor that supports pairs of students working collaboratively on UML class diagrams. The tutor provides feedback on the domain level as well as on collaboration.

- StoichTutor

- StoichTutor is a web-based intelligent tutor that helps high school students learn chemistry, specifically the sub-area of chemistry known as stoichiometry. It has been used to explore a variety of learning science principles and techniques, such as worked examples and politeness.

- Mathematics Tutor

- The Mathematics Tutor (Beal, Beck & Woolf, 1998) helps students solve word problems using fractions, decimals and percentages. The tutor records the student's success rate while they work on problems and provides subsequent, level-appropriate problems. The subsequent problems are selected based on the student's ability, and a desirable time in which the student should solve each problem is estimated.

- eTeacher

- eTeacher (Schiaffino et al., 2008) is an intelligent agent, or pedagogical agent, that provides personalized e-learning assistance. It builds student profiles by observing student performance in online courses. eTeacher then uses information about the student's performance to suggest personalized courses of action designed to assist the learning process.

- ZOSMAT

- ZOSMAT was designed to address all the needs of a real classroom. It follows and guides a student through different stages of the learning process. This student-centered ITS does so by recording the student's learning progress and changing the student's program based on the student's effort. ZOSMAT can be used either for individual learning or in a real classroom environment alongside the guidance of a human tutor.

- REALP

- REALP was designed to help students enhance their reading comprehension by providing reader-specific lexical practice and personalized practice with useful, authentic reading materials gathered from the Web. The system automatically builds a user model according to the student's performance. After reading, the student is given a series of exercises based on the target vocabulary found in the reading.

- CIRCSIM-Tutor

- CIRCSIM-Tutor is an intelligent tutoring system used with first-year medical students at the Illinois Institute of Technology. It uses natural-language, Socratic dialogue to help students learn about the regulation of blood pressure.

- Why2-Atlas

- Why2-Atlas is an ITS that analyses students' explanations of physics principles. Students input their work in paragraph form, and the program converts their words into a proof by making assumptions about student beliefs based on their explanations. In doing this, it highlights misconceptions and incomplete explanations. The system then addresses these issues through a dialogue with the student and asks the student to correct the essay. A number of iterations may take place before the process is complete.

- SmartTutor

- The University of Hong Kong (HKU) developed SmartTutor to support the needs of continuing education students. Personalized learning was identified as a key need within adult education at HKU, and SmartTutor aims to fill that need. SmartTutor supports students by combining Internet technology, educational research and artificial intelligence.

- AutoTutor

- AutoTutor assists college students in learning about computer hardware, operating systems and the Internet in an introductory computer literacy course by simulating the discourse patterns and pedagogical strategies of a human tutor. AutoTutor attempts to understand learners' keyboard input and then formulates dialogue moves with feedback, prompts, corrections and hints.

- ActiveMath

- ActiveMath is a web-based, adaptive learning environment for mathematics. This system strives for improving long-distance learning, for complementing traditional classroom teaching, and for supporting individual and lifelong learning.

- ESC101-ITS

- The Indian Institute of Technology, Kanpur, India developed the ESC101-ITS, an intelligent tutoring system for introductory programming problems.

- AdaptErrEx

- AdaptErrEx is an adaptive intelligent tutor that uses interactive erroneous examples to help students learn decimal arithmetic.
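Effect sizes such as the 0.6 reported above for KERMIT are standardized differences between group means. The source does not say which variant was computed; a common one is Cohen's d with a pooled standard deviation, sketched below. The `cohens_d` function is illustrative, and any scores used to exercise it are invented and do not come from any study.

```python
from statistics import mean, stdev
from typing import Sequence

def cohens_d(treatment: Sequence[float], control: Sequence[float]) -> float:
    """Standardized mean difference (Cohen's d) with a pooled standard deviation."""
    n1, n2 = len(treatment), len(control)
    s1, s2 = stdev(treatment), stdev(control)  # sample SDs (n - 1 denominator)
    pooled = (((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / (n1 + n2 - 2)) ** 0.5
    return (mean(treatment) - mean(control)) / pooled
```

On this definition, an effect size of 0.6 means the group means differ by about six-tenths of a pooled standard deviation.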

Corporate training and industry

Generalized Intelligent Framework for Tutoring (GIFT) is educational software designed for creating computer-based tutoring systems. Developed by the U.S. Army Research Laboratory from 2009 to 2011, GIFT was released for commercial use in May 2012. GIFT is open-source, domain-independent, and free to download. It lets an instructor design a tutoring program covering various disciplines by adapting existing courses, and it includes coursework tools intended for researchers, instructional designers, instructors, and students. GIFT is compatible with other teaching materials, such as PowerPoint presentations, which can be integrated into the program.

SHERLOCK is used to train Air Force technicians to diagnose problems in the electrical systems of F-15 jets. The ITS creates faulty schematic diagrams of systems for the trainee to locate and diagnose. It provides diagnostic readings that let the trainee decide whether the fault lies in the circuit being tested or elsewhere in the system. The system provides feedback and guidance, and help is available on request.

The Cardiac Tutor aims to teach advanced cardiac life support techniques to medical personnel. The tutor presents cardiac problems, and at each step students must select appropriate interventions. Cardiac Tutor provides clues, verbal advice, and feedback to personalize and optimize learning. Each simulation, whether or not the student successfully treats the patient, ends with a detailed report that the student then reviews.

CODES (Cooperative Music Prototype Design) is a web-based environment for cooperative music prototyping. It was designed to help users, especially those who are not music specialists, create musical pieces in a prototyping manner: the musical examples (prototypes) can be repeatedly tested, played, and modified. A central aspect of CODES is interaction and cooperation between music creators and their partners.

Effectiveness

Assessing the effectiveness of ITS programs is problematic. ITSs vary greatly in design, implementation, and educational focus. When ITSs are used in a classroom, the system is used not only by students but also by teachers. This can create barriers to effective evaluation for a number of reasons, most notably teacher intervention in student learning.

Teachers can often enter new problems into the system or adjust the curriculum. In addition, teachers and peers often interact with students while they learn with ITSs (e.g., during an individual computer lab session or during classroom lectures between lab sessions) in ways that may influence their learning with the software. Prior work suggests that the vast majority of students' help-seeking in classrooms using ITSs may occur entirely outside the software, meaning that the nature and quality of peer and teacher feedback in a given class may be an important mediator of student learning in these contexts. Aspects of classroom climate, such as students' overall comfort with publicly asking for help or how actively a teacher moves around to monitor individual students, may introduce further sources of variation across evaluation contexts. All of these variables make evaluating an ITS complex and may help explain the variation in results across evaluation studies.

Despite these complexities, numerous studies have attempted to measure the overall effectiveness of ITSs, often by comparing them with human tutors. Reviews of early ITSs (1995) reported an effect size of d = 1.0 relative to no tutoring, whereas human tutors were credited with an effect size of d = 2.0. Kurt VanLehn's much more recent overview (2011) of modern ITSs found no statistically significant difference in effect size between expert one-on-one human tutors and step-based ITSs. Some individual ITSs have been evaluated more positively than others. Studies of the Algebra Cognitive Tutor found that students using the ITS outperformed students taught by a classroom teacher on standardized test problems and real-world problem-solving tasks. Subsequent studies found that these results were particularly pronounced for students from special education, non-native English, and low-income backgrounds.

A more recent meta-analysis suggests that ITSs can exceed the effectiveness of both computer-assisted instruction (CAI) and human tutors, especially when outcomes are measured with local (program-specific) tests rather than standardized tests. In the authors' words (ES denotes effect size): "Students who received intelligent tutoring outperformed students from conventional classes in 46 (or 92%) of the 50 controlled evaluations, and the improvement in performance was great enough to be considered of substantive importance in 39 (or 78%) of the 50 studies. The median ES in the 50 studies was 0.66, which is considered a moderate-to-large effect for studies in the social sciences. It is roughly equivalent to an improvement in test performance from the 50th to the 75th percentile. This is stronger than typical effects from other forms of tutoring. C.-L. C. Kulik and Kulik's (1991) meta-analysis, for example, found an average ES of 0.31 in 165 studies of CAI tutoring. ITS gains are about twice as high. The ITS effect is also greater than typical effects from human tutoring. As we have seen, programs of human tutoring typically raise student test scores about 0.4 standard deviations over control levels. Developers of ITSs long ago set out to improve on the success of CAI tutoring and to match the success of human tutoring. Our results suggest that ITS developers have already met both of these goals.... Although effects were moderate to strong in evaluations that measured outcomes on locally developed tests, they were much smaller in evaluations that measured outcomes on standardized tests. Average ES on studies with local tests was 0.73; average ES on studies with standardized tests was 0.13. This discrepancy is not unusual for meta-analyses that include both local and standardized tests... local tests are likely to align well with the objectives of specific instructional programs. Off-the-shelf standardized tests provide a looser fit. ... 
Our own belief is that both local and standardized tests provide important information about instructional effectiveness, and when possible, both types of tests should be included in evaluation studies."
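The quote's translation of an effect size of 0.66 into a move from the 50th to the 75th percentile follows from a standard conversion (Cohen's U3): assuming test scores are normally distributed with the same spread in the tutored and control groups, the average tutored student lands at percentile 100·Φ(d) of the control distribution, where Φ is the standard normal cumulative distribution function. The short Python sketch below applies that conversion to the effect sizes mentioned in this section. The normality assumption and the helper name `effect_size_to_percentile` are illustrative choices, not taken from the cited studies.

```python
from statistics import NormalDist  # standard library, Python 3.8+


def effect_size_to_percentile(d: float) -> float:
    """Percentile of the control group reached by the average treated student.

    Illustrative assumption: scores are normal with equal spread in both
    groups (Cohen's U3), so the answer is the standard normal CDF at d.
    """
    return 100 * NormalDist().cdf(d)


# Effect sizes (Cohen's d) reported in this section.
reported = [
    ("early ITSs, 1995 reviews", 1.00),
    ("human tutors, 1995 reviews", 2.00),
    ("CAI tutoring, Kulik and Kulik 1991", 0.31),
    ("typical human tutoring programs", 0.40),
    ("ITS median, 50 studies", 0.66),
    ("ITS, local tests", 0.73),
    ("ITS, standardized tests", 0.13),
]

for label, d in reported:
    print(f"{label}: d = {d:.2f} -> percentile {effect_size_to_percentile(d):.0f}")
```

Under this assumption the median of 0.66 maps to roughly the 75th percentile, matching the quote, while the standardized-test average of 0.13 corresponds to only about the 55th percentile, which illustrates why the local-versus-standardized gap matters.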

Recognized strengths of ITSs include immediate yes/no feedback, individualized task selection, on-demand hints, and support for mastery learning.

Limitations

Intelligent tutoring systems are expensive both to develop and to implement. The research phase paves the way for commercially viable systems, but it is often expensive: it requires the input of subject matter experts and the cooperation and support of individuals across organizations and organizational levels. Another limitation in the development phase is conceptualizing and developing the software within budget and time constraints. Other factors limit the adoption of intelligent tutors in the real world, including the long development timeframe and the high cost of building the system components. Much of that cost comes from building the content component. For instance, surveys revealed that encoding one hour of online instruction required 300 hours of development time for tutoring content. Similarly, building the Cognitive Tutor required at least 200 hours of development time per hour of instruction (a ratio of at least 200:1). This high cost often makes it impractical to replicate such efforts for real-world applications, and intelligent tutoring systems are not, in general, commercially feasible for real-world use.

One criticism of intelligent tutoring systems currently in use concerns the pedagogy of immediate feedback and hint sequences that are built in to make the system "intelligent". This pedagogy is criticized for failing to develop deep learning in students. When students control their access to hints, the effect on learning can be negative. Some students turn to the hints immediately, before attempting to solve the problem or complete the task. When the system allows it, some students bottom out the hints (requesting as many hints as possible, as fast as possible) in order to finish the task sooner. If students fail to reflect on the tutoring system's feedback or hints and instead keep guessing until they receive positive feedback, they are, in effect, learning to do the right thing for the wrong reasons. Most tutoring systems are currently unable to detect shallow learning or to distinguish productive from unproductive struggle. For these and many other reasons (e.g., overfitting of underlying models to particular user populations), the effectiveness of these systems may differ significantly across users.

Another criticism of intelligent tutoring systems is that they fail to ask students to explain their actions. If students do not learn the language of the domain, it becomes more difficult for them to gain a deeper understanding, to work collaboratively in groups, and to transfer that language to writing. For example, it is argued that students who are not "talking science" are not being immersed in the culture of science, which makes it difficult for them to undertake scientific writing or participate in collaborative team efforts. Intelligent tutoring systems have also been criticized for being too "instructivist" and for removing intrinsic motivation, social learning contexts, and contextual realism from learning.

Practical concerns, such as the willingness of sponsors, authorities, and users to adopt intelligent tutoring systems, should also be taken into account. First, someone must be willing to implement the ITS. Next, an authority must recognize the need to integrate intelligent tutoring software into the current curriculum. Finally, the sponsor or authority must provide the necessary support through each stage of development until the system is completed and implemented.

Evaluation is an important phase in building an intelligent tutoring system, but it is often difficult, costly, and time-consuming. Although the literature presents various evaluation techniques, there are no guiding principles for selecting the appropriate evaluation method(s) for a particular context. Careful inspection is needed to ensure that a complex system does what it claims to do. This assessment may occur during design and early development to identify problems and guide modifications (i.e. formative evaluation). Alternatively, evaluation may occur after the system is complete, to support formal claims about its construction, its behaviour, or the outcomes associated with it (i.e. summative evaluation). The lack of evaluation standards has led several existing ITSs to neglect the evaluation stage altogether.

Improvements

Intelligent tutoring systems are less capable than human tutors in the areas of dialogue and feedback. For example, human tutors are able to interpret the affective state of the student, and potentially adapt instruction in response to these perceptions. Recent work is exploring potential strategies for overcoming these limitations of ITSs, to make them more effective.

Dialogue

Human tutors can understand a person's tone and inflection in conversation and use these cues to provide continual feedback throughout an ongoing dialogue. Intelligent tutoring systems are now being developed to simulate natural conversations. Reproducing the full experience of dialogue requires a computer to be programmed in many areas, including understanding tone, inflection, body language, and facial expression, and responding to them. Dialogue in an ITS can be used to ask specific questions that guide students and elicit information while still allowing students to construct their own knowledge. Developing more sophisticated dialogue has been a focus of some current ITS research, partly to address these limitations and to create a more constructivist approach to ITSs. Other current research has focused on modeling the nature and effects of the social cues that human tutors and tutees commonly use in dialogue to build trust and rapport, which have been shown to have positive effects on student learning.

Emotional affect

A growing body of work considers the role of affect in learning, with the objective of developing intelligent tutoring systems that can interpret and adapt to learners' different emotional states. Humans do not use only cognitive processes when learning; the affective processes they go through also play an important role. For example, learners learn better when they experience a certain level of disequilibrium (frustration), but not so much that they feel completely overwhelmed. This has motivated researchers in affective computing to develop and study intelligent tutoring systems that can interpret an individual's affective processes. Such an ITS can read an individual's expressions and other signs of affect in an attempt to find, and tutor toward, the optimal affective state for learning. This is complicated by the fact that affect is expressed in multiple ways rather than one, so an ITS that interprets affective states effectively may require a multimodal approach (tone, facial expression, etc.). These ideas have created a new field within ITS research, that of Affective Tutoring Systems (ATS). One example of an ITS that addresses affect is Gaze Tutor, which was developed to track students' eye movements, determine whether they are bored or distracted, and then attempt to re-engage them.

Rapport Building

To date, most ITSs have focused purely on the cognitive aspects of tutoring rather than on the social relationship between the tutoring system and the student. As demonstrated by the "computers are social actors" paradigm, humans often project social heuristics onto computers. For example, in observations of young children interacting with Sam the CastleMate, a collaborative storytelling agent, children interacted with this simulated child in much the same way as they would with a human child. It has been suggested that an ITS designed to build rapport with students should mimic strategies of instructional immediacy, that is, behaviors such as smiling and addressing students by name that bridge the apparent social distance between students and teachers. With regard to teenagers, Ogan et al. draw on observations of close friends tutoring each other to argue that an ITS seeking to build rapport with a student as a peer likely needs a more involved process of trust building. This may ultimately require the tutoring system to respond effectively to, and even produce, seemingly rude behavior, using playful joking and taunting to mediate students' motivational and affective states.

Teachable Agents

Traditionally, ITSs take on the role of autonomous tutors; however, they can also take on the role of tutees in learning-by-teaching exercises. Evidence suggests that learning by teaching can be an effective strategy for prompting self-explanation, improving feelings of self-efficacy, and boosting educational outcomes and retention. To replicate this effect, the roles of the student and the ITS can be switched. One way to achieve this is to design the ITS so that it appears to be taught, as in the Teachable Agent Arithmetic Game and Betty's Brain. Another approach is to have students teach a machine learning agent that learns to solve problems from demonstrations and correctness feedback, as in the APLUS system built with SimStudent. To replicate the educational effects of learning by teaching, teachable agents generally have a social agent built on top of them that poses questions or conveys confusion. For example, Betty from Betty's Brain prompts the student to ask her questions to make sure she understands the material, and Stacy from APLUS prompts the student to explain the feedback they have provided.