Good ideas start with a question. Great ideas start with a question that comes back to you. One such question that has haunted scientists and philosophers for thousands of years is whether there is a smallest unit of length, a shortest distance below which we cannot resolve structures. Can we forever look closer and ever closer into space, time, and matter? Or is there a fundamental limit, and if so, what is it, and what is it that dictates its nature?

I picture our foreign ancestors sitting in their cave watching the world in amazement, wondering what the stones, the trees and they themselves are made of, and then starving to death. Luckily, those smart enough to hunt down the occasional bear eventually gave rise to a human civilization that was sheltered enough from the harshness of life to let the survivors get back to watching and wondering what we are made of. Science and philosophy in earnest are only a few thousand years old, but the question of whether there is a smallest unit has been a driving force in our studies of the natural world for all of recorded history.

The Ancient Greeks invented atomism: the idea that there is an ultimate and smallest element of matter that everything is made of dates back to Democritus of Abdera. Zeno’s famous paradoxes sought to shed light on the possibility of infinite divisibility. The question returned in the modern age with the advent of quantum mechanics, with Heisenberg’s uncertainty principle fundamentally limiting the precision to which we can measure. It became only more pressing with the divergences inherent to quantum field theory, which arise from the necessary inclusion of infinitely short distances.

It was in fact Heisenberg who first suggested that the divergences in quantum field theory might be cured by the existence of a fundamentally minimal length, and he introduced it by making position operators non-commuting among themselves. Just as the non-commutativity of momentum and position operators leads to an uncertainty principle, the non-commutativity of position operators limits how well distances can be measured.
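The analogy can be written out with the Robertson uncertainty relation, $\Delta A\,\Delta B \ge \tfrac{1}{2}\lvert\langle[\hat A,\hat B]\rangle\rvert$. As a sketch, assume the simplest possible form of non-commuting positions, a constant commutator with a hypothetical antisymmetric parameter $\theta_{ij}$ of dimension length squared (the text does not specify Heisenberg’s exact choice):

```latex
[\hat x, \hat p] = i\hbar
\;\Longrightarrow\;
\Delta x\,\Delta p \ge \frac{\hbar}{2},
\qquad\qquad
[\hat x_i, \hat x_j] = i\,\theta_{ij}
\;\Longrightarrow\;
\Delta x_i\,\Delta x_j \ge \frac{\lvert\theta_{ij}\rvert}{2}.
```

Under this assumption the two spreads $\Delta x_i$ and $\Delta x_j$ can never both be made smaller than $\sqrt{\lvert\theta_{ij}\rvert/2}$, which is the sense in which distances cannot be resolved arbitrarily well.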

Heisenberg’s main worry, which the minimal length was supposed to deal with, was the non-renormalizability of Fermi’s theory of beta decay. This theory, however, turned out to be only an approximation to the renormalizable electroweak interaction, so he had to worry no more.

Heisenberg’s idea was forgotten for some decades, then picked up again and eventually grew into the area of non-commutative geometries. Meanwhile, the problem of quantizing gravity appeared on stage and with it, again, non-renormalizability.

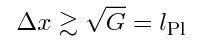

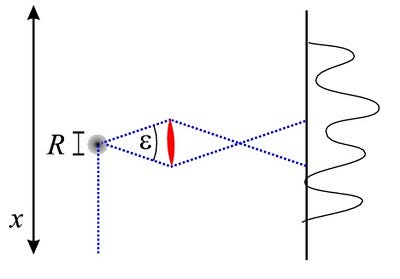

In the mid-1960s, Alden Mead reinvestigated Heisenberg’s microscope, the argument that led to the uncertainty principle, with (non-quantized) gravity taken into account. He showed that gravity amplifies the uncertainty inherent to position so that it becomes impossible to measure distances below the Planck length: about 10⁻³³ cm. Mead’s argument was forgotten, then rediscovered in the 1990s by string theorists who had noticed that using strings to prevent divergences (by avoiding point-interactions) also implies a finite resolution, if in a technically somewhat different way than Mead’s.
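The number quoted above follows from the Planck length $l_P = \sqrt{\hbar G / c^3}$. The sketch below computes it and also minimizes a deliberately simplified, Mead-style estimate that we assume here for illustration (it is not Mead’s exact formula): the usual quantum term $\hbar/\Delta p$ plus a gravitational term $G\,\Delta p / c^3$ that grows with the momentum of the probing photon. The function names are hypothetical.

```python
import math

# CODATA 2018 values in SI units
HBAR = 1.054571817e-34  # reduced Planck constant, J s
G = 6.67430e-11         # Newton's constant, m^3 kg^-1 s^-2
C = 2.99792458e8        # speed of light, m/s


def planck_length_m() -> float:
    """Planck length l_P = sqrt(hbar * G / c^3), in metres."""
    return math.sqrt(HBAR * G / C**3)


def heuristic_position_uncertainty(dp: float) -> float:
    """Toy Mead-style estimate (an assumption, not Mead's derivation):
    quantum term hbar/dp plus gravitational term G*dp/c^3."""
    return HBAR / dp + G * dp / C**3


def minimal_position_uncertainty() -> tuple[float, float]:
    """Setting the derivative -hbar/dp^2 + G/c^3 to zero gives
    dp* = sqrt(hbar * c^3 / G); the minimum there equals 2 * l_P."""
    dp_star = math.sqrt(HBAR * C**3 / G)
    return dp_star, heuristic_position_uncertainty(dp_star)


if __name__ == "__main__":
    print(f"Planck length: {planck_length_m() * 100.0:.3e} cm")
    dp_star, dx_min = minimal_position_uncertainty()
    print(f"minimum of toy estimate: {dx_min * 100.0:.3e} cm at dp = {dp_star:.3e} kg m/s")
```

The Planck length comes out at roughly 1.6 × 10⁻³³ cm. In the toy estimate, probing with more momentum first sharpens and then blurs the position, so the best achievable resolution sits at the Planck scale (here, twice the Planck length).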

Since then, the idea that the Planck length may be a fundamental length beyond which there is nothing new to find, ever, has appeared in other approaches towards quantum gravity, such as Loop Quantum Gravity and Asymptotically Safe Gravity. It has also been studied as an effective theory by modifying quantum field theory to include a minimal length from scratch, and often goes by the name “generalized uncertainty”.
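To see how a modified uncertainty relation produces a minimal length, take one commonly studied form of the generalized uncertainty principle. It is assumed here purely as an illustration, with $\beta > 0$ a parameter of dimension inverse momentum squared, usually taken to be of order the squared inverse Planck momentum:

```latex
\begin{aligned}
\Delta x\,\Delta p &\ge \frac{\hbar}{2}\Bigl(1 + \beta\,(\Delta p)^2\Bigr)
\;\Longrightarrow\;
\Delta x \ge \frac{\hbar}{2}\Bigl(\frac{1}{\Delta p} + \beta\,\Delta p\Bigr), \\
\frac{d}{d(\Delta p)}\Bigl(\frac{1}{\Delta p} + \beta\,\Delta p\Bigr)
&= -\frac{1}{(\Delta p)^2} + \beta = 0
\;\Longrightarrow\;
\Delta p = \frac{1}{\sqrt{\beta}},
\qquad
\Delta x_{\min} = \hbar\sqrt{\beta}.
\end{aligned}
```

Unlike the ordinary relation, $\Delta x$ no longer goes to zero as $\Delta p$ grows: beyond $\Delta p = 1/\sqrt{\beta}$, higher momenta make the position uncertainty worse rather than better.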

One of the main difficulties with these theories is that a minimal length, if interpreted as the length of a ruler, would not be invariant under Lorentz transformations due to length contraction. In other words, the idea of a “minimum length” would suddenly imply that different observers (i.e., people moving at different velocities) would measure different fundamental minimum lengths from one another! This problem is easy to overcome in momentum space, where it is a maximal energy that has to be made Lorentz-invariant, because momentum space is not translationally invariant. But in position space, one has to either break Lorentz invariance or deform it and give up locality, which has observable consequences, and not always desired ones. Personally, I think it is a mistake to interpret the minimal length as the length of a ruler (a component of a Lorentz vector); it should instead be interpreted as a Lorentz-invariant scalar to begin with, but opinions on that matter differ.
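A short numerical sketch makes the observer dependence concrete. If the minimal length ℓ were the rest length of a ruler, an observer moving along it at speed v would measure ℓ√(1 − v²/c²). Taking ℓ equal to the Planck length is an assumption for illustration, and the function name is hypothetical.

```python
import math

C = 2.99792458e8              # speed of light, m/s
PLANCK_LENGTH = 1.616255e-35  # m, CODATA 2018 (assumed value of the minimal length)


def contracted_length(rest_length: float, v: float) -> float:
    """Length of a ruler as measured by an observer moving at speed v along it."""
    if not 0.0 <= v < C:
        raise ValueError("speed must satisfy 0 <= v < c")
    return rest_length * math.sqrt(1.0 - (v / C) ** 2)


if __name__ == "__main__":
    for beta in (0.0, 0.5, 0.9, 0.99):
        ell = contracted_length(PLANCK_LENGTH, beta * C)
        print(f"v = {beta:.2f} c -> measured length = {ell / PLANCK_LENGTH:.3f} l_P")
```

Every moving observer finds a “minimal” length below the rest-frame value, which is exactly the problem described above. Treating the minimal length as a Lorentz scalar, rather than as a ruler length, avoids this by construction.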

The science and history of the physical idea of a minimal length has now been covered in a recent book by Amit Hagar, *Discrete or Continuous? The Quest for Fundamental Length in Modern Physics*.

Amit is a philosopher but he certainly knows his math and physics. Indeed, I suspect the book would be quite hard to understand for a reader without at least some background knowledge in those two subjects. Amit has made a considerable effort to address the topic of a fundamental length from as many perspectives as possible, and he covers a lot of scientific history and philosophical considerations that I had not previously been aware of. The book is also noteworthy for including a chapter on quantum gravity phenomenology.

My only complaint about the book is its title, because the question of “discrete vs. continuous” is not the same as the question of “finite vs. infinite resolution.” One can have a continuous structure and yet be unable to resolve it beyond some limit, such as would be the case when the limit makes itself noticeable as a blur rather than a discretization. On the other hand, one can have a discrete structure that does not prevent arbitrarily sharp resolution, which can happen when localization on a single base-point of the discrete structure is possible.
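The difference between a blur and a discretization can be illustrated with a toy model. It makes the distinction concrete but does not represent any particular quantum gravity proposal. Two point-like features a distance d apart, each smeared by a Gaussian of width σ (standing in for a hypothetical fundamental blur on a continuous space), appear as two separate peaks only when d > 2σ, a known property of the sum of two equal Gaussians. On a lattice, by contrast, a state sitting on a single site is perfectly sharp. All function names are hypothetical.

```python
import math


def blurred_profile(d: float, sigma: float, n: int = 2001) -> list[float]:
    """Sum of two Gaussians of width sigma centred at -d/2 and +d/2,
    sampled on a symmetric grid over [-(d + 6*sigma), d + 6*sigma]."""
    half_width = d + 6.0 * sigma
    xs = [-half_width + 2.0 * half_width * i / (n - 1) for i in range(n)]
    return [
        math.exp(-((x + d / 2) ** 2) / (2 * sigma**2))
        + math.exp(-((x - d / 2) ** 2) / (2 * sigma**2))
        for x in xs
    ]


def count_peaks(values: list[float]) -> int:
    """Number of strict local maxima in a sampled profile."""
    return sum(
        1
        for i in range(1, len(values) - 1)
        if values[i - 1] < values[i] > values[i + 1]
    )


def lattice_state(num_sites: int, site: int) -> list[float]:
    """A state localised on exactly one site of a discrete lattice."""
    return [1.0 if k == site else 0.0 for k in range(num_sites)]


if __name__ == "__main__":
    sigma = 1.0
    for d in (1.0, 2.5, 4.0):
        print(f"d = {d}: {count_peaks(blurred_profile(d, sigma))} peak(s)")
    print("lattice state:", lattice_state(5, 2))
```

In the continuous but blurred case, features closer than about 2σ merge into one; in the discrete case, nothing prevents a state from being localized on a single point.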

(Amit’s book is admittedly quite pricey, so let me add that he said that should sales reach 500, Cambridge University Press will offer a considerably less expensive paperback version. So tell your library to get a copy, and let’s hope we’ll make it to 500 so it becomes affordable for more interested readers.)

Every once in a while I think that maybe there is no fundamentally smallest unit of length, and that all these arguments for its existence are wrong. I like to think that we can look infinitely closely into structures and will never find a final theory, turtles upon turtles, or that structures are ultimately self-similar and repeat. Alas, it is hard to make mathematical sense of the romantic idea of universes in universes in universes, not that I didn’t try, and so the minimal length keeps coming back to me.

Many (if not most) endeavors to find observational evidence for quantum gravity today look for manifestations of a minimal length in one way or another, such as modifications of the dispersion relation, modifications of the commutation relations, or Bekenstein’s tabletop search for quantum gravity.
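As a rough sketch of how a modified dispersion relation could become observable, assume the often-studied linear form in which the photon speed depends on energy as v(E) ≈ c(1 − ξE/E_Pl), with ξ a dimensionless parameter of order one. A photon of energy E then arrives later than a very low-energy photon emitted at the same moment from a source at distance D, by Δt ≈ ξ(E/E_Pl)(D/c). The parameter values (ξ = 1, a source at 1 Gpc) are illustrative assumptions, cosmological expansion is ignored, and the function name is hypothetical.

```python
PLANCK_ENERGY_GEV = 1.22e19    # approximate Planck energy, GeV
C = 2.99792458e8               # speed of light, m/s
METRES_PER_PARSEC = 3.0857e16  # 1 pc in metres


def arrival_delay_s(delta_e_gev: float, distance_m: float, xi: float = 1.0) -> float:
    """First-order delay for v(E) = c * (1 - xi * E / E_Pl), assuming a
    static, non-expanding space between source and observer."""
    return xi * (delta_e_gev / PLANCK_ENERGY_GEV) * distance_m / C


if __name__ == "__main__":
    distance = 1e9 * METRES_PER_PARSEC  # 1 Gpc, illustrative
    for e_gev in (0.1, 1.0, 10.0):
        print(f"{e_gev:5.1f} GeV photon: delay ~ {arrival_delay_s(e_gev, distance):.2e} s")
```

For a 10 GeV photon this gives a delay of order a tenth of a second. The tiny ratio E/E_Pl is compensated by the enormous travel distance, which is why distant gamma-ray bursts are attractive probes.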

The question of whether there is a smallest possible scale in the Universe is today a very active area of research. We’ve come a long way, but we’re still out to answer the same questions that people asked themselves thousands of years ago. Although we’ve certainly made a lot of progress, the ultimate answer is still beyond our abilities to resolve.

This post was written by Sabine Hossenfelder, assistant professor of physics at Nordita. You can read her (more technical) paper on a fundamental minimum length here, and follow her tweets at @skdh.