A Markov decision process (MDP) is a discrete-time stochastic control process. It provides a mathematical framework for modeling decision making in situations where outcomes are partly random and partly under the control of a decision maker. MDPs are useful for studying optimization problems solved via dynamic programming and reinforcement learning. MDPs were known at least as early as the 1950s; a core body of research on Markov decision processes resulted from Howard's 1960 book, Dynamic Programming and Markov Processes. They are used in many disciplines, including robotics, automatic control, economics and manufacturing. MDPs are named after the Russian mathematician Andrey Markov.

At each time step, the process is in some state $s$, and the decision maker may choose any action $a$ that is available in state $s$. The process responds at the next time step by randomly moving into a new state $s'$ and giving the decision maker a corresponding reward $R_a(s, s')$.

The probability that the process moves into its new state $s'$ is influenced by the chosen action. Specifically, it is given by the state transition function $P_a(s, s')$. Thus, the next state $s'$ depends on the current state $s$ and the decision maker's action $a$. But given $s$ and $a$, it is conditionally independent of all previous states and actions; in other words, the state transitions of an MDP satisfy the Markov property.

Markov decision processes are an extension of Markov chains; the difference is the addition of actions (allowing choice) and rewards (giving motivation). Conversely, if only one action exists for each state (e.g. "wait") and all rewards are the same (e.g. "zero"), a Markov decision process reduces to a Markov chain.

Definition

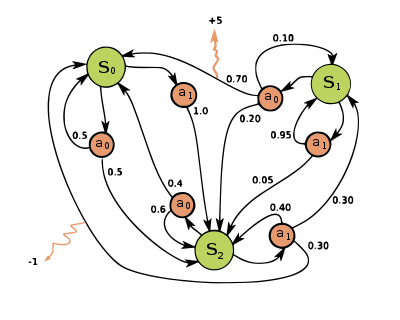

Example of a simple MDP with three states (green circles) and two actions (orange circles), with two rewards (orange arrows).

A Markov decision process is a 4-tuple $(S, A, P_a, R_a)$, where:

- $S$ is a finite set of states;

- $A$ is a finite set of actions (alternatively, $A_s$ is the finite set of actions available from state $s$);

- $P_a(s, s') = \Pr(s_{t+1} = s' \mid s_t = s, a_t = a)$ is the probability that action $a$ in state $s$ at time $t$ will lead to state $s'$ at time $t+1$;

- $R_a(s, s')$ is the immediate reward (or expected immediate reward) received after transitioning from state $s$ to state $s'$, due to action $a$.
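As a rough illustration of the definition, the tuple can be stored as plain Python data. This is only a sketch: the class name `MDP`, its field names, the zero default for unlisted rewards, and the two-state `toy` problem are all assumptions made for the example, not part of the definition.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MDP:
    """Finite MDP (S, A, P_a, R_a); field names are illustrative assumptions."""
    states: tuple   # S
    actions: tuple  # A
    P: dict         # P[(s, a)] -> {s_next: probability}, i.e. P_a(s, s')
    R: dict         # R[(s, a, s_next)] -> immediate reward R_a(s, s')

    def reward(self, s, a, s_next):
        # Assumption: transitions without an explicit entry pay zero reward.
        return self.R.get((s, a, s_next), 0.0)

    def is_valid(self, tol=1e-9):
        """Check that every P_a(s, .) is a probability distribution over S."""
        for (s, a), dist in self.P.items():
            if s not in self.states or a not in self.actions:
                return False
            if any(s2 not in self.states or p < 0 for s2, p in dist.items()):
                return False
            if abs(sum(dist.values()) - 1.0) > tol:
                return False
        return True


# Hypothetical toy problem: two states, two actions.
toy = MDP(
    states=("low", "high"),
    actions=("wait", "work"),
    P={
        ("low", "wait"): {"low": 1.0},
        ("low", "work"): {"low": 0.4, "high": 0.6},
        ("high", "wait"): {"low": 0.3, "high": 0.7},
        ("high", "work"): {"high": 1.0},
    },
    R={
        ("low", "work", "high"): 5.0,
        ("high", "wait", "high"): 1.0,
        ("high", "work", "high"): 2.0,
    },
)
```

Keying `P` by `(s, a)` also covers the variant in which only some actions are available in a state: a missing key simply means the action cannot be taken there.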

Problem

The core problem of MDPs is to find a "policy" for the decision maker: a function $\pi$ that specifies the action $\pi(s)$ that the decision maker will choose when in state $s$. Once a Markov decision process is combined with a policy in this way, this fixes the action for each state and the resulting combination behaves like a Markov chain (since the action chosen in state $s$ is completely determined by $\pi(s)$ and $\Pr(s_{t+1} = s' \mid s_t = s, a_t = a)$ reduces to $\Pr(s_{t+1} = s' \mid s_t = s)$, a Markov transition matrix).

The goal is to choose a policy $\pi$ that will maximize some cumulative function of the random rewards, typically the expected discounted sum over a potentially infinite horizon:

$$E\left[\sum_{t=0}^{\infty} \gamma^t R_{a_t}(s_t, s_{t+1})\right]$$

where we choose $a_t = \pi(s_t)$ (i.e. actions given by the policy) and $\gamma$ is the discount factor, with $0 \le \gamma \le 1$.

Because of the Markov property, the optimal policy for this particular problem can indeed be written as a function of $s$ only, as assumed above.
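The two ideas in this section, that a fixed policy turns the MDP into a Markov chain and that a run is scored by its discounted reward sum, can be sketched as follows. The toy transition table `P`, reward table `R`, and the helper names `induced_chain`, `discounted_return` and `rollout` are hypothetical and chosen only for illustration.

```python
import random

# Hypothetical toy MDP: P[(s, a)] -> {s_next: probability}, R[(s, a, s_next)] -> reward.
P = {
    ("low", "wait"): {"low": 1.0},
    ("low", "work"): {"low": 0.4, "high": 0.6},
    ("high", "wait"): {"low": 0.3, "high": 0.7},
    ("high", "work"): {"high": 1.0},
}
R = {("low", "work", "high"): 5.0, ("high", "wait", "high"): 1.0,
     ("high", "work", "high"): 2.0}


def induced_chain(P, policy):
    """Markov chain obtained by fixing a_t = policy[s_t]: row s is P_{pi(s)}(s, .)."""
    return {s: dict(P[(s, a)]) for s, a in policy.items()}


def discounted_return(rewards, gamma):
    """Compute sum_t gamma^t * rewards[t] by backward accumulation."""
    total = 0.0
    for r in reversed(rewards):
        total = r + gamma * total
    return total


def rollout(P, R, policy, start, horizon, rng):
    """Sample one finite trajectory under the policy and return its rewards."""
    s, rewards = start, []
    for _ in range(horizon):
        a = policy[s]
        successors = list(P[(s, a)].items())
        s_next = rng.choices([x for x, _ in successors],
                             weights=[p for _, p in successors])[0]
        rewards.append(R.get((s, a, s_next), 0.0))  # unlisted rewards assumed 0
        s = s_next
    return rewards
```

Averaging `discounted_return` over many rollouts gives a Monte Carlo estimate of the objective for a given policy; the finite `horizon` truncates the infinite sum, which is a simplification of this sketch.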

Algorithms

The solution for an MDP is a policy which describes the best action for each state in the MDP, known as the optimal policy. This optimal policy can be found through a variety of methods, like dynamic programming. Some dynamic programming solutions require knowledge of the state transition function $P$ and the reward function $R$. Others can solve for the optimal policy of an MDP using experimentation alone.

Consider the case in which the state transition function $P$ and reward function $R$ of an MDP are given, and we seek the optimal policy $\pi^*$ that maximizes the expected discounted reward.

The standard family of algorithms to calculate this optimal policy requires storage for two arrays indexed by state: value $V$, which contains real values, and policy $\pi$, which contains actions. At the end of the algorithm, $\pi$ will contain the solution and $V(s)$ will contain the discounted sum of the rewards to be earned (on average) by following that solution from state $s$.

The algorithm has two kinds of steps, a value update and a policy update, which are repeated in some order for all the states until no further changes take place. Both recursively update a new estimation of the optimal policy and state value using an older estimation of those values.
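In the notation of the definition ($P_a(s, s')$, $R_a(s, s')$, discount factor $\gamma$), the two kinds of steps are commonly written as below. This is a reconstruction in standard form rather than a quotation, and the variants differ only in the order in which the two updates are applied. The policy update is

$$\pi(s) := \operatorname{argmax}_a \left\{ \sum_{s'} P_a(s, s') \left( R_a(s, s') + \gamma V(s') \right) \right\}$$

and the value update is

$$V(s) := \sum_{s'} P_{\pi(s)}(s, s') \left( R_{\pi(s)}(s, s') + \gamma V(s') \right)$$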

Notable variants

Value iteration

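In value iteration (Bellman 1957), which is also called backward induction, the $\pi$ function is not used; instead, the value of $\pi(s)$ is calculated within $V(s)$ whenever it is needed. Substituting the calculation of $\pi(s)$ into the calculation of $V(s)$ gives the combined step:

$$V_{i+1}(s) := \max_a \left\{ \sum_{s'} P_a(s, s') \left( R_a(s, s') + \gamma V_i(s') \right) \right\}$$

where $i$ is the iteration number.

Policy iteration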

In policy iteration (Howard 1960), step one (the policy update) is performed once, and then step two (the value update) is repeated until it converges. Then step one is again performed once, and so on.

Instead of repeating step two to convergence, it may be formulated and solved as a set of linear equations. These equations are obtained by requiring the step two equation to hold with equality for every state simultaneously. Thus, repeating step two to convergence can be interpreted as solving the linear equations by relaxation (an iterative method).

This variant has the advantage that there is a definite stopping condition: when the array $\pi$ does not change in the course of applying step one to all states, the algorithm is completed.

Policy iteration is usually slower than value iteration for a large number of possible states.
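A minimal sketch of value iteration and policy iteration on a small tabular problem follows. The toy MDP, the discount `GAMMA = 0.9`, the tolerance, and all function names are assumptions for illustration; policy evaluation here repeats the value update until it stops changing rather than solving the linear equations directly.

```python
GAMMA = 0.9  # assumed discount factor
STATES = ("low", "high")
ACTIONS = ("wait", "work")
# Hypothetical toy MDP: P[(s, a)] -> {s_next: prob}; unlisted rewards are 0.
P = {
    ("low", "wait"): {"low": 1.0},
    ("low", "work"): {"low": 0.4, "high": 0.6},
    ("high", "wait"): {"low": 0.3, "high": 0.7},
    ("high", "work"): {"high": 1.0},
}
R = {("low", "work", "high"): 5.0, ("high", "wait", "high"): 1.0,
     ("high", "work", "high"): 2.0}


def backup(s, a, V):
    """sum_{s'} P_a(s, s') * (R_a(s, s') + gamma * V(s'))."""
    return sum(p * (R.get((s, a, s2), 0.0) + GAMMA * V[s2])
               for s2, p in P[(s, a)].items())


def greedy_policy(V):
    """Policy update: pick the action with the largest backup in each state."""
    return {s: max(ACTIONS, key=lambda a: backup(s, a, V)) for s in STATES}


def value_iteration(tol=1e-10):
    """Repeat the combined max-backup step until V stops changing."""
    V = {s: 0.0 for s in STATES}
    while True:
        V_new = {s: max(backup(s, a, V) for a in ACTIONS) for s in STATES}
        if max(abs(V_new[s] - V[s]) for s in STATES) < tol:
            return V_new, greedy_policy(V_new)
        V = V_new


def evaluate_policy(policy, tol=1e-10):
    """Value update repeated to convergence for a fixed policy."""
    V = {s: 0.0 for s in STATES}
    while True:
        V_new = {s: backup(s, policy[s], V) for s in STATES}
        if max(abs(V_new[s] - V[s]) for s in STATES) < tol:
            return V_new
        V = V_new


def policy_iteration():
    """Alternate evaluation and greedy improvement until the policy is stable."""
    policy = {s: ACTIONS[0] for s in STATES}
    while True:
        V = evaluate_policy(policy)
        improved = greedy_policy(V)
        if improved == policy:  # definite stopping condition
            return V, policy
        policy = improved
```

Both routines should agree on this toy problem; on problems with exact ties between actions, a stable tie-breaking rule is needed for the policy comparison to terminate reliably.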

Modified policy iteration

In modified policy iteration (van Nunen 1976; Puterman & Shin 1978), step one is performed once, and then step two is repeated several times. Then step one is again performed once, and so on.

Prioritized sweeping

In this variant, the steps are preferentially applied to states which are in some way important, whether based on the algorithm (there were large changes in $V$ or $\pi$ around those states recently) or based on use (those states are near the starting state, or otherwise of interest to the person or program using the algorithm).

Extensions and generalizations

A Markov decision process is a stochastic game with only one player.

Partial observability

The solution above assumes that the state $s$ is known when the action is to be taken; otherwise $\pi(s)$ cannot be calculated. When this assumption is not true, the problem is called a partially observable Markov decision process or POMDP.

A major advance in this area was provided by Burnetas and Katehakis in "Optimal adaptive policies for Markov decision processes". In this work, a class of adaptive policies that possess uniformly maximum convergence rate properties for the total expected finite horizon reward was constructed under the assumptions of finite state-action spaces and irreducibility of the transition law. These policies prescribe that the choice of actions, at each state and time period, should be based on indices that are inflations of the right-hand side of the estimated average reward optimality equations.

Reinforcement learning

If the probabilities or rewards are unknown, the problem is one of reinforcement learning.

For this purpose it is useful to define a further function, which corresponds to taking the action $a$ and then continuing optimally (or according to whatever policy one currently has):

$$Q(s, a) = \sum_{s'} P_a(s, s') \left( R_a(s, s') + \gamma V(s') \right)$$

Reinforcement learning can solve Markov decision processes without explicit specification of the transition probabilities, which value iteration and policy iteration require. Instead, the transition probabilities are accessed through a simulator that is typically restarted many times from a uniformly random initial state. Reinforcement learning can also be combined with function approximation to address problems with a very large number of states.
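One widely used algorithm of this kind is tabular Q-learning, which the text above does not spell out; the sketch below is therefore an illustration under stated assumptions rather than the method described here. The toy MDP hidden inside `step`, the learning rate `alpha`, the exploration rate `epsilon`, the episode counts and all names are hypothetical. The learner only calls the simulator and never reads `P` or `R` directly.

```python
import random

GAMMA = 0.9  # assumed discount factor
STATES = ("low", "high")
ACTIONS = ("wait", "work")
# Hypothetical environment, used only inside the simulator below.
P = {
    ("low", "wait"): {"low": 1.0},
    ("low", "work"): {"low": 0.4, "high": 0.6},
    ("high", "wait"): {"low": 0.3, "high": 0.7},
    ("high", "work"): {"high": 1.0},
}
R = {("low", "work", "high"): 5.0, ("high", "wait", "high"): 1.0,
     ("high", "work", "high"): 2.0}


def step(s, a, rng):
    """Simulator: sample s' from P_a(s, .) and return (s', reward)."""
    successors = list(P[(s, a)].items())
    s2 = rng.choices([x for x, _ in successors],
                     weights=[p for _, p in successors])[0]
    return s2, R.get((s, a, s2), 0.0)


def q_learning(episodes=2000, horizon=50, alpha=0.05, epsilon=0.2, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    Q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    for _ in range(episodes):
        s = rng.choice(STATES)  # restart from a uniformly random state
        for _ in range(horizon):
            if rng.random() < epsilon:
                a = rng.choice(ACTIONS)                    # explore
            else:
                a = max(ACTIONS, key=lambda b: Q[(s, b)])  # exploit
            s2, r = step(s, a, rng)
            # Move Q(s, a) toward the sampled one-step target.
            target = r + GAMMA * max(Q[(s2, b)] for b in ACTIONS)
            Q[(s, a)] += alpha * (target - Q[(s, a)])
            s = s2
    return Q


def greedy(Q):
    """Read a policy off the learned table."""
    return {s: max(ACTIONS, key=lambda a: Q[(s, a)]) for s in STATES}
```

With a constant learning rate the table keeps fluctuating around its limit in the stochastic states, so only the greedy policy and the rough magnitude of the values should be read from it.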

Learning automata

Another application of MDPs in machine learning theory is called learning automata. This is also one type of reinforcement learning if the environment is stochastic. Learning automata were first surveyed in detail by Narendra and Thathachar (1974), who originally described them explicitly as finite state automata. Like reinforcement learning, learning automata algorithms have the advantage of solving the problem when probabilities or rewards are unknown. The difference between learning automata and Q-learning is that learning automata omit the memory of Q-values and instead update the action probabilities directly to find the learning result. Learning automata are a learning scheme with a rigorous proof of convergence.

In learning automata theory, a stochastic automaton consists of:

- a set x of possible inputs,

- a set $\Phi = \{\Phi_1, \ldots, \Phi_s\}$ of possible internal states,

- a set $\alpha = \{\alpha_1, \ldots, \alpha_r\}$ of possible outputs, or actions, with $r \le s$,

- an initial state probability vector $p(0) = \langle p_1(0), \ldots, p_s(0) \rangle$,

- a computable function A which after each time step t generates p(t+1) from p(t), the current input, and the current state, and

- a function G: Φ → α which generates the output at each time step.

Category theoretic interpretation

Other than the rewards, a Markov decision process can be understood in terms of category theory. Namely, let $\mathcal{A}$ denote the free monoid with generating set $A$. Let $\mathbf{Dist}$ denote the Kleisli category of the Giry monad. Then a functor $\mathcal{A} \to \mathbf{Dist}$ encodes both the set $S$ of states and the probability function $P$.

In this way, Markov decision processes could be generalized from monoids (categories with one object) to arbitrary categories. One can call the result a context-dependent Markov decision process, because moving from one object to another in $\mathcal{A}$ changes the set of available actions and the set of possible states.

Fuzzy Markov decision processes (FMDPs)

In MDPs, an optimal policy is a policy which maximizes the probability-weighted summation of future rewards. Therefore, an optimal policy consists of several actions which belong to a finite set of actions. In fuzzy Markov decision processes (FMDPs), first, the value function is computed as in regular MDPs (i.e., with a finite set of actions); then, the policy is extracted by a fuzzy inference system. In other words, the value function is utilized as an input for the fuzzy inference system, and the policy is the output of the fuzzy inference system.

Continuous-time Markov decision process

In discrete-time Markov decision processes, decisions are made at discrete time intervals. However, for continuous-time Markov decision processes, decisions can be made at any time the decision maker chooses. In comparison to discrete-time Markov decision processes, continuous-time Markov decision processes can better model the decision making process for a system that has continuous dynamics, i.e., a system whose dynamics are defined by ordinary differential equations (ODEs).

Definition

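In order to discuss the continuous-time Markov decision process, we introduce two sets of notations:

If the state space and action space are finite,

- $S$: state space;

- $A$: action space;

- $q(j \mid i, a)$: $S \times A \to \triangle S$, transition rate function;

- $R(i, a)$: $S \times A \to \mathbb{R}$, a reward function.

If the state space and action space are continuous,

- $\mathcal{X}$: state space;

- $\mathcal{U}$: space of possible control;

- $f(x, u)$: $\mathcal{X} \times \mathcal{U} \to \triangle \mathcal{X}$, a transition rate function;

- $r(x, u)$: $\mathcal{X} \times \mathcal{U} \to \mathbb{R}$, a reward rate function such that $r(x(t), u(t))\,dt = dR(x(t), u(t))$, where $R(x, u)$ is the reward function we discussed in the previous case.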

Problem

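Like the discrete-time Markov decision processes, in continuous-time Markov decision processes we want to find the optimal policy or control which could give us the optimal expected integrated reward:

$$\max \quad \mathbb{E}_u\left[\left.\int_0^\infty \gamma^t r(x(t), u(t))\,dt \;\right| x_0\right]$$

where $0 \le \gamma < 1$.

Linear programming formulation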

If the state space and action space are finite, we could use linear programming to find the optimal policy, which was one of the earliest approaches applied. Here we only consider the ergodic model, which means our continuous-time MDP becomes an ergodic continuous-time Markov chain under a stationary policy. Under this assumption, although the decision maker can make a decision at any time in the current state, there is no benefit in taking more than one action. It is better to take an action only at the time when the system is transitioning from the current state to another state. Under some conditions, if our optimal value function $V^*$ is independent of state $i$, we will have the following inequality:

$$g \ge R(i, a) + \sum_{j \in S} q(j \mid i, a)\, h(j) \quad \forall i \in S \text{ and } a \in A(i)$$

- Primal linear program (P-LP);

- Dual linear program (D-LP);

Hamilton–Jacobi–Bellman equation

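In continuous-time MDP, if the state space and action space are continuous, the optimal criterion could be found by solving the Hamilton–Jacobi–Bellman (HJB) partial differential equation. In order to discuss the HJB equation, we need to reformulate our problem:

$$\begin{aligned} V(x(0), 0) = {} & \max_u \int_0^T r(x(t), u(t))\,dt + D[x(T)] \\ \text{s.t.} \quad & \frac{dx(t)}{dt} = f[t, x(t), u(t)] \end{aligned}$$

Application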

Continuous-time Markov decision processes have applications in queueing systems, epidemic processes, and population processes.

Alternative notations

The terminology and notation for MDPs are not entirely settled. There are two main streams: one focuses on maximization problems from contexts like economics, using the terms action, reward, value, and calling the discount factor $\beta$ or $\gamma$, while the other focuses on minimization problems from engineering and navigation, using the terms control, cost, cost-to-go, and calling the discount factor $\alpha$. In addition, the notation for the transition probability varies.

| in this article | alternative | comment |
|---|---|---|
| action $a$ | control $u$ | |
| reward $R$ | cost $g$ | $g$ is the negative of $R$ |
| value $V$ | cost-to-go $J$ | $J$ is the negative of $V$ |
| policy $\pi$ | policy $\mu$ | |
| discounting factor $\gamma$ | discounting factor $\alpha$ | |
| transition probability $P_a(s, s')$ | transition probability $p_{ss'}(a)$ | |

Constrained Markov decision processes

Constrained Markov decision processes (CMDPs) are extensions to Markov decision processes (MDPs). There are three fundamental differences between MDPs and CMDPs:

- There are multiple costs incurred after applying an action instead of one.

- CMDPs are solved with linear programs only, and dynamic programming does not work.

- The final policy depends on the starting state.

For the continuous-time Markov decision process, the expected integrated reward to be maximized is

$$\max \quad \mathbb{E}_u\left[\left.\int_0^\infty \gamma^t r(x(t), u(t))\,dt \;\right| x_0\right]$$

and the reformulated problem used with the Hamilton–Jacobi–Bellman equation is

$$\begin{aligned} V(x(0), 0) = {} & \max_u \int_0^T r(x(t), u(t))\,dt + D[x(T)] \\ \text{s.t.} \quad & \frac{dx(t)}{dt} = f[t, x(t), u(t)] \end{aligned}$$