From Wikipedia, the free encyclopedia

Even very young children perform rudimentary experiments to learn about the world and how things work.

An experiment is a procedure carried out to support, refute, or validate a hypothesis. Experiments vary greatly in goal and scale, but always rely on repeatable procedure and logical analysis of the results. There also exist natural experimental studies.

A child may carry out basic experiments to understand gravity,

while teams of scientists may take years of systematic investigation to

advance their understanding of a phenomenon. Experiments and other types

of hands-on activities are very important to student learning in the

science classroom. Experiments can raise test scores and help a student

become more engaged and interested in the material they are learning,

especially when used over time.

Experiments can vary from personal and informal natural comparisons (e.g. tasting a range of chocolates to find a favorite) to highly controlled ones (e.g. tests requiring complex apparatus overseen by many scientists who hope to discover information about subatomic particles). Uses of experiments vary considerably between the natural and human sciences.

Experiments typically include

controls, which are designed to minimize the effects of variables other than the single

independent variable. This increases the reliability of the results, often through a comparison between control

measurements and the other measurements. Scientific controls are a part of the

scientific method.

Ideally, all variables in an experiment are controlled (accounted for

by the control measurements) and none are uncontrolled. In such an

experiment, if all controls work as expected, it is possible to conclude

that the experiment works as intended, and that results are due to the

effect of the tested variable.

Overview

An experiment usually tests a

hypothesis,

which is an expectation about how a particular process or phenomenon

works. However, an experiment may also aim to answer a "what-if"

question, without a specific expectation about what the experiment

reveals, or to confirm prior results. If an experiment is carefully

conducted, the results usually either support or disprove the

hypothesis. According to some philosophies of science, an experiment can never "prove" a hypothesis; it can only add support. On the other hand, an experiment that provides a counterexample can disprove a theory or hypothesis, but a theory can always be salvaged by appropriate ad hoc modifications at the expense of simplicity. An experiment must also control the possible confounding factors: any factors that would mar the accuracy or repeatability of the experiment or the ability to interpret the results. Confounding is commonly eliminated through scientific controls and/or, in randomized experiments, through random assignment.

In

engineering

and the physical sciences, experiments are a primary component of the

scientific method. They are used to test theories and hypotheses about

how physical processes work under particular conditions (e.g., whether a

particular engineering process can produce a desired chemical

compound). Typically, experiments in these fields focus on

replication of identical procedures in hopes of producing identical results in each replication. Random assignment is uncommon.

In medicine and the social sciences, the prevalence of experimental research varies widely across disciplines. When used, however, experiments typically follow the form of the clinical trial, where experimental units (usually individual human beings) are randomly assigned to a treatment or control condition and one or more outcomes are assessed. In contrast to norms in the physical sciences, the focus is typically on the average treatment effect (the difference in outcomes between the treatment and control groups) or another test statistic produced by the experiment. A single study typically does not involve replications of the experiment, but separate studies may be aggregated through systematic review and meta-analysis.
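As a rough illustration of random assignment and the average treatment effect described above, the following Python sketch splits hypothetical units into two groups at random and compares their mean outcomes. The data, the assumed true effect of 2.0, and the function names are invented for illustration and are not taken from any real trial.

```python
import random
import statistics


def randomly_assign(units, rng):
    """Shuffle the units and split them into two equal-sized groups."""
    shuffled = list(units)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def average_treatment_effect(treated_outcomes, control_outcomes):
    """Difference between the mean outcomes of the treatment and control groups."""
    return statistics.mean(treated_outcomes) - statistics.mean(control_outcomes)


# Hypothetical data: 200 units with a simulated baseline outcome each,
# and an assumed (made-up) true treatment effect of +2.0.
rng = random.Random(0)
TRUE_EFFECT = 2.0
baseline = {unit: rng.gauss(50.0, 5.0) for unit in range(200)}

treatment_group, control_group = randomly_assign(baseline, rng)
treated_outcomes = [baseline[u] + TRUE_EFFECT for u in treatment_group]
control_outcomes = [baseline[u] for u in control_group]

estimate = average_treatment_effect(treated_outcomes, control_outcomes)
print(f"estimated average treatment effect: {estimate:.2f}")
```

Because group membership is decided by the shuffle alone, the estimate differs from the assumed effect only by chance variation between the two groups; it will generally not equal 2.0 exactly in any single run.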

There are various differences in experimental practice in each of the

branches of science. For example,

agricultural research frequently uses randomized experiments (e.g., to test the comparative effectiveness of different fertilizers), while

experimental economics

often involves experimental tests of theorized human behaviors without

relying on random assignment of individuals to treatment and control

conditions.

History

One of the first methodical approaches to experiments in the modern sense is visible in the works of the Arab mathematician and scholar Ibn al-Haytham. He conducted his experiments in the field of optics, going back to optical and mathematical problems in the works of Ptolemy, and controlled them through self-criticism, reliance on the visible results of the experiments, and a critical attitude toward earlier results. He counts as one of the first scholars to use an inductive-experimental method for achieving results. In his book "Optics" he describes the fundamentally new approach to knowledge and research in an experimental sense:

"We should, that is, recommence the inquiry into its

principles and premisses, beginning our investigation with an inspection

of the things that exist and a survey of the conditions of visible

objects. We should distinguish the properties of particulars, and gather

by induction what pertains to the eye when vision takes place and what

is found in the manner of sensation to be uniform, unchanging, manifest

and not subject to doubt. After which we should ascend in our inquiry

and reasonings, gradually and orderly, criticizing premisses and

exercising caution in regard to conclusions – our aim in all that we

make subject to inspection and review being to employ justice, not to

follow prejudice, and to take care in all that we judge and criticize

that we seek the truth and not to be swayed by opinion. We may in this

way eventually come to the truth that gratifies the heart and gradually

and carefully reach the end at which certainty appears; while through

criticism and caution we may seize the truth that dispels disagreement

and resolves doubtful matters. For all that, we are not free from that

human turbidity which is in the nature of man; but we must do our best

with what we possess of human power. From God we derive support in all

things."

According to his explanation, a test must be strictly controlled and carried out with an awareness that human nature makes outcomes subjective and susceptible to error. Furthermore, a critical view of the results and outcomes of earlier scholars is necessary:

"It is thus the duty of the man

who studies the writings of scientists, if learning the truth is his

goal, to make himself an enemy of all that he reads, and, applying his

mind to the core and margins of its content, attack it from every side.

He should also suspect himself as he performs his critical examination

of it, so that he may avoid falling into either prejudice or leniency."

Thus, a comparison of earlier results with the experimental results is necessary for an objective experiment, with the visible results being more important. In the end, this may mean that an experimental researcher must find enough courage to discard traditional opinions or results, especially if these results are not experimental but derived by logical or mental reasoning. In this process of critical consideration, researchers should not forget that they tend toward subjective opinions, through "prejudices" and "leniency", and thus have to be critical about their own way of building hypotheses.

Francis Bacon (1561–1626), an English philosopher and scientist active in the 17th century, became an influential supporter of experimental science in the English Renaissance. Like Ibn al-Haytham, he disagreed with the method of answering scientific questions by deduction, and described it as follows: "Having first determined the question according to his will, man then resorts to experience, and bending her to conformity with his placets, leads her about like a captive in a procession."

Bacon wanted a method that relied on repeatable observations, or experiments. Notably, he was the first to order the scientific method as we understand it today:

"There remains simple experience; which, if taken as it comes, is called accident, if sought for, experiment. The true method of experience first lights the candle [hypothesis], and then by means of the candle shows the way [arranges and delimits the experiment]; commencing as it does with experience duly ordered and digested, not bungling or erratic, and from it deducing axioms [theories], and from established axioms again new experiments."

In the centuries that followed, people who applied the scientific method in different areas made important advances and discoveries. For example, Galileo Galilei (1564–1642) accurately measured time and experimented to make accurate measurements and conclusions about the speed of a falling body. Antoine Lavoisier (1743–1794), a French chemist, used experiment to describe new areas, such as combustion and biochemistry, and to develop the theory of conservation of mass (matter). Louis Pasteur (1822–1895) used the scientific method to disprove the prevailing theory of spontaneous generation and to develop the germ theory of disease. Because of the importance of controlling potentially confounding variables, the use of well-designed laboratory experiments is preferred when possible.

Types of experiment

Experiments might be categorized according to a number of dimensions, depending upon professional norms and standards in different fields of study. In some disciplines (e.g., psychology or political science), a 'true experiment' is a method of social research in which there are two kinds of variables. The independent variable is manipulated by the experimenter, and the dependent variable is measured. The signifying characteristic of a true experiment is that it randomly allocates the subjects to neutralize experimenter bias, and ensures, over a large number of iterations of the experiment, that it controls for all confounding factors.

Controlled experiments

A controlled experiment often compares the results obtained from experimental samples against control samples, which are practically identical to the experimental sample except for the one aspect whose effect is being tested (the independent variable). A good example would be a drug trial. The sample or group receiving the drug would be the experimental group (treatment group), and the one receiving the placebo or regular treatment would be the control group. In many laboratory experiments it is good practice to have several replicate samples for the test being performed and to have both a positive control and a negative control.

The results from replicate samples can often be averaged, or if one of

the replicates is obviously inconsistent with the results from the other

samples, it can be discarded as being the result of an experimental

error (some step of the test procedure may have been mistakenly omitted

for that sample). Most often, tests are done in duplicate or triplicate.

A positive control is a procedure similar to the actual experimental

test but is known from previous experience to give a positive result. A

negative control is known to give a negative result. The positive

control confirms that the basic conditions of the experiment were able

to produce a positive result, even if none of the actual experimental

samples produce a positive result. The negative control demonstrates the

base-line result obtained when a test does not produce a measurable

positive result. Most often the value of the negative control is treated

as a "background" value to subtract from the test sample results.

Sometimes the positive control takes the form of a standard curve.

An example that is often used in teaching laboratories is a controlled

protein assay.

Students might be given a fluid sample containing an unknown (to the

student) amount of protein. It is their job to correctly perform a

controlled experiment in which they determine the concentration of

protein in the fluid sample (usually called the "unknown sample"). The

teaching lab would be equipped with a protein standard solution with a

known protein concentration. Students could make several positive

control samples containing various dilutions of the protein standard.

Negative control samples would contain all of the reagents for the

protein assay but no protein. In this example, all samples are run in duplicate. The assay is a colorimetric assay in which a spectrophotometer

can measure the amount of protein in samples by detecting a colored

complex formed by the interaction of protein molecules and molecules of

an added dye. In the illustration, the results for the diluted test

samples can be compared to the results of the standard curve (the blue

line in the illustration) to estimate the amount of protein in the

unknown sample.
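The calculation behind such an assay can be sketched in Python as follows. The absorbance readings are made-up numbers, the straight-line fit is an assumption (real assays are roughly linear only over a limited range), and the helper name fit_line is hypothetical. The sketch averages duplicates, subtracts the negative control as background, fits a standard curve to the positive controls, and reads the unknown off that curve.

```python
import statistics


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    mean_x = statistics.mean(xs)
    mean_y = statistics.mean(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical duplicate absorbance readings (invented, not real assay data).
negative_control = [0.050, 0.052]   # all reagents, no protein
standards = {                       # known concentration (mg/mL) -> duplicate readings
    0.25: [0.176, 0.178],
    0.50: [0.300, 0.304],
    1.00: [0.549, 0.553],
    2.00: [1.050, 1.052],
}
unknown = [0.425, 0.429]            # duplicate readings of the unknown sample

# The negative control gives the background value to subtract.
background = statistics.mean(negative_control)

# Average each pair of duplicates, subtract background, and fit the standard curve.
concentrations = list(standards)
net_absorbance = [statistics.mean(r) - background for r in standards.values()]
slope, intercept = fit_line(concentrations, net_absorbance)

# Invert the fitted line to estimate the unknown concentration.
unknown_net = statistics.mean(unknown) - background
unknown_concentration = (unknown_net - intercept) / slope
print(f"estimated concentration: {unknown_concentration:.2f} mg/mL")
```

The estimate is only meaningful if the unknown's reading falls within the range covered by the standards, which is why several dilutions of the protein standard are prepared.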

Controlled experiments can be performed when it is difficult to

exactly control all the conditions in an experiment. In this case, the

experiment begins by creating two or more sample groups that are

probabilistically equivalent,

which means that measurements of traits should be similar among the

groups and that the groups should respond in the same manner if given

the same treatment. This equivalency is determined by

statistical methods that take into account the amount of variation between individuals and the

number of individuals in each group. In fields such as

microbiology and

chemistry,

where there is very little variation between individuals and the group

size is easily in the millions, these statistical methods are often

bypassed and simply splitting a

solution into equal parts is assumed to produce identical sample groups.

Once equivalent groups have been formed, the experimenter tries to treat them identically except for the one variable that they wish to isolate.

Human experimentation requires special safeguards against outside variables such as the placebo effect. Such experiments are generally double blind, meaning that neither the volunteer nor the researcher knows which individuals are in the control group or the experimental group until after all of the data have been collected. This helps ensure that any effects on the volunteer are due to the treatment itself and are not a response to the knowledge of being treated.

In human experiments, researchers may give a

subject (person) a

stimulus that the subject responds to. The goal of the experiment is to

measure the response to the stimulus by a

test method.

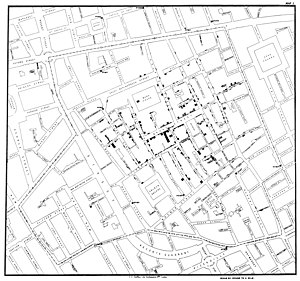

Original map by John Snow showing the clusters of cholera cases in the London epidemic of 1854

In the design of experiments, two or more "treatments" are applied to estimate the difference between the mean responses for the treatments. For example, an experiment on baking bread could

estimate the difference in the responses associated with quantitative

variables, such as the ratio of water to flour, and with qualitative

variables, such as strains of yeast. Experimentation is the step in the

scientific method that helps people decide between two or more competing explanations – or

hypotheses.

These hypotheses suggest reasons to explain a phenomenon, or predict

the results of an action. An example might be the hypothesis that "if I

release this ball, it will fall to the floor": this suggestion can then

be tested by carrying out the experiment of letting go of the ball, and

observing the results. Formally, a hypothesis is compared against its

opposite or

null hypothesis

("if I release this ball, it will not fall to the floor"). The null

hypothesis is that there is no explanation or predictive power of the

phenomenon through the reasoning that is being investigated. Once

hypotheses are defined, an experiment can be carried out and the results

analyzed to confirm, refute, or define the accuracy of the hypotheses.
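One common way to make the comparison against a null hypothesis quantitative is a permutation test, sketched below in Python. This technique is not described in the text above and is shown only as one possible illustration. The measurements and the function name permutation_p_value are invented. Under the null hypothesis that the treatment makes no difference, the group labels are interchangeable, so the sketch reshuffles them many times and counts how often a difference at least as large as the observed one arises by chance.

```python
import random
import statistics


def permutation_p_value(group_a, group_b, rng, n_permutations=2000):
    """Share of random relabelings whose absolute difference in means is
    at least as large as the difference actually observed."""
    observed = abs(statistics.mean(group_a) - statistics.mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = abs(statistics.mean(pooled[:n_a]) - statistics.mean(pooled[n_a:]))
        if diff >= observed:
            at_least_as_extreme += 1
    return at_least_as_extreme / n_permutations


# Hypothetical responses for two treatments (made-up numbers).
treatment_a = [12.1, 11.8, 12.6, 12.9, 12.3, 12.7]
treatment_b = [10.2, 10.9, 10.5, 11.1, 10.4, 10.8]

p_value = permutation_p_value(treatment_a, treatment_b, random.Random(0))
print(f"approximate p-value: {p_value:.4f}")
```

A small value indicates that the observed difference would rarely arise if the labels were arbitrary, which counts as evidence against the null hypothesis rather than proof of the alternative.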

Natural experiments

The term "experiment" usually implies a controlled experiment, but

sometimes controlled experiments are prohibitively difficult or

impossible. In this case researchers resort to

natural experiments or

quasi-experiments. Natural experiments rely solely on observations of the variables of the

system

under study, rather than manipulation of just one or a few variables as

occurs in controlled experiments. To the degree possible, they attempt

to collect data for the system in such a way that contribution from all

variables can be determined, and where the effects of variation in

certain variables remain approximately constant so that the effects of

other variables can be discerned. The degree to which this is possible

depends on the observed

correlation between

explanatory variables in the observed data. When these variables are not strongly correlated, natural experiments can approach the power of

controlled experiments. Usually, however, there is some correlation

between these variables, which reduces the reliability of natural

experiments relative to what could be concluded if a controlled

experiment were performed. Also, because natural experiments usually

take place in uncontrolled environments, variables from undetected

sources are neither measured nor held constant, and these may produce

illusory correlations in variables under study.

Much research in several science disciplines, including economics, political science, geology, paleontology, ecology, meteorology, and astronomy, relies on quasi-experiments. For example, in astronomy it is clearly

impossible, when testing the hypothesis "Stars are collapsed clouds of

hydrogen", to start out with a giant cloud of hydrogen, and then perform

the experiment of waiting a few billion years for it to form a star.

However, by observing various clouds of hydrogen in various states of

collapse, and other implications of the hypothesis (for example, the

presence of various spectral emissions from the light of stars), we can

collect data we require to support the hypothesis. An early example of

this type of experiment was the first verification in the 17th century

that light does not travel from place to place instantaneously, but

instead has a measurable speed. Observations showed that the appearance of the moons of Jupiter was slightly delayed when Jupiter was farther from Earth, as opposed to when Jupiter was closer to Earth, and this phenomenon was used to demonstrate that the difference in the time of appearance of the moons was consistent with a measurable speed.

Field experiments

Field experiments are so named to distinguish them from

laboratory

experiments, which enforce scientific control by testing a hypothesis

in the artificial and highly controlled setting of a laboratory. Often

used in the social sciences, and especially in economic analyses of

education and health interventions, field experiments have the advantage

that outcomes are observed in a natural setting rather than in a

contrived laboratory environment. For this reason, field experiments are

sometimes seen as having higher

external validity

than laboratory experiments. However, like natural experiments, field

experiments suffer from the possibility of contamination: experimental

conditions can be controlled with more precision and certainty in the

lab. Yet some phenomena (e.g., voter turnout in an election) cannot be

easily studied in a laboratory.

Contrast with observational study

The black box model for observation (input and output are observables). When there is feedback under some control by the observer, as illustrated, the observation is also an experiment.

An observational study is used when it is impractical, unethical, cost-prohibitive (or otherwise inefficient) to fit a physical or social system into a laboratory setting, to completely control confounding factors, or to apply random assignment. It can also be used when confounding factors are either limited or known well enough to analyze the data in light of them (though this may be rare when social phenomena are under examination). For an observational study to be valid, the researcher must know and account for confounding factors. In these situations, observational studies have value because they often suggest hypotheses that can be tested with randomized experiments or by collecting fresh data.

Fundamentally, however, observational studies are not

experiments. By definition, observational studies lack the manipulation

required for

Baconian experiments.

In addition, observational studies (e.g., in biological or social

systems) often involve variables that are difficult to quantify or

control. Observational studies are limited because they lack the

statistical properties of randomized experiments. In a randomized

experiment, the method of randomization specified in the experimental

protocol guides the statistical analysis, which is usually specified

also by the experimental protocol. Without a statistical model that reflects an objective randomization, the statistical analysis relies on a subjective model. Inferences from subjective models are unreliable in theory and practice.

In fact, there are several cases where carefully conducted observational studies consistently give wrong results, that is, where their results differ from the results of experiments. For example, epidemiological studies of colon cancer consistently show beneficial correlations with broccoli consumption, while experiments find no benefit.

A particular problem with observational studies involving human

subjects is the great difficulty attaining fair comparisons between

treatments (or exposures), because such studies are prone to

selection bias,

and groups receiving different treatments (exposures) may differ

greatly according to their covariates (age, height, weight, medications,

exercise, nutritional status, ethnicity, family medical history, etc.).

In contrast, randomization implies that for each covariate, the mean

for each group is expected to be the same. For any randomized trial,

some variation from the mean is expected, of course, but the

randomization ensures that the experimental groups have mean values that are close, due to the central limit theorem and Markov's inequality.
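The effect of randomization on covariate balance can be illustrated with a small simulation. In the Python sketch below, the covariate (an "age" drawn uniformly between 20 and 80), the group sizes, and the function name are all hypothetical. For each sample size it repeatedly splits the units at random and records the typical gap between the two group means.

```python
import random
import statistics


def mean_gap_after_randomization(covariate, rng):
    """Randomly split the values into two halves and return the absolute
    difference between the two group means."""
    shuffled = list(covariate)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return abs(statistics.fmean(shuffled[:half]) - statistics.fmean(shuffled[half:]))


rng = random.Random(1)
typical_gap = {}
for n in (20, 200, 2000):
    # Hypothetical covariate: ages drawn uniformly between 20 and 80.
    ages = [rng.uniform(20, 80) for _ in range(n)]
    # Repeat the random split 200 times and average the resulting gaps.
    gaps = [mean_gap_after_randomization(ages, rng) for _ in range(200)]
    typical_gap[n] = statistics.fmean(gaps)
    print(f"n = {n:4d}: typical gap in mean age = {typical_gap[n]:.2f}")
```

The typical gap shrinks as the number of units grows, which is why randomization with a low sample size can still leave noticeable imbalance between groups.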

With inadequate randomization or low sample size, the systematic

variation in covariates between the treatment groups (or exposure

groups) makes it difficult to separate the effect of the treatment

(exposure) from the effects of the other covariates, most of which have

not been measured. The mathematical models used to analyze such data

must consider each differing covariate (if measured), and results are

not meaningful if a covariate is neither randomized nor included in the

model.

To avoid conditions that render an experiment far less useful, physicians conducting medical trials (say, for U.S. Food and Drug Administration approval) quantify and randomize the covariates that can be identified. Researchers attempt to reduce the biases of observational studies with complicated statistical methods such as propensity score matching, which require large populations of subjects and extensive information on covariates. Outcomes are also quantified when possible (bone density, the amount of some cell or substance in the blood, physical strength or endurance, etc.) and not based on a subject's or a professional observer's opinion. In this way, the design of an observational study can render the results more objective and therefore more convincing.

Ethics

By placing the distribution of the independent variable(s) under the

control of the researcher, an experiment – particularly when it involves

human subjects

– introduces potential ethical considerations, such as balancing

benefit and harm, fairly distributing interventions (e.g., treatments

for a disease), and

informed consent.

For example, in psychology or health care, it is unethical to provide a

substandard treatment to patients. Therefore, ethical review boards are

supposed to stop clinical trials and other experiments unless a new

treatment is believed to offer benefits as good as current best

practice.

It is also generally unethical (and often illegal) to conduct

randomized experiments on the effects of substandard or harmful

treatments, such as the effects of ingesting arsenic on human health. To understand the effects of such exposures, scientists sometimes use observational studies.

Even when experimental research does not directly involve human

subjects, it may still present ethical concerns. For example, the

nuclear bomb experiments conducted by the

Manhattan Project

implied the use of nuclear reactions to harm human beings even though

the experiments did not directly involve any human subjects.

Experimental method in law

The experimental method can be useful in solving juridical problems.