| Allergy | |

|---|---|

| |

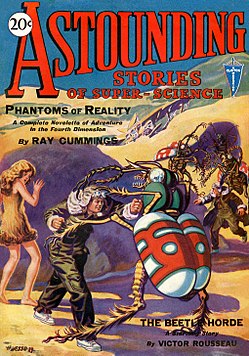

| Hives are a common allergic symptom. |

An allergy is a specific type of exaggerated immune response in which the body mistakenly identifies an ordinarily harmless substance (an allergen, such as pollen, pet dander, or certain foods) as a threat and launches a defense against it.

Allergic diseases are the conditions that arise from allergic reactions, such as hay fever, allergic conjunctivitis, allergic asthma, atopic dermatitis, food allergies, and anaphylaxis. Symptoms of these diseases may include red eyes, an itchy rash, sneezing, coughing, a runny nose, shortness of breath, or swelling. Food intolerances and food poisoning are separate conditions.

Common allergens include pollen and certain foods. Metals and other substances may also cause such problems. Food, insect stings, and medications are common causes of severe reactions. The development of allergies is due to both genetic and environmental factors. The underlying mechanism involves immunoglobulin E antibodies (IgE), part of the body's immune system, which bind to receptors on mast cells or basophils; when an allergen then binds to this IgE, the cells release inflammatory chemicals such as histamine. Diagnosis is typically based on a person's medical history. Further testing of the skin or blood may be useful in certain cases. Positive tests, however, do not necessarily mean there is a significant allergy to the substance in question.

Early exposure of children to potential allergens may be protective. Treatments for allergies include avoidance of known allergens and the use of medications such as steroids and antihistamines. In severe reactions, injectable adrenaline (epinephrine) is recommended. Allergen immunotherapy, which gradually exposes people to larger and larger amounts of allergen, is useful for some types of allergies such as hay fever and reactions to insect bites. Its use in food allergies is unclear.

Allergies are common. In the developed world, about 20% of people are affected by allergic rhinitis, food allergy affects 10% of adults and 8% of children, and about 20% have or have had atopic dermatitis at some point in time. Depending on the country, about 1–18% of people have asthma. Anaphylaxis occurs in 0.05–2% of people. Rates of many allergic diseases appear to be increasing. The word "allergy" was first used by Clemens von Pirquet in 1906.

Signs and symptoms

| Affected organ | Common signs and symptoms |
|---|---|
| Nose | Swelling of the nasal mucosa (allergic rhinitis), runny nose, sneezing |
| Sinuses | Allergic sinusitis |
| Eyes | Redness and itching of the conjunctiva (allergic conjunctivitis), watery eyes |
| Airways | Sneezing, coughing, bronchoconstriction, wheezing and dyspnea, sometimes outright asthma attacks; in severe cases, the airway constricts due to swelling known as laryngeal edema |
| Ears | Feeling of fullness, possibly pain, and impaired hearing due to lack of Eustachian tube drainage |
| Skin | Rashes, such as eczema and hives (urticaria) |
| Gastrointestinal tract | Abdominal pain, bloating, vomiting, diarrhea |

Many allergens such as dust or pollen are airborne particles. In these cases, symptoms arise in areas in contact with air, such as the eyes, nose, and lungs. For instance, allergic rhinitis, also known as hay fever, causes irritation of the nose, sneezing, itching, and redness of the eyes. Inhaled allergens can also lead to increased production of mucus in the lungs, shortness of breath, coughing, and wheezing.

Aside from these ambient allergens, allergic reactions can result from foods, insect stings, and medications such as aspirin and antibiotics like penicillin. Symptoms of food allergy include abdominal pain, bloating, vomiting, diarrhea, itchy skin, and hives. Food allergies rarely cause respiratory (asthmatic) reactions or rhinitis. Insect stings, food, antibiotics, and certain medicines may produce a systemic allergic response called anaphylaxis, in which multiple organ systems can be affected, including the digestive, respiratory, and circulatory systems. Depending on the severity, anaphylaxis can include skin reactions, bronchoconstriction, swelling, low blood pressure, coma, and death. This type of reaction can be triggered suddenly, or its onset can be delayed. An anaphylactic reaction can seem to be subsiding but may recur over a period of time.

Skin

Substances that come into contact with the skin, such as latex, are also common causes of allergic reactions, known as contact dermatitis or eczema. Skin allergies frequently cause rashes, or swelling and inflammation within the skin, in what is known as a "wheal and flare" reaction characteristic of hives and angioedema.

With insect stings, a large local reaction may occur in the form of an area of skin redness greater than 10 cm in size that can last one to two days. This reaction may also occur after immunotherapy.

At the molecular level, the skin handles allergens much as the body handles other foreign invaders. The skin forms an effective barrier to the entry of most allergens, but this barrier cannot withstand everything. An insect sting, for example, can breach the barrier and inject allergen into the affected spot. When an allergen enters the epidermis or dermis, it triggers a localized allergic reaction that activates the mast cells in the skin, causing an immediate increase in vascular permeability that leads to fluid leakage and swelling in the affected area. Mast-cell activation also produces a skin lesion called the wheal-and-flare reaction, in which chemicals released from local nerve endings by a nerve axon reflex dilate the surrounding cutaneous blood vessels and redden the surrounding skin.

In some individuals, the allergic response includes a secondary phase that causes more widespread and sustained edema. This usually occurs about 8 hours after the allergen first contacts the skin. When an allergen is ingested, a dispersed form of the wheal-and-flare reaction, known as urticaria or hives, appears once the allergen enters the bloodstream and reaches the skin. Because the skin reacts visibly to allergens, allergists can test for allergies by injecting a very small amount of an allergen into the skin. Even though these injections are very small and local, they still carry a risk of systemic anaphylaxis.

Cause

Risk factors for allergies can be placed in two broad categories, namely host and environmental factors. Host factors include heredity, sex, race, and age, with heredity being by far the most significant. However, there has been a recent increase in the incidence of allergic disorders that cannot be explained by genetic factors alone. Four major environmental candidates are alterations in exposure to infectious diseases during early childhood, environmental pollution, allergen levels, and dietary changes.

Dust mites

Dust mite allergy, also known as house dust allergy, is a sensitization and allergic reaction to the droppings of house dust mites. The allergy is common and can trigger allergic reactions such as asthma, eczema, or itching. The mites' guts contain potent digestive enzymes (notably peptidase 1) that persist in their feces and are major inducers of allergic reactions such as wheezing. The mites' exoskeletons can also contribute to allergic reactions. Unlike scabies mites or skin follicle mites, house dust mites do not burrow under the skin and are not parasitic.

Foods

A wide variety of foods can cause allergic reactions, but 90% of allergic responses to foods are caused by cow's milk, soy, eggs, wheat, peanuts, tree nuts, fish, and shellfish. Other food allergies, affecting fewer than 1 person per 10,000, may be considered "rare". The most common food allergy in the US population is a sensitivity to crustacea. Although peanut allergies are notorious for their severity, they are not the most common food allergy in adults or children. Severe or life-threatening reactions may be triggered by other allergens and are more common when combined with asthma.

Rates of allergies differ between adults and children. Children can sometimes outgrow peanut allergies. Egg allergies affect one to two percent of children but are outgrown by about two-thirds of children by the age of 5. The sensitivity is usually to proteins in the white, rather than the yolk.

Milk-protein allergies, which are distinct from lactose intolerance, are most common in children. Approximately 60% of milk-protein reactions are immunoglobulin E–mediated, with the remainder usually attributable to inflammation of the colon. Some people are unable to tolerate milk from goats or sheep as well as from cows, and many are also unable to tolerate dairy products such as cheese. Roughly 10% of children with a milk allergy will have a reaction to beef. Lactose intolerance, a common reaction to milk, is not a form of allergy at all but is due to the absence of an enzyme in the digestive tract.

Those with tree nut allergies may be allergic to one or many tree nuts, including pecans, pistachios, and walnuts. Seeds, including sesame and poppy seeds, contain oils in which protein is present and may also elicit an allergic reaction.

Allergens can be transferred from one food to another through genetic engineering; however, genetic modification can also remove allergens. Little research has been done on the natural variation of allergen concentrations in unmodified crops.

Latex

Latex can trigger an IgE-mediated cutaneous, respiratory, and systemic reaction. The prevalence of latex allergy in the general population is believed to be less than one percent. In a hospital study, 1 in 800 surgical patients (0.125 percent) reported latex sensitivity, although the sensitivity among healthcare workers is higher, between seven and ten percent. Researchers attribute this higher level to the exposure of healthcare workers to areas with significant airborne latex allergens, such as operating rooms, intensive-care units, and dental suites. These latex-rich environments may sensitize healthcare workers who regularly inhale allergenic proteins.

The most prevalent response to latex is an allergic contact dermatitis, a delayed hypersensitivity reaction appearing as dry, crusted lesions. This reaction usually lasts 48–96 hours. Sweating or rubbing the area under the glove aggravates the lesions, possibly leading to ulcerations. Anaphylactic reactions occur most often in sensitive patients who have been exposed to a surgeon's latex gloves during abdominal surgery, but other mucosal exposures, such as dental procedures, can also produce systemic reactions.

Latex and banana sensitivity may cross-react. Furthermore, those with latex allergy may also have sensitivities to avocado, kiwifruit, and chestnut. These people often have perioral itching and local urticaria, and these food-induced allergies only occasionally cause systemic responses. Researchers suspect that the cross-reactivity of latex with banana, avocado, kiwifruit, and chestnut occurs because latex proteins are structurally homologous with some other plant proteins.

Medications

About 10% of people report that they are allergic to penicillin; however, of that 10%, 90% turn out not to be. Serious allergies only occur in about 0.03%.
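The penicillin figures above compound: if 90% of the 10% who report an allergy are not actually allergic, roughly 10% × 10% = 1% of people have a reported allergy that holds up. The Python sketch below only does that multiplication; the population of 100,000 is a hypothetical round number, not a figure from the text.

```python
# Fractions taken from the paragraph above.
REPORTED_ALLERGY = 0.10  # share of people who report a penicillin allergy
NOT_CONFIRMED = 0.90     # share of those reports that turn out not to be allergies


def true_allergy_fraction(reported: float, not_confirmed: float) -> float:
    """Fraction of the whole population whose reported allergy is confirmed."""
    return reported * (1.0 - not_confirmed)


population = 100_000  # hypothetical population size, for illustration only
confirmed = true_allergy_fraction(REPORTED_ALLERGY, NOT_CONFIRMED)
print(f"Report a penicillin allergy: {round(population * REPORTED_ALLERGY)}")
print(f"Actually allergic:           {round(population * confirmed)}")
```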

Insect stings

One of the main sources of human allergies is insects. An allergy to insects can be brought on by bites, stings, ingestion, and inhalation.

Toxins interacting with proteins

Another non-food protein reaction, urushiol-induced contact dermatitis, originates after contact with poison ivy, eastern poison oak, western poison oak, or poison sumac. Urushiol, which is not itself a protein, acts as a hapten and chemically reacts with, binds to, and changes the shape of integral membrane proteins on exposed skin cells. The immune system does not recognize the affected cells as normal parts of the body, causing a T-cell-mediated immune response.

Of these poisonous plants, sumac is the most virulent. The resulting dermatological response to the reaction between urushiol and membrane proteins includes redness, swelling, papules, vesicles, blisters, and streaking.

Estimates of the fraction of the population that will have an immune response vary. Approximately 25% of the population will have a strong allergic response to urushiol. In general, approximately 80–90% of adults will develop a rash if they are exposed to 0.0050 mg (7.7×10⁻⁵ gr) of purified urushiol, but some people are so sensitive that a molecular trace on the skin is enough to initiate an allergic reaction.
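The grain figure in parentheses is a unit conversion of the 0.0050 mg dose. As a quick check, here is a minimal Python sketch, assuming the standard definition of one grain as exactly 64.79891 mg (a constant not stated in the text):

```python
MG_PER_GRAIN = 64.79891  # one grain is defined as exactly 64.79891 mg


def mg_to_grains(mg: float) -> float:
    """Convert a mass in milligrams to grains."""
    return mg / MG_PER_GRAIN


dose_mg = 0.0050  # purified urushiol dose quoted in the text
print(f"{mg_to_grains(dose_mg):.1e} gr")  # prints 7.7e-05 gr
```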

Genetics

Allergic diseases are strongly familial: identical twins are likely to have the same allergic diseases about 70% of the time, while non-identical twins share the same allergy about 40% of the time. Allergic parents are more likely to have allergic children, and those children's allergies are likely to be more severe than those of children of non-allergic parents. Some allergies, however, are not consistent along genealogies; parents who are allergic to peanuts may have children who are allergic to ragweed. The likelihood of developing allergies is inherited and related to an irregularity in the immune system, but the specific allergen is not.

The risk of allergic sensitization and the development of allergies varies with age, with young children most at risk. Several studies have shown that IgE levels are highest in childhood and fall rapidly between the ages of 10 and 30 years. The prevalence of hay fever peaks in children and young adults, and the incidence of asthma is highest in children under 10.

Ethnicity may play a role in some allergies; however, racial factors have been difficult to separate from environmental influences and changes due to migration. It has been suggested that different genetic loci are responsible for asthma in people of European, Hispanic, Asian, and African origins.

Researchers have worked to characterize genes involved in inflammation and the maintenance of mucosal integrity. The identified genes associated with allergic disease severity, progression, and development primarily function in four areas: regulating inflammatory responses (IFN-α, TLR-1, IL-13, IL-4, IL-5, HLA-G, iNOS), maintaining vascular endothelium and mucosal lining (FLG, PLAUR, CTNNA3, PCDH1, COL29A1), mediating immune cell function (PHF11, H1R, HDC, TSLP, STAT6, RERE, PPP2R3C), and influencing susceptibility to allergic sensitization (e.g., ORMDL3, CHI3L1).

Multiple studies have investigated the genetic profiles of individuals with predispositions to and experiences of allergic diseases, revealing a complex polygenic architecture. Specific genetic loci, such as MIIP, CXCR4, SCML4, CYP1B1, ICOS, and LINC00824, have been directly associated with allergic disorders. Additionally, some loci show pleiotropic effects, linking them to both autoimmune and allergic conditions, including PRDM2, G3BP1, HBS1L, and POU2AF1. These genes engage in shared inflammatory pathways across various epithelial tissues—such as the skin, esophagus, vagina, and lung—highlighting common genetic factors that contribute to the pathogenesis of asthma and other allergic diseases.

In atopic patients, transcriptome studies have identified IL-13-related pathways as key to eosinophilic airway inflammation and remodeling, which produce the airflow restriction characteristic of allergic asthma. Gene expression was quite variable: genes associated with inflammation were found almost exclusively in superficial airways, while genes related to airway remodeling were mainly present in endobronchial biopsy specimens. This enhanced gene profile was similar across multiple sample types (nasal brushing, sputum, and endobronchial brushing), demonstrating the importance of eosinophilic inflammation, mast cell degranulation, and group 3 innate lymphoid cells in severe adult-onset asthma. IL-13 is an immunoregulatory cytokine made mostly by activated T-helper 2 (Th2) cells. It is important for many steps in B-cell maturation and differentiation, since it increases expression of CD23 and MHC class II molecules and aids B-cell isotype switching to IgE. IL-13 also suppresses macrophage function by reducing the release of pro-inflammatory cytokines and chemokines. Most strikingly, IL-13 is the prime mover in allergen-induced asthma via pathways that are independent of IgE and eosinophils.

Hygiene hypothesis

Allergic diseases are caused by inappropriate immunological responses to harmless antigens, driven by a TH2-mediated immune response. Many bacteria and viruses elicit a TH1-mediated immune response, which down-regulates TH2 responses. The first proposed mechanism of action of the hygiene hypothesis was that insufficient stimulation of the TH1 arm of the immune system leads to an overactive TH2 arm, which in turn leads to allergic disease. In other words, individuals living in too sterile an environment are not exposed to enough pathogens to keep the immune system busy. Since our bodies evolved to deal with a certain level of such pathogens, when they are not exposed to this level, the immune system attacks harmless antigens, and normally benign substances such as pollen trigger an immune response.

The hygiene hypothesis was developed to explain the observation that hay fever and eczema, both allergic diseases, were less common in children from larger families, who were presumably exposed to more infectious agents through their siblings, than in children from single-child families. It is used to explain the increase in allergic diseases seen since industrialization and the higher incidence of allergic diseases in more developed countries. The hypothesis has since expanded to include exposure to symbiotic bacteria and parasites, along with infectious agents, as important modulators of immune system development.

Epidemiological data support the hygiene hypothesis. Studies have shown that various immunological and autoimmune diseases are much less common in the developing world than in the industrialized world, and that immigrants to the industrialized world from the developing world increasingly develop immunological disorders the longer they have lived in the industrialized world. Longitudinal studies in the developing world demonstrate an increase in immunological disorders as a country grows more affluent and, it is presumed, cleaner. The use of antibiotics in the first year of life has been linked to asthma and other allergic diseases. The use of antibacterial cleaning products has also been associated with higher incidence of asthma, as has birth by caesarean section rather than vaginal birth.

Stress

Chronic stress can aggravate allergic conditions. This has been attributed to a T helper 2 (TH2)-predominant response driven by suppression of interleukin 12 by both the autonomic nervous system and the hypothalamic–pituitary–adrenal axis. Stress management in highly susceptible individuals may improve symptoms.

Other environmental factors

Allergic diseases are more common in industrialized countries than in countries that are more traditional or agricultural, and there is a higher rate of allergic disease in urban populations versus rural populations, although these differences are becoming less defined. Historically, the trees planted in urban areas were predominantly male to prevent litter from seeds and fruits, but the high ratio of male trees causes high pollen counts, a phenomenon that horticulturist Tom Ogren has called "botanical sexism".

Altered exposure to microorganisms is another plausible explanation, at present, for the increase in atopic allergy. Endotoxin exposure reduces release of inflammatory cytokines such as TNF-α, IFNγ, interleukin-10, and interleukin-12 from white blood cells (leukocytes) that circulate in the blood. Certain microbe-sensing proteins, known as Toll-like receptors, found on the surface of cells in the body are also thought to be involved in these processes.

Parasitic worms and similar parasites are present in untreated drinking water in developing countries, and were present in the water of developed countries until the routine chlorination and purification of drinking water supplies. Recent research has shown that some common parasites, such as intestinal worms (e.g., hookworms), secrete chemicals into the gut wall (and, hence, the bloodstream) that suppress the immune system and prevent the body from attacking the parasite. This gives rise to a new slant on the hygiene hypothesis: that co-evolution of humans and parasites has led to an immune system that functions correctly only in the presence of the parasites. Without them, the immune system becomes unbalanced and oversensitive.

In particular, research suggests that allergies may coincide with the delayed establishment of gut flora in infants. However, the research on this theory is conflicting, with some studies performed in China and Ethiopia showing an increase in allergy in people infected with intestinal worms. Clinical trials have been initiated to test the effectiveness of helminthic therapy with certain worms in treating some allergies. It may be that the term 'parasite' is inappropriate, and that a hitherto unsuspected symbiosis is in fact at work.

Pathophysiology

Acute response

In the initial stages of allergy, a type I hypersensitivity reaction against an allergen encountered for the first time and presented by a professional antigen-presenting cell causes a response in a type of immune cell called a TH2 lymphocyte, a subset of T cells that produce a cytokine called interleukin-4 (IL-4). These TH2 cells interact with other lymphocytes called B cells, whose role is production of antibodies. Coupled with signals provided by IL-4, this interaction stimulates the B cell to begin production of a large amount of a particular type of antibody known as IgE. Secreted IgE circulates in the blood and binds to an IgE-specific receptor (a kind of Fc receptor called FcεRI) on the surface of other kinds of immune cells called mast cells and basophils, which are both involved in the acute inflammatory response. The IgE-coated cells, at this stage, are sensitized to the allergen.

If later exposure to the same allergen occurs, the allergen can bind to the IgE molecules held on the surface of the mast cells or basophils. Cross-linking of the IgE and Fc receptors occurs when more than one IgE-receptor complex interacts with the same allergenic molecule, and this activates the sensitized cell. Activated mast cells and basophils undergo a process called degranulation, during which they release histamine and other inflammatory chemical mediators (cytokines, interleukins, leukotrienes, and prostaglandins) from their granules into the surrounding tissue, causing several systemic effects, such as vasodilation, mucous secretion, nerve stimulation, and smooth muscle contraction.

This results in rhinorrhea, itchiness, dyspnea, and anaphylaxis. Depending on the individual, allergen, and mode of introduction, the symptoms can be system-wide (classical anaphylaxis) or localized to specific body systems. Asthma is localized to the respiratory system and eczema is localized to the dermis.

Late-phase response

After the chemical mediators of the acute response subside, late-phase responses can often occur. This is due to the migration of other leukocytes such as neutrophils, lymphocytes, eosinophils, and macrophages to the initial site. The reaction is usually seen 2–24 hours after the original reaction. Cytokines from mast cells may play a role in the persistence of long-term effects. Late-phase responses seen in asthma are slightly different from those seen in other allergic responses, although they are still caused by release of mediators from eosinophils and are still dependent on activity of TH2 cells.

Allergic contact dermatitis

Although allergic contact dermatitis is termed an "allergic" reaction (which usually refers to type I hypersensitivity), its pathophysiology involves a reaction that more correctly corresponds to a type IV hypersensitivity reaction. In type IV hypersensitivity, there is activation of certain types of T cells (CD8+) that destroy target cells on contact, as well as activated macrophages that produce hydrolytic enzymes.

Diagnosis

Effective management of allergic diseases relies on the ability to make an accurate diagnosis. Allergy testing can help confirm or rule out allergies. Correct diagnosis, counseling, and avoidance advice based on valid allergy test results reduce the incidence of symptoms and need for medications, and improve quality of life. To assess the presence of allergen-specific IgE antibodies, two different methods can be used: a skin prick test, or an allergy blood test. Both methods are recommended, and they have similar diagnostic value.

Skin prick tests and blood tests are equally cost-effective, and health economic evidence shows that both tests are cost-effective compared with no test. Early and more accurate diagnoses save costs by reducing consultations, referrals to secondary care, misdiagnoses, and emergency admissions.

Allergies change dynamically over time. Regular testing for relevant allergens shows whether and how patient management can be changed to improve health and quality of life. Annual testing is often the practice for determining whether allergies to milk, egg, soy, and wheat have been outgrown, and the testing interval is extended to 2–3 years for allergies to peanut, tree nuts, fish, and crustacean shellfish. Results of follow-up testing can guide decisions about whether and when it is safe to introduce or reintroduce allergenic food into the diet.

Skin prick testing

Skin testing is also known as "puncture testing" and "prick testing" due to the series of tiny punctures or pricks made into the patient's skin. Tiny amounts of suspected allergens and/or their extracts (e.g., pollen, grass, mite proteins, peanut extract) are introduced to sites on the skin marked with pen or dye (the ink or dye should be carefully selected, lest it cause an allergic response itself). Negative and positive controls are included for comparison (e.g., saline or glycerin as the negative control and histamine as the positive control). A small plastic or metal device is used to puncture or prick the skin. Sometimes the allergens are injected intradermally into the patient's skin with a needle and syringe. Common areas for testing include the inside forearm and the back.

If the patient is allergic to the substance, a visible inflammatory reaction will usually occur within 30 minutes. This response ranges from slight reddening of the skin to, in more sensitive patients, a full-blown hive similar to a mosquito bite (called a "wheal and flare"). Allergists normally interpret skin prick test results on a scale of severity, with +/− meaning borderline reactivity and 4+ a large reaction. Increasingly, allergists measure and record the diameter of the wheal and flare reaction. Interpretation by well-trained allergists is often guided by relevant literature.

In general, a response is interpreted as positive when the wheal of an antigen is ≥3 mm larger than the wheal of the negative control (e.g., saline or glycerin). Some patients may believe they have determined their own allergic sensitivity from observation, but a skin test has been shown to be much better than patient observation at detecting allergy.
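The ≥3 mm rule of thumb can be written as a simple comparison. The Python sketch below is illustrative only: the function name and the readings are hypothetical, and it ignores clinical context such as whether the histamine positive control reacted or the patient recently took antihistamines.

```python
POSITIVE_THRESHOLD_MM = 3.0  # antigen wheal must exceed the negative control by at least 3 mm


def skin_prick_positive(antigen_wheal_mm: float, negative_control_wheal_mm: float) -> bool:
    """Apply the >= 3 mm rule of thumb to two wheal diameters (hypothetical helper)."""
    if antigen_wheal_mm < 0 or negative_control_wheal_mm < 0:
        raise ValueError("wheal diameters cannot be negative")
    return antigen_wheal_mm - negative_control_wheal_mm >= POSITIVE_THRESHOLD_MM


# Hypothetical readings in millimetres: (allergen, antigen wheal, saline wheal)
readings = [
    ("grass pollen", 6.0, 1.0),
    ("cat dander", 2.5, 1.0),
    ("dust mite", 4.0, 1.0),
]
for name, wheal, control in readings:
    result = "positive" if skin_prick_positive(wheal, control) else "negative"
    print(f"{name}: {result}")
```

A positive result under this rule indicates sensitization, which does not necessarily mean there is a significant allergy to the substance.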

If a serious life-threatening anaphylactic reaction has brought a patient in for evaluation, some allergists will prefer an initial blood test prior to performing the skin prick test. Skin tests may not be an option if the patient has widespread skin disease or has taken antihistamines in the last several days.

Patch testing

Patch testing is a method used to determine whether a specific substance causes allergic inflammation of the skin. It tests for delayed reactions and is used to help ascertain the cause of skin contact allergy or contact dermatitis. Adhesive patches, usually treated with several common allergenic chemicals or skin sensitizers, are applied to the back. The skin is then examined for possible local reactions at least twice, usually 48 hours after application of the patch and again two or three days later.
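Taken together, the usual schedule places the second reading four to five days (96 to 120 hours) after the patches are applied. A minimal Python sketch of that arithmetic; the function name and the application date are hypothetical:

```python
from datetime import datetime, timedelta


def patch_test_reading_times(applied: datetime) -> tuple[datetime, datetime, datetime]:
    """Return (first reading, earliest second reading, latest second reading).

    Follows the usual schedule described above: a first reading 48 hours after
    the patches are applied, then a second reading two or three days later.
    """
    first = applied + timedelta(hours=48)
    return first, first + timedelta(days=2), first + timedelta(days=3)


applied = datetime(2024, 3, 4, 9, 0)  # hypothetical application: Monday 09:00
first, second_earliest, second_latest = patch_test_reading_times(applied)
print(first.strftime("%a %H:%M"))            # Wed 09:00
print(second_earliest.strftime("%a %H:%M"))  # Fri 09:00
print(second_latest.strftime("%a %H:%M"))    # Sat 09:00
```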

Blood testing

An allergy blood test is quick and simple and can be ordered by a licensed health care provider (e.g., an allergy specialist) or general practitioner. Unlike skin-prick testing, a blood test can be performed irrespective of age, skin condition, medication, symptom, disease activity, and pregnancy. Adults and children of any age can get an allergy blood test. For babies and very young children, a single needle stick for allergy blood testing is often gentler than several skin pricks.

An allergy blood test is available through most laboratories. A sample of the patient's blood is sent to a laboratory for analysis, and the results are sent back a few days later. Multiple allergens can be detected with a single blood sample. Allergy blood tests are very safe since the person is not exposed to any allergens during the testing procedure. After the onset of anaphylaxis or a severe allergic reaction, guidelines recommend emergency departments obtain a time-sensitive blood test to determine blood tryptase levels and assess for mast cell activation.

The test measures the concentration of specific IgE antibodies in the blood. Quantitative IgE test results make it possible to rank how different substances may affect symptoms. A rule of thumb is that the higher the IgE antibody value, the greater the likelihood of symptoms. Allergens found at low levels that today do not result in symptoms cannot help predict future symptom development. The quantitative allergy blood result can help determine what a patient is allergic to, help predict and follow the disease development, estimate the risk of a severe reaction, and explain cross-reactivity.

A low total IgE level is not adequate to rule out sensitization to commonly inhaled allergens. Statistical methods, such as ROC curves, predictive value calculations, and likelihood ratios, have been used to examine the relationship of various testing methods to each other. These methods have shown that patients with a high total IgE have a high probability of allergic sensitization, but further investigation with allergy tests for specific IgE antibodies for a carefully chosen set of allergens is often warranted.
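Predictive values and likelihood ratios are computed from a 2×2 table that cross-tabulates test results against a reference diagnosis. The Python sketch below uses the standard textbook formulas; the class name and the counts are invented for illustration, and the choice of reference standard (for example, a supervised challenge test) is an assumption rather than something the text specifies.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TwoByTwo:
    """Counts from comparing a test against a reference diagnosis (hypothetical)."""
    true_pos: int   # test positive, allergy confirmed
    false_pos: int  # test positive, allergy not confirmed
    false_neg: int  # test negative, allergy confirmed
    true_neg: int   # test negative, allergy not confirmed

    @property
    def sensitivity(self) -> float:
        return self.true_pos / (self.true_pos + self.false_neg)

    @property
    def specificity(self) -> float:
        return self.true_neg / (self.true_neg + self.false_pos)

    @property
    def ppv(self) -> float:
        """Positive predictive value: share of positive tests that are true allergies."""
        return self.true_pos / (self.true_pos + self.false_pos)

    @property
    def npv(self) -> float:
        """Negative predictive value: share of negative tests with no allergy."""
        return self.true_neg / (self.true_neg + self.false_neg)

    @property
    def lr_positive(self) -> float:
        """Positive likelihood ratio: sensitivity / (1 - specificity)."""
        false_pos_rate = 1 - self.specificity
        return math.inf if false_pos_rate == 0 else self.sensitivity / false_pos_rate

    @property
    def lr_negative(self) -> float:
        """Negative likelihood ratio: (1 - sensitivity) / specificity."""
        return math.inf if self.specificity == 0 else (1 - self.sensitivity) / self.specificity


# Hypothetical counts, invented for illustration only.
table = TwoByTwo(true_pos=45, false_pos=30, false_neg=5, true_neg=120)
print(f"sensitivity {table.sensitivity:.2f}, specificity {table.specificity:.2f}")
print(f"PPV {table.ppv:.2f}, NPV {table.npv:.2f}")
print(f"LR+ {table.lr_positive:.2f}, LR- {table.lr_negative:.3f}")
```

With these made-up counts the positive predictive value is 0.6, so four in ten positive results would not correspond to a confirmed allergy. This is one way a positive test can fail to indicate a significant allergy.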

Laboratory methods to measure specific IgE antibodies for allergy testing include enzyme-linked immunosorbent assay (ELISA, or EIA), radioallergosorbent test (RAST), fluorescent enzyme immunoassay (FEIA), and chemiluminescence immunoassay (CLIA).

Other testing

Challenge testing: Challenge testing is when tiny amounts of a suspected allergen are introduced to the body orally, through inhalation, or via other routes. Except for testing food and medication allergies, challenges are rarely performed. When this type of testing is chosen, it must be closely supervised by an allergist.

Elimination/challenge tests: This testing method is used most often with foods or medicines. A patient with a suspected allergy is instructed to modify their diet to totally avoid that allergen for a set time. If the patient experiences significant improvement, they may then be "challenged" by reintroducing the allergen to see if symptoms are reproduced.

Unreliable tests: There are other types of allergy testing methods that are unreliable, including applied kinesiology (allergy testing through muscle relaxation), cytotoxicity testing, urine autoinjection, skin titration (Rinkel method), and provocative and neutralization (subcutaneous) testing or sublingual provocation.

Differential diagnosis

Before a diagnosis of allergic disease can be confirmed, other plausible causes of the presenting symptoms must be considered. Vasomotor rhinitis, for example, is one of many illnesses that share symptoms with allergic rhinitis, underscoring the need for professional differential diagnosis. Once a diagnosis of asthma, rhinitis, anaphylaxis, or other allergic disease has been made, there are several methods for discovering the causative agent of that allergy.

Prevention

Giving peanut products early in childhood may decrease the risk of allergies, and exclusive breastfeeding during at least the first few months of life may decrease the risk of allergic dermatitis. There is little evidence that a mother's diet during pregnancy or breastfeeding affects the risk of allergies, although some research suggests that irregular exposure to cow's milk might increase the risk of cow's milk allergy. There is some evidence that delayed introduction of certain foods is useful, and that early exposure to potential allergens may actually be protective.

Fish oil supplementation during pregnancy is associated with a lower risk of food sensitivities. Probiotic supplements during pregnancy or infancy may help to prevent atopic dermatitis.

Management

Management of allergies typically involves avoiding the allergy trigger and taking medications to improve the symptoms. Allergen immunotherapy may be useful for some types of allergies.

Medication

Several medications may be used to block the action of allergic mediators or to prevent the activation of cells and degranulation processes. Antihistamines, glucocorticoids, epinephrine (adrenaline), mast cell stabilizers, and antileukotriene agents are common treatments of allergic diseases. Anticholinergics, decongestants, and other compounds thought to impair eosinophil chemotaxis are also commonly used. Anaphylaxis is rare, but its severity often requires an epinephrine injection; where medical care is unavailable, a device known as an epinephrine autoinjector may be used.

Immunotherapy

Allergen immunotherapy is useful for environmental allergies, allergies to insect bites, and asthma. Its benefit for food allergies is unclear, and it is therefore not recommended for them. Immunotherapy involves exposing people to gradually larger amounts of allergen in an effort to change the immune system's response.

Meta-analyses have found that injections of allergens under the skin are effective in the treatment of allergic rhinitis in children and of asthma. The benefits may last for years after treatment is stopped. This form of immunotherapy is generally safe and effective for allergic rhinitis and conjunctivitis, allergic forms of asthma, and allergies to stinging insects.

To a lesser extent, the evidence also supports the use of sublingual immunotherapy for rhinitis and asthma. For seasonal allergies the benefit is small. In this form the allergen is given under the tongue and people often prefer it to injections. Immunotherapy is not recommended as a stand-alone treatment for asthma.

Alternative medicine

An experimental treatment, enzyme potentiated desensitization (EPD), has been tried for decades but is not generally accepted as effective. EPD uses dilutions of allergen and an enzyme, beta-glucuronidase, to which T-regulatory lymphocytes are supposed to respond by favoring desensitization, or down-regulation, rather than sensitization. EPD has also been tried for the treatment of autoimmune diseases, but evidence does not show effectiveness.

A review found no effectiveness of homeopathic treatments and no difference compared with placebo. The authors concluded that based on rigorous clinical trials of all types of homeopathy for childhood and adolescence ailments, there is no convincing evidence that supports the use of homeopathic treatments.

According to the U.S. National Center for Complementary and Integrative Health, the evidence that saline nasal irrigation and butterbur are effective is relatively strong compared with that for other alternative medicine treatments, for which the scientific evidence is weak, negative, or nonexistent, such as honey, acupuncture, omega-3 fatty acids, probiotics, astragalus, capsaicin, grape seed extract, Pycnogenol, quercetin, spirulina, stinging nettle, tinospora, or guduchi.

Epidemiology

Allergic diseases such as hay fever and asthma have increased in the Western world over the past two to three decades. Increases in allergic asthma and other atopic disorders in industrialized nations are estimated to have begun in the 1960s and 1970s, with further increases during the 1980s and 1990s, although some suggest that a steady rise in sensitization has been occurring since the 1920s. The number of new cases of atopy per year in developing countries has, in general, remained much lower.

| Allergy type | United States | United Kingdom |
|---|---|---|
| Allergic rhinitis | 35.9 million (about 11% of the population) | 3.3 million (about 5.5% of the population) |
| Asthma | 10 million have allergic asthma (about 3% of the population). The prevalence of asthma increased 75% from 1980 to 1994. Asthma prevalence is 39% higher in African Americans than in Europeans. | 5.7 million (about 9.4%). In six- and seven-year-olds, asthma increased from 18.4% to 20.9% over five years; during the same period, the rate decreased from 31% to 24.7% in 13- to 14-year-olds. |
| Atopic eczema | About 9% of the population. Between 1960 and 1990, prevalence in children increased from 3% to 10%. | 5.8 million (about 1% severe) |
| Anaphylaxis | At least 40 deaths per year due to insect venom. About 400 deaths due to penicillin anaphylaxis. About 220 cases of anaphylaxis and 3 deaths per year are due to latex allergy. An estimated 150 people die annually from anaphylaxis due to food allergy. | Between 1999 and 2006, 48 deaths occurred in people ranging from five months to 85 years old. |
| Insect venom | Around 15% of adults have mild, localized allergic reactions. Systemic reactions occur in 3% of adults and less than 1% of children. | Unknown |
| Drug allergies | Anaphylactic reactions to penicillin cause 400 deaths per year. | Unknown |
| Food allergies | 7.6% of children and 10.8% of adults. Peanut and/or tree nut (e.g. walnut) allergy affects about three million Americans, or 1.1% of the population. | 5–7% of infants and 1–2% of adults. A 117.3% increase in peanut allergies was observed from 2001 to 2005; an estimated 25,700 people in England are affected. |
| Multiple allergies (asthma, eczema, and allergic rhinitis together) | Unknown | 2.3 million (about 3.7%); prevalence increased by 48.9% between 2001 and 2005. |

Changing frequency

Although genetic factors govern susceptibility to atopic disease, increases in atopy have occurred within too short a period to be explained by a genetic change in the population, which points to environmental or lifestyle changes. Several hypotheses have been proposed to explain this increased rate. Increased exposure to perennial allergens may be due to housing changes and more time spent indoors, while decreased activation of a common immune control mechanism may be caused by changes in cleanliness or hygiene and exacerbated by dietary changes, obesity, and a decline in physical exercise. The hygiene hypothesis maintains that high living standards and hygienic conditions expose children to fewer infections. It is thought that reduced bacterial and viral infections early in life direct the maturing immune system away from TH1-type responses, leading to unrestrained TH2 responses that allow for an increase in allergy.

Changes in rates and types of infection alone, however, have been unable to explain the observed increase in allergic disease, and recent evidence has focused attention on the importance of the gastrointestinal microbial environment. Evidence has shown that exposure to food and fecal-oral pathogens, such as hepatitis A, Toxoplasma gondii, and Helicobacter pylori (which also tend to be more prevalent in developing countries), can reduce the overall risk of atopy by more than 60%, and an increased rate of parasitic infections has been associated with a decreased prevalence of asthma. It is speculated that these infections exert their effect by critically altering TH1/TH2 regulation. Important elements of newer hygiene hypotheses also include exposure to endotoxins, exposure to pets and growing up on a farm.

History

Some symptoms attributable to allergic diseases are mentioned in ancient sources. In particular, three members of the Roman Julio-Claudian dynasty (Augustus, Claudius and Britannicus) are suspected to have had a family history of atopy. The concept of "allergy" was originally introduced in 1906 by the Viennese pediatrician Clemens von Pirquet, after he noticed that patients who had received injections of horse serum or smallpox vaccine usually had quicker, more severe reactions to second injections. Pirquet called this phenomenon "allergy" from the Ancient Greek words ἄλλος allos meaning "other" and ἔργον ergon meaning "work".

All forms of hypersensitivity used to be classified as allergies, and all were thought to be caused by an improper activation of the immune system. Later, it became clear that several different disease mechanisms were implicated, with a common link to a disordered activation of the immune system. In 1963, a new classification scheme was designed by Philip Gell and Robin Coombs that described four types of hypersensitivity reactions, known as Type I to Type IV hypersensitivity.

With this new classification, the word allergy, sometimes clarified as a true allergy, was restricted to type I hypersensitivities (also called immediate hypersensitivity), which are characterized as rapidly developing reactions involving IgE antibodies.

A major breakthrough in understanding the mechanisms of allergy was the discovery of the antibody class labeled immunoglobulin E (IgE). IgE was simultaneously discovered in 1966–67 by two independent groups: Ishizaka's team at the Children's Asthma Research Institute and Hospital in Denver, USA, and by Gunnar Johansson and Hans Bennich in Uppsala, Sweden.[159] Their joint paper was published in April 1969.

Diagnosis

Radiometric assays include the radioallergosorbent test (RAST test) method, which uses IgE-binding (anti-IgE) antibodies labeled with radioactive isotopes for quantifying the levels of IgE antibody in the blood.

The RAST methodology was invented and marketed in 1974 by Pharmacia Diagnostics AB, Uppsala, Sweden, and the acronym RAST is actually a brand name. In 1989, Pharmacia Diagnostics AB replaced it with a superior test named the ImmunoCAP Specific IgE blood test, which uses the newer fluorescence-labeled technology.

The American College of Allergy, Asthma and Immunology (ACAAI) and the American Academy of Allergy, Asthma and Immunology (AAAAI) issued the Joint Task Force Report "Pearls and pitfalls of allergy diagnostic testing" in 2008, which is firm in its statement that the term RAST is now obsolete:

The term RAST became a colloquialism for all varieties of (in vitro allergy) tests. This is unfortunate because it is well recognized that there are well-performing tests and some that do not perform so well, yet they are all called RASTs, making it difficult to distinguish which is which. For these reasons, it is now recommended that use of RAST as a generic descriptor of these tests be abandoned.

The updated version, the ImmunoCAP Specific IgE blood test, is the only specific IgE assay to receive Food and Drug Administration approval to quantitatively report to its detection limit of 0.1 kU/L.

Medical specialty

| Occupation | |
|---|---|
| Occupation type | Specialty |
| Activity sectors | Medicine |
| Specialty | Immunology |
| Fields of employment | Hospitals, Clinics |

The medical specialty that studies, diagnoses, and treats diseases caused by allergies is called allergology. An allergist is a physician specially trained to manage and treat allergies, asthma, and other allergic diseases. In the United States, physicians holding certification by the American Board of Allergy and Immunology (ABAI) have successfully completed an accredited educational program and evaluation process, including a proctored examination to demonstrate knowledge, skills, and experience in patient care in allergy and immunology. Becoming an allergist/immunologist requires completion of at least nine years of training.

After completing medical school and graduating with a medical degree, a physician will undergo three years of training in internal medicine (to become an internist) or pediatrics (to become a pediatrician). Once physicians have finished training in one of these specialties, they must pass the exam of either the American Board of Pediatrics (ABP), the American Osteopathic Board of Pediatrics (AOBP), the American Board of Internal Medicine (ABIM), or the American Osteopathic Board of Internal Medicine (AOBIM). Internists or pediatricians wishing to focus on the sub-specialty of allergy-immunology then complete at least an additional two years of study, called a fellowship, in an allergy/immunology training program. Allergist/immunologists listed as ABAI-certified have successfully passed the certifying examination of the ABAI following their fellowship.

In the United Kingdom, allergy is a subspecialty of general medicine or pediatrics. After obtaining postgraduate exams (MRCP or MRCPCH), a doctor works for several years as a specialist registrar before qualifying for the General Medical Council specialist register. Allergy services may also be delivered by immunologists. A 2003 Royal College of Physicians report presented a case for improvement of what were felt to be inadequate allergy services in the UK.

In 2006, the House of Lords convened a subcommittee. It concluded likewise in 2007 that allergy services were insufficient to deal with what the Lords referred to as an "allergy epidemic" and its social cost; it made several recommendations.