"The increase in CO2 from about 185 to about 265 ppm [from the last glacial period to the pre-industrial modern interglacial] gives a radiative forcing of

$$\Delta F_{\mathrm{CO_2}} = (5.35\ \mathrm{W\,m^{-2}})\,\ln(265/185) = 1.9\ \mathrm{W\,m^{-2}}$$

The radiative forcing for CH4 is determined in a way analogous to that for CO2. For the increase of CH4 from about 375 to about 675 ppb, $\Delta F_{\mathrm{CH_4}} \approx 0.3$ W·m⁻². Thus, the total radiative forcing, ΔF, due to these two greenhouse gases is about 2.2 W·m⁻². The predicted change in the average planetary surface temperature is

$$\Delta T \approx [0.3\ \mathrm{K\,(W\,m^{-2})^{-1}}]\,(2.2\ \mathrm{W\,m^{-2}}) \approx 0.7\ \mathrm{K}$$

Analyses from multiple sites based on several different temperature proxies indicate that Earth’s average surface temperature increased between 3 and 4 K during the change from the last glacial period to the present era.

Our calculated temperature change, that includes only the radiative forcing from increases in greenhouse gas concentrations, accounts for 20-25% of this observed temperature increase. This result implies climate sensitivity factor perhaps four to five times greater, ∼1.3 K·(W·m⁻²)⁻¹, than obtained by simply balancing the radiative forcing of the greenhouse gases. [Italics and bold mine] The analysis based only on greenhouse gas forcing has not accounted for feedbacks in the planetary system triggered by increasing temperature, including changes in the structure of the atmosphere."

Unless I am seriously misreading this, the author(s) assume that the entire temperature rise from the last glacial to the current interglacial (which they take to be 3–4 K instead of the correct value of ~8 K) is due to increases in atmospheric greenhouse gases (which account for only 0.7 K) and their associated feedbacks (accounting for an additional 2.3–3.3 K). As this is approximately what the IPCC claims, it is reasonable to suppose that this assumption is the "empirical" basis for such a high climate sensitivity. Note also that no justification is offered for this assumption; it is simply asserted.

The increase in atmospheric water vapor due to rising temperatures is often cited as the main cause of this large feedback. Curiously, however, although water vapor is a greenhouse gas and its increase should induce an additional temperature rise, I have never encountered an attempt to calculate how large that rise should be. This is curious because the same equations used to calculate the effect of CO2 on temperature can be applied to water vapor; only the constant in the Arrhenius relation needs to be modified. That can be done by noting that although there is (conservatively) ten times as much water vapor as CO2, it is estimated to cause only three times as much greenhouse warming, implying a constant only about one-third as large, or 5.35/3 = 1.78 W·m⁻². As for the increase in atmospheric water vapor due to a temperature rise of 0.7 K, we can apply the rule of thumb that every 10 K increase roughly doubles the water vapor pressure; for 0.7 K, this yields an increase of about 5% (2^0.07 ≈ 1.05), or a ratio of 1.05. Using the same Arrhenius relationship as in the post below gives

$$\Delta F_{\mathrm{H_2O}} = (1.78\ \mathrm{W\,m^{-2}})\,\ln(1.05) = 0.087\ \mathrm{W\,m^{-2}}$$

and applying the same approximation for calculating the temperature increase

$$\Delta T \approx [0.3\ \mathrm{K\,(W\,m^{-2})^{-1}}]\,(0.087\ \mathrm{W\,m^{-2}}) \approx 0.026\ \mathrm{K}$$

which is an addition of less than 4% to the 0.7 K temperature rise, for a total of 0.73 K.

Of course, this additional 0.026 K of warming raises water vapor even more, but by an amount too small to be significant. It is certainly nowhere close to the 2.3–3.3 K allegedly needed to account for the glacial-interglacial temperature difference.
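The arithmetic above, including the claim that further rounds of vapor feedback add very little, can be checked with a minimal Python sketch. It encodes only the assumptions stated in the text: a water vapor coefficient of 5.35/3 W·m⁻², a doubling of vapor pressure per 10 K, and the ACS no-feedback factor of 0.3 K·(W·m⁻²)⁻¹. The function names are illustrative. The vapor ratio is computed as 2^(ΔT/10) rather than rounded to 1.05.

```python
import math

# Assumptions taken from the text above (estimates, not measured values).
K_CO2 = 5.35           # W m^-2, logarithmic forcing coefficient for CO2
K_H2O = K_CO2 / 3.0    # W m^-2, the author's scaled coefficient for water vapor (~1.78)
LAMBDA_0 = 0.3         # K per (W m^-2), no-feedback factor from the ACS post
DT_GHG = 0.7           # K, warming from the CO2 + CH4 forcing alone


def vapor_ratio(dt_kelvin: float) -> float:
    """Vapor-pressure ratio after warming by dt, assuming a doubling per 10 K."""
    return 2.0 ** (dt_kelvin / 10.0)


def extra_warming(dt_kelvin: float) -> float:
    """Warming caused by the extra water vapor that a warming of dt produces."""
    forcing = K_H2O * math.log(vapor_ratio(dt_kelvin))  # W m^-2
    return LAMBDA_0 * forcing                           # K


# First round, as in the text.
first = extra_warming(DT_GHG)

# Feed each increment back in: more warming -> more vapor -> more warming.
# ln(2**(dt/10)) is proportional to dt, so each round is a fixed fraction
# of the previous one and the sum is a converging geometric series.
total, increment = DT_GHG, DT_GHG
for _ in range(50):
    increment = extra_warming(increment)
    total += increment

print(f"first-round extra warming: {first:.3f} K")
print(f"total with all feedback rounds: {total:.3f} K")
```

Under these assumptions, each round is about 3.7% of the one before it. The total therefore converges to roughly 0.73 K.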

Another strange feature of the post's analysis is its failure to mention the albedo change caused by the shrinking ice and snow cover when a glacial age yields to an interglacial. If I estimate that, during the last glacial, ice and snow covered approximately 25% more of the Earth's surface than now, its albedo would have been around 0.36, some 20% greater than the current value. Applying the Stefan-Boltzmann relation then gives a temperature some 6 K colder, or some 75% of the actual temperature change (about 8 K, remember).
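The albedo estimate can be reproduced from the energy balance. When emissivity and solar flux are held fixed, the equilibrium temperature scales as (1 − α)^(1/4), which is the same relation as the formula at the end of this piece. This sketch assumes a present-day albedo of 0.30 and the 288 K surface temperature used in the ACS post; both are inputs, not results.

```python
ALBEDO_NOW = 0.30      # assumed present-day planetary albedo
ALBEDO_GLACIAL = 0.36  # the author's estimate for the last glacial period
T_NOW = 288.0          # K, average surface temperature used in the ACS post


def temperature_ratio(albedo_new: float, albedo_old: float) -> float:
    """T_new / T_old from (1 - albedo) * S = eps * sigma * T**4, with eps and S fixed."""
    return ((1.0 - albedo_new) / (1.0 - albedo_old)) ** 0.25


t_glacial = T_NOW * temperature_ratio(ALBEDO_GLACIAL, ALBEDO_NOW)
cooling = T_NOW - t_glacial
print(f"glacial temperature {t_glacial:.1f} K, i.e. {cooling:.1f} K colder")
```

With these inputs, the cooling comes out near 6.4 K, in line with the "some 6 K" above.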

Milankovitch cycles are also conspicuously absent, though it is difficult to calculate their effect on temperature with certainty. What is certain is that greenhouse gas changes alone are woefully insufficient for the task assigned to them here: they amount to only about 10% of the glacial-interglacial temperature rise.

Without further ado, the ACS post.

ACS Climate Science Toolkit | How Atmospheric Warming Works

From link https://www.acs.org/content/acs/en/climatescience/atmosphericwarming/climatsensitivity.html

The concept of “climate sensitivity” is deceptively simple. How much would the average surface temperature of the Earth increase (decrease) for a given positive (negative) radiative forcing? The simplest approach to estimating climate sensitivity is to combine the energy balance for the incoming and outgoing energies and a simple atmospheric model to calculate how to counterbalance a given radiative forcing. If ΔF is the difference between incoming and outgoing energy flux (the equivalent of radiative forcing), we have

$$\Delta F = (1 - \alpha)S_{\mathrm{ave}} - \varepsilon\sigma T_P^4 \qquad (1)$$

In this equation, $\alpha$ is the Earth’s albedo, $S_{\mathrm{ave}}$ is the average solar energy flux, 342 W·m⁻², $\varepsilon$ is the effective emissivity of the planetary system, $\sigma$ is the Stefan-Boltzmann constant, and $T_P$ is the average planetary surface temperature. If ΔF is zero, the energies are balanced. That is,

$$(1 - \alpha)S_{\mathrm{ave}} = \varepsilon\sigma T_P^4 \qquad (2)$$

For ΔF > 0, a positive radiative forcing, the incoming energy is higher than the outgoing. To counterbalance this forcing, the surface temperature has to increase by ΔT to produce a planetary radiative flux that is ΔF larger than the incoming flux. The required counterbalance, assuming no changes in other factors affecting the climate, is represented by this equation.

$$\Delta F = \varepsilon\sigma [T_P + \Delta T]^4 - (1 - \alpha)S_{\mathrm{ave}} \qquad (3)$$

The algebraic manipulation shown below gives this relationship between radiative forcing and the counterbalancing temperature change that would be required to return the planet to energy balance.

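$$\Delta F = \varepsilon\sigma [T_P + \Delta T]^4 - (1 - \alpha)S_{\mathrm{ave}}$$

$$\Delta F = \varepsilon\sigma [T_P (1 + \Delta T/T_P)]^4 - (1 - \alpha)S_{\mathrm{ave}}$$

$$\Delta F = \varepsilon\sigma T_P^4 (1 + \Delta T/T_P)^4 - (1 - \alpha)S_{\mathrm{ave}}$$

Substituting $\varepsilon\sigma T_P^4 = (1 - \alpha)S_{\mathrm{ave}}$ gives

$$\Delta F = (1 - \alpha)S_{\mathrm{ave}}\,(1 + \Delta T/T_P)^4 - (1 - \alpha)S_{\mathrm{ave}}$$

The factor $(1 + \Delta T/T_P)^4$ can be expanded and approximated:

$$(1 + \Delta T/T_P)^4 = 1 + 4(\Delta T/T_P) + 6(\Delta T/T_P)^2 + 4(\Delta T/T_P)^3 + (\Delta T/T_P)^4$$

$$(1 + \Delta T/T_P)^4 \approx 1 + 4(\Delta T/T_P)$$

Because ΔT is small, $\Delta T/T_P \ll 1$, and the higher-order terms in the expansion are negligible. Substituting in the expression for ΔF gives

$$\Delta F \approx (1 - \alpha)S_{\mathrm{ave}}\,[1 + 4(\Delta T/T_P)] - (1 - \alpha)S_{\mathrm{ave}}$$

$$\Delta F \approx (1 - \alpha)S_{\mathrm{ave}} + 4(1 - \alpha)S_{\mathrm{ave}}\,(\Delta T/T_P) - (1 - \alpha)S_{\mathrm{ave}}$$

$$\Delta F \approx 4(1 - \alpha)S_{\mathrm{ave}}\,(\Delta T/T_P)$$

Solving for ΔT gives the climate sensitivity based on this simple approach:

$$\Delta T \approx \frac{T_P\,\Delta F}{4(1 - \alpha)S_{\mathrm{ave}}} \approx [0.3\ \mathrm{K\,(W\,m^{-2})^{-1}}]\,\Delta F \quad (\text{for } T_P \approx 288\ \mathrm{K}) \qquad (4)$$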
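To see how little the linearization in Eq. (4) costs, the Python sketch below solves Eq. (3) exactly and compares the result with Eq. (4). The post does not state the albedo it used, so this sketch assumes 0.30, which reproduces the 0.3 K·(W·m⁻²)⁻¹ factor. The sketch also fixes ε from Eq. (2). The helper names are illustrative. It evaluates the 2.2 W·m⁻² forcing of the ice-core case and the 3.53 W·m⁻² doubling forcing that the post uses later.

```python
SIGMA = 5.670374419e-8  # W m^-2 K^-4, Stefan-Boltzmann constant
S_AVE = 342.0           # W m^-2, average solar flux given in the post
ALBEDO = 0.30           # assumed; the post does not state the albedo it used
T_P = 288.0             # K, average planetary surface temperature

# Eq. (2): pick the effective emissivity that puts the planet in balance.
absorbed = (1.0 - ALBEDO) * S_AVE
epsilon = absorbed / (SIGMA * T_P**4)


def delta_t_exact(delta_f: float) -> float:
    """Solve Eq. (3) exactly: eps*sigma*(T_P + dT)**4 = absorbed + dF."""
    return ((absorbed + delta_f) / (epsilon * SIGMA)) ** 0.25 - T_P


def delta_t_linear(delta_f: float) -> float:
    """Eq. (4): the first-order (linearized) estimate."""
    return T_P * delta_f / (4.0 * absorbed)


sensitivity = T_P / (4.0 * absorbed)  # K per (W m^-2)
print(f"effective emissivity: {epsilon:.3f}")
print(f"no-feedback sensitivity: {sensitivity:.3f} K per W m^-2")
for df in (2.2, 3.53):
    print(f"dF = {df:.2f} W m^-2: exact {delta_t_exact(df):.3f} K, "
          f"linear {delta_t_linear(df):.3f} K")
```

For forcings of a few W·m⁻², the exact and linearized answers agree to within about 0.01 K, which supports dropping the higher-order terms. The implied effective emissivity is about 0.61.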

To apply this approximation for climate sensitivity due to CO2 and CH4, we can examine a case for which the change in concentration of greenhouse gases is reasonably well known and whose temperature change from an initial constant temperature state to a higher constant temperature is also known. The figure shows Antarctic ice core data that span the time from the end of the last glacial period to the beginning of the present era. For our purposes, we need the initial and final concentrations of CO2 and CH4, and the average global temperature change. For this test, we assume that radiative forcing by these gases is the only external forcing on the climate system. (The detailed time course of the changes is interesting and can be correlated with changes that are evident in other geological records from this time span, but it is not relevant for our calculation.)

The figure is based on a figure from the NOAA Paleoclimatology Program website. The original reference is Eric Monnin, Andreas Indermühle, André Dällenbach, Jacqueline Flückiger, Bernhard Stauffer, Thomas F. Stocker, Dominique Raynaud, Jean-Marc Barnola, “Atmospheric CO2 Concentrations Over the Last Glacial Termination,” Science, 2001, 291, 112-114.

The increase in CO2 from about 185 to about 265 ppm gives a radiative forcing of

$$\Delta F_{\mathrm{CO_2}} = (5.35\ \mathrm{W\,m^{-2}})\,\ln(265/185) = 1.9\ \mathrm{W\,m^{-2}}$$

The radiative forcing for CH4 is determined in a way analogous to that for CO2. For the increase of CH4 from about 375 to about 675 ppb, $\Delta F_{\mathrm{CH_4}} \approx 0.3$ W·m⁻². Thus, the total radiative forcing, ΔF, due to these two greenhouse gases is about 2.2 W·m⁻². The predicted change in the average planetary surface temperature is

$$\Delta T \approx [0.3\ \mathrm{K\,(W\,m^{-2})^{-1}}]\,(2.2\ \mathrm{W\,m^{-2}}) \approx 0.7\ \mathrm{K}$$
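The two-gas estimate can be reproduced in a few lines of Python. The post does not give its CH4 expression, so the CH4 term is entered as the 0.3 W·m⁻² quoted above rather than recomputed. `co2_forcing` is an illustrative helper name.

```python
import math

LAMBDA_0 = 0.3  # K per (W m^-2), no-feedback factor from Eq. (4)
DF_CH4 = 0.3    # W m^-2, CH4 forcing for 375 -> 675 ppb, as quoted in the text


def co2_forcing(c_final: float, c_initial: float, k: float = 5.35) -> float:
    """Logarithmic CO2 forcing dF = k * ln(C / C0), in W m^-2."""
    return k * math.log(c_final / c_initial)


df_co2 = co2_forcing(265.0, 185.0)   # ppm, glacial -> pre-industrial
df_total = df_co2 + DF_CH4
delta_t = LAMBDA_0 * df_total
print(f"CO2: {df_co2:.2f} W m^-2, total: {df_total:.2f} W m^-2, dT: {delta_t:.2f} K")
```

Without intermediate rounding, the result is about 0.67 K, which the post rounds to 0.7 K.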

Analyses from multiple sites based on several different temperature proxies indicate that Earth’s average surface temperature increased between 3 and 4 K during the change from the last glacial period to the present era.

Our calculated temperature change, that includes only the radiative forcing from increases in greenhouse gas concentrations, accounts for 20-25% of this observed temperature increase. This result implies climate sensitivity factor perhaps four to five times greater, ∼1.3 K·(W·m⁻²)⁻¹, than obtained by simply balancing the radiative forcing of the greenhouse gases. The analysis based only on greenhouse gas forcing has not accounted for feedbacks in the planetary system triggered by increasing temperature, including changes in the structure of the atmosphere.
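The step from the 20-25% share to an implied sensitivity is a simple ratio. If the 2.2 W·m⁻² forcing is assumed to produce all of the observed warming, the sensitivity is ΔT_obs/ΔF. This sketch evaluates both ends of the quoted 3–4 K range, and the helper name is illustrative.

```python
LAMBDA_0 = 0.3          # K per (W m^-2), no-feedback factor from Eq. (4)
DF_TOTAL = 2.2          # W m^-2, CO2 + CH4 forcing for the glacial termination
DT_GHG = 0.7            # K, no-feedback warming from that forcing (rounded, as in the text)
OBSERVED = (3.0, 4.0)   # K, ends of the proxy-based warming range


def implied_sensitivity(dt_observed: float, delta_f: float = DF_TOTAL) -> float:
    """Sensitivity (K per W m^-2) at which delta_f alone produces dt_observed."""
    return dt_observed / delta_f


for dt in OBSERVED:
    lam = implied_sensitivity(dt)
    print(f"{dt:.0f} K observed: greenhouse share {DT_GHG / dt:.0%}, "
          f"implied sensitivity {lam:.2f} K per W m^-2 "
          f"({lam / LAMBDA_0:.1f}x the no-feedback value)")
```

The rounded figures in the paragraph above match the 3 K end most closely: about 23%, 1.36 K·(W·m⁻²)⁻¹, and roughly 4.5 times the no-feedback value. The 4 K end gives a larger multiplier.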

Water Vapor and Clouds

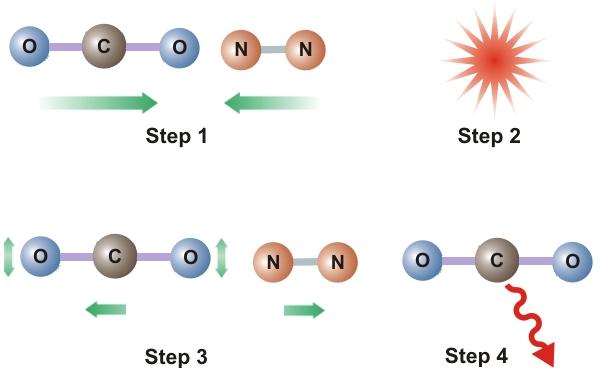

One of the most important sources of feedback in the planetary system, shown graphically in the figure above, is the increase in the vapor pressure of water as the ocean’s temperature increases. The vapor pressure increases by about 7% per kelvin. Warming oceans evaporate more water, and a warmer atmosphere can accommodate more water vapor, the most important greenhouse gas.

This feedback amplifies the warming effect of the non-condensable greenhouse gases and is responsible for a good part of the multiplier effect on climate sensitivity noted above.
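The post does not state the Clausius-Clapeyron relation, d(ln e_s)/dT = L_v/(R_v T²), but the 7% per kelvin figure can be checked against it. This sketch uses approximate textbook constants for water vapor and treats L_v as constant. It also shows that 7% per kelvin, compounded over 10 K, comes close to the "doubling every 10 K" rule of thumb used in the commentary at the top of this piece.

```python
L_V = 2.5e6   # J kg^-1, latent heat of vaporization of water (approximate)
R_V = 461.5   # J kg^-1 K^-1, specific gas constant of water vapor


def cc_fractional_rate(t_kelvin: float) -> float:
    """Fractional rise in saturation vapor pressure per kelvin,
    d(ln e_s)/dT = L_v / (R_v * T**2) (Clausius-Clapeyron)."""
    return L_V / (R_V * t_kelvin**2)


for t in (273.0, 288.0, 300.0):
    print(f"T = {t:.0f} K: {100 * cc_fractional_rate(t):.1f}% per K")

# 7% per kelvin compounded over 10 K is close to a doubling.
print(f"1.07**10 = {1.07**10:.2f}")
```

The rate falls from a little over 7% per kelvin near freezing to about 6% at 300 K, so "about 7%" is a fair round figure.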

For the first calculation of atmospheric warming by increased CO2, Arrhenius chose to consider a doubling of its concentration, and climate science has stuck with this standard. Thus, most values for climate sensitivity are given today as the temperature change predicted for doubling the CO2 concentration, $\Delta T_{2\times\mathrm{CO_2}}$, or the equivalent of its doubling, taking all the greenhouse gas radiative forcing into account. The IPCC’s analysis gives a very likely (> 90% probability) value of 3 K with a likely (> 66% probability) range from 2 to 4.5 K. Our radiative forcing for doubling CO2 from 280 to 560 ppm was 3.53 W·m⁻², which gives $\Delta T_{2\times\mathrm{CO_2}}$ = 4.6 K (= [1.3 K·(W·m⁻²)⁻¹][3.53 W·m⁻²]). Although on the high side, this first-level approximation is not wildly amiss and provides some insight into the factors that affect climate sensitivity.

Credit: Jerry Bell
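The doubled-CO2 estimate in the paragraph before the credit line is a single multiplication. This sketch also computes the no-feedback value from Eq. (4) for comparison. All inputs are the post's own numbers.

```python
LAMBDA_0 = 0.3            # K per (W m^-2), no-feedback factor from Eq. (4)
LAMBDA_EMPIRICAL = 1.3    # K per (W m^-2), sensitivity implied by the ice-core comparison
DF_2XCO2 = 3.53           # W m^-2, the post's forcing for doubling CO2 (280 -> 560 ppm)
IPCC_LIKELY = (2.0, 4.5)  # K, the IPCC likely range quoted in the text

dt_no_feedback = LAMBDA_0 * DF_2XCO2
dt_2x = LAMBDA_EMPIRICAL * DF_2XCO2
low, high = IPCC_LIKELY
print(f"no-feedback dT_2xCO2: {dt_no_feedback:.1f} K")
print(f"with empirical sensitivity: {dt_2x:.1f} K "
      f"(inside likely range: {low <= dt_2x <= high})")
```

The result, about 4.6 K, sits just above the upper end of the quoted 2 to 4.5 K range, consistent with the post's "on the high side".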

An increase in atmospheric water vapor also affects cloud formation. The effect of clouds on the energy balance between incoming solar radiation and outgoing thermal IR radiation depends on the kinds of clouds and can result in either positive or negative feedback for planetary warming. Clouds are composed of tiny water droplets or ice crystals, which makes them very good black bodies for absorption and re-emission of thermal IR radiation. Unlike greenhouse gases that absorb and emit only at discrete wavelengths, clouds absorb and emit like black bodies throughout the thermal IR. The higher the top of the cloud, the lower the temperature from which emission takes place and the lower the energy emitted. Thus, the higher the cloud, the greater its positive feedback effect on planetary warming. Thin, wispy cirrus clouds very high in the troposphere near the stratosphere have the strongest warming effect while low-lying layers of stratus clouds have a weaker warming effect.
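The point that higher, colder cloud tops emit less can be illustrated with the Stefan-Boltzmann law. This sketch treats cloud tops as ideal black bodies, as the paragraph does. The three cloud-top temperatures are hypothetical values chosen for illustration, not measurements.

```python
SIGMA = 5.670374419e-8  # W m^-2 K^-4, Stefan-Boltzmann constant


def blackbody_flux(t_kelvin: float) -> float:
    """Thermal IR flux (W m^-2) emitted by an ideal black body at t_kelvin."""
    return SIGMA * t_kelvin**4


# Hypothetical cloud-top temperatures: higher tops are colder.
cloud_tops = {"low stratus": 280.0, "mid-level": 250.0, "high cirrus": 220.0}
for name, t_top in cloud_tops.items():
    print(f"{name:12s} top at {t_top:.0f} K emits {blackbody_flux(t_top):6.1f} W m^-2")
```

Under these assumptions, the high cloud emits less than 40% as much as the low one, so it returns less energy to space. That is the sense in which higher clouds warm more.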

Cumulus and stratus clouds in the lower troposphere are opaque: we can’t see through them. The tiny water droplets or ice crystals in these clouds scatter visible light in all directions, including back into space, so they reduce the amount of solar energy that reaches the surface. That is, they increase the Earth’s albedo and therefore have a negative feedback effect on planetary warming. The very high cirrus clouds contain very little water (as ice) and are not opaque: we can see the sky through them. They do not scatter very much solar radiation and have only a weak negative feedback effect.

Studies of changes in ocean surface salinity since 1950 indicate that salinity is increasing where evaporation occurs and decreasing where rainfall is high. The implication is that increasing sea surface temperature intensifies the evaporation-condensation-precipitation water cycle.

Since clouds have both positive and negative feedback effects, which predominates, and how will changing global temperature affect this balance? These are very uncertain aspects of climate science. The factors that control where clouds form, what kinds are formed, and how increased temperature and atmospheric water vapor affect their formation are complex. Computer modeling of the turbulence, condensation, and growth of water droplets in cloud formation requires large amounts of computer time and capacity. At present, general circulation models (GCMs) of the climate, even when run on the fastest computers, require so much computer capacity that they cannot incorporate the further complexity of cloud formation. Thus, the GCMs incorporate algorithms that relate cloud formation to other parameters, such as relative humidity, to estimate their formation and effects. These lead to great variation in the model predictions, depending on the algorithms and parameters used, but generally suggest that, as the planet warms, clouds will be a positive feedback, although perhaps relatively weak.

Aerosol Radiative Forcing

Aerosol particulate matter, tiny particles or liquid droplets suspended in the atmosphere, generally scatters and absorbs incoming solar radiation, thus contributing to the Earth’s albedo. Naturally occurring aerosol particles are mainly picked up by the wind as dust and water spray or produced by occasional volcanic eruptions. Poor land-use practices by humans can make dust storms worse and intensify the natural effects.

Human activities do, however, add significantly to aerosol sulfate particles as well as producing black carbon (soot) particles. Burning fossil fuels containing sulfur produces SO2 that is oxidized in the atmosphere, ultimately forming hygroscopic sulfuric acid molecules and salts that act as nucleation sites for tiny water droplets. These aerosol sulfate particles tend to be quite small, so, for a given amount of emission, the number of particles is large and scatters a good deal of solar radiation. This scattering increases the albedo and produces negative radiative forcing by reducing the amount of solar radiation reaching the surface.
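Why small particle size matters for a fixed amount of emission can be sketched with geometry alone. For a fixed mass, the particle count scales as 1/r³ and the total cross-sectional area as 1/r. This is only a geometric sketch: the mass, density, and radii are hypothetical. It also ignores the size- and wavelength-dependent scattering efficiency of real particles.

```python
import math


def particle_stats(total_mass_kg: float, radius_m: float, density_kg_m3: float):
    """Return (number of spheres, total geometric cross-section in m^2) for a fixed mass."""
    mass_each = density_kg_m3 * (4.0 / 3.0) * math.pi * radius_m**3
    count = total_mass_kg / mass_each
    cross_section = count * math.pi * radius_m**2
    return count, cross_section


MASS = 1.0e-3      # kg of aerosol, hypothetical
DENSITY = 1800.0   # kg m^-3, assumed particle density
for radius in (0.1e-6, 1.0e-6):   # m, hypothetical radii
    n, area = particle_stats(MASS, radius, DENSITY)
    print(f"r = {radius * 1e6:.1f} um: {n:.2e} particles, "
          f"cross-section {area:.2f} m^2")
```

Shrinking the radius tenfold multiplies the particle count by 1000 and the geometric cross-section by 10 for the same emitted mass.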

The atmospheric models used in this Atmospheric Warming module of the ACS Climate Science Toolkit have been one-dimensional. They have focused on the properties of an atmospheric column only as a function of altitude. Two-dimensional models can aggregate the properties of one-dimensional models over many different locations to get a more realistic average over the planet. However, a two-dimensional model still lacks an essential characteristic of the climate system—continuous exchange of matter and energy among the one-dimensional atmospheric cells. Atmospheric circulation, the winds, must be accounted for to make any attempt to capture the observable characteristics of climate changes—past, present, or future. Similarly, the vast ocean currents, albeit much slower than atmospheric circulation, must be accounted for over long modeling periods. Developing, testing, refining, and comparing general circulation models of the climate are vitally important to further our understanding of the climate and make predictions of its future more reliable. Exploring the GCMs is beyond the scope of this Toolkit, but, if you are interested, References and Resources has some leading references where you can begin to explore.

Secondarily, the high concentration of these tiny aerosol sulfate particles leads to the formation of clouds with high concentrations of tiny water droplets that scatter more solar radiation than a lower concentration of larger droplets. This change in the composition of clouds also increases the albedo and produces further negative radiative forcing, sometimes called the indirect aerosol effect. Also, these clouds are more stable against formation of precipitation, so can have a longer lifetime to reflect sunlight. The uncertainties surrounding the modeling of both the direct and indirect effects of aerosol particulate matter, especially those involving cloud formation, are large and add further to the uncertainty in predicting the climate sensitivity resulting from human activities. The uncertainty is captured in the error bars associated with aerosols in this IPCC graphic and is also the impetus for increased research to better understand aerosols and clouds.

Credit: Figure 2, FAQ 2.1, from the IPCC Fourth Assessment Report (2007), Chapter 2,

Black carbon (soot) is released from incomplete fuel combustion. Burning biomass in inefficient cook stoves or to clear land and incomplete combustion of diesel fuel are large sources of black carbon over much of Southeast Asia and other developing areas of the world. In the atmosphere, the particles absorb and scatter incoming solar radiation. As winds carry them across the globe, some end up on snow- and ice-covered ground, where they reduce the albedo and produce positive radiative forcing. A new initiative to develop and distribute efficient cook stoves to replace those now in use could greatly reduce black carbon emissions, reducing radiative forcing a bit and, as a bonus, improving the health of the populations using them.

![T={\sqrt[ {4}]{{\frac {(1-a)S}{4\epsilon \sigma }}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/184020fbd13be6a51e70be8e8e5bf13540ffb63d)