Posthumanism or post-humanism (meaning "after humanism" or "beyond humanism") is an idea in continental philosophy and critical theory responding to the presence of anthropocentrism in 21st-century thought.

Posthumanization comprises "those processes by which a society comes to include members other than 'natural' biological human beings who, in one way or another, contribute to the structures, dynamics, or meaning of the society."

Posthumanism encompasses a wide variety of branches, including:

- Antihumanism: A branch of theory that is critical of traditional humanism and traditional ideas about the human condition, vitality and agency.

- Cultural posthumanism: A branch of cultural theory critical of the foundational assumptions of humanism and its legacy. It examines and questions the historical notions of "human" and "human nature", often challenging typical notions of human subjectivity and embodiment, and strives to move beyond "archaic" concepts of "human nature" to develop ones that constantly adapt to contemporary technoscientific knowledge.

- Philosophical posthumanism: A philosophical direction that draws on cultural posthumanism; it examines the ethical implications of expanding the circle of moral concern and extending subjectivities beyond the human species.

- Posthuman condition: The deconstruction of the human condition by critical theorists.

- Posthuman transhumanism: A transhuman ideology and movement which, drawing from posthumanist philosophy, seeks to develop and make available technologies that enable immortality and greatly enhance human intellectual, physical, and psychological capacities in order to achieve a "posthuman future".

- AI takeover: A variant of transhumanism in which humans will not be enhanced but rather eventually replaced by artificial intelligences. Some philosophers and theorists, including Nick Land, promote the view that humans should embrace and accept their eventual demise as a consequence of a technological singularity. This is related to the view of "cosmism", which supports the building of strong artificial intelligence even if it may entail the end of humanity, as in its proponents' view it "would be a cosmic tragedy if humanity freezes evolution at the puny human level".

- Voluntary human extinction: Seeks a "posthuman future" that in this case is a future without humans.

Philosophical posthumanism

Philosopher Theodore Schatzki suggests there are two varieties of posthumanism of the philosophical kind:

One, which he calls "objectivism", tries to counter the overemphasis of the subjective, or intersubjective, that pervades humanism, and emphasises the role of nonhuman agents, whether they be animals and plants, or computers or other things, because "Humans and nonhumans, it [objectivism] proclaims, codetermine one another", and also claims "independence of (some) objects from human activity and conceptualization".

A second posthumanist agenda is "the prioritization of practices over individuals (or individual subjects)"; on this view, practices constitute the individual.

There may be a third kind of posthumanism, propounded by the philosopher Herman Dooyeweerd. Though he did not label it "posthumanism", he made an immanent critique of humanism, and then constructed a philosophy that presupposed neither humanist, nor scholastic, nor Greek thought but started with a different religious ground motive.

Dooyeweerd prioritized law and meaningfulness as that which enables humanity and all else to exist, behave, live, occur, etc. "Meaning is the being of all that has been created", Dooyeweerd wrote, "and the nature even of our selfhood". Both human and nonhuman alike function subject to a common law-side, which is diverse, composed of a number of distinct law-spheres or aspects. The temporal being of both human and non-human is multi-aspectual; for example, both plants and humans are bodies, functioning in the biotic aspect, and both computers and humans function in the formative and lingual aspect, but humans function in the aesthetic, juridical, ethical and faith aspects too. The Dooyeweerdian version is able to incorporate and integrate both the objectivist version and the practices version, because it allows nonhuman agents their own subject-functioning in various aspects and places emphasis on aspectual functioning.

Emergence of philosophical posthumanism

Ihab Hassan, a theorist in the academic study of literature, once stated: "Humanism may be coming to an end as humanism transforms itself into something one must helplessly call posthumanism." This view predates most currents of posthumanism which have developed over the late 20th century in somewhat diverse, but complementary, domains of thought and practice. For example, Hassan is a known scholar whose theoretical writings expressly address postmodernity in society.

Beyond postmodernist studies, posthumanism has been developed and deployed by various cultural theorists, often in reaction to problematic inherent assumptions within humanistic and Enlightenment thought.

Theorists who both complement and contrast Hassan include Michel Foucault, Judith Butler, cyberneticists such as Gregory Bateson, Warren McCulloch, and Norbert Wiener, as well as Bruno Latour, Cary Wolfe, Elaine Graham, N. Katherine Hayles, Benjamin H. Bratton, Donna Haraway, Peter Sloterdijk, Stefan Lorenz Sorgner, Evan Thompson, Francisco Varela, Humberto Maturana, Timothy Morton, and Douglas Kellner.

Among the theorists are philosophers, such as Robert Pepperell, who have written about a "posthuman condition", which is often substituted for the term posthumanism.

Posthumanism differs from classical humanism by relegating humanity back to one of many natural species, thereby rejecting any claims founded on anthropocentric dominance. According to this claim, humans have no inherent rights to destroy nature or set themselves above it in ethical considerations a priori. Human knowledge, previously seen as the defining aspect of the world, is also reduced to a less controlling position. Human rights exist on a spectrum with animal rights and posthuman rights. The limitations and fallibility of human intelligence are acknowledged, though this does not imply abandoning the rational tradition of humanism.

Proponents of a posthuman discourse suggest that innovative advancements and emerging technologies have transcended the traditional model of the human, as proposed by Descartes among others associated with philosophy of the Enlightenment period. Posthumanistic views were also found in the works of Shakespeare.

In contrast to humanism, the discourse of posthumanism seeks to redefine the boundaries surrounding modern philosophical understanding of the human. Posthumanism represents an evolution of thought beyond that of the contemporary social boundaries and is predicated on the seeking of truth within a postmodern context. In so doing, it rejects previous attempts to establish "anthropological universals" that are imbued with anthropocentric assumptions.

Recently, critics have sought to describe the emergence of posthumanism as a critical moment in modernity, arguing for the origins of key posthuman ideas in modern fiction, in Nietzsche, or in a modernist response to the crisis of historicity.

Although Nietzsche's philosophy has been characterized as posthumanist, Foucault placed posthumanism within a context that differentiated humanism from Enlightenment thought.

According to Foucault, the two existed in a state of tension: humanism sought to establish norms, while Enlightenment thought attempted to transcend all that is material, including the boundaries that are constructed by humanistic thought.

Drawing on the Enlightenment's challenges to the boundaries of humanism, posthumanism rejects the various assumptions of human dogmas (anthropological, political, scientific) and takes the next step by attempting to change the nature of thought about what it means to be human. This requires not only decentering the human in multiple discourses (evolutionary, ecological and technological) but also examining those discourses to uncover inherent humanistic, anthropocentric, normative notions of humanness and the concept of the human.

Contemporary posthuman discourse

Posthumanistic discourse aims to open up spaces to examine what it means to be human and critically question the concept of "the human" in light of current cultural and historical contexts. In her book How We Became Posthuman, N. Katherine Hayles writes about the struggle between different versions of the posthuman as it continually co-evolves alongside intelligent machines. Such coevolution, according to some strands of the posthuman discourse, allows people to extend their subjective understandings of real experiences beyond the boundaries of embodied existence. According to Hayles's view of the posthuman, often referred to as "technological posthumanism", visual perception and digital representations thus paradoxically become ever more salient. Even as one seeks to extend knowledge by deconstructing perceived boundaries, it is these same boundaries that make knowledge acquisition possible. The use of technology in contemporary society is thought to complicate this relationship.

Hayles discusses the translation of human bodies into information (as suggested by Hans Moravec) in order to illuminate how the boundaries of our embodied reality have been compromised in the current age and how narrow definitions of humanness no longer apply. Because of this, according to Hayles, posthumanism is characterized by a loss of subjectivity based on bodily boundaries.

This strand of posthumanism, including the changing notion of subjectivity and the disruption of ideas concerning what it means to be human, is often associated with Donna Haraway's concept of the cyborg. However, Haraway has distanced herself from posthumanistic discourse due to other theorists' use of the term to promote utopian views of technological innovation to extend the human biological capacity (even though these notions would more correctly fall into the realm of transhumanism).

While posthumanism is a broad and complex ideology, it has relevant implications today and for the future. It attempts to redefine social structures without inherently human or even biological origins, but rather in terms of social and psychological systems where consciousness and communication could potentially exist as unique disembodied entities. Questions subsequently emerge with respect to the current use and the future of technology in shaping human existence, as do new concerns with regard to language, symbolism, subjectivity, phenomenology, ethics, justice and creativity.

Technological versus non-technological

Posthumanism can be divided into non-technological and technological forms.

Non-technological posthumanism

While posthumanization has links with the scholarly methodologies of posthumanism, it is a distinct phenomenon. The rise of explicit posthumanism as a scholarly approach is relatively recent, occurring since the late 1970s; however, some of the processes of posthumanization that it studies are ancient. For example, the dynamics of non-technological posthumanization have existed historically in all societies in which animals were incorporated into families as household pets or in which ghosts, monsters, angels, or semidivine heroes were considered to play some role in the world.

Such non-technological posthumanization has been manifested not only in mythological and literary works but also in the construction of temples, cemeteries, zoos, or other physical structures that were considered to be inhabited or used by quasi- or para-human beings who were not natural, living, biological human beings but who nevertheless played some role within a given society, to the extent that, according to philosopher Francesca Ferrando: "the notion of spirituality dramatically broadens our understanding of the posthuman, allowing us to investigate not only technical technologies (robotics, cybernetics, biotechnology, nanotechnology, among others), but also, technologies of existence."

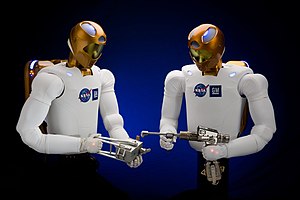

Technological posthumanism

Some forms of technological posthumanization involve efforts to directly alter the social, psychological, or physical structures and behaviors of the human being through the development and application of technologies relating to genetic engineering or neurocybernetic augmentation; such forms of posthumanization are studied, e.g., by cyborg theory. Other forms of technological posthumanization indirectly "posthumanize" human society through the deployment of social robots or attempts to develop artificial general intelligences, sentient networks, or other entities that can collaborate and interact with human beings as members of posthumanized societies.

The dynamics of technological posthumanization have long been an important element of science fiction; genres such as cyberpunk take them as a central focus. In recent decades, technological posthumanization has also become the subject of increasing attention by scholars and policymakers. The expanding and accelerating forces of technological posthumanization have generated diverse and conflicting responses, with some researchers viewing the processes of posthumanization as opening the door to a more meaningful and advanced transhumanist future for humanity, while bioconservative critics warn that such processes may lead to a fragmentation of human society, loss of meaning, and subjugation to the forces of technology.

Common features

Processes of technological and non-technological posthumanization both tend to result in a partial "de-anthropocentrization" of human society, as its circle of membership is expanded to include other types of entities and the position of human beings is decentered. A common theme of posthumanist study is the way in which processes of posthumanization challenge or blur simple binaries, such as those of "human versus non-human", "natural versus artificial", "alive versus non-alive", and "biological versus mechanical".

Relationship with transhumanism

Sociologist James Hughes comments that there is considerable confusion between the two terms. In the introduction to their book on post- and transhumanism, Robert Ranisch and Stefan Sorgner address the source of this confusion, stating that posthumanism is often used as an umbrella term that includes both transhumanism and critical posthumanism.

Although both subjects relate to the future of humanity, they differ in their view of anthropocentrism. Pramod Nayar, author of Posthumanism, states that posthumanism has two main branches: ontological and critical. Ontological posthumanism is synonymous with transhumanism. The subject is regarded as "an intensification of humanism".

Transhumanist thought suggests that humans are not posthuman yet, but that human enhancement, often through technological advancement and application, is the path to becoming posthuman. Transhumanism retains humanism's focus on Homo sapiens as the center of the world but also considers technology to be an integral aid to human progression. Critical posthumanism, however, is opposed to these views. Critical posthumanism "rejects both human exceptionalism (the idea that humans are unique creatures) and human instrumentalism (that humans have a right to control the natural world)". These contrasting views on the importance of human beings are the main distinctions between the two subjects.

Transhumanism is also more ingrained in popular culture than critical posthumanism, especially in science fiction. Pramod Nayar refers to it as "the pop posthumanism of cinema and pop culture".

Criticism

Some critics have argued that all forms of posthumanism, including transhumanism, have more in common than their respective proponents realize. Linking these different approaches, Paul James suggests that 'the key political problem is that, in effect, the position allows the human as a category of being to flow down the plughole of history':

This is ontologically critical. Unlike the naming of 'postmodernism' where the 'post' does not infer the end of what it previously meant to be human (just the passing of the dominance of the modern) the posthumanists are playing a serious game where the human, in all its ontological variability, disappears in the name of saving something unspecified about us as merely a motley co-location of individuals and communities.

However, some posthumanists in the humanities and the arts are critical of transhumanism (the brunt of James's criticism), in part because they argue that it incorporates and extends many of the values of Enlightenment humanism and classical liberalism, namely scientism, according to performance philosopher Shannon Bell:

Altruism, mutualism, humanism are the soft and slimy virtues that underpin liberal capitalism. Humanism has always been integrated into discourses of exploitation: colonialism, imperialism, neoimperialism, democracy, and of course, American democratization. One of the serious flaws in transhumanism is the importation of liberal-human values to the biotechno enhancement of the human. Posthumanism has a much stronger critical edge attempting to develop through enactment new understandings of the self and others, essence, consciousness, intelligence, reason, agency, intimacy, life, embodiment, identity and the body.

While many modern leaders of thought accept the nature of the ideologies described by posthumanism, some are more skeptical of the term. Haraway, the author of A Cyborg Manifesto, has outspokenly rejected the term, though she acknowledges a philosophical alignment with posthumanism. Haraway opts instead for the term companion species, referring to nonhuman entities with which humans coexist.

Questions of race, some argue, are suspiciously elided within the "turn" to posthumanism. Noting that the terms "post" and "human" are already loaded with racial meaning, critical theorist Zakiyyah Iman Jackson argues that the impulse to move "beyond" the human within posthumanism too often ignores "praxes of humanity and critiques produced by black people", including Frantz Fanon, Aimé Césaire, Hortense Spillers and Fred Moten.

Interrogating the conceptual grounds in which such a mode of "beyond" is rendered legible and viable, Jackson argues that it is important to observe that "blackness conditions and constitutes the very nonhuman disruption and/or disruption" which posthumanists invite.

In other words, given that race in general and blackness in particular constitute the very terms through which human-nonhuman distinctions are made, for example in enduring legacies of scientific racism, a gesture toward a "beyond" actually "returns us to a Eurocentric transcendentalism long challenged". Posthumanist scholarship, due to characteristic rhetorical techniques, is also frequently subject to the same critiques commonly made of postmodernist scholarship in the 1980s and 1990s.