The frontispiece to Erasmus Darwin's evolution-themed poem The Temple of Nature shows a goddess pulling back the veil from nature (in the person of Artemis). Allegory and metaphor have often played an important role in the history of biology.

The

history of biology traces the study of the

living world from

ancient to

modern times. Although the concept of

biology as a single coherent field arose in the 19th century, the biological sciences emerged from

traditions of medicine and

natural history reaching back to

ayurveda,

ancient Egyptian medicine and the works of

Aristotle and

Galen in the ancient

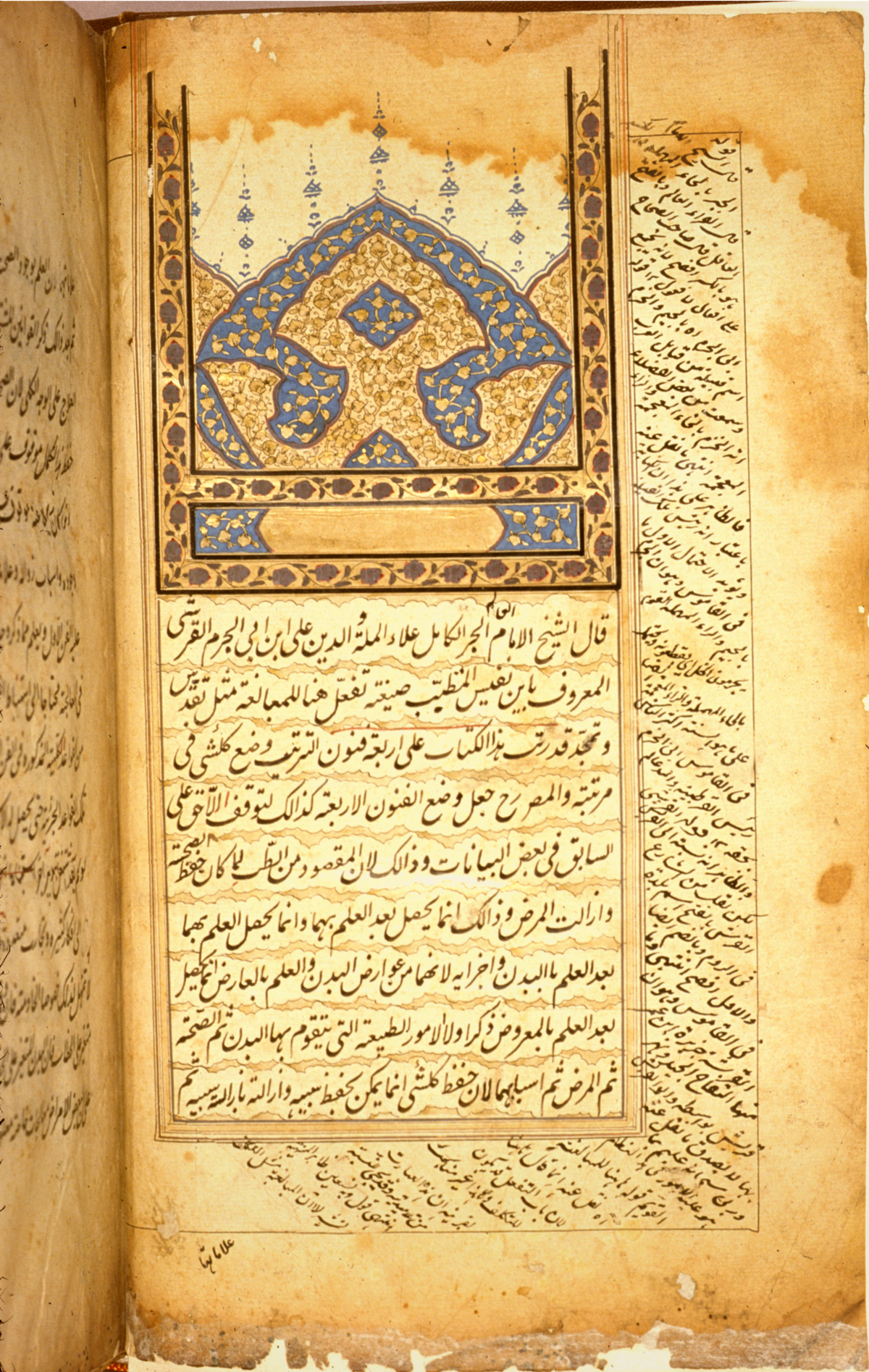

Greco-Roman world. This ancient work was further developed in the Middle Ages by

Muslim physicians and scholars such as

Avicenna. During the European

Renaissance and early modern period, biological thought was revolutionized in Europe by a renewed interest in

empiricism and the discovery of many novel organisms. Prominent in this movement were

Vesalius and

Harvey, who used experimentation and careful observation in physiology, and naturalists such as

Linnaeus and

Buffon who began to

classify the diversity of life and the

fossil record, as well as the development and behavior of organisms.

Microscopy revealed the previously unknown world of microorganisms, laying the groundwork for cell theory. The growing importance of natural theology, partly a response to the rise of mechanical philosophy, encouraged the growth of natural history (although it entrenched the argument from design).

Over the 18th and 19th centuries, biological sciences such as botany and zoology became increasingly professional scientific disciplines. Lavoisier and other physical scientists began to connect the animate and inanimate worlds through physics and chemistry. Explorer-naturalists such as Alexander von Humboldt investigated the interaction between organisms and their environment, and the ways this relationship depends on geography, laying the foundations for biogeography, ecology and ethology. Naturalists began to reject essentialism and consider the importance of extinction and the mutability of species.

Cell theory provided a new perspective on the fundamental basis of life. These developments, as well as the results from embryology and paleontology, were synthesized in Charles Darwin's theory of evolution by natural selection. The end of the 19th century saw the fall of spontaneous generation and the rise of the germ theory of disease, though the mechanism of inheritance remained a mystery.

In the early 20th century, the rediscovery of Mendel's work led to the rapid development of genetics by Thomas Hunt Morgan and his students, and by the 1930s population genetics and natural selection had been combined in the "neo-Darwinian synthesis". New disciplines developed rapidly, especially after Watson and Crick proposed the structure of DNA. Following the establishment of the Central Dogma and the cracking of the genetic code, biology was largely split between organismal biology (the fields that deal with whole organisms and groups of organisms) and the fields related to cellular and molecular biology. By the late 20th century, new fields like genomics and proteomics were reversing this trend, with organismal biologists using molecular techniques, and molecular and cell biologists investigating the interplay between genes and the environment, as well as the genetics of natural populations of organisms.

Etymology of "biology"

The word biology is formed by combining the Greek βίος (bios), meaning "life", and the suffix '-logy', meaning "science of", "knowledge of", "study of", based on the Greek verb λέγειν, 'legein' "to select", "to gather" (cf. the noun λόγος, 'logos' "word"). The term biology in its modern sense appears to have been introduced independently by Thomas Beddoes (in 1799),[1] Karl Friedrich Burdach (in 1800), Gottfried Reinhold Treviranus (Biologie oder Philosophie der lebenden Natur, 1802) and Jean-Baptiste Lamarck (Hydrogéologie, 1802).[2][3] The word itself appears in the title of Volume 3 of Michael Christoph Hanow's Philosophiae naturalis sive physicae dogmaticae: Geologia, biologia, phytologia generalis et dendrologia, published in 1766.

Before the term biology came into use, several terms were used for the study of animals and plants. Natural history referred to the descriptive aspects of biology, though it also included mineralogy and other non-biological fields; from the Middle Ages through the Renaissance, the unifying framework of natural history was the scala naturae or Great Chain of Being. Natural philosophy and natural theology encompassed the conceptual and metaphysical basis of plant and animal life, dealing with problems of why organisms exist and behave the way they do, though these subjects also included what is now geology, physics, chemistry, and astronomy. Physiology and (botanical) pharmacology were the province of medicine. Botany, zoology, and (in the case of fossils) geology replaced natural history and natural philosophy in the 18th and 19th centuries before biology was widely adopted.[4][5] To this day, "botany" and "zoology" are widely used, although they have been joined by other sub-disciplines of biology, such as mycology and molecular biology.

Ancient and medieval knowledge

Early cultures

The earliest humans must have had and passed on knowledge about plants and animals to increase their chances of survival. This may have included knowledge of human and animal anatomy and aspects of animal behavior (such as migration patterns). However, the first major turning point in biological knowledge came with the Neolithic Revolution about 10,000 years ago. Humans first domesticated plants for farming, then livestock animals to accompany the resulting sedentary societies.[6]

The ancient cultures of Mesopotamia, Egypt, the Indian subcontinent, and China, among others, produced renowned surgeons and students of the natural sciences such as Sushruta and Zhang Zhongjing, reflecting independent, sophisticated systems of natural philosophy. However, the roots of modern biology are usually traced back to the secular tradition of ancient Greek philosophy.[7]

Ancient Chinese traditions

In ancient China, biological topics can be found dispersed across several different disciplines, including the work of herbologists, physicians, alchemists, and philosophers. The Taoist tradition of Chinese alchemy, for example, can be considered part of the life sciences due to its emphasis on health (with the ultimate goal being the elixir of life). The system of classical Chinese medicine usually revolved around the theory of yin and yang, and the five phases.[8] Taoist philosophers, such as Zhuangzi in the 4th century BCE, also expressed ideas related to evolution, such as denying the fixity of biological species and speculating that species had developed differing attributes in response to differing environments.[9]

Ancient Indian traditions

One of the oldest organised systems of medicine is known from the Indian subcontinent in the form of Ayurveda, which originated around 1500 BCE from the Atharvaveda (one of the four most ancient books of Indian knowledge, wisdom and culture).

The ancient Indian Ayurveda tradition independently developed the concept of three humours, resembling that of the four humours of ancient Greek medicine, though the Ayurvedic system included further complications, such as the body being composed of five elements and seven basic tissues. Ayurvedic writers also classified living things into four categories based on the method of birth (from the womb, eggs, heat and moisture, and seeds) and explained the conception of a fetus in detail. They also made considerable advances in the field of surgery, often without the use of human dissection or animal vivisection.[10] One of the earliest Ayurvedic treatises was the Sushruta Samhita, attributed to Sushruta in the 6th century BCE. It was also an early materia medica, describing 700 medicinal plants, 64 preparations from mineral sources, and 57 preparations based on animal sources.[11]

Ancient Mesopotamian traditions

Ancient Mesopotamian medicine may be represented by Esagil-kin-apli, a prominent scholar of the 11th century BCE, who compiled medical prescriptions and procedures, which he presented as exorcisms.

Ancient Egyptian traditions

Over a dozen medical papyri have been preserved, most notably the Edwin Smith Papyrus (the oldest extant surgical handbook) and the Ebers Papyrus (a handbook of preparing and using materia medica for various diseases), both from the 16th century BCE.

Ancient Egypt is also known for developing embalming, which was used for mummification, in order to preserve human remains and forestall decomposition.[12]

Ancient Greek and Roman traditions

The pre-Socratic philosophers asked many questions about life but produced little systematic knowledge of specifically biological interest, though the attempts of the atomists to explain life in purely physical terms would recur periodically through the history of biology. However, the medical theories of Hippocrates and his followers, especially humorism, had a lasting impact.[13]

The philosopher Aristotle was the most influential scholar of the living world from classical antiquity. Though his early work in natural philosophy was speculative, Aristotle's later biological writings were more empirical, focusing on biological causation and the diversity of life. He made countless observations of nature, especially of the habits and attributes of plants and animals in the world around him, and devoted considerable attention to categorizing them. In all, Aristotle classified 540 animal species, and dissected at least 50. He believed that intellectual purposes, formal causes, guided all natural processes.[14]

Aristotle, and nearly all Western scholars after him until the 18th century, believed that creatures were arranged in a graded scale of perfection rising from plants up to humans: the scala naturae or Great Chain of Being.[15] Aristotle's successor at the Lyceum, Theophrastus, wrote a series of books on botany, the History of Plants, which survived as the most important contribution of antiquity to botany, even into the Middle Ages. Many of Theophrastus' names survive into modern times, such as carpos for fruit, and pericarpion for seed vessel. Dioscorides wrote a pioneering and encyclopaedic pharmacopoeia, De Materia Medica, incorporating descriptions of some 600 plants and their uses in medicine. Pliny the Elder, in his Natural History, assembled a similarly encyclopaedic account of things in nature, including accounts of many plants and animals.[16]

A few scholars in the Hellenistic period under the Ptolemies, particularly Herophilus of Chalcedon and Erasistratus of Chios, amended Aristotle's physiological work, even performing dissections and vivisections.[17] Claudius Galen became the most important authority on medicine and anatomy. Though a few ancient atomists such as Lucretius challenged the teleological Aristotelian viewpoint that all aspects of life are the result of design or purpose, teleology (and, after the rise of Christianity, natural theology) would remain central to biological thought essentially until the 18th and 19th centuries.

Ernst W. Mayr argued that "Nothing of any real consequence happened in biology after Lucretius and Galen until the Renaissance."[18] The ideas of the Greek traditions of natural history and medicine survived, but they were generally accepted without question in medieval Europe.[19]

Medieval and Islamic knowledge

The decline of the Roman Empire led to the disappearance or destruction of much knowledge, though physicians still incorporated many aspects of the Greek tradition into training and practice. In Byzantium and the Islamic world, many of the Greek works were translated into Arabic and many of the works of Aristotle were preserved.[20]

During the High Middle Ages, a few European scholars such as Hildegard of Bingen, Albertus Magnus and Frederick II expanded the natural history canon. The rise of European universities, though important for the development of physics and philosophy, had little impact on biological scholarship.[21]

Renaissance and early modern developments

The European Renaissance brought expanded interest in both empirical natural history and physiology. In 1543, Andreas Vesalius inaugurated the modern era of Western medicine with his seminal human anatomy treatise De humani corporis fabrica, which was based on dissection of corpses. Vesalius was the first in a series of anatomists who gradually replaced scholasticism with empiricism in physiology and medicine, relying on first-hand experience rather than authority and abstract reasoning. Via herbalism, medicine was also indirectly the source of renewed empiricism in the study of plants. Otto Brunfels, Hieronymus Bock and Leonhart Fuchs wrote extensively on wild plants, the beginning of a nature-based approach to the full range of plant life.[22] Bestiaries, a genre that combines the natural and figurative knowledge of animals, also became more sophisticated, especially with the work of William Turner, Pierre Belon, Guillaume Rondelet, Conrad Gessner, and Ulisse Aldrovandi.[23]

Artists such as Albrecht Dürer and Leonardo da Vinci, often working with naturalists, were also interested in the bodies of animals and humans, studying physiology in detail and contributing to the growth of anatomical knowledge.[24] The traditions of alchemy and natural magic, especially in the work of Paracelsus, also laid claim to knowledge of the living world. Alchemists subjected organic matter to chemical analysis and experimented liberally with both biological and mineral pharmacology.[25] This was part of a larger transition in world views (the rise of the mechanical philosophy) that continued into the 17th century, as the traditional metaphor of nature as organism was replaced by the metaphor of nature as machine.[26]

Seventeenth and eighteenth centuries

Systematizing, naming and classifying dominated natural history throughout much of the 17th and 18th centuries. Carolus Linnaeus published a basic taxonomy for the natural world in 1735 (variations of which have been in use ever since), and in the 1750s introduced scientific names for all his species.[27] While Linnaeus conceived of species as unchanging parts of a designed hierarchy, the other great naturalist of the 18th century, Georges-Louis Leclerc, Comte de Buffon, treated species as artificial categories and living forms as malleable, even suggesting the possibility of common descent. Though he was opposed to evolution, Buffon is a key figure in the history of evolutionary thought; his work would influence the evolutionary theories of both Lamarck and Darwin.[28]

The discovery and description of new species and the collection of specimens became a passion of scientific gentlemen and a lucrative enterprise for entrepreneurs; many naturalists traveled the globe in search of scientific knowledge and adventure.[29]

Cabinets of curiosities, such as that of Ole Worm, were centers of biological knowledge in the early modern period, bringing organisms from across the world together in one place. Before the Age of Exploration, naturalists had little idea of the sheer scale of biological diversity.

Extending the work of Vesalius into experiments on still-living bodies (of both humans and animals), William Harvey and other natural philosophers investigated the roles of blood, veins and arteries. Harvey's De motu cordis in 1628 was the beginning of the end for Galenic theory, and alongside Santorio Santorio's studies of metabolism, it served as an influential model of quantitative approaches to physiology.[30]

In the early 17th century, the micro-world of biology was just beginning to open up. A few lensmakers and natural philosophers had been creating crude microscopes since the late 16th century, and in 1665 Robert Hooke published the seminal Micrographia, based on observations with his own compound microscope. But it was not until Antony van Leeuwenhoek's dramatic improvements in lensmaking beginning in the 1670s, which ultimately produced up to 200-fold magnification with a single lens, that scholars discovered spermatozoa, bacteria, infusoria and the sheer strangeness and diversity of microscopic life. Similar investigations by Jan Swammerdam led to new interest in entomology and built the basic techniques of microscopic dissection and staining.[31]

In Micrographia, Robert Hooke had applied the word cell to biological structures such as a piece of cork, but it was not until the 19th century that scientists considered cells the universal basis of life.

As the microscopic world was expanding, the macroscopic world was shrinking. Botanists such as John Ray worked to incorporate the flood of newly discovered organisms shipped from across the globe into a coherent taxonomy, and a coherent theology (natural theology).[32] Debate over another flood, the Noachian, catalyzed the development of paleontology; in 1669 Nicholas Steno published an essay on how the remains of living organisms could be trapped in layers of sediment and mineralized to produce fossils. Although Steno's ideas about fossilization were well known and much debated among natural philosophers, an organic origin for all fossils would not be accepted by all naturalists until the end of the 18th century due to philosophical and theological debate about issues such as the age of the earth and extinction.[33]

19th century: the emergence of biological disciplines

Up through the 19th century, the scope of biology was largely divided between medicine, which investigated questions of form and function (i.e., physiology), and natural history, which was concerned with the diversity of life and interactions among different forms of life and between life and non-life. By 1900, many of these domains overlapped, while natural history (and its counterpart natural philosophy) had largely given way to more specialized scientific disciplines: cytology, bacteriology, morphology, embryology, geography, and geology.

In the course of his travels, Alexander von Humboldt mapped the distribution of plants across landscapes and recorded a variety of physical conditions such as pressure and temperature.

Natural history and natural philosophy

Widespread travel by naturalists in the early-to-mid-19th century resulted in a wealth of new information about the diversity and distribution of living organisms. Of particular importance was the work of Alexander von Humboldt, which analyzed the relationship between organisms and their environment (i.e., the domain of natural history) using the quantitative approaches of natural philosophy (i.e., physics and chemistry). Humboldt's work laid the foundations of biogeography and inspired several generations of scientists.[34]

Geology and paleontology

The emerging discipline of geology also brought natural history and natural philosophy closer together; the establishment of the stratigraphic column linked the spatial distribution of organisms to their temporal distribution, a key precursor to concepts of evolution. Georges Cuvier and others made great strides in comparative anatomy and paleontology in the late 1790s and early 19th century. In a series of lectures and papers that made detailed comparisons between living mammals and fossil remains, Cuvier was able to establish that the fossils were remains of species that had become extinct, rather than remains of species still alive elsewhere in the world, as had been widely believed.[35] Fossils discovered and described by Gideon Mantell, William Buckland, Mary Anning, and Richard Owen among others helped establish that there had been an 'age of reptiles' that had preceded even the prehistoric mammals. These discoveries captured the public imagination and focused attention on the history of life on earth.[36] Most of these geologists held to catastrophism, but Charles Lyell's influential Principles of Geology (1830) popularised Hutton's uniformitarianism, a theory that explained the geological past and present on equal terms.[37]

Evolution and biogeography

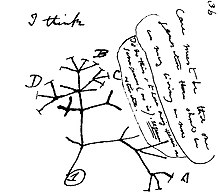

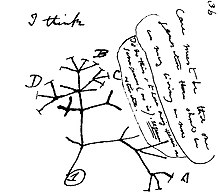

Charles Darwin's first sketch of an evolutionary tree from his First Notebook on Transmutation of Species (1837)

The most significant evolutionary theory before Darwin's was that of Jean-Baptiste Lamarck; based on the inheritance of acquired characteristics (an inheritance mechanism that was widely accepted until the 20th century), it described a chain of development stretching from the lowliest microbe to humans.[38] The British naturalist Charles Darwin, combining the biogeographical approach of Humboldt, the uniformitarian geology of Lyell, Thomas Malthus's writings on population growth, and his own morphological expertise, created a more successful evolutionary theory based on natural selection; similar evidence led Alfred Russel Wallace to independently reach the same conclusions.[39]

The 1859 publication of Darwin's theory in On the Origin of Species by Means of Natural Selection, or the Preservation of Favoured Races in the Struggle for Life is often considered the central event in the history of modern biology. Darwin's established credibility as a naturalist, the sober tone of the work, and most of all the sheer strength and volume of evidence presented, allowed Origin to succeed where previous evolutionary works such as the anonymous Vestiges of Creation had failed. Most scientists were convinced of evolution and common descent by the end of the 19th century. However, natural selection would not be accepted as the primary mechanism of evolution until well into the 20th century, as most contemporary theories of heredity seemed incompatible with the inheritance of random variation.[40]

Wallace, following on earlier work by de Candolle, Humboldt and Darwin, made major contributions to zoogeography. Because of his interest in the transmutation hypothesis, he paid particular attention to the geographical distribution of closely allied species during his field work, first in South America and then in the Malay archipelago. While in the archipelago he identified the Wallace line, which runs through the Spice Islands, dividing the fauna of the archipelago between an Asian zone and a New Guinea/Australian zone. His key question, why the fauna of islands with such similar climates should be so different, could only be answered by considering their origin. In 1876 he wrote The Geographical Distribution of Animals, which was the standard reference work for over half a century, and in 1880 a sequel, Island Life, that focused on island biogeography. He extended the six-zone system developed by Philip Sclater for describing the geographical distribution of birds to animals of all kinds. His method of tabulating data on animal groups in geographic zones highlighted the discontinuities, and his appreciation of evolution allowed him to propose rational explanations for them, which had not been done before.[41][42]

The scientific study of heredity grew rapidly in the wake of Darwin's Origin of Species with the work of Francis Galton and the biometricians. The origin of genetics is usually traced to the 1866 work of the monk Gregor Mendel, who would later be credited with the laws of inheritance. However, his work was not recognized as significant until 35 years afterward. In the meantime, a variety of theories of inheritance (based on pangenesis, orthogenesis, or other mechanisms) were debated and investigated vigorously.[43] Embryology and ecology also became central biological fields, especially as linked to evolution and popularized in the work of Ernst Haeckel. Most of the 19th-century work on heredity, however, was not in the realm of natural history, but in that of experimental physiology.

Physiology

Over the course of the 19th century, the scope of physiology expanded greatly, from a primarily medically oriented field to a wide-ranging investigation of the physical and chemical processes of life, including those of plants, animals, and even microorganisms in addition to humans. Living things as machines became a dominant metaphor in biological (and social) thinking.[44]

Cell theory, embryology and germ theory

Advances in microscopy also had a profound impact on biological thinking. In the early 19th century, a number of biologists pointed to the central importance of the cell. In 1838 and 1839, Schleiden and Schwann began promoting the ideas that (1) the basic unit of organisms is the cell and (2) individual cells have all the characteristics of life, though they opposed the idea that (3) all cells come from the division of other cells. Thanks to the work of Robert Remak and Rudolf Virchow, however, by the 1860s most biologists accepted all three tenets of what came to be known as cell theory.[45]

Cell theory led biologists to re-envision individual organisms as interdependent assemblages of individual cells. Scientists in the rising field of cytology, armed with increasingly powerful microscopes and new staining methods, soon found that even single cells were far more complex than the homogeneous fluid-filled chambers described by earlier microscopists. Robert Brown had described the nucleus in 1831, and by the end of the 19th century cytologists had identified many of the key cell components: chromosomes, centrosomes, mitochondria, chloroplasts, and other structures made visible through staining. Between 1874 and 1884 Walther Flemming described the discrete stages of mitosis, showing that they were not artifacts of staining but occurred in living cells, and moreover, that chromosomes doubled in number just before the cell divided and a daughter cell was produced. Much of the research on cell reproduction came together in August Weismann's theory of heredity: he identified the nucleus (in particular chromosomes) as the hereditary material, proposed the distinction between somatic cells and germ cells (arguing that chromosome number must be halved for germ cells, a precursor to the concept of meiosis), and adopted Hugo de Vries's theory of pangenes. Weismannism was extremely influential, especially in the new field of experimental embryology.[46]

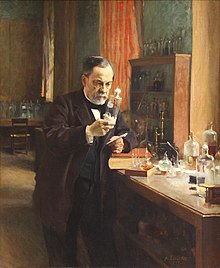

By the mid-1850s the miasma theory of disease was largely superseded by the germ theory of disease, creating extensive interest in microorganisms and their interactions with other forms of life. By the 1880s, bacteriology was becoming a coherent discipline, especially through the work of Robert Koch, who introduced methods for growing pure cultures on agar gels containing specific nutrients in Petri dishes. The long-held idea that living organisms could easily originate from nonliving matter (spontaneous generation) was attacked in a series of experiments carried out by Louis Pasteur, while debates over vitalism vs. mechanism (a perennial issue since the time of Aristotle and the Greek atomists) continued apace.[47]

Rise of organic chemistry and experimental physiology

In chemistry, one central issue was the distinction between organic and inorganic substances, especially in the context of organic transformations such as fermentation and putrefaction. Since Aristotle these had been considered essentially biological (vital) processes. However, Friedrich Wöhler, Justus Liebig and other pioneers of the rising field of organic chemistry, building on the work of Lavoisier, showed that the organic world could often be analyzed by physical and chemical methods. In 1828 Wöhler showed that the organic substance urea could be created by chemical means that do not involve life, providing a powerful challenge to vitalism. Cell extracts ("ferments") that could effect chemical transformations were discovered, beginning with diastase in 1833. By the end of the 19th century the concept of enzymes was well established, though equations of chemical kinetics would not be applied to enzymatic reactions until the early 20th century.[48]

Physiologists such as Claude Bernard explored (through vivisection and other experimental methods) the chemical and physical functions of living bodies to an unprecedented degree, laying the groundwork for endocrinology (a field that developed quickly after the discovery of the first hormone, secretin, in 1902), biomechanics, and the study of nutrition and digestion. The importance and diversity of experimental physiology methods, within both medicine and biology, grew dramatically over the second half of the 19th century. The control and manipulation of life processes became a central concern, and experiment was placed at the center of biological education.[49]

Twentieth century biological sciences

Embryonic development of a salamander, filmed in the 1920s

At the beginning of the 20th century, biological research was largely a professional endeavour. Most work was still done in the natural history mode, which emphasized morphological and phylogenetic analysis over experiment-based causal explanations. However, anti-vitalist experimental physiologists and embryologists, especially in Europe, were increasingly influential. The tremendous success of experimental approaches to development, heredity, and metabolism in the 1900s and 1910s demonstrated the power of experimentation in biology. In the following decades, experimental work replaced natural history as the dominant mode of research.[50]

Ecology and environmental science

In the early 20th century, naturalists were faced with increasing pressure to add rigor and preferably experimentation to their methods, as the newly prominent laboratory-based biological disciplines had done. Ecology had emerged as a combination of biogeography with the biogeochemical cycle concept pioneered by chemists; field biologists developed quantitative methods such as the quadrat and adapted laboratory instruments and cameras for the field to further set their work apart from traditional natural history. Zoologists and botanists did what they could to mitigate the unpredictability of the living world, performing laboratory experiments and studying semi-controlled natural environments such as gardens; new institutions like the Carnegie Station for Experimental Evolution and the Marine Biological Laboratory provided more controlled environments for studying organisms through their entire life cycles.[51]

The ecological succession concept, pioneered in the 1900s and 1910s by Henry Chandler Cowles and Frederic Clements, was important in early plant ecology.[52] Alfred Lotka's predator-prey equations, G. Evelyn Hutchinson's studies of the biogeography and biogeochemical structure of lakes and rivers (limnology) and Charles Elton's studies of animal food chains were pioneers among the succession of quantitative methods that colonized the developing ecological specialties. Ecology became an independent discipline in the 1940s and 1950s after Eugene P. Odum synthesized many of the concepts of ecosystem ecology, placing relationships between groups of organisms (especially material and energy relationships) at the center of the field.[53]

In the 1960s, as evolutionary theorists explored the possibility of multiple units of selection, ecologists turned to evolutionary approaches. In population ecology, debate over group selection was brief but vigorous; by 1970, most biologists agreed that natural selection was rarely effective above the level of individual organisms. The evolution of ecosystems, however, became a lasting research focus. Ecology expanded rapidly with the rise of the environmental movement; the International Biological Program attempted to apply the methods of big science (which had been so successful in the physical sciences) to ecosystem ecology and pressing environmental issues, while smaller-scale independent efforts such as island biogeography and the Hubbard Brook Experimental Forest helped redefine the scope of an increasingly diverse discipline.[54]

Classical genetics, the modern synthesis, and evolutionary theory

1900 marked the so-called

rediscovery of Mendel:

Hugo de Vries,

Carl Correns, and

Erich von Tschermak independently arrived at

Mendel's laws (which were not actually present in Mendel's work).

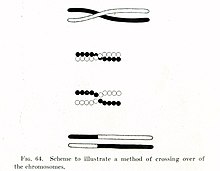

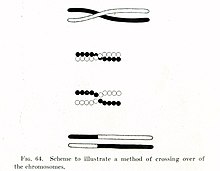

[55] Soon after, cytologists (cell biologists) proposed that

chromosomes were the hereditary material. Between 1910 and 1915,

Thomas Hunt Morgan and the "

Drosophilists" in his fly lab forged these two ideas—both controversial—into the "Mendelian-chromosome theory" of heredity.

[56] They quantified the phenomenon of genetic linkage and postulated that genes reside on chromosomes like beads on string; they hypothesized

crossing over to explain linkage and constructed

genetic maps of the fruit fly

Drosophila melanogaster, which became a widely used

model organism.

[57]

Hugo de Vries tried to link the new genetics with evolution; building on his work with heredity and hybridization, he proposed a theory of mutationism, which was widely accepted in the early 20th century. Lamarckism also had many adherents. Darwinism was seen as incompatible with the continuously variable traits studied by biometricians, which seemed only partially heritable. In the 1920s and 1930s, following the acceptance of the Mendelian-chromosome theory, the new discipline of population genetics, developed through the work of R.A. Fisher, J.B.S. Haldane, and Sewall Wright, unified the idea of evolution by natural selection with Mendelian genetics, producing the modern synthesis. The inheritance of acquired characters was rejected, while mutationism gave way as genetic theories matured.[58]

In the second half of the century the ideas of population genetics began to be applied in the new disciplines of behavioral genetics, sociobiology, and, especially in humans, evolutionary psychology. In the 1960s W.D. Hamilton and others developed game theory approaches to explain altruism from an evolutionary perspective through kin selection. The possible origin of higher organisms through endosymbiosis, and contrasting approaches to molecular evolution in the gene-centered view (which held selection as the predominant cause of evolution) and the neutral theory (which made genetic drift a key factor), spawned perennial debates over the proper balance of adaptationism and contingency in evolutionary theory.[59]

In the 1970s Stephen Jay Gould and Niles Eldredge proposed the theory of punctuated equilibrium, which holds that stasis is the most prominent feature of the fossil record and that most evolutionary changes occur rapidly over relatively short periods of time.[60] In 1980 Luis Alvarez and Walter Alvarez proposed the hypothesis that an impact event was responsible for the Cretaceous–Paleogene extinction event.[61] Also in the early 1980s, statistical analysis of the fossil record of marine organisms published by Jack Sepkoski and David M. Raup led to a better appreciation of the importance of mass extinction events to the history of life on Earth.[62]

Biochemistry, microbiology, and molecular biology

By the end of the 19th century all of the major pathways of drug metabolism had been discovered, along with the outlines of protein and fatty acid metabolism and urea synthesis.[63] In the early decades of the 20th century, the minor components of foods in human nutrition, the vitamins, began to be isolated and synthesized. Improved laboratory techniques such as chromatography and electrophoresis led to rapid advances in physiological chemistry, which, as biochemistry, began to achieve independence from its medical origins. In the 1920s and 1930s, biochemists led by Hans Krebs and Carl and Gerty Cori began to work out many of the central metabolic pathways of life: the citric acid cycle, glycogenesis and glycolysis, and the synthesis of steroids and porphyrins. Between the 1930s and 1950s, Fritz Lipmann and others established the role of ATP as the universal carrier of energy in the cell, and of mitochondria as the powerhouse of the cell. Such traditionally biochemical work continued to be very actively pursued throughout the 20th century and into the 21st.[64]

Origins of molecular biology

Following the rise of classical genetics, many biologists, including a new wave of physical scientists in biology, pursued the question of the gene and its physical nature. Warren Weaver, head of the science division of the Rockefeller Foundation, issued grants to promote research that applied the methods of physics and chemistry to basic biological problems, coining the term molecular biology for this approach in 1938; many of the significant biological breakthroughs of the 1930s and 1940s were funded by the Rockefeller Foundation.[65]

Like biochemistry, the overlapping disciplines of bacteriology and virology (later combined as microbiology), situated between science and medicine, developed rapidly in the early 20th century. Félix d'Herelle's isolation of bacteriophage during World War I initiated a long line of research focused on phage viruses and the bacteria they infect.[66]

The development of standard, genetically uniform organisms that could produce repeatable experimental results was essential for the development of molecular genetics. After early work with Drosophila and maize, the adoption of simpler model systems like the bread mold Neurospora crassa made it possible to connect genetics to biochemistry, most importantly with Beadle and Tatum's one gene–one enzyme hypothesis in 1941. Genetics experiments on even simpler systems like tobacco mosaic virus and bacteriophage, aided by the new technologies of electron microscopy and ultracentrifugation, forced scientists to re-evaluate the literal meaning of life; virus heredity and reproducing nucleoprotein cell structures outside the nucleus ("plasmagenes") complicated the accepted Mendelian-chromosome theory.[67]

The "central dogma of molecular biology" (originally a "dogma" only in jest) was proposed by Francis Crick in 1958.[68] This is Crick's reconstruction of how he conceived of the central dogma at the time. The solid lines represent (as it seemed in 1958) known modes of information transfer, and the dashed lines represent postulated ones.

Oswald Avery showed in 1943 that DNA was likely the genetic material of the chromosome, not its protein; the issue was settled decisively with the 1952 Hershey–Chase experiment, one of many contributions from the so-called phage group centered around physicist-turned-biologist Max Delbrück. In 1953 James Watson and Francis Crick, building on the work of Maurice Wilkins and Rosalind Franklin, suggested that the structure of DNA was a double helix. In their famous paper "Molecular Structure of Nucleic Acids", Watson and Crick noted coyly, "It has not escaped our notice that the specific pairing we have postulated immediately suggests a possible copying mechanism for the genetic material."[69] After the 1958 Meselson–Stahl experiment confirmed the semiconservative replication of DNA, it was clear to most biologists that nucleic acid sequence must somehow determine amino acid sequence in proteins; physicist George Gamow had already proposed that a fixed genetic code connected proteins and DNA.

Between 1953 and 1961, there were few known biological sequences, either DNA or protein, but an abundance of proposed code systems, a situation made even more complicated by expanding knowledge of the intermediate role of RNA. Actually deciphering the code took an extensive series of experiments in biochemistry and bacterial genetics between 1961 and 1966, most importantly the work of Nirenberg and Khorana.[70]

Expansion of molecular biology

In addition to the Division of Biology at Caltech, the Laboratory of Molecular Biology (and its precursors) at Cambridge, and a handful of other institutions, the Pasteur Institute became a major center for molecular biology research in the late 1950s.[71] Scientists at Cambridge, led by Max Perutz and John Kendrew, focused on the rapidly developing field of structural biology, combining X-ray crystallography with molecular modelling and the new computational possibilities of digital computing (benefiting both directly and indirectly from the military funding of science). A number of biochemists led by Frederick Sanger later joined the Cambridge lab, bringing together the study of macromolecular structure and function.[72] At the Pasteur Institute, François Jacob and Jacques Monod followed the 1959 PaJaMo experiment with a series of publications regarding the lac operon that established the concept of gene regulation and identified what came to be known as messenger RNA.[73] By the mid-1960s, the intellectual core of molecular biology, a model for the molecular basis of metabolism and reproduction, was largely complete.[74]

The late 1950s to the early 1970s was a period of intense research and institutional expansion for molecular biology, which had only recently become a somewhat coherent discipline. In what organismic biologist E. O. Wilson called "The Molecular Wars", the methods and practitioners of molecular biology spread rapidly, often coming to dominate departments and even entire disciplines.[75] Molecularization was particularly important in genetics, immunology, embryology, and neurobiology, while the idea that life is controlled by a "genetic program", a metaphor Jacob and Monod introduced from the emerging fields of cybernetics and computer science, became an influential perspective throughout biology.[76] Immunology in particular became linked with molecular biology, with innovation flowing both ways: the clonal selection theory developed by Niels Jerne and Frank Macfarlane Burnet in the mid-1950s helped shed light on the general mechanisms of protein synthesis.[77]

Resistance to the growing influence of molecular biology was especially evident in evolutionary biology. Protein sequencing had great potential for the quantitative study of evolution (through the molecular clock hypothesis), but leading evolutionary biologists questioned the relevance of molecular biology for answering the big questions of evolutionary causation. Departments and disciplines fractured as organismic biologists asserted their importance and independence: Theodosius Dobzhansky made the famous statement that "nothing in biology makes sense except in the light of evolution" as a response to the molecular challenge. The issue became even more critical after 1968; Motoo Kimura's neutral theory of molecular evolution suggested that natural selection was not the ubiquitous cause of evolution, at least at the molecular level, and that molecular evolution might be a fundamentally different process from morphological evolution. (Resolving this "molecular/morphological paradox" has been a central focus of molecular evolution research since the 1960s.)[78]

Biotechnology, genetic engineering, and genomics

Biotechnology in the general sense has been an important part of biology since the late 19th century. With the industrialization of brewing and agriculture, chemists and biologists became aware of the great potential of human-controlled biological processes. In particular, fermentation proved a great boon to chemical industries. By the early 1970s, a wide range of biotechnologies were being developed, from drugs like penicillin and steroids to foods like Chlorella and single-cell protein to gasohol, as well as a wide range of hybrid high-yield crops and agricultural technologies, the basis for the Green Revolution.[79]

Carefully engineered strains of the bacterium Escherichia coli are crucial tools in biotechnology as well as many other biological fields.

Recombinant DNA

Biotechnology in the modern sense of genetic engineering began in the 1970s, with the invention of recombinant DNA techniques.[80] Restriction enzymes were discovered and characterized in the late 1960s, following on the heels of the isolation, then duplication, then synthesis of viral genes. Beginning with the lab of Paul Berg in 1972 (aided by EcoRI from Herbert Boyer's lab, building on work with ligase by Arthur Kornberg's lab), molecular biologists put these pieces together to produce the first transgenic organisms. Soon after, others began using plasmid vectors and adding genes for antibiotic resistance, greatly increasing the reach of the recombinant techniques.[81]

Wary of the potential dangers (particularly the possibility of a prolific bacterium carrying a viral cancer-causing gene), the scientific community as well as a wide range of scientific outsiders reacted to these developments with both enthusiasm and fearful restraint. Prominent molecular biologists led by Berg suggested a temporary moratorium on recombinant DNA research until the dangers could be assessed and policies could be created. This moratorium was largely respected until the participants in the 1975 Asilomar Conference on Recombinant DNA created policy recommendations and concluded that the technology could be used safely.[82]

Following Asilomar, new genetic engineering techniques and applications developed rapidly. DNA sequencing methods improved greatly (pioneered by Frederick Sanger and Walter Gilbert), as did oligonucleotide synthesis and transfection techniques.[83] Researchers learned to control the expression of transgenes, and were soon racing, in both academic and industrial contexts, to create organisms capable of expressing human genes for the production of human hormones. However, this was a more daunting task than molecular biologists had expected; developments between 1977 and 1980 showed that, due to the phenomena of split genes and splicing, higher organisms had a much more complex system of gene expression than the bacterial models of earlier studies.[84] The first such race, for synthesizing human insulin, was won by Genentech. This marked the beginning of the biotech boom (and with it, the era of gene patents), with an unprecedented level of overlap between biology, industry, and law.[85]

Molecular systematics and genomics

By the 1980s, protein sequencing had already transformed methods of scientific classification of organisms (especially cladistics), but biologists soon began to use RNA and DNA sequences as characters; this expanded the significance of molecular evolution within evolutionary biology, as the results of molecular systematics could be compared with traditional evolutionary trees based on morphology. Following the pioneering ideas of Lynn Margulis on endosymbiotic theory, which holds that some of the organelles of eukaryotic cells originated from free-living prokaryotic organisms through symbiotic relationships, even the overall division of the tree of life was revised. Into the 1990s, the five kingdoms (Plants, Animals, Fungi, Protists, and Monerans) gave way to three domains (the Archaea, the Bacteria, and the Eukarya) based on Carl Woese's pioneering molecular systematics work with 16S rRNA sequencing.[86]

The development and popularization of the polymerase chain reaction (PCR) in the mid-1980s (by Kary Mullis and others at Cetus Corp.) marked another watershed in the history of modern biotechnology, greatly increasing the ease and speed of genetic analysis.[87] Coupled with the use of expressed sequence tags, PCR led to the discovery of many more genes than could be found through traditional biochemical or genetic methods and opened the possibility of sequencing entire genomes.[88]

The unity of much of the morphogenesis of organisms from fertilized egg to adult began to be unraveled after the discovery of the homeobox genes, first in fruit flies, then in other insects and animals, including humans. These developments led to advances in the field of evolutionary developmental biology towards understanding how the various body plans of the animal phyla have evolved and how they are related to one another.[89]

The Human Genome Project, the largest and most costly single biological study ever undertaken, began in 1988 under the leadership of James D. Watson, after preliminary work with genetically simpler model organisms such as E. coli, S. cerevisiae and C. elegans. Shotgun sequencing and gene discovery methods pioneered by Craig Venter, and fueled by the financial promise of gene patents with Celera Genomics, led to a public–private sequencing competition that ended in compromise with the first draft of the human DNA sequence announced in 2000.[90]

Twenty-first century biological sciences

At the beginning of the 21st century, biological sciences converged with previously distinct disciplines, both new and classic, such as physics, in research fields such as biophysics. Advances were made in analytical chemistry and physics instrumentation, including improved sensors, optics, tracers, signal processing, networks, robots, satellites, and computing power for data collection, storage, analysis, modeling, visualization, and simulation.

These technological advances supported theoretical and experimental research in molecular biochemistry, biological systems, and ecosystem science, as well as its publication on the internet. They enabled worldwide access to better measurements, theoretical models, complex simulations, experimentation with predictive models, and analysis, along with worldwide internet reporting of observational data, open peer review, and collaboration. New and rapidly growing fields of biological research included bioinformatics, neuroscience, theoretical biology, computational genomics, astrobiology, and synthetic biology.