From Wikipedia, the free encyclopedia

The multiverse (or meta-universe) is the hypothetical set of infinite or finite possible universes (including the Universe we consistently experience) that together comprise everything that exists: the entirety of space, time, matter, and energy as well as the physical laws and constants that describe them. The various universes within the multiverse are sometimes called "parallel universes" or "alternate universes".

The structure of the multiverse, the nature of each universe within it, and the relationships among the various constituent universes depend on the specific multiverse hypothesis considered. Multiple universes have been hypothesized in cosmology, physics, astronomy, religion, philosophy, transpersonal psychology, and fiction, particularly in science fiction and fantasy. In these contexts, parallel universes are also called "alternate universes", "quantum universes", "interpenetrating dimensions", "parallel dimensions", "parallel worlds", "alternate realities", "alternate timelines", and "dimensional planes", among others. The term "multiverse" was coined in 1895 by the American philosopher and psychologist William James in a different context.[1]

The multiverse hypothesis is a source of debate within the physics community. Physicists disagree about whether the multiverse exists, and whether the multiverse is a proper subject of scientific inquiry.[2] Supporters of one of the multiverse hypotheses include Stephen Hawking,[3] Brian Greene,[4][5] Max Tegmark,[6] Alan Guth,[7] Andrei Linde,[8] Michio Kaku,[9] David Deutsch,[10] Leonard Susskind,[11] Raj Pathria,[12] Alexander Vilenkin,[13] Laura Mersini-Houghton,[14][15] Neil deGrasse Tyson[16] and Sean Carroll.[17] In contrast, those who are not proponents of the multiverse include: Nobel laureate Steven Weinberg,[18] Nobel laureate David Gross,[19] Paul Steinhardt,[20] Neil Turok,[21] Viatcheslav Mukhanov,[22] George Ellis,[23][24] Jim Baggott,[25] and Paul Davies. Some argue that the multiverse question is philosophical rather than scientific, that the multiverse cannot be a scientific question because it lacks falsifiability, or even that the multiverse hypothesis is harmful or pseudoscientific.

Multiverse hypotheses in physics

Categories

Max Tegmark and Brian Greene have devised classification schemes that categorize the various theoretical types of multiverse, or types of universe that might theoretically comprise a multiverse ensemble.

Max Tegmark's four levels

Cosmologist Max Tegmark has provided a taxonomy of universes beyond the familiar observable universe. The levels according to Tegmark's classification are arranged such that subsequent levels can be understood to encompass and expand upon previous levels, and they are briefly described below.[26][27]

Level I: Beyond our cosmological horizon

A generic prediction of chaotic inflation is an infinite ergodic universe, which, being infinite, must contain Hubble volumes realizing all initial conditions. Accordingly, an infinite universe will contain an infinite number of Hubble volumes, all having the same physical laws and physical constants. In regard to configurations such as the distribution of matter, almost all will differ from our Hubble volume. However, because there are infinitely many, far beyond the cosmological horizon, there will eventually be Hubble volumes with similar, and even identical, configurations. Tegmark estimates that an identical volume to ours should be about 10^(10^115) meters away from us.[6] Given infinite space, there would, in fact, be an infinite number of Hubble volumes identical to ours in the Universe.[28] This follows directly from the cosmological principle, wherein it is assumed our Hubble volume is not special or unique.
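The repetition argument above can be illustrated with a toy model. The sketch below is not Tegmark's calculation: it assumes, purely for illustration, that a "volume" consists of a handful of binary cells and that every configuration is equally likely. The function name `volumes_until_match` and its parameters are hypothetical.

```python
import random


def volumes_until_match(n_cells: int, seed: int = 0, max_draws: int = 1_000_000) -> int:
    """Count how many random volumes are drawn before one matches a reference.

    Toy assumption: a volume is n_cells binary cells, so there are
    2**n_cells possible configurations, all equally likely.
    """
    rng = random.Random(seed)
    n_configs = 2 ** n_cells
    reference = rng.randrange(n_configs)  # stands in for "our" volume
    for draw in range(1, max_draws + 1):
        if rng.randrange(n_configs) == reference:
            return draw
    raise RuntimeError("no match within max_draws; increase the limit")


if __name__ == "__main__":
    # With a finite configuration space, a match always turns up eventually;
    # on average it takes about 2**n_cells draws.
    for n_cells in (2, 4, 8):
        draws = [volumes_until_match(n_cells, seed=s) for s in range(500)]
        print(n_cells, 2 ** n_cells, sum(draws) / len(draws))
```

In this toy setting the average waiting time grows as 2 to the power of the number of cells, which gives some intuition for why the estimated distance to an identical Hubble volume is a double exponential.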

Level II: Universes with different physical constants

"Bubble universes": every disk is a bubble universe (Universe 1 to Universe 6 are different bubbles; they have physical constants that are different from our universe); our universe is just one of the bubbles.

In the chaotic inflation theory, a variant of the cosmic inflation theory, the multiverse as a whole is stretching and will continue doing so forever,[29] but some regions of space stop stretching and form distinct bubbles, like gas pockets in a loaf of rising bread. Such bubbles are embryonic level I multiverses. Linde and Vanchurin calculated the number of these universes to be on the scale of 10^(10^10,000,000).[30]

Different bubbles may experience different spontaneous symmetry breaking resulting in different properties such as different physical constants.[28]

This level also includes John Archibald Wheeler's oscillatory universe theory and Lee Smolin's fecund universes theory.

Level III: Many-worlds interpretation of quantum mechanics

Hugh Everett's many-worlds interpretation (MWI) is one of several mainstream interpretations of quantum mechanics. In brief, one aspect of quantum mechanics is that certain observations cannot be predicted absolutely. Instead, there is a range of possible observations, each with a different probability. According to the MWI, each of these possible observations corresponds to a different universe. Suppose a six-sided die is thrown and that the result of the throw corresponds to a quantum mechanics observable. All six possible ways the die can fall correspond to six different universes.
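The die example can be extended to repeated throws with a small counting sketch. It is a bookkeeping illustration only, assuming a fair die so that every branch carries equal weight; in quantum mechanics branch weights come from squared amplitudes and need not be equal. The helper names `branches` and `branch_weight` are hypothetical.

```python
from fractions import Fraction
from itertools import product


def branches(n_throws: int, faces: int = 6) -> list[tuple[int, ...]]:
    """Enumerate every outcome history for n_throws of a die with the given faces."""
    return list(product(range(1, faces + 1), repeat=n_throws))


def branch_weight(n_throws: int, faces: int = 6) -> Fraction:
    """Weight of one history, assuming a fair die (equal weight per face)."""
    return Fraction(1, faces) ** n_throws


if __name__ == "__main__":
    two_throws = branches(2)
    print(len(two_throws))                        # 36 histories after two throws
    print(branch_weight(2) * len(two_throws))     # weights sum to 1
```

The number of histories grows as 6 to the power of the number of throws, while the total weight stays fixed at 1.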

Tegmark argues that a level III multiverse does not contain more possibilities in the Hubble volume than a level I-II multiverse. In effect, all the different "worlds" created by "splits" in a level III multiverse with the same physical constants can be found in some Hubble volume in a level I multiverse. Tegmark writes that "The only difference between Level I and Level III is where your doppelgängers reside. In Level I they live elsewhere in good old three-dimensional space. In Level III they live on another quantum branch in infinite-dimensional Hilbert space." Similarly, all level II bubble universes with different physical constants can in effect be found as "worlds" created by "splits" at the moment of spontaneous symmetry breaking in a level III multiverse.[28] According to Nomura[31] and Bousso and Susskind,[11] this is because global spacetime appearing in the (eternally) inflating multiverse is a redundant concept. This implies that the multiverses of Level I, II, and III are, in fact, the same thing. This hypothesis is referred to as "Multiverse = Quantum Many Worlds".

Related to the many-worlds idea are Richard Feynman's multiple histories interpretation and H. Dieter Zeh's many-minds interpretation.

Level IV: Ultimate ensemble

The ultimate ensemble or mathematical universe hypothesis is the hypothesis of Tegmark himself.[32] This level considers equally real all universes that can be described by different mathematical structures. Tegmark writes that "abstract mathematics is so general that any Theory Of Everything (TOE) that is definable in purely formal terms (independent of vague human terminology) is also a mathematical structure. For instance, a TOE involving a set of different types of entities (denoted by words, say) and relations between them (denoted by additional words) is nothing but what mathematicians call a set-theoretical model, and one can generally find a formal system that it is a model of." He argues this "implies that any conceivable parallel universe theory can be described at Level IV" and "subsumes all other ensembles, therefore brings closure to the hierarchy of multiverses, and there cannot be say a Level V."[6]

Jürgen Schmidhuber, however, says the "set of mathematical structures" is not even well-defined, and admits only universe representations describable by constructive mathematics, that is, computer programs. He explicitly includes universe representations describable by non-halting programs whose output bits converge after finite time, although the convergence time itself may not be predictable by a halting program, due to Kurt Gödel's limitations.[33][34][35] He also explicitly discusses the more restricted ensemble of quickly computable universes.[36]

Brian Greene's nine types

American theoretical physicist and string theorist Brian Greene discussed nine types of parallel universes:[37]

- Quilted

- The quilted multiverse works only in an infinite universe. With an infinite amount of space, every possible event will occur an infinite number of times. However, the speed of light prevents us from being aware of these other identical areas.

- Inflationary

- The inflationary multiverse is composed of various pockets where inflation fields collapse and form new universes.

- Brane

- The brane multiverse follows from M-theory and states that our universe is a 3-dimensional brane that exists with many others on a higher-dimensional brane or "bulk". Particles are bound to their respective branes except for gravity.

- Cyclic

- The cyclic multiverse (via the ekpyrotic scenario) has multiple branes (each a universe) that collided, causing Big Bangs. The universes bounce back and pass through time, until they are pulled back together and again collide, destroying the old contents and creating them anew.

- Landscape

- The landscape multiverse relies on string theory's Calabi–Yau shapes. Quantum fluctuations drop the shapes to a lower energy level, creating a pocket with a different set of laws from the surrounding space.

- Quantum

- The quantum multiverse creates a new universe when a diversion in events occurs, as in the many-worlds interpretation of quantum mechanics.

- Holographic

- The holographic multiverse is derived from the theory that the surface area of a space can simulate the volume of the region.

- Simulated

- The simulated multiverse exists on complex computer systems that simulate entire universes.

- Ultimate

- The ultimate multiverse contains every mathematically possible universe under different laws of physics.

Cyclic theories

In several theories there is a series of infinite, self-sustaining cycles (for example, an eternity of Big Bangs and Big Crunches).

M-theory

A multiverse of a somewhat different kind has been envisaged within string theory and its higher-dimensional extension, M-theory.[38] These theories require the presence of 10 or 11 spacetime dimensions respectively. The extra 6 or 7 dimensions may either be compactified on a very small scale, or our universe may simply be localized on a dynamical (3+1)-dimensional object, a D-brane. This opens up the possibility that there are other branes which could support "other universes".[39][40] This is unlike the universes in the "quantum multiverse", but both concepts can operate at the same time.[citation needed]

Some scenarios postulate that our big bang was created, along with our universe, by the collision of two branes.[39][40]

Black-hole cosmology

A black-hole cosmology is a cosmological model in which the observable universe is the interior of a black hole existing as one of possibly many inside a larger universe. This includes the theory of white holes, which are on the opposite side of spacetime. While a black hole pulls everything in, including light, a white hole releases matter and light, hence the name "white hole".

Anthropic principle

The concept of other universes has been proposed to explain how our own universe appears to be fine-tuned for conscious life as we experience it. If there were a large (possibly infinite) number of universes, each with possibly different physical laws (or different fundamental physical constants), some of these universes, even if very few, would have the combination of laws and fundamental parameters that are suitable for the development of matter, astronomical structures, elemental diversity, stars, and planets that can exist long enough for life to emerge and evolve. The weak anthropic principle could then be applied to conclude that we (as conscious beings) would only exist in one of those few universes that happened to be finely tuned, permitting the existence of life with developed consciousness. Thus, while the probability might be extremely small that any particular universe would have the requisite conditions for life (as we understand life) to emerge and evolve, this does not require intelligent design as an explanation for the conditions in the Universe that promote our existence in it.

Search for evidence

Around 2010, scientists such as Stephen M. Feeney analyzed Wilkinson Microwave Anisotropy Probe (WMAP) data and claimed to find preliminary evidence suggesting that our universe collided with other (parallel) universes in the distant past.[41][unreliable source?][42][43][44] However, a more thorough analysis of data from the WMAP and from the Planck satellite, which has a resolution 3 times higher than WMAP, failed to find any statistically significant evidence of such a bubble universe collision.[45][46] In addition, there is no evidence of any gravitational pull of other universes on ours.[47][48]

Criticism

Non-scientific claims

In his 2003 New York Times opinion piece, A Brief History of the Multiverse, author and cosmologist Paul Davies offers a variety of arguments that multiverse theories are non-scientific:[49]

For a start, how is the existence of the other universes to be tested? To be sure, all cosmologists accept that there are some regions of the universe that lie beyond the reach of our telescopes, but somewhere on the slippery slope between that and the idea that there are an infinite number of universes, credibility reaches a limit. As one slips down that slope, more and more must be accepted on faith, and less and less is open to scientific verification. Extreme multiverse explanations are therefore reminiscent of theological discussions. Indeed, invoking an infinity of unseen universes to explain the unusual features of the one we do see is just as ad hoc as invoking an unseen Creator. The multiverse theory may be dressed up in scientific language, but in essence it requires the same leap of faith.

— Paul Davies, A Brief History of the Multiverse

Taking cosmic inflation as a popular case in point, George Ellis, writing in August 2011, provides a balanced criticism of not only the science, but as he suggests, the scientific philosophy, by which multiverse theories are generally substantiated. He, like most cosmologists, accepts Tegmark's level I "domains", even though they lie far beyond the cosmological horizon. Likewise, the multiverse of cosmic inflation is said to exist very far away. It would be so far away, however, that it's very unlikely any evidence of an early interaction will be found. He argues that for many theorists, the lack of empirical testability or falsifiability is not a major concern. "Many physicists who talk about the multiverse, especially advocates of the string landscape, do not care much about parallel universes per se. For them, objections to the multiverse as a concept are unimportant. Their theories live or die based on internal consistency and, one hopes, eventual laboratory testing." Although he believes there's little hope that will ever be possible, he grants that the theories on which the speculation is based are not without scientific merit. He concludes that multiverse theory is a "productive research program":[50]

As skeptical as I am, I think the contemplation of the multiverse is an excellent opportunity to reflect on the nature of science and on the ultimate nature of existence: why we are here… In looking at this concept, we need an open mind, though not too open. It is a delicate path to tread. Parallel universes may or may not exist; the case is unproved. We are going to have to live with that uncertainty. Nothing is wrong with scientifically based philosophical speculation, which is what multiverse proposals are. But we should name it for what it is.

— George Ellis, Scientific American, Does the Multiverse Really Exist?

Occam's razor

Proponents and critics disagree about how to apply Occam's razor. Critics argue that to postulate a practically infinite number of unobservable universes just to explain our own seems contrary to Occam's razor.[51] In contrast, proponents argue that, in terms of Kolmogorov complexity, the proposed multiverse is simpler than a single idiosyncratic universe.[28] For example, multiverse proponent Max Tegmark argues:

[A]n entire ensemble is often much simpler than one of its members. This principle can be stated more formally using the notion of algorithmic information content. The algorithmic information content in a number is, roughly speaking, the length of the shortest computer program that will produce that number as output. For example, consider the set of all integers. Which is simpler, the whole set or just one number? Naively, you might think that a single number is simpler, but the entire set can be generated by quite a trivial computer program, whereas a single number can be hugely long. Therefore, the whole set is actually simpler... (Similarly), the higher-level multiverses are simpler. Going from our universe to the Level I multiverse eliminates the need to specify initial conditions, upgrading to Level II eliminates the need to specify physical constants, and the Level IV multiverse eliminates the need to specify anything at all.... A common feature of all four multiverse levels is that the simplest and arguably most elegant theory involves parallel universes by default. To deny the existence of those universes, one needs to complicate the theory by adding experimentally unsupported processes and ad hoc postulates: finite space, wave function collapse and ontological asymmetry. Our judgment therefore comes down to which we find more wasteful and inelegant: many worlds or many words. Perhaps we will gradually get used to the weird ways of our cosmos and find its strangeness to be part of its charm.[28]

— Max Tegmark, "Parallel universes. Not just a staple of science fiction, other universes are a direct implication of cosmological observations." Scientific American 2003 May;288(5):40–51

Princeton cosmologist Paul Steinhardt used the 2014 Annual Edge Question to voice his opposition to multiverse theorizing:

A pervasive idea in fundamental physics and cosmology that should be retired: the notion that we live in a multiverse in which the laws of physics and the properties of the cosmos vary randomly from one patch of space to another. According to this view, the laws and properties within our observable universe cannot be explained or predicted because they are set by chance. Different regions of space too distant to ever be observed have different laws and properties, according to this picture. Over the entire multiverse, there are infinitely many distinct patches. Among these patches, in the words of Alan Guth, "anything that can happen will happen—and it will happen infinitely many times". Hence, I refer to this concept as a Theory of Anything. Any observation or combination of observations is consistent with a Theory of Anything. No observation or combination of observations can disprove it. Proponents seem to revel in the fact that the Theory cannot be falsified. The rest of the scientific community should be up in arms since an unfalsifiable idea lies beyond the bounds of normal science. Yet, except for a few voices, there has been surprising complacency and, in some cases, grudging acceptance of a Theory of Anything as a logical possibility. The scientific journals are full of papers treating the Theory of Anything seriously. What is going on?[20]

— Paul Steinhardt, "Theories of Anything" edge.com

Steinhardt claims that multiverse theories have gained currency mostly because too much has been invested in theories that have failed, e.g. inflation or string theory. He tends to see in them an attempt to redefine the values of science to which he objects even more strongly:

A Theory of Anything is useless because it does not rule out any possibility and worthless because it submits to no do-or-die tests. (Many papers discuss potential observable consequences, but these are only possibilities, not certainties, so the Theory is never really put at risk.)[20]

— Paul Steinhardt, "Theories of Anything" edge.com
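Tegmark's algorithmic-information argument quoted earlier in this section, that the set of all integers can be generated by a trivial program while a single number can be hugely long, can be made concrete with a rough sketch. True Kolmogorov complexity is uncomputable, so the sketch assumes program text length as a crude stand-in, and it uses a seeded pseudo-random 4000-bit number as a hypothetical example of a "typical" large integer. The names `all_integers`, `ENSEMBLE_PROGRAM` and `literal_program` are illustrative only.

```python
import random
from itertools import count, islice


def all_integers():
    """Generate every integer exactly once: 0, 1, -1, 2, -2, ..."""
    yield 0
    for n in count(1):
        yield n
        yield -n


# Source text of a tiny program that enumerates the whole ensemble.
ENSEMBLE_PROGRAM = "n = 0\nwhile True:\n    print(n, -n)\n    n += 1\n"


def literal_program(number: int) -> str:
    """Source text of a program that prints one specific number verbatim."""
    return f"print({number})"


if __name__ == "__main__":
    print(list(islice(all_integers(), 5)))  # [0, 1, -1, 2, -2]

    # A "typical" large member of the set: no obvious pattern to exploit,
    # so writing it out in full is roughly the best a program can do.
    typical_member = random.Random(0).getrandbits(4000)
    print(len(ENSEMBLE_PROGRAM), len(literal_program(typical_member)))
```

The literal program is only an upper bound on the number's information content; highly patterned numbers such as 10**1000 have much shorter descriptions, which is why the comparison is made against a patternless one.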

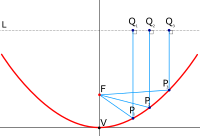

, where

, where  is its focal length. (See "

is its focal length. (See " where

where  is the focal length,

is the focal length,  is the depth of the dish (measured along the axis of symmetry from the vertex to the plane of the rim), and

is the depth of the dish (measured along the axis of symmetry from the vertex to the plane of the rim), and  is the radius of the rim. All units must be the same. If two of these three quantities are known, this equation can be used to calculate the third.

is the radius of the rim. All units must be the same. If two of these three quantities are known, this equation can be used to calculate the third. (or the equivalent:

(or the equivalent:  and

and  where

where

and

and  where

where  means the

means the  , i.e. its logarithm to base "

, i.e. its logarithm to base " where the symbols are defined as above. This can be compared with the formulae for the volumes of a

where the symbols are defined as above. This can be compared with the formulae for the volumes of a  a

a  where

where  and a

and a

is the aperture area of the dish, the area enclosed by the rim, which is proportional to the amount of sunlight the reflector dish can intercept.

is the aperture area of the dish, the area enclosed by the rim, which is proportional to the amount of sunlight the reflector dish can intercept.