Computed Tomography (CT) of a head, from top to base of the skull

Para-sagittal MRI of the head in a patient with benign familial macrocephaly.

Neuroimaging or brain imaging is the use of various techniques to image, either directly or indirectly, the structure, function, or pharmacology of the nervous system. It is a relatively new discipline within medicine, neuroscience, and psychology.[1] Physicians who specialize in performing and interpreting neuroimaging in the clinical setting are neuroradiologists.

Neuroimaging falls into two broad categories:

- Structural imaging, which deals with the structure of the nervous system and the diagnosis of gross (large scale) intracranial disease (such as tumor) and injury.

- Functional imaging, which is used to diagnose metabolic diseases and lesions on a finer scale (such as Alzheimer's disease) and also for neurological and cognitive psychology research and building brain-computer interfaces.

History

The first chapter of the history of neuroimaging traces back to the Italian neuroscientist Angelo Mosso, who invented the 'human circulation balance', which could non-invasively measure the redistribution of blood during emotional and intellectual activity.[2] Although William James mentioned it briefly in 1890, the details and precise workings of this balance and the experiments Mosso performed with it remained largely unknown until the recent discovery of the original instrument and of Mosso’s reports by Stefano Sandrone and colleagues.[3]

In 1918 the American neurosurgeon Walter Dandy introduced the technique of ventriculography. X-ray images of the ventricular system within the brain were obtained by injection of filtered air directly into one or both lateral ventricles of the brain. Dandy also observed that air introduced into the subarachnoid space via lumbar spinal puncture could enter the cerebral ventricles and also demonstrate the cerebrospinal fluid compartments around the base of the brain and over its surface. This technique was called pneumoencephalography.

In 1927 Egas Moniz introduced cerebral angiography, whereby both normal and abnormal blood vessels in and around the brain could be visualized with great precision.

In the early 1970s, Allan McLeod Cormack and Godfrey Newbold Hounsfield introduced computerized axial tomography (CAT or CT scanning), and ever more detailed anatomic images of the brain became available for diagnostic and research purposes. Cormack and Hounsfield won the 1979 Nobel Prize in Physiology or Medicine for their work. Soon after the introduction of CAT, in the early 1980s, the development of radioligands allowed single photon emission computed tomography (SPECT) and positron emission tomography (PET) of the brain.

More or less concurrently, magnetic resonance imaging (MRI or MR scanning) was developed by researchers including Peter Mansfield and Paul Lauterbur, who were awarded the Nobel Prize in Physiology or Medicine in 2003. In the early 1980s MRI was introduced clinically, and during the 1980s an explosion of technical refinements and diagnostic MR applications took place. Scientists soon learned that the large blood flow changes measured by PET could also be imaged by the correct type of MRI. Functional magnetic resonance imaging (fMRI) was born, and since the 1990s fMRI has come to dominate the brain mapping field due to its low invasiveness, lack of radiation exposure, and relatively wide availability.

In the early 2000s the field of neuroimaging reached the stage where limited practical applications of functional brain imaging became feasible. The main application area is crude forms of brain-computer interface.

Indications

Neuroimaging follows a neurological examination in which a physician has found cause to more deeply investigate a patient who has or may have a neurological disorder.

One of the more common neurological problems which a person may experience is simple syncope.[4][5] In cases of simple syncope in which the patient's history does not suggest other neurological symptoms, the diagnosis includes a neurological examination but routine neurological imaging is not indicated because the likelihood of finding a cause in the central nervous system is extremely low and the patient is unlikely to benefit from the procedure.[5]

Neuroimaging is not indicated for patients with stable headaches which are diagnosed as migraine.[6] Studies indicate that presence of migraine does not increase a patient's risk for intracranial disease.[6] A diagnosis of migraine which notes the absence of other problems, such as papilledema, would not indicate a need for neuroimaging.[6] In the course of conducting a careful diagnosis, the physician should consider whether the headache has a cause other than the migraine and might require neuroimaging.[6]

Another indication for neuroimaging is CT-, MRI- and PET-guided stereotactic surgery or radiosurgery for treatment of intracranial tumors, arteriovenous malformations and other surgically treatable conditions.[7][8][9][10]

Brain imaging techniques

Computed axial tomography
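
The reconstruction step used in CT, in which x-ray measurements taken from many directions are turned into a cross section, can be illustrated with a small numerical sketch. The code below is a toy example rather than a description of any scanner's software: it assumes an idealized parallel-beam geometry, nearest-bin interpolation, and filtered back projection as the way of approximating the inverse Radon transform. The function names (`detector_bins`, `project`, `filtered_back_projection`) are hypothetical and chosen only for this illustration.

```python
import numpy as np

def detector_bins(size, theta):
    """Detector bin hit by each pixel centre for a parallel beam at angle theta."""
    c = (size - 1) / 2.0
    y, x = np.mgrid[0:size, 0:size]
    s = (x - c) * np.cos(theta) + (y - c) * np.sin(theta)
    # The detector has 2 * size bins, so the offset `size` keeps indices >= 0.
    return np.rint(s + size).astype(int)

def project(image, angles):
    """Forward model: sum absorption along parallel rays (a discrete Radon transform)."""
    size = image.shape[0]
    rows = [
        np.bincount(detector_bins(size, t).ravel(),
                    weights=image.ravel(), minlength=2 * size)
        for t in angles
    ]
    return np.array(rows)

def filtered_back_projection(sinogram, angles, size):
    """Approximate inverse Radon transform: ramp-filter each view, then smear it back."""
    n_det = sinogram.shape[1]
    ramp = np.abs(np.fft.fftfreq(n_det))  # ramp filter in the frequency domain
    filtered = np.real(np.fft.ifft(np.fft.fft(sinogram, axis=1) * ramp, axis=1))
    recon = np.zeros((size, size))
    for row, theta in zip(filtered, angles):
        recon += row[detector_bins(size, theta)]
    return recon * np.pi / len(angles)  # approximate scaling for views over 180 degrees

# Toy "head": a single strongly absorbing voxel in an otherwise empty slice.
size = 33
phantom = np.zeros((size, size))
phantom[10, 20] = 1.0
angles = np.linspace(0.0, np.pi, 60, endpoint=False)

sinogram = project(phantom, angles)
recon = filtered_back_projection(sinogram, angles, size)
peak = tuple(int(i) for i in np.unravel_index(np.argmax(recon), recon.shape))
print("brightest reconstructed voxel:", peak)
```

In this sketch the brightest voxel of the reconstruction coincides with the absorbing voxel of the phantom. Clinical scanners use fan- or cone-beam geometries and more careful interpolation, which this example does not attempt to model.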

Computed tomography (CT) or Computed Axial Tomography (CAT) scanning uses a series of x-rays of the head taken from many different directions. Typically used for quickly viewing brain injuries, CT scanning uses a computer program that performs a numerical integral calculation (the inverse Radon transform) on the measured x-ray series to estimate how much of an x-ray beam is absorbed in a small volume of the brain. Typically the information is presented as cross sections of the brain.[11]

Diffuse optical imaging

Diffuse optical imaging (DOI) or diffuse optical tomography (DOT) is a medical imaging modality which uses near infrared light to generate images of the body. The technique measures the optical absorption of haemoglobin, and relies on the absorption spectrum of haemoglobin varying with its oxygenation status. High-density diffuse optical tomography (HD-DOT) has been compared directly to fMRI using response to visual stimulation in subjects studied with both techniques, with reassuringly similar results.[12] HD-DOT has also been compared to fMRI in terms of language tasks and resting state functional connectivity.[13]

Event-related optical signal (EROS) is a brain-scanning technique which uses infrared light through optical fibers to measure changes in optical properties of active areas of the cerebral cortex. Whereas techniques such as diffuse optical imaging (DOI) and near infrared spectroscopy (NIRS) measure optical absorption of haemoglobin, and thus are based on blood flow, EROS takes advantage of the scattering properties of the neurons themselves, and thus provides a much more direct measure of cellular activity. EROS can pinpoint activity in the brain within millimeters (spatially) and within milliseconds (temporally). Its biggest downside is the inability to detect activity more than a few centimeters deep. EROS is a new, relatively inexpensive technique that is non-invasive to the test subject. It was developed at the University of Illinois at Urbana-Champaign where it is now used in the Cognitive Neuroimaging Laboratory of Dr. Gabriele Gratton and Dr. Monica Fabiani.

Magnetic resonance imaging

Sagittal MRI slice at the midline.

Magnetic resonance imaging (MRI) uses magnetic fields and radio waves to produce high quality two- or three-dimensional images of brain structures without use of ionizing radiation (X-rays) or radioactive tracers.

Functional magnetic resonance imaging

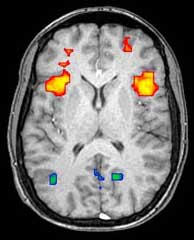

Axial MRI slice at the level of the basal ganglia, showing fMRI BOLD signal changes overlaid in red (increase) and blue (decrease) tones.

Functional magnetic resonance imaging (fMRI) and arterial spin labeling (ASL) rely on the paramagnetic properties of oxygenated and deoxygenated hemoglobin to produce images of changing blood flow in the brain associated with neural activity. This allows images to be generated that reflect which brain structures are activated (and how) during performance of different tasks or at resting state. According to the oxygenation hypothesis, regional changes in oxygen usage and cerebral blood flow during cognitive or behavioral activity reflect the activity of the regional neurons directly engaged by the cognitive or behavioral task being performed.

Most fMRI scanners allow subjects to be presented with different visual images, sounds and touch stimuli, and to make different actions such as pressing a button or moving a joystick. Consequently, fMRI can be used to reveal brain structures and processes associated with perception, thought and action. The resolution of fMRI is about 2-3 millimeters at present, limited by the spatial spread of the hemodynamic response to neural activity. It has largely superseded PET for the study of brain activation patterns. PET, however, retains the significant advantage of being able to identify specific brain receptors (or transporters) associated with particular neurotransmitters through its ability to image radiolabelled receptor "ligands" (receptor ligands are any chemicals that stick to receptors).

As well as research on healthy subjects, fMRI is increasingly used for the medical diagnosis of disease. Because fMRI is exquisitely sensitive to oxygen usage in blood flow, it is extremely sensitive to early changes in the brain resulting from ischemia (abnormally low blood flow), such as the changes which follow stroke. Early diagnosis of certain types of stroke is increasingly important in neurology, since substances which dissolve blood clots may be used in the first few hours after certain types of stroke occur, but are dangerous to use afterwards. Brain changes seen on fMRI may help to make the decision to treat with these agents.

With between 72% and 90% accuracy where chance would achieve 0.8%,[14] fMRI techniques can decide which of a set of known images the subject is viewing.[15]
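
To put the image-identification figures in perspective, the short calculation below relates accuracy to chance. It is only an arithmetic sketch: the chance level of 0.8% and the 72% to 90% accuracies are taken from the text, while the number of candidate images is not stated here and is inferred (any set of roughly 120 to 125 images gives a chance level that rounds to 0.8%). The function names are hypothetical.

```python
def chance_level(n_images: int) -> float:
    """Probability of picking the right image by guessing among n_images."""
    return 1.0 / n_images

def lift_over_chance(accuracy: float, chance: float) -> float:
    """How many times better than guessing a given accuracy is."""
    return accuracy / chance

CHANCE = 0.008  # 0.8%, as quoted in the text

# Candidate set sizes consistent with a chance level of about 0.8% (inferred).
for n in (120, 125):
    print(n, "images -> chance", round(100 * chance_level(n), 2), "%")

# Reported accuracies expressed as multiples of chance.
for accuracy in (0.72, 0.90):
    print(accuracy, "->", round(lift_over_chance(accuracy, CHANCE), 1), "x chance")
```

On these figures, the reported accuracies are on the order of a hundred times better than guessing.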

Magnetoencephalography

Magnetoencephalography (MEG) is an imaging technique used to measure the magnetic fields produced by electrical activity in the brain via extremely sensitive devices such as superconducting quantum interference devices (SQUIDs) or spin exchange relaxation-free[16] (SERF) magnetometers. MEG offers a very direct measurement of neural electrical activity (compared to fMRI for example) with very high temporal resolution but relatively low spatial resolution. The advantage of measuring the magnetic fields produced by neural activity is that they are likely to be less distorted by surrounding tissue (particularly the skull and scalp) compared to the electric fields measured by electroencephalography (EEG). Specifically, it can be shown that magnetic fields produced by electrical activity are not affected by the surrounding head tissue, when the head is modeled as a set of concentric spherical shells, each being an isotropic homogeneous conductor. Real heads are non-spherical and have largely anisotropic conductivities (particularly white matter and skull). While skull anisotropy has negligible effect on MEG (unlike EEG), white matter anisotropy strongly affects MEG measurements for radial and deep sources.[17] Note, however, that the skull was assumed to be uniformly anisotropic in this study, which is not true for a real head: the absolute and relative thicknesses of diploë and tables layers vary among and within the skull bones. This makes it likely that MEG is also affected by the skull anisotropy,[18] although probably not to the same degree as EEG.

There are many uses for MEG, including assisting surgeons in localizing a pathology, assisting researchers in determining the function of various parts of the brain, neurofeedback, and others.

Positron emission tomography

Positron emission tomography (PET) measures emissions from radioactively labeled metabolically active chemicals that have been injected into the bloodstream. The emission data are computer-processed to produce 2- or 3-dimensional images of the distribution of the chemicals throughout the brain.[19]:57 The positron emitting radioisotopes used are produced by a cyclotron, and chemicals are labeled with these radioactive atoms. The labeled compound, called a radiotracer, is injected into the bloodstream and eventually makes its way to the brain. Sensors in the PET scanner detect the radioactivity as the compound accumulates in various regions of the brain. A computer uses the data gathered by the sensors to create multicolored 2- or 3-dimensional images that show where the compound acts in the brain. Especially useful is a wide array of ligands used to map different aspects of neurotransmitter activity, with by far the most commonly used PET tracer being a labeled form of glucose (see Fludeoxyglucose (18F) (FDG)).

The greatest benefit of PET scanning is that different compounds can show blood flow and oxygen and glucose metabolism in the tissues of the working brain. These measurements reflect the amount of brain activity in the various regions of the brain and allow researchers to learn more about how the brain works. When they first became available, PET scans were superior to all other metabolic imaging methods in terms of resolution and speed of completion (as little as 30 seconds). The improved resolution permitted better study of the area of the brain activated by a particular task. The biggest drawback of PET scanning is that because the radioactivity decays rapidly, it is limited to monitoring short tasks.[19]:60 Before fMRI technology came online, PET scanning was the preferred method of functional (as opposed to structural) brain imaging, and it continues to make large contributions to neuroscience.

PET scanning is also used for diagnosis of brain disease, most notably because brain tumors, strokes, and neuron-damaging diseases which cause dementia (such as Alzheimer's disease) all cause great changes in brain metabolism, which in turn cause easily detectable changes in PET scans. PET is probably most useful in early cases of certain dementias (with classic examples being Alzheimer's disease and Pick's disease) where the early damage is too diffuse and makes too little difference in brain volume and gross structure to change CT and standard MRI images enough to reliably differentiate it from the "normal" range of cortical atrophy which occurs with aging (in many but not all persons), and which does not cause clinical dementia.

Single-photon emission computed tomography

Single-photon emission computed tomography (SPECT) is similar to PET and uses gamma ray-emitting radioisotopes and a gamma camera to record data that a computer uses to construct two- or three-dimensional images of active brain regions.[20] SPECT relies on an injection of radioactive tracer, or "SPECT agent," which is rapidly taken up by the brain but does not redistribute. Uptake of SPECT agent is nearly 100% complete within 30 to 60 seconds, reflecting cerebral blood flow (CBF) at the time of injection. These properties of SPECT make it particularly well-suited for epilepsy imaging, which is usually made difficult by problems with patient movement and variable seizure types. SPECT provides a "snapshot" of cerebral blood flow since scans can be acquired after seizure termination (so long as the radioactive tracer was injected at the time of the seizure). A significant limitation of SPECT is its poor resolution (about 1 cm) compared to that of MRI. Today, SPECT machines with Dual Detector Heads are commonly used, although Triple Detector Head machines are available in the marketplace. Tomographic reconstruction (mainly used for functional "snapshots" of the brain) requires multiple projections from Detector Heads which rotate around the human skull, so some researchers have developed 6 and 11 Detector Head SPECT machines to cut imaging time and give higher resolution.[21][22]

Like PET, SPECT also can be used to differentiate different kinds of disease processes which produce dementia, and it is increasingly used for this purpose. Neuro-PET has a disadvantage of requiring use of tracers with half-lives of at most 110 minutes, such as FDG. These must be made in a cyclotron, and are expensive or even unavailable if necessary transport times are prolonged more than a few half-lives. SPECT, however, is able to make use of tracers with much longer half-lives, such as technetium-99m, and as a result, is far more widely available.
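
The effect of half-life on tracer availability can be made concrete with the exponential decay law, under which the fraction of activity remaining after time t is 0.5 raised to the power t divided by the half-life. The sketch below is illustrative only: the 110-minute figure comes from the text above, while the roughly 6-hour half-life used for technetium-99m and the 4-hour transport time are assumptions added for the example, and the function name is hypothetical.

```python
def remaining_fraction(elapsed_min: float, half_life_min: float) -> float:
    """Fraction of the original radioactivity left after elapsed_min minutes."""
    return 0.5 ** (elapsed_min / half_life_min)

FDG_HALF_LIFE_MIN = 110.0        # from the text (18F-labelled FDG)
TC99M_HALF_LIFE_MIN = 6.0 * 60   # assumption: technetium-99m, roughly 6 hours

TRANSPORT_MIN = 4.0 * 60         # assumption: a 4-hour delivery time

for name, half_life in (("FDG", FDG_HALF_LIFE_MIN),
                        ("Tc-99m", TC99M_HALF_LIFE_MIN)):
    left = remaining_fraction(TRANSPORT_MIN, half_life)
    print(f"{name}: {100 * left:.0f}% of the activity remains after transport")
```

Under these assumptions a little over a fifth of the FDG activity survives the journey, compared with nearly two thirds of the technetium-99m activity, which is consistent with the wider availability of SPECT described above.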

Cranial ultrasound

Cranial ultrasound is usually only used in babies, whose open fontanelles provide acoustic windows allowing ultrasound imaging of the brain. Advantages include absence of ionising radiation and the possibility of bedside scanning, but the lack of soft-tissue detail means MRI may be preferred for some conditions.

Advantages and Concerns of Neuroimaging Techniques

Functional Magnetic Resonance Imaging (fMRI)

fMRI is commonly classified as minimal-to-moderate risk because it is non-invasive compared with other imaging methods. fMRI uses blood oxygenation level dependent (BOLD) contrast to produce its images. Because BOLD contrast arises from a naturally occurring process in the body, fMRI is often preferred over imaging methods that require radioactive markers to produce similar images.[23] A concern with fMRI is its use in individuals with medical implants or devices, or with metallic items in the body. If patients are not properly screened, the magnetic fields produced by the MR equipment can cause medical devices to fail and can attract metallic objects in the body. Currently, the FDA classifies medical implants and devices into three categories, depending on MR-compatibility: MR-safe (safe in all MR environments), MR-unsafe (unsafe in any MR environment), and MR-conditional (MR-compatible in certain environments, requiring further information).[24]

FDA MR safety labels for implants and devices


MR Safe[25]