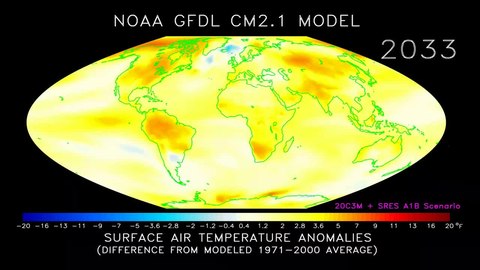

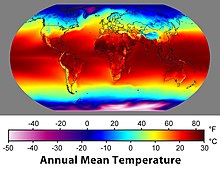

The Earth Simulator (ES) (地球シミュレータ, Chikyū Shimyurēta), developed under the Japanese government's "Earth Simulator Project" initiative, was a highly parallel vector supercomputer system for running global climate models to evaluate the effects of global warming and to study problems in solid earth geophysics. The system was developed for the Japan Aerospace Exploration Agency, the Japan Atomic Energy Research Institute, and the Japan Marine Science and Technology Center (JAMSTEC) in 1997.

Construction started in October 1999, and the site officially opened on 11 March 2002. The project cost 60 billion yen.

Built by NEC, ES was based on their SX-6 architecture. It consisted of 640 nodes with eight vector processors and 16 gigabytes of memory at each node, for a total of 5120 processors and 10 terabytes of memory. Two nodes were installed per 1 metre × 1.4 metre × 2 metre cabinet. Each cabinet consumed 20 kW of power. The system had 700 terabytes of disk storage (450 for the system and 250 for the users) and 1.6 petabytes of mass storage in tape drives. It was able to run holistic simulations of global climate in both the atmosphere and the oceans down to a resolution of 10 km. Its performance on the LINPACK benchmark was 35.86 TFLOPS, which was almost five times faster than the previous fastest supercomputer, ASCI White. As of 2020, comparable performance can be achieved by using four Nvidia A100 GPUs, each with 9.746 TFLOPS of FP64 performance.
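The totals quoted above follow from the per-node figures. The short sketch below reproduces that arithmetic; the variable names are illustrative, 1 TB is taken as 1024 GB, and the cabinet power total assumes every processor-node cabinet draws the quoted 20 kW.

```python
# Per-node and per-cabinet figures for the original ES, as quoted in the text.
NODES = 640
PROCESSORS_PER_NODE = 8
MEMORY_PER_NODE_GB = 16
NODES_PER_CABINET = 2
CABINET_POWER_KW = 20

total_processors = NODES * PROCESSORS_PER_NODE         # 5120 processors
total_memory_tb = NODES * MEMORY_PER_NODE_GB / 1024    # 10.0 TB (assuming 1 TB = 1024 GB)
node_cabinets = NODES // NODES_PER_CABINET             # 320 processor-node cabinets
node_power_mw = node_cabinets * CABINET_POWER_KW / 1000  # 6.4 MW (assumes 20 kW each)

# Combined FP64 rate of four A100 GPUs at the quoted per-GPU figure.
# This is a peak figure, whereas 35.86 TFLOPS is a measured LINPACK result.
a100_total_tflops = 4 * 9.746

print(total_processors, total_memory_tb, node_cabinets, node_power_mw)
print(round(a100_total_tflops, 3))
```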

ES was the fastest supercomputer in the world from 2002 to 2004. Its capacity was surpassed by IBM's Blue Gene/L prototype on 29 September 2004.

ES was replaced by the Earth Simulator 2 (ES2) in March 2009. ES2 is an NEC SX-9/E system, and has a quarter as many nodes, each with 12.8 times the performance (3.2× clock speed, four times the processing resource per node), for a peak performance of 131 TFLOPS. With a delivered LINPACK performance of 122.4 TFLOPS, ES2 was the most efficient supercomputer in the world at that point. In November 2010, NEC announced that ES2 topped the Global FFT, one of the measures of the HPC Challenge Awards, with a performance of 11.876 TFLOPS.

ES2 was replaced by the Earth Simulator 3 (ES3) in March 2015. ES3 is an NEC SX-ACE system with 5120 nodes and a performance of 1.3 PFLOPS.

ES3, from 2017 to 2018, ran alongside Gyoukou, a supercomputer with immersion cooling that can achieve up to 19 PFLOPS.

System overview

Hardware

The Earth Simulator (ES for short) was developed as a national project by three governmental agencies: the National Space Development Agency of Japan (NASDA), the Japan Atomic Energy Research Institute (JAERI), and the Japan Marine Science and Technology Center (JAMSTEC). The ES is housed in the Earth Simulator Building (approx. 50 m × 65 m × 17 m). The Earth Simulator 2 (ES2) uses 160 nodes of NEC's SX-9/E. The upgrade of the Earth Simulator was completed in March 2015. The Earth Simulator 3 (ES3) system uses 5120 nodes of NEC's SX-ACE.

System configuration

The ES is a highly parallel vector supercomputer system of the distributed-memory type, consisting of 160 processor nodes (PNs) connected by a fat-tree network. Each processor node is a shared-memory system consisting of 8 vector-type arithmetic processors (APs) and a 128 GB main memory system. The peak performance of each arithmetic processor is 102.4 GFLOPS. The ES as a whole thus consists of 1280 arithmetic processors with 20 TB of main memory and a theoretical peak performance of 131 TFLOPS.
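The system-wide figures in this paragraph can be derived from the per-node values. The sketch below shows the calculation; the constant names are illustrative and 1 TB is taken as 1024 GB.

```python
# Per-node figures for the 160-node configuration described above.
NODES = 160
APS_PER_NODE = 8              # arithmetic processors per processor node
MEMORY_PER_NODE_GB = 128
PEAK_PER_AP_GFLOPS = 102.4

total_aps = NODES * APS_PER_NODE                            # 1280 processors
total_memory_tb = NODES * MEMORY_PER_NODE_GB / 1024         # 20.0 TB (assuming 1 TB = 1024 GB)
peak_per_node_gflops = APS_PER_NODE * PEAK_PER_AP_GFLOPS    # 819.2 GFLOPS per node
total_peak_tflops = total_aps * PEAK_PER_AP_GFLOPS / 1000   # 131.072 TFLOPS

print(total_aps, total_memory_tb)
print(round(peak_per_node_gflops, 1), round(total_peak_tflops, 3))
```

The unrounded peak, 131.072 TFLOPS, is the value used later in the article as the denominator of the LINPACK computing efficiency.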

Construction of CPU

Each CPU consists of a 4-way super-scalar unit (SU), a vector unit (VU), and a main memory access control unit on a single LSI chip. The CPU operates at a clock frequency of 3.2 GHz. Each VU has 72 vector registers, each of which holds 256 vector elements, along with 8 sets of six different types of vector pipelines: addition/shifting, multiplication, division, logical operations, masking, and load/store. Vector pipelines of the same type work together on a single vector instruction, and pipelines of different types can operate concurrently.
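As a rough consistency check, the clock frequency here can be combined with the 102.4 GFLOPS per-processor peak quoted under System configuration to get the number of floating-point operations per clock cycle. The sketch also sizes the vector register file. The 8-byte element width is an assumption, since the text does not state it.

```python
CLOCK_HZ = 3.2e9              # CPU clock frequency from the text
PEAK_PER_CPU_FLOPS = 102.4e9  # per-processor peak from the system configuration
VECTOR_REGISTERS = 72
ELEMENTS_PER_REGISTER = 256
BYTES_PER_ELEMENT = 8         # assumption: 64-bit vector elements

# Peak floating-point operations completed per clock cycle by one CPU.
flops_per_cycle = PEAK_PER_CPU_FLOPS / CLOCK_HZ                      # 32.0

# Capacity of the vector register file of one vector unit.
register_elements = VECTOR_REGISTERS * ELEMENTS_PER_REGISTER         # 18432 elements
register_file_kib = register_elements * BYTES_PER_ELEMENT / 1024     # 144.0 KiB (under the assumption above)

print(flops_per_cycle, register_elements, register_file_kib)
```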

Processor Node (PN)

The processor node is composed of 8 CPUs and 10 memory modules.

Interconnection Network (IN)

The RCU (remote access control unit) is directly connected to the crossbar switches and controls inter-node data communications at a bidirectional transfer rate of 64 GB/s for both sending and receiving data. Thus the total bandwidth of the inter-node network is about 10 TB/s.
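The total follows from multiplying the per-node rate by the number of nodes. The sketch below assumes the 160-node configuration described earlier and takes 1 TB as 1024 GB.

```python
NODES = 160            # assumption: the 160-node configuration described earlier
PER_NODE_GB_PER_S = 64

total_gb_per_s = NODES * PER_NODE_GB_PER_S   # 10240 GB/s
total_tb_per_s = total_gb_per_s / 1024       # 10.0 TB/s (assuming 1 TB = 1024 GB)

print(total_gb_per_s, total_tb_per_s)
```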

Processor Node (PN) Cabinet

Two processor nodes are housed in one cabinet, which consists of a power supply part, 8 memory modules, and a PCI box with 8 CPU modules.

Software

The following describes the software technologies used in the operating system, job scheduling, and programming environment of ES2.

Operating system

The operating system running on ES, "Earth Simulator Operating System", is a custom version of NEC's SUPER-UX used for the NEC SX supercomputers that make up ES.

Mass storage file system

If a large parallel job running on 640 PNs reads from or writes to a single disk installed in one PN, each PN accesses the disk in sequence and performance degrades severely. Local I/O, in which each PN reads from or writes to its own disk, solves this problem, but managing such a large number of partial files is very hard work. ES therefore adopts staging and a Global File System (GFS), which offers high-speed I/O performance.

Job scheduling

ES is basically a batch-job system. Network Queuing System II (NQSII) is used to manage batch jobs. ES has two types of queues: the S batch queue, designed for single-node batch jobs, and the L batch queue, for multi-node batch jobs. The S batch queue is intended for pre-runs or post-runs of large-scale batch jobs (preparing initial data, processing the results of a simulation, and other processes), and the L batch queue is for production runs. Users choose the appropriate queue for their job.

- The nodes allocated to a batch job are used exclusively for that batch job.

- The batch job is scheduled based on elapsed time instead of CPU time.

The first strategy makes it possible to estimate the job termination time and makes it easy to allocate nodes for subsequent batch jobs in advance. The second strategy contributes to efficient job execution. The job can use the nodes exclusively, and the processes in each node can be executed simultaneously. As a result, large-scale parallel programs can be executed efficiently. PNs of the L-system are prohibited from accessing the user disk to ensure sufficient disk I/O performance. Therefore, the files used by the batch job are copied from the user disk to the work disk before the job is executed. This process is called "stage-in." It is important to hide this staging time in the job scheduling. The main steps of job scheduling are summarized as follows:

- Node Allocation

- Stage-in (copies files from the user disk to the work disk automatically)

- Job Escalation (rescheduling for the earlier estimated start time if possible)

- Job Execution

- Stage-out (copies files from the work disk to the user disk automatically)

When a new batch job is submitted, the scheduler searches for available nodes (step 1). After the nodes and the estimated start time are allocated to the batch job, the stage-in process starts (step 2). Once stage-in has finished, the job waits until the estimated start time. If the scheduler finds a start time earlier than the estimated start time, it allocates the new start time to the batch job. This process is called "job escalation" (step 3). When the estimated start time arrives, the scheduler executes the batch job (step 4). The scheduler terminates the batch job and starts the stage-out process after the job execution is finished or the declared elapsed time is over (step 5). To execute a batch job, the user logs into the login server, submits the batch script to ES, and waits until the job execution is done. During that time, the user can check the state of the batch job using a conventional web browser or user commands. Node scheduling, file staging, and other processing are handled automatically by the system according to the batch script.
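The five steps can be illustrated with a small model of one job's timeline. This is only a sketch of the logic described above, not NQSII itself: the `BatchJob` class, the `schedule` function, and all parameters are hypothetical, and times are plain integers in minutes.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class BatchJob:
    """Hypothetical batch job record (not an NQSII data structure)."""
    name: str
    nodes: int
    declared_elapsed: int   # declared elapsed-time limit, in minutes
    stage_in_minutes: int   # time needed to copy files to the work disk
    estimated_start: Optional[int] = None
    history: List[str] = field(default_factory=list)


def schedule(job: BatchJob, now: int, earliest_free: int,
             escalated_free: Optional[int] = None) -> Tuple[int, int]:
    """Walk one job through the five steps and return (start, latest end).

    earliest_free:  time at which enough nodes are first expected to be free.
    escalated_free: an earlier slot that opens up while the job waits, if any.
    """
    # Step 1: node allocation fixes an estimated start time that leaves
    # enough room for stage-in to complete.
    stage_in_done = now + job.stage_in_minutes
    job.estimated_start = max(earliest_free, stage_in_done)
    job.history.append("node allocation")

    # Step 2: stage-in runs while the job waits, hiding the staging time.
    job.history.append("stage-in")

    # Step 3: job escalation moves the start earlier if a slot opens up,
    # but never before stage-in has finished.
    if escalated_free is not None:
        new_start = max(escalated_free, stage_in_done)
        if new_start < job.estimated_start:
            job.estimated_start = new_start
            job.history.append("job escalation")

    # Step 4: execution. Scheduling on elapsed time means the latest
    # possible end time is known in advance.
    start = job.estimated_start
    latest_end = start + job.declared_elapsed
    job.history.append("job execution")

    # Step 5: stage-out copies results back to the user disk.
    job.history.append("stage-out")
    return start, latest_end


job = BatchJob("production-run", nodes=64, declared_elapsed=120, stage_in_minutes=10)
print(schedule(job, now=0, earliest_free=60, escalated_free=30))  # (30, 150)
print(job.history)
```

Because the job is bounded by its declared elapsed time, the scheduler in this model knows when the nodes will be free again at the latest, which is what allows nodes to be reserved for later jobs in advance.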

Programming environment

Programming model in ES

The ES hardware has a 3-level hierarchy of parallelism: vector processing in an AP, parallel processing with shared memory in a PN, and parallel processing among PNs via the IN. To bring out the full performance of ES, you must develop parallel programs that make the most of this parallelism. The 3-level hierarchy can be used in two ways, called hybrid and flat parallelization. In hybrid parallelization, inter-node parallelism is expressed by HPF or MPI and intra-node parallelism by microtasking or OpenMP, so you must consider the hierarchical parallelism when writing your programs. In flat parallelization, both inter- and intra-node parallelism can be expressed by HPF or MPI, and you do not need to consider such complicated parallelism. Generally speaking, hybrid parallelization is superior to flat parallelization in performance, and flat parallelization is superior in ease of programming. Note that the MPI libraries and the HPF runtimes are optimized to perform as well as possible in both hybrid and flat parallelization.
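The difference between the two styles can be pictured as two ways of covering the same processors. The sketch below is illustrative only: the `Layout` class and helper functions are hypothetical, and it assumes 8 APs per PN as stated in the system configuration.

```python
from dataclasses import dataclass

APS_PER_NODE = 8  # arithmetic processors per processor node


@dataclass(frozen=True)
class Layout:
    processes: int            # MPI (or HPF) processes
    threads_per_process: int  # microtasks or OpenMP threads inside each process


def flat_layout(nodes: int) -> Layout:
    # Flat: one process per AP, so message passing covers both
    # inter-node and intra-node parallelism.
    return Layout(processes=nodes * APS_PER_NODE, threads_per_process=1)


def hybrid_layout(nodes: int) -> Layout:
    # Hybrid: one process per PN, with the 8 APs of that node driven by
    # shared-memory threads (microtasking or OpenMP).
    return Layout(processes=nodes, threads_per_process=APS_PER_NODE)


def processors_used(layout: Layout) -> int:
    return layout.processes * layout.threads_per_process


for make in (flat_layout, hybrid_layout):
    layout = make(4)
    print(make.__name__, layout, processors_used(layout))
```

Both layouts occupy the same number of processors. What differs is how many processes take part in message passing and whether the programmer has to manage two levels of parallelism.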

Languages

Compilers for Fortran 90, C and C++ are available. All of them have advanced capabilities for automatic vectorization and microtasking. Microtasking is a form of multitasking first provided on Cray's supercomputers and is also used for intra-node parallelization on ES. Microtasking can be controlled by inserting directives into source programs or by using the compiler's automatic parallelization. (Note that OpenMP is also available in Fortran 90 and C++ for intra-node parallelization.)

Parallelization

Message Passing Interface (MPI)

MPI is a message passing library based on the MPI-1 and MPI-2 standards and provides high-speed communication capability that fully exploits the features of the IXS (internode crossbar switch) and shared memory. It can be used for both intra- and inter-node parallelization. An MPI process is assigned to an AP in flat parallelization, or to a PN that contains microtasks or OpenMP threads in hybrid parallelization. The MPI libraries are carefully designed and optimized to achieve the highest communication performance on the ES architecture in both parallelization styles.

High Performance Fortran (HPF)

The principal users of ES are considered to be natural scientists who are not necessarily familiar with parallel programming, or who would rather avoid it. Accordingly, a higher-level parallel language is in great demand. HPF/SX provides easy and efficient parallel programming on ES to meet this demand. It supports the specifications of HPF 2.0, its approved extensions, HPF/JA, and some unique extensions for ES.

Tools

- Integrated development environment (PSUITE)

The integrated development environment (PSUITE) integrates various tools for developing programs that run on SUPER-UX. Because PSUITE makes these tools available through a GUI and coordinates functions between them, programs can be developed more efficiently and easily than with earlier development methods.

- Debug Support

SUPER-UX provides powerful debug support functions to assist program development.

Facilities

Features of the Earth Simulator building

Protection from natural disasters

The Earth Simulator Center has several special features that help to protect the computer from natural disasters or occurrences. A wire nest hangs over the building to help protect it from lightning. The nest itself uses high-voltage shielded cables to release lightning current into the ground. A special light propagation system uses halogen lamps, installed outside the shielded machine room walls, to prevent any magnetic interference from reaching the computers. The building is constructed on a seismic isolation system, composed of rubber supports, that protects the building during earthquakes.

Lightning protection system

Three basic features:

- Four poles at both sides of the Earth Simulator Building form a wire nest to protect the building from lightning strikes.

- A special high-voltage shielded cable is used for the inductive wire, which releases lightning current to the earth.

- Ground plates are laid about 10 meters away from the building.

Illumination

- Lighting: light propagation system inside a tube (255 mm diameter, 44 m (48 yd) length, 19 tubes)

- Light source: 1 kW halogen lamps

- Illumination: 300 lx on average at the floor

- The light sources are installed outside the shielded machine room walls.

Seismic isolation system

11 isolators (1 ft in height, 3.3 ft in diameter, 20-layered rubber) support the bottom of the ES building.

Performance

LINPACK

The new Earth Simulator system, which began operation in March 2009, achieved sustained performance of 122.4 TFLOPS and computing efficiency (*2) of 93.38% on the LINPACK Benchmark (*1).

- 1. LINPACK Benchmark

The LINPACK Benchmark is a measure of a computer's performance and is used as a standard benchmark to rank computer systems in the TOP500 project. LINPACK is a program for performing numerical linear algebra on computers.

- 2. Computing efficiency

Computing efficiency is the ratio of sustained performance to peak computing performance. Here, it is the ratio of 122.4 TFLOPS to 131.072 TFLOPS.
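The quoted efficiency can be reproduced directly from these two figures (the variable names are illustrative):

```python
sustained_tflops = 122.4   # LINPACK result
peak_tflops = 131.072      # theoretical peak performance

efficiency_percent = sustained_tflops / peak_tflops * 100
print(f"{efficiency_percent:.2f}%")  # 93.38%
```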

Computational performance of WRF on Earth Simulator

WRF (Weather Research and Forecasting Model) is a mesoscale meteorological simulation code developed through collaboration among US institutions, including NCAR (National Center for Atmospheric Research) and NCEP (National Centers for Environmental Prediction). JAMSTEC optimized WRFV2 on the Earth Simulator (ES2), renewed in 2009, and measured its computational performance. The results demonstrated that WRFV2 can run on ES2 with outstanding sustained performance.

The numerical meteorological simulation was conducted using WRF on the Earth Simulator for the earth's hemisphere under the Nature Run model conditions. The model grid is 4486 by 4486 points horizontally, with a grid spacing of 5 km, and 101 levels vertically. Mostly adiabatic conditions were applied, with a time integration step of 6 seconds. Very high performance was achieved on the Earth Simulator for high-resolution WRF. Although the number of CPU cores used is only 1% of that of Jaguar (Cray XT5) at Oak Ridge National Laboratory, one of the world's fastest systems, the sustained performance obtained on the Earth Simulator is almost 50% of that measured on Jaguar. The ratio of sustained to peak performance on the Earth Simulator is also a record-high 22.2%.
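To give a sense of the problem size, the sketch below works out the number of grid points and time steps implied by the figures above. It is plain arithmetic on the quoted values, and the variable names are illustrative.

```python
NX = NY = 4486        # horizontal grid points in each direction
NZ = 101              # vertical levels
TIME_STEP_S = 6       # time integration step, in seconds

grid_points = NX * NY * NZ                  # 2,032,543,796 (about 2 billion)
steps_per_hour = 3600 // TIME_STEP_S        # 600 steps per simulated hour
steps_per_day = 24 * steps_per_hour         # 14,400 steps per simulated day

# Grid-point updates needed to advance the whole model by one simulated hour.
point_updates_per_hour = grid_points * steps_per_hour

print(f"{grid_points:,} grid points")
print(steps_per_hour, steps_per_day)
print(f"{point_updates_per_hour:,} point updates per simulated hour")
```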