| Enabling Act of 1933 | |

|---|---|

| |

| Reichstag (Weimar Republic) | |

| Passed | 23 March 1933 |

|---|---|

| Enacted | 23 March 1933 |

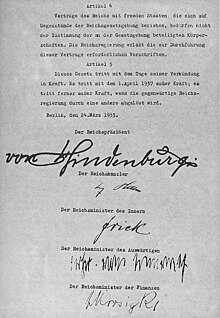

| Signed | 23 March 1933 |

| Signed by | Paul von Hindenburg |

| Commenced | 23 March 1933 |

| Repealed by | |

| Control Council Law No. 1 - Repealing of Nazi Laws | |

| Status: Repealed | |


The Enabling Act (German: Ermächtigungsgesetz) of 1933, officially titled Gesetz zur Behebung der Not von Volk und Reich ("Law to Remedy the Distress of People and Reich"), was a law that gave the German Cabinet, and most importantly the Chancellor, the power to make and enforce laws without the involvement of the Reichstag and without consulting Weimar President Paul von Hindenburg. Critically, the Enabling Act allowed the Chancellor to bypass the government's system of checks and balances, and laws made under it could explicitly violate individual rights prescribed in the Weimar Constitution.

In January 1933 Nazi Party leader Adolf Hitler convinced President Paul von Hindenburg to appoint him as chancellor, the head of the German government. Four weeks into his chancellorship, the Reichstag building caught fire in the middle of the night. Hitler blamed the incident on the communists and was convinced the arson was part of a larger effort to overthrow the German government; he then persuaded Hindenburg to enact the Reichstag Fire Decree. The decree abolished most civil liberties, including the rights to speak, assemble, and protest, and the right to due process. Using the decree, the Nazis declared a state of emergency and began to arrest, intimidate, and purge their political enemies. Communists and labor union leaders were the first to be arrested and interned in the first Nazi concentration camps. Having cleared the political arena of anyone willing to challenge him, Hitler submitted a proposal to the Reichstag that would immediately grant all legislative powers to the cabinet. This would in effect allow Hitler's government to act without regard to the constitution.

Despite the outlawing of the communists and the repression of other opponents, the passage of the Enabling Act was not guaranteed. Hitler allied with other nationalist and conservative factions, and they steamrolled over the Social Democrats in the German federal election of 5 March 1933. That election would be the last multiparty election held in a united Germany until 1990, fifty-seven years later, and occurred in an atmosphere of extreme voter intimidation on the part of the Nazis. Contrary to popular belief, Adolf Hitler did not command a majority in the Reichstag that voted on the Enabling Act. The majority of Germans did not vote for the Nazi Party, as Hitler's total vote was less than 45% despite the terror and fear fomented by his repression. To get the Enabling Act passed, the Nazis implemented a strategy of coercion, bribery, and manipulation. Hitler removed any remaining political obstacles so that his coalition of conservatives, nationalists, and National Socialists could build the Nazi dictatorship. The Communists had already been repressed and were not allowed to be present or to vote, and some Social Democrats were kept away as well. In the end most of those present voted for the act, except for the Social Democrats, who all voted against it.

The act passed in both the Reichstag and Reichsrat on 23 March 1933, and was signed by President Paul von Hindenburg later that day. Unless extended by the Reichstag, the act would expire after four years. With the Enabling Act now in force, the chancellor could pass and enforce unconstitutional laws without any objection. The combined effect of the Enabling Act and the Reichstag Fire Decree ultimately transformed Hitler's cabinet into a legal dictatorship and laid the groundwork for his totalitarian regime. Armed with the Enabling Act, the Nazis dramatically escalated political repression and outlawed all other political activity; by July, the Nazi Party was the only legal party. The Reichstag from 1933 onward effectively became the rubber-stamp parliament that Hitler had always wanted. The Enabling Act would be renewed twice and was rendered null once Nazi Germany fell to the Allies in 1945.

The passing of the Enabling Act is significant in German and world history as it marked the formal transition from the democratic Weimar Republic to the totalitarian Nazi dictatorship. From 1933 onwards Hitler continued to consolidate and centralize power via purges and propaganda. The Hitlerian purges reached their height with the Night of the Long Knives. Once the purges of the Nazi Party and German government concluded, Hitler had total control and authority over Germany and began the process of rearmament. Thus began the political and military struggles that ultimately culminated in the Second World War.

Background

After being appointed Chancellor of Germany on 30 January 1933, Hitler asked President von Hindenburg to dissolve the Reichstag. A general election was scheduled for 5 March 1933. A secret meeting was held between Hitler and 20 to 25 industrialists at the official residence of Hermann Göring in the Reichstag Presidential Palace, aimed at financing the election campaign of the Nazi Party.

The burning of the Reichstag, depicted by the Nazis as the beginning of a communist revolution, resulted in the presidential Reichstag Fire Decree, which among other things suspended freedom of press and habeas corpus rights just five days before the election. Hitler used the decree to have the Communist Party's offices raided and its representatives arrested, effectively eliminating them as a political force.

Although they received five million more votes than in the previous election, the Nazis failed to gain an absolute majority in parliament, and depended on the 8% of seats won by their coalition partner, the German National People's Party, to reach 52% in total.

To free himself from this dependency, Hitler had the cabinet, in its first post-election meeting on 15 March, draw up plans for an Enabling Act which would give the cabinet legislative power for four years. The Nazis devised the Enabling Act to gain complete political power without needing the support of a majority in the Reichstag and without needing to bargain with their coalition partners. The Nazi regime differed from its contemporaries, most famously that of Joseph Stalin, in that Hitler did not seek to draft a completely new constitution, whereas Stalin did. Technically the Weimar Constitution of 1919 remained in effect even after the Enabling Act, only losing force when Berlin fell to the Soviet Union in 1945 and Germany surrendered.

Preparations and negotiations

The Enabling Act allowed the National Ministry (essentially the cabinet) to enact legislation, including laws deviating from or altering the constitution, without the consent of the Reichstag. Because this law allowed for departures from the constitution, it was itself considered a constitutional amendment. Thus, its passage required the support of two-thirds of those deputies who were present and voting. A quorum of two-thirds of the entire Reichstag was required to be present in order to call up the bill.

The Social Democrats (SPD) and the Communists (KPD) were expected to vote against the Act. The government had already arrested all Communist and some Social Democrat deputies under the Reichstag Fire Decree. The Nazis expected the parties representing the middle class, the Junkers and business interests to vote for the measure, as they had grown weary of the instability of the Weimar Republic and would not dare to resist.

Hitler believed that with the Centre Party members' votes, he would get the necessary two-thirds majority. Hitler negotiated with the Centre Party's chairman, Ludwig Kaas, a Catholic priest, finalizing an agreement by 22 March. Kaas agreed to support the Act in exchange for assurances of the Centre Party's continued existence, the protection of Catholics' civil and religious liberties, religious schools and the retention of civil servants affiliated with the Centre Party. It has also been suggested that some members of the SPD were intimidated by the presence of the Nazi Sturmabteilung (SA) throughout the proceedings.

Some historians, such as Klaus Scholder, have maintained that Hitler also promised to negotiate a Reichskonkordat with the Holy See, a treaty that formalized the position of the Catholic Church in Germany on a national level. Kaas was a close associate of Cardinal Pacelli, then Vatican Secretary of State (and later Pope Pius XII). Pacelli had been pursuing a German concordat as a key policy for some years, but the instability of Weimar governments as well as the enmity of some parties to such a treaty had blocked the project. The day after the Enabling Act vote, Kaas went to Rome in order to, in his own words, "investigate the possibilities for a comprehensive understanding between church and state". However, so far no evidence for a link between the Enabling Act and the Reichskonkordat signed on 20 July 1933 has surfaced.

Text

As with most of the laws passed in the process of Gleichschaltung, the Enabling Act is quite short, especially considering its implications. The full text, in German and English, follows:

| Gesetz zur Behebung der Not von Volk und Reich | Law to Remedy the Distress of the People and the Reich |
|---|---|
| Der Reichstag hat das folgende Gesetz beschlossen, das mit Zustimmung des Reichsrats hiermit verkündet wird, nachdem festgestellt ist, daß die Erfordernisse verfassungsändernder Gesetzgebung erfüllt sind: | The Reichstag has enacted the following law, which is hereby proclaimed with the assent of the Reichsrat, it having been established that the requirements for a constitutional amendment have been fulfilled: |
| Artikel 1 | Article 1 |
| Reichsgesetze können außer in dem in der Reichsverfassung vorgesehenen Verfahren auch durch die Reichsregierung beschlossen werden. Dies gilt auch für die in den Artikeln 85 Abs. 2 und 87 der Reichsverfassung bezeichneten Gesetze. | In addition to the procedure prescribed by the constitution, laws of the Reich may also be enacted by the government of the Reich. This includes the laws referred to by Articles 85 Paragraph 2 and Article 87 of the constitution. |
| Artikel 2 | Article 2 |
| Die von der Reichsregierung beschlossenen Reichsgesetze können von der Reichsverfassung abweichen, soweit sie nicht die Einrichtung des Reichstags und des Reichsrats als solche zum Gegenstand haben. Die Rechte des Reichspräsidenten bleiben unberührt. | Laws enacted by the government of the Reich may deviate from the constitution as long as they do not affect the institutions of the Reichstag and the Reichsrat. The rights of the President remain unaffected. |
| Artikel 3 | Article 3 |
| Die von der Reichsregierung beschlossenen Reichsgesetze werden vom Reichskanzler ausgefertigt und im Reichsgesetzblatt verkündet. Sie treten, soweit sie nichts anderes bestimmen, mit dem auf die Verkündung folgenden Tage in Kraft. Die Artikel 68 bis 77 der Reichsverfassung finden auf die von der Reichsregierung beschlossenen Gesetze keine Anwendung. | Laws enacted by the Reich government shall be issued by the Chancellor and announced in the Reich Gazette. They shall take effect on the day following the announcement, unless they prescribe a different date. Articles 68 to 77 of the Constitution do not apply to laws enacted by the Reich government. |
| Artikel 4 | Article 4 |
| Verträge des Reiches mit fremden Staaten, die sich auf Gegenstände der Reichsgesetzgebung beziehen, bedürfen für die Dauer der Geltung dieser Gesetze nicht der Zustimmung der an der Gesetzgebung beteiligten Körperschaften. Die Reichsregierung erläßt die zur Durchführung dieser Verträge erforderlichen Vorschriften. | Treaties of the Reich with foreign states, which relate to matters of Reich legislation, shall for the duration of the validity of these laws not require the consent of the legislative authorities. The Reich government shall enact the legislation necessary to implement these agreements. |
| Artikel 5 | Article 5 |
| Dieses Gesetz tritt mit dem Tage seiner Verkündung in Kraft. Es tritt mit dem 1. April 1937 außer Kraft; es tritt ferner außer Kraft, wenn die gegenwärtige Reichsregierung durch eine andere abgelöst wird. | This law enters into force on the day of its proclamation. It expires on 1 April 1937; it expires furthermore if the present Reich government is replaced by another. |

Articles 1 and 4 gave the government the right to draw up the budget and approve treaties without input from the Reichstag.

Passage

Debate within the Centre Party continued until the day of the vote, 23 March 1933, with Kaas advocating voting in favour of the act, referring to an upcoming written guarantee from Hitler, while former Chancellor Heinrich Brüning called for a rejection of the Act. The majority sided with Kaas, and Brüning agreed to maintain party discipline by voting for the Act.

The Reichstag, led by its President, Hermann Göring, changed its rules of procedure to make it easier to pass the bill. Under the Weimar Constitution, a quorum of two-thirds of the entire Reichstag membership was required to be present in order to bring up a constitutional amendment bill. In this case, 432 of the Reichstag's 647 deputies would have normally been required for a quorum. However, Göring reduced the quorum to 378 by not counting the 81 KPD deputies. Despite the virulent rhetoric directed against the Communists, the Nazis did not formally ban the KPD right away. Not only did they fear a violent uprising, but they hoped the KPD's presence on the ballot would siphon off votes from the SPD. However, it was an open secret that the KPD deputies would never be allowed to take their seats; they were thrown in jail as quickly as the police could track them down. Courts began taking the line that since the Communists were responsible for the fire, KPD membership was an act of treason. Thus, for all intents and purposes, the KPD was banned as of 6 March, the day after the election.
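The quorum figures quoted above follow from rounding two-thirds of the counted membership up to a whole deputy. The following is a minimal Python sketch of that arithmetic only; the function name `quorum` and its parameters are illustrative assumptions, not terms from the Reichstag's rules of procedure.

```python
def quorum(members: int, excluded: int = 0) -> int:
    """Smallest whole number of deputies that is at least two-thirds
    of the membership counted for quorum purposes.

    `excluded` is a hypothetical parameter for deputies left out of the
    count, such as the 81 KPD deputies in the account above.
    """
    counted = members - excluded
    # Integer ceiling of (2 * counted) / 3, avoiding floating-point rounding.
    return (2 * counted + 2) // 3


normal_quorum = quorum(647)        # full membership counted
reduced_quorum = quorum(647, 81)   # KPD deputies not counted

print(normal_quorum, reduced_quorum)
```

With the figures from the text, this gives 432 for the full membership of 647 and 378 once the 81 KPD deputies are left out of the count.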

Göring also declared that any deputy who was "absent without excuse" was to be considered as present, in order to overcome obstructions. Leaving nothing to chance, the Nazis used the provisions of the Reichstag Fire Decree to detain several SPD deputies. A few others saw the writing on the wall and fled into exile.

Later that day, the Reichstag assembled under intimidating circumstances, with SA men swarming inside and outside the chamber. Hitler's speech, which emphasised the importance of Christianity in German culture, was aimed particularly at appeasing the Centre Party's sensibilities and incorporated Kaas' requested guarantees almost verbatim. Kaas gave a speech, voicing the Centre's support for the bill amid "concerns put aside", while Brüning notably remained silent.

Only SPD chairman Otto Wels spoke against the Act, declaring that the proposed bill could not "destroy ideas which are eternal and indestructible." Kaas had still not received the written constitutional guarantees he had negotiated, but with the assurance it was being "typed up", voting began. Kaas never received the letter.

At this stage, the majority of deputies already supported the bill, and any deputies who might have been reluctant to vote in favour were intimidated by the SA troops surrounding the meeting. In the end, all parties except the SPD voted in favour of the Enabling Act. With the KPD banned and 26 SPD deputies arrested or in hiding, the final tally was 444 in favour of the Enabling Act against 94 (all Social Democrats) opposed. The Reichstag had adopted the Enabling Act with the support of 83% of the deputies. The session took place under such intimidating conditions that even if all SPD deputies had been present, it would have still passed with 78.7% support. The same day in the evening, the Reichsrat also gave its approval, unanimously and without prior discussion. The Act was then signed into law by President Hindenburg.

| Party | Deputies | For | Against | Absent |
|---|---|---|---|---|
| NSDAP | 288 | 288 | – | – |
| SPD | 120 | – | 94 | 26 |
| KPD | 81 | – | – | 81 |
| Centre | 73 | 72 | – | 1 |
| DNVP | 52 | 52 | – | – |
| BVP | 19 | 19 | – | – |
| DStP | 5 | 5 | – | – |
| CSVD | 4 | 4 | – | – |
| DVP | 2 | 1 | – | 1 |
| DBP | 2 | 2 | – | – |
| Landbund | 1 | 1 | – | – |
| Total | 647 | 444 | 94 | 109 |
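The totals in the table and the percentages quoted in the preceding paragraph can be checked with a short calculation. This Python sketch is only an arithmetic cross-check of the published figures; the names `TALLY`, `column_totals` and `support_percent` are illustrative, and the hypothetical case assumes, as the text does, that every absent SPD deputy would have voted against.

```python
# Per-party figures from the table: (deputies, for, against, absent).
TALLY = {
    "NSDAP": (288, 288, 0, 0),
    "SPD": (120, 0, 94, 26),
    "KPD": (81, 0, 0, 81),
    "Centre": (73, 72, 0, 1),
    "DNVP": (52, 52, 0, 0),
    "BVP": (19, 19, 0, 0),
    "DStP": (5, 5, 0, 0),
    "CSVD": (4, 4, 0, 0),
    "DVP": (2, 1, 0, 1),
    "DBP": (2, 2, 0, 0),
    "Landbund": (1, 1, 0, 0),
}


def column_totals(tally):
    """Sum each column (deputies, for, against, absent) across all parties."""
    return tuple(sum(column) for column in zip(*tally.values()))


def support_percent(votes_for, votes_cast):
    """Share of votes cast that were in favour, as a percentage."""
    return 100 * votes_for / votes_cast


deputies, votes_for, votes_against, absent = column_totals(TALLY)

# Actual vote: only deputies present and voting are counted.
actual = support_percent(votes_for, votes_for + votes_against)

# Hypothetical: all 120 SPD deputies present and voting against.
spd_seats = TALLY["SPD"][0]
with_full_spd = support_percent(votes_for, votes_for + spd_seats)

# The two-thirds requirement applies to those present and voting.
passed = 3 * votes_for >= 2 * (votes_for + votes_against)

print(f"{actual:.1f}% actual, {with_full_spd:.1f}% with all SPD deputies, passed={passed}")
```

The actual share comes to about 82.5%, which the text rounds to 83%, and the hypothetical share to about 78.7%; both are above the two-thirds threshold of roughly 66.7%.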

Consequences

Under the Act, the government had acquired the authority to enact laws without either parliamentary consent or control. These laws could (with certain exceptions) even deviate from the Constitution. The Act effectively eliminated the Reichstag as an active player in German politics. While the Reichstag's existence was protected by the Enabling Act, for all intents and purposes the Act reduced it to a mere stage for Hitler's speeches. It only met sporadically until the end of World War II, held no debates and enacted only a few laws. Within three months of the passage of the Enabling Act, all parties except the Nazi Party were banned or pressured into dissolving themselves, followed on 14 July by a law that made the Nazi Party the only legally permitted party in the country. With this, Hitler had fulfilled what he had promised in earlier campaign speeches: "I set for myself one aim ... to sweep these thirty parties out of Germany!"

During the negotiations between the government and the political parties, it was agreed that the government should inform the Reichstag parties of legislative measures passed under the Enabling Act. For this purpose, a working committee was set up, co-chaired by Hitler and Centre Party chairman Kaas. However, this committee met only three times without any major impact, and rapidly became a dead letter even before all other parties were banned.

Though the Act had formally given legislative powers to the government as a whole, these powers were for all intents and purposes exercised by Hitler himself. After its passage, there were no longer serious deliberations in Cabinet meetings. Its meetings became more and more infrequent after 1934, and it never met in full after 1938.

Due to the great care that Hitler took to give his dictatorship an appearance of legality, the Enabling Act was renewed twice, in 1937 and 1941. However, its renewal was practically assured since all other parties were banned. Voters were presented with a single list of Nazis and Nazi-approved "guest" candidates under far-from-secret conditions. In 1942, the Reichstag passed a law giving Hitler power of life and death over every citizen, effectively extending the provisions of the Enabling Act for the duration of the war.

Ironically, at least two, and possibly three, of the penultimate measures Hitler took to consolidate his power in 1934 violated the Enabling Act. In February 1934, the Reichsrat, representing the states, was abolished even though Article 2 of the Enabling Act specifically protected the existence of both the Reichstag and the Reichsrat. It can be argued that the Enabling Act had been breached two weeks earlier by the Law for the Reconstruction of the Reich, which transferred the states' powers to the Reich and effectively left the Reichsrat impotent. Article 2 stated that laws passed under the Enabling Act could not affect the institutions of either chamber.

In August, Hindenburg died, and Hitler seized the president's powers for himself in accordance with a law passed the previous day, an action confirmed via a referendum later that month. Article 2 stated that the president's powers were to remain "undisturbed" (or "unaffected", depending on the translation), which has long been interpreted to mean that it forbade Hitler from tampering with the presidency. A 1932 amendment to the constitution made the president of the High Court of Justice, not the chancellor, first in the line of succession to the presidency—and even then on an interim basis pending new elections. However, the Enabling Act provided no remedy for any violations of Article 2, and these actions were never challenged in court.

In the Federal Republic of Germany

Article 9 of the German Constitution, enacted in 1949, allows for social groups to be labeled verfassungsfeindlich ("hostile to the constitution") and to be proscribed by the federal government. Political parties can be labeled enemies to the constitution only by the Bundesverfassungsgericht (Federal Constitutional Court), according to Art. 21 II. The idea behind the concept is the notion that even a majority rule of the people cannot be allowed to install a totalitarian or autocratic regime such as with the Enabling Act of 1933, thereby violating the principles of the German constitution.

Validity

In his book, The Coming of the Third Reich, British historian Richard J. Evans argued that the Enabling Act was legally invalid. He contended that Göring had no right to arbitrarily reduce the quorum required to bring the bill up for a vote. While the Enabling Act only required the support of two-thirds of those present and voting, two-thirds of the entire Reichstag's membership had to be present in order for the legislature to consider a constitutional amendment. According to Evans, while Göring was not required to count the KPD deputies in order to get the Enabling Act passed, he was required to "recognize their existence" by counting them for purposes of the quorum needed to call it up, making his refusal to do so "an illegal act". (Even if the Communists had been present and voting, the session's atmosphere was so intimidating that the Act would have still passed with, at the very least, 68.7% support.) He also argued that the act's passage in the Reichsrat was tainted by the overthrow of the state governments under the Reichstag Fire Decree; as Evans put it, the states were no longer "properly constituted or represented", making the Enabling Act's passage in the Reichsrat "irregular".

Portrayal in films

The 2003 film Hitler: The Rise of Evil contains a scene portraying the passage of the Enabling Act. The portrayal in this film is inaccurate, with the provisions of the Reichstag Fire Decree (which in practice, as the name states, was a decree issued by President Hindenburg weeks before the Enabling Act) merged into the Act. Non-Nazi members of the Reichstag, including Vice-Chancellor von Papen, are shown objecting. In reality the Act met little resistance, with only the centre-left Social Democratic Party voting against passage.

This film also shows Hermann Göring, speaker of the house, beginning to sing the "Deutschlandlied". Nazi representatives then stand and immediately join in with Göring, and all other party members join in too, with everyone performing the Hitler salute. In reality, this never happened.
