Mining is the extraction of valuable minerals or other geological materials from the Earth, usually from an ore body, lode, vein, seam, reef, or placer deposit. The exploitation of these deposits for raw material is based on the economic viability of investing in the equipment, labor, and energy required to extract, refine and transport the materials found at the mine to manufacturers who can use the material.

Ores recovered by mining include metals, coal, oil shale, gemstones, limestone, chalk, dimension stone, rock salt, potash, gravel, and clay. Mining is required to obtain most materials that cannot be grown through agricultural processes, or feasibly created artificially in a laboratory or factory. Mining in a wider sense includes extraction of any non-renewable resource such as petroleum, natural gas, or even water. Modern mining processes involve prospecting for ore bodies, analysis of the profit potential of a proposed mine, extraction of the desired materials, and final reclamation or restoration of the land after the mine is closed.

Mining operations can create a negative environmental impact, both during the mining activity and after the mine has closed. Hence, most of the world's nations have passed regulations to decrease the impact; however, the outsized role of mining in generating business for often rural, remote or economically depressed communities means that governments may fail to fully enforce such regulations. Work safety has long been a concern as well, and where enforced, modern practices have significantly improved safety in mines. Moreover, unregulated or poorly regulated mining, especially in developing economies, frequently contributes to local human rights violations and resource conflicts.

History

Prehistory

Since the beginning of civilization, people have used stone, clay and, later, metals found close to the Earth's surface. These were used to make early tools and weapons; for example, high quality flint found in northern France, southern England and Poland was used to create flint tools. Flint mines have been found in chalk areas where seams of the stone were followed underground by shafts and galleries. The mines at Grime's Graves and Krzemionki are especially famous, and like most other flint mines, are Neolithic in origin (c. 4000–3000 BC). Other hard rocks mined or collected for axes included the greenstone of the Langdale axe industry based in the English Lake District. The oldest-known mine on archaeological record is the Ngwenya Mine in Eswatini (Swaziland), which radiocarbon dating shows to be about 43,000 years old. At this site Paleolithic humans mined hematite to make the red pigment ochre. Mines of a similar age in Hungary are believed to be sites where Neanderthals may have mined flint for weapons and tools.

Ancient Egypt

Ancient Egyptians mined malachite at Maadi. At first, Egyptians used the bright green malachite stones for ornamentations and pottery. Later, between 2613 and 2494 BC, large building projects required expeditions abroad to the area of Wadi Maghareh in order to secure minerals and other resources not available in Egypt itself. Quarries for turquoise and copper were also found at Wadi Hammamat, Tura, Aswan and various other Nubian sites on the Sinai Peninsula and at Timna.

Mining in Egypt occurred in the earliest dynasties. The gold mines of Nubia were among the largest and most extensive of any in Ancient Egypt. These mines are described by the Greek author Diodorus Siculus, who mentions fire-setting as one method used to break down the hard rock holding the gold. One of the complexes is shown in one of the earliest known maps. The miners crushed the ore and ground it to a fine powder before washing the powder for the gold dust.

Ancient Greece and Rome

Mining in Europe has a very long history. Examples include the silver mines of Laurium, which helped support the Greek city state of Athens. Although they had over 20,000 slaves working them, their technology was essentially identical to that of their Bronze Age predecessors. At other mines, such as on the island of Thassos, marble was quarried by the Parians after they arrived in the 7th century BC. The marble was shipped away and was later found by archaeologists to have been used in buildings including the tomb of Amphipolis. Philip II of Macedon, the father of Alexander the Great, captured the gold mines of Mount Pangeo in 357 BC to fund his military campaigns. He also captured gold mines in Thrace for minting coinage, eventually producing 26 tons per year.

However, it was the Romans who developed large-scale mining methods, especially the use of large volumes of water brought to the minehead by numerous aqueducts. The water was used for a variety of purposes, including removing overburden and rock debris, called hydraulic mining, as well as washing comminuted, or crushed, ores and driving simple machinery.

The Romans used hydraulic mining methods on a large scale to prospect for the veins of ore, especially using a now-obsolete form of mining known as hushing. They built numerous aqueducts to supply water to the minehead, where the water was stored in large reservoirs and tanks. When a full tank was opened, the flood of water sluiced away the overburden to expose the bedrock underneath and any gold-bearing veins. The rock was then worked by fire-setting to heat the rock, which would be quenched with a stream of water. The resulting thermal shock cracked the rock, enabling it to be removed by further streams of water from the overhead tanks. The Roman miners used similar methods to work cassiterite deposits in Cornwall and lead ore in the Pennines.

Sluicing methods were developed by the Romans in Spain in 25 AD to exploit large alluvial gold deposits, the largest site being at Las Medulas, where seven long aqueducts tapped local rivers and sluiced the deposits. The Romans also exploited the silver present in the argentiferous galena in the mines of Cartagena (Cartago Nova), Linares (Castulo), Plasenzuela and Azuaga, among many others. Spain was one of the most important mining regions, but all regions of the Roman Empire were exploited. In Great Britain the natives had mined minerals for millennia, but after the Roman conquest, the scale of the operations increased dramatically, as the Romans needed Britannia's resources, especially gold, silver, tin, and lead.

Roman techniques were not limited to surface mining. They followed the ore veins underground once opencast mining was no longer feasible. At Dolaucothi they stoped out the veins and drove adits through bare rock to drain the stopes. The same adits were also used to ventilate the workings, especially important when fire-setting was used. At other parts of the site, they penetrated the water table and dewatered the mines using several kinds of machines, especially reverse overshot water-wheels. These were used extensively in the copper mines at Rio Tinto in Spain, where one sequence comprised 16 such wheels arranged in pairs, and lifting water about 24 metres (79 ft). They were worked as treadmills with miners standing on the top slats. Many examples of such devices have been found in old Roman mines and some examples are now preserved in the British Museum and the National Museum of Wales.

Medieval Europe

Mining as an industry underwent dramatic changes in medieval Europe. The mining industry in the early Middle Ages was mainly focused on the extraction of copper and iron. Other precious metals were also used, mainly for gilding or coinage. Initially, many metals were obtained through open-pit mining, and ore was primarily extracted from shallow depths, rather than through deep mine shafts. Around the 14th century, the growing use of weapons, armour, stirrups, and horseshoes greatly increased the demand for iron. Medieval knights, for example, were often laden with up to 100 pounds (45 kg) of plate or chain link armour in addition to swords, lances and other weapons. The overwhelming dependency on iron for military purposes spurred iron production and extraction processes.

The silver crisis of 1465 occurred when all mines had reached depths at which the shafts could no longer be pumped dry with the available technology. Although an increased use of banknotes, credit and copper coins during this period did decrease the value of, and dependence on, precious metals, gold and silver still remained vital to the story of medieval mining.

Due to differences in the social structure of society, the increasing extraction of mineral deposits spread from central Europe to England in the mid-sixteenth century. On the continent, mineral deposits belonged to the crown, and this regalian right was stoutly maintained. But in England, royal mining rights were restricted to gold and silver (of which England had virtually no deposits) by a judicial decision of 1568 and a law in 1688. England had iron, zinc, copper, lead, and tin ores. Landlords who owned the base metals and coal under their estates then had a strong inducement to extract these metals or to lease the deposits and collect royalties from mine operators. English, German, and Dutch capital combined to finance extraction and refining. Hundreds of German technicians and skilled workers were brought over; in 1642 a colony of 4,000 foreigners was mining and smelting copper at Keswick in the northwestern mountains.

Use of water power in the form of water mills was extensive. The water mills were employed in crushing ore, raising ore from shafts, and ventilating galleries by powering giant bellows. Black powder was first used in mining in Selmecbánya, Kingdom of Hungary (now Banská Štiavnica, Slovakia) in 1627. Black powder allowed blasting of rock and earth to loosen and reveal ore veins. Blasting was much faster than fire-setting and allowed the mining of previously impenetrable metals and ores. In 1762, the world's first mining academy was established in the same town.

The widespread adoption of agricultural innovations such as the iron plowshare, as well as the growing use of metal as a building material, was also a driving force in the tremendous growth of the iron industry during this period. Inventions like the arrastra were often used by the Spanish to pulverize ore after it was mined. This device was powered by animals and used the same principles used for grain threshing.

Much of the knowledge of medieval mining techniques comes from books such as Biringuccio's De la pirotechnia and probably most importantly from Georg Agricola's De re metallica (1556). These books detail many different mining methods used in German and Saxon mines. A prime issue in medieval mines, which Agricola explains in detail, was the removal of water from mining shafts. As miners dug deeper to access new veins, flooding became a very real obstacle. The mining industry became dramatically more efficient and prosperous with the invention of mechanically- and animal-driven pumps.

Africa

Iron metallurgy in Africa dates back over four thousand years. Gold became an important commodity for Africa during the trans-Saharan gold trade from the 7th century to the 14th century. Gold was often traded to Mediterranean economies that demanded gold and could supply salt, even though much of Africa was abundant with salt due to the mines and resources in the Sahara desert. The trading of gold for salt was mostly used to promote trade between the different economies. Since the 19th century, gold and diamond mining in Southern Africa has had major political and economic impacts. The Democratic Republic of Congo is the largest producer of diamonds in Africa, with an estimated 12 million carats in 2019. Other types of mining reserves in Africa include cobalt, bauxite, iron ore, coal, and copper.

Oceania

Gold and coal mining started in Australia and New Zealand in the 19th century. Nickel has become important in the economy of New Caledonia.

In Fiji, in 1934, the Emperor Gold Mining Company Ltd. established operations at Vatukoula, followed in 1935 by the Loloma Gold Mines, N.L., and then by Fiji Mines Development Ltd. (aka Dolphin Mines Ltd.). These developments ushered in a “mining boom”, with gold production rising more than a hundred-fold, from 931.4 oz in 1934 to 107,788.5 oz in 1939, an order of magnitude then comparable to the combined output of New Zealand and Australia's eastern states.

Americas

During prehistoric times, early Americans mined large amounts of copper along Lake Superior's Keweenaw Peninsula and in nearby Isle Royale; metallic copper was still present near the surface in colonial times. Indigenous peoples used Lake Superior copper from at least 5,000 years ago; copper tools, arrowheads, and other artifacts that were part of an extensive native trade-network have been discovered. In addition, obsidian, flint, and other minerals were mined, worked, and traded. Early French explorers who encountered the sites made no use of the metals due to the difficulties of transporting them, but the copper was eventually traded throughout the continent along major river routes.

In the early colonial history of the Americas, "native gold and silver was quickly expropriated and sent back to Spain in fleets of gold- and silver-laden galleons", the gold and silver originating mostly from mines in Central and South America. Turquoise dated at 700 AD was mined in pre-Columbian America; in the Cerillos Mining District in New Mexico, an estimate of "about 15,000 tons of rock had been removed from Mt. Chalchihuitl using stone tools before 1700."

In 1727 Louis Denys (Denis) (1675–1741), sieur de La Ronde – brother of Simon-Pierre Denys de Bonaventure and the son-in-law of René Chartier – took command of Fort La Pointe at Chequamegon Bay, where natives informed him of an island of copper. La Ronde obtained permission from the French crown to operate mines in 1733, becoming "the first practical miner on Lake Superior"; seven years later, mining was halted by an outbreak between Sioux and Chippewa tribes.

Mining in the United States became widespread in the 19th century, and the United States Congress passed the General Mining Act of 1872 to encourage mining of federal lands. As with the California Gold Rush in the mid-19th century, mining for minerals and precious metals, along with ranching, became a driving factor in the U.S. Westward Expansion to the Pacific coast. With the exploration of the West, mining camps sprang up and "expressed a distinctive spirit, an enduring legacy to the new nation"; Gold Rushers would experience the same problems as the Land Rushers of the transient West that preceded them. Aided by railroads, many people traveled West for work opportunities in mining. Western cities such as Denver and Sacramento originated as mining towns.

When new areas were explored, it was usually the gold (placer and then lode) and then silver that were taken into possession and extracted first. Other metals would often wait for railroads or canals, as coarse gold dust and nuggets do not require smelting and are easy to identify and transport.

Modernity

In the early 20th century, the gold and silver rush to the western United States also stimulated mining for coal as well as base metals such as copper, lead, and iron. Areas in modern Montana, Utah, Arizona, and later Alaska became predominant suppliers of copper to the world, which was increasingly demanding copper for electrical and household goods. Canada's mining industry grew more slowly than did the United States' due to limitations in transportation, capital, and U.S. competition; Ontario was the major producer of the early 20th century with nickel, copper, and gold.

Meanwhile, Australia experienced the Australian gold rushes and by the 1850s was producing 40% of the world's gold, followed by the establishment of large mines such as the Mount Morgan Mine, which ran for nearly a hundred years, Broken Hill ore deposit (one of the largest zinc-lead ore deposits), and the iron ore mines at Iron Knob. After declines in production, another boom in mining occurred in the 1960s. Now, in the early 21st century, Australia remains a major world mineral producer.

As the 21st century begins, a globalized mining industry of large multinational corporations has arisen. Peak minerals and environmental impacts have also become a concern. Different elements, particularly rare earth minerals, have begun to increase in demand as a result of new technologies.

Mine development and life cycle

The process of mining from discovery of an ore body through extraction of minerals and finally to returning the land to its natural state consists of several distinct steps. The first is discovery of the ore body, which is carried out through prospecting or exploration to find and then define the extent, location and value of the ore body. This leads to a mathematical resource estimation to estimate the size and grade of the deposit.

This estimation is used to conduct a pre-feasibility study to determine the theoretical economics of the ore deposit. This identifies, early on, whether further investment in estimation and engineering studies is warranted and identifies key risks and areas for further work. The next step is to conduct a feasibility study to evaluate the financial viability, the technical and financial risks, and the robustness of the project.

This is when the mining company makes the decision whether to develop the mine or to walk away from the project. This includes mine planning to evaluate the economically recoverable portion of the deposit, the metallurgy and ore recoverability, marketability and payability of the ore concentrates, engineering concerns, milling and infrastructure costs, finance and equity requirements, and an analysis of the proposed mine from the initial excavation all the way through to reclamation. The proportion of a deposit that is economically recoverable is dependent on the enrichment factor of the ore in the area.

To gain access to the mineral deposit within an area it is often necessary to mine through or remove waste material which is not of immediate interest to the miner. The total movement of ore and waste constitutes the mining process. Often more waste than ore is mined during the life of a mine, depending on the nature and location of the ore body. Waste removal and placement is a major cost to the mining operator, so a detailed characterization of the waste material forms an essential part of the geological exploration program for a mining operation.
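The relationship between waste and ore movement described above can be illustrated with a short calculation. The following is a minimal sketch, not an industry-standard tool: the function names and the tonnage figures are hypothetical and chosen only for illustration.

```python
def waste_to_ore_ratio(waste_tons: float, ore_tons: float) -> float:
    """Tons of waste moved for every ton of ore mined."""
    if ore_tons <= 0:
        raise ValueError("ore_tons must be positive")
    return waste_tons / ore_tons


def total_material_moved(waste_tons: float, ore_tons: float) -> float:
    """Total movement of ore and waste, i.e. everything the mine handles."""
    return waste_tons + ore_tons


# Hypothetical mine: 3 million tons of waste removed to reach 1 million tons of ore.
waste = 3_000_000.0
ore = 1_000_000.0

ratio = waste_to_ore_ratio(waste, ore)
moved = total_material_moved(waste, ore)
print(f"waste-to-ore ratio: {ratio:.1f}")
print(f"total material moved: {moved:,.0f} tons")
```

A ratio above 1 corresponds to the case noted in the text where more waste than ore is mined over the life of the mine.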

Once the analysis determines a given ore body is worth recovering, development begins to create access to the ore body. The mine buildings and processing plants are built, and any necessary equipment is obtained. The operation of the mine to recover the ore begins and continues as long as the company operating the mine finds it economical to do so. Once all the ore that the mine can produce profitably is recovered, reclamation can begin, to make the land used by the mine suitable for future use.

Technical and economic challenges notwithstanding, successful mine development must also address human factors. Working conditions are paramount to success, especially with regard to exposures to dusts, radiation, noise, explosives hazards, and vibration, as well as illumination standards. Mining today increasingly must address environmental and community impacts, including psychological and sociological dimensions. Thus, mining educator Frank T. M. White (1909–1971) broadened the focus to the “total environment of mining”, including reference to community development around mining, and how mining is portrayed to an urban society, which depends on the industry, although seemingly unaware of this dependency. He stated, “[I]n the past, mining engineers have not been called upon to study the psychological, sociological and personal problems of their own industry – aspects that nowadays are assuming tremendous importance. The mining engineer must rapidly expand his knowledge and his influence into these newer fields.”

Techniques

Mining techniques can be divided into two common excavation types: surface mining and sub-surface (underground) mining. Today, surface mining is much more common, and produces, for example, 85% of minerals (excluding petroleum and natural gas) in the United States, including 98% of metallic ores.

Targets are divided into two general categories of materials: placer deposits, consisting of valuable minerals contained within river gravels, beach sands, and other unconsolidated materials; and lode deposits, where valuable minerals are found in veins, in layers, or in mineral grains generally distributed throughout a mass of actual rock. Both types of ore deposit, placer or lode, are mined by both surface and underground methods.

Some mining, including much of the rare earth elements and uranium mining, is done by less-common methods, such as in-situ leaching: this technique involves digging neither at the surface nor underground. The extraction of target minerals by this technique requires that they be soluble, e.g., potash, potassium chloride, sodium chloride, sodium sulfate, which dissolve in water. Some minerals, such as copper minerals and uranium oxide, require acid or carbonate solutions to dissolve.

Artisanal

An artisanal miner or small-scale miner (ASM) is a subsistence miner who is not officially employed by a mining company, but works independently, mining minerals using their own resources, usually by hand.

Small-scale mining includes enterprises or individuals that employ workers for mining, but generally still using manually-intensive methods, working with hand tools.

Artisanal miners often undertake the activity of mining seasonally – for example, crops are planted in the rainy season, and mining is pursued in the dry season. However, they also frequently travel to mining areas and work year-round. There are four broad types of ASM: permanent artisanal mining, seasonal (annually migrating during idle agriculture periods), rush-type (massive migration, pulled often by commodity price jumps), and shock-push (poverty-driven, following conflict or natural disasters).

ASM is an important socio-economic sector for the rural poor in many developing nations, many of whom have few other options for supporting their families. Over 90% of the world's mining workforce are ASM. There are an estimated 40.5 million men, women and children directly engaged in ASM, from over 80 countries in the global south. 20% of the global gold supply is produced by the ASM sector, as well as 80% of the global gemstone and 20% of global diamond supply, and 25% of global tin production. More than 150 million depend on ASM for their livelihood. 70–80% of small-scale miners are informal, and approximately 30% are women, although this ranges in certain countries and commodities from 5% to 80%.

Surface

Surface mining is done by removing surface vegetation, dirt, and bedrock to reach buried ore deposits. Techniques of surface mining include: open-pit mining, which is the recovery of materials from an open pit in the ground; quarrying, identical to open-pit mining except that it refers to sand, stone and clay; strip mining, which consists of stripping surface layers off to reveal ore underneath; and mountaintop removal, commonly associated with coal mining, which involves taking the top of a mountain off to reach ore deposits at depth. Most placer deposits, because they are shallowly buried, are mined by surface methods. Finally, landfill mining involves sites where landfills are excavated and processed. Landfill mining has been thought of as a long-term solution to methane emissions and local pollution.

High wall

High wall mining, which evolved from auger mining, is another form of surface mining. In high wall mining, the remaining part of a coal seam previously exploited by other surface-mining techniques has too much overburden to be removed but can still be profitably exploited from the side of the artificial cliff made by previous mining. A typical cycle alternates sumping, which undercuts the seam, and shearing, which raises and lowers the cutter-head boom to cut the entire height of the coal seam. As the coal recovery cycle continues, the cutter-head is progressively launched further into the coal seam. High wall mining can produce thousands of tons of coal in contour-strip operations with narrow benches, previously mined areas, trench mine applications and steep-dip seams.

Underground mining

Sub-surface mining consists of digging tunnels or shafts into the earth to reach buried ore deposits. Ore, for processing, and waste rock, for disposal, are brought to the surface through the tunnels and shafts. Sub-surface mining can be classified by the type of access shafts used, and the extraction method or the technique used to reach the mineral deposit. Drift mining utilizes horizontal access tunnels, slope mining uses diagonally sloping access shafts, and shaft mining utilizes vertical access shafts. Mining in hard and soft rock formations requires different techniques.

Other methods include shrinkage stope mining, which is mining upward, creating a sloping underground room, long wall mining, which is grinding a long ore surface underground, and room and pillar mining, which is removing ore from rooms while leaving pillars in place to support the roof of the room. Room and pillar mining often leads to retreat mining, in which supporting pillars are removed as miners retreat, allowing the room to cave in, thereby loosening more ore. Additional sub-surface mining methods include hard rock mining, bore hole mining, drift and fill mining, long hole slope mining, sub level caving, and block caving.

Machines

Heavy machinery is used in mining to explore and develop sites, to remove and stockpile overburden, to break and remove rocks of various hardness and toughness, to process the ore, and to carry out reclamation projects after the mine is closed. Bulldozers, drills, explosives and trucks are all necessary for excavating the land. In the case of placer mining, unconsolidated gravel, or alluvium, is fed into machinery consisting of a hopper and a shaking screen or trommel which frees the desired minerals from the waste gravel. The minerals are then concentrated using sluices or jigs.

Large drills are used to sink shafts, excavate stopes, and obtain samples for analysis. Trams are used to transport miners, minerals and waste. Lifts carry miners into and out of mines, and move rock and ore out, and machinery in and out, of underground mines. Huge trucks, shovels and cranes are employed in surface mining to move large quantities of overburden and ore. Processing plants utilize large crushers, mills, reactors, roasters and other equipment to consolidate the mineral-rich material and extract the desired compounds and metals from the ore.

Processing

Once the mineral is extracted, it is often then processed. The science of extractive metallurgy is a specialized area in the science of metallurgy that studies the extraction of valuable metals from their ores, especially through chemical or mechanical means.

Mineral processing (or mineral dressing) is a specialized area in the science of metallurgy that studies the mechanical means of crushing, grinding, and washing that enable the separation (extractive metallurgy) of valuable metals or minerals from their gangue (waste material). Processing of placer ore material consists of gravity-dependent methods of separation, such as sluice boxes. Only minor shaking or washing may be necessary to disaggregate (unclump) the sands or gravels before processing. Processing of ore from a lode mine, whether it is a surface or subsurface mine, requires that the rock ore be crushed and pulverized before extraction of the valuable minerals begins. After lode ore is crushed, recovery of the valuable minerals is done by one, or a combination of several, mechanical and chemical techniques.

Since most metals are present in ores as oxides or sulfides, the metal needs to be reduced to its metallic form. This can be accomplished through chemical means such as smelting or through electrolytic reduction, as in the case of aluminium. Geometallurgy combines the geologic sciences with extractive metallurgy and mining.

In 2018, led by Chemistry and Biochemistry professor Bradley D. Smith, University of Notre Dame researchers "invented a new class of molecules whose shape and size enable them to capture and contain precious metal ions," reported in a study published by the Journal of the American Chemical Society. The new method "converts gold-containing ore into chloroauric acid and extracts it using an industrial solvent. The container molecules are able to selectively separate the gold from the solvent without the use of water stripping." The newly developed molecules can eliminate water stripping, whereas mining traditionally "relies on a 125-year-old method that treats gold-containing ore with large quantities of poisonous sodium cyanide... this new process has a milder environmental impact and that, besides gold, it can be used for capturing other metals such as platinum and palladium," and could also be used in urban mining processes that remove precious metals from wastewater streams.

Environmental effects

Environmental regulation

Mine operators frequently have to follow some regulatory practices to minimize environmental impact and avoid impacting human health. In better regulated economies, regulations require the common steps of environmental impact assessment, development of environmental management plans, mine closure planning (which must be done before the start of mining operations), and environmental monitoring during operation and after closure. However, in some areas, particularly in the developing world, government regulations may not be well enforced.

For major mining companies and any company seeking international financing, there are a number of other mechanisms to enforce environmental standards. These generally relate to financing standards such as the Equator Principles, IFC environmental standards, and criteria for socially responsible investing. Mining companies have used this oversight from the financial sector to argue for some level of industry self-regulation. In 1992, a Draft Code of Conduct for Transnational Corporations was proposed at the Rio Earth Summit by the UN Centre for Transnational Corporations (UNCTC), but the Business Council for Sustainable Development (BCSD) together with the International Chamber of Commerce (ICC) argued successfully for self-regulation instead.

This was followed by the Global Mining Initiative which was begun by nine of the largest metals and mining companies and which led to the formation of the International Council on Mining and Metals, whose purpose was to "act as a catalyst" in an effort to improve social and environmental performance in the mining and metals industry internationally. The mining industry has provided funding to various conservation groups, some of which have been working with conservation agendas that are at odds with an emerging acceptance of the rights of indigenous people – particularly the right to make land-use decisions.

Certification of mines with good practices occurs through the International Organization for Standardization (ISO). For example, ISO 9000 and ISO 14001, which certify an "auditable environmental management system", involve short inspections, although they have been accused of lacking rigor. Certification is also available through Ceres' Global Reporting Initiative, but these reports are voluntary and unverified. Miscellaneous other certification programs exist for various projects, typically through nonprofit groups.

The purpose of a 2012 EPS PEAKS paper was to provide evidence on policies for managing ecological costs and maximizing socio-economic benefits of mining using host country regulatory initiatives. It found existing literature suggesting donors encourage developing countries to:

- Make the environment-poverty link and introduce cutting-edge wealth measures and natural capital accounts.

- Reform old taxes in line with more recent financial innovation, engage directly with the companies, enact land use and impact assessments, and incorporate specialized support and standards agencies.

- Set in play transparency and community participation initiatives using the wealth accrued.

Waste

Ore mills generate large amounts of waste, called tailings. For example, 99 tons of waste is generated per ton of copper, with even higher ratios in gold mining: because only 5.3 g of gold is extracted per ton of ore, a ton of gold produces roughly 200,000 tons of tailings. (As richer deposits are exhausted and technology improves, the grade being mined is falling to 0.5 g per ton and less.) These tailings can be toxic. Tailings, which are usually produced as a slurry, are most commonly dumped into ponds made from naturally existing valleys. These ponds are secured by impoundments (dams or embankment dams). In 2000 it was estimated that 3,500 tailings impoundments existed, and that every year, 2 to 5 major failures and 35 minor failures occurred. For example, in the Marcopper mining disaster at least 2 million tons of tailings were released into a local river. In 2015, Barrick Gold Corporation spilled over 1 million liters of cyanide into a total of five rivers in Argentina near its Veladero mine. Since 2007 in central Finland, the Talvivaara Terrafame polymetal mine's waste effluent and leaks of saline mine water have resulted in the ecological collapse of a nearby lake. Subaqueous tailings disposal is another option. The mining industry has argued that submarine tailings disposal (STD), which disposes of tailings in the sea, is ideal because it avoids the risks of tailings ponds. The practice is illegal in the United States and Canada, but it is used in the developing world.
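The waste ratios quoted above follow directly from ore grade. As a rough sketch, assuming metric tons (1 t = 1,000,000 g) and complete recovery of the metal, neither of which the figures above specify, the ore processed per ton of metal is the reciprocal of the grade, and nearly all of it ends up as tailings. The function names and the 1% copper grade are illustrative assumptions, not values from the text.

```python
GRAMS_PER_TON = 1_000_000  # assumption: metric tons


def ore_tons_per_ton_of_metal(grade_g_per_ton: float) -> float:
    """Tons of ore that must be processed to yield one ton of metal."""
    if grade_g_per_ton <= 0:
        raise ValueError("grade must be positive")
    return GRAMS_PER_TON / grade_g_per_ton


def tailings_tons_per_ton_of_metal(grade_g_per_ton: float) -> float:
    """Ore processed minus the one ton of metal recovered.

    Assumes 100% recovery; real recovery is lower, so real ratios are higher.
    """
    return ore_tons_per_ton_of_metal(grade_g_per_ton) - 1.0


if __name__ == "__main__":
    examples = [
        ("gold at 5.3 g/t", 5.3),
        ("gold at 0.5 g/t", 0.5),
        ("copper at 1% (10,000 g/t, assumed)", 10_000),
    ]
    for label, grade in examples:
        tailings = tailings_tons_per_ton_of_metal(grade)
        print(f"{label}: {tailings:,.0f} t of tailings per t of metal")
```

Under these assumptions a grade of 5.3 g/t gives about 189,000 tons of tailings per ton of gold, which the text rounds to 200,000, and a grade of 0.5 g/t raises the figure to about 2 million tons. A 1% copper grade reproduces the 99:1 ratio.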

The waste is classified as either sterile or mineralized, with acid-generating potential, and the movement and storage of this material form a major part of the mine planning process. When the mineralized package is determined by an economic cut-off, the near-grade mineralized waste is usually dumped separately with a view to later treatment should market conditions change and it become economically viable. Civil engineering design parameters are used in the design of the waste dumps, and special conditions apply to high-rainfall areas and to seismically active areas. Waste dump designs must meet all regulatory requirements of the country in whose jurisdiction the mine is located. It is also common practice to rehabilitate dumps to an internationally acceptable standard, which in some cases means that higher standards than the local regulatory standard are applied.

Industry

Mining exists in many countries. London is the headquarters for large miners such as Anglo American, BHP and Rio Tinto. The US mining industry is also large, but it is dominated by extraction of coal and other nonmetal minerals (e.g., rock and sand), and various regulations have worked to reduce the significance of mining in the United States. In 2007 the total market capitalization of mining companies was reported at US$962 billion, compared with a total global market capitalization of publicly traded companies of about US$50 trillion in the same year. In 2002, Chile and Peru were reportedly the major mining countries of South America. The mineral industry of Africa includes the mining of various minerals; it produces relatively little of the industrial metals copper, lead, and zinc, but according to one estimate holds 40% of the world's gold reserves, 60% of its cobalt, and 90% of its platinum group metals. Mining in India is a significant part of that country's economy. In the developed world, mining in Australia, with BHP founded and headquartered in the country, and mining in Canada are particularly significant. For rare earth minerals mining, China reportedly controlled 95% of production in 2013.

While exploration and mining can be conducted by individual entrepreneurs or small businesses, most modern-day mines are large enterprises requiring large amounts of capital to establish. Consequently, the mining sector of the industry is dominated by large, often multinational, companies, most of them publicly listed. It can be argued that what is referred to as the 'mining industry' is actually two sectors, one specializing in exploration for new resources and the other in mining those resources. The exploration sector is typically made up of individuals and small mineral resource companies, called "juniors", which are dependent on venture capital. The mining sector is made up of large multinational companies that are sustained by production from their mining operations. Various other industries such as equipment manufacture, environmental testing, and metallurgy analysis rely on, and support, the mining industry throughout the world. Canadian stock exchanges have a particular focus on mining companies, particularly junior exploration companies through Toronto's TSX Venture Exchange; Canadian companies raise capital on these exchanges and then invest the money in exploration globally. Some have argued that below juniors there exists a substantial sector of illegitimate companies primarily focused on manipulating stock prices.

Mining operations can be grouped into five major categories in terms of their respective resources. These are oil and gas extraction, coal mining, metal ore mining, nonmetallic mineral mining and quarrying, and mining support activities. Of all of these categories, oil and gas extraction remains one of the largest in terms of its global economic importance. Prospecting potential mining sites, a vital area of concern for the mining industry, is now done using sophisticated new technologies such as seismic prospecting and remote-sensing satellites. Mining is heavily affected by the prices of the commodity minerals, which are often volatile. The 2000s commodities boom ("commodities supercycle") increased the prices of commodities, driving aggressive mining. In addition, the price of gold increased dramatically in the 2000s, which increased gold mining; for example, one study found that conversion of forest in the Amazon increased six-fold from the period 2003–2006 (292 ha/yr) to the period 2006–2009 (1,915 ha/yr), largely due to artisanal mining.

Corporate classifications

Mining companies can be classified based on their size and financial capabilities:

- Major companies are considered to have an adjusted annual mining-related revenue of more than US$500 million, with the financial capability to develop a major mine on their own.

- Intermediate companies have at least US$50 million in annual revenue but less than US$500 million.

- Junior companies rely on equity financing as their principal means of funding exploration. Juniors are mainly pure exploration companies, but may also produce minimally, and do not have a revenue exceeding US$50 million.
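The revenue thresholds in the list above can be sketched as a simple classifier. This is a minimal illustration that looks at revenue alone and ignores the financing criteria. The text leaves two boundary cases open: revenue of exactly US$500 million fits neither "more than" nor "less than", and exactly US$50 million fits both the intermediate and junior descriptions. The sketch assigns both to "intermediate", which is an assumption, as is the function name.

```python
MAJOR_THRESHOLD_USD = 500_000_000   # majors: revenue above this
JUNIOR_THRESHOLD_USD = 50_000_000   # juniors: revenue not exceeding this


def classify_by_revenue(annual_revenue_usd: float) -> str:
    """Classify a mining company by adjusted annual mining-related revenue.

    Boundary handling (exactly 50 or 500 million) is an assumption;
    the source text does not resolve it.
    """
    if annual_revenue_usd < 0:
        raise ValueError("revenue cannot be negative")
    if annual_revenue_usd > MAJOR_THRESHOLD_USD:
        return "major"
    if annual_revenue_usd >= JUNIOR_THRESHOLD_USD:
        return "intermediate"
    return "junior"


if __name__ == "__main__":
    for revenue in (2_000_000_000, 120_000_000, 3_000_000):
        print(f"US${revenue:,}: {classify_by_revenue(revenue)}")
```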

For their valuation and stock market characteristics, see Valuation (finance) § Valuation of mining projects.

Regulation and governance

New regulations and a process of legislative reforms aim to improve the harmonization and stability of the mining sector in mineral-rich countries. New legislation for the mining industry in African countries still appears to be an unresolved issue, but it could be settled once a consensus is reached on the best approach. By the beginning of the 21st century the booming and increasingly complex mining sector in mineral-rich countries was providing only slight benefits to local communities, especially given the sustainability issues. Increasing debate and influence by NGOs and local communities called for new approaches which would also include disadvantaged communities, and work towards sustainable development even after mine closure (including transparency and revenue management). By the early 2000s, community development issues and resettlements became mainstream concerns in World Bank mining projects. The expansion of the mining industry after mineral prices rose in 2003, together with the potential fiscal revenues in those countries, led to the neglect of other economic sectors in terms of finance and development. Furthermore, this highlighted regional and local demand for mining revenues and an inability of sub-national governments to effectively use the revenues. The Fraser Institute (a Canadian think tank) has highlighted the environmental protection laws in developing countries, as well as voluntary efforts by mining companies to improve their environmental impact.

In 2007 the Extractive Industries Transparency Initiative (EITI) was mainstreamed in all countries cooperating with the World Bank in mining industry reform. The EITI was implemented, and operates, with the support of the EITI multi-donor trust fund, managed by the World Bank. The EITI aims to increase transparency in transactions between governments and companies in extractive industries by monitoring the revenues and benefits between industries and recipient governments. The entrance process is voluntary for each country and is monitored by multiple stakeholders, including governments, private companies and civil society representatives, who are responsible for disclosure and dissemination of the reconciliation report; however, for some businesses, in Ghana at least, the competitive disadvantage of company-by-company public reporting is the main constraint. Therefore, the success or failure of the new EITI regulation does not only "rest on the government's shoulders" but also depends on civil society and companies.

On the other hand, implementation raises issues, including whether to include or exclude artisanal and small-scale mining (ASM) from the EITI and how to deal with "non-cash" payments made by companies to subnational governments. Furthermore, the disproportionate revenues the mining industry can bring to the comparatively small number of people that it employs cause other problems, such as a lack of investment in other, less lucrative sectors, leading to swings in government revenue because of volatility in the oil markets. Artisanal mining is clearly an issue in EITI countries such as the Central African Republic, D.R. Congo, Guinea, Liberia and Sierra Leone (i.e. almost half of the mining countries implementing the EITI). Among other things, the limited scope of the EITI, the disparity in knowledge of the industry and in negotiation skills, and the flexibility of the policy so far (e.g. the liberty of countries to expand beyond the minimum requirements and adapt it to their needs) create a further risk of unsuccessful implementation. Raising public awareness, with the government acting as a bridge between the public and the initiative, is an important element for a successful outcome of the policy.

World Bank

The World Bank has been involved in mining since 1955, mainly through grants from its International Bank for Reconstruction and Development, with the Bank's Multilateral Investment Guarantee Agency offering political risk insurance. Between 1955 and 1990 it provided about $2 billion to fifty mining projects, broadly categorized as reform and rehabilitation, greenfield mine construction, mineral processing, technical assistance, and engineering. These projects have been criticized, particularly the Ferro Carajas project of Brazil, begun in 1981. The World Bank established mining codes intended to increase foreign investment; in 1988 it solicited feedback from 45 mining companies on how to increase their involvement.

In 1992 the World Bank began to push for privatization of government-owned mining companies with a new set of codes, beginning with its report The Strategy for African Mining. In 1997, Latin America's largest miner Companhia Vale do Rio Doce (CVRD) was privatized. These and other developments such as the Philippines 1995 Mining Act led the bank to publish a third report (Assistance for Minerals Sector Development and Reform in Member Countries) which endorsed mandatory environment impact assessments and attention to the concerns of the local population. The codes based on this report are influential in the legislation of developing nations. The new codes are intended to encourage development through tax holidays, zero custom duties, reduced income taxes, and related measures. The results of these codes were analyzed by a group from the University of Quebec, which concluded that the codes promote foreign investment but "fall very short of permitting sustainable development". The observed negative correlation between natural resources and economic development is known as the resource curse.

Safety

Safety has long been a concern in the mining business, especially in sub-surface mining. The Courrières mine disaster, Europe's worst mining accident, involved the death of 1,099 miners in Northern France on March 10, 1906. This disaster was surpassed only by the Benxihu Colliery accident in China on April 26, 1942, which killed 1,549 miners. While mining today is substantially safer than it was in previous decades, mining accidents still occur. Government figures indicate that 5,000 Chinese miners die in accidents each year, while other reports have suggested a figure as high as 20,000. Mining accidents continue worldwide, including accidents causing dozens of fatalities at a time such as the 2007 Ulyanovskaya Mine disaster in Russia, the 2009 Heilongjiang mine explosion in China, and the 2010 Upper Big Branch Mine disaster in the United States. Mining has been identified by the National Institute for Occupational Safety and Health (NIOSH) as a priority industry sector in the National Occupational Research Agenda (NORA) to identify and provide intervention strategies regarding occupational health and safety issues. The Mine Safety and Health Administration (MSHA) was established in 1978 to "work to prevent death, illness, and injury from mining and promote safe and healthful workplaces for US miners." Since the agency's establishment, the annual number of miner fatalities has decreased from 242 in 1978 to 24 in 2019.

There are numerous occupational hazards associated with mining, including exposure to rock dust, which can lead to diseases such as silicosis, asbestosis, and pneumoconiosis. Gases in the mine can lead to asphyxiation and could also be ignited. Mining equipment can generate considerable noise, putting workers at risk for hearing loss. Cave-ins, rock falls, and exposure to excess heat are also known hazards. The current NIOSH Recommended Exposure Limit (REL) for noise is 85 dBA with a 3 dBA exchange rate, and the MSHA Permissible Exposure Limit (PEL) is 90 dBA with a 5 dBA exchange rate, each as an 8-hour time-weighted average. NIOSH has found that 25% of noise-exposed workers in Mining, Quarrying, and Oil and Gas Extraction have hearing impairment. The prevalence of hearing loss increased by 1% from 1991 to 2001 among these workers.
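The exposure limits quoted above can be turned into allowable exposure times. An exchange rate means that each increase of that many decibels above the limit halves the permitted duration, so the allowed time is T = 8 / 2^((L − limit) / exchange rate) hours. The sketch below applies that halving rule to the two sets of figures; the function names are illustrative, and it is not a substitute for either agency's full dose-calculation procedure.

```python
def allowed_hours(level_dba: float, criterion_dba: float,
                  exchange_rate_db: float, criterion_hours: float = 8.0) -> float:
    """Permitted daily exposure time at a constant noise level.

    Each `exchange_rate_db` above `criterion_dba` halves the permitted time;
    each step below it doubles the time.
    """
    if exchange_rate_db <= 0:
        raise ValueError("exchange rate must be positive")
    return criterion_hours / 2 ** ((level_dba - criterion_dba) / exchange_rate_db)


def niosh_allowed_hours(level_dba: float) -> float:
    """NIOSH REL figures from the text: 85 dBA, 3 dBA exchange rate."""
    return allowed_hours(level_dba, 85.0, 3.0)


def msha_allowed_hours(level_dba: float) -> float:
    """MSHA PEL figures from the text: 90 dBA, 5 dBA exchange rate."""
    return allowed_hours(level_dba, 90.0, 5.0)


if __name__ == "__main__":
    for level in (85, 90, 100):
        print(f"{level} dBA: NIOSH {niosh_allowed_hours(level):.2f} h, "
              f"MSHA {msha_allowed_hours(level):.2f} h")
```

At a constant 100 dBA, for instance, the NIOSH figures allow 15 minutes while the MSHA figures allow 2 hours, which shows how much the choice of limit and exchange rate matters for loud equipment.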

Noise studies have been conducted in several mining environments. Stageloaders (84–102 dBA), shearers (85–99 dBA), auxiliary fans (84–120 dBA), continuous mining machines (78–109 dBA), and roof bolters (92–103 dBA) represent some of the noisiest equipment in underground coal mines. Dragline oilers, dozer operators, and welders using air arcing were occupations with the highest noise exposures among surface coal miners. Coal mines had the highest hearing loss injury likelihood.

Human rights

In addition to the environmental impacts of mining processes, a prominent criticism of this form of extractive practice and of mining companies concerns the human rights abuses occurring within mining sites and in communities close to them. Frequently, despite being protected by international labor rights, miners are not given appropriate equipment to protect them from possible mine collapse or from harmful pollutants and chemicals expelled during the mining process, and they work in inhumane conditions, spending 14-hour workdays in extreme heat and darkness with no allocated time for breaks.

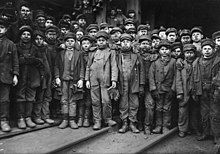

Child labor

Included within the human rights abuses that occur during mining processes are instances of child labor. These instances are a cause for widespread criticism of mines harvesting cobalt, a mineral essential for powering modern technologies such as laptops, smartphones and electric vehicles. Many of these cases of child labor are found in the Democratic Republic of Congo. Reports have emerged of children carrying sacks of cobalt weighing 25 kg from small mines to local traders and being paid for their work only in food and accommodation. A number of companies such as Apple, Google, Microsoft and Tesla have been implicated in lawsuits brought by families whose children were severely injured or killed during mining activities in Congo. In December 2019, 14 Congolese families filed a lawsuit against Glencore, a mining company which supplies the essential cobalt to these multinational corporations, with allegations of negligence that led to the deaths of children or to injuries such as broken spines, as well as emotional distress and forced labor.

Indigenous peoples

There have also been instances of killings and evictions attributed to conflicts with mining companies. Almost a third of 227 murders in 2020 were of Indigenous peoples' rights activists on the frontlines of climate change activism linked to logging, mining, large-scale agribusiness, hydroelectric dams, and other infrastructure, according to Global Witness.

The relationship between indigenous peoples and mining is defined by struggles over access to land. In Australia, the Aboriginal Bininj said mining posed a threat to their living culture and could damage sacred heritage sites.

In the Philippines, an anti-mining movement has raised concerns regarding "the total disregard for [Indigenous communities'] ancestral land rights". Ifugao peoples' opposition to mining led a governor to proclaim a ban on mining operations in Mountain Province, Philippines.

In Brazil, more than 170 tribes organized a march to oppose controversial attempts to strip back indigenous land rights and open their territories to mining operations. The United Nations Commission on Human Rights has called on Brazil's Supreme Court to uphold Indigenous land rights to prevent exploitation by mining groups and industrial agriculture.

Records

As of 2019, Mponeng is the world's deepest mine, reaching a depth of 4 km (2.5 mi) below ground level. The trip from the surface to the bottom of the mine takes over an hour. It is a gold mine in South Africa's Gauteng province. Previously known as Western Deep Levels #1 Shaft, the underground and surface works were commissioned in 1987. The mine is considered to be one of the most substantial gold mines in the world.

The Moab Khutsong gold mine in North West Province (South Africa) has the world's longest winding steel wire rope, which is able to lower workers to 3,054 metres (10,020 ft) in one uninterrupted four-minute journey.

The deepest mine in Europe is the 16th shaft of the uranium mines in Příbram, Czech Republic, at 1,838 metres (6,030 ft). Second is Bergwerk Saar in Saarland, Germany, at 1,750 metres (5,740 ft).

The deepest open-pit mine in the world is Bingham Canyon Mine in Bingham Canyon, Utah, United States, at over 1,200 metres (3,900 ft). The largest and second deepest open-pit copper mine in the world is Chuquicamata in northern Chile at 900 metres (3,000 ft), which annually produces 443,000 tons of copper and 20,000 tons of molybdenum.

The deepest open-pit mine with respect to sea level is Tagebau Hambach in Germany, where the base of the pit is 299 metres (981 ft) below sea level.

The largest underground mine is Kiirunavaara Mine in Kiruna, Sweden. With 450 kilometres (280 mi) of roads, 40 million tonnes of annually produced ore, and a depth of 1,270 metres (4,170 ft), it is also one of the most modern underground mines. The deepest borehole in the world is Kola Superdeep Borehole at 12,262 metres (40,230 ft), but this is connected to scientific drilling, not mining.

Metal reserves and recycling

During the 20th century, the variety of metals used in society grew rapidly. Today, the development of major nations such as China and India and advances in technologies are fueling an ever-greater demand. The result is that metal mining activities are expanding and more and more of the world's metal stocks are above ground in use rather than below ground as unused reserves. An example is the in-use stock of copper. Between 1932 and 1999, copper in use in the US rose from 73 kilograms (161 lb) to 238 kilograms (525 lb) per person.

95% of the energy used to make aluminium from bauxite ore is saved by using recycled material. However, levels of metals recycling are generally low. In 2010, the International Resource Panel, hosted by the United Nations Environment Programme (UNEP), published reports on metal stocks that exist within society and their recycling rates.

The report's authors observed that the metal stocks in society can serve as huge mines above ground. However, they warned that the recycling rates of some rare metals used in applications such as mobile phones, battery packs for hybrid cars, and fuel cells are so low that unless future end-of-life recycling rates are dramatically stepped up these critical metals will become unavailable for use in modern technology.

As recycling rates are low and so much metal has already been extracted, some landfills now contain higher concentrations of metal than mines themselves. This is especially true of aluminium, used in cans, and precious metals, found in discarded electronics. Furthermore, waste after 15 years has still not broken down, so less processing would be required when compared to mining ores. A study undertaken by Cranfield University has found £360 million of metals could be mined from just four landfill sites. There is also up to 20 MJ/kg of energy in waste, potentially making the re-extraction more profitable. However, although the first landfill mine opened in Tel Aviv, Israel in 1953, little work has followed due to the abundance of accessible ores.