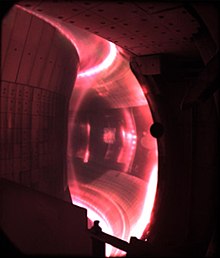

A tokamak (/ˈtoʊkəmæk/; Russian: токамáк) is a device which uses a powerful magnetic field to confine plasma in the shape of a torus. The tokamak is one of several types of magnetic confinement devices being developed to produce controlled thermonuclear fusion power. As of 2016, it was the leading candidate for a practical fusion reactor. The word "tokamak" is derived from a Russian acronym meaning "toroidal chamber with magnetic coils".

Methods for extracting energy from a tokamak include heat transfer, direct energy conversion, and magnetohydrodynamic conversion.

Tokamaks were initially conceptualized in the 1950s by Soviet physicists Igor Tamm and Andrei Sakharov, inspired by a letter by Oleg Lavrentiev. The first working tokamak was attributed to the work of Natan Yavlinsky on the T-1 in 1958. It had been demonstrated that a stable plasma equilibrium requires magnetic field lines that wind around the torus in a helix. Devices like the z-pinch and stellarator had attempted this, but demonstrated serious instabilities. It was the development of the concept now known as the safety factor (labelled q in mathematical notation) that guided tokamak development; by arranging the reactor so this critical factor q was always greater than 1, the tokamaks strongly suppressed the instabilities which plagued earlier designs.

By the mid-1960s, the tokamak designs began to show greatly improved performance. The initial results were released in 1965, but were ignored; Lyman Spitzer dismissed them out of hand after noting potential problems in their system for measuring temperatures. A second set of results was published in 1968, this time claiming performance far in advance of any other machine. When these were also met skeptically, the Soviets invited a delegation from the United Kingdom to make their own measurements. These confirmed the Soviet results, and their 1969 publication resulted in a stampede of tokamak construction.

By the mid-1970s, dozens of tokamaks were in use around the world. By the late 1970s, these machines had reached all of the conditions needed for practical fusion, although not at the same time or in a single reactor. With the goal of breakeven (a fusion energy gain factor equal to 1) now in sight, a new series of machines was designed that would run on a fusion fuel of deuterium and tritium. These machines, notably the Joint European Torus (JET) and Tokamak Fusion Test Reactor (TFTR), had the explicit goal of reaching breakeven.

Instead, these machines demonstrated new problems that limited their performance. Solving these would require a much larger and more expensive machine, beyond the abilities of any one country. After an initial agreement between Ronald Reagan and Mikhail Gorbachev in November 1985, the International Thermonuclear Experimental Reactor (ITER) effort emerged and remains the primary international effort to develop practical fusion power. Many smaller designs, and offshoots like the spherical tokamak, continue to be used to investigate performance parameters and other issues. As of 2022, JET remains the record holder for fusion output, with 59 MJ of energy output over a 5-second period.

Etymology

The word tokamak is a transliteration of the Russian word токамак, an acronym of either:

- тороидальная камера с магнитными катушками (toroidal'naya kamera s magnitnymi katushkami): toroidal chamber with magnetic coils;

or

- тороидальная камера с аксиальным магнитным полем (toroidal'naya kamera s aksial'nym magnitnym polem): toroidal chamber with axial magnetic field.

The term was coined in 1957 by Igor Golovin, the vice-director of the Laboratory of Measuring Apparatus of the Academy of Sciences, today's Kurchatov Institute. A similar term, tokomag, was also proposed for a time.

History

First steps

In 1934, Mark Oliphant, Paul Harteck and Ernest Rutherford were the first to achieve fusion on Earth, using a particle accelerator to shoot deuterium nuclei into metal foil containing deuterium or other atoms. This allowed them to measure the nuclear cross section of various fusion reactions and to determine that the deuterium–deuterium reaction occurred at a lower energy than other reactions, peaking at about 100,000 electronvolts (100 keV).

Accelerator-based fusion is not practical because the reaction cross section is tiny; most of the particles in the accelerator will scatter off the fuel, not fuse with it. These scatterings cause the particles to lose energy to the point where they can no longer undergo fusion. The energy put into these particles is thus lost, and it is easy to demonstrate this is much more energy than the resulting fusion reactions can release.

To maintain fusion and produce net energy output, the bulk of the fuel must be raised to high temperatures so its atoms are constantly colliding at high speed; this gives rise to the name thermonuclear due to the high temperatures needed to bring it about. In 1944, Enrico Fermi calculated the reaction would be self-sustaining at about 50,000,000 K; at that temperature, the rate that energy is given off by the reactions is high enough that they heat the surrounding fuel rapidly enough to maintain the temperature against losses to the environment, continuing the reaction.

During the Manhattan Project, the first practical way to reach these temperatures was created, using an atomic bomb. In 1944, Fermi gave a talk on the physics of fusion in the context of a then-hypothetical hydrogen bomb. However, some thought had already been given to a controlled fusion device, and James L. Tuck and Stanislaw Ulam had attempted such using shaped charges driving a metal foil infused with deuterium, although without success.

The first attempts to build a practical fusion machine took place in the United Kingdom, where George Paget Thomson had selected the pinch effect as a promising technique in 1945. After several failed attempts to gain funding, he gave up and asked two graduate students, Stanley (Stan) W. Cousins and Alan Alfred Ware (1924–2010), to build a device out of surplus radar equipment. This was successfully operated in 1948, but showed no clear evidence of fusion and failed to gain the interest of the Atomic Energy Research Establishment.

Lavrentiev's letter

In 1950, Oleg Lavrentiev, then a Red Army sergeant stationed on Sakhalin, wrote a letter to the Central Committee of the Communist Party of the Soviet Union. The letter outlined the idea of using an atomic bomb to ignite a fusion fuel, and then went on to describe a system that used electrostatic fields to contain a hot plasma in a steady state for energy production.

The letter was sent to Andrei Sakharov for comment. Sakharov noted that "the author formulates a very important and not necessarily hopeless problem". His main concern with the arrangement was that the plasma would hit the electrode wires, and that the design called for "wide meshes and a thin current-carrying part which will have to reflect almost all incident nuclei back into the reactor. In all likelihood, this requirement is incompatible with the mechanical strength of the device."

Some indication of the importance given to Lavrentiev's letter can be seen in the speed with which it was processed. The letter was received by the Central Committee on 29 July, and Sakharov sent in his review on 18 August. By October, Sakharov and Igor Tamm had completed the first detailed study of a fusion reactor, and in January 1951 they asked for funding to build it.

Magnetic confinement

When heated to fusion temperatures, the electrons in atoms dissociate, resulting in a fluid of nuclei and electrons known as plasma. Unlike electrically neutral atoms, a plasma is electrically conductive, and can, therefore, be manipulated by electrical or magnetic fields.

Sakharov's concern about the electrodes led him to consider using magnetic confinement instead of electrostatic. In the case of a magnetic field, the particles will circle around the lines of force. As the particles are moving at high speed, their resulting paths look like a helix. If one arranges a magnetic field so lines of force are parallel and close together, the particles orbiting adjacent lines may collide, and fuse.

Such a field can be created in a solenoid, a cylinder with magnets wrapped around the outside. The combined fields of the magnets create a set of parallel magnetic lines running down the length of the cylinder. This arrangement prevents the particles from moving sideways to the wall of the cylinder, but it does not prevent them from running out the end. The obvious solution to this problem is to bend the cylinder around into a donut shape, or torus, so that the lines form a series of continual rings. In this arrangement, the particles circle endlessly.

Sakharov discussed the concept with Igor Tamm, and by the end of October 1950 the two had written a proposal and sent it to Igor Kurchatov, the director of the atomic bomb project within the USSR, and his deputy, Igor Golovin. However, this initial proposal ignored a fundamental problem; when arranged along a straight solenoid, the external magnets are evenly spaced, but when bent around into a torus, they are closer together on the inside of the ring than the outside. This leads to uneven forces that cause the particles to drift away from their magnetic lines.

During visits to the Laboratory of Measuring Instruments of the USSR Academy of Sciences (LIPAN), the Soviet nuclear research centre, Sakharov suggested two possible solutions to this problem. One was to suspend a current-carrying ring in the centre of the torus. The current in the ring would produce a magnetic field that would mix with the one from the magnets on the outside. The resulting field would be twisted into a helix, so that any given particle would find itself repeatedly on the outside, then inside, of the torus. The drifts caused by the uneven fields are in opposite directions on the inside and outside, so over the course of multiple orbits around the long axis of the torus, the opposite drifts would cancel out. Alternatively, he suggested using an external magnet to induce a current in the plasma itself, instead of a separate metal ring, which would have the same effect.

In January 1951, Kurchatov arranged a meeting at LIPAN to consider Sakharov's concepts. They found widespread interest and support, and in February a report on the topic was forwarded to Lavrentiy Beria, who oversaw the atomic efforts in the USSR. For a time, nothing was heard back.

Richter and the birth of fusion research

On 25 March 1951, Argentine President Juan Perón announced that a former German scientist, Ronald Richter, had succeeded in producing fusion at a laboratory scale as part of what is now known as the Huemul Project. Scientists around the world were excited by the announcement, but soon concluded it was not true; simple calculations showed that his experimental setup could not produce enough energy to heat the fusion fuel to the needed temperatures.

Although dismissed by nuclear researchers, the widespread news coverage meant politicians were suddenly aware of, and receptive to, fusion research. In the UK, Thomson was suddenly granted considerable funding. Over the next months, two projects based on the pinch system were up and running. In the US, Lyman Spitzer read the Huemul story, realized it was false, and set about designing a machine that would work. In May he was awarded $50,000 to begin research on his stellarator concept. Jim Tuck had returned to the UK briefly and saw Thomson's pinch machines. When he returned to Los Alamos he also received $50,000 directly from the Los Alamos budget.

Similar events occurred in the USSR. In mid-April, Dmitri Efremov of the Scientific Research Institute of Electrophysical Apparatus stormed into Kurchatov's study with a magazine containing a story about Richter's work, demanding to know why they were beaten by the Argentines. Kurchatov immediately contacted Beria with a proposal to set up a separate fusion research laboratory with Lev Artsimovich as director. Only days later, on 5 May, the proposal had been signed by Joseph Stalin.

New ideas

By October, Sakharov and Tamm had completed a much more detailed consideration of their original proposal, calling for a device with a major radius (of the torus as a whole) of 12 metres (39 ft) and a minor radius (the interior of the cylinder) of 2 metres (6 ft 7 in). The proposal suggested the system could produce 100 grams (3.5 oz) of tritium a day, or breed 10 kilograms (22 lb) of U233 a day.

As the idea was further developed, it was realized that a current in the plasma could create a field that was strong enough to confine the plasma as well, removing the need for the external coils. At this point, the Soviet researchers had re-invented the pinch system being developed in the UK, although they had come to this design from a very different starting point.

Once the idea of using the pinch effect for confinement had been proposed, a much simpler solution became evident. Instead of a large toroid, one could simply induce the current into a linear tube, which could cause the plasma within to collapse down into a filament. This had a huge advantage; the current in the plasma would heat it through normal resistive heating, but this would not heat the plasma to fusion temperatures. However, as the plasma collapsed, the adiabatic process would result in the temperature rising dramatically, more than enough for fusion. With this development, only Golovin and Natan Yavlinsky continued considering the more static toroidal arrangement.

Instability

On 4 July 1952, Nikolai Filippov's group measured neutrons being released from a linear pinch machine. Lev Artsimovich demanded that they check everything before concluding fusion had occurred, and during these checks, they found that the neutrons were not from fusion at all. This same linear arrangement had also occurred to researchers in the UK and US, and their machines showed the same behaviour. But the great secrecy surrounding the type of research meant that none of the groups were aware that others were also working on it, let alone having the identical problem.

After much study, it was found that some of the released neutrons were produced by instabilities in the plasma. There were two common types of instability: the sausage, seen primarily in linear machines, and the kink, most common in toroidal machines. Groups in all three countries began studying the formation of these instabilities and potential ways to address them. Important contributions to the field were made by Martin David Kruskal and Martin Schwarzschild in the US, and Vitaly Shafranov in the USSR.

One idea that came from these studies became known as the "stabilized pinch". This concept added coils to the outside of the chamber, which created a magnetic field that would be present in the plasma before the pinch discharge. In most concepts, the externally induced field was relatively weak, and because a plasma is diamagnetic, it penetrated only the outer areas of the plasma. When the pinch discharge occurred and the plasma quickly contracted, this field became "frozen in" to the resulting filament, creating a strong field in its outer layers. In the US, this was known as "giving the plasma a backbone".

Sakharov revisited his original toroidal concepts and came to a slightly different conclusion about how to stabilize the plasma. The layout would be the same as the stabilized pinch concept, but the role of the two fields would be reversed. Instead of weak externally induced magnetic fields providing stabilization and a strong pinch current responsible for confinement, in the new layout, the external field would be much more powerful in order to provide the majority of confinement, while the current would be much smaller and responsible for the stabilizing effect.

Steps toward declassification

In 1955, with the linear approaches still subject to instability, the first toroidal device was built in the USSR. TMP was a classic pinch machine, similar to models in the UK and US of the same era. The vacuum chamber was made of ceramic, and the spectra of the discharges showed silica, meaning the plasma was not perfectly confined by the magnetic field and was hitting the walls of the chamber. Two smaller machines followed, using copper shells. The conductive shells were intended to help stabilize the plasma, but were not completely successful in any of the machines that tried them.

With progress apparently stalled, in 1955, Kurchatov called an All-Union conference of Soviet researchers with the ultimate aim of opening up fusion research within the USSR. In April 1956, Kurchatov travelled to the UK as part of a widely publicized visit by Nikita Khrushchev and Nikolai Bulganin. He offered to give a talk at the Atomic Energy Research Establishment, at the former RAF Harwell, where he shocked the hosts by presenting a detailed historical overview of the Soviet fusion efforts. He took time to note, in particular, the neutrons seen in early machines and warned that neutrons did not mean fusion.

Unknown to Kurchatov, the British ZETA stabilized pinch machine was being built at the far end of the former runway. ZETA was, by far, the largest and most powerful fusion machine to date. Supported by experiments on earlier designs that had been modified to include stabilization, ZETA was intended to produce low levels of fusion reactions. This was apparently a great success, and in January 1958, the team announced that fusion had been achieved in ZETA, based on the release of neutrons and measurements of the plasma temperature.

Vitaly Shafranov and Stanislav Braginskii examined the news reports and attempted to figure out how it worked. One possibility they considered was the use of weak "frozen in" fields, but rejected this, believing the fields would not last long enough. They then concluded ZETA was essentially identical to the devices they had been studying, with strong external fields.

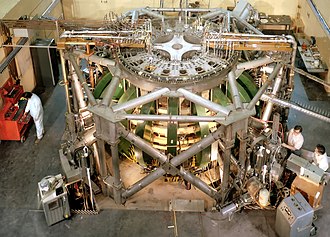

First tokamaks

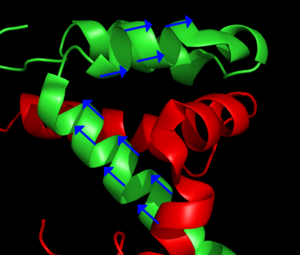

By this time, Soviet researchers had decided to build a larger toroidal machine along the lines suggested by Sakharov. In particular, their design considered one important point found in Kruskal's and Shafranov's works; if the helical path of the particles made them circulate around the plasma's circumference more rapidly than they circulated the long axis of the torus, the kink instability would be strongly suppressed.

(To be clear, electrical current in coils wrapping around the torus produces a toroidal magnetic field inside the torus; a pulsed magnetic field through the hole in the torus induces the axial current in the torus, which has a poloidal magnetic field surrounding it; there may also be rings of current above and below the torus that create an additional poloidal magnetic field. The combined magnetic fields form a helical magnetic structure inside the torus.)

Today this basic concept is known as the safety factor. The ratio of the number of times the particle orbits the major axis compared to the minor axis is denoted q, and the Kruskal–Shafranov limit states that the kink will be suppressed as long as q > 1. This path is controlled by the relative strengths of the externally induced magnetic field compared to the field created by the internal current. To have q > 1, the external magnets must be much more powerful, or alternatively, the internal current has to be reduced.
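The dependence of q on the external field and the plasma current can be illustrated with a short calculation. This is a minimal sketch, assuming the standard large-aspect-ratio, circular-cross-section approximation q ≈ r·B_t / (R·B_p), with the poloidal field at the plasma edge taken from Ampère's law, B_p = μ0·I / (2πr). The approximation, the function names and the numerical values are illustrative assumptions and are not parameters of T-1 or any other machine described here.

```python
import math

MU_0 = 4e-7 * math.pi  # vacuum permeability in T*m/A


def poloidal_field(plasma_current_a, minor_radius_m):
    """Edge poloidal field of a straight current channel (Ampere's law)."""
    return MU_0 * plasma_current_a / (2 * math.pi * minor_radius_m)


def safety_factor(toroidal_field_t, plasma_current_a, major_radius_m, minor_radius_m):
    """Edge safety factor in the large-aspect-ratio, circular approximation."""
    b_p = poloidal_field(plasma_current_a, minor_radius_m)
    return (minor_radius_m * toroidal_field_t) / (major_radius_m * b_p)


# Hypothetical values chosen only to show the scaling.
q_base = safety_factor(2.5, 100e3, 1.0, 0.12)
q_stronger_field = safety_factor(5.0, 100e3, 1.0, 0.12)   # doubling B_t doubles q
q_higher_current = safety_factor(2.5, 200e3, 1.0, 0.12)   # doubling I halves q
print(q_base, q_stronger_field, q_higher_current)
```

In this approximation q grows linearly with the toroidal field and falls inversely with the plasma current, which matches the two routes to q > 1 named above: stronger external magnets or a reduced internal current.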

Following this criterion, design began on a new reactor, T-1, which today is known as the first real tokamak. T-1 used both stronger external magnetic fields and a reduced current compared to stabilized pinch machines like ZETA. The success of the T-1 resulted in its recognition as the first working tokamak. For his work on "powerful impulse discharges in a gas, to obtain unusually high temperatures needed for thermonuclear processes", Yavlinsky was awarded the Lenin Prize and the Stalin Prize in 1958. Yavlinsky was already preparing the design of an even larger model, later built as T-3. With the apparently successful ZETA announcement, Yavlinsky's concept was viewed very favourably.

Details of ZETA became public in a series of articles in Nature later in January. To Shafranov's surprise, the system did use the "frozen in" field concept. He remained sceptical, but a team at the Ioffe Institute in St. Petersburg began plans to build a similar machine known as Alpha. Only a few months later, in May, the ZETA team issued a release stating they had not achieved fusion, and that they had been misled by erroneous measurements of the plasma temperature.

T-1 began operation at the end of 1958. It demonstrated very high energy losses through radiation. This was traced to impurities in the plasma due to the vacuum system causing outgassing from the container materials. In order to explore solutions to this problem, another small device was constructed, T-2. This used an internal liner of corrugated metal that was baked at 550 °C (1,022 °F) to cook off trapped gases.

Atoms for Peace and the doldrums

As part of the second Atoms for Peace meeting in Geneva in September 1958, the Soviet delegation released many papers covering their fusion research. Among them was a set of initial results on their toroidal machines, which at that point had shown nothing of note.

The "star" of the show was a large model of Spitzer's stellarator, which immediately caught the attention of the Soviets. In contrast to their designs, the stellarator produced the required twisted paths in the plasma without driving a current through it, using a series of external coils (producing internal magnetic fields) that could operate in the steady state rather than the pulses of the induction system that produced the axial current. Kurchatov began asking Yavlinsky to change their T-3 design to a stellarator, but the team convinced him that the current provided a useful second role in heating, something the stellarator lacked.

At the time of the show, the stellarator had suffered a long string of minor problems that were just being solved. Solving these revealed that the diffusion rate of the plasma was much faster than theory predicted. Similar problems were seen in all the contemporary designs, for one reason or another. The stellarator, various pinch concepts and the magnetic mirror machines in both the US and USSR all demonstrated problems that limited their confinement times.

From the first studies of controlled fusion, there was a problem lurking in the background. During the Manhattan Project, David Bohm had been part of the team working on isotopic separation of uranium. In the post-war era he continued working with plasmas in magnetic fields. Using basic theory, one would expect the plasma to diffuse across the lines of force at a rate inversely proportional to the square of the strength of the field, meaning that small increases in field strength would greatly improve confinement. But based on their experiments, Bohm developed an empirical formula, now known as Bohm diffusion, that suggested the rate was inversely proportional to the field strength itself, not its square.
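The difference between the two scalings can be made concrete with a few lines of arithmetic. This is a minimal sketch, assuming the commonly quoted form of the Bohm coefficient, D_B = kT / (16·e·B), and treating classical diffusion only as a relative 1/B² scaling against a reference field; the function names and the sample temperature and field values are illustrative assumptions, not figures from the experiments described here.

```python
def bohm_diffusion(temperature_ev, field_t):
    """Bohm diffusion coefficient in m^2/s.

    With the temperature given in eV, kT/e is numerically equal to the
    temperature in volts, so D_B = T[eV] / (16 * B[T]).
    """
    return temperature_ev / (16.0 * field_t)


def classical_relative_rate(field_t, reference_field_t=1.0):
    """Classical cross-field diffusion relative to its value at a reference field."""
    return (reference_field_t / field_t) ** 2


# Hypothetical 100 eV plasma: compare a 1 T field with a 2 T field.
bohm_ratio = bohm_diffusion(100.0, 2.0) / bohm_diffusion(100.0, 1.0)
classical_ratio = classical_relative_rate(2.0)
print(bohm_ratio, classical_ratio)
```

Doubling the field halves the Bohm loss rate but quarters the classical one, which is why a machine that obeyed Bohm scaling would need far stronger magnets for the same confinement.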

If Bohm's formula was correct, there was no hope one could build a fusion reactor based on magnetic confinement. To confine the plasma at the temperatures needed for fusion, the magnetic field would have to be orders of magnitude greater than any known magnet. Spitzer ascribed the difference between the Bohm and classical diffusion rates to turbulence in the plasma, and believed the steady fields of the stellarator would not suffer from this problem. Various experiments at that time suggested the Bohm rate did not apply, and that the classical formula was correct.

But by the early 1960s, with all of the various designs leaking plasma at a prodigious rate, Spitzer himself concluded that the Bohm scaling was an inherent quality of plasmas, and that magnetic confinement would not work. The entire field descended into what became known as "the doldrums", a period of intense pessimism.

Progress in the 1960s

In contrast to the other designs, the experimental tokamaks appeared to be progressing well, so well that a minor theoretical problem was now a real concern. In the presence of gravity, there is a small pressure gradient in the plasma, formerly small enough to ignore but now becoming something that had to be addressed. This led to the addition of yet another set of coils in 1962, which produced a vertical magnetic field that offset these effects. These were a success, and by the mid-1960s the machines began to show signs that they were beating the Bohm limit.

At the 1965 Second International Atomic Energy Agency Conference on fusion at the UK's newly opened Culham Centre for Fusion Energy, Artsimovich reported that their systems were surpassing the Bohm limit by 10 times. Spitzer, reviewing the presentations, suggested that the Bohm limit may still apply; the results were within the range of experimental error of results seen on the stellarators, and the temperature measurements, based on the magnetic fields, were simply not trustworthy.

The next major international fusion meeting was held in August 1968 in Novosibirsk. By this time two additional tokamak designs had been completed, TM-2 in 1965, and T-4 in 1968. Results from T-3 had continued to improve, and similar results were coming from early tests of the new reactors. At the meeting, the Soviet delegation announced that T-3 was producing electron temperatures of 1000 eV (equivalent to 10 million degrees Celsius) and that confinement time was at least 50 times the Bohm limit.
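Plasma temperatures in this article are quoted both in electronvolts and in degrees, so a conversion helper may be useful. This is a minimal sketch using the exact SI values of the elementary charge and the Boltzmann constant; the function names are illustrative.

```python
ELEMENTARY_CHARGE = 1.602176634e-19  # coulombs (exact SI value)
BOLTZMANN = 1.380649e-23             # joules per kelvin (exact SI value)


def ev_to_kelvin(energy_ev):
    """Temperature in kelvin corresponding to kT = energy_ev electronvolts."""
    return energy_ev * ELEMENTARY_CHARGE / BOLTZMANN


def kelvin_to_ev(temperature_k):
    """Thermal energy kT in electronvolts for a temperature in kelvin."""
    return temperature_k * BOLTZMANN / ELEMENTARY_CHARGE


print(ev_to_kelvin(1000.0))   # the T-3 electron temperature quoted above
print(kelvin_to_ev(50e6))     # Fermi's 50,000,000 K estimate expressed in eV
```

One electronvolt corresponds to roughly 11,600 K, so 1000 eV is about 11.6 million kelvin, consistent with the rounded figure of 10 million degrees given above.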

These results were at least 10 times those of any other machine. If correct, they represented an enormous leap for the fusion community. Spitzer remained sceptical, noting that the temperature measurements were still based on the indirect calculations from the magnetic properties of the plasma. Many concluded they were due to an effect known as runaway electrons, and that the Soviets were measuring only those extremely energetic electrons and not the bulk temperature. The Soviets countered with several arguments suggesting the temperature they were measuring was Maxwellian, and the debate raged.

Culham Five

In the aftermath of ZETA, the UK teams began the development of new plasma diagnostic tools to provide more accurate measurements. Among these was the use of a laser to directly measure the temperature of the bulk electrons using Thomson scattering. This technique was well known and respected in the fusion community; Artsimovich had publicly called it "brilliant". Artsimovich invited Bas Pease, the head of Culham, to use their devices on the Soviet reactors. At the height of the Cold War, in what is still considered a major political manoeuvre on Artsimovich's part, British physicists were allowed to visit the Kurchatov Institute, the heart of the Soviet nuclear bomb effort.

The British team, nicknamed "The Culham Five", arrived late in 1968. After a lengthy installation and calibration process, the team measured the temperatures over a period of many experimental runs. Initial results were available by August 1969; the Soviets were correct, their results were accurate. The team phoned the results home to Culham, who then passed them along in a confidential phone call to Washington. The final results were published in Nature in November 1969. The results of this announcement have been described as a "veritable stampede" of tokamak construction around the world.

One serious problem remained. Because the electrical current in the plasma was much lower and produced much less compression than in a pinch machine, the temperature of the plasma was limited by the resistive heating rate of the current. First proposed in 1950, Spitzer resistivity stated that the electrical resistance of a plasma was reduced as the temperature increased, meaning the heating rate of the plasma would slow as the devices improved and temperatures were pressed higher. Calculations demonstrated that the resulting maximum temperatures while staying within q > 1 would be limited to the low millions of degrees. Artsimovich had been quick to point this out in Novosibirsk, stating that future progress would require new heating methods to be developed.
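The way resistive heating weakens with temperature can be sketched numerically. This is a minimal sketch, assuming the standard result that Spitzer resistivity scales as the electron temperature to the power −3/2, and that the resistive heating power density at a fixed current density j is η·j². Only relative values are computed; the function names and sample temperatures are illustrative assumptions.

```python
def relative_resistivity(temperature_ev, reference_temperature_ev):
    """Spitzer resistivity relative to its value at a reference temperature."""
    return (temperature_ev / reference_temperature_ev) ** -1.5


def relative_ohmic_heating(temperature_ev, reference_temperature_ev):
    """Resistive heating power density (eta * j^2) at fixed current density,
    relative to its value at the reference temperature."""
    return relative_resistivity(temperature_ev, reference_temperature_ev)


# Hypothetical plasma heated from 100 eV to 400 eV and then to 1000 eV.
for t_ev in (100.0, 400.0, 1000.0):
    print(t_ev, relative_ohmic_heating(t_ev, 100.0))
```

A fourfold rise in temperature cuts the heating power at fixed current to one eighth, and a tenfold rise cuts it to about 3%, which illustrates why resistive heating alone stalls well short of fusion temperatures.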

US turmoil

One of the people attending the Novosibirsk meeting in 1968 was Amasa Stone Bishop, one of the leaders of the US fusion program. One of the few other devices to show clear evidence of beating the Bohm limit at that time was the multipole concept. Both Lawrence Livermore and the Princeton Plasma Physics Laboratory (PPPL), home of Spitzer's stellarator, were building variations on the multipole design. While these were moderately successful on their own, T-3 greatly outperformed either machine. Bishop was concerned that the multipoles were redundant and thought the US should consider a tokamak of its own.

When he raised the issue at a December 1968 meeting, directors of the labs refused to consider it. Melvin B. Gottlieb of Princeton was exasperated, asking "Do you think that this committee can out-think the scientists?" With the major labs demanding they control their own research, one lab found itself left out. Oak Ridge had originally entered the fusion field with studies for reactor fueling systems, but branched out into a mirror program of their own. By the mid-1960s, their DCX designs were running out of ideas, offering nothing that the similar program at the more prestigious and politically powerful Livermore did not. This made them highly receptive to new concepts.

After a considerable internal debate, Herman Postma formed a small group in early 1969 to consider the tokamak. They came up with a new design, later christened Ormak, that had several novel features. Primary among them was the way the external field was created in a single large copper block, fed power from a large transformer below the torus. This was as opposed to traditional designs that used electric current windings on the outside. They felt the single block would produce a much more uniform field. It would also have the advantage of allowing the torus to have a smaller major radius, lacking the need to route cables through the donut hole, leading to a lower aspect ratio, which the Soviets had already suggested would produce better results.

Tokamak race in the US

In early 1969, Artsimovich visited MIT, where he was hounded by those interested in fusion. He finally agreed to give several lectures in April and then allowed lengthy question-and-answer sessions. As these went on, MIT itself grew interested in the tokamak, having previously stayed out of the fusion field for a variety of reasons. Bruno Coppi was at MIT at the time, and following the same concepts as Postma's team, came up with his own low-aspect-ratio concept, Alcator. Instead of Ormak's toroidal transformer, Alcator used traditional ring-shaped magnetic field coils but required them to be much smaller than existing designs. MIT's Francis Bitter Magnet Laboratory was the world leader in magnet design and they were confident they could build them.

During 1969, two additional groups entered the field. At General Atomics, Tihiro Ohkawa had been developing multipole reactors, and submitted a concept based on these ideas. This was a tokamak that would have a non-circular plasma cross-section; the same math that suggested a lower aspect-ratio would improve performance also suggested that a C or D-shaped plasma would do the same. He called the new design Doublet. Meanwhile, a group at University of Texas at Austin was proposing a relatively simple tokamak to explore heating the plasma through deliberately induced turbulence, the Texas Turbulent Tokamak.

When the members of the Atomic Energy Commission's Fusion Steering Committee met again in June 1969, they had "tokamak proposals coming out of our ears". The only major lab working on a toroidal design that was not proposing a tokamak was Princeton, who refused to consider it in spite of their Model C stellarator being just about perfect for such a conversion. They continued to offer a long list of reasons why the Model C should not be converted. When these were questioned, a furious debate broke out about whether the Soviet results were reliable.

Watching the debate take place, Gottlieb had a change of heart. There was no point moving forward with the tokamak if the Soviet electron temperature measurements were not accurate, so he formulated a plan to either prove or disprove their results. While swimming in the pool during the lunch break, he told Harold Furth his plan, to which Furth replied: "well, maybe you're right." After lunch, the various teams presented their designs, at which point Gottlieb presented his idea for a "stellarator-tokamak" based on the Model C.

The Standing Committee noted that this system could be complete in six months, while Ormak would take a year. It was only a short time later that the confidential results from the Culham Five were released. When they met again in October, the Standing Committee released funding for all of these proposals. The Model C's new configuration, soon named the Symmetric Tokamak, was intended simply to verify the Soviet results, while the others would explore ways to go well beyond T-3.

Heating: US takes the lead

Experiments on the Symmetric Tokamak began in May 1970, and by early the next year they had confirmed the Soviet results and then surpassed them. The stellarator was abandoned, and PPPL turned its considerable expertise to the problem of heating the plasma. Two concepts seemed to hold promise. PPPL proposed using magnetic compression, a pinch-like technique to compress a warm plasma to raise its temperature, but providing that compression through magnets rather than current. Oak Ridge suggested neutral beam injection, small particle accelerators that would shoot fuel atoms through the surrounding magnetic field where they would collide with the plasma and heat it.

PPPL's Adiabatic Toroidal Compressor (ATC) began operation in May 1972, followed shortly thereafter by a neutral-beam equipped Ormak. Both demonstrated significant problems, but PPPL leapt past Oak Ridge by fitting beam injectors to ATC and provided clear evidence of successful heating in 1973. This success "scooped" Oak Ridge, who fell from favour within the Washington Steering Committee.

By this time a much larger design based on beam heating was under construction, the Princeton Large Torus, or PLT. PLT was designed specifically to "give a clear indication whether the tokamak concept plus auxiliary heating can form a basis for a future fusion reactor". PLT was an enormous success, continually raising its internal temperature until it hit 60 million Celsius (8,000 eV, eight times T-3's record) in 1978. This was a key point in the development of the tokamak: fusion reactions become self-sustaining at temperatures between 50 and 100 million Celsius, and PLT demonstrated that this was technically achievable.

These experiments, especially PLT, put the US far in the lead in tokamak research. This was due largely to budget; a tokamak cost about $500,000 and the US annual fusion budget was around $25 million at that time. The US labs could afford to explore all of the promising methods of heating, ultimately discovering neutral beams to be among the most effective.

During this period, Robert Hirsch took over the Directorate of fusion development in the U.S. Atomic Energy Commission. Hirsch felt that the program could not be sustained at its current funding levels without demonstrating tangible results. He began to reformulate the entire program. What had once been a lab-led effort of mostly scientific exploration was now a Washington-led effort to build a working power-producing reactor. This was given a boost by the 1973 oil crisis, which led to greatly increased research into alternative energy systems.

1980s: great hope, great disappointment

By the late 1970s, tokamaks had reached all the conditions needed for a practical fusion reactor: in 1978 PLT had demonstrated ignition temperatures, the next year the Soviet T-7 successfully used superconducting magnets for the first time, and Doublet proved to be a success, leading almost all future designs to adopt its "shaped plasma" approach. It appeared that all that was needed to build a power-producing reactor was to put all of these design concepts into a single machine, one that would be capable of running with the radioactive tritium in its fuel mix.

The race was on. During the 1970s, four major second-generation proposals were funded worldwide. The Soviets continued their development lineage with the T-15, while a pan-European effort was developing the Joint European Torus (JET) and Japan began the JT-60 effort (originally known as the "Breakeven Plasma Test Facility"). In the US, Hirsch began formulating plans for a similar design, skipping over proposals for another stepping-stone design directly to a tritium-burning one. This emerged as the Tokamak Fusion Test Reactor (TFTR), run directly from Washington and not linked to any specific lab. Originally favouring Oak Ridge as the host, Hirsch moved it to PPPL after others convinced him they would work the hardest on it because they had the most to lose.

The excitement was so widespread that several ventures to produce commercial tokamaks began around this time. In the best known of these, Bob Guccione, publisher of Penthouse Magazine, met Robert Bussard in 1978 and became the world's biggest and most committed private investor in fusion technology, ultimately putting $20 million of his own money into Bussard's Compact Tokamak. Funding by the Riggs Bank led to this effort being known as the Riggatron.

TFTR won the construction race and began operation in 1982, followed shortly by JET in 1983 and JT-60 in 1985. JET quickly took the lead in critical experiments, moving from test gases to deuterium and increasingly powerful "shots". But it soon became clear that none of the new systems were working as expected. A host of new instabilities appeared, along with a number of more practical problems that continued to interfere with their performance. On top of this, dangerous "excursions" of the plasma hitting the walls of the reactor were evident in both TFTR and JET. Even when the machines were working perfectly, plasma confinement at fusion temperatures, the so-called "fusion triple product", continued to be far below what would be needed for a practical reactor design.

Through the mid-1980s the reasons for many of these problems became clear, and various solutions were offered. However, these would significantly increase the size and complexity of the machines. A follow-on design incorporating these changes would be both enormous and vastly more expensive than either JET or TFTR. A new period of pessimism descended on the fusion field.

ITER

At the same time these experiments were demonstrating problems, much of the impetus for the US's massive funding disappeared; in 1986 Ronald Reagan declared the 1970s energy crisis was over, and funding for advanced energy sources had been slashed in the early 1980s.

Some thought of an international reactor design had been ongoing since June 1973 under the name INTOR, for INternational TOkamak Reactor. This was originally started through an agreement between Richard Nixon and Leonid Brezhnev, but had been moving slowly since its first real meeting on 23 November 1978.

During the Geneva Summit in November 1985, Reagan raised the issue with Mikhail Gorbachev and proposed reforming the organization. "... The two leaders emphasized the potential importance of the work aimed at utilizing controlled thermonuclear fusion for peaceful purposes and, in this connection, advocated the widest practicable development of international cooperation in obtaining this source of energy, which is essentially inexhaustible, for the benefit for all mankind."

The next year, an agreement was signed between the US, Soviet Union, European Union and Japan, creating the International Thermonuclear Experimental Reactor organization.

Design work began in 1988, and since that time the ITER reactor has been the primary tokamak design effort worldwide.

High-field tokamaks

It has been known for a long time that stronger field magnets would enable high energy gain in a much smaller tokamak, with concepts such as FIRE, IGNITOR, and the Compact Ignition Tokamak (CIT) being proposed decades ago.

The commercial availability of high temperature superconductors (HTS) in the 2010s opened a promising pathway to building the higher field magnets required to achieve ITER-like levels of energy gain in a compact device. To leverage this new technology, the MIT Plasma Science and Fusion Center (PSFC) and MIT spinout Commonwealth Fusion Systems (CFS) successfully built and tested the Toroidal Field Model Coil (TFMC) in 2021, demonstrating the 20 tesla magnetic field needed to build SPARC, a device designed to achieve a fusion gain similar to ITER's but with only ~1/40th of ITER's plasma volume.

British startup Tokamak Energy is also planning on building a net-energy tokamak using HTS magnets, but with the spherical tokamak variant.

Design

Basic problem

Positively charged ions and negatively charged electrons in a fusion plasma are at very high temperatures, and have correspondingly large velocities. In order to maintain the fusion process, particles from the hot plasma must be confined in the central region, or the plasma will rapidly cool. Magnetic confinement fusion devices exploit the fact that charged particles in a magnetic field experience a Lorentz force and follow helical paths along the field lines.
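The size of these helical orbits can be estimated with a short calculation. The sketch below assumes the standard non-relativistic gyroradius formula r = m·v⊥ / (|q|·B) and a rough thermal speed √(2kT/m); the 10 keV temperature, the 5 T field, and the approximation of the deuteron mass as twice the proton mass are illustrative assumptions, not values from the text.

```python
import math

ELEMENTARY_CHARGE = 1.602176634e-19  # coulombs
PROTON_MASS = 1.67262192e-27         # kilograms
ELECTRON_MASS = 9.1093837e-31        # kilograms


def gyroradius(mass_kg, charge_c, v_perp_m_s, b_tesla):
    """Radius of the circular motion about a field line: r = m*v_perp / (|q|*B)."""
    return mass_kg * v_perp_m_s / (abs(charge_c) * b_tesla)


def thermal_speed(mass_kg, temperature_ev):
    """Rough thermal speed sqrt(2*kT/m), with kT expressed in electronvolts."""
    return math.sqrt(2.0 * temperature_ev * ELEMENTARY_CHARGE / mass_kg)


if __name__ == "__main__":
    # Assumption: deuteron mass approximated as two proton masses.
    deuteron_mass = 2.0 * PROTON_MASS
    for name, mass in (("electron", ELECTRON_MASS), ("deuteron", deuteron_mass)):
        speed = thermal_speed(mass, 10_000.0)                     # 10 keV, illustrative
        radius = gyroradius(mass, ELEMENTARY_CHARGE, speed, 5.0)  # 5 T, illustrative
        print(f"{name}: gyroradius of roughly {radius * 1000:.3f} mm")
```

Under these assumptions both radii come out far smaller than a metre-scale plasma, which is why a strong field can hold particles close to a given field line.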

The simplest magnetic confinement system is a solenoid. A plasma in a solenoid will spiral about the lines of field running down its center, preventing motion towards the sides. However, this does not prevent motion towards the ends. The obvious solution is to bend the solenoid around into a circle, forming a torus. However, it was demonstrated that such an arrangement is not uniform; for purely geometric reasons, the field on the outside edge of the torus is lower than on the inside edge. This asymmetry causes the electrons and ions to drift across the field, and eventually hit the walls of the torus.
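The geometric non-uniformity can be made concrete. For an ideal toroidal solenoid, Ampère's law gives a field that falls off as 1/R with distance R from the central axis. The sketch below assumes that idealization; the function name and the machine dimensions are hypothetical.

```python
def toroidal_field(b0_tesla, r0_m, r_m):
    """Field of an ideal toroidal solenoid at major radius r_m.

    b0_tesla is the field on the reference radius r0_m (the centre of the
    tube); the field elsewhere follows B(R) = B0 * R0 / R.
    """
    return b0_tesla * r0_m / r_m


if __name__ == "__main__":
    # Hypothetical machine: tube centred at R0 = 3 m, tube radius 1 m, 5 T on axis.
    b_inside = toroidal_field(5.0, 3.0, 2.0)   # inner edge, R = 2 m
    b_outside = toroidal_field(5.0, 3.0, 4.0)  # outer edge, R = 4 m
    print(f"inside edge: {b_inside} T, outside edge: {b_outside} T")
```

In this example the field at the inner edge is twice that at the outer edge, and it is this gradient that drives the drift.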

The solution is to shape the lines so they do not simply run around the torus, but twist around like the stripes on a barber pole or candy cane. In such a field any single particle will find itself at the outside edge where it will drift one way, say up, and then as it follows its magnetic line around the torus it will find itself on the inside edge, where it will drift the other way. This cancellation is not perfect, but calculations showed it was enough to allow the fuel to remain in the reactor for a useful time.

Tokamak solution

The first two solutions to making a design with the required twist were the stellarator, which did so through a mechanical arrangement, twisting the entire torus, and the z-pinch design, which ran an electrical current through the plasma to create a second magnetic field to the same end. Both demonstrated improved confinement times compared to a simple torus, but both also demonstrated a variety of effects that caused the plasma to be lost from the reactors at rates that were not sustainable.

The tokamak is essentially identical to the z-pinch concept in its physical layout. Its key innovation was the realization that the instabilities that were causing the pinch to lose its plasma could be controlled. The issue was how "twisty" the fields were; fields that caused the particles to transit inside and out more than once per orbit around the long axis of the torus were much more stable than devices that had less twist. This ratio of twists to orbits became known as the safety factor, denoted q. Previous devices operated at q about 1⁄3, while the tokamak operates at q ≫ 1. This increases stability by orders of magnitude.
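As a rough illustration, the safety factor at the plasma edge can be estimated with the common large-aspect-ratio, circular-cross-section approximation q ≈ r·B_t / (R·B_p), where the poloidal field B_p is produced by the plasma current. This approximation and every number below are assumptions made for the sketch; they are not taken from the text.

```python
import math

MU_0 = 4.0e-7 * math.pi  # vacuum permeability in T*m/A (classical value)


def poloidal_field_at_edge(plasma_current_a, minor_radius_m):
    """Field of the plasma current at the plasma edge: B_p = mu0 * I / (2*pi*a)."""
    return MU_0 * plasma_current_a / (2.0 * math.pi * minor_radius_m)


def safety_factor(minor_radius_m, major_radius_m, b_toroidal_t, b_poloidal_t):
    """Large-aspect-ratio estimate q = r * B_t / (R * B_p)."""
    return minor_radius_m * b_toroidal_t / (major_radius_m * b_poloidal_t)


if __name__ == "__main__":
    # Hypothetical machine: a = 1 m, R = 3 m, toroidal field 5 T.
    for current_ma in (1.0, 3.0, 10.0):
        b_p = poloidal_field_at_edge(current_ma * 1.0e6, 1.0)
        q = safety_factor(1.0, 3.0, 5.0, b_p)
        print(f"I = {current_ma:4.1f} MA -> B_p = {b_p:.2f} T, edge q = {q:.2f}")
```

In this approximation a stronger toroidal field or a smaller plasma current raises q, which is one way to see why the toroidal field of a tokamak is much stronger than the field generated by the plasma current.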

When the problem is considered even more closely, the need for a vertical (parallel to the axis of rotation) component of the magnetic field arises. The Lorentz force of the toroidal plasma current in the vertical field provides the inward force that holds the plasma torus in equilibrium.

Other issues

While the tokamak addresses the issue of plasma stability in a gross sense, plasmas are also subject to a number of dynamic instabilities. One of these, the kink instability, is strongly suppressed by the tokamak layout, a side-effect of the high safety factors of tokamaks. The lack of kinks allowed the tokamak to operate at much higher temperatures than previous machines, and this allowed a host of new phenomena to appear.

One of these, the banana orbits, is caused by the wide range of particle energies in a tokamak – much of the fuel is hot but a certain percentage is much cooler. Due to the high twist of the fields in the tokamak, particles following their lines of force rapidly move towards the inner edge and then outer. As they move inward they are subject to increasing magnetic fields due to the smaller radius concentrating the field. The low-energy particles in the fuel will reflect off this increasing field and begin to travel backwards through the fuel, colliding with the higher energy nuclei and scattering them out of the plasma. This process causes fuel to be lost from the reactor, although this process is slow enough that a practical reactor is still well within reach.

Breakeven, Q, and ignition

One of the first goals for any controlled fusion device is to reach breakeven, the point where the energy being released by the fusion reactions is equal to the amount of energy being used to maintain the reaction. The ratio of output to input energy is denoted Q, and breakeven corresponds to a Q of 1. A Q of more than one is needed for the reactor to generate net energy, but for practical reasons, it is desirable for it to be much higher.

Once breakeven is reached, further improvements in confinement generally lead to a rapidly increasing Q. That is because some of the energy being given off by the fusion reactions of the most common fusion fuel, a 50-50 mix of deuterium and tritium, is in the form of alpha particles. These can collide with the fuel nuclei in the plasma and heat it, reducing the amount of external heat needed. At some point, known as ignition, this internal self-heating is enough to keep the reaction going without any external heating, corresponding to an infinite Q.
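The rapid rise of Q can be seen in a simple steady-state power balance. The sketch below assumes that a deuterium-tritium reaction releases 17.6 MeV, of which 3.5 MeV is carried by the alpha particle, that all alpha energy is deposited in the plasma, and that external heating makes up whatever the alphas do not supply. The function names and the power figures are hypothetical.

```python
import math

# Assumption: share of D-T fusion energy carried by the alpha particle.
ALPHA_FRACTION = 3.5 / 17.6


def external_heating_needed(p_fusion_mw, p_loss_mw):
    """External power required to balance plasma losses after alpha self-heating."""
    return max(p_loss_mw - ALPHA_FRACTION * p_fusion_mw, 0.0)


def gain_q(p_fusion_mw, p_loss_mw):
    """Fusion gain Q = fusion power / external heating; infinite at ignition."""
    p_external = external_heating_needed(p_fusion_mw, p_loss_mw)
    if p_external == 0.0:
        return math.inf
    return p_fusion_mw / p_external


if __name__ == "__main__":
    # Hold the plasma losses at a hypothetical 100 MW and raise the fusion power.
    for p_fusion in (50.0, 100.0, 250.0, 400.0, 600.0):
        print(f"P_fusion = {p_fusion:5.0f} MW -> Q = {gain_q(p_fusion, 100.0):.2f}")
```

In this toy model Q grows slowly at first and then diverges as alpha heating approaches the loss power, which is the ignition condition described above.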

In the case of the tokamak, this self-heating process is maximized if the alpha particles remain in the fuel long enough to guarantee they will collide with the fuel. As the alphas are electrically charged, they are subject to the same fields that are confining the fuel plasma. The amount of time they spend in the fuel can be maximized by ensuring their orbit in the field remains within the plasma. It can be demonstrated that this occurs when the electrical current in the plasma is about 3 MA.

Advanced tokamaks

In the early 1970s, studies at Princeton into the use of high-power superconducting magnets in future tokamak designs examined the layout of the magnets. They noticed that the arrangement of the main toroidal coils meant that there was significantly more tension between the magnets on the inside of the curvature where they were closer together. Considering this, they noted that the tensional forces within the magnets would be evened out if they were shaped like a D, rather than an O. This became known as the "Princeton D-coil".

This was not the first time this sort of arrangement had been considered, although for entirely different reasons. The safety factor varies across the axis of the machine; for purely geometrical reasons, it is always smaller at the inside edge of the plasma closest to the machine's center because the long axis is shorter there. That means that a machine with an average q = 2 might still be less than 1 in certain areas. In the 1970s, it was suggested that one way to counteract this and produce a design with a higher average q would be to shape the magnetic fields so that the plasma only filled the outer half of the torus, shaped like a D or C when viewed end-on, instead of the normal circular cross section.

One of the first machines to incorporate a D-shaped plasma was the JET, which began its design work in 1973. This decision was made for both theoretical and practical reasons; because the force is larger on the inside edge of the torus, there is a large net force pressing inward on the entire reactor. The D-shape had the advantage of reducing the net force, as well as making the inside edge flatter so it was easier to support. Code exploring the general layout showed that a non-circular plasma would slowly drift vertically, which led to the addition of an active feedback system to hold it in the center. Once JET had selected this layout, the General Atomics Doublet III team redesigned that machine into the DIII-D with a D-shaped cross-section, and it was selected for the Japanese JT-60 design as well. This layout has been largely universal since then.

One problem seen in all fusion reactors is that the presence of heavier elements causes energy to be lost at an increased rate, cooling the plasma. During the very earliest development of fusion power, a solution to this problem was found, the divertor, essentially a large mass spectrometer that would cause the heavier elements to be flung out of the reactor. This was initially part of the stellarator designs, where it is easy to integrate into the magnetic windings. However, designing a divertor for a tokamak proved to be a very difficult design problem.

Another problem seen in all fusion designs is the heat load that the plasma places on the wall of the confinement vessel. There are materials that can handle this load, but they are generally undesirable and expensive heavy metals. When such materials are sputtered in collisions with hot ions, their atoms mix with the fuel and rapidly cool it. A solution used on most tokamak designs is the limiter, a small ring of light metal that projects into the chamber so that the plasma hits it before hitting the walls. This erodes the limiter and causes its atoms to mix with the fuel, but these lighter materials cause less disruption than the wall materials.

When reactors moved to the D-shaped plasmas it was quickly noted that the escaping particle flux of the plasma could be shaped as well. Over time, this led to the idea of using the fields to create an internal divertor that flings the heavier elements out of the fuel, typically towards the bottom of the reactor. There, a pool of liquid lithium metal is used as a sort of limiter; the particles hit it and are rapidly cooled, remaining in the lithium. This internal pool is much easier to cool, due to its location, and although some lithium atoms are released into the plasma, its very low mass makes it a much smaller problem than even the lightest metals used previously.

As machines began to explore this newly shaped plasma, they noticed that certain arrangements of the fields and plasma parameters would sometimes enter what is now known as the high-confinement mode, or H-mode, which operated stably at higher temperatures and pressures. Operating in the H-mode, which can also be seen in stellarators, is now a major goal of tokamak design.

Finally, it was noted that when the plasma had a non-uniform density it would give rise to internal electrical currents. This is known as the bootstrap current. This allows a properly designed reactor to generate some of the internal current needed to twist the magnetic field lines without having to supply it from an external source. This has a number of advantages, and modern designs all attempt to generate as much of their total current through the bootstrap process as possible.

By the early 1990s, the combination of these features and others collectively gave rise to the "advanced tokamak" concept. This forms the basis of modern research, including ITER.

Plasma disruptions

Tokamaks are subject to events known as "disruptions" that cause confinement to be lost in milliseconds. There are two primary mechanisms. In one, the "vertical displacement event" (VDE), the entire plasma moves vertically until it touches the upper or lower section of the vacuum chamber. In the other, the "major disruption", long wavelength, non-axisymmetric magnetohydrodynamical instabilities cause the plasma to be forced into non-symmetrical shapes, often squeezed into the top and bottom of the chamber.

When the plasma touches the vessel walls it undergoes rapid cooling, or "thermal quenching". In the major disruption case, this is normally accompanied by a brief increase in plasma current as the plasma concentrates. Quenching ultimately causes the plasma confinement to break up. In the case of the major disruption the current drops again, the "current quench". The initial increase in current is not seen in the VDE, and the thermal and current quenches occur at the same time. In both cases, the thermal and electrical load of the plasma is rapidly deposited on the reactor vessel, which has to be able to handle these loads. ITER is designed to handle 2600 of these events over its lifetime.

For modern high-energy devices, where plasma currents are on the order of 15 megaamperes in ITER, it is possible the brief increase in current during a major disruption will cross a critical threshold. This occurs when the current produces a force on the electrons that is higher than the frictional forces of the collisions between particles in the plasma. In this event, electrons can be rapidly accelerated to relativistic velocities, creating so-called "runaway electrons" in the relativistic runaway electron avalanche. These retain their energy even as the current quench is occurring on the bulk of the plasma.

When confinement finally breaks down, these runaway electrons follow the path of least resistance and impact the side of the reactor. These can reach 12 megaamps of current deposited in a small area, well beyond the capabilities of any mechanical solution. In one famous case, the Tokamak de Fontenay aux Roses had a major disruption where the runaway electrons burned a hole through the vacuum chamber.

The occurrence of major disruptions in running tokamaks has always been rather high, of the order of a few percent of the total number of shots. In currently operated tokamaks, the damage is often large but rarely dramatic. In the ITER tokamak, it is expected that even a limited number of major disruptions would permanently damage the chamber, with no possibility of restoring the device. The development of systems to counter the effects of runaway electrons is considered a must-have piece of technology for an operational-level ITER.

A large amplitude of the central current density can also result in internal disruptions, or sawteeth, which do not generally result in termination of the discharge.

Plasma heating

In an operating fusion reactor, part of the energy generated will serve to maintain the plasma temperature as fresh deuterium and tritium are introduced. However, in the startup of a reactor, either initially or after a temporary shutdown, the plasma will have to be heated to its operating temperature of greater than 10 keV (over 100 million degrees Celsius). In current tokamak (and other) magnetic fusion experiments, insufficient fusion energy is produced to maintain the plasma temperature, and constant external heating must be supplied. The Experimental Advanced Superconducting Tokamak (EAST), set up by Chinese researchers in 2006, was reported to have sustained a plasma at 100 million degrees Celsius in a test conducted in November 2018. This is the temperature regime required to initiate fusion between hydrogen nuclei; for comparison, the core of the Sun is at about 15 million degrees Celsius.
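Plasma temperatures are quoted interchangeably in electronvolts and degrees, and the two are related through the Boltzmann constant, T = E / k_B. The short sketch below shows the conversion; the function names are illustrative only.

```python
BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV per kelvin


def ev_to_kelvin(energy_ev):
    """Temperature in kelvin corresponding to a thermal energy kT given in eV."""
    return energy_ev / BOLTZMANN_EV_PER_K


def ev_to_celsius(energy_ev):
    """Same conversion expressed in degrees Celsius."""
    return ev_to_kelvin(energy_ev) - 273.15


if __name__ == "__main__":
    for energy_ev in (1_000.0, 10_000.0):
        millions = ev_to_celsius(energy_ev) / 1.0e6
        print(f"{energy_ev:8.0f} eV is about {millions:.1f} million degrees Celsius")
```

At these temperatures the 273-degree offset between kelvin and Celsius is negligible, so 1 keV corresponds to roughly 11.6 million degrees on either scale.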

Ohmic heating ~ inductive mode

Since the plasma is an electrical conductor, it is possible to heat the plasma by inducing a current through it; the induced current that provides most of the poloidal field is also a major source of initial heating.

The heating caused by the induced current is called ohmic (or resistive) heating; it is the same kind of heating that occurs in an electric light bulb or in an electric heater. The heat generated depends on the resistance of the plasma and the amount of electric current running through it. But as the temperature of heated plasma rises, the resistance decreases and ohmic heating becomes less effective. It appears that the maximum plasma temperature attainable by ohmic heating in a tokamak is 20–30 million degrees Celsius. To obtain still higher temperatures, additional heating methods must be used.
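The loss of effectiveness can be sketched numerically. The example below assumes the classical Spitzer scaling, in which plasma resistivity varies as T^(-3/2); that scaling is not stated in the text and is used here only as a reasonable model. At a fixed current density the ohmic power density (resistivity times current density squared) falls by the same factor.

```python
def relative_resistivity(temperature_ev, reference_ev):
    """Resistivity relative to its value at reference_ev, assuming eta ~ T**-1.5."""
    return (temperature_ev / reference_ev) ** -1.5


def relative_ohmic_power(temperature_ev, reference_ev):
    """Ohmic power density at fixed current density, relative to the reference.

    Power density is eta * j**2, so at constant j it scales exactly like eta.
    """
    return relative_resistivity(temperature_ev, reference_ev)


if __name__ == "__main__":
    # Illustrative temperatures in eV, compared against a 100 eV plasma.
    for temperature in (100.0, 400.0, 1_600.0):
        ratio = relative_ohmic_power(temperature, 100.0)
        print(f"T = {temperature:6.0f} eV -> ohmic heating at {ratio:.4f} of the 100 eV value")
```

Under this assumption, each fourfold rise in temperature cuts ohmic heating to one eighth for the same current, which is why additional heating methods are needed.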

The current is induced by continually increasing the current through an electromagnetic winding linked with the plasma torus: the plasma can be viewed as the secondary winding of a transformer. This is inherently a pulsed process because there is a limit to the current through the primary (there are also other limitations on long pulses). Tokamaks must therefore either operate for short periods or rely on other means of heating and current drive.

Magnetic compression

A gas can be heated by sudden compression. In the same way, the temperature of a plasma is increased if it is compressed rapidly by increasing the confining magnetic field. In a tokamak, this compression is achieved simply by moving the plasma into a region of higher magnetic field (i.e., radially inward). Since plasma compression brings the ions closer together, the process has the additional benefit of facilitating attainment of the required density for a fusion reactor.
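The gas analogy can be put in numbers. The sketch below uses the ideal-gas adiabatic law T·V^(γ−1) = constant with γ = 5/3 for a monatomic gas. This is only the analogy named above; the scaling for an actual tokamak plasma moved into a stronger field differs, and the starting temperature is hypothetical.

```python
GAMMA_MONATOMIC = 5.0 / 3.0  # adiabatic index of an ideal monatomic gas


def adiabatic_temperature(t_initial, volume_ratio, gamma=GAMMA_MONATOMIC):
    """Temperature after adiabatic compression.

    volume_ratio is V_initial / V_final (greater than 1 for compression),
    and the result follows T_final = T_initial * volume_ratio**(gamma - 1).
    """
    return t_initial * volume_ratio ** (gamma - 1.0)


if __name__ == "__main__":
    # Hypothetical example: a gas at 1.0 (arbitrary units) compressed 8x in volume.
    print(adiabatic_temperature(1.0, 8.0))
```

In this idealization an eightfold reduction in volume raises the temperature about fourfold, while the density rises eightfold.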

Magnetic compression was an area of research in the early "tokamak stampede", and was the purpose of one major design, the ATC. The concept has not been widely used since then, although a somewhat similar concept is part of the General Fusion design.

Neutral-beam injection

Neutral-beam injection involves the introduction of high energy (rapidly moving) atoms or molecules into an ohmically heated, magnetically confined plasma within the tokamak.

The high energy atoms originate as ions in an arc chamber before being extracted through a high voltage grid set. The term "ion source" is used to generally mean the assembly consisting of a set of electron emitting filaments, an arc chamber volume, and a set of extraction grids. A second device, similar in concept, is used to separately accelerate electrons to the same energy. The much lighter mass of the electrons makes this device much smaller than its ion counterpart. The two beams then intersect, where the ions and electrons recombine into neutral atoms, allowing them to travel through the magnetic fields.

Once the neutral beam enters the tokamak, interactions with the main plasma ions occur. This has two effects. One is that the injected atoms re-ionize and become charged, thereby becoming trapped inside the reactor and adding to the fuel mass. The other is that the process of being ionized occurs through impacts with the rest of the fuel, and these impacts deposit energy in that fuel, heating it.

This form of heating has no inherent energy (temperature) limitation, in contrast to the ohmic method, but its rate is limited by the current in the injectors. Ion source extraction voltages are typically on the order of 50–100 kV, and high-voltage negative ion sources (−1 MV) are being developed for ITER. The ITER Neutral Beam Test Facility in Padova will be the first ITER facility to start operation.

While neutral beam injection is used primarily for plasma heating, it can also be used as a diagnostic tool and in feedback control by making a pulsed beam consisting of a string of brief 2–10 ms beam blips. Deuterium is a primary fuel for neutral beam heating systems and hydrogen and helium are sometimes used for selected experiments.

Radio-frequency heating

High-frequency electromagnetic waves are generated by oscillators (often by gyrotrons or klystrons) outside the torus. If the waves have the correct frequency (or wavelength) and polarization, their energy can be transferred to the charged particles in the plasma, which in turn collide with other plasma particles, thus increasing the temperature of the bulk plasma. Various techniques exist including electron cyclotron resonance heating (ECRH) and ion cyclotron resonance heating. This energy is usually transferred by microwaves.

Particle inventory

Plasma discharges within the tokamak's vacuum chamber consist of energized ions and atoms and the energy from these particles eventually reaches the inner wall of the chamber through radiation, collisions, or lack of confinement. The inner wall of the chamber is water-cooled and the heat from the particles is removed via conduction through the wall to the water and convection of the heated water to an external cooling system.

Turbomolecular or diffusion pumps allow for particles to be evacuated from the bulk volume and cryogenic pumps, consisting of a liquid helium-cooled surface, serve to effectively control the density throughout the discharge by providing an energy sink for condensation to occur. When done correctly, the fusion reactions produce large amounts of high energy neutrons. Being electrically neutral and relatively tiny, the neutrons are not affected by the magnetic fields nor are they stopped much by the surrounding vacuum chamber.

The neutron flux is reduced significantly at a purpose-built neutron shield boundary that surrounds the tokamak in all directions. Shield materials vary but are generally made of atoms whose nuclei are close in mass to the neutron, because these are most effective at slowing the neutron and absorbing its energy. Good candidate materials include those containing a large amount of hydrogen, such as water and plastics. Boron atoms are also good absorbers of neutrons. Thus, concrete and polyethylene doped with boron make inexpensive neutron shielding materials.

Once freed, the neutron has a relatively short half-life of about 10 minutes before it decays into a proton and electron with the emission of energy. When the time comes to actually try to make electricity from a tokamak-based reactor, some of the neutrons produced in the fusion process would be absorbed by a liquid metal blanket and their kinetic energy would be used in heat transfer processes to ultimately turn a generator.