https://en.wikipedia.org/wiki/Good_and_evil

In religion, ethics, philosophy, and psychology, "good and evil" is a very common dichotomy. In cultures with Manichaean and Abrahamic religious influence, evil is perceived as the dualistic antagonistic opposite of good, in which good should prevail and evil should be defeated. In cultures with Buddhist spiritual influence, both good and evil are perceived as part of an antagonistic duality that itself must be overcome through achieving Śūnyatā, or emptiness: recognizing good and evil as two opposing principles that are not ultimately real, emptying them of their duality, and achieving oneness.

Evil is often used to denote profound immorality. Evil has also been described as a supernatural force. Definitions of evil vary, as does the analysis of its motives. However, elements commonly associated with evil involve unbalanced behavior involving expediency, selfishness, ignorance, or neglect. In Shakespeare's Hamlet, the title character famously remarks, "There is nothing either good or bad, but thinking makes it so."

The modern philosophical questions regarding good and evil are subsumed into three major areas of study: metaethics concerning the nature of good and evil, normative ethics concerning how we ought to behave, and applied ethics concerning particular moral issues.

History and etymology

Every language has a word expressing good in the sense of "having the right or desirable quality" (ἀρετή) and bad in the sense of "undesirable". A sense of moral judgment and a distinction "right and wrong, good and bad" are cultural universals.

Ancient world

The philosopher Zoroaster simplified the pantheon of early Iranian gods into two opposing forces: Ahura Mazda (Illuminating Wisdom) and Angra Mainyu (Destructive Spirit) which were in conflict.

This idea developed into a religion which spawned many sects, some of which embraced an extreme dualistic belief that the material world should be shunned and the spiritual world should be embraced. Gnostic ideas influenced many ancient religions which teach that gnosis (variously interpreted as enlightenment, salvation, emancipation or 'oneness with God') may be reached by practising philanthropy to the point of personal poverty, sexual abstinence (as far as possible for hearers, total for initiates) and diligently searching for wisdom by helping others.

Similarly, in ancient Egypt, there were the concepts of Ma'at, the principle of justice, order, and cohesion, and Isfet, the principle of chaos, disorder, and decay; the former embodied the power and principles that society sought to uphold, while the latter was that which undermined society. A similar opposition can be seen in ancient Mesopotamian religion in the conflict between Marduk and Tiamat.

Classical world

In Western civilisation, the basic meanings of κακός and ἀγαθός are "bad, cowardly" and "good, brave, capable", and their absolute sense emerges only around 400 BC, with pre-Socratic philosophy, in particular Democritus. Morality in this absolute sense solidifies in the dialogues of Plato, together with the emergence of monotheistic thought (notably in Euthyphro, which ponders the concept of piety (τὸ ὅσιον) as a moral absolute). The idea was further developed in Late Antiquity by Neoplatonists, Gnostics, and Church Fathers.

This development from the relative or habitual to the absolute is also evident in the terms ethics and morality both being derived from terms for "regional custom", Greek ήθος and Latin mores, respectively (see also siðr).

Medieval period

According to the classical definition of Augustine of Hippo, sin is "a word, deed, or desire in opposition to the eternal law of God."

Many medieval Christian theologians both broadened and narrowed the basic concept of good and evil until it came to have several, sometimes complex, definitions, such as:

- a personal preference or subjective judgment regarding any issue which might earn praise or punishment from the religious authorities

- religious obligation arising from Divine law leading to sainthood or damnation

- a generally accepted cultural standard of behaviour which might enhance group survival or wealth

- natural law or behaviour which induces strong emotional reaction

- statute law imposing a legal duty

Modern ideas

Today the basic dichotomy often breaks down along these lines:

- Good is a broad concept often associated with life, charity, continuity, happiness, love, or justice.

- Evil is often associated with conscious and deliberate wrongdoing, discrimination designed to harm others, humiliation of people designed to diminish their psychological needs and dignity, destructiveness, and acts of unnecessary or indiscriminate violence.

The modern English word evil (Old English yfel) and its cognates such as the German Übel and Dutch euvel are widely considered to come from a Proto-Germanic reconstructed form of *ubilaz, comparable to the Hittite huwapp- ultimately from the Proto-Indo-European form *wap- and suffixed zero-grade form *up-elo-. Other later Germanic forms include Middle English evel, ifel, ufel, Old Frisian evel (adjective and noun), Old Saxon ubil, Old High German ubil, and Gothic ubils.

The nature of being good has been given many treatments; one is that the good is based on the natural love, bonding, and affection that begins at the earliest stages of personal development; another is that goodness is a product of knowing truth. Differing views also exist as to why evil might arise. Many religious and philosophical traditions claim that evil behavior is an aberration that results from the imperfect human condition (e.g. "The Fall of Man"). Sometimes, evil is attributed to the existence of free will and human agency. Some argue that evil itself is ultimately based in an ignorance of truth (i.e., human value, sanctity, divinity). Other thinkers have argued instead that evil is learned as a consequence of tyrannical social structures.

Theories of moral goodness

Chinese moral philosophy

In Confucianism and Taoism, there is no direct analogue to the way good and evil are opposed, although references to demonic influence are common in Chinese folk religion. Confucianism's primary concern is with correct social relationships and the behavior appropriate to the learned or superior man; evil would thus correspond to wrong behavior. The opposition maps even less neatly onto Taoism, despite the centrality of dualism in that system, but the opposite of the basic virtues of Taoism (compassion, moderation, and humility) can be inferred to be its analogue of evil.

Western philosophy

Pyrrhonism

Pyrrhonism holds that good and evil do not exist by nature, meaning that good and evil do not exist within the things themselves. All judgments of good and evil are relative to the one doing the judging.

Spinoza

Benedict de Spinoza states:

1. By good, I understand that which we certainly know is useful to us.

2. By evil, on the contrary I understand that which we certainly know hinders us from possessing anything that is good.

Spinoza assumes a quasi-mathematical style and states these further propositions, which he purports to prove or demonstrate from the above definitions, in part IV of his Ethics:

- Proposition 8 "Knowledge of good or evil is nothing but affect of joy or sorrow in so far as we are conscious of it."

- Proposition 30 "Nothing can be evil through that which it possesses in common with our nature, but in so far as a thing is evil to us it is contrary to us."

- Proposition 64 "The knowledge of evil is inadequate knowledge."

- Corollary "Hence it follows that if the human mind had none but adequate ideas, it would form no notion of evil."

- Proposition 65 "According to the guidance of reason, of two things which are good, we shall follow the greater good, and of two evils, follow the less."

- Proposition 68 "If men were born free, they would form no conception of good and evil so long as they were free."

Nietzsche

Friedrich Nietzsche, rejecting Judeo-Christian morality, addresses good and evil in two books, Beyond Good and Evil and On the Genealogy of Morals. In these works, he argues that the natural, functional "non-good" has been socially transformed into the religious concept of evil by the "slave mentality" of the masses, who resent their "masters", the strong. He also critiques morality by saying that many who consider themselves moral are simply acting out of cowardice: wanting to do evil but afraid of the repercussions.

Psychology

Carl Jung

Carl Jung, in his book Answer to Job and elsewhere, depicted evil as the dark side of God. People tend to believe evil is something external to them, because they project their shadow onto others. Jung interpreted the story of Jesus as an account of God facing his own shadow.

Philip Zimbardo

In 2007, Philip Zimbardo suggested that people may act in evil ways as a result of a collective identity. This hypothesis, based on his previous experience from the Stanford prison experiment, was published in the book The Lucifer Effect: Understanding How Good People Turn Evil.

Religion

Abrahamic religions

Baháʼí Faith

The Baháʼí Faith asserts that evil is non-existent and that it is a concept reflecting the lack of good, just as cold is the absence of heat, darkness the absence of light, forgetfulness the lack of memory, and ignorance the lack of knowledge. All of these are states of lacking and have no real existence.

Thus, evil does not exist and is relative to man. ʻAbdu'l-Bahá, son of the founder of the religion, states in Some Answered Questions:

"Nevertheless, a doubt occurs to the mind—that is, scorpions and serpents are poisonous. Are they good or evil, for they are existing beings? Yes, a scorpion is evil in relation to man; a serpent is evil in relation to man; but in relation to themselves they are not evil, for their poison is their weapon, and by their sting they defend themselves."

Thus, evil is more an intellectual concept than a true reality. Since God is good, and upon making creation he declared it good (Genesis 1:31), evil cannot have a true reality.

Christianity

Christian theology draws its concept of evil from the Old and New Testaments. The Christian Bible exercises "the dominant influence upon ideas about God and evil in the Western world." In the Old Testament, evil is understood both as opposition to God and as something unsuitable or inferior, such as Satan, the leader of the fallen angels. In the New Testament, the Greek word poneros is used to indicate unsuitability, while kakos is used to refer to opposition to God in the human realm. Officially, the Catholic Church draws its understanding of evil from its canonical antiquity and from the Dominican theologian Thomas Aquinas, who in Summa Theologica defines evil as the absence or privation of good. French-American theologian Henri Blocher describes evil, when viewed as a theological concept, as an "unjustifiable reality. In common parlance, evil is 'something' that occurs in experience that ought not to be." According to 1 Timothy 6:10, "the love of money is the root of all evil."

In Mormonism, mortal life is viewed as a test of faith, where one's choices are central to the Plan of Salvation. See Agency (LDS Church). Evil is that which keeps one from discovering the nature of God. It is believed that one must choose not to be evil to return to God.

Christian Science believes that evil arises from a misunderstanding of the goodness of nature, which is understood as being inherently perfect if viewed from the correct (spiritual) perspective. Misunderstanding God's reality leads to incorrect choices, which are termed evil. This has led to the rejection of any separate power being the source of evil, or of God as being the source of evil; instead, the appearance of evil is the result of a mistaken concept of good. Christian Scientists argue that even the most evil person does not pursue evil for its own sake, but from the mistaken viewpoint that he or she will achieve some kind of good thereby.

Islam

There is no concept of absolute evil in Islam, as a fundamental universal principle that is independent from and equal with good in a dualistic sense. Within Islam, it is considered essential to believe that all comes from God, whether it is perceived as good or bad by individuals; and things that are perceived as evil or bad are either natural events (natural disasters or illnesses) or caused by humanity's free will to disobey God's orders.

According to the Ahmadiyya understanding of Islam, evil does not have a positive existence in itself and is merely the lack of good, just as darkness is the result of lack of light.

Judaism

In Judaism, yetzer hara is the congenital inclination to do evil by violating the will of God. The term is drawn from the phrase "the imagination of the heart of man [is] evil" (יֵצֶר לֵב הָאָדָם רַע, yetzer lev-ha-adam ra), which occurs twice at the beginning of the Torah (Genesis 6:5 and 8:21). Having appeared twice in Genesis, the Hebrew word "yetzer" occurs again at the end of the Torah: "I knew their devisings that they do". Thus from beginning to end the heart's "yetzer" is continually bent on evil, a profoundly pessimistic view of the human being. However, the Torah, which began with blessing, anticipates a future blessing that will come when God circumcises the heart in the latter days.

In traditional Judaism, the yetzer hara is not a demonic force, but rather man's misuse of things the physical body needs to survive. Thus, the need for food becomes gluttony due to the yetzer hara. The need for procreation becomes promiscuity, and so on. The yetzer hara could thus be best described as one's baser instincts.

According to the Talmudic tractate Avot de-Rabbi Natan, a boy's evil inclination is greater than his good inclination until he turns 13 (bar mitzvah), at which point the good inclination is "born" and able to control his behavior. Moreover, the rabbis have stated: "The greater the man, the greater his [evil] inclination."

Indian religions

Buddhism

Buddhist ethics are traditionally based on what Buddhists view as the enlightened perspective of the Buddha, or other enlightened beings such as Bodhisattvas. The Indian term for ethics or morality used in Buddhism is Śīla or sīla (Pāli). Śīla in Buddhism is one of three sections of the Noble Eightfold Path, and is a code of conduct that embraces a commitment to harmony and self-restraint with the principal motivation being nonviolence, or freedom from causing harm. It has been variously described as virtue, moral discipline and precept.

Sīla is an internal, aware, and intentional ethical behavior, according to one's commitment to the path of liberation. It is an ethical compass within self and relationships, rather than what is associated with the English word "morality" (i.e., obedience, a sense of obligation, and external constraint).

Sīla is one of the three practices foundational to Buddhism and the non-sectarian Vipassana movement, appearing both in the triad of sīla, samādhi, and paññā and in the Theravadin foundations of sīla, dāna, and bhavana. It is also the second pāramitā. Sīla is also wholehearted commitment to what is wholesome. Two aspects of sīla are essential to the training: right "performance" (caritta) and right "avoidance" (varitta). Honoring the precepts of sīla is considered a "great gift" (mahadana) to others, because it creates an atmosphere of trust, respect, and security: the practitioner poses no threat to another person's life, property, family, rights, or well-being.

Moral instructions are included in Buddhist scriptures or handed down through tradition. Most scholars of Buddhist ethics thus rely on the examination of Buddhist scriptures, and the use of anthropological evidence from traditional Buddhist societies, to justify claims about the nature of Buddhist ethics.

Hinduism

In Hinduism, the concept of dharma, or righteousness, clearly divides the world into good and evil and explains that wars must sometimes be waged to establish and protect dharma; such a war is called Dharmayuddha. This division of good and evil is of major importance in both Hindu epics, the Ramayana and the Mahabharata. However, the main emphasis in Hinduism is on bad action rather than bad people. The Hindu holy text the Bhagavad Gita speaks of the balance of good and evil. When this balance is disturbed, divine incarnations come to help restore it, as balance must be maintained for peace and harmony in the world.

Sikhism

In adherence to the core principle of spiritual evolution, the Sikh idea of evil changes depending on one's position on the path to liberation. At the beginning stages of spiritual growth, good and evil may seem neatly separated. However, once one's spirit evolves to the point where it sees most clearly, the idea of evil vanishes and the truth is revealed. In his writings Guru Arjan explains that, because God is the source of all things, what we believe to be evil must too come from God. And because God is ultimately a source of absolute good, nothing truly evil can originate from God.

Nevertheless, Sikhism, like many other religions, does incorporate a list of "vices" from which suffering, corruption, and abject negativity arise. These are known as the Five Thieves, so called because of their propensity to cloud the mind and lead one astray from the pursuit of righteous action. These are:

- Kaam, or lust.

- Krodh, or rage.

- Lobh, or greed.

- Moh, or attachment.

- Ahankar, or ego.

One who gives in to the temptations of the Five Thieves is known as "Manmukh", or someone who lives selfishly and without virtue. Conversely, the "Gurmukh", who thrive in their reverence toward divine knowledge, rise above vice through the practice of the high virtues of Sikhism. These are:

- Sewa, or selfless service to others.

- Nam Simran, or meditation upon the divine name.

Zoroastrianism

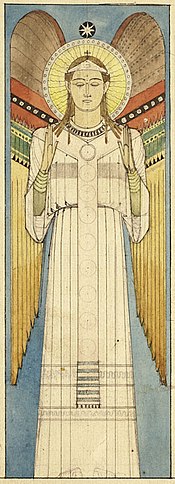

In the originally Persian religion of Zoroastrianism, the world is a battleground between the God Ahura Mazda (also called Ormazd) and the malignant spirit Angra Mainyu (also called Ahriman). The final resolution of the struggle between good and evil is to occur on a Day of Judgement, when all beings that have lived will be led across a bridge of fire, and those who are evil will be cast down forever. In Afghan belief, angels (yazata) and saints are beings sent to help us achieve the path towards goodness.

Descriptive, meta-ethical, and normative fields

It is possible to treat the essential theories of value by the use of a philosophical and academic approach. In properly analyzing theories of value, everyday beliefs are not only carefully catalogued and described, but also rigorously analyzed and judged.

There are at least two basic ways of presenting a theory of value, based on two different kinds of questions:

- What do people find good, and what do they despise?

- What really is good, and what really is bad?

The two questions are subtly different. One may answer the first question by researching the world through social science and examining the preferences that people assert. The second question, however, may be answered through reasoning, introspection, prescription, and generalization. The former method of analysis is called "descriptive", because it attempts to describe what people actually view as good or evil; the latter is called "normative", because it tries to actively prohibit evils and cherish goods. These descriptive and normative approaches can be complementary. For example, tracking the decline of the popularity of slavery across cultures is the work of descriptive ethics, while advising that slavery be avoided is normative.

Meta-ethics is the study of the fundamental questions concerning the nature and origins of the good and the evil, including inquiry into the nature of good and evil, as well as the meaning of evaluative language. In this respect, meta-ethics is not necessarily tied to investigations into how others see the good, or of asserting what is good.

Theories of the intrinsically good

A satisfying formulation of goodness is valuable because it might allow one to construct a good life or society by reliable processes of deduction, elaboration, or prioritization. One could answer the ancient question, "How should we then live?" among many other important related questions. It has long been thought that this question can best be answered by examining what it is that necessarily makes a thing valuable, or in what the source of value consists.

Platonic idealism

Platonic idealism attempts to define goodness as a property of the world. According to this claim, to talk about the good is to talk about something real that exists in the object itself, independent of the perception of it. Plato advocated this view, holding that there is an eternal realm of forms or ideas, and that the greatest of the ideas and the essence of being was goodness, or The good. The good was defined by many ancient Greeks and other ancient philosophers as a perfect and eternal idea, or blueprint. The good is the right relation between all that exists, and this exists in the mind of the Divine, or some heavenly realm. The good is the harmony of a just political community, love, friendship, the ordered human soul of virtues, and the right relation to the Divine and to Nature. The characters in Plato's dialogues mention the many virtues of a philosopher, or a lover of wisdom.

A theist is a person who believes that the Supreme Being exists or gods exist (monotheism or polytheism). A theist may, therefore, claim that the universe has a purpose and value according to the will of such creator(s) that lies partially beyond human understanding. For instance, Thomas Aquinas—a proponent of this view—believed he had proven the existence of God, and the right relations that humans ought to have to the divine first cause.

Monotheists might also hope for infinite universal love. Such hope is often translated as "faith", and wisdom itself is largely defined within some religious doctrines as a knowledge and understanding of innate goodness. The concepts of innocence, spiritual purity, and salvation are likewise related to a concept of being in, or returning to, a state of goodness—one that, according to various teachings of "enlightenment", approaches a state of holiness (or Godliness).

Perfectionism

Aristotle believed that virtues consisted of realization of potentials unique to humanity, such as the use of reason. This type of view, called perfectionism, has been recently defended in modern form by Thomas Hurka.

An entirely different form of perfectionism has arisen in response to rapid technological change. Some techno-optimists, especially transhumanists, avow a form of perfectionism in which the capacity to determine good and trade off fundamental values is expressed not by humans but by software, genetic engineering of humans, or artificial intelligence. Skeptics assert that rather than perfect goodness, it would be only the appearance of perfect goodness, reinforced by persuasion technology and probably the brute force of violent technological escalation, that would cause people to accept such rulers or the rules they author.

Welfarist theories

Welfarist theories of value hold that good things are good because of their positive effects on human well-being.

Subjective theories of well-being

It is difficult to figure out where an immaterial trait such as "goodness" could reside in the world. A counterproposal is to locate values inside people. Some philosophers go so far as to say that if some state of affairs does not tend to arouse a desirable subjective state in self-aware beings, then it cannot be good.

Most philosophers who think goods have to create desirable mental states also say that goods are experiences of self-aware beings. These philosophers often distinguish the experience, which they call an intrinsic good, from the things that seem to cause the experience, which they call "inherent" goods.

Some theories describe no higher collective value than that of maximizing pleasure for individual(s). Some even define goodness and intrinsic value as the experience of pleasure, and bad as the experience of pain. This view is called hedonism, a monistic theory of value. It has two main varieties: simple, and Epicurean.

Simple hedonism is the view that physical pleasure is the ultimate good. However, the ancient philosopher Epicurus used the word 'pleasure' in a more general sense that encompassed a range of states from bliss to contentment to relief. Contrary to popular caricature, he valued pleasures of the mind over bodily pleasures and advocated moderation as the surest path to happiness.

Jeremy Bentham's book An Introduction to the Principles of Morals and Legislation prioritized goods by considering pleasure, pain, and consequences. This theory has had a wide effect on public affairs, up to and including the present day. A similar system was later named Utilitarianism by John Stuart Mill. More broadly, utilitarian theories are examples of Consequentialism. All utilitarian theories are based upon the maxim of utility, which states that good is whatever provides the greatest happiness for the greatest number; it follows that whatever brings happiness to the greatest number of people is good.

A benefit of tracing good to pleasure and pain is that both are easily understandable, both in oneself and to an extent in others. For the hedonist, the explanation for helping behaviour may come in the form of empathy—the ability of a being to "feel" another's pain. People tend to value the lives of gorillas more than those of mosquitoes because the gorilla lives and feels, making it easier to empathize with them. This idea is carried forward in the ethical relationship view and has given rise to the animal rights movement and parts of the peace movement. The impact of sympathy on human behaviour is compatible with Enlightenment views, including David Hume's stances that the idea of a self with unique identity is illusory, and that morality ultimately comes down to sympathy and fellow feeling for others, or the exercise of approval underlying moral judgments.

A view adopted by James Griffin attempts to find a subjective alternative to hedonism as an intrinsic value. He argues that the satisfaction of one's informed desires constitutes well-being, whether or not these desires actually bring the agent happiness. Moreover, these preferences must be life-relevant, that is, contribute to the success of a person's life overall.

Desire satisfaction may occur without the agent's awareness of the satisfaction of the desire. For example, if a man wishes for his legal will to be enacted after his death, and it is, then his desire has been satisfied even though he will never experience or know of it.

Meher Baba proposed that it is not the satisfaction of desires that motivates the agent but rather "a desire to be free from the limitation of all desires. Those experiences and actions which increase the fetters of desire are bad, and those experiences and actions which tend to emancipate the mind from limiting desires are good." It is through good actions, then, that the agent becomes free from selfish desires and achieves a state of well-being: "The good is the main link between selfishness thriving and dying. Selfishness, which in the beginning is the father of evil tendencies, becomes through good deeds the hero of its own defeat. When the evil tendencies are completely replaced by good tendencies, selfishness is transformed into selflessness, i.e., individual selfishness loses itself in universal interest."

Objective theories of well-being

The idea that the ultimate good exists and is not orderable but is globally measurable is reflected in various ways in economic (classical economics, green economics, welfare economics, gross national happiness) and scientific (positive psychology, the science of morality) well-being measuring theories, all of which focus on various ways of assessing progress towards that goal, a so-called genuine progress indicator. Modern economics thus reflects very ancient philosophy, but a calculation or quantitative or other process based on cardinality and statistics replaces the simple ordering of values.

For example, in both economics and folk wisdom, the value of something seems to rise so long as it is relatively scarce. However, if it becomes too scarce, it often leads to conflict and can reduce collective value.

In the classical political economy of Adam Smith and David Ricardo, and in its critique by Karl Marx, human labour is seen as the ultimate source of all new economic value. This is an objective theory of value, which attributes value to real production-costs, and ultimately expenditures of human labour-time (see law of value). It contrasts with marginal utility theory, which argues that the value of labour depends on the subjective preferences of consumers, which may nonetheless also be studied objectively.

The economic value of labour may be assessed technically in terms of its use-value or utility or commercially in terms of its exchange-value, price or production cost (see labour power). But its value may also be socially assessed in terms of its contribution to the wealth and well-being of a society.

In non-market societies, labour may be valued primarily in terms of skill, time, and output, as well as moral or social criteria and legal obligations. In market societies, labour is valued economically primarily through the labour market. The price of labour may then be set by supply and demand, by strike action or legislation, or by legal or professional entry-requirements into occupations.

Mid-range theories

Conceptual metaphor theories argue against both subjective and objective conceptions of value and meaning, and focus on the relationships between body and other essential elements of human life. In effect, conceptual metaphor theories treat ethics as an ontology problem and the issue of how to work out values as a negotiation of these metaphors, not the application of some abstraction or a strict standoff between parties who have no way to understand each other's views.

Philosophical questions

Universality

A fundamental question is whether there is a universal, transcendent definition of evil, or whether evil is determined by one's social or cultural background. C. S. Lewis, in The Abolition of Man, maintained that there are certain acts that are universally considered evil, such as rape and murder. However, the numerous instances in which rape or murder is morally affected by social context call this into question. Up until the mid-19th century, many countries practiced forms of slavery. As is often the case, those transgressing moral boundaries stood to profit from that exercise. Arguably, slavery has always been the same and objectively evil, but individuals with a motivation to transgress will justify that action.

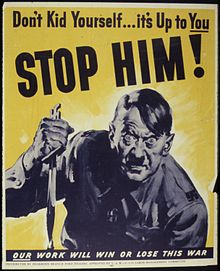

The Nazis, during World War II, considered genocide to be acceptable, as did the Hutu Interahamwe in the Rwandan genocide. One might point out, though, that the actual perpetrators of those atrocities probably avoided calling their actions genocide, since the objective meaning of any act accurately described by that word is to wrongfully kill a selected group of people, which is an action that at least their victims will understand to be evil. Universalists consider evil independent of culture, and wholly related to acts or intents.

Views on the nature of evil tend to fall into one of four opposed camps:

- Moral absolutism holds that good and evil are fixed concepts established by a deity or deities, nature, morality, common sense, or some other source.

- Amoralism claims that good and evil are meaningless, that there is no moral ingredient in nature.

- Moral relativism holds that standards of good and evil are only products of local culture, custom, or prejudice.

- Moral universalism is the attempt to find a compromise between the absolutist and relativist views of morality; universalism claims that morality is only flexible to a degree, and that what is truly good or evil can be determined by examining what is commonly considered to be evil amongst all humans.

Plato wrote that there are relatively few ways to do good, but there are countless ways to do evil, which can therefore have a much greater impact on our lives, and the lives of other beings capable of suffering.

Usefulness as a term

Psychologist Albert Ellis, in his school of psychology called Rational Emotive Behavior Therapy, says the root of anger and the desire to harm someone is almost always related to variations of implicit or explicit philosophical beliefs about other human beings. He further claims that without holding variants of those covert or overt beliefs and assumptions, the tendency to resort to violence is in most cases less likely.

American psychiatrist M. Scott Peck, on the other hand, describes evil as militant ignorance. The original Judeo-Christian concept of sin is of a process that leads one to miss the mark and not achieve perfection. Peck argues that while most people are conscious of this at least on some level, those who are evil actively and militantly refuse this consciousness. Peck describes evil as a malignant type of self-righteousness which results in a projection of evil onto specific innocent victims (often children or other people in relatively powerless positions). Peck considers those he calls evil to be attempting to escape and hide from their own conscience (through self-deception) and views this as being quite distinct from the apparent absence of conscience evident in sociopaths.

According to Peck, an evil person:

- Is consistently self-deceiving, with the intent of avoiding guilt and maintaining a self-image of perfection

- Deceives others as a consequence of their own self-deception

- Psychologically projects his or her evils and sins onto very specific targets, scapegoating those targets while treating everyone else normally ("their insensitivity toward him was selective")

- Commonly hates with the pretense of love, for the purposes of self-deception as much as the deception of others

- Abuses political or emotional power ("the imposition of one's will upon others by overt or covert coercion")

- Maintains a high level of respectability and lies incessantly in order to do so

- Is consistent in his or her sins. Evil people are defined not so much by the magnitude of their sins as by their consistency (of destructiveness)

- Is unable to think from the viewpoint of their victim

- Has a covert intolerance to criticism and other forms of narcissistic injury

He also considers that certain institutions may be evil, as his discussion of the My Lai Massacre and its attempted cover-up illustrates. By this definition, acts of criminal and state terrorism would also be considered evil.

Necessary evil

Martin Luther argued that there are cases where a little evil is a positive good. He wrote, "Seek out the society of your boon companions, drink, play, talk bawdy, and amuse yourself. One must sometimes commit a sin out of hate and contempt for the Devil, so as not to give him the chance to make one scrupulous over mere nothings... ."

The necessary evil approach to politics was put forth by Niccolò Machiavelli, a 16th-century Florentine writer who advised tyrants that "it is far safer to be feared than loved." Treachery, deceit, eliminating political rivals, and the use of fear are offered as methods of stabilizing the prince's security and power.

The international relations theories of realism and neorealism, sometimes called realpolitik, advise politicians to explicitly ban absolute moral and ethical considerations from international politics, and to focus on self-interest, political survival, and power politics, which they hold to be more accurate in explaining a world they view as explicitly amoral and dangerous. Political realists usually justify their perspectives by laying claim to a higher moral duty specific to political leaders, under which the greatest evil is seen to be the failure of the state to protect itself and its citizens. Machiavelli wrote: "...there will be traits considered good that, if followed, will lead to ruin, while other traits, considered vices which if practiced achieve security and well being for the Prince."

Anton LaVey, founder of the Church of Satan, was a materialist and claimed that evil is actually good. He was responding to the common practice of describing sexuality or disbelief as evil, and his claim was that when the word evil is used to describe the natural pleasures and instincts of men and women, or the skepticism of an inquiring mind, the things called evil are really good.

Goodness and agency

Goodwill

John Rawls's book A Theory of Justice prioritized social arrangements and goods based on their contribution to justice. Rawls defined justice as fairness, especially in distributing social goods, defined fairness in terms of procedures, and attempted to prove that just institutions and lives are good if rational individuals' goods are considered fairly. Rawls's crucial invention was the original position, a procedure in which one tries to make objective moral decisions by refusing to let personal facts about oneself enter one's moral calculations. Immanuel Kant, a major influence on Rawls, similarly relies heavily on procedure in the practical application of the categorical imperative; however, Kant's procedure is not based solely on 'fairness'.

Society, life and ecology

Many views value unity as a good, going beyond eudaimonia by holding that an individual person's flourishing is valuable only as a means to the flourishing of society as a whole. In other words, a single person's life is, ultimately, not important or worthwhile in itself, but is good only as a means to the success of society as a whole. Some elements of Confucianism are an example of this, encouraging the view that people ought to conform as individuals to the demands of a peaceful and ordered society.

According to the naturalistic view, the flourishing of society is not, or not the only, intrinsically good thing; rather, what is intrinsically good is the flourishing of all sentient life, extending to those animals that have some comparable level of sentience, as in arguments for Great Ape personhood. Defenses of this notion are often formulated by reference to biology, and to observations that living things compete more with their own kind than with other kinds. Others go farther, declaring that life itself is of intrinsic value.

By another approach, one achieves peace and agreement by focusing not on one's peers (who may be rivals or competitors) but on the common environment. The reasoning is that, for living beings, it is clearly and objectively good to be surrounded by an ecosystem that supports life. Indeed, if we weren't, we could neither discuss that good nor even recognize it. The anthropic principle in cosmology reflects similar reasoning.

Under materialism or even embodiment values, or in any system that recognizes the validity of ecology as a scientific study of limits and potentials, an ecosystem is a fundamental good. To all who investigate, it seems that goodness, or value, exists within an ecosystem, Earth. Creatures within that ecosystem, wholly dependent on it, evaluate good relative to what else could be achieved there. In other words, good is situated in a particular place: one does not dismiss everything that is not available there (such as very low gravity or absolutely abundant sugar candy) as "not good enough", but works within its constraints. Transcending those constraints and learning to be satisfied with them is thus another sort of value, perhaps called satisfaction.

Values and the people that hold them seem necessarily subordinate to the ecosystem. If this is so, then what kind of being could validly apply the word "good" to an ecosystem as a whole? Who would have the power to assess and judge an ecosystem as good or bad? By what criteria? And by what criteria would ecosystems be modified, especially larger ones such as the atmosphere (climate change) or oceans (extinction) or forests (deforestation)?

One candidate for the most basic value is "remaining on Earth". While green ethicists have been most forthright about it, and have developed theories that reflect it, such as Gaia philosophy, biophilia, and bioregionalism, the questions are now widely recognized as central in determining value, e.g. the economic "value of Earth" to humans as a whole, or the "value of life" that is neither whole-Earth nor human. Many have come to the conclusion that without assuming ecosystem continuation as a universal good, with attendant virtues like biodiversity and ecological wisdom, it is impossible to justify such operational requirements as sustainability of human activity on Earth.

One response is that humans are not necessarily confined to Earth, and could use it and move on. A counter-argument is that only a tiny fraction of humans could do this, and they would be self-selected by their ability to exercise technological escalation over others (for instance, the ability to build large spacecraft to flee the planet in while fending off others who seek to prevent them). Another counter-argument is that extraterrestrial life would encounter the fleeing humans and destroy them as a locust-like species. A third is that if there are no other worlds fit to support life (and no extraterrestrials who compete with humans to occupy them), it is both futile to flee and foolish to imagine that constructing some new habitat would take less energy and skill than protecting the Earth as a habitat.

Accordingly, remaining on Earth as a living being surrounded by a working ecosystem is a fair statement of the most basic value and good for any being we are able to communicate with. A moral system without this axiom seems simply not actionable.

However, most religious systems acknowledge an afterlife, and improving one's lot in it is seen as an even more basic good. In many other moral systems, too, remaining on Earth in a state that lacks honor or power over self is less desirable; consider seppuku in bushido, kamikaze attacks, or the role of suicide attacks in Jihadi rhetoric. In all these systems, remaining on Earth is perhaps no higher than a third-place value.

Radical values environmentalism can be seen as either a very old or a very new view: that the only intrinsically good thing is a flourishing ecosystem, and that individuals and societies are merely instrumentally valuable, good only as means to having a flourishing ecosystem. The Gaia philosophy is the most detailed expression of this overall thought, and it strongly influenced deep ecology and the modern Green Parties.

It is often claimed that aboriginal peoples never lost this sort of view. Anthropological linguistics studies links between their languages and the ecosystems they lived in, which gave rise to their knowledge distinctions. Very often, environmental cognition and moral cognition were not distinguished in these languages. Offenses to nature were treated like offenses to other people, and animism reinforced this by giving nature "personality" via myth. Anthropological theories of value explore these questions.

Most people in the world reject older situated ethics and localized religious views. However, small-community-based and ecology-centric views have gained some popularity in recent years. In part, this has been attributed to the desire for ethical certainties. Such a deeply rooted definition of goodness would be valuable because it might allow one to construct a good life or society by reliable processes of deduction, elaboration, or prioritisation, relying only on local referents that one could verify for oneself. This would create more certainty and therefore require less investment in protecting, hedging, and insuring against the consequences of losing the value.

History and novelty

In fashion and art, an event is often seen as being of value simply because of its novelty. By contrast, items of cultural history, such as antiques, are sometimes seen as valuable in and of themselves due to their age. Philosopher-historians Will and Ariel Durant expressed this view in the quote, "As the sanity of the individual lies in the continuity of his memories, so the sanity of the group lies in the continuity of its traditions; in either case a break in the chain invites a neurotic reaction" (The Lessons of History, 72).

Assessment of the value of old or historical artifacts takes into consideration, especially but not exclusively: the value placed on having a detailed knowledge of the past, the desire to have tangible ties to ancestral history, or the increased market value scarce items traditionally hold.

Creativity, innovation, and invention are sometimes upheld as fundamentally good, especially in Western industrial society; all imply newness, and even an opportunity to profit from novelty. Bertrand Russell was notably pessimistic about creativity and thought that knowledge expanding faster than wisdom would necessarily prove fatal.