In physics, Wien's displacement law states that the black-body radiation curve for different temperatures will peak at different wavelengths that are inversely proportional to the temperature. The shift of that peak is a direct consequence of the Planck radiation law, which describes the spectral brightness or intensity of black-body radiation as a function of wavelength at any given temperature. However, the law was discovered by the German physicist Wilhelm Wien several years before Max Planck developed that more general equation, and it describes the entire shift of the spectrum of black-body radiation toward shorter wavelengths as temperature increases.

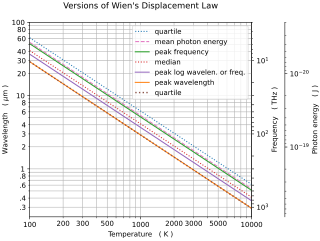

Formally, the wavelength version of Wien's displacement law states that the spectral radiance of black-body radiation per unit wavelength peaks at the wavelength $\lambda_\text{peak}$ given by:

$$\lambda_\text{peak} = \frac{b}{T}$$

where $T$ is the absolute temperature and $b$ is a constant of proportionality called Wien's displacement constant, equal to $2.897771955\ldots \times 10^{-3}$ m⋅K, or $b \approx 2898$ μm⋅K.

This is an inverse relationship between wavelength and temperature. So the higher the temperature, the shorter or smaller the wavelength of the thermal radiation. The lower the temperature, the longer or larger the wavelength of the thermal radiation. For visible radiation, hot objects emit bluer light than cool objects. If one is considering the peak of black body emission per unit frequency or per proportional bandwidth, one must use a different proportionality constant. However, the form of the law remains the same: the peak wavelength is inversely proportional to temperature, and the peak frequency is directly proportional to temperature.
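The inverse relation can be checked numerically. The following Python sketch simply evaluates $\lambda_\text{peak} = b/T$ with the value of $b$ quoted above, for a few temperatures that appear in the examples below; the function name `peak_wavelength_m` is illustrative, not taken from any library.

```python
# Wien's displacement constant b for the peak of B_lambda, in metre-kelvins
WIEN_B_M_K = 2.897771955e-3


def peak_wavelength_m(temperature_k: float) -> float:
    """Wavelength (in metres) at which the per-unit-wavelength spectrum peaks."""
    if temperature_k <= 0:
        raise ValueError("absolute temperature must be positive")
    return WIEN_B_M_K / temperature_k


if __name__ == "__main__":
    # Temperatures taken from the examples in this article
    for label, t in [("skin", 300.0), ("wood fire", 1500.0), ("Betelgeuse", 3800.0),
                     ("Sun", 5778.0), ("Rigel", 12100.0)]:
        print(f"{label:>10}: T = {t:7.0f} K -> peak near {peak_wavelength_m(t) * 1e9:8.1f} nm")
```

Hotter sources print shorter peak wavelengths, as the law states.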

There are other formulations of Wien's displacement law, which are parameterized relative to other quantities. For these alternate formulations, the form of the relationship is similar, but the proportionality constant, b, differs.

Wien's displacement law may be referred to as "Wien's law", a term which is also used for the Wien approximation.

In "Wien's displacement law", the word displacement refers to how the intensity-wavelength graphs appear shifted (displaced) for different temperatures.

Examples

Wien's displacement law is relevant to some everyday experiences:

- A piece of metal heated by a blow torch first becomes "red hot" as the very longest visible wavelengths appear red, then becomes more orange-red as the temperature is increased, and at very high temperatures would be described as "white hot" as shorter and shorter wavelengths come to dominate the black-body emission spectrum. Before it has even reached red-hot temperature, the thermal emission is mainly at longer infrared wavelengths, which are not visible; nevertheless, that radiation can be felt as it warms one's nearby skin.

- One easily observes changes in the color of an incandescent light bulb (which produces light through thermal radiation) as the temperature of its filament is varied by a light dimmer. As the light is dimmed and the filament temperature decreases, the distribution of color shifts toward longer wavelengths and the light appears redder, as well as dimmer.

- A wood fire at 1500 K puts out peak radiation at about 2000 nanometers. 98% of its radiation is at wavelengths longer than 1000 nm, and only a tiny proportion at visible wavelengths (390–700 nanometers). Consequently, a campfire can keep one warm but is a poor source of visible light.
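These campfire figures can be reproduced approximately by integrating Planck's law. The sketch below is one way to do it, assuming the exact SI values of $h$, $c$ and $k$: it changes variable to the dimensionless $x = hc/(\lambda k T)$, in which the Planck spectrum is proportional to $x^3/(e^x - 1)$ with total integral $\pi^4/15$, and applies Simpson's rule. The helper names are hypothetical.

```python
import math

H = 6.62607015e-34  # Planck constant, J s (exact SI value)
C = 299_792_458.0   # speed of light, m/s (exact)
K = 1.380649e-23    # Boltzmann constant, J/K (exact SI value)


def planck_x_density(t: float) -> float:
    """Dimensionless Planck integrand t^3/(e^t - 1); its integral over (0, inf) is pi^4/15."""
    if t == 0.0 or t > 700.0:
        return 0.0  # limit at t = 0; negligible (and overflow-prone) for very large t
    return t**3 / math.expm1(t)


def simpson(f, a: float, b: float, n: int = 20_000) -> float:
    """Composite Simpson's rule on [a, b] with an even number n of subintervals."""
    h = (b - a) / n
    odd = sum(f(a + i * h) for i in range(1, n, 2))
    even = sum(f(a + i * h) for i in range(2, n, 2))
    return (f(a) + f(b) + 4 * odd + 2 * even) * h / 3


def band_fraction(lam_short_m: float, lam_long_m: float, temperature_k: float) -> float:
    """Fraction of total black-body power emitted between two wavelengths (lam_long_m may be inf)."""
    x_hi = H * C / (lam_short_m * K * temperature_k)  # short wavelength -> large x
    x_lo = H * C / (lam_long_m * K * temperature_k)   # long wavelength -> small x (0 for inf)
    return simpson(planck_x_density, x_lo, x_hi) * 15 / math.pi**4


if __name__ == "__main__":
    t_fire = 1500.0
    print(f"beyond 1000 nm: {band_fraction(1000e-9, math.inf, t_fire):.1%}")
    print(f"390-700 nm:     {band_fraction(390e-9, 700e-9, t_fire):.3%}")
```

Under these assumptions, roughly 98.7% of the fire's output lies beyond 1000 nm and well under 0.1% falls between 390 and 700 nm.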

- The effective temperature of the Sun is 5778 K. Using Wien's law, one finds a peak emission per nanometer (of wavelength) at a wavelength of about 500 nm, in the green portion of the spectrum near the peak sensitivity of the human eye. On the other hand, in terms of power per unit optical frequency, the Sun's peak emission is at about 340 THz, or a wavelength of 883 nm in the near infrared. In terms of power per percentage bandwidth, the peak is at about 635 nm, a red wavelength. About half of the Sun's radiation is at wavelengths shorter than 710 nm, about the limit of human vision. About 12% of the total is at wavelengths shorter than 400 nm, ultraviolet wavelengths that are invisible to the unaided human eye. A large amount of the Sun's radiation falls in the fairly small visible spectrum and passes through the atmosphere.
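The three solar peaks, and the 710 nm median, all follow from the single relation $\lambda = hc/(x k T)$, with the dimensionless constant $x$ taken from the tables in the section on parameterization below. A minimal Python sketch, assuming $T = 5778$ K and the exact SI constants:

```python
H, C, K = 6.62607015e-34, 299_792_458.0, 1.380649e-23  # exact SI constants
HC_OVER_K = H * C / K  # hc/k, about 0.014388 m K

# Dimensionless x = hc/(lambda k T) at the characteristic point of each parameterization
X_VALUES = {
    "peak per unit wavelength": 4.965114231744276,
    "peak per unit frequency": 2.821439372122079,
    "peak per proportional bandwidth": 3.920690394872886,
    "median (50th percentile)": 3.503,
}


def characteristic_wavelength_m(x: float, temperature_k: float) -> float:
    """Wavelength lambda = hc/(x k T) for a given dimensionless constant x."""
    return HC_OVER_K / (x * temperature_k)


if __name__ == "__main__":
    t_sun = 5778.0  # effective temperature of the Sun, K
    for name, x in X_VALUES.items():
        lam = characteristic_wavelength_m(x, t_sun)
        print(f"{name:>32}: {lam * 1e9:6.1f} nm = {C / lam / 1e12:6.1f} THz")
```

Under these assumptions the per-frequency peak comes out near 339.7 THz (about 883 nm).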

- Most stars, however, do not emit predominantly in the visible range. The hot supergiant Rigel emits 60% of its light in the ultraviolet, while the cool supergiant Betelgeuse emits 85% of its light at infrared wavelengths. With both stars prominent in the constellation of Orion, one can easily appreciate the color difference between the blue-white Rigel (T = 12100 K) and the red Betelgeuse (T ≈ 3800 K). While few stars are as hot as Rigel, stars cooler than the Sun, or even as cool as Betelgeuse, are commonplace.

- Mammals with a skin temperature of about 300 K emit peak radiation at around 10 μm in the far infrared. This is therefore the range of infrared wavelengths that pit viper snakes and passive IR cameras must sense.

- When comparing the apparent color of lighting sources (including fluorescent lights, LED lighting, computer monitors, and photoflash), it is customary to cite the color temperature. Although the spectra of such lights are not accurately described by the black-body radiation curve, a color temperature (the correlated color temperature) is quoted for which black-body radiation would most closely match the subjective color of that source. For instance, the blue-white fluorescent light sometimes used in an office may have a color temperature of 6500 K, whereas the reddish tint of a dimmed incandescent light may have a color temperature (and an actual filament temperature) of 2000 K. Note that the informal description of the former (bluish) color as "cool" and the latter (reddish) as "warm" is exactly opposite the actual temperature change involved in black-body radiation.

Discovery

The law is named for Wilhelm Wien, who derived it in 1893 based on a thermodynamic argument. Wien considered adiabatic expansion of a cavity containing waves of light in thermal equilibrium. Using Doppler's principle, he showed that, under slow expansion or contraction, the energy of light reflecting off the walls changes in exactly the same way as the frequency. A general principle of thermodynamics is that a thermal equilibrium state, when expanded very slowly, stays in thermal equilibrium.

Wien himself deduced this law theoretically in 1893, following Boltzmann's thermodynamic reasoning. It had previously been observed, at least semi-quantitatively, by the American astronomer Langley. This upward shift in the peak frequency $\nu_\text{peak}$ with temperature $T$ is familiar to everyone: when a piece of iron is heated in a fire, the first visible radiation (at around 900 K) is deep red, the lowest-frequency visible light. Further increase in $T$ causes the color to change to orange, then yellow, and finally blue at very high temperatures (10,000 K or more), for which the peak in radiation intensity has moved beyond the visible into the ultraviolet.

The adiabatic principle allowed Wien to conclude that for each mode, the adiabatic invariant energy/frequency is only a function of the other adiabatic invariant, the frequency/temperature. From this, he derived the "strong version" of Wien's displacement law: the statement that the black-body spectral radiance is proportional to $\nu^3 F(\nu/T)$ for some function $F$ of a single variable. A modern variant of Wien's derivation can be found in the textbook by Wannier and in a paper by E. Buckingham.
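With hindsight, Planck's law (which Wien did not have) takes exactly this form. Writing the frequency form of Planck's law as below makes the function $F$ explicit, and substituting $u = \nu/T$ shows why the peak frequency must be proportional to $T$:

$$B_\nu(\nu, T) = \frac{2h\nu^3}{c^2}\,\frac{1}{e^{h\nu/(kT)} - 1} = \nu^3 F\!\left(\frac{\nu}{T}\right), \qquad F(u) = \frac{2h}{c^2}\,\frac{1}{e^{hu/k} - 1}$$

$$\nu^3 F\!\left(\frac{\nu}{T}\right) = T^3\,u^3 F(u), \qquad u = \frac{\nu}{T} \quad\Longrightarrow\quad \nu_\text{peak} = u^* T$$

where $u^*$ is the temperature-independent maximizer of $u^3 F(u)$.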

The consequence is that the shape of the black-body radiation function (which was not yet understood) would shift proportionally in frequency (or inversely proportionally in wavelength) with temperature. When Max Planck later formulated the correct black-body radiation function, it did not explicitly include Wien's constant $b$. Rather, the Planck constant $h$ was created and introduced into his new formula. From the Planck constant $h$ and the Boltzmann constant $k$, together with the speed of light $c$, Wien's constant can be obtained as $b = hc/(xk)$, where $x \approx 4.965$ is the dimensionless constant derived from Planck's law in a later section.

Peak differs according to parameterization

| Parameterized by | x | b (μm⋅K) |
|---|---|---|
| Wavelength, $\lambda$ | 4.965114231744276303... | 2898 |
| $\log \lambda$ or $\log \nu$ | 3.920690394872886343... | 3670 |
| Frequency, $\nu$ | 2.821439372122078893... | 5099 |

| Characteristic | x | b (μm⋅K) |
|---|---|---|
| Mean photon energy | 2.701... | 5327 |
| 10th percentile | 6.553... | 2195 |
| 25th percentile | 4.965... | 2898 |
| 50th percentile | 3.503... | 4107 |
| 70th percentile | 2.574... | 5590 |
| 90th percentile | 1.534... | 9376 |

The results in the tables above summarize results from other sections of this article. Percentiles are percentiles of the Planck blackbody spectrum. Only 25 percent of the energy in the black-body spectrum is associated with wavelengths shorter than the value given by the peak-wavelength version of Wien's law.
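The percentile rows can be reproduced from Planck's law. In the following Python sketch, the fraction of power at wavelengths shorter than a given $\lambda$ is computed with the standard series $\int_x^\infty \frac{t^3}{e^t-1}\,dt = \sum_{n\ge1} e^{-nx}\left(\frac{x^3}{n} + \frac{3x^2}{n^2} + \frac{6x}{n^3} + \frac{6}{n^4}\right)$, normalized by $\pi^4/15$, and then inverted by bisection. The helper names and the series truncation are assumptions of this example.

```python
import math

HC_OVER_K_UM_K = 14387.7688  # hc/k in micrometre-kelvins (approximate)


def fraction_above(x: float, terms: int = 200) -> float:
    """Fraction of black-body power with hc/(lambda k T) > x, i.e. at shorter wavelengths.

    Uses integral_x^inf t^3/(e^t - 1) dt = sum_n e^(-n x)(x^3/n + 3x^2/n^2 + 6x/n^3 + 6/n^4),
    divided by the full integral pi^4/15.
    """
    tail = sum(
        math.exp(-n * x) * (x**3 / n + 3 * x**2 / n**2 + 6 * x / n**3 + 6 / n**4)
        for n in range(1, terms + 1)
    )
    return 15 / math.pi**4 * tail


def percentile_x(p: float, lo: float = 1e-6, hi: float = 50.0, iters: int = 100) -> float:
    """Solve fraction_above(x) = p by bisection (fraction_above decreases as x grows)."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if fraction_above(mid) > p:
            lo = mid  # too much power beyond mid, so the root is at larger x
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    for p in (0.10, 0.25, 0.50, 0.70, 0.90):
        x = percentile_x(p)
        print(f"{p:4.0%} of the power below lambda: x = {x:.3f}, b = {HC_OVER_K_UM_K / x:.0f} um K")
```

The 25th percentile lands on $x \approx 4.965$, the same value as the per-wavelength peak, which is the coincidence noted above.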

Notice that for a given temperature, different parameterizations imply different maximal wavelengths. In particular, the curve of intensity per unit frequency peaks at a different wavelength than the curve of intensity per unit wavelength.

For example, using $T$ = 6,000 K (5,730 °C; 10,340 °F) and parameterization by wavelength, the wavelength for maximal spectral radiance is $\lambda$ = 482.962 nm with corresponding frequency $\nu$ = 620.737 THz. For the same temperature, but parameterizing by frequency, the frequency for maximal spectral radiance is $\nu$ = 352.735 THz with corresponding wavelength $\lambda$ = 849.907 nm.
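These numbers follow directly from $\lambda_\text{peak} = hc/(xkT)$ and $\nu_\text{peak} = xkT/h$ with the two values of $x$ listed in the table. A short Python sketch, assuming the exact SI values of $h$, $c$ and $k$ (the function names are illustrative):

```python
H, C, K = 6.62607015e-34, 299_792_458.0, 1.380649e-23  # exact SI constants
X_WAVELENGTH = 4.965114231744276  # root of x = 5(1 - e^-x)
X_FREQUENCY = 2.821439372122079   # root of x = 3(1 - e^-x)


def peak_per_wavelength(temperature_k: float) -> tuple[float, float]:
    """(wavelength in m, frequency in Hz) at the maximum of B_lambda."""
    lam = H * C / (X_WAVELENGTH * K * temperature_k)
    return lam, C / lam


def peak_per_frequency(temperature_k: float) -> tuple[float, float]:
    """(wavelength in m, frequency in Hz) at the maximum of B_nu."""
    nu = X_FREQUENCY * K * temperature_k / H
    return C / nu, nu


if __name__ == "__main__":
    T = 6000.0
    for label, (lam, nu) in [("per wavelength", peak_per_wavelength(T)),
                             ("per frequency", peak_per_frequency(T))]:
        print(f"{label:>14}: {lam * 1e9:.3f} nm, {nu / 1e12:.3f} THz")
```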

These functions are radiance density functions, which are probability density functions scaled to give units of radiance. The density function has different shapes for different parameterizations, depending on relative stretching or compression of the abscissa, which measures the change in probability density relative to a linear change in a given parameter. Since wavelength and frequency have a reciprocal relation, they represent significantly non-linear shifts in probability density relative to one another.

The total radiance is the integral of the distribution over all positive values, and that is invariant for a given temperature under any parameterization. Additionally, for a given temperature the radiance consisting of all photons between two wavelengths must be the same regardless of which distribution you use. That is to say, integrating the wavelength distribution from $\lambda_1$ to $\lambda_2$ will result in the same value as integrating the frequency distribution between the two frequencies that correspond to $\lambda_1$ and $\lambda_2$, namely from $c/\lambda_2$ to $c/\lambda_1$. However, the distribution shape depends on the parameterization, and for a different parameterization the distribution will typically have a different peak density, as these calculations demonstrate.
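The invariance of band integrals can be checked numerically. This Python sketch is only an illustration: it uses Simpson's rule and the standard forms of $B_\lambda$ and $B_\nu$ to integrate a 400 to 700 nm band at 6000 K both ways. The two results agree even though the two density functions peak at different wavelengths.

```python
import math

H, C, K = 6.62607015e-34, 299_792_458.0, 1.380649e-23  # exact SI constants


def b_lambda(lam: float, T: float) -> float:
    """Planck spectral radiance per unit wavelength, W sr^-1 m^-3."""
    return 2 * H * C**2 / lam**5 / math.expm1(H * C / (lam * K * T))


def b_nu(nu: float, T: float) -> float:
    """Planck spectral radiance per unit frequency, W sr^-1 m^-2 Hz^-1."""
    return 2 * H * nu**3 / C**2 / math.expm1(H * nu / (K * T))


def simpson(f, a: float, b: float, n: int = 10_000) -> float:
    """Composite Simpson's rule on [a, b] with an even number n of subintervals."""
    h = (b - a) / n
    odd = sum(f(a + i * h) for i in range(1, n, 2))
    even = sum(f(a + i * h) for i in range(2, n, 2))
    return (f(a) + f(b) + 4 * odd + 2 * even) * h / 3


if __name__ == "__main__":
    T, lam1, lam2 = 6000.0, 400e-9, 700e-9
    by_wavelength = simpson(lambda lam: b_lambda(lam, T), lam1, lam2)
    by_frequency = simpson(lambda nu: b_nu(nu, T), C / lam2, C / lam1)
    print(f"{by_wavelength:.9e} W sr^-1 m^-2 vs {by_frequency:.9e} W sr^-1 m^-2")
```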

The important point of Wien's law, however, is that any such wavelength marker, including the median wavelength (or, alternatively, the wavelength below which any specified percentage of the emission occurs) is proportional to the reciprocal of temperature. That is, the shape of the distribution for a given parameterization scales with and translates according to temperature, and can be calculated once for a canonical temperature, then appropriately shifted and scaled to obtain the distribution for another temperature. This is a consequence of the strong statement of Wien's law.
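One concrete form of this scaling is that $B_\lambda(\lambda, T)/T^5$ depends on $\lambda$ and $T$ only through the product $\lambda T$. The Python sketch below (hypothetical helper names, exact SI constants assumed) evaluates that ratio at the same $\lambda T$ for several temperatures and obtains the same number each time.

```python
import math

H, C, K = 6.62607015e-34, 299_792_458.0, 1.380649e-23  # exact SI constants


def b_lambda(lam: float, T: float) -> float:
    """Planck spectral radiance per unit wavelength."""
    return 2 * H * C**2 / lam**5 / math.expm1(H * C / (lam * K * T))


def scaled_spectrum(lam_times_t: float, T: float) -> float:
    """B_lambda / T^5 evaluated at the wavelength lambda = lam_times_t / T."""
    return b_lambda(lam_times_t / T, T) / T**5


if __name__ == "__main__":
    # Each row holds one value of lambda*T (in m K); the entries across a row should coincide
    for lt in (1e-3, 2.897771955e-3, 5e-3):
        print(lt, [f"{scaled_spectrum(lt, T):.9e}" for T in (300.0, 1500.0, 5778.0)])
```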

Frequency-dependent formulation

For spectral flux considered per unit frequency $d\nu$ (in hertz), Wien's displacement law describes a peak emission at the optical frequency $\nu_\text{peak}$ given by:

$$\nu_\text{peak} = \frac{x\,k}{h}\,T \approx (5.879 \times 10^{10}\ \text{Hz/K}) \cdot T$$

or equivalently

$$h\nu_\text{peak} = x\,kT \approx (2.431 \times 10^{-4}\ \text{eV/K}) \cdot T$$

where $x = 2.821439372122078893\ldots$ is a constant resulting from the maximization equation, $k$ is the Boltzmann constant, $h$ is the Planck constant, and $T$ is the absolute temperature. With the emission now considered per unit frequency, this peak now corresponds to a wavelength about 76% longer than the peak considered per unit wavelength. The relevant math is detailed in the next section.

Derivation from Planck's law

Parameterization by wavelength

Planck's law for the spectrum of black-body radiation predicts the Wien displacement law and may be used to numerically evaluate the constant relating temperature and the peak parameter value for any particular parameterization. Commonly a wavelength parameterization is used and in that case the black-body spectral radiance (power per emitting area per solid angle) is:

$$B_\lambda(\lambda, T) = \frac{2hc^2}{\lambda^5}\,\frac{1}{e^{hc/(\lambda k T)} - 1}$$

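Differentiating $B_\lambda(\lambda, T)$ with respect to $\lambda$ and setting the derivative equal to zero gives:

$$\frac{\partial B_\lambda}{\partial \lambda} = \frac{2hc^2}{\lambda^6}\left(\frac{hc}{\lambda k T}\,\frac{e^{hc/(\lambda kT)}}{\left(e^{hc/(\lambda kT)} - 1\right)^2} - \frac{5}{e^{hc/(\lambda kT)} - 1}\right) = 0$$

which can be simplified to give:

$$\frac{hc}{\lambda k T}\,\frac{1}{1 - e^{-hc/(\lambda k T)}} - 5 = 0$$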

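By defining:

$$x \equiv \frac{hc}{\lambda k T}$$

the equation becomes one in the single variable $x$:

$$\frac{x}{1 - e^{-x}} - 5 = 0$$

which is equivalent to:

$$x = 5\left(1 - e^{-x}\right)$$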

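This equation is solved by

$$x = 5 + W_0\!\left(-5e^{-5}\right)$$

where $W_0$ is the principal branch of the Lambert W function, and gives $x = 4.965114231744276303\ldots$. Solving for the wavelength $\lambda$ in millimetres, and using kelvins for the temperature, yields:

$$\lambda_\text{peak} = \frac{hc}{x\,k}\,\frac{1}{T} = \frac{2.897771955185172661\ldots\ \text{mm⋅K}}{T}$$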
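The Lambert W solution is easy to evaluate without a special-function library. The following Python sketch computes $W_0$ by Newton's method on $w e^w = z$ (a choice made for this example; SciPy, for instance, provides `scipy.special.lambertw`) and then $b = hc/(xk)$ in millimetre-kelvins, assuming the exact SI values of $h$, $c$ and $k$.

```python
import math

H, C, K = 6.62607015e-34, 299_792_458.0, 1.380649e-23  # exact SI constants


def lambert_w0(z: float, tol: float = 1e-15, max_iter: int = 100) -> float:
    """Principal-branch Lambert W for -1/e < z <= 0, via Newton's method on w*e^w = z.

    Starting from w = 0, the iterates decrease monotonically to the root;
    convergence is fast for the small |z| used here.
    """
    w = 0.0
    for _ in range(max_iter):
        ew = math.exp(w)
        step = (w * ew - z) / (ew * (w + 1.0))
        w -= step
        if abs(step) < tol:
            break
    return w


if __name__ == "__main__":
    x = 5 + lambert_w0(-5 * math.exp(-5))  # root of x = 5(1 - e^-x)
    b_mm_k = H * C / (x * K) * 1e3           # Wien's constant b = hc/(xk), in mm K
    print(f"x = {x:.15f}")
    print(f"b = {b_mm_k:.12f} mm K")
```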

Parameterization by frequency

Another common parameterization is by frequency. The derivation yielding the peak parameter value is similar, but starts with the form of Planck's law as a function of frequency $\nu$:

$$B_\nu(\nu, T) = \frac{2h\nu^3}{c^2}\,\frac{1}{e^{h\nu/(kT)} - 1}$$

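The preceding process using this equation yields:

$$-\frac{h\nu}{kT}\,\frac{e^{h\nu/(kT)}}{e^{h\nu/(kT)} - 1} + 3 = 0$$

The net result is:

$$x = 3\left(1 - e^{-x}\right)$$

where now $x \equiv h\nu/(kT)$. This is similarly solved with the Lambert W function:

$$x = 3 + W_0\!\left(-3e^{-3}\right)$$

giving $x = 2.821439372122078893\ldots$.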

Solving for $\nu$ produces:

$$\nu_\text{peak} = \frac{x\,k}{h}\,T = (0.05878925757646824946\ldots\ \text{THz⋅K}^{-1}) \cdot T$$
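As an alternative to the Lambert W function, the implicit equation can be solved by simple fixed-point iteration, because $x \mapsto 3(1 - e^{-x})$ is a contraction near its positive root (its derivative there is $3e^{-x} \approx 0.18$). A Python sketch, assuming the exact SI values of $h$ and $k$; `solve_peak_equation` is a hypothetical name:

```python
import math

H, K = 6.62607015e-34, 1.380649e-23  # exact SI values of the Planck and Boltzmann constants


def solve_peak_equation(n: int, x0: float = 1.0, tol: float = 1e-15, max_iter: int = 500) -> float:
    """Positive root of x = n(1 - e^-x) for n > 1, by fixed-point iteration.

    The map is a contraction near the root because its derivative n*e^-x is below 1 there.
    """
    x = x0
    for _ in range(max_iter):
        x_next = n * (1.0 - math.exp(-x))
        if abs(x_next - x) < tol:
            return x_next
        x = x_next
    return x


if __name__ == "__main__":
    x = solve_peak_equation(3)
    print(f"x = {x:.15f}")
    print(f"nu_peak / T = {x * K / H / 1e12:.15f} THz/K")
```

Passing `n=5` to the same function recovers the wavelength constant $x \approx 4.965$, and the ratio of the two roots, about 1.76, is the "76% longer" figure quoted earlier.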

Parameterization by the logarithm of wavelength or frequency

Using the implicit equation

$$x = 4\left(1 - e^{-x}\right)$$

yields the peak in the spectral radiance density function expressed in the parameter radiance per proportional bandwidth. (That is, the density of irradiance per frequency bandwidth proportional to the frequency itself, which can be calculated by considering infinitesimal intervals of $\ln \nu$ (or equivalently $\ln \lambda$) rather than of frequency itself.) This is perhaps a more intuitive way of presenting "wavelength of peak emission". That yields $x = 3.920690394872886343\ldots$.
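The value $x \approx 3.9207$ can also be found without the implicit equation, by maximizing the density per logarithmic interval directly. Since $\lambda B_\lambda = \nu B_\nu \propto x^4/(e^x - 1)$ with $x = h\nu/(kT)$, a golden-section search over $x$ suffices. A minimal Python sketch; the search bracket [0.5, 10] is an assumption that safely contains the peak:

```python
import math


def log_bandwidth_density(x: float) -> float:
    """nu*B_nu (equivalently lambda*B_lambda) up to a constant factor, with x = h nu / (k T)."""
    return x**4 / math.expm1(x)


def golden_section_max(f, lo: float, hi: float, tol: float = 1e-10) -> float:
    """Locate the maximum of a unimodal function f on [lo, hi] by golden-section search."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0  # 1/phi, about 0.618
    a, b = lo, hi
    while b - a > tol:
        c = b - inv_phi * (b - a)
        d = a + inv_phi * (b - a)
        if f(c) > f(d):
            b = d  # the maximum lies in [a, d]
        else:
            a = c  # the maximum lies in [c, b]
    return 0.5 * (a + b)


if __name__ == "__main__":
    x_peak = golden_section_max(log_bandwidth_density, 0.5, 10.0)
    print(f"x = {x_peak:.7f}")
    print(f"b = hc/(x k) = {14387.77 / x_peak:.0f} um K")  # hc/k is about 14387.77 um K
```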

Mean photon energy as an alternate characterization

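Another way of characterizing the radiance distribution is via the mean photon energy

$$\langle E_\text{phot} \rangle = \frac{\pi^4}{30\,\zeta(3)}\,kT \approx 2.701\,kT$$

where $\zeta$ is the Riemann zeta function. The wavelength corresponding to the mean photon energy is given by

$$\lambda_{\langle E \rangle} = \frac{hc}{\langle E_\text{phot} \rangle} = \frac{30\,\zeta(3)}{\pi^4}\,\frac{hc}{kT}$$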
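The constant 2.701 and the corresponding table entry can be checked numerically. In this Python sketch $\zeta(3)$ is summed directly with an Euler-Maclaurin tail correction (an implementation choice for the example, not part of the source), assuming the exact SI values of $h$, $c$ and $k$:

```python
import math

H, C, K = 6.62607015e-34, 299_792_458.0, 1.380649e-23  # exact SI constants


def zeta(s: int, terms: int = 1000) -> float:
    """Riemann zeta(s) for s > 1: partial sum plus Euler-Maclaurin estimate of the tail."""
    partial = sum(n ** -s for n in range(1, terms + 1))
    tail = terms ** (1 - s) / (s - 1) - 0.5 * terms ** -s + s * terms ** (-s - 1) / 12
    return partial + tail


MEAN_ENERGY_FACTOR = math.pi**4 / (30 * zeta(3))  # <E_phot> / (k T)


def mean_photon_wavelength_m(temperature_k: float) -> float:
    """Wavelength hc/<E_phot> of a photon carrying the mean photon energy."""
    return H * C / (MEAN_ENERGY_FACTOR * K * temperature_k)


if __name__ == "__main__":
    print(f"<E_phot>/kT = {MEAN_ENERGY_FACTOR:.6f}")
    print(f"b = {mean_photon_wavelength_m(1.0) * 1e6:.1f} um K")
```

It gives $\langle E_\text{phot}\rangle/(kT) \approx 2.7012$ and a $b$ of roughly $5.33 \times 10^3$ μm⋅K, in line with the table above.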

Criticism

Marr and Wilkin (2012) contend that the widespread teaching of Wien's displacement law in introductory courses is undesirable, and it would be better replaced by alternate material. They argue that teaching the law is problematic because:

- the Planck curve is too broad for the peak to stand out or be regarded as significant;

- the location of the peak depends on the parameterization, and they cite several sources as concurring "that the designation of any peak of the function is not meaningful and should, therefore, be de-emphasized";

- the law is not used for determining temperatures in actual practice, direct use of the Planck function being relied upon instead.