In

physical cosmology and

astronomy,

dark energy is an unknown form of

energy which is hypothesized to permeate all of space, tending to

accelerate the

expansion of the universe.

[1] Dark energy is the most accepted hypothesis to explain the observations since the 1990s indicating that the universe is

expanding at an

accelerating rate. According to the

Planck mission team, and based on the

standard model of cosmology, on a

mass–energy equivalence basis, the

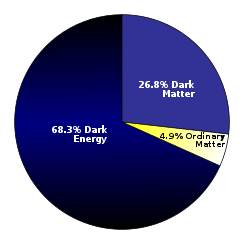

observable universe contains 26.8%

dark matter, 68.3% dark energy (for a total of 95.1%) and 4.9%

ordinary matter.

[2][3][4][5] Again on a mass–energy equivalence basis, the density of dark energy (6.91 × 10

−27 kg/m

3) is very low, much less than the density of ordinary matter or dark matter within galaxies. However, it comes to dominate the mass–energy of the universe because it is uniform across space.

[6]
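To put the quoted density of 6.91 × 10⁻²⁷ kg/m³ into more familiar units, the short Python sketch below converts it three ways: to g/cm³, to an energy density using E = mc², and to an equivalent number of hydrogen atoms per cubic metre. The hydrogen-atom mass is an approximate value and the constant names are illustrative. With these inputs the last figure comes out at roughly four hydrogen-atom masses per cubic metre.

```python
"""Unit conversions for the quoted dark-energy density (illustrative sketch)."""

RHO_DE_KG_M3 = 6.91e-27      # dark-energy density quoted in the text, kg/m^3
C_M_S = 299_792_458.0        # speed of light, m/s (exact by definition)
M_H_KG = 1.6735575e-27       # mass of one hydrogen atom, kg (approximate)


def to_g_per_cm3(rho_kg_m3: float) -> float:
    """Convert kg/m^3 to g/cm^3: 1 kg/m^3 = 1000 g / 1e6 cm^3 = 1e-3 g/cm^3."""
    return rho_kg_m3 * 1e-3


def energy_density_j_m3(rho_kg_m3: float) -> float:
    """Mass-energy equivalence: energy density u = rho * c^2, in J/m^3."""
    return rho_kg_m3 * C_M_S ** 2


def hydrogen_atoms_per_m3(rho_kg_m3: float) -> float:
    """Express a mass density as an equivalent number of hydrogen atoms per m^3."""
    return rho_kg_m3 / M_H_KG


if __name__ == "__main__":
    print(f"{to_g_per_cm3(RHO_DE_KG_M3):.2e} g/cm^3")
    print(f"{energy_density_j_m3(RHO_DE_KG_M3):.2e} J/m^3")
    print(f"{hydrogen_atoms_per_m3(RHO_DE_KG_M3):.1f} hydrogen-atom masses per m^3")
```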

Two proposed forms for dark energy are the cosmological constant, a constant energy density filling space homogeneously,[7] and scalar fields such as quintessence or moduli, dynamic quantities whose energy density can vary in time and space. Contributions from scalar fields that are constant in space are usually also included in the cosmological constant. The cosmological constant can be formulated to be equivalent to vacuum energy. Scalar fields that do change in space can be difficult to distinguish from a cosmological constant because the change may be extremely slow.

High-precision measurements of the expansion of the universe are required to understand how the expansion rate changes over time and space. In general relativity, the evolution of the expansion rate is parameterized by the cosmological equation of state (the relationship between temperature, pressure, and combined matter, energy, and vacuum energy density for any region of space). Measuring the equation of state for dark energy is one of the biggest efforts in observational cosmology today.
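The equation of state is usually summarized by the dimensionless parameter w = P/(ρc²). For a single component with constant w in an FLRW universe, energy conservation (the continuity equation) gives ρ ∝ a^(−3(1+w)), where a is the scale factor. The Python sketch below uses illustrative names that do not come from any library. It shows how matter (w = 0), radiation (w = 1/3) and a cosmological constant (w = −1) dilute when the universe doubles in linear size.

```python
"""How a fluid with constant equation-of-state parameter w dilutes as space expands."""


def density_ratio(w: float, a: float) -> float:
    """Return rho(a) / rho(a=1) for a fluid with constant w = P / (rho c^2).

    Follows from the FLRW continuity equation, which gives rho ~ a^(-3(1+w)).
    """
    if a <= 0:
        raise ValueError("scale factor must be positive")
    return a ** (-3.0 * (1.0 + w))


# Illustrative components and their equation-of-state parameters.
COMPONENTS = {
    "matter": 0.0,
    "radiation": 1.0 / 3.0,
    "cosmological constant": -1.0,
}

if __name__ == "__main__":
    for name, w in COMPONENTS.items():
        print(f"{name:>21}: rho(a=2)/rho(a=1) = {density_ratio(w, 2.0):.4f}")
```

Only w = −1 leaves the density unchanged. Any w < −1 makes it grow as space expands.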

Adding the cosmological constant to cosmology's standard FLRW metric leads to the Lambda-CDM model, which has been referred to as the "standard model" of cosmology because of its precise agreement with observations. Dark energy has been used as a crucial ingredient in a recent attempt to formulate a cyclic model for the universe.[8]

Nature of dark energy

Many things about the nature of dark energy remain matters of speculation. The evidence for dark energy is indirect but comes from three independent sources:

- Distance measurements and their relation to redshift, which suggest the universe has expanded more in the last half of its life.[9]

- The theoretical need for a type of additional energy that is not matter or dark matter to form the observationally flat universe (absence of any detectable global curvature).

- Measurements of large-scale wave patterns of mass density in the universe.

Dark energy is thought to be very homogeneous and not very dense, and it is not known to interact through any of the fundamental forces other than gravity. Since it is quite rarefied (roughly 10⁻³⁰ g/cm³), it is unlikely to be detectable in laboratory experiments. Dark energy can have such a profound effect on the universe, making up 68% of universal density, only because it uniformly fills otherwise empty space. The two leading models are a cosmological constant and quintessence. Both models share the characteristic that dark energy must have negative pressure.

Effect of dark energy: a small constant negative pressure of vacuum

Independently of its actual nature, dark energy would need to have a strong negative pressure (acting repulsively) in order to explain the observed acceleration of the expansion of the universe.

According to general relativity, the pressure within a substance contributes to its gravitational attraction for other things just as its mass density does. This happens because the physical quantity that causes matter to generate gravitational effects is the stress–energy tensor, which contains both the energy (or matter) density of a substance and its pressure and viscosity.

In the Friedmann–Lemaître–Robertson–Walker metric, it can be shown that a strong constant negative pressure throughout the universe causes an acceleration in universe expansion if the universe is already expanding, or a deceleration in universe contraction if the universe is already contracting. More exactly, the second derivative of the universe scale factor, $\ddot{a}$, is positive if the equation of state of the universe is such that $w = P/(\rho c^2) < -1/3$ (see Friedmann equations).
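The acceleration condition can be checked numerically. The second Friedmann equation gives ä/a = −(4πG/3) Σ(ρᵢ + 3Pᵢ/c²), which can be rewritten as a deceleration parameter q = ½ Σ Ωᵢ(1 + 3wᵢ). Here Ωᵢ is each component's density in units of the critical density, and the expansion accelerates exactly when q < 0. For a single fluid this reduces to w < −1/3. The Python sketch below assumes constant w for each component and neglects radiation. It uses the Planck fractions quoted above, with 31.7% matter (dark plus ordinary) and 68.3% dark energy treated as a cosmological constant.

```python
"""Sign of the cosmic acceleration from the second Friedmann equation (sketch)."""


def deceleration_parameter(components):
    """q = -a * a_ddot / a_dot**2 = 0.5 * sum(Omega_i * (1 + 3 * w_i)).

    `components` is an iterable of (Omega_i, w_i) pairs, where Omega_i is the
    component's density in units of the critical density. Because
    a_ddot / a = -(4 pi G / 3) * sum(rho_i + 3 P_i / c^2), a_ddot > 0 exactly when q < 0.
    """
    return 0.5 * sum(omega * (1.0 + 3.0 * w) for omega, w in components)


def accelerates(components) -> bool:
    """True if the second derivative of the scale factor is positive."""
    return deceleration_parameter(components) < 0.0


# Planck-like budget quoted in the text: 31.7% matter (w = 0), 68.3% Lambda (w = -1).
TODAY = [(0.317, 0.0), (0.683, -1.0)]

if __name__ == "__main__":
    print(f"q0 = {deceleration_parameter(TODAY):+.4f}, accelerating: {accelerates(TODAY)}")
    for w in (-0.3, -0.4):
        print(f"single fluid, w = {w:+.1f}: accelerating = {accelerates([(1.0, w)])}")
```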

This accelerating expansion effect is sometimes labeled "gravitational repulsion", which is a colorful but possibly confusing expression. In fact, a negative pressure does not influence the gravitational interaction between masses, which remains attractive, but rather alters the overall evolution of the universe at the cosmological scale, typically resulting in the accelerating expansion of the universe despite the attraction among the masses present in the universe.

The acceleration is simply a function of dark energy density. Dark energy is persistent: its density remains constant (experimentally, within a factor of 1:10), i.e. it does not get diluted when space expands.

Evidence of existence

Supernovae

Figure: A Type Ia supernova (bright spot on the bottom-left) near a galaxy.

In 1998, published observations of Type Ia supernovae ("one-A") by the High-Z Supernova Search Team,[10] followed in 1999 by the Supernova Cosmology Project,[11] suggested that the expansion of the universe is accelerating.[12] The 2011 Nobel Prize in Physics was awarded to Saul Perlmutter, Brian P. Schmidt and Adam G. Riess for their leadership in the discovery.[13][14]

Since then, these observations have been corroborated by several independent sources. Measurements of the cosmic microwave background, gravitational lensing, and the large-scale structure of the cosmos, as well as improved measurements of supernovae, have been consistent with the Lambda-CDM model.[15] Some researchers argue that the only indications of the existence of dark energy are observations of distance measurements and their associated redshifts. On this view, cosmic microwave background anisotropies and baryon acoustic oscillations only show that redshifts are larger than expected from a "dusty" Friedmann–Lemaître universe and the locally measured Hubble constant.[16]

Supernovae are useful for cosmology because they are excellent standard candles across cosmological distances. They allow the expansion history of the universe to be measured by looking at the relationship between the distance to an object and its redshift, which indicates how fast it is receding from us. The relationship is roughly linear, according to Hubble's law. It is relatively easy to measure redshift, but finding the distance to an object is more difficult. Usually, astronomers use standard candles: objects for which the intrinsic brightness, the absolute magnitude, is known. This allows the object's distance to be measured from its actual observed brightness, or apparent magnitude. Type Ia supernovae are the best-known standard candles across cosmological distances because of their extreme and consistent luminosity.
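The standard-candle method rests on the distance modulus, m − M = 5 log₁₀(d / 10 pc). The Python sketch below ignores dust extinction and K-corrections. It assumes a typical Type Ia peak absolute magnitude of about −19.3, an approximate value that this article does not give, and the observed magnitude in the example is hypothetical. At cosmological distances the d recovered this way is the luminosity distance, which is the quantity supernova surveys compare with redshift.

```python
"""Distance from a standard candle via the distance modulus (illustrative sketch)."""
import math

M_IA = -19.3  # assumed typical peak absolute magnitude of a Type Ia supernova (approximate)


def luminosity_distance_pc(apparent_mag: float, absolute_mag: float) -> float:
    """Invert m - M = 5 log10(d / 10 pc); extinction and K-corrections are ignored."""
    return 10.0 ** ((apparent_mag - absolute_mag) / 5.0 + 1.0)


def distance_modulus(distance_pc: float) -> float:
    """m - M for an object at the given distance in parsecs."""
    return 5.0 * math.log10(distance_pc / 10.0)


if __name__ == "__main__":
    m_obs = 19.0  # hypothetical observed peak apparent magnitude
    d_mpc = luminosity_distance_pc(m_obs, M_IA) / 1e6
    print(f"distance ~ {d_mpc:.0f} Mpc")
```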

Recent observations of supernovae are consistent with a universe made up of 71.3% dark energy and 27.4% of a combination of dark matter and baryonic matter.[17]

Cosmic microwave background

The existence of dark energy, in whatever form, is needed to reconcile the measured geometry of space with the total amount of matter in the universe. Measurements of cosmic microwave background (CMB) anisotropies indicate that the universe is close to flat. For the shape of the universe to be flat, the mass/energy density of the universe must be equal to the critical density. The total amount of matter in the universe (including baryons and dark matter), as measured from the CMB spectrum, accounts for only about 30% of the critical density. This implies the existence of an additional form of energy to account for the remaining 70%.[15] The seven-year analysis from the Wilkinson Microwave Anisotropy Probe (WMAP) spacecraft estimated a universe made up of 72.8% dark energy, 22.7% dark matter and 4.5% ordinary matter.[4] Work done in 2013 based on the Planck spacecraft observations of the CMB gave a more accurate estimate of 68.3% dark energy, 26.8% dark matter and 4.9% ordinary matter.[18]
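The flatness argument involves only simple arithmetic. The critical density is ρ_c = 3H₀²/(8πG). If the total density equals ρ_c and matter supplies about 30% of it, then about 70% must come from something else. The Python sketch below computes ρ_c for an assumed H₀ = 67.7 km/s/Mpc, a value chosen for illustration and not taken from this article. It also checks that the WMAP and Planck budgets quoted above each add up to the full critical density.

```python
"""Critical density and the flatness argument for dark energy (illustrative sketch)."""
import math

G = 6.67430e-11                    # Newton's constant, m^3 kg^-1 s^-2
M_PER_MPC = 3.0856775814913673e22  # metres per megaparsec


def critical_density(h0_km_s_mpc: float) -> float:
    """rho_c = 3 H0^2 / (8 pi G), returned in kg/m^3."""
    h0_per_s = h0_km_s_mpc * 1e3 / M_PER_MPC
    return 3.0 * h0_per_s ** 2 / (8.0 * math.pi * G)


def missing_fraction(omega_total: float, omega_matter: float) -> float:
    """Fraction of the critical density not accounted for by matter."""
    return omega_total - omega_matter


# Budgets quoted in the text, as fractions of the critical density.
BUDGETS = {
    "WMAP seven-year": {"dark energy": 0.728, "dark matter": 0.227, "ordinary matter": 0.045},
    "Planck 2013": {"dark energy": 0.683, "dark matter": 0.268, "ordinary matter": 0.049},
}

if __name__ == "__main__":
    print(f"rho_c(H0 = 67.7) = {critical_density(67.7):.2e} kg/m^3")  # H0 is an assumed value
    print(f"missing fraction, flat universe with 30% matter: {missing_fraction(1.0, 0.30):.2f}")
    for name, budget in BUDGETS.items():
        print(f"{name}: components sum to {sum(budget.values()):.3f}")
```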

Large-scale structure

The theory of large-scale structure, which governs the formation of structures in the universe (stars, quasars, galaxies and galaxy groups and clusters), also suggests that the density of matter in the universe is only 30% of the critical density.

A 2011 survey, the WiggleZ galaxy survey of more than 200,000 galaxies, provided further evidence for the existence of dark energy, although the exact physics behind it remains unknown.[19][20] The WiggleZ survey from the Australian Astronomical Observatory scanned the galaxies to determine their redshift. Then, by exploiting the fact that baryon acoustic oscillations have left voids of a regular diameter of about 150 Mpc, surrounded by galaxies, the survey used the voids as standard rulers to determine distances to galaxies as far as 2,000 Mpc (redshift 0.6). This allowed astronomers to determine the speeds of the galaxies more accurately from their redshift and distance. The data confirmed cosmic acceleration up to half of the age of the universe (7 billion years) and constrained its inhomogeneity to 1 part in 10.[20] This provides a confirmation of cosmic acceleration independent of supernovae.
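A standard ruler yields a distance as follows. If a feature of known comoving size s, here the roughly 150 Mpc BAO scale, subtends a small angle θ on the sky, then in a spatially flat universe its comoving distance is approximately d ≈ s/θ. The Python sketch below applies only this small-angle simplification. The 4.3° angle in the example is hypothetical, chosen to give a distance near the 2,000 Mpc mentioned above. Real BAO analyses fit the full galaxy correlation function and also use the ruler along the line of sight.

```python
"""Distance from a standard ruler in the small-angle, flat-space approximation (sketch)."""
import math

BAO_SCALE_MPC = 150.0  # comoving size of the BAO ruler quoted in the text


def distance_from_ruler(angular_size_rad: float, ruler_mpc: float = BAO_SCALE_MPC) -> float:
    """Comoving distance d ~ s / theta for a ruler of comoving size s (flat universe)."""
    if angular_size_rad <= 0:
        raise ValueError("angular size must be positive")
    return ruler_mpc / angular_size_rad


if __name__ == "__main__":
    theta = math.radians(4.3)  # hypothetical measured angular scale
    print(f"d ~ {distance_from_ruler(theta):.0f} Mpc")
```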

Late-time integrated Sachs-Wolfe effect

Accelerated cosmic expansion causes gravitational potential wells and hills to flatten as photons pass through them, producing cold spots and hot spots on the CMB aligned with vast supervoids and superclusters. This so-called late-time Integrated Sachs–Wolfe effect (ISW) is a direct signal of dark energy in a flat universe.[21] It was reported at high significance in 2008 by Ho et al.[22] and Giannantonio et al.[23]

Observational Hubble constant data

A new approach to testing for evidence of dark energy using observational Hubble parameter (H(z)) data (OHD) has gained significant attention in recent years.[24][25][26][27] The Hubble parameter is measured as a function of cosmological redshift. OHD directly tracks the expansion history of the universe by taking passively evolving early-type galaxies as "cosmic chronometers".[28] In this sense, the approach provides standard clocks in the universe. The core of this idea is the measurement of the differential age evolution of these cosmic chronometers as a function of redshift. This provides a direct estimate of the Hubble parameter, $H(z) = -\frac{1}{1+z}\frac{dz}{dt} \approx -\frac{1}{1+z}\frac{\Delta z}{\Delta t}$. The merit of this approach is clear. Reliance on a differential quantity, $\Delta z/\Delta t$, can minimize many common issues and systematic effects. In addition, because it is a direct measurement of the Hubble parameter rather than of its integral, as with supernovae and baryon acoustic oscillations (BAO), it carries more information and is computationally appealing. For these reasons, it has been widely used to examine the accelerated cosmic expansion and study properties of dark energy.
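The differential-age estimate H(z) ≈ −1/(1+z) · Δz/Δt can be evaluated directly from two galaxy populations. The Python sketch below assumes that both populations formed at about the same epoch, so that their age difference equals the cosmic-time difference Δt, and it evaluates the result at the mean redshift of the pair. The redshifts and ages in the example are hypothetical inputs, not measurements. The conversion factor 1 Gyr⁻¹ ≈ 977.8 km/s/Mpc is approximate.

```python
"""Differential-age ("cosmic chronometer") estimate of H(z) (illustrative sketch)."""

KM_S_MPC_PER_INV_GYR = 977.79  # 1 / Gyr expressed in km/s/Mpc (approximate)


def hubble_from_chronometers(z1: float, z2: float, age1_gyr: float, age2_gyr: float) -> float:
    """Estimate H(z) = -1/(1+z) * dz/dt from two passively evolving galaxy populations.

    age1_gyr and age2_gyr are the populations' ages at redshifts z1 and z2. If both
    formed at about the same epoch, their age difference equals the cosmic-time
    difference dt. The result is evaluated at the mean redshift, in km/s/Mpc.
    """
    dz = z2 - z1
    dt = age2_gyr - age1_gyr
    if dt == 0:
        raise ValueError("the two populations must differ in age")
    z_mid = 0.5 * (z1 + z2)
    h_per_gyr = -dz / ((1.0 + z_mid) * dt)
    return h_per_gyr * KM_S_MPC_PER_INV_GYR


if __name__ == "__main__":
    # Hypothetical inputs: the higher-redshift population is 0.38 Gyr younger.
    h = hubble_from_chronometers(z1=0.40, z2=0.45, age1_gyr=4.00, age2_gyr=3.62)
    print(f"H(z ~ 0.425) ~ {h:.0f} km/s/Mpc")
```

Because only the age difference enters, any offset common to both age estimates cancels. This is the "differential" advantage described above.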

Theories of explanation

Cosmological constant

Figure: Lambda, the letter that represents the cosmological constant.

The simplest explanation for dark energy is that it is the "cost of having space": that is, a volume of space has some intrinsic, fundamental energy. This is the cosmological constant, sometimes called Lambda (hence Lambda-CDM model) after the Greek letter Λ, the symbol used to represent this quantity mathematically. Since energy and mass are related by E = mc², Einstein's theory of general relativity predicts that this energy will have a gravitational effect. It is sometimes called a vacuum energy because it is the energy density of empty vacuum. In fact, most theories of particle physics predict vacuum fluctuations that would give the vacuum this sort of energy. This is related to the Casimir effect, in which there is a small suction into regions where virtual particles are geometrically inhibited from forming (e.g. between plates with tiny separation). The cosmological constant is estimated by cosmologists to be on the order of 10⁻²⁹ g/cm³, or about 10⁻¹²⁰ in reduced Planck units.[citation needed] Particle physics predicts a natural value of 1 in reduced Planck units, leading to a large discrepancy.
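The figure of about 10⁻¹²⁰ comes from dividing the quoted density by the unit of density in reduced Planck units, where G is replaced by 8πG: c⁵/(ħ(8πG)²), about 8 × 10⁹³ kg/m³. The Python sketch below uses standard CODATA values for the constants. A "natural" particle-physics value would be of order 1 in these units, so the logarithm it prints measures the size of the discrepancy.

```python
"""Size of the cosmological-constant discrepancy in reduced Planck units (sketch)."""
import math

C = 299_792_458.0       # speed of light, m/s
HBAR = 1.054571817e-34  # reduced Planck constant, J s
G = 6.67430e-11         # Newton's constant, m^3 kg^-1 s^-2


def reduced_planck_density() -> float:
    """Unit of mass density when G is replaced by 8*pi*G: c^5 / (hbar * (8 pi G)^2), kg/m^3."""
    return C ** 5 / (HBAR * (8.0 * math.pi * G) ** 2)


def log10_in_reduced_planck_units(rho_g_cm3: float) -> float:
    """log10 of a density (given in g/cm^3) expressed in reduced Planck units."""
    rho_kg_m3 = rho_g_cm3 * 1e3  # 1 g/cm^3 = 1000 kg/m^3
    return math.log10(rho_kg_m3 / reduced_planck_density())


if __name__ == "__main__":
    print(f"log10(rho_Lambda in reduced Planck units) ~ {log10_in_reduced_planck_units(1e-29):.1f}")
```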

The cosmological constant has a negative pressure equal in magnitude to its energy density and so causes the expansion of the universe to accelerate. The reason why a cosmological constant has negative pressure can be seen from classical thermodynamics: energy must be lost from inside a container to do work on the container. A change in volume $dV$ requires work done equal to a change of energy $-P\,dV$, where $P$ is the pressure. But the amount of energy in a container full of vacuum actually increases when the volume increases ($dV$ is positive), because the energy is equal to $\rho V$, where $\rho$ (rho) is the energy density of the cosmological constant. Therefore, $P$ is negative and, in fact, $P = -\rho$.
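The thermodynamic argument can be written out in two lines, in units with c = 1 so that ρ is an energy density. Because ρ stays constant as the volume changes, the energy gained must equal the work term:

$$
dE = -P\,dV, \qquad E = \rho V \;\Rightarrow\; dE = \rho\,dV \quad (\rho\ \text{constant})
$$

$$
\rho\,dV = -P\,dV \;\Rightarrow\; P = -\rho, \qquad w \equiv \frac{P}{\rho} = -1 .
$$

The resulting w = −1 lies well below the threshold w < −1/3 required for accelerated expansion.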

A major outstanding problem is that most quantum field theories predict a huge cosmological constant from the energy of the quantum vacuum, more than 100 orders of magnitude too large.[7] This would need to be cancelled almost, but not exactly, by an equally large term of the opposite sign. Some supersymmetric theories require a cosmological constant that is exactly zero,[citation needed] which does not help because supersymmetry must be broken. The present scientific consensus amounts to extrapolating the empirical evidence where it is relevant to predictions, and fine-tuning theories until a more elegant solution is found. Technically, this amounts to checking theories against macroscopic observations. Unfortunately, as the known error margin in the constant predicts the fate of the universe more than its present state, many such "deeper" questions remain unknown.

In spite of its problems, the cosmological constant is in many respects the most economical solution to the problem of cosmic acceleration. One number successfully explains a multitude of observations. Thus, the current standard model of cosmology, the Lambda-CDM model, includes the cosmological constant as an essential feature.

Quintessence

In quintessence models of dark energy, the observed acceleration of the scale factor is caused by the potential energy of a dynamical field, referred to as the quintessence field. Quintessence differs from the cosmological constant in that it can vary in space and time. In order for it not to clump and form structure like matter, the field must be very light so that it has a large Compton wavelength.
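To get a sense of how light "very light" is, one rough criterion (an assumption made here for illustration) is that the field's reduced Compton wavelength ħ/(mc) be at least as large as the Hubble radius c/H₀. That gives mc² ≲ ħH₀. The Python sketch below evaluates this bound for an assumed H₀ = 67.7 km/s/Mpc. The result is of order 10⁻³³ eV, far below the mass of any known particle.

```python
"""Order-of-magnitude mass of a field whose Compton wavelength spans the Hubble radius."""

HBAR = 1.054571817e-34             # J s
C = 299_792_458.0                  # m/s
EV = 1.602176634e-19               # J per eV
M_PER_MPC = 3.0856775814913673e22  # metres per megaparsec


def mass_ev_for_compton_length(length_m: float) -> float:
    """Mass (in eV/c^2) whose reduced Compton wavelength hbar / (m c) equals length_m."""
    return HBAR * C / length_m / EV


def hubble_radius_m(h0_km_s_mpc: float) -> float:
    """Hubble radius c / H0, in metres."""
    h0_per_s = h0_km_s_mpc * 1e3 / M_PER_MPC
    return C / h0_per_s


if __name__ == "__main__":
    m = mass_ev_for_compton_length(hubble_radius_m(67.7))  # H0 is an assumed value
    print(f"m ~ {m:.1e} eV/c^2")
```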

No evidence of quintessence is yet available, but it has not been ruled out either. It generally predicts a slightly slower acceleration of the expansion of the universe than the cosmological constant. Some scientists think that the best evidence for quintessence would come from violations of Einstein's equivalence principle and variation of the fundamental constants in space or time.[citation needed] Scalar fields are predicted by the standard model and string theory, but an analogous problem to the cosmological constant problem (or the problem of constructing models of cosmic inflation) occurs: renormalization theory predicts that scalar fields should acquire large masses.

The cosmic coincidence problem asks why the cosmic acceleration began when it did. If cosmic acceleration had begun earlier in the universe, structures such as galaxies would never have had time to form, and life, at least as we know it, would never have had a chance to exist. Proponents of the anthropic principle view this as support for their arguments. However, many models of quintessence have a so-called tracker behavior, which solves this problem. In these models, the quintessence field has a density which closely tracks (but is less than) the radiation density until matter-radiation equality, which triggers quintessence to start behaving as dark energy, eventually dominating the universe. This naturally sets the low energy scale of the dark energy.[citation needed]

In 2004, when scientists fit the evolution of dark energy to the cosmological data, they found that the equation of state had possibly crossed the cosmological constant boundary (w = −1) from above to below. A no-go theorem has been proved showing that this scenario requires dark energy models with at least two degrees of freedom. This is the so-called quintom scenario.

Some special cases of quintessence are phantom energy, in which the energy density of quintessence actually increases with time, and k-essence (short for kinetic quintessence), which has a non-standard form of kinetic energy. They can have unusual properties: phantom energy, for example, can cause a Big Rip.
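For phantom energy with constant w < −1, the time remaining before a Big Rip can be estimated with the approximation published by Caldwell, Kamionkowski and Weinberg (2003): t_rip − t₀ ≈ (2/3)|1 + w|⁻¹ H₀⁻¹ (1 − Ω_m)^(−1/2). This approximation assumes a flat universe containing only matter and phantom energy. The parameter values in the Python sketch below are illustrative assumptions: w = −1.5, H₀ = 70 km/s/Mpc and Ω_m = 0.3. With these values the estimate is roughly 22 billion years.

```python
"""Approximate time until a Big Rip for constant phantom w < -1 (sketch)."""
import math

GYR_S = 3.15576e16                 # seconds per gigayear (Julian years)
M_PER_MPC = 3.0856775814913673e22  # metres per megaparsec


def hubble_time_gyr(h0_km_s_mpc: float) -> float:
    """Hubble time 1 / H0, in Gyr."""
    return M_PER_MPC / (h0_km_s_mpc * 1e3) / GYR_S


def time_to_big_rip_gyr(w: float, h0_km_s_mpc: float, omega_m: float) -> float:
    """t_rip - t0 ~ (2/3) |1+w|^-1 H0^-1 (1 - Omega_m)^-1/2.

    Valid as an approximation for a flat universe of matter plus phantom
    energy with constant w < -1.
    """
    if w >= -1.0:
        raise ValueError("a Big Rip requires phantom energy, w < -1")
    if not 0.0 <= omega_m < 1.0:
        raise ValueError("omega_m must lie in [0, 1)")
    return (2.0 / 3.0) / abs(1.0 + w) * hubble_time_gyr(h0_km_s_mpc) / math.sqrt(1.0 - omega_m)


if __name__ == "__main__":
    print(f"t_rip - t0 ~ {time_to_big_rip_gyr(-1.5, 70.0, 0.3):.0f} Gyr")
```

The closer w is to −1, the longer the delay. In the limit w → −1 (a cosmological constant) no Big Rip occurs at all.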

Alternative ideas

Some alternatives to dark energy aim to explain the observational data by a more refined use of established theories, focusing, for example, on the gravitational effects of density inhomogeneities, or on consequences of electroweak symmetry breaking in the early universe. If we are located in an emptier-than-average region of space, the observed cosmic expansion rate could be mistaken for a variation in time, or acceleration.[29][30][31][32] A different approach uses a cosmological extension of the equivalence principle to show how space might appear to be expanding more rapidly in the voids surrounding our local cluster. While weak, such effects considered cumulatively over billions of years could become significant, creating the illusion of cosmic acceleration, and making it appear as if we live in a Hubble bubble.[33][34][35]

Another class of theories attempts to come up with an all-encompassing theory of both dark matter and dark energy as a single phenomenon that modifies the laws of gravity at various scales. An example of this type of theory is the theory of dark fluid. Another class of theories that unifies dark matter and dark energy consists of covariant theories of modified gravity. These theories alter the dynamics of space-time such that the modified dynamics account for what has been attributed to the presence of dark energy and dark matter.[36]

A 2011 paper in the journal Physical Review D by Christos Tsagas, a cosmologist at Aristotle University of Thessaloniki in Greece, argued that the accelerated expansion of the universe is likely an illusion caused by our motion relative to the rest of the universe. The paper cites data showing that the 2.5-billion-light-year-wide region of space we are inside is moving very quickly relative to everything around it. If the theory is confirmed, then dark energy would not exist (but the "dark flow" still might).[37][38]

Some theorists think that dark energy and cosmic acceleration are a failure of general relativity on very large scales, larger than superclusters.[citation needed] However, most attempts at modifying general relativity have turned out to be either equivalent to theories of quintessence, or inconsistent with observations.[citation needed] Other ideas for dark energy have come from string theory, brane cosmology and the holographic principle, but have not yet proved[citation needed] as compelling as quintessence and the cosmological constant.

On string theory, an article in the journal Nature described:

> String theories, popular with many particle physicists, make it possible, even desirable, to think that the observable universe is just one of 10⁵⁰⁰ universes in a grander multiverse, says Leonard Susskind, a cosmologist at Stanford University in California. The vacuum energy will have different values in different universes, and in many or most it might indeed be vast. But it must be small in ours because it is only in such a universe that observers such as ourselves can evolve.

Paul Steinhardt in the same article criticizes string theory's explanation of dark energy, stating "...Anthropics and randomness don't explain anything... I am disappointed with what most theorists are willing to accept".[39]

Another set of proposals is based on the possibility of a double metric tensor for space-time.[40][41] It has been argued that time-reversed solutions in general relativity require such a double metric for consistency, and that both dark matter and dark energy can be understood in terms of time-reversed solutions of general relativity.[42]

It has been shown that if inertia is assumed to be due to the effect of horizons on Unruh radiation, then this predicts galaxy rotation and a cosmic acceleration similar to that observed.[43]

Implications for the fate of the universe

Cosmologists estimate that the acceleration began roughly 5 billion years ago. Before that, it is thought that the expansion was decelerating, due to the attractive influence of dark matter and baryons. The density of dark matter in an expanding universe decreases more quickly than that of dark energy, and eventually the dark energy dominates.

Specifically, when the volume of the universe doubles, the density of dark matter is halved, but the density of dark energy is nearly unchanged (it is exactly constant in the case of a cosmological constant).
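The doubling rule makes the crossover easy to locate. Matter density scales as (1 + z)³, while the density of a cosmological constant stays fixed. Take the Planck fractions quoted earlier (31.7% matter, 68.3% dark energy) and neglect radiation. The two densities were then equal at z = (Ω_Λ/Ω_m)^(1/3) − 1 ≈ 0.29. Acceleration requires ρ_m < 2ρ_Λ, so it began somewhat earlier, at z ≈ 0.63. The Python sketch below computes both redshifts. Converting them into ages requires a further integral over the expansion history, which this sketch does not attempt.

```python
"""When dark energy overtakes matter in a flat Lambda-CDM universe (sketch)."""

OMEGA_M = 0.317   # matter today (dark + ordinary), from the Planck figures in the text
OMEGA_DE = 0.683  # cosmological constant today


def matter_density_factor(volume_factor: float) -> float:
    """Matter density scales as 1 / volume because the amount of matter is conserved."""
    return 1.0 / volume_factor


def z_equality(omega_m: float = OMEGA_M, omega_de: float = OMEGA_DE) -> float:
    """Redshift where rho_m = rho_Lambda, i.e. omega_m (1+z)^3 = omega_de."""
    return (omega_de / omega_m) ** (1.0 / 3.0) - 1.0


def z_acceleration_onset(omega_m: float = OMEGA_M, omega_de: float = OMEGA_DE) -> float:
    """Redshift where the deceleration parameter vanishes, i.e. rho_m = 2 rho_Lambda."""
    return (2.0 * omega_de / omega_m) ** (1.0 / 3.0) - 1.0


if __name__ == "__main__":
    print(f"matter density after the volume doubles: x{matter_density_factor(2.0)}")
    print(f"matter-Lambda equality at z ~ {z_equality():.2f}")
    print(f"acceleration begins at z ~ {z_acceleration_onset():.2f}")
```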

If the acceleration continues indefinitely, the ultimate result will be that galaxies outside the local supercluster will have a line-of-sight velocity that continually increases with time, eventually far exceeding the speed of light.[44] This is not a violation of special relativity because the notion of "velocity" used here is different from that of velocity in a local inertial frame of reference, which is still constrained to be less than the speed of light for any massive object (see Uses of the proper distance for a discussion of the subtleties of defining any notion of relative velocity in cosmology). Because the Hubble parameter is decreasing with time, there can actually be cases where a galaxy that is receding from us faster than light does manage to emit a signal which reaches us eventually.[45][46]

However, because of the accelerating expansion, it is projected that most galaxies will eventually cross a type of cosmological event horizon, beyond which any light they emit will never be able to reach us at any time in the infinite future,[47] because the light never reaches a point where its "peculiar velocity" toward us exceeds the expansion velocity away from us (these two notions of velocity are also discussed in Uses of the proper distance). Assuming the dark energy is constant (a cosmological constant), the current distance to this cosmological event horizon is about 16 billion light years. This means that a signal from an event happening at present would eventually be able to reach us in the future if the event were less than 16 billion light years away, but the signal would never reach us if the event were more than 16 billion light years away.[46]
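The 16-billion-light-year figure can be reproduced from the expansion history. The present proper distance to the event horizon is d = c ∫ dt / a(t), taken from now to infinite time with a = 1 today. Using H(a) = H₀ √(Ω_m a⁻³ + Ω_Λ) and the substitution x = 1/a, this becomes (c/H₀) ∫₀¹ dx / √(Ω_m x³ + Ω_Λ), a finite integral. The Python sketch below assumes H₀ = 70 km/s/Mpc, Ω_m = 0.3 and Ω_Λ = 0.7, round values chosen for illustration. It neglects radiation and integrates with Simpson's rule. The result is close to 16 billion light years.

```python
"""Present distance to the cosmological event horizon in flat Lambda-CDM (sketch)."""
import math

C_KM_S = 299_792.458        # speed of light, km/s
MLY_PER_MPC = 3.261563777   # million light-years per megaparsec


def simpson(f, a: float, b: float, n: int = 1000) -> float:
    """Composite Simpson's rule on [a, b]; n is rounded up to an even number of intervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4.0 if i % 2 else 2.0) * f(a + i * h)
    return total * h / 3.0


def event_horizon_gly(h0: float = 70.0, omega_m: float = 0.3, omega_de: float = 0.7) -> float:
    """d_EH = c * integral_{t0}^{inf} dt / a(t), with a(t0) = 1, in billions of light years.

    Substituting x = 1/a gives (c / H0) * integral_0^1 dx / sqrt(omega_m x^3 + omega_de).
    Radiation is neglected.
    """
    integral = simpson(lambda x: 1.0 / math.sqrt(omega_m * x ** 3 + omega_de), 0.0, 1.0)
    d_mpc = C_KM_S / h0 * integral
    return d_mpc * MLY_PER_MPC / 1000.0


if __name__ == "__main__":
    print(f"event horizon ~ {event_horizon_gly():.1f} billion light years")
```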

As galaxies approach the point of crossing this cosmological event horizon, the light from them will become more and more redshifted, to the point where the wavelength becomes too large to detect in practice and the galaxies appear to vanish completely[48][49] (see Future of an expanding universe). The Earth, the Milky Way, and the Virgo Supercluster[contradictory], however, would remain virtually undisturbed while the rest of the universe recedes and disappears from view. In this scenario, the local supercluster would ultimately suffer heat death, just as was thought for the flat, matter-dominated universe before measurements of cosmic acceleration.

There are some very speculative ideas about the future of the universe. One suggests that phantom energy causes divergent expansion, which would imply that the effective force of dark energy continues growing until it dominates all other forces in the universe. Under this scenario, dark energy would ultimately tear apart all gravitationally bound structures, including galaxies and solar systems, and eventually overcome the electrical and nuclear forces to tear apart atoms themselves, ending the universe in a "Big Rip". On the other hand, dark energy might dissipate with time or even become attractive. Such uncertainties leave open the possibility that gravity might yet rule the day and lead to a universe that contracts in on itself in a "Big Crunch".[50] Some scenarios, such as the cyclic model, suggest this could be the case. It is also possible that the universe may never have an end and continue in its present state forever (see The Second Law as a law of disorder). While these ideas are not supported by observations, they are not ruled out.

History of discovery and previous speculation

The cosmological constant was first proposed by Einstein as a mechanism to obtain a solution of the gravitational field equation that would lead to a static universe, effectively using dark energy to balance gravity.[51] Not only was the mechanism an inelegant example of fine-tuning, but it was also later realized that Einstein's static universe would actually be unstable because local inhomogeneities would ultimately lead to either the runaway expansion or contraction of the universe. The equilibrium is unstable: if the universe expands slightly, then the expansion releases vacuum energy, which causes yet more expansion. Likewise, a universe which contracts slightly will continue contracting. These sorts of disturbances are inevitable, due to the uneven distribution of matter throughout the universe. More importantly, observations made by Edwin Hubble in 1929 showed that the universe appears to be expanding and not static at all. Einstein reportedly referred to his failure to predict the idea of a dynamic universe, in contrast to a static universe, as his greatest blunder.[52]

Alan Guth and Alexei Starobinsky proposed in 1980 that a negative-pressure field, similar in concept to dark energy, could drive cosmic inflation in the very early universe. Inflation postulates that some repulsive force, qualitatively similar to dark energy, resulted in an enormous and exponential expansion of the universe slightly after the Big Bang. Such expansion is an essential feature of most current models of the Big Bang. However, inflation must have occurred at a much higher energy density than the dark energy we observe today and is thought to have completely ended when the universe was just a fraction of a second old. It is unclear what relation, if any, exists between dark energy and inflation. Even after inflationary models became accepted, the cosmological constant was thought to be irrelevant to the current universe.

Nearly all inflation models predict that the total (matter+energy) density of the universe should be very close to the critical density. During the 1980s, most cosmological research focused on models with critical density in matter only, usually 95% cold dark matter and 5% ordinary matter (baryons). These models were found to be successful at forming realistic galaxies and clusters, but some problems appeared in the late 1980s: notably, the model required a value for the Hubble constant lower than preferred by observations, and the model under-predicted observations of large-scale galaxy clustering. These difficulties became stronger after the discovery of anisotropy in the cosmic microwave background by the COBE spacecraft in 1992, and several modified CDM models came under active study through the mid-1990s: these included the Lambda-CDM model and a mixed cold/hot dark matter model. The first direct evidence for dark energy came from supernova observations in 1998 of accelerated expansion in Riess et al.[10] and in Perlmutter et al.,[11] and the Lambda-CDM model then became the leading model. Soon after, dark energy was supported by independent observations: in 2000, the BOOMERanG and Maxima cosmic microwave background experiments observed the first acoustic peak in the CMB, showing that the total (matter+energy) density is close to 100% of critical density. Then in 2001, the 2dF Galaxy Redshift Survey gave strong evidence that the matter density is around 30% of critical. The large difference between these two supports a smooth component of dark energy making up the difference. Much more precise measurements from WMAP in 2003–2010 have continued to support the standard model and give more accurate measurements of the key parameters.

The term "dark energy", echoing Fritz Zwicky's "dark matter" from the 1930s, was coined by Michael Turner in 1998.[53]

As of 2013, the Lambda-CDM model is consistent with a series of increasingly rigorous cosmological observations, including those from the Planck spacecraft and the Supernova Legacy Survey (SNLS). First results from the SNLS reveal that the average behavior (i.e., equation of state) of dark energy is like that of Einstein's cosmological constant to a precision of 10%.[54] Recent results from the Hubble Space Telescope Higher-Z Team indicate that dark energy has been present for at least 9 billion years and during the period preceding cosmic acceleration.
