- Massimiliano Peretti uses biofeedback data and biofeedback technology in a contemporary art environment, the Amigdalae project, which explores how emotional reactions filter and distort human perception and observation. During the performance, biofeedback medical technology (EEG, body temperature variations, heart rate, and galvanic skin responses) is used to analyze the audience's emotions while they watch the video art. The music changes in response to these signals, so the resulting sound environment both mirrors and influences the viewers' emotional state. More information is available on the website of the CNRS (French National Centre for Scientific Research).

- Charles Wehrenberg implemented competitive relaxation as a gaming paradigm with the Will Ball games circa 1973. In the first biomechanical versions, comparative GSR inputs monitored each player's relaxation response, and stepper motors moved the Will Ball across a playing field accordingly.

- In 1984, Wehrenberg programmed the Will Ball games for Apple II computers. Will Ball itself is described as pure competitive relaxation; Brain Ball is a duel between one player's left and right brain hemispheres; Mood Ball is an obstacle-based game; and Psycho Dice is a psychokinetic game.

- In 1999, the HeartMath Institute developed an educational system based on measuring heart rhythms and displaying them on a personal computer (Windows/Macintosh). Their systems have been widely copied but remain distinctive in the way they help people learn about and self-manage their physiology. A handheld version, released in 2006, is fully portable: it is about the size of a small mobile phone and runs on rechargeable batteries. With this unit, users can go about their daily activities while receiving feedback about their inner psychophysiological states.

- In 2001, the company Journey to Wild Divine began producing biofeedback hardware and software for the Macintosh and Windows operating systems. Third-party and open-source software and games are also available for the Wild Divine hardware. Tetris 64 uses biofeedback to adjust the speed of the Tetris puzzle game.

- David Rosenboom has worked to develop musical instruments that would respond to mental and physiological commands. Playing these instruments can be learned through a process of biofeedback.

- In the mid-1970s, an episode of the television series The Bionic Woman featured a doctor who could "heal" himself by using biofeedback techniques to communicate with his body and control its reactions to stimuli. For example, he could exhibit "super" powers, such as walking on hot coals, by sensing the heat on the soles of his feet and then making his body produce large quantities of perspiration to compensate. He could also make his body release extremely high levels of adrenaline, giving him the energy to run faster and jump higher. When injured, he could slow his heart rate to reduce blood pressure, send extra platelets to help clot a wound, and direct white blood cells to an area to fight infection.

- In the science-fiction novel Quantum Lens by Douglas E. Richards, biofeedback is used to enhance the ability to detect quantum effects, giving the user special powers.

| Biofeedback | |
|---|---|
| A diagram showing the feedback loop between person, sensor, and processor to help provide biofeedback training | |
| ICD-10-PCS | GZC |
| ICD-9-CM | 94.39 |
| MeSH | D001676 |
| MedlinePlus | 002241 |

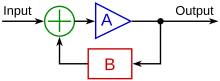

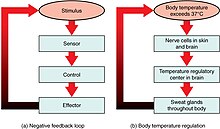

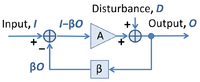

Biofeedback is the process of gaining greater awareness of many physiological functions of one's own body, typically by using electronic or other instruments, with the goal of being able to manipulate the body's systems at will. Humans conduct biofeedback naturally all the time, at varying levels of consciousness and intentionality. Biofeedback and the biofeedback loop can also be thought of as self-regulation. Processes that can be controlled include brainwaves, muscle tone, skin conductance, heart rate, and pain perception.

Biofeedback may be used to improve health and performance, and to influence the physiological changes that often occur in conjunction with changes in thoughts, emotions, and behavior. Recently, technology has been used to assist intentional biofeedback. Eventually, these changes may be maintained without extra equipment, since no equipment is strictly required to practice biofeedback.

Biofeedback has been found to be effective in treating headaches, migraines, and ADHD, among other conditions.

Information coded biofeedback

Information coded biofeedback is an evolving form and methodology within the field of biofeedback, with applications in health, wellness, and awareness. Biofeedback itself has its modern conventional roots in the early 1970s.

Over the years, biofeedback as a discipline and a technology has continued to mature, producing new versions of the method and novel interpretations in areas that use the electromyograph, electrodermograph, electroencephalograph, and electrocardiograph, among others. The concept of biofeedback rests on the fact that a wide variety of ongoing, intrinsic natural functions of the organism occur at a level of awareness generally called the "unconscious". The biofeedback process is designed to interface with selected aspects of these "unconscious" processes.

A widely used definition reads: Biofeedback is a process that enables an individual to learn how to change physiological activity for the purposes of improving health and performance. Precise instruments measure physiological activity such as brainwaves, heart function, breathing, muscle activity, and skin temperature. These instruments rapidly and accurately feed back information to the user. The presentation of this information, often in conjunction with changes in thinking, emotions, and behavior, supports desired physiological changes. Over time, these changes can endure without continued use of an instrument.

A simpler definition could be: Biofeedback is the process of gaining greater awareness of many physiological functions, primarily by using instruments that provide information on the activity of those same systems, with the goal of being able to manipulate them at will.

In both definitions, a cardinal feature of the concept is the association of the "will" with a newly learned cognitive skill. Some who examine this concept do not ascribe it simply to the willful acquisition of a new skill, but extend its dynamics into the realm of behavioristic conditioning (7). Behaviorism contends that the actions and functions of an organism can be changed by exposing it to a number of conditions or influences. Key to this view is that not only the functions themselves but also the conditioning processes may be unconscious to the organism. Information coded biofeedback relies primarily on this behavioral-conditioning aspect of biofeedback to promote significant changes in the functioning of the organism.

The principle of "information" is complex and, in part, controversial. The term derives from the Latin verb informare, which literally means "to bring into form or shape". Its meaning depends largely on the context of use. Probably the simplest, and perhaps most insightful, definition of "information" was given by Gregory Bateson: "Information is news of change", or alternatively "the difference that makes a difference". Information may also be thought of as "any type of pattern that influences the formation or transformation of other patterns". Recognizing the inherent complexity of an organism, information coded biofeedback applies algorithmic calculations in a stochastic approach to identify significant probabilities within a limited set of possibilities.

Sensor modalities

Electromyograph

An electromyograph (EMG) uses surface electrodes to detect muscle action potentials from underlying skeletal muscles that initiate muscle contraction. Clinicians record the surface electromyogram (SEMG) using one or more active electrodes placed over a target muscle and a reference electrode placed within six inches of either active electrode. The SEMG is measured in microvolts (millionths of a volt).

In addition to surface electrodes, clinicians may insert wires or needles into the muscle to record an EMG signal. Although this is more painful and often more costly, the signal is more reliable, since surface electrodes pick up cross talk from nearby muscles. Surface electrodes are also limited to superficial muscles, so the intramuscular approach is useful for accessing signals from deeper muscles. The electrical activity picked up by intramuscular electrodes is recorded and displayed in the same way as that from surface electrodes. Before surface electrodes are placed, the skin is normally shaved, cleaned, and exfoliated to obtain the best signal. Raw EMG signals resemble noise (electrical signal not coming from the muscle of interest), and their voltage fluctuates, so they are normally processed in three steps: rectification, filtering, and integration. This processing yields a unified signal that can be compared with other signals processed in the same way.
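The three processing steps named above can be illustrated with a small sketch. It assumes the raw SEMG is a list of microvolt samples at a known sampling rate, removes the DC offset before full-wave rectification, and substitutes a simple moving-average low-pass filter for whatever filter a real instrument uses; all function names are hypothetical.

```python
"""Sketch of SEMG rectification, filtering, and integration.

Assumptions (not from the text): samples are in microvolts, the DC
offset is removed by subtracting the mean, and a trailing moving
average stands in for a properly designed low-pass filter.
"""
from typing import List


def rectify(raw_uv: List[float]) -> List[float]:
    """Full-wave rectification: remove the DC offset, then take magnitudes."""
    mean = sum(raw_uv) / len(raw_uv)
    return [abs(x - mean) for x in raw_uv]


def moving_average(samples: List[float], window: int) -> List[float]:
    """Trailing moving average, a crude low-pass filter that smooths the signal."""
    out = []
    running = 0.0
    for i, x in enumerate(samples):
        running += x
        if i >= window:
            running -= samples[i - window]
        # Early samples are averaged over however many values exist so far.
        out.append(running / min(i + 1, window))
    return out


def integrate(samples: List[float], fs_hz: float) -> float:
    """Rectangle-rule integral of the smoothed envelope, in microvolt-seconds."""
    return sum(samples) / fs_hz


def process_semg(raw_uv: List[float], fs_hz: float, window: int = 50) -> float:
    """Rectify, smooth, and integrate a raw SEMG recording."""
    envelope = moving_average(rectify(raw_uv), window)
    return integrate(envelope, fs_hz)


if __name__ == "__main__":
    # One second of a toy signal alternating +10/-10 µV at 1000 Hz.
    raw = [10.0, -10.0] * 500
    print(process_semg(raw, fs_hz=1000.0))
```

A moving average is used here only because it is short and dependency-free; clinical systems may use dedicated band-pass and low-pass filters and may report root-mean-square amplitude alongside, or instead of, the integral.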

Biofeedback therapists use EMG biofeedback when treating anxiety and worry, chronic pain, computer-related disorder, essential hypertension, headache (migraine, mixed headache, and tension-type headache), low back pain, physical rehabilitation (cerebral palsy, incomplete spinal cord lesions, and stroke), temporomandibular joint dysfunction (TMD), torticollis, fecal incontinence, urinary incontinence, and pelvic pain. Physical therapists have also used EMG biofeedback to evaluate muscle activation and to provide feedback to their patients.

Feedback thermometer

A feedback thermometer detects skin temperature with a thermistor (a temperature-sensitive resistor) that is usually attached to a finger or toe; the temperature is reported in degrees Celsius or Fahrenheit. Skin temperature mainly reflects arteriole diameter. Hand-warming and hand-cooling are produced by separate mechanisms, and their regulation involves different skills. Hand-warming involves arteriole vasodilation produced by a beta-2 adrenergic hormonal mechanism. Hand-cooling involves arteriole vasoconstriction produced by increased firing of sympathetic C-fibers.
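How a thermistor reading becomes a temperature can be sketched with the Beta-parameter equation, 1/T = 1/T0 + ln(R/R0)/B, with T in kelvin. This is one common model, not necessarily what any given feedback thermometer uses. The sketch assumes a hypothetical negative-temperature-coefficient (NTC) thermistor rated 10 kΩ at 25 °C with B = 3950 K; real sensors come with their own calibration constants.

```python
import math

# Hypothetical NTC thermistor constants (not from the text).
R0_OHMS = 10_000.0   # resistance at the reference temperature
T0_KELVIN = 298.15   # reference temperature, 25 °C
BETA_K = 3950.0      # Beta parameter of the thermistor


def thermistor_celsius(resistance_ohms: float,
                       r0: float = R0_OHMS,
                       t0: float = T0_KELVIN,
                       beta: float = BETA_K) -> float:
    """Convert an NTC thermistor resistance to °C using the Beta equation."""
    if resistance_ohms <= 0:
        raise ValueError("resistance must be positive")
    inv_t = 1.0 / t0 + math.log(resistance_ohms / r0) / beta
    return 1.0 / inv_t - 273.15


def celsius_to_fahrenheit(c: float) -> float:
    """Convert degrees Celsius to degrees Fahrenheit."""
    return c * 9.0 / 5.0 + 32.0


if __name__ == "__main__":
    # For an NTC thermistor, falling resistance means rising temperature,
    # as during hand-warming.
    for r in (10_000.0, 9_000.0, 8_000.0):
        c = thermistor_celsius(r)
        print(f"{r:8.0f} ohm -> {c:5.2f} °C / {celsius_to_fahrenheit(c):5.2f} °F")
```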

Biofeedback therapists use temperature biofeedback when treating chronic pain, edema, headache (migraine and tension-type headache), essential hypertension, Raynaud's disease, anxiety, and stress.

Electrodermograph

An electrodermograph (EDG) measures skin electrical activity directly (skin conductance and skin potential) and indirectly (skin resistance) using electrodes placed over the digits or hand and wrist. Orienting responses to unexpected stimuli, arousal and worry, and cognitive activity can increase eccrine sweat gland activity, increasing the conductivity of the skin for electric current.

In skin conductance, an electrodermograph imposes an imperceptible current across the skin and measures how easily it travels through the skin. When anxiety raises the level of sweat in a sweat duct, conductance increases. Skin conductance is measured in microsiemens (millionths of a siemens). In skin potential, a therapist places an active electrode over an active site (e.g., the palmar surface of the hand) and a reference electrode over a relatively inactive site (e.g., forearm). Skin potential is the voltage that develops between eccrine sweat glands and internal tissues and is measured in millivolts (thousandths of a volt). In skin resistance, also called galvanic skin response (GSR), an electrodermograph imposes a current across the skin and measures the amount of opposition it encounters. Skin resistance is measured in kΩ (thousands of ohms).
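Skin conductance and skin resistance are reciprocals, so the units above convert directly: 1/(1 kΩ) = 1 mS = 1000 µS. The sketch below also assumes a constant-voltage measurement, in which conductance is the measured current divided by the imposed voltage (Ohm's law). The function names and example readings are illustrative only.

```python
def conductance_from_measurement_us(voltage_v: float, current_a: float) -> float:
    """Ohm's law, G = I / V, converted from siemens to microsiemens."""
    if voltage_v <= 0:
        raise ValueError("imposed voltage must be positive")
    return current_a / voltage_v * 1e6


def resistance_kohm_to_conductance_us(r_kohm: float) -> float:
    """G [µS] = 1000 / R [kΩ], because 1 / (1 kΩ) = 1 mS = 1000 µS."""
    if r_kohm <= 0:
        raise ValueError("resistance must be positive")
    return 1000.0 / r_kohm


def conductance_us_to_resistance_kohm(g_us: float) -> float:
    """Inverse conversion: R [kΩ] = 1000 / G [µS]."""
    if g_us <= 0:
        raise ValueError("conductance must be positive")
    return 1000.0 / g_us


if __name__ == "__main__":
    # A drop in resistance from 250 kΩ to 200 kΩ is a rise in
    # conductance from 4 µS to 5 µS.
    print(resistance_kohm_to_conductance_us(250.0))  # 4.0
    print(resistance_kohm_to_conductance_us(200.0))  # 5.0
```

Because the two quantities are reciprocal, rising sweat gland activity shows up as rising conductance but falling resistance, which is worth keeping in mind when comparing GSR displays with conductance displays.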

Biofeedback therapists use electrodermal biofeedback when treating anxiety disorders, hyperhidrosis (excessive sweating), and stress. Electrodermal biofeedback is used as an adjunct to psychotherapy to increase client awareness of their emotions. In addition, electrodermal measures have long served as one of the central tools in polygraphy (lie detection) because they reflect changes in anxiety or emotional activation.

Electroencephalograph

An electroencephalograph (EEG) measures the electrical activation of the brain from scalp sites located over the human cortex. The EEG shows the amplitude of electrical activity at each cortical site, the amplitude and relative power of various wave forms at each site, and the degree to which each cortical site fires in conjunction with other cortical sites (coherence and symmetry).

The EEG uses precious metal electrodes to detect a voltage between at least two electrodes located on the scalp. The EEG records both excitatory postsynaptic potentials (EPSPs) and inhibitory postsynaptic potentials (IPSPs) that largely occur in dendrites in pyramidal cells located in macrocolumns, several millimeters in diameter, in the upper cortical layers. Neurofeedback monitors both slow and fast cortical potentials.

Slow cortical potentials are gradual changes in the membrane potentials of cortical dendrites that last from 300 ms to several seconds. These potentials include the contingent negative variation (CNV), readiness potential, movement-related potentials (MRPs), and P300 and N400 potentials.

Fast cortical potentials range from 0.5 Hz to 100 Hz. The main frequency ranges include delta, theta, alpha, the sensorimotor rhythm, low beta, high beta, and gamma. The thresholds or boundaries defining the frequency ranges vary considerably among professionals. Fast cortical potentials can be described by their predominant frequencies, but also by whether they are synchronous or asynchronous wave forms. Synchronous wave forms occur at regular periodic intervals, whereas asynchronous wave forms are irregular.

The synchronous delta rhythm ranges from 0.5 to 3.5 Hz. Delta is the dominant frequency from ages 1 to 2; in adults, it is associated with deep sleep, with brain pathology such as trauma and tumors, and with learning disability.

The synchronous theta rhythm ranges from 4 to 7 Hz. Theta is the dominant frequency in healthy young children and is associated with drowsiness and sleep onset, REM sleep, hypnagogic imagery (intense imagery experienced before the onset of sleep), hypnosis, attention, and the processing of cognitive and perceptual information.

The synchronous alpha rhythm ranges from 8 to 13 Hz and is defined by its waveform and not by its frequency. Alpha activity can be observed in about 75% of awake, relaxed individuals and is replaced by low-amplitude desynchronized beta activity during movement, complex problem-solving, and visual focusing. This phenomenon is called alpha blocking.

The synchronous sensorimotor rhythm (SMR) ranges from 12 to 15 Hz and is located over the sensorimotor cortex (central sulcus). The sensorimotor rhythm is associated with the inhibition of movement and reduced muscle tone.

The beta rhythm consists of asynchronous waves and can be divided into low beta and high beta ranges (13–21 Hz and 20–32 Hz, respectively). Low beta is associated with activation and focused thinking. High beta is associated with anxiety, hypervigilance, panic, peak performance, and worry.

EEG activity from 36 to 44 Hz is also referred to as gamma. Gamma activity is associated with perception of meaning and meditative awareness.
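The frequency bands described above can be turned into a simple band-power calculation. This sketch uses a naive discrete Fourier transform (the same transform an FFT computes, only slower) and the band edges quoted in this section, which, as noted, vary among professionals and overlap in places. The names are hypothetical, and a real neurofeedback system would also window, filter, and artifact-reject the signal.

```python
import math
from typing import Dict, List, Tuple

# Band edges in Hz as quoted in this section. SMR overlaps alpha and low
# beta, and low/high beta overlap, so one frequency can count toward
# more than one band.
BANDS: Dict[str, Tuple[float, float]] = {
    "delta": (0.5, 3.5),
    "theta": (4.0, 7.0),
    "alpha": (8.0, 13.0),
    "smr": (12.0, 15.0),
    "low_beta": (13.0, 21.0),
    "high_beta": (20.0, 32.0),
    "gamma": (36.0, 44.0),
}


def power_spectrum(samples: List[float], fs_hz: float) -> List[Tuple[float, float]]:
    """Naive DFT: return (frequency_hz, power) for bins 1..N/2."""
    n = len(samples)
    spectrum = []
    for k in range(1, n // 2 + 1):
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(samples))
        im = sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(samples))
        spectrum.append((k * fs_hz / n, (re * re + im * im) / n))
    return spectrum


def band_powers(samples: List[float], fs_hz: float) -> Dict[str, float]:
    """Sum spectral power inside each band (edges inclusive)."""
    spectrum = power_spectrum(samples, fs_hz)
    return {
        name: sum(p for f, p in spectrum if lo <= f <= hi)
        for name, (lo, hi) in BANDS.items()
    }


if __name__ == "__main__":
    fs = 128.0
    # One second of a pure 10 Hz oscillation, like an idealized alpha rhythm.
    eeg = [math.sin(2 * math.pi * 10 * i / fs) for i in range(128)]
    powers = band_powers(eeg, fs)
    print(max(powers, key=powers.get))  # alpha
```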

Neurotherapists use EEG biofeedback when treating addiction, attention deficit hyperactivity disorder (ADHD), learning disability, anxiety disorders (including worry, obsessive-compulsive disorder and posttraumatic stress disorder), depression, migraine, and generalized seizures.

Photoplethysmograph

A photoplethysmograph (PPG) measures relative blood flow through a digit using a PPG sensor attached by a Velcro band to a finger, or to the temple to monitor the temporal artery. Infrared light is transmitted through or reflected off the tissue, detected by a phototransistor, and quantified in arbitrary units. More light is absorbed when blood volume is greater, reducing the intensity of light reaching the sensor.

A photoplethysmograph can measure blood volume pulse (BVP), which is the phasic change in blood volume with each heartbeat; heart rate; and heart rate variability (HRV), which consists of beat-to-beat differences in the intervals between successive heartbeats.

A photoplethysmograph can provide useful feedback when temperature feedback shows minimal change. This is because the PPG sensor is more sensitive than a thermistor to minute blood flow changes. Biofeedback therapists can use a photoplethysmograph to supplement temperature biofeedback when treating chronic pain, edema, headache (migraine and tension-type headache), essential hypertension, Raynaud's disease, anxiety, and stress.

Electrocardiogram

The electrocardiogram (ECG) uses electrodes placed on the torso, wrists, or legs to measure the electrical activity of the heart, including the interbeat interval (the time between successive R-wave peaks in the QRS complex). Dividing 60 seconds by the interbeat interval gives the heart rate at that moment. The statistical variability of the interbeat interval is called heart rate variability. The ECG method measures heart rate variability more accurately than the PPG method.
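The heart-rate arithmetic above (60 seconds divided by the interbeat interval) and two common time-domain summaries of heart rate variability are sketched below. SDNN (standard deviation of the intervals) and RMSSD (root mean square of successive differences) are widely used HRV statistics that the text does not name, so their inclusion is an illustrative assumption. The input is assumed to be clean, artifact-free interbeat intervals in seconds.

```python
import math
from typing import List


def instantaneous_hr_bpm(ibi_s: List[float]) -> List[float]:
    """Heart rate for each beat: 60 s divided by the interbeat interval in s."""
    return [60.0 / ibi for ibi in ibi_s]


def sdnn_ms(ibi_s: List[float]) -> float:
    """Sample standard deviation of the interbeat intervals, in ms."""
    ms = [ibi * 1000.0 for ibi in ibi_s]
    mean = sum(ms) / len(ms)
    return math.sqrt(sum((x - mean) ** 2 for x in ms) / (len(ms) - 1))


def rmssd_ms(ibi_s: List[float]) -> float:
    """Root mean square of successive interval differences, in ms."""
    ms = [ibi * 1000.0 for ibi in ibi_s]
    diffs = [b - a for a, b in zip(ms, ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


if __name__ == "__main__":
    # Intervals alternating between 0.8 s and 1.0 s (75 and 60 beats/min).
    ibis = [0.8, 1.0, 0.8, 1.0]
    print(instantaneous_hr_bpm(ibis))
    print(round(sdnn_ms(ibis), 1), round(rmssd_ms(ibis), 1))
```

RMSSD is driven by beat-to-beat changes, while SDNN reflects overall spread, so the two can diverge on the same recording.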

Biofeedback therapists use heart rate variability (HRV) biofeedback when treating asthma, COPD, depression, anxiety, fibromyalgia, heart disease, and unexplained abdominal pain. Research shows that HRV biofeedback can also be used to improve physiological and psychological wellbeing in healthy individuals.

HRV data from both photoplethysmographs and electrocardiograms are analyzed with mathematical transformations such as the commonly used fast Fourier transform (FFT). The FFT decomposes the HRV data into a power spectrum, revealing the waveform's constituent frequencies. Among these, the high-frequency (HF) and low-frequency (LF) components are defined as lying above and below 0.15 Hz, respectively. As a rule of thumb, the LF component of HRV represents sympathetic activity, and the HF component represents parasympathetic activity. The two components are often expressed as an LF/HF ratio to represent sympathovagal balance. Some researchers consider a third, medium-frequency (MF) component from 0.08 Hz to 0.15 Hz, which has been shown to increase in power during times of appreciation.
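The spectral analysis described above can be sketched as follows. The sketch assumes that the interbeat-interval series has already been resampled at evenly spaced times (4 Hz here), because a Fourier transform needs uniform samples. It also assumes the commonly cited limits of 0.04 to 0.15 Hz for LF and 0.15 to 0.40 Hz for HF, since the text above fixes only the 0.15 Hz boundary. A naive DFT stands in for the FFT to keep the example dependency-free, and all names are hypothetical.

```python
import math
from typing import List, Tuple

# Assumed band limits in Hz (lower bound inclusive, upper bound exclusive).
LF_BAND = (0.04, 0.15)
HF_BAND = (0.15, 0.40)


def dft_power(samples: List[float], fs_hz: float) -> List[Tuple[float, float]]:
    """Naive DFT power spectrum of the mean-removed series, bins 1..N/2."""
    n = len(samples)
    mean = sum(samples) / n
    x = [s - mean for s in samples]
    out = []
    for k in range(1, n // 2 + 1):
        re = sum(v * math.cos(2 * math.pi * k * i / n) for i, v in enumerate(x))
        im = sum(v * math.sin(2 * math.pi * k * i / n) for i, v in enumerate(x))
        out.append((k * fs_hz / n, (re * re + im * im) / n))
    return out


def lf_hf_ratio(rr_resampled_s: List[float], fs_hz: float = 4.0) -> float:
    """Ratio of LF to HF spectral power of an evenly resampled RR series."""
    spectrum = dft_power(rr_resampled_s, fs_hz)
    lf = sum(p for f, p in spectrum if LF_BAND[0] <= f < LF_BAND[1])
    hf = sum(p for f, p in spectrum if HF_BAND[0] <= f < HF_BAND[1])
    return lf / hf


if __name__ == "__main__":
    fs = 4.0
    # 64 s synthetic tachogram: a 0.09375 Hz (LF) oscillation with twice the
    # amplitude of a 0.25 Hz (HF) oscillation around a 0.9 s mean interval.
    rr = [0.9
          + 0.04 * math.sin(2 * math.pi * 0.09375 * i / fs)
          + 0.02 * math.sin(2 * math.pi * 0.25 * i / fs)
          for i in range(256)]
    print(lf_hf_ratio(rr, fs))  # close to 4.0, since power scales with amplitude squared
```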

Pneumograph

A pneumograph or respiratory strain gauge uses a flexible sensor band that is placed around the chest, abdomen, or both. The strain gauge method can provide feedback about the relative expansion/contraction of the chest and abdomen, and can measure respiratory rate (the number of breaths per minute). Clinicians can use a pneumograph to detect and correct dysfunctional breathing patterns and behaviors. Dysfunctional breathing patterns include clavicular breathing (breathing that primarily relies on the external intercostals and the accessory muscles of respiration to inflate the lungs), reverse breathing (breathing where the abdomen expands during exhalation and contracts during inhalation), and thoracic breathing (shallow breathing that primarily relies on the external intercostals to inflate the lungs). Dysfunctional breathing behaviors include apnea (suspension of breathing), gasping, sighing, and wheezing.
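Respiratory rate can be estimated from the strain-gauge signal by counting breaths over a known time window. This minimal sketch counts upward crossings of the signal's mean, one per breath. It assumes a clean, roughly periodic trace, and the function name is hypothetical. A real pneumograph signal would first need smoothing and hysteresis so that noise near the mean is not counted as extra breaths.

```python
import math
from typing import List


def breaths_per_minute(strain: List[float], fs_hz: float) -> float:
    """Estimate respiratory rate by counting upward crossings of the mean."""
    mean = sum(strain) / len(strain)
    # Each inhalation carries the band's stretch from below to above its mean.
    crossings = sum(1 for prev, cur in zip(strain, strain[1:]) if prev < mean <= cur)
    duration_min = len(strain) / fs_hz / 60.0
    return crossings / duration_min


if __name__ == "__main__":
    fs = 10.0
    # One minute of an idealized chest-band signal at 0.25 Hz (15 breaths/min).
    trace = [math.sin(2 * math.pi * 0.25 * i / fs + 0.5) for i in range(600)]
    print(breaths_per_minute(trace, fs))  # 15.0
```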

A pneumograph is often used in conjunction with an electrocardiograph (ECG) or photoplethysmograph (PPG) in heart rate variability (HRV) training.

Biofeedback therapists use pneumograph biofeedback with patients diagnosed with anxiety disorders, asthma, chronic obstructive pulmonary disease (COPD), essential hypertension, panic attacks, and stress.

Capnometer

A capnometer or capnograph uses an infrared detector to measure end-tidal CO₂ (the partial pressure of carbon dioxide in expired air at the end of expiration), exhaled through the nostril into a latex tube. The average end-tidal CO₂ value for a resting adult is 5% (36 Torr or 4.8 kPa). End-tidal CO₂ is a sensitive index of the quality of a patient's breathing: shallow, rapid, and effortful breathing lowers CO₂, while deep, slow, effortless breathing increases it.

Biofeedback therapists use capnometric biofeedback to supplement respiratory strain gauge biofeedback with patients diagnosed with anxiety disorders, asthma, chronic obstructive pulmonary disease (COPD), essential hypertension, panic attacks, and stress.

Rheoencephalograph

Rheoencephalography (REG), or brain blood flow biofeedback, is a biofeedback technique for the conscious control of blood flow. It uses an electronic device called a rheoencephalograph [from Greek rheos, stream or anything flowing, from rhein, to flow]. Electrodes attached to the skin at certain points on the head allow the device to continuously measure the electrical conductivity of the tissues located between the electrodes. The technique is based on a non-invasive method of measuring bio-impedance: changes in bio-impedance, generated by blood volume and blood flow, are registered by a rheographic device. Because the impedance is measured at high frequency, the pulsatile bio-impedance changes directly reflect the total blood flow of the deep structures of the brain.

Hemoencephalography

Hemoencephalography (HEG) biofeedback is a functional infrared imaging technique. As its name suggests, it measures differences in the color of light reflected back through the scalp, which depend on the relative amounts of oxygenated and deoxygenated blood in the brain. Research continues to determine its reliability, validity, and clinical applicability. HEG is used to treat ADHD and migraine, and in research.

Pressure

Pressure can be monitored as a patient performs exercises while resting against an air-filled cushion. This is pertinent to physiotherapy. Alternatively, the patient may actively grip or press against an air-filled cushion of custom shape.

Applications

Urinary incontinence

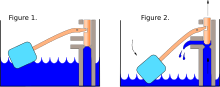

Mowrer detailed the use of a bedwetting alarm that sounds when children urinate while asleep. This simple biofeedback device can quickly teach children to wake up when their bladders are full and to contract the urinary sphincter and relax the detrusor muscle, preventing further urine release. Through classical conditioning, sensory feedback from a full bladder replaces the alarm and allows children to continue sleeping without urinating.

Kegel developed the perineometer in 1947 to treat urinary incontinence (urine leakage) in women whose pelvic floor muscles were weakened during pregnancy and childbirth. The perineometer, which is inserted into the vagina to monitor pelvic floor muscle contraction, satisfies all the requirements of a biofeedback device and enhances the effectiveness of the popular Kegel exercises. However, a 2013 randomized controlled trial found no benefit from adding biofeedback to pelvic floor muscle exercise for stress urinary incontinence. In another randomized controlled trial, adding biofeedback to pelvic floor muscle training for stress urinary incontinence improved pelvic floor muscle function, reduced urinary symptoms, and improved quality of life.

Research has shown that biofeedback can improve the efficacy of pelvic floor exercises and help restore proper bladder function. The mode of action of vaginal cones, for instance, involves a biological biofeedback mechanism. Studies have shown that biofeedback obtained with vaginal cones is as effective as biofeedback induced through physiotherapy electrostimulation.

In 1992, the United States Agency for Health Care Policy and Research recommended biofeedback as a first-line treatment for adult urinary incontinence.

Fecal incontinence and anismus

Biofeedback is a major treatment for anismus (paradoxical contraction of the puborectalis during defecation). This therapy evolved directly from anorectal manometry, an investigation in which a pressure-recording probe is placed in the anal canal. Biofeedback therapy is also commonly used and researched for fecal incontinence, but its benefits are uncertain. Biofeedback therapy varies in how it is delivered, and it is unknown whether one type has benefits over another. Its aims have been described as enhancing the rectoanal inhibitory reflex (RAIR), rectal sensitivity (by discriminating progressively smaller volumes of a rectal balloon and promptly contracting the external anal sphincter (EAS)), or the strength and endurance of EAS contraction. Three general types of biofeedback have been described (rectal sensitivity, strength, and co-ordination training), though they are not mutually exclusive, and many protocols combine these elements. Protocols also vary in the length of individual sessions and of the overall training, and in whether and how home exercises are performed.

In rectal sensitivity training, a balloon is placed in the rectum and gradually distended until there is a sensation of rectal filling. Successively smaller reinflations of the balloon aim to help the person detect rectal distension at a lower threshold, giving more time to contract the EAS and prevent incontinence, or to get to the toilet. Alternatively, in people with urge incontinence or rectal hypersensitivity, training aims to teach the person to tolerate progressively larger volumes.

Strength training may use electromyography (EMG) skin electrodes, manometric pressures, intra-anal EMG, or endoanal ultrasound. One of these measures is used to relay muscular activity or anal canal pressure during anal sphincter exercise, so that performance and progress can be monitored.

Co-ordination training involves placing three balloons: one in the rectum and one each in the upper and lower anal canal. The rectal balloon is inflated to trigger the RAIR, an event often followed by incontinence. Co-ordination training aims to teach voluntary contraction of the EAS when the RAIR occurs (i.e., when there is rectal distension).

EEG

Caton recorded spontaneous electrical potentials from the exposed cortical surface of monkeys and rabbits, and was the first to measure event-related potentials (EEG responses to stimuli) in 1875.

In 1877, Danilevsky published Investigations in the Physiology of the Brain, which explored the relationship between the EEG and states of consciousness.

In 1890, Beck published studies of spontaneous electrical potentials detected from the brains of dogs and rabbits, and was the first to document alpha blocking, in which light alters rhythmic oscillations.

Sherrington introduced the terms neuron and synapse and published the Integrative Action of the Nervous System in 1906.

Pravdich-Neminsky photographed the EEG and event related potentials from dogs, demonstrated a 12–14 Hz rhythm that slowed during asphyxiation, and introduced the term electrocerebrogram in 1912.

Forbes reported the replacement of the string galvanometer with a vacuum tube to amplify the EEG in 1920. The vacuum tube became the de facto standard by 1936.

Berger (1924) published the first human EEG data. He recorded electrical potentials from his son Klaus's scalp. At first he believed that he had discovered the physical mechanism for telepathy, but he was disappointed to find that the electromagnetic variations disappear only millimeters away from the skull. (He nevertheless continued to believe in telepathy throughout his life, having had a particularly convincing experience involving his sister.) He viewed the EEG as analogous to the ECG and introduced the term elektenkephalogram. He believed that the EEG had diagnostic and therapeutic promise in measuring the impact of clinical interventions. Berger showed that these potentials were not due to scalp muscle contractions. He first identified the alpha rhythm, which he called the Berger rhythm, and later identified the beta rhythm and sleep spindles. He demonstrated that alterations in consciousness are associated with changes in the EEG and associated the beta rhythm with alertness. He described interictal activity (EEG potentials between seizures) and recorded a complex partial seizure in 1933. Finally, he performed the first QEEG, which is the measurement of the signal strength of EEG frequencies.

Adrian and Matthews confirmed Berger's findings in 1934 by recording their own EEGs using a cathode-ray oscilloscope. Their demonstration of EEG recording at the 1935 Physiological Society meetings in England led to its widespread acceptance. Adrian used himself as a subject and demonstrated the phenomenon of alpha blocking, in which opening his eyes suppressed alpha rhythms.

Gibbs, Davis, and Lennox inaugurated clinical electroencephalography in 1935 by identifying abnormal EEG rhythms associated with epilepsy, including interictal spike waves and 3 Hz activity in absence seizures.

Bremer used the EEG to show how sensory signals affect vigilance in 1935.

Walter (1937, 1953) named the delta waves and theta waves, and the contingent negative variation (CNV), a slow cortical potential that may reflect expectancy, motivation, intention to act, or attention. He located an occipital lobe source for alpha waves and demonstrated that delta waves can help locate brain lesions like tumors. He improved Berger's electroencephalograph and pioneered EEG topography.

Kleitman has been recognized as the "Father of American sleep research" for his seminal work in the regulation of sleep-wake cycles, circadian rhythms, the sleep patterns of different age groups, and the effects of sleep deprivation. He discovered the phenomenon of rapid eye movement (REM) sleep with his graduate student Aserinsky in 1953.

Dement, another of Kleitman's students, described the EEG architecture and phenomenology of sleep stages and the transitions between them in 1955, associated REM sleep with dreaming in 1957, and documented sleep cycles in another species, cats, in 1958, which stimulated basic sleep research. He established the Stanford University Sleep Research Center in 1970.

Andersen and Andersson (1968) proposed that thalamic pacemakers project synchronous alpha rhythms to the cortex via thalamocortical circuits.

Kamiya (1968) demonstrated that the alpha rhythm in humans could be operantly conditioned. He published an influential article in Psychology Today that summarized research that showed that subjects could learn to discriminate when alpha was present or absent, and that they could use feedback to shift the dominant alpha frequency about 1 Hz. Almost half of his subjects reported experiencing a pleasant "alpha state" characterized as an "alert calmness." These reports may have contributed to the perception of alpha biofeedback as a shortcut to a meditative state. He also studied the EEG correlates of meditative states.

Brown (1970) demonstrated the clinical use of alpha-theta biofeedback. In research designed to identify the subjective states associated with EEG rhythms, she trained subjects to increase the abundance of alpha, beta, and theta activity using visual feedback and recorded their subjective experiences when the amplitude of these frequency bands increased. She also helped popularize biofeedback by publishing a series of books, including New Mind, New Body (1974) and Stress and the Art of Biofeedback (1977).

Mulholland and Peper (1971) showed that occipital alpha increases with eyes open and not focused, and is disrupted by visual focusing; a rediscovery of alpha blocking.

Green and Green (1986) investigated voluntary control of internal states by individuals like Swami Rama and American Indian medicine man Rolling Thunder both in India and at the Menninger Foundation. They brought portable biofeedback equipment to India and monitored practitioners as they demonstrated self-regulation. A film containing footage from their investigations was released as Biofeedback: The Yoga of the West (1974). They developed alpha-theta training at the Menninger Foundation from the 1960s to the 1990s. They hypothesized that theta states allow access to unconscious memories and increase the impact of prepared images or suggestions. Their alpha-theta research fostered Peniston's development of an alpha-theta addiction protocol.

Sterman (1972) showed that cats and human subjects could be operantly trained to increase the amplitude of the sensorimotor rhythm (SMR) recorded from the sensorimotor cortex. He demonstrated that SMR production protects cats against drug-induced generalized seizures (tonic-clonic seizures involving loss of consciousness) and reduces the frequency of seizures in humans diagnosed with epilepsy. He found that his SMR protocol, which uses visual and auditory EEG biofeedback, normalizes patients' EEGs (SMR increases while theta and beta decrease toward normal values), even during sleep. Sterman also co-developed the Sterman-Kaiser (SKIL) QEEG database.

Birbaumer and colleagues (1981) have studied feedback of slow cortical potentials since the late 1970s. They have demonstrated that subjects can learn to control these DC potentials and have studied the efficacy of slow cortical potential biofeedback in treating ADHD, epilepsy, migraine, and schizophrenia.

Lubar (1989) studied SMR biofeedback to treat attention disorders and epilepsy in collaboration with Sterman. He demonstrated that SMR training can improve attention and academic performance in children diagnosed with Attention Deficit Disorder with Hyperactivity (ADHD). He documented the importance of theta-to-beta ratios in ADHD and developed theta suppression-beta enhancement protocols to decrease these ratios and improve student performance. The Neuropsychiatric EEG-Based Assessment Aid (NEBA) System, a device that measures the theta-to-beta ratio, was approved on July 15, 2013 as a tool to assist in the diagnosis of ADHD.

However, the field has recently moved away from this measure. The shift reflects a general change in population norms over the past 20 years, most likely due to a change in the average amount of sleep among young people.
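The sketch below illustrates how a theta-to-beta ratio can be estimated from a single EEG channel using a plain FFT periodogram. It is a sketch under stated assumptions, not the method used by Lubar or by the NEBA device. Theta is assumed to span 4–8 Hz and beta 13–30 Hz, which are common conventions, though labs differ. The helper names are hypothetical. A theta suppression-beta enhancement protocol would reward epochs in which this ratio falls.

```python
import numpy as np

# Assumed band edges in Hz; labs and devices use slightly different limits.
THETA_BAND = (4.0, 8.0)
BETA_BAND = (13.0, 30.0)


def band_power(signal, fs, band):
    """Sum of periodogram power for frequencies in [band[0], band[1])."""
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()                                  # remove DC offset
    power = np.abs(np.fft.rfft(x)) ** 2               # unnormalised periodogram
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)       # bin centre frequencies
    mask = (freqs >= band[0]) & (freqs < band[1])
    return power[mask].sum()


def theta_beta_ratio(signal, fs):
    """Hypothetical helper: theta power divided by beta power.

    The periodogram scaling cancels in the ratio, so it is left unnormalised.
    """
    return band_power(signal, fs, THETA_BAND) / band_power(signal, fs, BETA_BAND)


if __name__ == "__main__":
    fs = 256                                          # samples per second
    t = np.arange(0, 4, 1 / fs)                       # one 4-second epoch
    # Synthetic epoch: strong 6 Hz (theta) plus weaker 20 Hz (beta) activity.
    epoch = 20 * np.sin(2 * np.pi * 6 * t) + 5 * np.sin(2 * np.pi * 20 * t)
    print(f"theta/beta ratio: {theta_beta_ratio(epoch, fs):.1f}")  # 16.0
```

A clinical system would also reject artifacts and average over many epochs. Those steps are omitted here to keep the ratio itself visible.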

Electrodermal system

In 1888, Féré demonstrated the exosomatic method of recording skin electrical activity, which passes a small current through the skin.

In 1889, Tarchanoff used the endosomatic method, recording the difference in skin electrical potential between points on the skin surface without applying any external current.
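The two recording methods differ in where the voltage comes from, and a short worked example makes the contrast concrete. In the exosomatic method, a known voltage is applied and the resulting current is measured, so conductance follows from Ohm's law, G = I / V. In the endosomatic method, the potential difference generated by the body is read directly. The constant-voltage setup, the 0.5 V and 5 µA values, and the function names below are illustrative assumptions only.

```python
def skin_conductance_us(applied_volts: float, measured_microamps: float) -> float:
    """Exosomatic, constant-voltage method: conductance G = I / V.

    Microamps divided by volts gives microsiemens (uS).
    """
    if applied_volts <= 0:
        raise ValueError("the applied voltage must be positive")
    return measured_microamps / applied_volts


def skin_potential_mv(site_a_mv: float, site_b_mv: float) -> float:
    """Endosomatic method: potential difference between two skin sites.

    No external current is applied; the voltage is generated by the body.
    """
    return site_a_mv - site_b_mv


if __name__ == "__main__":
    # Hypothetical reading: 0.5 V applied across the electrodes, 5 uA flowing.
    print(skin_conductance_us(0.5, 5.0))  # 10.0 (microsiemens)
```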

In 1907, Jung used the galvanometer, an exosomatic instrument, to study unconscious emotions in word-association experiments.

Marjorie and Hershel Toomim (1975) published a landmark article about the use of GSR biofeedback in psychotherapy.

Meyer and Reich discussed similar material in a British publication.

Musculoskeletal system

Jacobson (1930) developed hardware to measure EMG voltages over time, showed that cognitive activity (like imagery) affects EMG levels, introduced the deep relaxation method Progressive Relaxation, and wrote Progressive Relaxation (1929) and You Must Relax (1934). He prescribed daily Progressive Relaxation practice to treat diverse psychophysiological disorders like hypertension.

Several researchers showed that human subjects could learn precise control of individual motor units (motor neurons and the muscle fibers they control). Lindsley (1935) found that relaxed subjects could suppress motor unit firing without biofeedback training.

Harrison and Mortensen (1962) trained subjects using visual and auditory EMG biofeedback to control individual motor units in the tibialis anterior muscle of the leg.

Basmajian (1963) instructed subjects using unfiltered auditory EMG biofeedback to control separate motor units in the abductor pollicis muscle of the thumb in his Single Motor Unit Training (SMUT) studies. His best subjects coordinated several motor units to produce drum rolls. Basmajian demonstrated practical applications for neuromuscular rehabilitation, pain management, and headache treatment.

Marinacci (1960) applied EMG biofeedback to neuromuscular disorders (where proprioception is disrupted), including Bell's palsy (one-sided facial paralysis), polio, and stroke.

"While Marinacci used EMG to treat neuromuscular disorders, his colleagues used the EMG only for diagnosis. They were unable to recognize its potential as a teaching tool even when the evidence stared them in the face! Many electromyographers who performed nerve conduction studies used visual and auditory feedback to reduce interference when a patient recruited too many motor units. Even though they used EMG biofeedback to guide the patient to relax so that clean diagnostic EMG tests could be recorded, they were unable to envision EMG biofeedback treatment of motor disorders."

Whatmore and Kohli (1968) introduced the concept of dysponesis (misplaced effort) to explain how functional disorders (in which body activity is disturbed) develop. Bracing your shoulders when you hear a loud sound illustrates dysponesis, since this action does not protect against injury. These clinicians applied EMG biofeedback to diverse functional problems such as headache and hypertension. They reported case follow-ups ranging from 6 to 21 years, far longer than the 0–24 month follow-ups typical of the clinical literature. Their data showed that skill in controlling misplaced efforts was positively related to clinical improvement. Finally, they wrote The Pathophysiology and Treatment of Functional Disorders (1974), which outlined their treatment of functional disorders.

Wolf (1983) integrated EMG biofeedback into physical therapy to treat stroke patients and conducted landmark stroke outcome studies.

Peper (1997) applied SEMG to the workplace, studied the ergonomics of computer use, and promoted "healthy computing."

Taub (1999, 2006) demonstrated the clinical efficacy of constraint-induced movement therapy (CIMT) for the treatment of spinal cord-injured and stroke patients.

Cardiovascular system

Shearn (1962) operantly trained human subjects to increase their heart rates by 5 beats per minute to avoid electric shock. In contrast to Shearn's slight heart rate increases, Swami Rama used yoga to produce atrial flutter at an average of 306 beats per minute before a Menninger Foundation audience. This briefly stopped his heart's pumping of blood and silenced his pulse.

Engel and Chism (1967) operantly trained subjects to decrease, increase, and then decrease their heart rates (analogous to ON-OFF-ON EEG training). Engel then used this approach to teach patients to control their rate of premature ventricular contractions (PVCs), in which the ventricles contract too soon. Engel conceptualized this training protocol as illness onset training, since patients were taught to produce and then suppress a symptom. Peper has similarly taught asthmatics who wheeze to better control their breathing.

Schwartz (1971, 1972) examined whether specific patterns of cardiovascular activity are easier to learn than others due to biological constraints. He examined the constraints on learning integrated (two autonomic responses change in the same direction) and differentiated (two autonomic responses change inversely) patterns of blood pressure and heart rate change.

Schultz and Luthe (1969) developed Autogenic Training, a deep relaxation exercise derived from hypnosis. This procedure combines passive volition with imagery in a series of three treatment procedures (standard Autogenic exercises, Autogenic neutralization, and Autogenic meditation). Clinicians at the Menninger Foundation coupled an abbreviated list of standard exercises with thermal biofeedback to create autogenic biofeedback. Luthe (1973) also published a series of six volumes titled Autogenic Therapy.

Fahrion and colleagues (1986) reported on an 18–26 session treatment program for hypertensive patients. The Menninger program combined breathing modification, autogenic biofeedback for the hands and feet, and frontal EMG training. The authors reported that 89% of their medicated patients discontinued their medication or reduced it by one-half while significantly lowering blood pressure. While this study did not include a double-blind control, the outcome rate was impressive.

Freedman and colleagues (1991) demonstrated that hand-warming and hand-cooling are produced by different mechanisms. The primary hand-warming mechanism is beta-adrenergic (hormonal), while the main hand-cooling mechanism is alpha-adrenergic and involves sympathetic C-fibers. This contradicts the traditional view that finger blood flow is controlled exclusively by sympathetic C-fibers. The traditional model asserts that, when firing is slow, hands warm; when firing is rapid, hands cool. Freedman and colleagues' studies support the view that hand-warming and hand-cooling represent entirely different skills.

Vaschillo and colleagues (1983) published the first studies of heart rate variability (HRV) biofeedback with cosmonauts and treated patients diagnosed with psychiatric and psychophysiological disorders. Lehrer collaborated with Smetankin and Potapova in treating pediatric asthma patients and published influential articles on HRV asthma treatment in the medical journal Chest.

The most direct effect of HRV biofeedback is on the baroreflex, a homeostatic reflex that helps control blood pressure fluctuations. When blood pressure rises, the baroreflex lowers heart rate; when blood pressure falls, it raises heart rate. Because blood pressure takes about 5 seconds to respond to a change in heart rate (think of different amounts of blood flowing through the same-sized tube), the baroreflex produces a heart rate rhythm with a period of about 10 seconds. Respiration causes a second heart rate rhythm (respiratory sinus arrhythmia): heart rate rises during inhalation and falls during exhalation. During HRV biofeedback, these two rhythms reinforce each other, exciting the resonance properties of the cardiovascular system that arise from the inherent baroreflex rhythm. The result is very large oscillations in heart rate and large-amplitude stimulation of the baroreflex. HRV biofeedback thus exercises and strengthens the baroreflex, which apparently modulates autonomic reactivity to stimulation. The baroreflex is controlled through brain stem mechanisms that communicate directly with the insula and amygdala, which control emotion. For this reason, HRV biofeedback also appears to modulate emotional reactivity and to help people suffering from anxiety, stress, and depression.
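The resonance argument above can be illustrated with a textbook toy model. Treat the baroreflex loop as a damped oscillator with a natural period of about 10 seconds (0.1 Hz), and treat breathing as the periodic force that drives it. The steady-state amplitude then peaks when breathing matches the natural frequency, which works out to about 6 breaths per minute. This is not a physiological model. The oscillator form and the damping ratio of 0.2 are assumptions chosen only to show the shape of a resonance curve, and the function names are hypothetical.

```python
import math

BAROREFLEX_PERIOD_S = 10.0  # approximate baroreflex rhythm period from the text
DAMPING_RATIO = 0.2         # hypothetical value chosen for illustration


def breaths_per_minute_to_hz(bpm: float) -> float:
    """Convert a breathing rate in breaths per minute to a frequency in Hz."""
    return bpm / 60.0


def relative_amplitude(breathing_hz: float,
                       natural_hz: float = 1.0 / BAROREFLEX_PERIOD_S,
                       zeta: float = DAMPING_RATIO) -> float:
    """Steady-state amplitude of a damped oscillator driven at breathing_hz.

    Normalised so that a very slow (quasi-static) drive gives 1.0.
    """
    r = breathing_hz / natural_hz  # drive frequency relative to natural frequency
    return 1.0 / math.sqrt((1 - r ** 2) ** 2 + (2 * zeta * r) ** 2)


if __name__ == "__main__":
    for bpm in range(4, 13):
        amp = relative_amplitude(breaths_per_minute_to_hz(bpm))
        print(f"{bpm:2d} breaths/min -> relative heart rate oscillation {amp:.2f}")
```

With these assumed parameters, the largest oscillation among whole-number breathing rates from 4 to 12 occurs at 6 breaths per minute. Lowering the damping ratio makes the peak sharper.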

Emotions are intimately linked to heart health, which in turn is linked to physical and mental health. In general, good mental and physical health are correlated with positive emotions and with high heart rate variability (HRV) whose power lies mostly in the high-frequency (HF) band. High HRV has been correlated with better executive functioning, including memory and reaction time. Biofeedback that increased HRV and shifted its power toward HF has been shown to lower blood pressure.

On the other hand, low-frequency (LF) HRV power is associated with sympathetic nervous system activity, which is known to increase the risk of heart attack. LF-dominated HRV power spectra are also directly associated with higher mortality rates in healthy individuals and among individuals with mood disorders. Anger and frustration increase LF power. Because other studies have shown that anger increases the risk of heart attack, researchers at the HeartMath Institute have proposed that HRV links emotions to physical health.

Because emotions have such an impact on cardiac function, which cascades to numerous other biological processes, emotional regulation techniques can produce practical psychophysiological change. McCraty et al. found that feelings of gratitude increased HRV and shifted its power spectrum toward the mid-frequency (MF) and high-frequency (HF) ranges while decreasing low-frequency (LF) power. The HeartMath Institute's patented techniques involve engendering feelings of gratitude and happiness, focusing on the physical location of the heart, and breathing in 10-second cycles. Other techniques, such as strenuous aerobic exercise and meditation, have also been shown to improve HRV.
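The LF and HF powers discussed in the preceding paragraphs are usually obtained from a series of beat-to-beat (RR) intervals. The sketch below shows one simple way to compute them. It resamples the irregular RR series onto an even 4 Hz grid, takes a one-sided FFT periodogram, and integrates it over assumed band limits of 0.04–0.15 Hz for LF and 0.15–0.40 Hz for HF. These band limits are common conventions. The sketch does not reproduce the MF band or the exact methods used by McCraty et al. or the HeartMath Institute. Practical analyses typically add detrending, artifact correction, and Welch or autoregressive spectral estimates. All names here are hypothetical.

```python
import numpy as np

# Assumed frequency bands (Hz), following common HRV conventions.
LF_BAND = (0.04, 0.15)
HF_BAND = (0.15, 0.40)


def band_powers(rr_ms, fs=4.0):
    """Return (LF, HF) power in ms^2 from RR intervals given in milliseconds."""
    rr = np.asarray(rr_ms, dtype=float)
    beat_times = np.cumsum(rr) / 1000.0               # time (s) at which each interval ends
    grid = np.arange(beat_times[0], beat_times[-1], 1.0 / fs)
    rr_even = np.interp(grid, beat_times, rr)         # evenly resampled tachogram (ms)
    rr_even = rr_even - rr_even.mean()                # remove the mean heart period
    n = len(rr_even)
    psd = 2.0 * np.abs(np.fft.rfft(rr_even)) ** 2 / (fs * n)  # one-sided periodogram
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    df = freqs[1] - freqs[0]

    def integrate(band):
        mask = (freqs >= band[0]) & (freqs < band[1])
        return psd[mask].sum() * df

    return integrate(LF_BAND), integrate(HF_BAND)


def synthetic_rr(freq_hz, n_beats=300, mean_ms=1000.0, amp_ms=50.0):
    """Hypothetical test data: RR intervals oscillating at freq_hz."""
    rr, t = [], 0.0
    for _ in range(n_beats):
        interval = mean_ms + amp_ms * np.sin(2 * np.pi * freq_hz * t)
        rr.append(interval)
        t += interval / 1000.0
    return rr


if __name__ == "__main__":
    for f in (0.10, 0.25):  # a slow (LF) rhythm versus a breathing-rate (HF) rhythm
        lf, hf = band_powers(synthetic_rr(f))
        print(f"{f:.2f} Hz rhythm: LF={lf:.0f} ms^2, HF={hf:.0f} ms^2, LF/HF={lf / hf:.2f}")
```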

Pain

Chronic back pain

Newton-John, Spence, and Schotte (1994) compared the effectiveness of cognitive behavior therapy (CBT) and electromyographic biofeedback (EMG biofeedback) for 44 participants with chronic low back pain. Newton-John et al. (1994) split the participants into two groups, then measured pain intensity, perceived disability, and depression before treatment, after treatment, and again six months later. They found no significant differences between the group that received CBT and the group that received EMG biofeedback, which suggests that biofeedback is as effective as CBT for chronic low back pain. Comparing each group's results before and after treatment indicates that EMG biofeedback reduced pain, disability, and depression by as much as half.

Muscle pain

Budzynski and Stoyva (1969) showed that EMG biofeedback could reduce frontalis muscle (forehead) contraction. They demonstrated in 1973 that analog (proportional) and binary (ON or OFF) visual EMG biofeedback were equally helpful in lowering masseter SEMG levels.
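The difference between analog (proportional) and binary (ON or OFF) EMG feedback comes down to how the measured amplitude is mapped to the signal the trainee sees or hears. The sketch below contrasts the two mappings. It assumes an amplitude range of 1–20 µV, a tone that rises from 200 Hz to 1000 Hz, and a binary signal that turns ON while muscle activity stays below a 5 µV relaxation threshold. All of these values and function names are hypothetical and are not taken from Budzynski and Stoyva's equipment.

```python
def proportional_feedback(emg_uv: float, min_uv: float = 1.0, max_uv: float = 20.0,
                          low_hz: float = 200.0, high_hz: float = 1000.0) -> float:
    """Analog feedback: tone pitch rises linearly with EMG amplitude (clamped)."""
    clamped = min(max(emg_uv, min_uv), max_uv)
    fraction = (clamped - min_uv) / (max_uv - min_uv)
    return low_hz + fraction * (high_hz - low_hz)


def binary_feedback(emg_uv: float, threshold_uv: float = 5.0) -> bool:
    """Binary feedback: the signal is ON only while EMG is below the threshold."""
    return emg_uv < threshold_uv


if __name__ == "__main__":
    for reading in (2.0, 4.5, 10.5, 25.0):  # hypothetical EMG amplitudes in microvolts
        print(reading, proportional_feedback(reading), binary_feedback(reading))
```

The analog mapping reports every change in tension, while the binary mapping reports only whether the target was met. That distinction is what Budzynski and Stoyva's 1973 comparison examined.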

McNulty, Gevirtz, Hubbard, and Berkoff (1994) proposed that sympathetic nervous system innervation of muscle spindles underlies trigger points.

Tension headache

Budzynski, Stoyva, Adler, and Mullaney (1973) reported that auditory frontalis EMG biofeedback combined with home relaxation practice lowered tension headache frequency and frontalis EMG levels. A control group that received noncontingent (false) auditory feedback did not improve. This study helped make the frontalis muscle the placement-of-choice in EMG assessment and treatment of headache and other psychophysiological disorders.

Migraine

Sargent, Green, and Walters (1972, 1973) demonstrated that hand-warming could abort migraines and that autogenic biofeedback training could reduce headache activity. The early Menninger migraine studies, although methodologically weak (no pretreatment baselines, control groups, or random assignment to conditions), strongly influenced migraine treatment. A 2013 review classified biofeedback among the techniques that might be of benefit in the management of chronic migraine.

Phantom-limb pain

Flor (2002) trained amputees to detect the location and frequency of shocks delivered to their stumps, which resulted in an expansion of corresponding cortical regions and significant reduction of their phantom limb pain.

Financial decision making

Financial traders use biofeedback as a tool for regulating their level of emotional arousal in order to make better financial decisions. The technology company Philips and the Dutch bank ABN AMRO developed a biofeedback device for retail investors based on a galvanic skin response sensor. Astor et al. (2013) developed a biofeedback-based serious game in which financial decision makers can learn to regulate their emotions effectively using heart rate measurements.

Stress reduction

A randomized study by Sutarto et al. assessed the effect of resonant breathing biofeedback, in which participants learn to recognize and control involuntary heart rate variability, among manufacturing operators; depression, anxiety, and stress decreased significantly. Heart rate variability data can also be analyzed with deep neural networks to predict stress levels. This approach has been used in a mobile app that combines it with mindfulness techniques to promote stress reduction.

Macular disease of the retina

A 2012 observational study by Pacella et al. found significant improvements in both visual acuity and fixation in patients with age-related or other macular degeneration who were treated with biofeedback using the MP-1 microperimeter.

Clinical effectiveness

Research

Moss, LeVaque, and Hammond (2004) observed that "Biofeedback and neurofeedback seem to offer the kind of evidence-based practice that the healthcare establishment is demanding." "From the beginning biofeedback developed as a research-based approach emerging directly from laboratory research on psychophysiology and behavior therapy. The ties of biofeedback/neurofeedback to the biomedical paradigm and to research are stronger than is the case for many other behavioral interventions" (p. 151).

The Association for Applied Psychophysiology and Biofeedback (AAPB) and the International Society for Neurofeedback and Research (ISNR) have collaborated in validating and rating treatment protocols to address questions about the clinical efficacy of biofeedback and neurofeedback applications, like ADHD and headache. In 2001, Donald Moss, then president of the Association for Applied Psychophysiology and Biofeedback, and Jay Gunkelman, president of the International Society for Neurofeedback and Research, appointed a task force to establish standards for the efficacy of biofeedback and neurofeedback.

The Task Force document was published in 2002, and a series of white papers followed, reviewing the efficacy of biofeedback for a series of disorders. The white papers established the efficacy of biofeedback for functional anorectal disorders, attention deficit disorder, facial pain and temporomandibular joint dysfunction, hypertension, urinary incontinence, Raynaud's phenomenon, substance abuse, and headache.

A broader review was published and later updated, applying the same efficacy standards to the entire range of medical and psychological disorders. The 2008 edition reviewed the efficacy of biofeedback for over 40 clinical disorders, ranging from alcoholism/substance abuse to vulvar vestibulitis. The ratings for each disorder depend on the nature of research studies available on each disorder, ranging from anecdotal reports to double blind studies with a control group. Thus, a lower rating may reflect the lack of research rather than the ineffectiveness of biofeedback for the problem.

The randomized trial by Dehli et al. examined whether injecting a bulking agent into the anal canal was superior to sphincter training with biofeedback for treating fecal incontinence (FI). Both methods led to an improvement in FI, but comparisons of St Mark's scores between the groups showed no difference in effect between treatments.

Efficacy

Yucha and Montgomery's (2008) ratings are listed below under the five levels of efficacy recommended by a joint Task Force and adopted by the Boards of Directors of the Association for Applied Psychophysiology and Biofeedback (AAPB) and the International Society for Neuronal Regulation (ISNR). From weakest to strongest, these levels are: not empirically supported, possibly efficacious, probably efficacious, efficacious, and efficacious and specific.

Level 1: Not empirically supported. This designation includes applications supported by anecdotal reports and/or case studies in non-peer-reviewed venues. Yucha and Montgomery (2008) assigned eating disorders, immune function, spinal cord injury, and syncope to this category.

Level 2: Possibly efficacious. This designation requires at least one study of sufficient statistical power with well-identified outcome measures but lacking randomized assignment to a control condition internal to the study. Yucha and Montgomery (2008) assigned asthma, autism, Bell's palsy, cerebral palsy, COPD, coronary artery disease, cystic fibrosis, depression, erectile dysfunction, fibromyalgia, hand dystonia, irritable bowel syndrome, PTSD, repetitive strain injury, respiratory failure, stroke, tinnitus, and urinary incontinence in children to this category.

Level 3: Probably efficacious. This designation requires multiple observational studies, clinical studies, waitlist-controlled studies, and within subject and intrasubject replication studies that demonstrate efficacy. Yucha and Montgomery (2008) assigned alcoholism and substance abuse, arthritis, diabetes mellitus, fecal disorders in children, fecal incontinence in adults, insomnia, pediatric headache, traumatic brain injury, urinary incontinence in males, and vulvar vestibulitis (vulvodynia) to this category.

Level 4: Efficacious. This designation requires the satisfaction of six criteria:

(a) In a comparison with a no-treatment control group, alternative treatment group, or sham (placebo) control using randomized assignment, the investigational treatment is shown to be statistically significantly superior to the control condition or the investigational treatment is equivalent to a treatment of established efficacy in a study with sufficient power to detect moderate differences.

(b) The studies have been conducted with a population treated for a specific problem, for whom inclusion criteria are delineated in a reliable, operationally defined manner.

(c) The study used valid and clearly specified outcome measures related to the problem being treated.

(d) The data are subjected to appropriate data analysis.

(e) The diagnostic and treatment variables and procedures are clearly defined in a manner that permits replication of the study by independent researchers.

(f) The superiority or equivalence of the investigational treatment has been shown in at least two independent research settings.

Yucha and Montgomery (2008) assigned attention deficit hyperactivity disorder (ADHD), anxiety, chronic pain, epilepsy, constipation (adult), headache (adult), hypertension, motion sickness, Raynaud's disease, and temporomandibular joint dysfunction to this category.

Level 5: Efficacious and specific. The investigational treatment must be shown to be statistically superior to credible sham therapy, pill, or alternative bona fide treatment in at least two independent research settings. Yucha and Montgomery (2008) assigned urinary incontinence (females) to this category.
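The five levels form a decision procedure, and the sketch below encodes it as one. It reduces each disorder's research base to a few hypothetical yes/no and count fields and returns the level. It is a simplification and not the Task Force's official instrument. In particular, it treats the levels as cumulative, assuming that Level 5 also requires the Level 4 criteria. It also collapses criteria (a) through (e) into a single flag.

```python
from dataclasses import dataclass


@dataclass
class EvidenceSummary:
    """Hypothetical summary of the studies available for one disorder."""
    has_powered_study: bool = False          # Level 2: powered study, clear outcomes, no randomized control
    has_multiple_replications: bool = False  # Level 3: observational, waitlist, within-subject replications
    criteria_a_to_e_met: bool = False        # Level 4: criteria (a) through (e)
    independent_settings: int = 0            # criterion (f): settings showing superiority or equivalence
    sham_superior_settings: int = 0          # Level 5: settings beating credible sham, pill, or bona fide treatment


def efficacy_level(e: EvidenceSummary) -> int:
    """Return an efficacy level from 1 to 5, treating the levels as cumulative."""
    level4 = e.criteria_a_to_e_met and e.independent_settings >= 2
    if level4 and e.sham_superior_settings >= 2:
        return 5
    if level4:
        return 4
    if e.has_multiple_replications:
        return 3
    if e.has_powered_study:
        return 2
    return 1  # anecdotal reports or non-peer-reviewed case studies only


if __name__ == "__main__":
    example = EvidenceSummary(has_powered_study=True, has_multiple_replications=True)
    print(efficacy_level(example))  # 3
```

The ordering of the checks mirrors the text's point that a low rating can simply reflect missing studies. Any field left at its default counts as absent evidence, not as evidence of ineffectiveness.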

Criticisms

In a healthcare environment that emphasizes cost containment and evidence-based practice, biofeedback and neurofeedback professionals continue to address skepticism in the medical community about the cost-effectiveness and efficacy of their treatments. Critics question how these treatments compare with conventional behavioral and medical interventions on efficacy and cost.

Organizations

The Association for Applied Psychophysiology and Biofeedback (AAPB) is a non-profit scientific and professional society for biofeedback and neurofeedback. The International Society for Neurofeedback and Research (ISNR) is a non-profit scientific and professional society for neurofeedback. The Biofeedback Foundation of Europe (BFE) sponsors international education, training, and research activities in biofeedback and neurofeedback. The Northeast Regional Biofeedback Society (NRBS) sponsors theme-centered educational conferences, political advocacy for biofeedback-friendly legislation, and research activities in biofeedback and neurofeedback in the Northeast region of the United States. The Southeast Biofeedback and Clinical Neuroscience Association (SBCNA) is a non-profit regional organization supporting biofeedback professionals with continuing education, ethics guidelines, and public awareness promoting the efficacy and safety of professional biofeedback. The SBCNA offers an annual conference for professional continuing education and promotes biofeedback as an adjunct to the allied health professions. The SBCNA was formerly the North Carolina Biofeedback Society (NCBS), which had served the biofeedback community since the 1970s. In 2013, the NCBS reorganized as the SBCNA to support and represent biofeedback and neurofeedback in the Southeast region of the United States.

Certification

The Biofeedback Certification International Alliance (BCIA; formerly the Biofeedback Certification Institute of America) is a non-profit organization that is a member of the Institute for Credentialing Excellence (ICE). BCIA offers certification in biofeedback, neurofeedback (also called EEG biofeedback), and pelvic muscle dysfunction biofeedback. BCIA certifies individuals who meet education and training standards in biofeedback and neurofeedback and periodically recertifies those who satisfy continuing education requirements. BCIA certification has been endorsed by the Mayo Clinic, the Association for Applied Psychophysiology and Biofeedback (AAPB), the International Society for Neurofeedback and Research (ISNR), and the Washington State Legislature.

The BCIA didactic education requirement includes a 48-hour course from a regionally-accredited academic institution or a BCIA-approved training program that covers the complete General Biofeedback Blueprint of Knowledge and study of human anatomy and physiology. The General Biofeedback Blueprint of Knowledge areas include: I. Orientation to Biofeedback, II. Stress, Coping, and Illness, III. Psychophysiological Recording, IV. Surface Electromyographic (SEMG) Applications, V. Autonomic Nervous System (ANS) Applications, VI. Electroencephalographic (EEG) Applications, VII. Adjunctive Interventions, and VIII. Professional Conduct.

Applicants may demonstrate their knowledge of human anatomy and physiology by completing a course in human anatomy, human physiology, or human biology provided by a regionally-accredited academic institution or a BCIA-approved training program or by successfully completing an Anatomy and Physiology exam covering the organization of the human body and its systems.

Applicants must also document practical skills training that includes 20 contact hours supervised by a BCIA-approved mentor, designed to teach them how to apply clinical biofeedback skills through self-regulation training, 50 patient/client sessions, and case conference presentations. Distance learning allows applicants to complete didactic course work over the internet. Distance mentoring trains candidates from their residence or office. Certificants must recertify every 4 years, complete 55 hours of continuing education during each review period or complete the written exam, and attest that their license/credential (or their supervisor's license/credential) has not been suspended, investigated, or revoked.[172]

Pelvic muscle dysfunction

Pelvic Muscle Dysfunction Biofeedback (PMDB) encompasses "elimination disorders and chronic pelvic pain syndromes." The BCIA didactic education requirement includes a 28-hour course from a regionally-accredited academic institution or a BCIA-approved training program that covers the complete Pelvic Muscle Dysfunction Biofeedback Blueprint of Knowledge and study of human anatomy and physiology. The Pelvic Muscle Dysfunction Biofeedback areas include: I. Applied Psychophysiology and Biofeedback, II. Pelvic Floor Anatomy, Assessment, and Clinical Procedures, III. Clinical Disorders: Bladder Dysfunction, IV. Clinical Disorders: Bowel Dysfunction, and V. Chronic Pelvic Pain Syndromes.

Currently, only licensed healthcare providers may apply for this certification. Applicants must also document practical skills training that includes a 4-hour practicum/personal training session and 12 contact hours spent with a BCIA-approved mentor designed to teach them how to apply clinical biofeedback skills through 30 patient/client sessions and case conference presentations. They must recertify every 3 years, complete 36 hours of continuing education or complete the written exam, and attest that their license/credential has not been suspended, investigated, or revoked.

History

Claude Bernard proposed in 1865 that the body strives to maintain a steady state in the internal environment (milieu intérieur), introducing the concept of homeostasis. In 1885, J.R. Tarchanoff showed that voluntary control of heart rate could be fairly direct (cortical-autonomic) and did not depend on "cheating" by altering breathing rate. In 1901, J. H. Bair studied voluntary control of the retrahens aurem muscle that wiggles the ear, discovering that subjects learned this skill by inhibiting interfering muscles and demonstrating that skeletal muscles are self-regulated.

Alexander Graham Bell attempted to teach the deaf to speak using two devices: the phonautograph, created by Édouard-Léon Scott, and a manometric flame. The former translated sound vibrations into tracings on smoked glass to show their acoustic waveforms, while the latter displayed sound as patterns of light. After World War II, mathematician Norbert Wiener developed cybernetic theory, which proposed that systems are controlled by monitoring their results. The participants at the landmark 1969 conference at the Surfrider Inn in Santa Monica coined the term biofeedback from Wiener's feedback. The conference led to the founding of the Bio-Feedback Research Society, which allowed normally isolated researchers to contact and collaborate with each other and popularized the term "biofeedback." The work of B.F. Skinner led researchers to apply operant conditioning to biofeedback and to determine which responses could be voluntarily controlled and which could not. In the first experimental demonstration of biofeedback, Shearn used these procedures with heart rate. The effects of perceiving autonomic nervous system activity were first explored by George Mandler's group in 1958. In 1965, Maia Lisina combined classical and operant conditioning to train subjects to change blood vessel diameter, eliciting and displaying reflexive blood flow changes to teach subjects how to voluntarily control the temperature of their skin. In 1974, H.D. Kimmel trained subjects to sweat using the galvanic skin response.

Hinduism

Biofeedback systems have been known in India and some other countries for millennia. Ancient Hindu practices such as yoga and pranayama (breathing techniques) are essentially biofeedback methods. Many yogis and sadhus have been known to exercise control over their physiological processes. In addition to recent research on yoga, the British writer Paul Brunton, who travelled extensively in India, has written about many cases he witnessed.

Timeline

1958 – G. Mandler's group studied the process of autonomic feedback and its effects.

1962 – D. Shearn used feedback instead of conditioned stimuli to change heart rate.

1962 – Publication of Muscles Alive by John Basmajian (later editions co-authored with Carlo De Luca)

1968 – Annual Veterans Administration research meeting in Denver that brought together several biofeedback researchers

1969 – April: Conference on Altered States of Consciousness, Council Grove, KS; October: formation and first meeting of the Biofeedback Research Society (BRS), Surfrider Inn, Santa Monica, CA; co-founder Barbara B. Brown becomes the society's first president

1972 – Review and analysis of early biofeedback studies by D. Shearn in the Handbook of Psychophysiology

1974 – Publication of The Alpha Syllabus: A Handbook of Human EEG Alpha Activity and the first popular book on biofeedback, New Mind, New Body (December), both by Barbara B. Brown

1975 – American Association of Biofeedback Clinicians founded; publication of The Biofeedback Syllabus: A Handbook for the Psychophysiologic Study of Biofeedback by Barbara B. Brown

1976 – BRS renamed the Biofeedback Society of America (BSA)

1977 – Publication of Beyond Biofeedback by Elmer and Alyce Green; Biofeedback: Methods and Procedures in Clinical Practice by George Fuller; and Stress and the Art of Biofeedback by Barbara B. Brown

1978 – Publication of Biofeedback: A Survey of the Literature by Francine Butler

1979 – Publication of Biofeedback: Principles and Practice for Clinicians by John Basmajian, and Mind/Body Integration: Essential Readings in Biofeedback by Erik Peper, Sonia Ancoli, and Michele Quinn

1980 – First national certification examination in biofeedback offered by the Biofeedback Certification Institute of America (BCIA); publication of Biofeedback: Clinical Applications in Behavioral Medicine by David Olton and Aaron Noonberg, and Supermind: The Ultimate Energy by Barbara B. Brown

1984 – Publication of Principles and Practice of Stress Management by Woolfolk and Lehrer, and Between Health and Illness: New Notions on Stress and the Nature of Well Being by Barbara B. Brown

1984 – Publication of "The Biofeedback Way to Starve Stress" by Mark Golin in Prevention magazine

1987 – Publication of Biofeedback: A Practitioner's Guide by Mark Schwartz

1989 – BSA renamed the Association for Applied Psychophysiology and Biofeedback

1991 – First national certification examination in stress management offered by BCIA

1994 – Brain Wave and EMG sections established within AAPB

1995 – Society for the Study of Neuronal Regulation (SSNR) founded

1996 – Biofeedback Foundation of Europe (BFE) established

1999 – SSNR renamed the Society for Neuronal Regulation (SNR)

2002 – SNR renamed the International Society for Neuronal Regulation (ISNR)

2003 – Publication of The Neurofeedback Book by Thompson and Thompson

2004 – Publication of Evidence-Based Practice in Biofeedback and Neurofeedback by Carolyn Yucha and Christopher Gilbert

2006 – ISNR renamed the International Society for Neurofeedback and Research (ISNR)

2008 – Biofeedback Neurofeedback Alliance formed to pool the resources of the AAPB, BCIA, and ISNR on joint initiatives

2008 – Biofeedback Alliance and Nomenclature Task Force define biofeedback

2009 – The International Society for Neurofeedback & Research defines neurofeedback

2010 – Biofeedback Certification Institute of America renamed the Biofeedback Certification International Alliance (BCIA)