A digital camera is a camera that captures photographs in digital memory. Most cameras produced today are digital, largely replacing those that capture images on photographic film. Digital cameras are now widely incorporated into mobile devices such as smartphones, which match or exceed many of the capabilities and features of dedicated cameras (which are still available). High-end, high-definition dedicated cameras are still commonly used by professionals and by those who want to take higher-quality photographs.

Digital and digital movie cameras share an optical system, typically using a lens with a variable diaphragm to focus light onto an image pickup device. The diaphragm and shutter admit a controlled amount of light to the image, just as with film, but the image pickup device is electronic rather than chemical. Unlike film cameras, however, digital cameras can display images on a screen immediately after they are recorded, and can store and delete images from memory. Many digital cameras can also record video with sound. Some can crop and stitch pictures and perform other elementary image editing.

History

The first semiconductor image sensor was the charge-coupled device (CCD), invented by Willard S. Boyle and George E. Smith at Bell Labs in 1969, based on MOS capacitor technology. The NMOS active-pixel sensor was later invented by Tsutomu Nakamura's team at Olympus in 1985, which led to the development of the CMOS active-pixel sensor (CMOS sensor) by Eric Fossum's team at the NASA Jet Propulsion Laboratory in 1993.

In the 1960s, Eugene F. Lally of the Jet Propulsion Laboratory was thinking about how to use a mosaic photosensor to capture digital images. His idea was to take pictures of the planets and stars while travelling through space to give information about the astronauts' position. As with Texas Instruments employee Willis Adcock's film-less camera (US patent 4,057,830) in 1972, the technology had yet to catch up with the concept.

The Cromemco Cyclops was an all-digital camera introduced as a commercial product in 1975. Its design was published as a hobbyist construction project in the February 1975 issue of Popular Electronics magazine. It used a 32×32 metal-oxide-semiconductor (MOS) image sensor, which was a modified MOS dynamic RAM (DRAM) memory chip.

Steven Sasson, an engineer at Eastman Kodak, invented and built a self-contained electronic camera that used a CCD image sensor in 1975. Fujifilm also began developing CCD technology in the 1970s. Early uses were mainly military and scientific, followed by medical and news applications.

Nikon had been interested in digital photography since the mid-1980s. At Photokina in 1986, Nikon presented an operational prototype of the first SLR-type electronic camera (Still Video Camera), manufactured by Panasonic. The Nikon SVC was built around a 2/3″ charge-coupled device of 300,000 pixels. Its storage medium, a magnetic floppy disk inside the camera, could record 25 or 50 black-and-white images, depending on the resolution. In 1988, Nikon released the first commercial electronic single-lens reflex camera, the QV-1000C.

At Photokina 1988, Fujifilm introduced the FUJIX DS-1P, the first fully digital camera, capable of saving data to a semiconductor memory card. The camera's memory card had a capacity of 2 MB of SRAM (static random-access memory), and could hold up to ten photographs. In 1989, Fujifilm released the FUJIX DS-X, the first fully digital camera to be commercially released. In 1996, Toshiba's 40 MB flash memory card was adopted for several digital cameras.

The first commercial camera phone was the Kyocera Visual Phone VP-210, released in Japan in May 1999. It was called a "mobile videophone" at the time, and had a 110,000-pixel front-facing camera. It stored up to 20 JPEG digital images, which could be sent over e-mail, or the phone could send up to two images per second over Japan's Personal Handy-phone System (PHS) cellular network. The Samsung SCH-V200, released in South Korea in June 2000, was also one of the first phones with a built-in camera. It had a TFT liquid-crystal display (LCD) and stored up to 20 digital photos at 350,000-pixel resolution. However, it could not send photos over the telephone network; a computer connection was required to access them. The first mass-market camera phone was the J-SH04, a Sharp J-Phone model sold in Japan in November 2000. It could instantly transmit pictures via cell phone telecommunication. By the mid-2000s, higher-end cell phones had an integrated digital camera, and by the early 2010s almost all smartphones had one.

Image sensors

The two major types of digital image sensor are CCD and CMOS. A CCD sensor has one amplifier for all the pixels, while each pixel in a CMOS active-pixel sensor has its own amplifier. Compared to CCDs, CMOS sensors use less power. Cameras with a small sensor typically use a back-side-illuminated CMOS (BSI-CMOS) sensor. The image processing capabilities of the camera determine the final image quality much more than the sensor type does.

Sensor resolution

The resolution of a digital camera is often limited by the image sensor that turns light into discrete signals. The brighter the image at a given point on the sensor, the larger the value that is read for that pixel. Depending on the physical structure of the sensor, a color filter array may be used, which requires demosaicing to recreate a full-color image. The number of pixels in the sensor determines the camera's "pixel count". In a typical sensor, the pixel count is the product of the number of rows and the number of columns. For example, a 1,000 by 1,000 pixel sensor would have 1,000,000 pixels, or 1 megapixel.
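The pixel-count arithmetic above can be written out directly. This is a minimal illustrative sketch; the function names are hypothetical, and it assumes a plain rectangular grid of photosites.

```python
def pixel_count(columns: int, rows: int) -> int:
    """Total photosites on a rectangular sensor: columns times rows."""
    return columns * rows


def megapixels(columns: int, rows: int) -> float:
    """Pixel count expressed in millions (1 megapixel = 1,000,000 pixels)."""
    return pixel_count(columns, rows) / 1_000_000


# The example from the text: a 1,000 by 1,000 sensor.
print(pixel_count(1000, 1000))         # 1000000
print(megapixels(1000, 1000))          # 1.0
# A 640x480 image is about 0.3 megapixels.
print(round(megapixels(640, 480), 1))  # 0.3
```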

Resolution options

A camera's firmware usually provides a resolution selector that lets the user lower the resolution, which reduces the file size per picture and extends lossless digital zooming. The lowest resolution option is typically 640×480 pixels (0.3 megapixels).

A lower resolution increases the number of photos that fit in the remaining free space, delaying the point at which storage runs out. This is useful where no additional storage device is available, and for less important shots, where saving storage space outweighs the loss of detail.
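Both benefits of a lower resolution can be estimated with a little arithmetic. This is a sketch under stated assumptions, not any camera's actual firmware logic: it assumes "lossless" digital zoom means cropping the centre of the sensor without upscaling, and that file size scales with pixel count at a hypothetical average number of bytes per pixel (real JPEG sizes vary with scene content). The 12 MP sensor and 1 GB of free space are illustrative values.

```python
import math


def max_lossless_zoom(sensor_px: int, output_px: int) -> float:
    """Largest crop ("digital zoom") factor that needs no upscaling.

    Cropping by a linear factor z keeps sensor_px / z**2 pixels, so the crop
    still has at least output_px pixels while z <= sqrt(sensor_px / output_px).
    """
    return math.sqrt(sensor_px / output_px)


def shots_remaining(free_bytes: int, output_px: int, bytes_per_px: float) -> int:
    """Photos that fit in free space if each file is output_px * bytes_per_px bytes.

    bytes_per_px is a hypothetical average for the chosen JPEG quality.
    """
    return int(free_bytes // (output_px * bytes_per_px))


full = 4000 * 3000     # hypothetical 12 MP sensor
vga = 640 * 480        # the typical lowest option, about 0.3 MP
free = 1_000_000_000   # hypothetical 1 GB of free space

print(max_lossless_zoom(full, vga))  # 6.25
print(shots_remaining(free, full, 0.3), shots_remaining(free, vga, 0.3))  # 277 10850
```

The square root appears because zoom is a linear factor while pixel count grows with area, so quartering the output resolution only doubles the available crop.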

Image sharpness

The final quality of an image depends on all optical transformations in the chain of producing the image. Carl Zeiss, a German optician, points out that the weakest link in an optical chain determines the final image quality. In the case of the digital camera, a simple way to describe this concept is that the lens determines the maximum sharpness of the image while the image sensor determines the maximum resolution. The illustration on the right can be said to compare a lens with very poor sharpness on a camera with high resolution, to a lens with good sharpness on a camera with lower resolution.

Methods of image capture

Since the first digital backs were introduced, there have been three main methods of capturing the image, each based on the hardware configuration of the sensor and color filters.

Single-shot capture systems use either one sensor chip with a Bayer filter mosaic, or three separate image sensors (one each for the primary additive colors red, green, and blue) which are exposed to the same image via a beam splitter (see Three-CCD camera).

Multi-shot exposes the sensor to the image in a sequence of three or more openings of the lens aperture. There are several ways to apply the multi-shot technique. The most common was originally to use a single image sensor with three filters passed in front of it in sequence to obtain the additive color information. Another multi-shot method is called microscanning. This method uses a single sensor chip with a Bayer filter and physically moves the sensor in the focal plane of the lens to construct an image of higher resolution than the native resolution of the chip. A third version combines these two methods without a Bayer filter on the chip.
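The idea behind microscanning can be illustrated with an idealized model. This sketch assumes four captures, each taken after shifting the sensor by half a pixel horizontally, vertically or both, and simply interleaves them into a grid with twice the resolution. It ignores the Bayer filter and the fact that real photosites integrate light over their area; `interleave_microscan` is a hypothetical name, not a real camera API.

```python
def interleave_microscan(shots):
    """Merge four half-pixel-shifted captures into a grid with twice the resolution.

    shots maps an offset (dy, dx), each 0 or 1 in half-pixel steps, to an
    H x W list of rows. Sample (r, c) of the capture taken with offset
    (dy, dx) is placed at (2r + dy, 2c + dx) of the output.
    """
    base = shots[(0, 0)]
    height, width = len(base), len(base[0])
    out = [[0] * (2 * width) for _ in range(2 * height)]
    for (dy, dx), capture in shots.items():
        for r in range(height):
            for c in range(width):
                out[2 * r + dy][2 * c + dx] = capture[r][c]
    return out


# Hypothetical scene known on a fine 4 x 4 grid; the sensor has only 2 x 2 pixels.
scene = [[10 * y + x for x in range(4)] for y in range(4)]
# Each capture samples the scene after the sensor moves by (dy, dx) half-pixels.
shots = {
    (dy, dx): [[scene[2 * r + dy][2 * c + dx] for c in range(2)] for r in range(2)]
    for dy in (0, 1)
    for dx in (0, 1)
}
print(interleave_microscan(shots) == scene)  # True
```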

The third method is called scanning because the sensor moves across the focal plane much like the sensor of an image scanner. The linear or tri-linear sensors in scanning cameras use only a single line of photosensors, or three lines for the three colors. Scanning may be accomplished by moving the sensor (for example, when using color co-site sampling) or by rotating the whole camera. A digital rotating line camera can produce images with a very high total resolution.

The choice of method for a given capture is determined largely by the subject matter. It is usually inappropriate to attempt to capture a subject that moves with anything but a single-shot system. However, the higher color fidelity and larger file sizes and resolutions that are available with multi-shot and scanning backs make them more attractive for commercial photographers who are working with stationary subjects and large-format photographs.

Improvements in single-shot cameras and image file processing at the beginning of the 21st century made single-shot cameras almost completely dominant, even in high-end commercial photography.

Filter mosaics, interpolation, and aliasing

Most current consumer digital cameras use a Bayer filter mosaic in combination with an optical anti-aliasing filter to reduce the aliasing due to the reduced sampling of the different primary-color images. A demosaicing algorithm is used to interpolate color information to create a full array of RGB image data.

Cameras that use a beam-splitter single-shot 3CCD approach, a three-filter multi-shot approach, color co-site sampling or a Foveon X3 sensor use neither anti-aliasing filters nor demosaicing.

Firmware in the camera, or software in a raw converter program such as Adobe Camera Raw, interprets the raw data from the sensor to obtain a full-color image, because the RGB color model requires three intensity values for each pixel: one each for red, green and blue (other color models, when used, also require three or more values per pixel). A single sensor element cannot record these three intensities simultaneously, so a color filter array (CFA) must be used to selectively filter a particular color for each pixel.

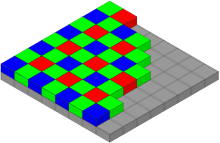

The Bayer filter pattern is a repeating 2x2 mosaic pattern of light filters, with green ones at opposite corners and red and blue in the other two positions. The high proportion of green takes advantage of properties of the human visual system, which determines brightness mostly from green and is far more sensitive to brightness than to hue or saturation. Sometimes a 4-color filter pattern is used, often involving two different hues of green. This provides potentially more accurate color, but requires a slightly more complicated interpolation process.
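The repeating 2×2 layout can be expressed as a lookup on row and column parity. This sketch assumes the RGGB arrangement (red top-left, blue bottom-right, green on the other diagonal); real sensors may start the tile at a different corner, such as GRBG or BGGR. The names `BAYER_TILE`, `cfa_color` and `filter_counts` are hypothetical.

```python
from collections import Counter

# One 2x2 tile of an RGGB Bayer layout: green at opposite corners,
# red and blue in the other two positions.
BAYER_TILE = (("R", "G"),
              ("G", "B"))


def cfa_color(row: int, col: int) -> str:
    """Filter color over the photosite at (row, col) for a repeating RGGB tile."""
    return BAYER_TILE[row % 2][col % 2]


def filter_counts(height: int, width: int) -> Counter:
    """Count how many photosites sit under each filter color."""
    return Counter(cfa_color(r, c) for r in range(height) for c in range(width))


counts = filter_counts(4, 6)
print(counts)  # half the sites are green, a quarter each red and blue
```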

The color intensity values not captured for each pixel can be interpolated from the values of adjacent pixels which represent the color being calculated.
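Interpolating missing values from neighbouring pixels of the same color can be sketched as simple neighbour averaging, which for interior pixels is the classic bilinear demosaicing method. This is an illustrative sketch rather than what any particular camera or raw converter does (production algorithms are edge-aware); it assumes an RGGB layout and a mosaic of at least 2×2 photosites, and `demosaic_bilinear` is a hypothetical name.

```python
BAYER_TILE = (("R", "G"),
              ("G", "B"))  # assumed RGGB layout


def demosaic_bilinear(raw):
    """Rebuild an RGB image from an RGGB mosaic by neighbour averaging.

    raw is a list of rows holding one intensity per photosite (at least 2x2).
    A channel measured at a site is kept as is; a missing channel is the mean
    of the same-color samples in the surrounding 3x3 window, which equals
    bilinear interpolation for interior pixels.
    """
    h, w = len(raw), len(raw[0])
    rgb = []
    for r in range(h):
        row = []
        for c in range(w):
            pixel = []
            for channel in "RGB":
                if BAYER_TILE[r % 2][c % 2] == channel:
                    pixel.append(float(raw[r][c]))  # measured directly
                    continue
                samples = [
                    raw[y][x]
                    for y in range(max(r - 1, 0), min(r + 2, h))
                    for x in range(max(c - 1, 0), min(c + 2, w))
                    if BAYER_TILE[y % 2][x % 2] == channel
                ]
                pixel.append(sum(samples) / len(samples))
            row.append(tuple(pixel))
        rgb.append(row)
    return rgb


# Hypothetical flat scene: every point is R=200, G=100, B=50.
true_color = {"R": 200, "G": 100, "B": 50}
raw = [[true_color[BAYER_TILE[r % 2][c % 2]] for c in range(4)] for r in range(4)]
image = demosaic_bilinear(raw)
print(image[1][2])  # (200.0, 100.0, 50.0)
```

On a flat scene every pixel recovers its true color; on fine detail the averaging blurs or mis-colors edges, which is one reason the anti-aliasing filter mentioned earlier is used.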

Sensor size and angle of view

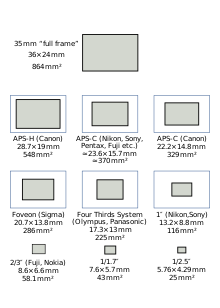

Cameras with digital image sensors that are smaller than the typical 35 mm film size have a smaller field or angle of view when used with a lens of the same focal length. This is because angle of view is a function of both focal length and the sensor or film size used.

The crop factor is relative to the 35mm film format. If a smaller sensor is used, as in most digicams, the field of view is cropped by the sensor to smaller than the 35 mm full-frame format's field of view. This narrowing of the field of view may be described as crop factor, a factor by which a longer focal length lens would be needed to get the same field of view on a 35 mm film camera. Full-frame digital SLRs utilize a sensor of the same size as a frame of 35 mm film.

Common values for field of view crop in DSLRs using active pixel sensors include 1.3x for some Canon (APS-H) sensors, 1.5x for Sony APS-C sensors used by Nikon, Pentax and Konica Minolta and for Fujifilm sensors, 1.6x (APS-C) for most Canon sensors, ~1.7x for Sigma's Foveon sensors and 2x for Kodak and Panasonic 4/3-inch sensors currently used by Olympus and Panasonic. Crop factors for non-SLR consumer compact and bridge cameras are larger, frequently 4x or more.

| Sensor type | Width (mm) | Height (mm) | Area (mm²) |
|---|---|---|---|
| 1/3.6" | 4.00 | 3.00 | 12.0 |
| 1/3.2" | 4.54 | 3.42 | 15.5 |
| 1/3" | 4.80 | 3.60 | 17.3 |
| 1/2.7" | 5.37 | 4.04 | 21.7 |
| 1/2.5" | 5.76 | 4.29 | 24.7 |
| 1/2.3" | 6.16 | 4.62 | 28.5 |
| 1/2" | 6.40 | 4.80 | 30.7 |
| 1/1.8" | 7.18 | 5.32 | 38.2 |
| 1/1.7" | 7.60 | 5.70 | 43.3 |
| 2/3" | 8.80 | 6.60 | 58.1 |
| 1" | 12.8 | 9.6 | 123 |
| 4/3" | 18.0 | 13.5 | 243 |
| APS-C | 25.1 | 16.7 | 419 |
| 35 mm | 36 | 24 | 864 |
| Medium-format digital back | 48 | 36 | 1728 |
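The crop factor and angle-of-view relationships described in this section can be computed from sensor dimensions like those in the table. This minimal sketch assumes the common convention of comparing sensor diagonals against the 36 × 24 mm frame and a rectilinear lens focused at infinity; the function names are illustrative, not from any camera library.

```python
import math

FULL_FRAME_MM = (36.0, 24.0)  # 35 mm film frame: width, height


def diagonal(width_mm: float, height_mm: float) -> float:
    """Sensor diagonal in millimetres."""
    return math.hypot(width_mm, height_mm)


def crop_factor(width_mm: float, height_mm: float) -> float:
    """How many times smaller the sensor diagonal is than the 35 mm frame's."""
    return diagonal(*FULL_FRAME_MM) / diagonal(width_mm, height_mm)


def equivalent_focal_length(focal_mm: float, width_mm: float, height_mm: float) -> float:
    """Focal length giving the same field of view on a 35 mm camera."""
    return focal_mm * crop_factor(width_mm, height_mm)


def angle_of_view(focal_mm: float, width_mm: float, height_mm: float) -> float:
    """Diagonal angle of view in degrees (rectilinear lens focused at infinity)."""
    return math.degrees(2 * math.atan(diagonal(width_mm, height_mm) / (2 * focal_mm)))


four_thirds = (18.0, 13.5)  # the 4/3" row of the table
print(round(crop_factor(*four_thirds), 2))                  # 1.92
print(round(equivalent_focal_length(25, *four_thirds), 1))  # 48.1
print(round(angle_of_view(50, *FULL_FRAME_MM), 1))          # 46.8
print(round(angle_of_view(50, *four_thirds), 1))            # 25.4
```

The same 50 mm lens covers a much narrower angle on the smaller sensor, and the computed factor of about 1.92 is what the text rounds to "2x". Dividing the 35 mm diagonal (about 43.3 mm) by a 6 mm diagonal likewise gives a crop factor of about 7.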

Types of digital cameras

Digital cameras come in a wide range of sizes, prices and capabilities. In addition to general purpose digital cameras, specialized cameras including multispectral imaging equipment and astrographs are used for scientific, military, medical and other special purposes.

Compacts

Compact cameras are intended to be portable (pocketable) and are particularly suitable for casual "snapshots".

Many incorporate a retractable lens assembly that provides optical zoom. In most models, an automatically actuated lens cover protects the lens from the elements. Most ruggedized or water-resistant models do not retract, and most with superzoom capability do not retract fully.

Compact cameras are usually designed to be easy to use. Almost all include an automatic mode, or "auto mode", which makes all camera settings for the user. Some also have manual controls. Compact digital cameras typically contain a small sensor that trades off picture quality for compactness and simplicity; images can usually only be stored using lossy compression (JPEG). Most have a built-in flash, usually of low power, sufficient for nearby subjects. A few high-end compact digital cameras have a hot shoe for connecting an external flash. Live preview is almost always used to frame the photo on an integrated LCD. In addition to taking still photographs, almost all compact cameras can record video.

Compacts often have macro capability and zoom lenses. Their zoom range (up to 30x) is generally enough for candid photography but less than that available on bridge cameras (more than 60x) or with the interchangeable lenses of DSLR cameras, which come at a much higher cost. Autofocus systems in compact digital cameras are generally based on contrast detection, using image data from the live preview feed of the main imager. Some compact digital cameras use a hybrid autofocus system similar to what is commonly available on DSLRs.
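Contrast-detection autofocus can be sketched as "move the lens, measure image contrast, keep the sharpest position". This is a simplified illustration, not any manufacturer's algorithm: it uses a sum of squared neighbour differences as the contrast metric and an exhaustive sweep, whereas a real camera would search more efficiently. `capture` stands in for reading a live-preview frame and, like `fake_capture`, is a hypothetical hook rather than a real camera API.

```python
def sharpness(image):
    """Contrast metric: sum of squared differences between neighbouring pixels.

    A well-focused frame has strong local differences (edges); defocus blurs
    them and lowers the score.
    """
    total = 0
    for row in image:  # horizontal neighbours
        total += sum((b - a) ** 2 for a, b in zip(row, row[1:]))
    for upper, lower in zip(image, image[1:]):  # vertical neighbours
        total += sum((b - a) ** 2 for a, b in zip(upper, lower))
    return total


def contrast_detect_af(capture, positions):
    """Try each lens position and return the one whose preview frame is sharpest."""
    return max(positions, key=lambda p: sharpness(capture(p)))


def fake_capture(position, best=5):
    # Hypothetical preview frame: a vertical edge whose contrast drops as the
    # lens moves away from an assumed best-focus position.
    step = 100 / (1 + abs(position - best))
    return [[0, 0, step, step] for _ in range(3)]


print(contrast_detect_af(fake_capture, range(11)))  # 5
```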

Typically, compact digital cameras incorporate a nearly silent leaf shutter into the lens but play a simulated camera sound for skeuomorphic purposes.

For low cost and small size, these cameras typically use image sensor formats with a diagonal between 6 and 11 mm, corresponding to a crop factor between about 7 and 4. This gives them weaker low-light performance, greater depth of field, generally closer focusing ability, and smaller components than cameras using larger sensors. Some cameras use a larger sensor; at the high end, expensive full-frame compacts such as the Sony Cyber-shot DSC-RX1 have capability near that of a DSLR.

A variety of additional features are available depending on the model of the camera, such as GPS, a compass, a barometer and an altimeter.

Starting in 2011, some compact digital cameras can take 3D still photos. These 3D compact stereo cameras can capture 3D panoramic photos with dual lenses, or even a single lens, for playback on a 3D TV.

In 2013, Sony released two add-on camera models without a display, to be used with a smartphone or tablet and controlled by a mobile application via Wi-Fi.

Rugged compacts

Rugged compact cameras typically include protection against submersion, cold and hot conditions, shock and pressure; the terms used to describe these properties are waterproof, freezeproof, heatproof, shockproof and crushproof, respectively. Nearly all major camera manufacturers have at least one product in this category. Some are waterproof to considerable depths of up to 100 feet (30 m), others to only 10 feet (3 m), and only a few will float. Rugged models often lack some of the features of an ordinary compact camera, but they have video capability and the majority can record sound. Most have image stabilization and a built-in flash. Touchscreen LCDs and GPS do not work under water.

Action cameras

GoPro and other brands offer action cameras, which are rugged, small and easily attached to a helmet, arm, bicycle, etc. Most have a wide-angle, fixed-focus lens and can take still pictures and video, typically with sound.

360-degree cameras

A 360-degree camera can take pictures or video covering 360 degrees, using two back-to-back lenses shooting at the same time. Examples include the Ricoh Theta S, Nikon KeyMission 360 and Samsung Gear 360. The Nico360, launched in 2016, was claimed to be the world's smallest 360-degree camera, measuring 46 × 46 × 28 mm (1.8 × 1.8 × 1.1 in) and priced under $200. With a built-in virtual reality mode, stitching, Wi-Fi and Bluetooth, it can live-stream. Because it is also water-resistant, the Nico360 can be used as an action camera.

There is a trend toward action cameras that can shoot 360 degrees at 4K resolution or higher.

Bridge cameras

Bridge cameras physically resemble DSLRs, and are sometimes called DSLR-shape or DSLR-like. They provide some similar features but, like compacts, they use a fixed lens and a small sensor. Some compact cameras also have PSAM (program, shutter priority, aperture priority and manual) modes. Most use live preview to frame the image. Their usual autofocus uses the same contrast-detect mechanism as compacts, but many bridge cameras have a manual focus mode, and some have a separate focus ring for greater control.

Their large physical size and small sensor allow superzoom and wide apertures. Bridge cameras generally include an image stabilization system to enable longer handheld exposures, sometimes performing better than a DSLR in low-light conditions.

As of 2014, bridge cameras come in two principal classes in terms of sensor size. The first uses the more traditional 1/2.3" sensor (as measured by image sensor format), which gives more flexibility in lens design and allows handholdable zooms from a 20 to 24 mm (35 mm equivalent) wide angle all the way up to over 1000 mm supertelephoto. The second uses a 1" sensor, which allows better image quality, particularly in low light (higher ISO), but puts greater constraints on lens design, resulting in zoom lenses that stop at 200 mm (constant aperture, e.g. Sony RX10) or 400 mm (variable aperture, e.g. Panasonic Lumix FZ1000) equivalent, corresponding to an optical zoom factor of roughly 8 to 16.

Some bridge cameras have a lens thread to attach accessories such as wide-angle or telephoto converters, filters such as UV or circular polarizing filters, and lens hoods. The scene is composed by viewing the display or the electronic viewfinder (EVF). Most have a slightly longer shutter lag than a DSLR. Many of these cameras can store images in a raw format in addition to JPEG. The majority have a built-in flash, but only a few have a hot shoe.

In bright sun, the quality difference between a good compact camera and a digital SLR is minimal but bridge cameras are more portable, cost less and have a greater zoom ability. Thus a bridge camera may better suit outdoor daytime activities, except when seeking professional-quality photos.

Mirrorless interchangeable-lens cameras

In late 2008, a new type of camera emerged: the mirrorless interchangeable-lens camera. It is technically similar to a DSLR but does not require a reflex mirror, a key component of DSLRs. While a typical DSLR has a mirror that reflects light from the lens up to the optical viewfinder, a mirrorless camera has no optical viewfinder. Its image sensor is exposed to light at all times, giving the user a digital preview of the image on either the built-in rear LCD screen or an electronic viewfinder (EVF).

These cameras are simpler and more compact than DSLRs because they lack a reflex mirror system. MILCs, or mirrorless cameras for short, come with various sensor sizes depending on the brand and manufacturer. These include a small 1/2.3 inch sensor, as commonly used in bridge cameras, in the original Pentax Q (more recent Pentax Q versions have a slightly larger 1/1.7 inch sensor); a 1-inch sensor; a Micro Four Thirds sensor; an APS-C sensor, found in the Sony NEX series and α "DSLR-likes", the Fujifilm X series, the Pentax K-01 and the Canon EOS M; and, in some models such as the Sony α7, a full-frame (35 mm) sensor, with the Hasselblad X1D being the first medium-format mirrorless camera. Some MILCs have a separate electronic viewfinder to compensate for the lack of an optical one. In others, the rear display is used as the primary viewfinder, as in compact cameras. One disadvantage of mirrorless cameras compared with a typical DSLR is shorter battery life, due to the energy consumption of the electronic viewfinder, although in some models this can be mitigated by a camera setting.

Olympus and Panasonic released many Micro Four Thirds cameras with interchangeable lenses that are fully compatible with each other without any adapter, while others have proprietary mounts. In 2014, Kodak released its first Micro Four Thirds system camera.

As of March 2014, mirrorless cameras were fast becoming appealing to amateurs and professionals alike, due to their simplicity, compatibility with some DSLR lenses, and features that match most current DSLRs.

Modular cameras

While most digital cameras with interchangeable lenses feature a lens-mount of some kind, there are also a number of modular cameras, where the shutter and sensor are incorporated into the lens module.

The first such modular camera was the Minolta Dimâge V in 1996, followed by the Minolta Dimâge EX 1500 in 1998 and the Minolta MetaFlash 3D 1500 in 1999. In 2009, Ricoh released the Ricoh GXR modular camera.

At CES 2013, Sakar International announced the Polaroid iM1836, an 18 MP camera with a 1-inch sensor and an interchangeable sensor-lens module. An adapter for Micro Four Thirds, Nikon and K-mount lenses was planned to ship with the camera.

There are also a number of add-on camera modules for smartphones, called lens-style cameras (lens cameras or smart lenses). They contain all the essential components of a digital camera inside a module shaped like a DSLR lens, hence the name, but lack any sort of viewfinder and most of the controls of a regular camera. Instead, they connect wirelessly to, and can be mounted on, a smartphone, which serves as their display and operates the camera's various controls.

Lens-style cameras include:

- Sony Cyber-shot QX series "Smart Lens" or "SmartShot" cameras, announced and released in mid-2013 with the Cyber-shot DSC-QX10. In January 2014, a firmware update was announced for the DSC-QX10 and DSC-QX100. In September 2014, Sony announced the Cyber-shot DSC-QX30 and the Alpha ILCE-QX1, the former an ultrazoom with a built-in 30x optical zoom lens, the latter using an interchangeable Sony E-mount instead of a built-in lens.

- Kodak PixPro smart lens camera series, announced in 2014. These include: the 5X optical zoom SL5, 10X optical zoom SL10, and the 25X optical zoom SL25; all featuring 16 MP sensors and 1080p video recording, except for the SL5 which caps at 720p.

- ViviCam IU680 smart lens camera from Vivitar, a Sakar-owned brand, announced in 2014.

- Olympus Air A01 lens camera, announced in 2014 and released in 2015. It is an open platform with an Android operating system and, like the Sony QX1, can be separated into two parts (sensor module and lens); any compatible Micro Four Thirds lens can then be attached to the built-in lens mount of the sensor module.

Digital single-lens reflex cameras (DSLR)

A digital single-lens reflex camera (DSLR) is a camera with a digital sensor that uses a reflex mirror to split or direct light into the viewfinder. The reflex mirror intercepts the light before it reaches the sensor and reflects it into the camera's pentaprism, which makes the image visible through the viewfinder. When the shutter release is fully pressed, the mirror flips up into a horizontal position below the pentaprism, briefly darkening the viewfinder and exposing the sensor to create the photo. The digital image is produced by the sensor, an array of photoreceptors on a microchip capable of recording light values. Many modern DSLRs offer "live view", in which the subject is framed on a digital screen using the image from the sensor.

The sensors used in DSLRs are much larger than those of the other types, typically 18 mm to 36 mm wide (crop factor 2, 1.6 or 1, the last being a full-frame sensor). The larger sensor lets each pixel receive more light; this, combined with the relatively large lenses, provides superior low-light performance. For the same field of view and the same aperture, a larger sensor gives a shallower depth of field. DSLRs accept interchangeable lenses for versatility; a lens is attached to and removed from the camera's lens mount, typically a silver ring on the front of the body. These lenses work in tandem with the mechanics of the DSLR to adjust aperture and focus. Autofocus is performed using sensors in the mirror box; on most modern lenses it can be enabled from the lens itself and is then triggered by the shutter release.
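The claim that a larger sensor gives a shallower depth of field at the same framing and aperture can be checked with the standard thin-lens hyperfocal-distance formulas. This is a sketch under stated assumptions: a hypothetical 50 mm f/2.8 full-frame setup focused at 3 m, a full-frame circle of confusion of 0.03 mm, and the common convention that the circle of confusion shrinks in proportion to the sensor. The function names are illustrative.

```python
import math


def depth_of_field(focal_mm, f_number, subject_mm, coc_mm):
    """Total depth of field in mm, using the thin-lens hyperfocal-distance formulas."""
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    if subject_mm >= hyperfocal:
        return math.inf  # sharp from the near limit out to infinity
    near = subject_mm * (hyperfocal - focal_mm) / (hyperfocal + subject_mm - 2 * focal_mm)
    far = subject_mm * (hyperfocal - focal_mm) / (hyperfocal - subject_mm)
    return far - near


def dof_for_sensor(crop, ff_focal_mm=50.0, f_number=2.8, subject_mm=3000.0, ff_coc_mm=0.03):
    """Same framing and f-number on a sensor with the given crop factor.

    The focal length is divided by the crop factor to keep the field of view,
    and the circle of confusion is scaled the same way (an assumed convention).
    """
    return depth_of_field(ff_focal_mm / crop, f_number, subject_mm, ff_coc_mm / crop)


full_frame = dof_for_sensor(1.0)
crop_2 = dof_for_sensor(2.0)
print(round(full_frame), round(crop_2))  # the crop-2 sensor gets roughly twice the depth
```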

Digital Still Cameras (DSC)

A digital still camera (DSC), such as Sony's DSC models, is a type of camera that does not use a reflex mirror. DSCs are similar to point-and-shoot cameras and are the most common type of camera, owing to their affordable price and their quality.

For examples, see the list of Sony Cyber-shot cameras.

Fixed-mirror DSLT cameras

Cameras with fixed semi-transparent mirrors, also known as DSLT cameras, such as the Sony SLT cameras, are single-lens cameras without the moving reflex mirror of a conventional DSLR. The semi-transparent mirror transmits some of the light to the image sensor and reflects the rest along a second path, which in the Sony SLT cameras leads to a phase-detection autofocus sensor (framing is done with an electronic viewfinder), rather than to a pentaprism and optical viewfinder as with a DSLR's reflex mirror. The total amount of light is not changed; some of it simply travels one path and some the other. As a consequence, less light reaches the sensor, and DSLT exposures differ by about half a stop from those of a DSLR. One advantage of a DSLT camera is that it has none of the blind moments a DSLR user experiences while the mirror moves to send light to the sensor instead of the viewfinder. Because light always travels along both paths, DSLT cameras benefit from continuous autofocus tracking. This is especially useful for burst-mode shooting in low-light conditions and for tracking when recording video.
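The "about half a stop" follows from how stops are defined: one stop is a factor of two in light, so sending only a fraction T of the light to the sensor costs log2(1/T) stops. This minimal sketch assumes a hypothetical transmission of 70%; the actual split depends on the mirror, and `stops_lost` is an illustrative name.

```python
import math


def stops_lost(transmission: float) -> float:
    """Exposure lost, in stops, when only `transmission` of the light reaches the sensor."""
    if not 0 < transmission <= 1:
        raise ValueError("transmission must be in (0, 1]")
    return math.log2(1 / transmission)


# Hypothetical split: 70% of the light passes through the mirror to the sensor.
print(round(stops_lost(0.70), 2))  # 0.51
print(stops_lost(0.5))             # 1.0, an even split costs a full stop
```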

Digital rangefinders

A rangefinder is a device to measure subject distance, with the intent to adjust the focus of a camera's objective lens accordingly (open-loop controller). The rangefinder and lens focusing mechanism may or may not be coupled. In common parlance, the term "rangefinder camera" is interpreted very narrowly to denote manual-focus cameras with a visually read optical rangefinder based on parallax. Most digital cameras achieve focus through analysis of the image captured by the objective lens, and distance estimation, if provided at all, is only a byproduct of the focusing process (closed-loop controller).
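A parallax-based optical rangefinder works by triangulation: two windows a known baseline apart view the subject, and the angle through which one view must be turned to coincide with the other gives the distance. This sketch assumes the subject lies on the axis of the fixed window, so distance = baseline / tan(angle); the 60 mm baseline and the function name are hypothetical.

```python
import math


def distance_from_parallax(baseline_mm: float, angle_deg: float) -> float:
    """Subject distance by triangulation for a coincidence rangefinder.

    The two windows are baseline_mm apart; angle_deg is how far the movable
    mirror must turn so both images coincide. For a subject on the axis of
    the fixed window, distance = baseline / tan(angle).
    """
    return baseline_mm / math.tan(math.radians(angle_deg))


baseline = 60.0  # hypothetical window separation in mm
for subject_mm in (1000.0, 3000.0, 30000.0):
    angle = math.degrees(math.atan(baseline / subject_mm))  # angle the mirror must turn
    print(subject_mm, round(angle, 3), round(distance_from_parallax(baseline, angle)))
```

The angle shrinks roughly in proportion to 1/distance, which is why a rangefinder's accuracy falls off for distant subjects and why a longer baseline helps.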

Line-scan camera systems

A line-scan camera traditionally has a single row of pixel sensors instead of a matrix of them. The lines are continuously fed to a computer that joins them to each other to make an image. This is most commonly done by connecting the camera output to a frame grabber, which resides in a PCI slot of an industrial computer. The frame grabber buffers the image and sometimes provides some processing before delivering it to the computer software for processing. Industrial processes often require height and width measurements performed by digital line-scan systems.

Multiple rows of sensors may be used to make colored images, or to increase sensitivity by TDI (time delay and integration).
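Line assembly and TDI can be illustrated with a small clock-by-clock simulation. This is an idealized sketch, assuming the object advances exactly one sensor row per clock and ignoring noise; `tdi_scan` is a hypothetical name, not a frame-grabber API. With `stages=1` it reduces to an ordinary line-scan camera that outputs each line as captured; with more stages, each output line is the sum of that scene line seen by every row.

```python
def tdi_scan(scene, stages):
    """Simulate a TDI line-scan sensor with `stages` rows over a moving object.

    At clock t, sensor row k views scene line t - k. Each row adds what it sees
    to its charge packet, then all packets shift one row along with the object;
    the packet leaving the last row is read out as one finished image line.
    """
    width = len(scene[0])
    packets = [[0.0] * width for _ in range(stages)]  # charge held in each row
    image = []
    for t in range(len(scene) + stages - 1):
        for k in range(stages):
            j = t - k  # scene line currently under row k
            if 0 <= j < len(scene):
                packets[k] = [p + v for p, v in zip(packets[k], scene[j])]
        if t >= stages - 1:
            image.append(packets[-1])  # exposed by every stage: read it out
        # Shift packets one row in the direction of motion; row 0 starts empty.
        packets = [[0.0] * width] + packets[:-1]
    return image


scene = [[1, 2], [3, 4], [5, 6]]
print(tdi_scan(scene, 1))  # [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
print(tdi_scan(scene, 4))  # [[4.0, 8.0], [12.0, 16.0], [20.0, 24.0]]
```

Summing N stages multiplies the signal by N, which is how TDI increases sensitivity for fast-moving or dimly lit objects.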

Many industrial applications require a wide field of view. Maintaining consistent lighting over large 2D areas is traditionally quite difficult. With a line-scan camera, all that is necessary is to provide even illumination across the “line” currently being viewed by the camera. This makes it possible to take sharp pictures of objects that pass the camera at high speed.

Such cameras are also commonly used to make photo finishes, to determine the winner when multiple competitors cross the finishing line at nearly the same time. They can also be used as industrial instruments for analyzing fast processes.

Line-scan cameras are also extensively used in imaging from satellites (see push broom scanner). In this case the row of sensors is perpendicular to the direction of satellite motion. Line-scan cameras are widely used in scanners. In this case, the camera moves horizontally.

Stand-alone cameras

Stand-alone cameras can be used as remote cameras. One model weighs 2.31 ounces (65.5 g), has a periscope shape and an IPx7 water- and dust-resistance rating, which can be raised to IPx8 by using a cap. It has no viewfinder or LCD. The lens is a fixed-focus 146-degree wide-angle or standard lens. It can have a microphone and speaker, and it can take photos and video. When it is used as a remote camera, an Android or iOS phone app is needed to send live video, change settings, take photos or use time lapse.

Superzoom cameras

Digital superzoom cameras are digital cameras that can zoom in very far. These superzoom cameras are suitable for people who are nearsighted.

Sony's HX series consists of superzoom cameras such as the HX20V, the HX90V and the newest, the HX99. HX stands for HyperXoom.

Light-field camera

This type of digital camera captures information about the light field emanating from a scene; that is, the intensity of light in a scene, and also the direction that the light rays are traveling in space. This contrasts with a conventional digital camera, which records only light intensity.

Integration into other devices

Many devices have a built-in digital camera, including, for example, smartphones, mobile phones, PDAs and laptop computers. Built-in cameras generally store the images in the JPEG file format.

Mobile phones incorporating digital cameras were introduced in Japan in 2000 by J-Phone. In 2003 camera phones outsold stand-alone digital cameras, and in 2006 they outsold film and digital stand-alone cameras combined. Five billion camera phones were sold in five years, and by 2007 more than half of the installed base of all mobile phones were camera phones. Sales of separate cameras peaked in 2008.

Notable digital camera manufacturers

Many manufacturers lead in the production of digital cameras (commonly DSLRs). Each brand has its own mission statement that distinguishes it from the others beyond the physical technology it produces. While most manufacturers share modern features across their camera lines, some specialize in particular details, either in the physical camera or in the system and image quality.

Market trends

Sales of traditional digital cameras have declined due to the increasing use of smartphones for casual photography, which also enable easier manipulation and sharing of photos through apps and web-based services. "Bridge cameras", in contrast, have held their ground with functionality that most smartphone cameras lack, such as optical zoom and other advanced features. DSLRs have also lost ground to mirrorless interchangeable-lens cameras (MILCs), which offer the same sensor size in a smaller camera. A few expensive models use a full-frame sensor, just like professional DSLRs.

In response to the convenience and flexibility of smartphone cameras, some manufacturers produced "smart" digital cameras that combine features of traditional cameras with those of a smartphone. In 2012, Nikon and Samsung released the Coolpix S800c and Galaxy Camera, the first two digital cameras to run the Android operating system. Since this software platform is used in many smartphones, they can integrate with some of the same services (such as e-mail attachments, social networks and photo sharing sites) that smartphones do and use other Android-compatible software.

In an inversion, some phone makers have introduced smartphones with cameras designed to resemble traditional digital cameras. Nokia released the 808 PureView and Lumia 1020 in 2012 and 2013; the two devices run the Symbian and Windows Phone operating systems respectively, and both include a 41-megapixel camera (along with a camera grip attachment for the latter). Similarly, Samsung introduced the Galaxy S4 Zoom, with a 16-megapixel camera and 10x optical zoom, combining traits of the Galaxy S4 Mini with those of the Galaxy Camera. The Panasonic Lumix DMC-CM1 is an Android 4.4 KitKat smartphone with a 20 MP 1" sensor, at the time the largest sensor fitted to a smartphone, and a fixed Leica lens equivalent to 28 mm at f/2.8; it can capture RAW images and 4K video and is 21 mm thick. In 2018, the Huawei P20 Pro, running Android 8.1 Oreo, placed three Leica lenses on the back of the phone: a 40 MP 1/1.7" RGB sensor behind the first, a 20 MP 1/2.7" monochrome sensor behind the second, and an 8 MP 1/4" RGB sensor with 3x optical zoom behind the third. Combining the first and second cameras produces bokeh images with a higher dynamic range, while combining the high-resolution first camera with the optical zoom gives up to 5x digital zoom without loss of quality by reducing the image size to 8 MP.
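Reducing a high-resolution image to a smaller output is essentially a crop, and the zoom it buys follows from simple geometry: pixel count scales with area, so the linear (field-of-view) zoom is the square root of the ratio of pixel counts. The sketch below, with a hypothetical helper `crop_zoom_factor`, models only that crop step; how the P20 Pro actually merges the crop with its 3x telephoto to reach 5x is not described here and is not modeled.

```python
import math

def crop_zoom_factor(full_mp: float, cropped_mp: float) -> float:
    """Linear zoom gained by cropping a full_mp image down to cropped_mp.

    Pixel count scales with area, so the linear (field-of-view) factor
    is the square root of the ratio of the two pixel counts.
    """
    if cropped_mp <= 0 or cropped_mp > full_mp:
        raise ValueError("cropped size must be positive and no larger than the full image")
    return math.sqrt(full_mp / cropped_mp)

# A 40 MP main sensor cropped to an 8 MP output
print(round(crop_zoom_factor(40, 8), 2))  # 2.24
```

Cropping alone therefore gives roughly 2.2x from the 40 MP sensor, which is why reaching a larger lossless zoom also relies on the separate optical-zoom lens.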

Light-field cameras were introduced in 2013 with one consumer product and several professional ones.

After a big dip in sales in 2012, consumer digital camera sales declined again in 2013, by 36 percent. In 2011, compact digital cameras sold 10 million units per month; in 2013, sales fell to about 4 million per month. DSLR and MILC sales also declined in 2013, by 10–15%, after almost ten years of double-digit growth. Worldwide unit sales of digital cameras declined continuously from 148 million in 2011 to 58 million in 2015, and continued to fall in the following years.
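The figures above can be turned into comparable rates. The sketch below uses two illustrative helpers (names are our own): a simple percentage change for the compact-camera monthly figures, and a compound annual rate for the 2011 to 2015 worldwide totals, which spreads the four-year drop evenly across years.

```python
def percent_change(old: float, new: float) -> float:
    """Percentage change from old to new (negative means a decline)."""
    return (new - old) / old * 100

def annual_rate(old: float, new: float, years: int) -> float:
    """Compound annual growth rate in percent over the given number of years."""
    return ((new / old) ** (1 / years) - 1) * 100

# Compact cameras: about 10 million units/month in 2011, about 4 million in 2013
print(f"{percent_change(10, 4):.0f}%")  # -60%
# Worldwide units: 148 million (2011) to 58 million (2015), four years apart
print(f"{annual_rate(148, 58, 4):.1f}% per year")  # -20.9% per year
```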

Film camera sales peaked at about 37 million units in 1997, while digital camera sales began in 1989. By 2008 the film camera market had died, and digital camera sales peaked at 121 million units in 2010. By 2002, cell phones with an integrated camera had been introduced, and by 2003 they were selling 80 million units per year. By 2011, camera phones were selling hundreds of millions of units per year, contributing to the decline in digital camera sales. In 2015, digital camera sales were 35 million units, less than a third of their peak and slightly below the peak sales of film cameras.

Connectivity

Transferring photos

Many digital cameras can connect directly to a computer to transfer data:

- Early cameras used the PC serial port. USB is now the most widely used method (most cameras appear to the computer as USB mass storage devices), though some have a FireWire port. Some cameras use USB Picture Transfer Protocol (PTP) mode instead of USB Mass Storage Class (MSC); some offer both modes.

- Other cameras, such as the Kodak EasyShare One, use wireless connections via Bluetooth or IEEE 802.11 Wi-Fi. Memory cards with integrated Wi-Fi (SDHC, SDXC) can transmit stored images, video and other files to computers or smartphones. Mobile operating systems such as Android allow images to be automatically uploaded, backed up or shared over Wi-Fi to photo sharing and cloud services.

- Cameras with integrated Wi-Fi or dedicated Wi-Fi adapters usually also allow the camera to be controlled from computer or smartphone apps (tethering), including shutter release and exposure settings, in addition to transferring media.

- Camera phones and some high-end stand-alone digital cameras also use cellular networks to share images. The most common standard on cellular networks is the Multimedia Messaging Service (MMS), commonly called "picture messaging". A second method, on smartphones, is to send a picture as an email attachment. Many older camera phones, however, do not support email.
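The USB PTP mode mentioned in the list above exchanges small binary "containers" rather than exposing a file system. The sketch below builds a PTP command container as we understand the layout from the PTP standard (ISO 15740) and the USB Still Image Capture Device class: a little-endian 12-byte header (length, container type, operation code, transaction ID) followed by up to five 32-bit parameters. It only produces bytes and does not talk to a device; the helper name is hypothetical, and the listed codes are a small subset taken on that assumption.

```python
import struct

# Container type and operation codes as commonly documented for PTP over USB.
# Only a tiny subset is listed here; treat them as assumptions to verify.
CONTAINER_COMMAND = 0x0001
OP_GET_DEVICE_INFO = 0x1001
OP_OPEN_SESSION = 0x1002

def ptp_command(code: int, transaction_id: int, *params: int) -> bytes:
    """Build a PTP command container: 12-byte header plus up to five uint32 parameters."""
    if len(params) > 5:
        raise ValueError("a PTP command carries at most five parameters")
    length = 12 + 4 * len(params)
    # "<IHHI": little-endian uint32 length, uint16 type, uint16 code, uint32 transaction ID
    header = struct.pack("<IHHI", length, CONTAINER_COMMAND, code, transaction_id)
    return header + struct.pack(f"<{len(params)}I", *params)

# OpenSession with session ID 1, sent as transaction 0
print(ptp_command(OP_OPEN_SESSION, 0, 1).hex())  # 10000000010002100000000001000000
```

In Mass Storage mode none of this is needed, because the operating system simply mounts the card; PTP instead lets the camera mediate every object transfer.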

A common alternative is a card reader, which may be able to read several types of storage media and transfer data to the computer at high speed. Using a card reader also avoids draining the camera battery during the download. An external card reader allows convenient direct access to the images on a collection of storage media, but if only one storage card is in use, moving it back and forth between the camera and the reader can be inconvenient. Many computers have a built-in card reader, at least for SD cards.

Printing photos

Many modern cameras support the PictBridge standard, which allows them to send data directly to a PictBridge-capable printer without the need for a computer.

Wireless connectivity can also provide for printing photos without a cable connection.

An instant-print camera is a digital camera with a built-in printer. This gives it functionality similar to an instant camera, which uses instant film to quickly produce a physical photograph. Such non-digital cameras were popularized by Polaroid with the SX-70 in 1972.

Displaying photos

Many digital cameras include a video output port. Usually S-Video, it sends a standard-definition video signal to a television, allowing the user to show one picture at a time. Buttons or menus on the camera let the user select a photo, advance from one to the next, or automatically send a "slide show" to the TV.

HDMI has been adopted by many high-end digital camera makers to show photos at their full resolution on an HDTV.

In January 2008, Silicon Image announced a new technology for sending video from mobile devices to a television in digital form. The technology, Mobile High-Definition Link (MHL), sends pictures as a video stream at up to 1080p resolution and is compatible with HDMI.

Some DVD recorders and television sets can read memory cards used in cameras; alternatively several types of flash card readers have TV output capability.

Weather-sealing and waterproofing

Cameras can be equipped with varying degrees of environmental sealing to protect against splashing water, moisture (humidity and fog), dust and sand, or they can be fully waterproof to a certain depth and for a certain duration. Waterproof cameras are one approach to underwater photography, the other being waterproof housings. Many waterproof digital cameras are also shockproof and resistant to low temperatures.

Some waterproof cameras can be fitted with a waterproof housing to increase the operational depth range. The Olympus 'Tough' range of compact cameras is an example.

Modes

Many digital cameras have preset modes for different applications. Within the constraints of correct exposure, various parameters can be changed, including exposure, aperture, focusing, light metering, white balance, and equivalent sensitivity. For example, a portrait mode might use a wider aperture to render the background out of focus, and would seek out and focus on a human face rather than other image content.
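"Within the constraints of correct exposure" means that opening the aperture must be balanced elsewhere. At a fixed sensitivity, the light reaching the sensor scales with t / N² (shutter time over f-number squared), so a mode that widens the aperture has to shorten the shutter time by the square of the f-number ratio. A minimal sketch with hypothetical helper names, assuming ISO stays fixed and ignoring that marked f-numbers are rounded:

```python
import math

def equivalent_shutter(t_old: float, n_old: float, n_new: float) -> float:
    """Shutter time giving the same exposure after changing the f-number.

    Light reaching the sensor scales with t / N**2, so keeping that ratio
    constant gives t_new = t_old * (n_new / n_old) ** 2.
    """
    return t_old * (n_new / n_old) ** 2

def exposure_value(n: float, t: float) -> float:
    """EV = log2(N^2 / t); equal EVs mean equal exposure at a fixed ISO."""
    return math.log2(n * n / t)

# A portrait mode opening up from f/8 at 1/125 s to f/2.8
t = equivalent_shutter(1 / 125, 8, 2.8)
print(f"1/{1 / t:.0f} s")  # 1/1020 s
```

A real camera would then round the result to its nearest available shutter step (here, likely 1/1000 s).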

Few cameras are equipped with a voice note (audio-only) recording feature.

Scene modes

Vendors implement a variety of scene modes in camera firmware for various purposes, such as a "landscape mode", which prevents focusing on rain-covered or stained window glass such as a windshield, and a "sports mode", which reduces motion blur of moving subjects by shortening the exposure time with the help of increased light sensitivity. Some firmware can select a suitable scene mode automatically using artificial intelligence.
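The sports-mode trade-off can be made concrete. Motion blur in pixels is roughly the subject's speed across the image multiplied by the exposure time, and shortening the exposure by some factor requires raising the sensitivity by the same factor to keep the same exposure. The sketch below is an illustrative model only: the pixel-speed input, the blur limit and the function name are assumptions, not how any particular firmware works, and real cameras would round to standard ISO steps.

```python
def sports_mode_settings(subject_speed_px_s: float, max_blur_px: float,
                         base_time_s: float, base_iso: int) -> tuple[float, int]:
    """Pick a shutter time that limits motion blur, then raise ISO to compensate.

    Blur in pixels is roughly speed * exposure time, so the longest acceptable
    time is max_blur / speed. Shortening the time by a factor k needs the
    sensitivity raised by the same factor to keep the same exposure.
    """
    time_s = min(base_time_s, max_blur_px / subject_speed_px_s)
    iso = round(base_iso * base_time_s / time_s)
    return time_s, iso

# Subject crossing the frame at 2000 px/s, at most 2 px of blur allowed,
# starting from a metered 1/60 s at ISO 100
t, iso = sports_mode_settings(2000, 2, 1 / 60, 100)
print(f"1/{1 / t:.0f} s at ISO {iso}")  # 1/1000 s at ISO 1667
```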

Image data storage

Many camera phones and most stand-alone digital cameras store image data in flash memory cards or other removable media. Most stand-alone cameras use the SD format, while a few use CompactFlash or other types. In January 2012, a faster XQD card format was announced. As of early 2014, some high-end cameras have two hot-swappable memory slots, so photographers can swap one memory card while the camera is on. Each memory slot can accept either a CompactFlash or an SD card. All new Sony cameras also have two memory slots, one for its Memory Stick and one for SD cards, but these are not hot-swappable.

The firmware continuously estimates how many more photos can be stored before the card is full and shows this count in the viewfinder, so the user is prepared in time to hot-swap the memory card or offload files.
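The remaining-shot count is an estimate because compressed file sizes vary with scene content. One plausible approach, sketched below as an assumption rather than any vendor's documented method, divides the free space by the average size of recently written files after holding back a safety margin; real firmware may instead use fixed per-quality size tables.

```python
def remaining_shots(free_bytes: int, recent_file_sizes: list[int],
                    safety_margin: float = 0.1) -> int:
    """Estimate how many more photos fit in the free space.

    Uses the average size of recently written files, reduced by a safety
    margin because compressed JPEG sizes vary with scene content.
    """
    if not recent_file_sizes:
        raise ValueError("need at least one file size to estimate from")
    average = sum(recent_file_sizes) / len(recent_file_sizes)
    usable = free_bytes * (1 - safety_margin)
    return int(usable // average)

# 2 GB free; the last few JPEGs were 5-7 MB each
print(remaining_shots(2_000_000_000, [5_000_000, 6_000_000, 7_000_000]))  # 300
```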

A few cameras used other removable storage such as Microdrives (very small hard disk drives), CD singles (185 MB), and 3.5" floppy disks (e.g. Sony Mavica). Other unusual formats include:

- Onboard (internal) flash memory: used in cheap cameras and in cameras secondary to the device's main use (such as camera phones). Some have small capacities, such as 100 megabytes or less, intended as buffer storage for uninterrupted operation during a memory card hot swap.

- SuperDisk (LS120): used in two Panasonic digital cameras, the PV-SD4090 and PV-SD5000, which allowed them to use both SuperDisk and 3.5" floppy disks

- PC Card hard drives: early professional cameras (discontinued)

- Thermal printer: known in only one camera model, which printed images immediately rather than storing them

- Zink technology: printing images immediately rather than storing them


Most manufacturers of digital cameras do not provide drivers and software to allow their cameras to work with Linux or other free software. Still, many cameras use the standard USB mass storage and/or Media Transfer Protocol, and are thus widely supported. Other cameras are supported by the gPhoto project, and many computers are equipped with a memory card reader.

File formats

The Joint Photographic Experts Group standard (JPEG) is the most common file format for storing image data. Other file types include Tagged Image File Format (TIFF) and various raw image formats.
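Software that imports images from a card often identifies these formats by their first few bytes (their "magic number") rather than by file extension. A minimal sketch, assuming the well-known signatures: JPEG files begin with the bytes FF D8 FF, and TIFF files begin with "II" plus 42 (little-endian) or "MM" plus 42 (big-endian). Several raw formats, DNG among them, reuse the TIFF structure, so a TIFF signature alone cannot distinguish them. The function name is illustrative.

```python
def sniff_image_format(header: bytes) -> str:
    """Guess an image file's format from its first bytes (its "magic number")."""
    if header.startswith(b"\xff\xd8\xff"):
        return "JPEG"
    if header.startswith(b"II*\x00") or header.startswith(b"MM\x00*"):
        # Little-endian ("II") or big-endian ("MM") TIFF. DNG and several
        # vendor raw formats reuse this TIFF structure, so this is ambiguous.
        return "TIFF (or TIFF-based raw)"
    return "unknown"

# Typical use: read the first few bytes of a file
# with open(path, "rb") as f:
#     print(sniff_image_format(f.read(4)))
```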

Many cameras, especially high-end ones, support a raw image format. A raw image is the unprocessed set of pixel data directly from the camera's sensor, often saved in a proprietary format. Adobe Systems has released the DNG format, a royalty-free raw image format used by at least 10 camera manufacturers.

Raw files initially had to be processed in specialized image editing programs, but over time many mainstream editing programs, such as Google's Picasa, have added support for raw images. Rendering standard images from raw sensor data allows more flexibility in making major adjustments without losing image quality or retaking the picture.
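One reason raw data tolerates larger adjustments is its greater bit depth. The simplified illustration below brightens the darkest quarter of the tonal range by two stops and counts how many distinct 8-bit display levels survive. It assumes 12-bit raw data (a common depth, but an assumption here) and a purely linear pipeline, ignoring gamma curves and demosaicing, so it shows the principle rather than a real raw converter.

```python
def distinct_levels_after_push(bits: int, stops: int, fraction: float = 0.25) -> int:
    """Count distinct 8-bit output levels after brightening the darkest part of the range.

    Takes every input code in the bottom `fraction` of a `bits`-deep range,
    multiplies by 2**stops (one stop doubles the signal), clips to full scale,
    and reduces the result to 8 bits for display.
    """
    full_scale = 2 ** bits - 1
    top = int(full_scale * fraction)
    out = set()
    for code in range(top + 1):
        pushed = min(code * 2 ** stops, full_scale)
        out.add(pushed * 255 // full_scale)
    return len(out)

# 8-bit (JPEG-like) data versus 12-bit (raw-like) data, pushed by two stops
print(distinct_levels_after_push(8, 2), distinct_levels_after_push(12, 2))  # 64 255
```

The 8-bit source leaves gaps between output levels (visible as banding), while the 12-bit source still fills almost every level.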

Movie formats include AVI, DV, MPEG, MOV (often containing Motion JPEG), WMV, and ASF (the container format used by WMV). Recent formats include MP4, which is based on the QuickTime format and uses newer compression algorithms to allow longer recording times in the same space.

Other formats used in cameras (but not for pictures) include the Design rule for Camera File system (DCF), a JEITA specification used in almost all cameras since 1998, which defines an internal file structure and naming. Also used is the Digital Print Order Format (DPOF), which specifies which images are to be printed and how many copies of each. DCF defines a logical file system with 8.3 filenames and makes the use of FAT12, FAT16, FAT32 or exFAT mandatory for its physical layer in order to maximize platform interoperability.

Most cameras include Exif data that provides metadata about the picture, such as aperture, exposure time, focal length, and the date and time taken. Some cameras can also tag the location.
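Location tags in Exif store latitude and longitude as three numbers (degrees, minutes, seconds) plus a reference letter (N/S or E/W), whereas mapping services usually expect signed decimal degrees. The sketch below performs that conversion; it assumes the three rational values have already been read from the file as numbers, and the function name is illustrative.

```python
def gps_to_decimal(dms: tuple[float, float, float], ref: str) -> float:
    """Convert an Exif-style GPS coordinate to signed decimal degrees.

    Exif stores latitude and longitude as three values (degrees, minutes,
    seconds) plus a reference letter; south and west become negative.
    """
    degrees, minutes, seconds = dms
    value = degrees + minutes / 60 + seconds / 3600
    return -value if ref.upper() in ("S", "W") else value

print(round(gps_to_decimal((51, 30, 26.0), "N"), 4))  # 51.5072
```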

Directory and file structure

In order to guarantee interoperability, DCF specifies the file system for image and sound files to be used on formatted DCF media (like removable or non-removable memory) as FAT12, FAT16, FAT32, or exFAT. Media with a capacity of more than 2 GB must be formatted using FAT32 or exFAT.

The file system in a digital camera contains a DCIM (Digital Camera IMages) directory, which can contain multiple subdirectories with names such as "123ABCDE", consisting of a unique directory number (in the range 100 to 999) and five alphanumeric characters, which may be freely chosen and often refer to the camera maker. These directories contain files with names such as "ABCD1234.JPG", consisting of four alphanumeric characters (often "100_", "DSC0", "DSCF", "IMG_", "MOV_", or "P000") followed by a four-digit number. How firmware handles directories with duplicate numbers, possibly created by the user, varies among cameras.
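The naming rules above translate directly into patterns. The sketch below checks DCF-style directory and file names with regular expressions, under these assumptions: the free characters are uppercase letters, digits or underscore, the file number runs from 0001 to 9999, and extensions have three characters (in line with 8.3 filenames). The helper names are hypothetical.

```python
import re

# Directory: three-digit number 100-999, then five free characters.
DCF_DIR = re.compile(r"^([1-9][0-9]{2})([A-Z0-9_]{5})$")
# File: four free characters, a four-digit number, then a three-character extension.
DCF_FILE = re.compile(r"^([A-Z0-9_]{4})([0-9]{4})\.([A-Z0-9]{3})$")

def parse_dcf_dir(name: str):
    """Return (directory number, free characters), or None if not DCF-style."""
    m = DCF_DIR.match(name)
    return (int(m.group(1)), m.group(2)) if m else None

def parse_dcf_file(name: str):
    """Return (prefix, file number, extension), or None if not DCF-style."""
    m = DCF_FILE.match(name)
    if not m or int(m.group(2)) == 0:  # 0000 is not a valid file number
        return None
    return m.group(1), int(m.group(2)), m.group(3)

print(parse_dcf_dir("100CANON"), parse_dcf_file("IMG_0042.JPG"))
```

A camera choosing the next file name would take the highest existing number in the current directory and add one, moving to a new directory once 9999 is reached.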

DCF 2.0 adds support for DCF optional files recorded in an optional color space (that is, Adobe RGB rather than sRGB). Such files must be indicated by a leading "_" (as in "_DSC" instead of "100_" or "DSC0").

Thumbnail files

To load many images quickly and efficiently in a miniature view, and to retain metadata, some vendors' firmware generates accompanying low-resolution thumbnail files for videos and raw photos. On Canon cameras, for example, these files end with .THM. JPEG files do not need such a companion, since the format can already embed a thumbnail image.
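A browser or import tool has to match each thumbnail file to the video or raw photo it belongs to. The sketch below assumes the companion shares the media file's base name and differs only in its .THM extension (for example, a hypothetical MVI_0001.MOV alongside MVI_0001.THM); the function name is illustrative.

```python
from pathlib import PurePath

def pair_thumbnails(filenames: list[str]) -> dict[str, str]:
    """Map each media file to a sidecar thumbnail sharing its base name.

    Assumes the thumbnail differs from its media file only by a .THM extension,
    e.g. a hypothetical MVI_0001.MOV accompanied by MVI_0001.THM.
    """
    thumbs = {}
    media = []
    for name in filenames:
        p = PurePath(name)
        if p.suffix.upper() == ".THM":
            thumbs[str(p.with_suffix(""))] = name
        else:
            media.append(p)
    return {str(p): thumbs[str(p.with_suffix(""))]
            for p in media if str(p.with_suffix("")) in thumbs}

print(pair_thumbnails(["MVI_0001.MOV", "MVI_0001.THM", "IMG_0002.JPG"]))
```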

Batteries

Digital cameras have become smaller over time, resulting in an ongoing need to develop a battery small enough to fit in the camera and yet able to power it for a reasonable length of time.

Digital cameras use either proprietary or standard consumer batteries. As of March 2014, most cameras use proprietary lithium-ion batteries, while some use standard AA batteries, or primarily use a proprietary lithium-ion rechargeable battery pack but have an optional AA battery holder available.

Proprietary

The most common batteries used in digital cameras come in proprietary formats, built to a manufacturer's custom specifications. Almost all proprietary batteries are lithium-ion. In addition to being available from the OEM, aftermarket replacement batteries are commonly available for most camera models.

Standard consumer batteries

Digital cameras that use off-the-shelf batteries are typically designed to accept both single-use and rechargeable batteries, but not both types at the same time. The most common off-the-shelf battery size is AA; CR2, CR-V3 and AAA batteries are also used in some cameras. CR2 and CR-V3 batteries are lithium-based and intended for single use, though rechargeable RCR-V3 lithium-ion batteries are available as an alternative to non-rechargeable CR-V3 batteries.

Some battery grips for DSLRs come with a separate holder to accommodate AA cells as an external power source.

Conversion of film cameras to digital

When digital cameras became common, many photographers asked whether their film cameras could be converted to digital. The answer was not immediately clear, as it differed among models. For the majority of 35 mm film cameras the answer is no: the reworking and cost would be too great, especially as lenses have evolved along with cameras. For most models, a conversion would require removing the back of the camera and replacing it with a custom-built digital unit, to make room for the electronics and for a liquid crystal display to preview images.

Many early professional SLR cameras, such as the Kodak DCS series, were developed from 35 mm film cameras. The technology of the time, however, meant that rather than being digital "backs" the bodies of these cameras were mounted on large, bulky digital units, often bigger than the camera portion itself. These were factory built cameras, however, not aftermarket conversions.

Notable exceptions are the Nikon E2 and Nikon E3, which use additional optics to reduce the 35 mm format to a 2/3-inch CCD sensor.

A few 35 mm cameras have had digital camera backs made by their manufacturer, Leica being a notable example. Medium format and large format cameras (those using film stock larger than 35 mm) have low production volumes, and typical digital backs for them cost over $10,000. These cameras also tend to be highly modular, with handgrips, film backs, winders, and lenses available separately to fit various needs.

The very large sensors these backs use lead to enormous image sizes. For example, Phase One's 39 MP P45 image back creates a single TIFF image of up to 224.6 MB, and even greater pixel counts are available. Medium format digital cameras such as this are geared more towards studio and portrait photography than their smaller DSLR counterparts; their maximum ISO speed in particular tends to be 400, versus 6400 for some DSLR cameras. (The Canon EOS-1D Mark IV and Nikon D3S reach ISO 12800, expandable to ISO 102400, and the Canon EOS-1D X reaches ISO 204800.)
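The size of an uncompressed image follows from pixels × channels × bytes per channel. The sketch below assumes an RGB TIFF with 16 bits per channel and ignores headers and metadata; the back's actual TIFF layout is not stated here, so the result is only a plausibility check. It lands in the same range as the figure quoted above.

```python
def uncompressed_size_bytes(megapixels: float, channels: int = 3,
                            bits_per_channel: int = 16) -> int:
    """Approximate size of an uncompressed image, ignoring file headers and metadata."""
    return round(megapixels * 1_000_000 * channels * bits_per_channel / 8)

size = uncompressed_size_bytes(39)
print(f"{size / 1e6:.1f} MB ({size / 2**20:.1f} MiB)")  # 234.0 MB (223.2 MiB)
```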

Digital camera backs

In the industrial and high-end professional photography market, some camera systems use modular (removable) image sensors. For example, some medium format SLR cameras, such as the Mamiya 645D series, allow installation of either a digital camera back or a traditional photographic film back.

- Area array
  - CCD
  - CMOS

- Linear array
  - CCD (monochrome)
  - 3-strip CCD with color filters

Linear array cameras are also called scan backs.

- Single-shot

- Multi-shot (usually three-shot)

Most earlier digital camera backs used linear array sensors that moved vertically to digitize the image, and many of them captured only grayscale images. The relatively long exposure times, in the range of seconds or even minutes, generally limit scan backs to studio applications, where all aspects of the photographic scene are under the photographer's control.

Some other camera backs use CCD arrays similar to typical cameras. These are called single-shot backs.

Since it is much easier to manufacture a high-quality linear CCD array with only thousands of pixels than a CCD matrix with millions, very high resolution linear CCD camera backs were available much earlier than their CCD matrix counterparts. For example, camera backs with a horizontal resolution of over 7,000 pixels could be bought, albeit at high cost, in the mid-1990s, whereas as of 2004 it was still difficult to buy a CCD matrix camera of comparable resolution. As of 2005, rotating line cameras with about 10,000 color pixels in their sensor line could capture about 120,000 lines during one full 360-degree rotation, creating a single digital image of 1,200 megapixels.
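The 1,200-megapixel figure is simply the number of pixels in the sensor line multiplied by the number of lines recorded during the sweep. A minimal sketch using the approximate figures above (the helper name is illustrative):

```python
def line_scan_megapixels(pixels_per_line: int, lines: int) -> float:
    """Image size in megapixels for a sensor line swept across `lines` positions."""
    return pixels_per_line * lines / 1_000_000

print(line_scan_megapixels(10_000, 120_000))  # 1200.0
# Angular step between successive lines in a full 360-degree rotation
print(360 / 120_000)  # 0.003
```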

Most modern digital camera backs use CCD or CMOS matrix sensors. A matrix sensor captures the entire image frame at once, instead of scanning the frame area incrementally during a prolonged exposure. For example, in 2008 Phase One produced a 39-megapixel digital camera back with a 49.1 x 36.8 mm CCD. This CCD array is a little smaller than a frame of 120 film and much larger than a 35 mm frame (36 x 24 mm). In comparison, consumer digital cameras use sensors ranging from 36 x 24 mm (full frame, on high-end consumer DSLRs) to 1.28 x 0.96 mm (CMOS sensors on camera phones).
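Sensor sizes like these are often compared with the 36 x 24 mm frame through a "crop factor", conventionally the ratio of the 35 mm frame's diagonal to the sensor's diagonal. That convention is not used in the text above and is introduced here as an assumption. The sketch computes it, together with the area ratio, for the two extremes mentioned.

```python
import math

FULL_FRAME = (36.0, 24.0)  # 35 mm film frame, in mm

def diagonal(width_mm: float, height_mm: float) -> float:
    """Sensor diagonal in mm."""
    return math.hypot(width_mm, height_mm)

def crop_factor(width_mm: float, height_mm: float) -> float:
    """Ratio of the 35 mm frame diagonal to the sensor diagonal."""
    return diagonal(*FULL_FRAME) / diagonal(width_mm, height_mm)

def area_ratio(width_mm: float, height_mm: float) -> float:
    """Sensor area relative to a 35 mm frame."""
    return (width_mm * height_mm) / (FULL_FRAME[0] * FULL_FRAME[1])

for name, size in [("Phase One back", (49.1, 36.8)), ("camera phone", (1.28, 0.96))]:
    print(f"{name}: crop factor {crop_factor(*size):.2f}, area x{area_ratio(*size):.4f}")
```

A crop factor below 1, as for the Phase One back, means the sensor is larger than a 35 mm frame; the camera-phone sensor's diagonal is about 27 times smaller.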