From Wikipedia, the free encyclopedia

Genetic engineering, also called genetic modification, is the direct manipulation of an organism's genome using biotechnology. New DNA may be inserted in the host genome by first isolating and copying the genetic material of interest using molecular cloning methods to generate a DNA sequence, or by synthesizing the DNA, and then inserting this construct into the host organism. Genes may be removed, or "knocked out", using a nuclease. Gene targeting is a different technique that uses homologous recombination to change an endogenous gene, and can be used to delete a gene, remove exons, add a gene, or introduce point mutations.

An organism that is generated through genetic engineering is considered to be a genetically modified organism (GMO). The first GMOs were bacteria, generated in 1973, and GM mice were generated in 1974. Insulin-producing bacteria were commercialized in 1982, and genetically modified food has been sold since 1994. GloFish, the first GMO designed as a pet, was first sold in the United States in December 2003.[1]

Genetic engineering techniques have been applied in numerous fields including research, agriculture, industrial biotechnology, and medicine. Enzymes used in laundry detergent and medicines such as insulin and human growth hormone are now manufactured in GM cells; experimental GM cell lines and GM animals such as mice or zebrafish are being used for research purposes; and genetically modified crops have been commercialized.

Definition

Genetic engineering alters the genetic make-up of an organism using techniques that remove heritable material or that introduce DNA prepared outside the organism either directly into the host or into a cell that is then fused or hybridized with the host.[4] This involves using recombinant nucleic acid (DNA or RNA) techniques to form new combinations of heritable genetic material followed by the incorporation of that material either indirectly through a vector system or directly through micro-injection, macro-injection and micro-encapsulation techniques.

Genetic engineering does not normally include traditional animal and plant breeding, in vitro fertilisation, induction of polyploidy, mutagenesis and cell fusion techniques that do not use recombinant nucleic acids or a genetically modified organism in the process.[4] However, the European Commission has also defined genetic engineering broadly as including selective breeding and other means of artificial selection.[5] Cloning and stem cell research, although not considered genetic engineering,[6] are closely related, and genetic engineering can be used within them.[7] Synthetic biology is an emerging discipline that takes genetic engineering a step further by introducing artificially synthesized material from raw materials into an organism.[8]

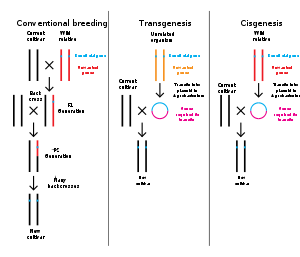

If genetic material from another species is added to the host, the resulting organism is called transgenic. If genetic material from the same species or from a species that can naturally breed with the host is used, the resulting organism is called cisgenic.[9] Genetic engineering can also be used to remove genetic material from the target organism, creating a gene knockout organism.[10] In Europe, genetic modification is synonymous with genetic engineering, while within the United States of America it can also refer to conventional breeding methods.[11][12] The Canadian regulatory system is based on whether a product has novel features, regardless of its method of origin. In other words, a product is regulated as genetically modified if it carries some trait not previously found in the species, whether it was generated using traditional breeding methods (e.g., selective breeding, cell fusion, mutation breeding) or genetic engineering.[13][14][15] Within the scientific community, the term genetic engineering is not commonly used; more specific terms such as transgenic are preferred.

Genetically modified organisms

Plants, animals or microorganisms that have been changed through genetic engineering are termed genetically modified organisms or GMOs.[16] Bacteria were the first organisms to be genetically modified. Plasmid DNA containing new genes can be inserted into the bacterial cell, and the bacteria will then express those genes. These genes can code for medicines or enzymes that process food and other substrates.[17][18] Plants have been modified for insect protection, herbicide resistance, virus resistance, enhanced nutrition, tolerance to environmental pressures and the production of edible vaccines.[19] Most commercialised GMOs are insect-resistant and/or herbicide-tolerant crop plants.[20]

Genetically modified animals have been used for research, as model animals, and for the production of agricultural or pharmaceutical products. They include animals with genes knocked out, with increased susceptibility to disease, with hormones for extra growth, and with the ability to express proteins in their milk.[21]

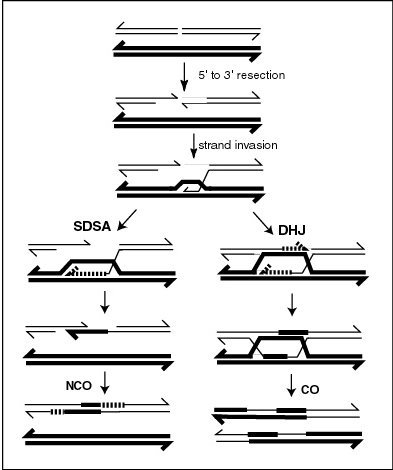

Gene therapy is the genetic engineering of humans by replacing defective human genes with functional copies. This can occur in somatic tissue or germline tissue. If the gene is inserted into the germline tissue, it can be passed down to that person's descendants.[83][84] Gene therapy has been successfully used to treat multiple diseases, including X-linked SCID,[85] chronic lymphocytic leukemia (CLL),[86] and Parkinson's disease.[87] In 2012, Glybera became the first gene therapy treatment to be approved for clinical use in either Europe or the United States after its endorsement by the European Commission.[88][89] There are also ethical concerns should the technology be used not just for treatment, but for enhancement, modification or alteration of a human being's appearance, adaptability, intelligence, character or behavior.[90] The distinction between cure and enhancement can also be difficult to establish.[91] Transhumanists consider the enhancement of humans desirable.

Genetic engineering is an important tool for natural scientists. Genes and other genetic information from a wide range of organisms are transformed into bacteria for storage and modification, creating genetically modified bacteria in the process. Bacteria are cheap, easy to grow, clonal, multiply quickly, relatively easy to transform and can be stored at -80 °C almost indefinitely. Once a gene is isolated it can be stored inside the bacteria providing an unlimited supply for research.

Organisms are genetically engineered to discover the functions of certain genes. This could be the effect on the phenotype of the organism, where the gene is expressed or what other genes it interacts with. These experiments generally involve loss of function, gain of function, tracking and expression.

Bacteria have been engineered to function as sensors by expressing a fluorescent protein under certain environmental conditions.[100]

One of the best-known and most controversial applications of genetic engineering is the creation and use of genetically modified crops or genetically modified organisms, such as genetically modified fish, which are used to produce genetically modified food and materials with diverse uses. There are four main goals in generating genetically modified crops.[102]

One goal, and the first to be realized commercially, is to provide protection from environmental threats, such as cold (in the case of ice-minus bacteria), or pathogens, such as insects or viruses, and/or resistance to herbicides. There are also fungus- and virus-resistant crops developed or in development.[103][104] They have been developed to make the insect and weed management of crops easier and can indirectly increase crop yield.[105]

Another goal in generating GMOs is to modify the quality of produce by, for instance, increasing the nutritional value or providing more industrially useful qualities or quantities.[106] The Amflora potato, for example, produces a more industrially useful blend of starches. Cows have been engineered to produce more protein in their milk to facilitate cheese production.[107] Soybeans and canola have been genetically modified to produce healthier oils.[108][109]

Another goal consists of driving the GMO to produce materials that it does not normally make. One example is "pharming", which uses crops as bioreactors to produce vaccines, drug intermediates, or drugs themselves; the useful product is purified from the harvest and then used in the standard pharmaceutical production process.[110] Cows and goats have been engineered to express drugs and other proteins in their milk, and in 2009 the FDA approved a drug produced in goat milk.[111][112]

Another goal in generating GMOs is to directly improve yield by accelerating growth or by making the organism hardier (for plants, by improving salt, cold or drought tolerance).[106] Some agriculturally important animals have been genetically modified with growth hormones to increase their size.[113]

The genetic engineering of agricultural crops can increase growth rates and resistance to diseases caused by pathogens and parasites.[114] This is beneficial because it can greatly increase food production for the world's growing population while using fewer resources than would otherwise be required. These modified crops would also reduce the use of chemicals, such as fertilizers and pesticides, and therefore decrease the severity and frequency of the damage caused by chemical pollution.[114][115]

Ethical and safety concerns have been raised around the use of genetically modified food.[116] A major safety concern relates to the human health implications of eating genetically modified food, in particular whether toxic or allergic reactions could occur.[117] Gene flow into related non-transgenic crops, off-target effects on beneficial organisms and the impact on biodiversity are important environmental issues.[118] Ethical concerns involve religious issues, corporate control of the food supply, intellectual property rights and the level of labeling needed on genetically modified products.

Genetic engineering has also been used to create novelty items such as lavender-colored carnations,[121] blue roses,[122] and glowing fish.[123][124]

History

Humans have altered the genomes of species for thousands of years through selective breeding, or artificial selection as contrasted with natural selection, and more recently through mutagenesis. Genetic engineering as the direct manipulation of DNA by humans outside of breeding and mutation has only existed since the 1970s. The term "genetic engineering" was coined by Jack Williamson in his science fiction novel Dragon's Island, published in 1951,[22] one year before DNA's role in heredity was confirmed by Alfred Hershey and Martha Chase,[23] and two years before James Watson and Francis Crick showed that the DNA molecule has a double-helix structure.

In 1972 Paul Berg created the first recombinant DNA molecules by combining DNA from the monkey virus SV40 with that of the lambda virus.[24] In 1973 Herbert Boyer and Stanley Cohen created the first transgenic organism by inserting antibiotic resistance genes into the plasmid of an E. coli bacterium.[25][26] A year later Rudolf Jaenisch created a transgenic mouse by introducing foreign DNA into its embryo, making it the world’s first transgenic animal.[27] These achievements led to concerns in the scientific community about potential risks from genetic engineering, which were first discussed in depth at the Asilomar Conference in 1975. One of the main recommendations from this meeting was that government oversight of recombinant DNA research should be established until the technology was deemed safe.[28][29]

In 1976 Genentech, the first genetic engineering company, was founded by Herbert Boyer and Robert Swanson, and a year later the company produced a human protein (somatostatin) in E. coli. Genentech announced the production of genetically engineered human insulin in 1978.[30] In 1980, the U.S. Supreme Court in the Diamond v. Chakrabarty case ruled that genetically altered life could be patented.[31] The insulin produced by bacteria, branded Humulin, was approved for release by the Food and Drug Administration in 1982.[32]

In the 1970s, graduate student Steven Lindow of the University of Wisconsin–Madison, with D.C. Arny and C. Upper, found a bacterium he identified as P. syringae that played a role in ice nucleation, and in 1977 he discovered a mutant ice-minus strain. Later, he successfully created a recombinant ice-minus strain.[33] In 1983, a biotech company, Advanced Genetic Sciences (AGS), applied for U.S. government authorization to perform field tests with the ice-minus strain of P. syringae to protect crops from frost, but environmental groups and protestors delayed the field tests for four years with legal challenges.[34] In 1987, the ice-minus strain of P. syringae became the first genetically modified organism (GMO) to be released into the environment[35] when a strawberry field and a potato field in California were sprayed with it.[36] Both test fields were attacked by activist groups the night before the tests occurred: "The world's first trial site attracted the world's first field trasher".[35]

The first field trials of genetically engineered plants occurred in France and the USA in 1986, when tobacco plants were engineered to be resistant to herbicides.[37] The People’s Republic of China was the first country to commercialize transgenic plants, introducing a virus-resistant tobacco in 1992.[38] In 1994, Calgene attained approval to commercially release the Flavr Savr tomato, a tomato engineered to have a longer shelf life.[39] In 1994, the European Union approved tobacco engineered to be resistant to the herbicide bromoxynil, making it the first genetically engineered crop commercialized in Europe.[40] In 1995, Bt potato was approved as safe by the Environmental Protection Agency, after having been approved by the FDA, making it the first pesticide-producing crop to be approved in the USA.[41] In 2009, 11 transgenic crops were grown commercially in 25 countries, the largest of which by area grown were the USA, Brazil, Argentina, India, Canada, China, Paraguay and South Africa.[42]

In the late 1980s and early 1990s, guidance on assessing the safety of genetically engineered plants and food emerged from organizations including the FAO and WHO.[43][44][45][46]

In 2010, scientists at the J. Craig Venter Institute announced that they had created the first synthetic bacterial genome. The researchers added the new genome to bacterial cells and selected for cells that contained the new genome. To do this, the cells undergo a process called resolution, in which, during bacterial cell division, one new cell receives the original DNA genome of the bacteria, whilst the other receives the new synthetic genome. When the cell carrying the synthetic genome replicates, it uses that genome as its template. The resulting bacterium developed by the researchers, named Synthia, was the world's first synthetic life form.[47][48]

On 19 March 2015, scientists, including an inventor of CRISPR, urged a worldwide moratorium on using gene editing methods to genetically engineer the human genome in a way that can be inherited, writing “scientists should avoid even attempting, in lax jurisdictions, germline genome modification for clinical application in humans” until the full implications “are discussed among scientific and governmental organizations.”[49][50][51][52]

Process

The first step is to choose and isolate the gene that will be inserted into the genetically modified organism. As of 2012, most commercialised GM plants have genes transferred into them that provide protection against insects or tolerance to herbicides.[53] The gene can be isolated using restriction enzymes to cut DNA into fragments and gel electrophoresis to separate them according to length.[54] Polymerase chain reaction (PCR) can also be used to amplify a gene segment, which can then be isolated through gel electrophoresis.[55] If the chosen gene or the donor organism's genome has been well studied, it may be present in a genetic library. If the DNA sequence is known but no copies of the gene are available, it can be artificially synthesized.[56]

The gene to be inserted into the genetically modified organism must be combined with other genetic elements in order for it to work properly. The gene can also be modified at this stage for better expression or effectiveness. As well as the gene to be inserted, most constructs contain a promoter region, a terminator region and a selectable marker gene. The promoter region initiates transcription of the gene and can be used to control the location and level of gene expression, while the terminator region ends transcription. The selectable marker, which in most cases confers antibiotic resistance on the organism it is expressed in, is needed to determine which cells have been transformed with the new gene. The constructs are made using recombinant DNA techniques, such as restriction digests, ligations and molecular cloning.[57] The manipulation of the DNA generally occurs within a plasmid.
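The isolation step described above relies on restriction enzymes cutting DNA at specific recognition sequences, after which gel electrophoresis separates the fragments by length. The sketch below models this under stated simplifications: the sequence is linear and only the top strand is tracked, and EcoRI (which recognizes GAATTC and cuts between the G and the first A) stands in as an illustrative enzyme. The function names `digest` and `gel_order` are hypothetical, and sticky ends on the complementary strand are ignored.

```python
import re

# Illustrative enzyme: EcoRI recognizes GAATTC and cuts between G and AATTC.
ECORI_SITE = "GAATTC"
ECORI_CUT_OFFSET = 1  # cut position measured from the start of the site


def digest(sequence: str, site: str = ECORI_SITE, cut_offset: int = ECORI_CUT_OFFSET) -> list[str]:
    """Cut a linear sequence (top strand only) at every occurrence of `site`."""
    sequence = sequence.upper()
    # The lookahead lets re.finditer report every site, even if two overlap.
    cut_positions = [m.start() + cut_offset
                     for m in re.finditer(f"(?={re.escape(site)})", sequence)]
    fragments = []
    start = 0
    for cut in cut_positions:
        fragments.append(sequence[start:cut])
        start = cut
    fragments.append(sequence[start:])
    return fragments


def gel_order(fragments: list[str]) -> list[int]:
    """Fragment lengths as bands appear from the well down: longest (slowest) first."""
    return sorted((len(fragment) for fragment in fragments), reverse=True)


if __name__ == "__main__":
    fragments = digest("AAAGAATTCTTTTTTTGAATTCGG")
    print(fragments)             # ['AAAG', 'AATTCTTTTTTTG', 'AATTCGG']
    print(gel_order(fragments))  # [13, 7, 4]
```

Shorter fragments migrate further through the gel, which is why a band of the expected length can be cut out to recover the gene; a real workflow would also consider both strands and choose enzymes whose cut ends match the target plasmid.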

The most common form of genetic engineering involves inserting new genetic material randomly within the host genome.[citation needed] Other techniques allow new genetic material to be inserted at a specific location in the host genome or generate mutations at desired genomic loci capable of knocking out endogenous genes. The technique of gene targeting uses homologous recombination to target desired changes to a specific endogenous gene. This tends to occur at a relatively low frequency in plants and animals and generally requires the use of selectable markers. The frequency of gene targeting can be greatly enhanced with the use of engineered nucleases such as zinc finger nucleases,[58][59] engineered homing endonucleases,[60][61] or nucleases created from TAL effectors.[62][63] In addition to enhancing gene targeting, engineered nucleases can also be used to introduce mutations at endogenous genes that generate a gene knockout.[64][65]
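Gene targeting works because the donor DNA carries stretches identical to the sequences flanking the target site, so homologous recombination can exchange the region between them. The following is a deliberately simplified string model, assuming exact-match homology arms on a single strand; the function name `homologous_replace` and the toy sequences are hypothetical. Real homology arms are much longer, and the exchange is carried out by the cell's own repair machinery rather than by a text search.

```python
def homologous_replace(genome: str, left_arm: str, insert: str, right_arm: str) -> str:
    """Swap the sequence between two homology arms for `insert`.

    String-level model only: the donor construct is left_arm + insert +
    right_arm, and "recombination" keeps both arms while replacing whatever
    lay between them in the genome.
    """
    # Targeting is only well defined if the left arm matches a single site.
    if genome.count(left_arm) != 1:
        raise ValueError("left homology arm must match exactly one site")
    left_end = genome.find(left_arm) + len(left_arm)
    # The right arm must lie downstream of the left arm.
    right_start = genome.find(right_arm, left_end)
    if right_start == -1:
        raise ValueError("right homology arm not found downstream of left arm")
    return genome[:left_end] + insert + genome[right_start:]


if __name__ == "__main__":
    genome = "TTTT" + "ACGTACGT" + "GGGCCC" + "TGCATGCA" + "AAAA"
    # An empty insert deletes the targeted segment (a knockout-style change).
    print(homologous_replace(genome, "ACGTACGT", "", "TGCATGCA"))
    # A non-empty insert places new sequence at exactly that locus.
    print(homologous_replace(genome, "ACGTACGT", "ATGAAA", "TGCATGCA"))
```

The same donor layout covers deleting a gene, adding a gene, or introducing a point mutation; only the `insert` differs.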

Transformation

A. tumefaciens attaching itself to a carrot cell

Only about 1% of bacteria are naturally capable of taking up foreign DNA. However, this ability can be induced in other bacteria via stress (e.g. thermal or electric shock), which increases the cell membrane's permeability to DNA; the DNA taken up can either integrate with the genome or exist as extrachromosomal DNA. DNA is generally inserted into animal cells either by microinjection, in which it is injected through the cell's nuclear envelope directly into the nucleus, or through the use of viral vectors.[66] In plants the DNA is generally inserted using Agrobacterium-mediated recombination or biolistics.[67]
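For bacteria made to take up DNA in this way, uptake is commonly quantified as transformation efficiency: the number of transformed colonies per microgram of plasmid DNA plated. The worked calculation below assumes cells are spread on a selective plate where only transformed cells (for example, those expressing an antibiotic-resistance marker) form colonies. The function name and all numbers are hypothetical, not measurements.

```python
def transformation_efficiency(colonies: int, dna_ng: float,
                              volume_plated_ul: float, total_volume_ul: float) -> float:
    """Transformants per microgram of DNA actually spread on the plate."""
    if dna_ng <= 0 or volume_plated_ul <= 0 or total_volume_ul <= 0:
        raise ValueError("DNA amount and volumes must be positive")
    if volume_plated_ul > total_volume_ul:
        raise ValueError("cannot plate more than the total volume")
    fraction_plated = volume_plated_ul / total_volume_ul
    dna_plated_ug = (dna_ng / 1000.0) * fraction_plated  # 1 ug = 1000 ng
    return colonies / dna_plated_ug


if __name__ == "__main__":
    # Hypothetical numbers: 10 ng plasmid, 1000 uL recovery culture, 100 uL plated.
    efficiency = transformation_efficiency(colonies=100, dna_ng=10,
                                           volume_plated_ul=100, total_volume_ul=1000)
    print(f"{efficiency:.2e} transformants/ug")  # 1.00e+05 transformants/ug
```

Here only a tenth of the 10 ng (0.001 µg) reaches the plate, so 100 colonies correspond to about 10^5 transformants per microgram.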

In Agrobacterium-mediated recombination, the plasmid construct contains T-DNA, the DNA responsible for insertion of the DNA into the host plant's genome. This plasmid is transformed into Agrobacterium containing no plasmids prior to infecting the plant cells. The Agrobacterium then naturally inserts the genetic material into the plant cells.[68] In biolistic transformation, particles of gold or tungsten are coated with DNA and then shot into young plant cells or plant embryos. Some genetic material will enter the cells and transform them. This method can be used on plants that are not susceptible to Agrobacterium infection and also allows transformation of plant plastids. Another transformation method for plant and animal cells is electroporation, which involves subjecting the plant or animal cell to an electric shock that can make the cell membrane permeable to plasmid DNA. In some cases the electroporated cells will incorporate the DNA into their genome. Due to the damage caused to the cells and DNA, the transformation efficiency of biolistics and electroporation is lower than that of Agrobacterium-mediated transformation and microinjection.[69]

Because often only a single cell is transformed with genetic material, the organism must be regenerated from that single cell. As bacteria consist of a single cell and reproduce clonally, regeneration is not necessary. In plants, regeneration is accomplished through the use of tissue culture. Each plant species has different requirements for successful regeneration through tissue culture. If successful, an adult plant is produced that contains the transgene in every cell. In animals it is necessary to ensure that the inserted DNA is present in the embryonic stem cells. Selectable markers are used to easily differentiate transformed from untransformed cells. These markers are usually present in the transgenic organism, although a number of strategies have been developed that can remove the selectable marker from the mature transgenic plant.[70] When offspring are produced, they can be screened for the presence of the gene. All offspring from the first generation will be heterozygous for the inserted gene and must be mated together to produce a homozygous animal.
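The mating step described above follows Mendelian segregation, which a short enumeration of allele combinations makes concrete. This minimal sketch assumes the transgene sits at a single locus on one chromosome of the pair; the `T`/`-` notation and the function name `cross` are hypothetical.

```python
from collections import Counter
from fractions import Fraction
from itertools import product


def cross(parent1: str, parent2: str) -> dict[str, Fraction]:
    """Offspring genotype ratios for one locus, by Mendelian segregation.

    Each parent is a two-letter genotype: "T" marks a chromosome carrying the
    transgene, "-" one without it. Every allele pairing is equally likely.
    """
    counts = Counter(
        # reverse=True sorts "T" before "-", so "T-" and "-T" count as one genotype
        "".join(sorted(a + b, reverse=True))
        for a, b in product(parent1, parent2)
    )
    total = len(parent1) * len(parent2)
    return {genotype: Fraction(n, total) for genotype, n in counts.items()}


if __name__ == "__main__":
    # Two heterozygous first-generation animals mated together.
    for genotype, share in cross("T-", "T-").items():
        print(genotype, share)
```

For two heterozygous parents this gives 1/4 `TT`, 1/2 `T-` and 1/4 `--`. Only a quarter of the offspring are the homozygous animals sought, and because heterozygotes also carry the gene, the two must be told apart by genotyping.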

Further testing using PCR, Southern hybridization, and DNA sequencing is conducted to confirm that an organism contains the new gene. These tests can also confirm the chromosomal location and copy number of the inserted gene. The presence of the gene does not guarantee it will be expressed at appropriate levels in the target tissue, so methods that look for and measure the gene products (RNA and protein) are also used. These include northern hybridization, quantitative RT-PCR, Western blot, immunofluorescence, ELISA and phenotypic analysis. For stable transformation the gene should be passed to the offspring in a Mendelian inheritance pattern, so the organism's offspring are also studied.
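Whether the observed offspring fit a Mendelian pattern can be checked statistically. One common option, though not necessarily the one used in any given study, is Pearson's chi-square goodness-of-fit test. The sketch below hard-codes standard critical values at the 0.05 level instead of computing p-values; the function names and the litter counts are invented for illustration.

```python
# Chi-square critical values at a 0.05 significance level, by degrees of freedom.
CRITICAL_VALUES_05 = {1: 3.841, 2: 5.991, 3: 7.815}


def chi_square(observed: list[int], expected_ratio: list[float]) -> float:
    """Pearson chi-square statistic for observed counts vs an expected ratio."""
    if len(observed) != len(expected_ratio):
        raise ValueError("need one expected ratio term per observed class")
    total = sum(observed)
    ratio_sum = sum(expected_ratio)
    statistic = 0.0
    for count, ratio in zip(observed, expected_ratio):
        expected = total * ratio / ratio_sum
        statistic += (count - expected) ** 2 / expected
    return statistic


def consistent_with_ratio(observed: list[int], expected_ratio: list[float]) -> bool:
    """True if the deviation from the ratio is not significant at the 0.05 level."""
    degrees_of_freedom = len(observed) - 1
    return chi_square(observed, expected_ratio) < CRITICAL_VALUES_05[degrees_of_freedom]


if __name__ == "__main__":
    # Hypothetical counts from a heterozygote x wild-type cross (1:1 expected).
    print(consistent_with_ratio([52, 48], [1, 1]))  # True
    print(consistent_with_ratio([80, 20], [1, 1]))  # False
```

A heterozygote-by-wild-type cross is expected to give carriers and non-carriers in a 1:1 ratio, and a heterozygote intercross gives genotypes in a 1:2:1 ratio. A large statistic hints at problems such as multiple insertion sites or silencing.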

Genome editing

Genome editing is a type of genetic engineering in which DNA is inserted, replaced, or removed from a genome using artificially engineered nucleases, or "molecular scissors." The nucleases create specific double-stranded breaks (DSBs) at desired locations in the genome and harness the cell’s endogenous mechanisms to repair the induced break by the natural processes of homologous recombination (HR) and nonhomologous end-joining (NHEJ). There are currently four families of engineered nucleases: meganucleases, zinc finger nucleases (ZFNs), transcription activator-like effector nucleases (TALENs), and CRISPRs.[71][72]

Applications
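To make "desired locations" more concrete for the CRISPR family, the sketch below scans a DNA sequence for candidate target sites of the widely used Streptococcus pyogenes Cas9 nuclease, which recognises a 20-nucleotide protospacer immediately followed by an "NGG" protospacer-adjacent motif (PAM) and makes a blunt cut 3 bp upstream of the PAM. These specifics are not given in the text above, and the function name is hypothetical. The sketch searches only the strand it is given (not the reverse complement) and does no off-target scoring, so it is a simplified illustration rather than a guide-design tool.

```python
import re


def find_spcas9_sites(seq: str, protospacer_len: int = 20) -> list[tuple[int, str, str, int]]:
    """Find candidate SpCas9 sites on the given (forward) strand only.

    Returns (start, protospacer, pam, cut_index) tuples. cut_index is the
    index of the first base after the expected blunt double-strand break,
    3 bp upstream of the PAM, so seq[:cut_index] and seq[cut_index:] model
    the two broken ends.
    """
    seq = seq.upper()
    sites = []
    # The lookahead lets overlapping candidate sites all be reported.
    pattern = re.compile(rf"(?=([ACGT]{{{protospacer_len}}})([ACGT]GG))")
    for match in pattern.finditer(seq):
        start = match.start()
        protospacer, pam = match.group(1), match.group(2)
        cut_index = start + protospacer_len - 3
        sites.append((start, protospacer, pam, cut_index))
    return sites


# Arbitrary illustrative sequence with one NGG PAM ("AGG").
example = "CCCCC" + "ATGCATGCATGCATGCATGC" + "AGG" + "TTTT"
for site in find_spcas9_sites(example):
    print(site)
# (5, 'ATGCATGCATGCATGCATGC', 'AGG', 22)
```

Repair of the resulting break by NHEJ tends to introduce small insertions or deletions at the cut, whereas supplying a template with homologous ends allows HR to copy a chosen change into that position.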

Genetic engineering has applications in medicine, research, industry and agriculture and can be used on a wide range of plants, animals and microorganisms.

Medicine

In medicine, genetic engineering has been used to mass-produce insulin, human growth hormones, follistim (for treating infertility), human albumin, monoclonal antibodies, antihemophilic factors, vaccines and many other drugs.[73][74] Vaccination generally involves injecting weak, live, killed or inactivated forms of viruses or their toxins into the person being immunized.[75] Genetically engineered viruses are being developed that can still confer immunity but lack the infectious sequences.[76] Mouse hybridomas, cells fused together to create monoclonal antibodies, have been humanised through genetic engineering to create human monoclonal antibodies.[77] Genetic engineering has shown promise for treating certain forms of cancer.[78][79]

Genetic engineering is used to create animal models of human diseases. Genetically modified mice are the most common genetically engineered animal model.[80] They have been used to study and model cancer (the oncomouse), obesity, heart disease, diabetes, arthritis, substance abuse, anxiety, aging and Parkinson's disease.[81] Potential cures can be tested against these mouse models. Genetically modified pigs have also been bred with the aim of increasing the success of pig-to-human organ transplantation.[82]

Gene therapy is the genetic engineering of humans by replacing defective human genes with functional copies. This can occur in somatic tissue or germline tissue. If the gene is inserted into the germline tissue, it can be passed down to that person's descendants.[83][84] Gene therapy has been successfully used to treat multiple diseases, including X-linked SCID,[85] chronic lymphocytic leukemia (CLL),[86] and Parkinson's disease.[87] In 2012, Glybera became the first gene therapy treatment to be approved for clinical use in either Europe or the United States after its endorsement by the European Commission.[88][89] There are also ethical concerns should the technology be used not just for treatment, but for enhancement, modification or alteration of a human being's appearance, adaptability, intelligence, character or behavior.[90] The distinction between cure and enhancement can also be difficult to establish.[91] Transhumanists consider the enhancement of humans desirable.

Research

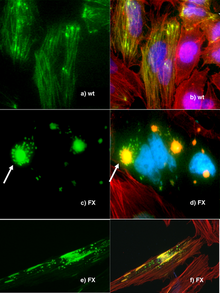

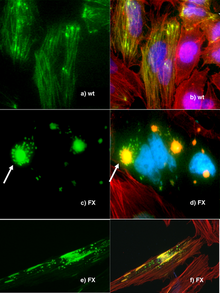

Human cells in which some proteins are fused with green fluorescent protein to allow them to be visualised

Genetic engineering is an important tool for natural scientists. Genes and other genetic information from a wide range of organisms are transformed into bacteria for storage and modification, creating genetically modified bacteria in the process. Bacteria are cheap, easy to grow, clonal, multiply quickly, relatively easy to transform and can be stored at -80 °C almost indefinitely. Once a gene is isolated, it can be stored inside the bacteria, providing an unlimited supply for research.

Organisms are genetically engineered to discover the functions of certain genes. This could be the effect on the phenotype of the organism, where the gene is expressed or what other genes it interacts with. These experiments generally involve loss of function, gain of function, tracking and expression.

- Loss of function experiments, such as in a gene knockout experiment, in which an organism is engineered to lack the activity of one or more genes. A knockout experiment involves the creation and manipulation of a DNA construct in vitro, which, in a simple knockout, consists of a copy of the desired gene, which has been altered such that it is non-functional. Embryonic stem cells incorporate the altered gene, which replaces the already present functional copy. These stem cells are injected into blastocysts, which are implanted into surrogate mothers. This allows the experimenter to analyze the defects caused by this mutation and thereby determine the role of particular genes. It is used especially frequently in developmental biology. Another method, useful in organisms such as Drosophila (fruit fly), is to induce mutations in a large population and then screen the progeny for the desired mutation. A similar process can be used in both plants and prokaryotes.

- Gain of function experiments, the logical counterpart of knockouts. These are sometimes performed in conjunction with knockout experiments to more finely establish the function of the desired gene. The process is much the same as that in knockout engineering, except that the construct is designed to increase the function of the gene, usually by providing extra copies of the gene or inducing synthesis of the protein more frequently.

- Tracking experiments, which seek to gain information about the localization and interaction of the desired protein. One way to do this is to replace the wild-type gene with a 'fusion' gene, which is a juxtaposition of the wild-type gene with a reporter element such as green fluorescent protein (GFP) that will allow easy visualization of the products of the genetic modification. While this is a useful technique, the manipulation can destroy the function of the gene, creating secondary effects and possibly calling into question the results of the experiment. More sophisticated techniques are now in development that can track protein products without impairing their function, such as the addition of small sequences that will serve as binding motifs to monoclonal antibodies.

- Expression studies aim to discover where and when specific proteins are produced. In these experiments, the DNA sequence before the DNA that codes for a protein, known as a gene's promoter, is reintroduced into an organism with the protein coding region replaced by a reporter gene such as GFP or an enzyme that catalyzes the production of a dye. Thus the time and place where a particular protein is produced can be observed. Expression studies can be taken a step further by altering the promoter to find which pieces are crucial for the proper expression of the gene and are actually bound by transcription factor proteins; this process is known as promoter bashing.
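The fusion genes used in tracking experiments only work if the reporter stays in the same reading frame as the gene it is attached to. The sketch below builds a C-terminal fusion by dropping the gene's stop codon and appending a reporter coding sequence; the function name and the short placeholder sequences are hypothetical (a real GFP coding sequence is roughly 720 bp), the reporter's own start codon is simply kept as an internal methionine, and the short peptide linkers often placed between the two parts are left out.

```python
STOP_CODONS = {"TAA", "TAG", "TGA"}


def codons(cds: str) -> list[str]:
    """Split a coding sequence into codons, requiring a whole number of them."""
    if len(cds) % 3 != 0:
        raise ValueError("coding sequence length must be a multiple of 3")
    return [cds[i:i + 3] for i in range(0, len(cds), 3)]


def make_c_terminal_fusion(gene_cds: str, reporter_cds: str) -> str:
    """Fuse a reporter to the C-terminus of a gene in a single reading frame.

    Both inputs are expected to start with ATG; the gene must end with a stop
    codon, which is removed so translation continues into the reporter.
    """
    gene, reporter = codons(gene_cds.upper()), codons(reporter_cds.upper())
    if gene[0] != "ATG" or reporter[0] != "ATG":
        raise ValueError("both sequences must start with ATG")
    if gene[-1] not in STOP_CODONS:
        raise ValueError("gene must end with a stop codon")
    fused = gene[:-1] + reporter
    # Any stop codon before the last codon would truncate the fusion protein.
    if any(c in STOP_CODONS for c in fused[:-1]):
        raise ValueError("in-frame stop codon before the end of the fusion")
    return "".join(fused)


# Hypothetical placeholder sequences: Met-Lys-Pro-stop and Met-Gly-Ser-stop.
demo_gene = "ATGAAACCCTAA"
demo_reporter = "ATGGGCAGCTGA"
print(make_c_terminal_fusion(demo_gene, demo_reporter))
# ATGAAACCCATGGGCAGCTGA
```

A reporter construct for an expression study can be assembled in a similar spirit, except that the promoter sequence is placed upstream of the reporter and the protein coding region is left out entirely.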

Industrial

Using genetic engineering techniques, one can transform microorganisms such as bacteria or yeast, or transform cells from multicellular organisms such as insects or mammals, with a gene coding for a useful protein, such as an enzyme, so that the transformed organism will overexpress the desired protein. One can manufacture mass quantities of the protein by growing the transformed organism in bioreactor equipment using techniques of industrial fermentation, and then purifying the protein.[92] Some genes do not work well in bacteria, so yeast, insect cells, or mammalian cells, each a eukaryote, can also be used.[93] These techniques are used to produce medicines such as insulin, human growth hormone, and vaccines; supplements such as tryptophan; and products that aid in the production of food (chymosin in cheese making) and fuels.[94] Other applications of genetically engineered bacteria under investigation involve making the bacteria perform tasks outside their natural cycle, such as making biofuels,[95] cleaning up oil spills, carbon and other toxic waste,[96] and detecting arsenic in drinking water.[97]

Experimental, lab-scale industrial applications

In materials science, a genetically modified virus has been used in an academic lab as a scaffold for assembling a more environmentally friendly lithium-ion battery.[98][99] Bacteria have been engineered to function as sensors by expressing a fluorescent protein under certain environmental conditions.[100]

Agriculture

Bt-toxins present in peanut leaves (bottom image) protect the plant from extensive damage caused by European corn borer larvae (top image).[101]

One of the best-known and most controversial applications of genetic engineering is the creation and use of genetically modified crops or genetically modified organisms, such as genetically modified fish, which are used to produce genetically modified food and materials with diverse uses. There are four main goals in generating genetically modified crops.[102]

One goal, and the first to be realized commercially, is to provide protection from environmental threats, such as cold (in the case of Ice-minus bacteria), or pathogens, such as insects or viruses, and/or resistance to herbicides. There are also fungus- and virus-resistant crops developed or in development.[103][104] They have been developed to make the insect and weed management of crops easier and can indirectly increase crop yield.[105]

Another goal in generating GMOs is to modify the quality of produce by, for instance, increasing the nutritional value or providing more industrially useful qualities or quantities.[106] The Amflora potato, for example, produces a more industrially useful blend of starches. Cows have been engineered to produce more protein in their milk to facilitate cheese production.[107] Soybeans and canola have been genetically modified to produce healthier oils.[108][109]

Another goal consists of driving the GMO to produce materials that it does not normally make. One example is "pharming", which uses crops as bioreactors to produce vaccines, drug intermediates, or drugs themselves; the useful product is purified from the harvest and then used in the standard pharmaceutical production process.[110] Cows and goats have been engineered to express drugs and other proteins in their milk, and in 2009 the FDA approved a drug produced in goat milk.[111][112]

Another goal in generating GMOs is to directly improve yield by accelerating growth or making the organism hardier (for plants, by improving salt, cold or drought tolerance).[106] Some agriculturally important animals have been genetically modified with growth hormones to increase their size.[113]

The genetic engineering of agricultural crops can increase growth rates and resistance to diseases caused by pathogens and parasites.[114] This is beneficial because it can greatly increase food production while using fewer of the resources that would otherwise be required to feed the world's growing population. These modified crops could also reduce the use of chemicals such as fertilizers and pesticides, and therefore decrease the severity and frequency of the damage caused by chemical pollution.[114][115]

Ethical and safety concerns have been raised around the use of genetically modified food.[116] A major safety concern relates to the human health implications of eating genetically modified food, in particular whether toxic or allergic reactions could occur.[117] Gene flow into related non-transgenic crops, off-target effects on beneficial organisms and the impact on biodiversity are important environmental issues.[118] Ethical concerns involve religious issues, corporate control of the food supply, intellectual property rights and the level of labeling needed on genetically modified products.

BioArt and entertainment

Genetic engineering is also being used to create BioArt.[119] Some bacteria have been genetically engineered to create black and white photographs.[120] Genetic engineering has also been used to create novelty items such as lavender-colored carnations,[121] blue roses,[122] and glowing fish.[123][124]

Regulation

The regulation of genetic engineering concerns the approaches taken by governments to assess and manage the risks associated with the development and release of genetically modified crops. There are differences in the regulation of GM crops between countries, with some of the most marked differences occurring between the USA and Europe. Regulation varies in a given country depending on the intended use of the products of the genetic engineering. For example, a crop not intended for food use is generally not reviewed by authorities responsible for food safety.

Controversy

Critics have objected to use of genetic engineering per se on several grounds, including ethical concerns, ecological concerns, and economic concerns raised by the fact that GM techniques and GM organisms are subject to intellectual property law. GMOs also are involved in controversies over GM food with respect to whether food produced from GM crops is safe, whether it should be labeled, and whether GM crops are needed to address the world's food needs. See the genetically modified food controversies article for discussion of issues about GM crops and GM food.

These controversies have led to litigation, international trade disputes, and protests, and to restrictive regulation of commercial products in some countries.