From Wikipedia, the free encyclopedia

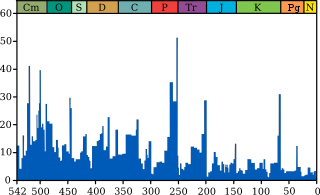

Plot of extinction intensity (percentage of genera that are present in each interval of time but do not exist in the following interval) vs time in the past for marine genera.[1] Geological periods are annotated (by abbreviation and colour) above. The Permian–Triassic extinction event is the most significant event for marine genera, with just over 50% (according to this source) failing to survive.
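The extinction-intensity measure in the caption can be computed directly from stratigraphic range data. The Python sketch below is a minimal illustration of that definition, not the procedure used by the cited source. It assumes each genus is recorded as a hypothetical `(first_interval, last_interval)` pair of integer interval indices (0 = oldest), that a genus is present in every interval between its first and last appearance, and it skips the youngest interval because there is no following interval to compare against. The function name is illustrative.

```python
from typing import Dict, List, Tuple


def extinction_intensity(ranges: List[Tuple[int, int]]) -> Dict[int, float]:
    """Percentage of genera present in each interval that are absent from the next one.

    `ranges` holds one hypothetical (first_interval, last_interval) pair per genus,
    with integer interval indices (0 = oldest). Each genus is assumed present in every
    interval between its first and last appearance (range-through assumption).
    The youngest interval is skipped: it has no following interval to compare with.
    """
    if not ranges:
        return {}
    oldest = min(first for first, _ in ranges)
    youngest = max(last for _, last in ranges)
    intensity: Dict[int, float] = {}
    for i in range(oldest, youngest):
        present = [(first, last) for first, last in ranges if first <= i <= last]
        if not present:
            continue  # no genera recorded in this interval
        # A genus present in interval i is missing from i + 1 only if its range ends at i.
        lost = sum(1 for _, last in present if last == i)
        intensity[i] = 100.0 * lost / len(present)
    return intensity


toy_ranges = [(0, 0), (0, 1), (0, 2), (0, 2), (1, 1), (1, 2)]
print(extinction_intensity(toy_ranges))
```

For these invented ranges, one of the four genera present in interval 0 is absent from interval 1 (25%), and two of the five present in interval 1 are absent from interval 2 (40%).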

The Permian–Triassic (P–Tr) extinction event, colloquially known as the Great Dying or the Great Permian Extinction,[2][3] occurred about 252 Ma (million years ago),[4] forming the boundary between the Permian and Triassic geologic periods, as well as the Paleozoic and Mesozoic eras. It is the Earth's most severe known extinction event, with up to 96% of all marine species[5][6] and 70% of terrestrial vertebrate species becoming extinct.[7] It is the only known mass extinction of insects.[8][9] Some 57% of all families and 83% of all genera became extinct. Because so much biodiversity was lost, the recovery of life on Earth took significantly longer than after any other extinction event,[5] possibly up to 10 million years.[10]

There is evidence for one to three distinct pulses, or phases, of extinction.[7][11][12][13] Several mechanisms have been proposed for the extinctions; the earlier phase was probably due to gradual environmental change, while the later phase has been argued to be due to a catastrophic event. Suggested mechanisms for the later phase include one or more large bolide impact events, massive volcanism, coal or gas fires and explosions from the Siberian Traps,[14] and a runaway greenhouse effect triggered by a sudden release of methane from the sea floor, due either to methane clathrate dissociation or to methane-producing microbes known as methanogens;[15] possible contributing gradual changes include sea-level change, increasing anoxia, increasing aridity, and a shift in ocean circulation driven by climate change.

Dating the extinction

Until 2000, it was thought that rock sequences spanning the Permian–Triassic boundary were too few and contained too many gaps for scientists to determine its details reliably.[20] Uranium–lead dating of zircons from rock sequences in multiple locations in southern China[4] dates the extinction to 252.28±0.08 Ma; an earlier study of rock sequences near Meishan in Changxing County of Zhejiang Province, China[21] dates the extinction to 251.4±0.3 Ma, with an ongoing elevated extinction rate occurring for some time thereafter.[11] A large (approximately 0.9%), abrupt global decrease in the ratio of the stable isotope 13C to that of 12C coincides with this extinction[18][22][23][24][25] and is sometimes used to identify the Permian–Triassic boundary in rocks that are unsuitable for radiometric dating.[26] Further evidence for environmental change around the P–Tr boundary suggests an 8 °C (14 °F) rise in temperature[18] and an increase in CO2 levels by 2000 ppm (by contrast, the concentration immediately before the Industrial Revolution was 280 ppm).[18] There is also evidence of increased ultraviolet radiation reaching Earth, causing the mutation of plant spores.[18]
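Carbon-isotope shifts such as this drop in the 13C/12C ratio are usually reported in δ13C notation, the per mil (‰) deviation of a sample's ratio from a reference standard: δ13C = (R_sample / R_standard − 1) × 1000. The Python sketch below converts a fractional change in the ratio into a shift in δ13C. It is illustrative only; the function names are hypothetical, and because the calculation works with ratios relative to the standard, no absolute value for the standard ratio is assumed.

```python
def delta13c_permil(r_sample: float, r_standard: float) -> float:
    """δ13C in per mil: deviation of a 13C/12C ratio from a reference standard."""
    return (r_sample / r_standard - 1.0) * 1000.0


def delta_shift_from_ratio_change(fractional_change: float, delta_before: float = 0.0) -> float:
    """Change in δ13C (per mil) when the sample ratio is multiplied by (1 + fractional_change).

    Works with ratios relative to the standard, so the standard's absolute value cancels out.
    """
    relative_before = 1.0 + delta_before / 1000.0  # R_before / R_standard
    relative_after = relative_before * (1.0 + fractional_change)
    delta_after = (relative_after - 1.0) * 1000.0
    return delta_after - delta_before


# A 0.9% decrease in the ratio, starting from δ13C = 0 per mil, is roughly a -9 per mil shift.
print(round(delta_shift_from_ratio_change(-0.009), 3))
```

The result depends only weakly on the starting δ13C (starting from +2 ‰ the same 0.9% drop gives about −9.02 ‰), which is why a percentage change in the ratio and a per mil shift are often used interchangeably, with 1% corresponding to roughly 10 ‰.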

It has been suggested that the Permian–Triassic boundary is associated with a sharp increase in the abundance of marine and terrestrial fungi, caused by the sharp increase in the amount of dead plants and animals fed upon by the fungi.[27] For a while this "fungal spike" was used by some paleontologists to identify the Permian–Triassic boundary in rocks that are unsuitable for radiometric dating or lack suitable index fossils, but even the proposers of the fungal spike hypothesis pointed out that "fungal spikes" may have been a repeating phenomenon created by the post-extinction ecosystem in the earliest Triassic.[27] The very idea of a fungal spike has been criticized on several grounds, including that: Reduviasporonites, the most common supposed fungal spore, was actually a fossilized alga;[18][28] the spike did not appear worldwide;[29][30] and in many places it did not fall on the Permian–Triassic boundary.[31] The algae, which were misidentified as fungal spores, may even represent a transition to a lake-dominated Triassic world rather than an earliest Triassic zone of death and decay in some terrestrial fossil beds.[32] Newer chemical evidence agrees better with a fungal origin for Reduviasporonites, diluting these critiques.[33]

Uncertainty exists regarding the duration of the overall extinction and the timing and duration of various groups' extinctions within the greater process. Some evidence suggests that there were multiple extinction pulses[7] or that the extinction was spread out over a few million years, with a sharp peak in the last million years of the Permian.[31][34] Statistical analyses of some highly fossiliferous strata in Meishan, Zhejiang Province, southeastern China, suggest that the main extinction was clustered around one peak.[11] Recent research shows that different groups became extinct at different times; for example, although absolute dates are difficult to establish, ostracod and brachiopod extinctions were separated by 670 to 1170 thousand years.[35] In a well-preserved sequence in east Greenland, the decline of animals is concentrated in a period 10 to 60 thousand years long, with plants taking several hundred thousand additional years to show the full impact of the event.[36] An older theory, still supported in some recent papers,[37] is that there were two major extinction pulses 9.4 million years apart, separated by a period of extinctions well above the background level, and that the final extinction killed off only about 80% of marine species alive at that time while the other losses occurred during the first pulse or the interval between pulses. According to this theory, one of these extinction pulses occurred at the end of the Guadalupian epoch of the Permian.[7][38] For example, all but one of the surviving dinocephalian genera died out at the end of the Guadalupian,[39] as did the Verbeekinidae, a family of large fusuline foraminifera.[40] The impact of the end-Guadalupian extinction on marine organisms appears to have varied between locations and between taxonomic groups; brachiopods and corals had severe losses.[41][42]

Extinction patterns

Marine organisms

Marine invertebrates suffered the greatest losses during the P–Tr extinction. In the intensively sampled south China sections at the P–Tr boundary, for instance, 286 out of 329 marine invertebrate genera disappear within the final two sedimentary zones containing conodonts from the Permian.[11]

Statistical analysis of marine losses at the end of the Permian suggests that the decrease in diversity was caused by a sharp increase in extinctions rather than a decrease in speciation.[44] The extinction primarily affected organisms with calcium carbonate skeletons, especially those reliant on stable CO2 levels to produce their skeletons,[45] because the increase in atmospheric CO2 led to ocean acidification.

Among benthic organisms, the extinction event multiplied background extinction rates, and therefore caused most damage to taxa that had a high background extinction rate (by implication, taxa with a high turnover).[46][47] The extinction rate of marine organisms was catastrophic.[11][48][49][50]
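The argument that a multiplied background rate hits high-turnover taxa hardest can be made concrete with a toy model. The Python sketch below is a hypothetical illustration, not a model from the cited studies: it assumes each taxon has a per-interval background extinction probability, that the event multiplies every taxon's probability by the same factor, and that the result is capped at 1. The function name and all numbers are invented.

```python
def event_extinction_probability(background: float, multiplier: float) -> float:
    """Extinction probability during the event, assuming it scales the background risk.

    `background` is a hypothetical per-interval extinction probability for a taxon and
    `multiplier` a hypothetical factor applied uniformly to all taxa; the result is capped at 1.
    """
    if not 0.0 <= background <= 1.0:
        raise ValueError("background must be a probability between 0 and 1")
    if multiplier < 0.0:
        raise ValueError("multiplier must be non-negative")
    return min(1.0, background * multiplier)


# Invented values: a low-turnover and a high-turnover taxon under the same fivefold multiplier.
for name, p in [("low turnover", 0.02), ("high turnover", 0.10)]:
    print(name, event_extinction_probability(p, 5.0))
```

Under the same multiplier, the high-turnover taxon's extinction probability rises by 0.40 in absolute terms (0.10 to 0.50), against 0.08 (0.02 to 0.10) for the low-turnover taxon, which is the pattern the text describes.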

Surviving marine invertebrate groups include: articulate brachiopods (those with a hinge), which have suffered a slow decline in numbers since the P–Tr extinction; the Ceratitida order of ammonites; and crinoids ("sea lilies"), which very nearly became extinct but later became abundant and diverse.

The groups with the highest survival rates generally had active control of circulation, elaborate gas exchange mechanisms, and light calcification; more heavily calcified organisms with simpler breathing apparatus were the worst hit.[16][51] In the case of the brachiopods at least, surviving taxa were generally small, rare members of a diverse community.[52]

The ammonoids, which had been in a long-term decline for the 30 million years since the Roadian (middle Permian), suffered a selective extinction pulse 10 million years before the main event, at the end of the Capitanian stage. This preliminary extinction, which greatly reduced disparity (the range of different ecological guilds), was apparently driven by environmental factors. Diversity and disparity fell further until the P–Tr boundary; the extinction there was non-selective, consistent with a catastrophic initiator. During the Triassic, diversity rose rapidly, but disparity remained low.[53]

The region of morphospace occupied by the ammonoids (the range of possible forms, shapes or structures) became more restricted as the Permian progressed. Just a few million years into the Triassic, the original range of ammonoid structures was once again reoccupied, but the parameters were now shared differently among clades.[54]

Terrestrial invertebrates

The Permian had great diversity in insect and other invertebrate species, including the largest insects ever to have existed. The end-Permian is the only known mass extinction of insects,[8] with eight or nine insect orders becoming extinct and ten more greatly reduced in diversity. Palaeodictyopteroids (insects with piercing and sucking mouthparts) began to decline during the mid-Permian; these extinctions have been linked to a change in flora. The greatest decline occurred in the Late Permian and was probably not directly caused by weather-related floral transitions.[48]

Most fossil insect groups found after the Permian–Triassic boundary differ significantly from those that lived prior to the P–Tr extinction. With the exception of the Glosselytrodea, Miomoptera, and Protorthoptera, Paleozoic insect groups have not been discovered in deposits dating to after the P–Tr boundary. The caloneurodeans, monurans, paleodictyopteroids, protelytropterans, and protodonates became extinct by the end of the Permian. In well-documented Late Triassic deposits, fossils overwhelmingly belong to modern insect groups.[8]

Terrestrial plants

Plant ecosystem response

The geological record of terrestrial plants is sparse and based mostly on pollen and spore studies. Plants are relatively immune to mass extinction, with the impact of all the major mass extinctions "insignificant" at a family level.[18] Even the observed reduction in species diversity (of 50%) may be mostly due to taphonomic processes.[18] However, a massive rearrangement of ecosystems did occur, with plant abundances and distributions changing profoundly and forests virtually disappearing;[18][55] the Palaeozoic flora scarcely survived this extinction.[56]

At the P–Tr boundary, the dominant floral groups changed, with many groups of land plants entering abrupt decline, such as Cordaites (gymnosperms) and Glossopteris (seed ferns).[57] Dominant gymnosperm genera were replaced post-boundary by lycophytes; extant lycophytes are recolonizers of disturbed areas.[58]

Palynological (pollen) studies of sedimentary rock strata from East Greenland laid down during the extinction period indicate dense gymnosperm woodlands before the event. At the same time that marine invertebrate macrofauna are in decline, these large woodlands die out and are followed by a rise in diversity of smaller herbaceous plants, including Lycopodiophyta, both Selaginellales and Isoetales. Later on, other groups of gymnosperms again become dominant but again suffer major die-offs; these cyclical floral shifts occur a few times over the course of the extinction period and afterwards. These fluctuations of the dominant flora between woody and herbaceous taxa indicate chronic environmental stress resulting in a loss of most large woodland plant species.

The successions and extinctions of plant communities do not coincide with the shift in δ13C values but occur many years after it.[30] The recovery of gymnosperm forests took 4–5 million years.[18]

Coal gap

No coal deposits are known from the Early Triassic, and those in the Middle Triassic are thin and low-grade.[19] This "coal gap" has been explained in many ways. It has been suggested that new, more aggressive fungi, insects and vertebrates evolved and killed vast numbers of trees. However, these decomposers themselves suffered heavy losses of species during the extinction and are not considered a likely cause of the coal gap.[19] It could simply be that all coal-forming plants were rendered extinct by the P–Tr extinction, and that it took 10 million years for a new suite of plants to adapt to the moist, acid conditions of peat bogs.[19] On the other hand, abiotic factors (not caused by organisms), such as decreased rainfall or increased input of clastic sediments, may also be to blame.[18] Finally, very few sediments of any type are known from the Early Triassic, and the lack of coal may simply reflect this scarcity. This opens the possibility that coal-producing ecosystems responded to the changed conditions by relocating, perhaps to areas for which there is no sedimentary record of the Early Triassic.[18] For example, in eastern Australia a cold climate had been the norm for a long period of time, with a peat mire ecosystem specialising to these conditions. Approximately 95% of these peat-producing plants went locally extinct at the P–Tr boundary.[59] Coal deposits in Australia and Antarctica, however, disappear significantly before the P–Tr boundary.[18]

Terrestrial vertebrates

Evidence indicates that over two-thirds of terrestrial labyrinthodont amphibian, sauropsid ("reptile") and therapsid ("mammal-like reptile") families became extinct. Large herbivores suffered the heaviest losses. All Permian anapsid reptiles died out except the procolophonids (testudines have anapsid skulls but are most often thought to have evolved later, from diapsid ancestors). Pelycosaurs died out before the end of the Permian. Too few Permian diapsid fossils have been found to support any conclusion about the effect of the Permian extinction on diapsids (the "reptile" group from which lizards, snakes, crocodilians, and dinosaurs [including birds] evolved).[60][61] Even the groups that survived suffered extremely heavy losses of species, and some terrestrial vertebrate groups very nearly became extinct at the end-Permian. Some of the surviving groups did not persist for long past this period, while others that barely survived went on to produce diverse and long-lasting lineages. Yet it took 30 million years for the terrestrial vertebrate fauna to fully recover both numerically and ecologically.[62]

Possible explanations of these patterns

An analysis of marine fossils from the Permian's final Changhsingian stage found that marine organisms with low tolerance for hypercapnia (high concentration of carbon dioxide) had high extinction rates, while the most tolerant organisms had very slight losses. The most vulnerable marine organisms were those that produced calcareous hard parts (i.e., from calcium carbonate) and had low metabolic rates and weak respiratory systems, notably calcareous sponges, rugose and tabulate corals, calcite-depositing brachiopods, bryozoans, and echinoderms; about 81% of such genera became extinct. Close relatives without calcareous hard parts suffered only minor losses, for example sea anemones, from which modern corals evolved. Animals with high metabolic rates, well-developed respiratory systems, and non-calcareous hard parts had negligible losses, except for conodonts, in which 33% of genera died out.[63]

This pattern is consistent with what is known about the effects of hypoxia, a shortage but not a total absence of oxygen. However, hypoxia cannot have been the only killing mechanism for marine organisms. Nearly all of the continental shelf waters would have had to become severely hypoxic to account for the magnitude of the extinction, but such a catastrophe would make it difficult to explain the very selective pattern of the extinction. Models of the Late Permian and Early Triassic atmospheres show a significant but protracted decline in atmospheric oxygen levels, with no acceleration near the P–Tr boundary. Minimum atmospheric oxygen levels in the Early Triassic were never lower than present-day levels, so the decline in oxygen levels does not match the temporal pattern of the extinction.[63]

Marine organisms are more sensitive to changes in CO2 levels than are terrestrial organisms for a variety of reasons. CO2 is 28 times more soluble in water than is oxygen. Marine animals normally function with lower concentrations of CO2 in their bodies than land animals, as the removal of CO2 in air-breathing animals is impeded by the need for the gas to pass through the respiratory system's membranes (lungs' alveoli, tracheae, and the like), even though CO2 diffuses more easily than oxygen. In marine organisms, relatively modest but sustained increases in CO2 concentrations hamper the synthesis of proteins, reduce fertilization rates, and produce deformities in calcareous hard parts.[63] In addition, an increase in CO2 concentration is inevitably linked to ocean acidification, consistent with the preferential extinction of heavily calcified taxa and other signals in the rock record that suggest a more acidic ocean.[64]

It is difficult to analyze extinction and survival rates of land organisms in detail, because few terrestrial fossil beds span the Permian–Triassic boundary. Triassic insects are very different from those of the Permian, but a gap in the insect fossil record spans approximately 15 million years from the late Permian to early Triassic. The best-known record of vertebrate changes across the Permian–Triassic boundary occurs in the Karoo Supergroup of South Africa, but statistical analyses have so far not produced clear conclusions.[63] However, analysis of the fossil river deposits of the floodplains indicates a shift from meandering to braided river patterns, pointing to an abrupt drying of the climate.[65] The climate change may have taken as little as 100,000 years, prompting the extinction of the unique Glossopteris flora and its herbivores, followed by the carnivorous guild.[66]

Biotic recovery

Earlier analyses indicated that life on Earth recovered quickly after the Permian extinctions, but this was mostly in the form of disaster taxa, opportunist organisms such as the hardy Lystrosaurus. Research published in 2006 indicates that the specialized animals that formed complex ecosystems, with high biodiversity, complex food webs and a variety of niches, took much longer to recover. It is thought that this long recovery was due to the successive waves of extinction, which inhibited recovery, and to prolonged environmental stress, which continued into the Early Triassic. Research indicates that recovery did not begin until the start of the mid-Triassic, 4 to 6 million years after the extinction;[67] and some writers estimate that the recovery was not complete until 30 million years after the P–Tr extinction, i.e. in the late Triassic.[7]

A study published in the journal Science[68] found that during the extinction the oceans' surface temperatures reached 40 °C (104 °F), which would help explain why recovery took so long: it was simply too hot for life to survive.[69]

During the early Triassic (4 to 6 million years after the P–Tr extinction), the plant biomass was insufficient to form coal deposits, which implies a limited food mass for herbivores.[19] River patterns in the Karoo changed from meandering to braided, indicating that vegetation there was very sparse for a long time.[70]

Each major segment of the early Triassic ecosystem—plant and animal, marine and terrestrial—was dominated by a small number of genera, which appeared virtually worldwide, for example: the herbivorous therapsid Lystrosaurus (which accounted for about 90% of early Triassic land vertebrates) and the bivalves Claraia, Eumorphotis, Unionites and Promylina. A healthy ecosystem has a much larger number of genera, each living in a few preferred types of habitat.[57][71]

Disaster taxa took advantage of the devastated ecosystems and enjoyed a temporary population boom and increase in their territory. Microconchids, for example, are the dominant component of otherwise impoverished Early Triassic encrusting assemblages. Other examples include Lingula (a brachiopod); stromatolites, which had been confined to marginal environments since the Ordovician; Pleuromeia (a small, weedy plant); and Dicroidium (a seed fern).[71][72][73]

Changes in marine ecosystems

Sessile filter feeders like this crinoid were significantly less abundant after the P–Tr extinction.

Prior to the extinction, about two-thirds of marine animals were sessile and attached to the sea floor but, during the Mesozoic, only about half of the marine animals were sessile while the rest were free-living. Analysis of marine fossils from the period indicated a decrease in the abundance of sessile epifaunal suspension feeders such as brachiopods and sea lilies and an increase in more complex mobile species such as snails, sea urchins and crabs.[74]

Before the Permian mass extinction event, both complex and simple marine ecosystems were equally common; after the recovery from the mass extinction, the complex communities outnumbered the simple communities by nearly three to one,[74] and the increase in predation pressure led to the Mesozoic Marine Revolution.

Bivalves were fairly rare before the P–Tr extinction but became numerous and diverse in the Triassic, and one group, the rudist clams, became the Mesozoic's main reef-builders. Some researchers think much of this change happened in the 5 million years between the two major extinction pulses.[75]

Crinoids ("sea lilies") suffered a selective extinction, resulting in a decrease in the variety of their forms.[76] Their ensuing adaptive radiation was brisk, and resulted in forms possessing flexible arms becoming widespread; motility, predominantly a response to predation pressure, also became far more prevalent.[77]

Land vertebrates

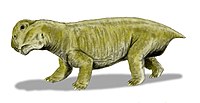

Lystrosaurus, a pig-sized herbivorous dicynodont therapsid, constituted as much as 90% of some earliest Triassic land vertebrate fauna. Smaller carnivorous cynodont therapsids also survived, including the ancestors of mammals.

In the Karoo region of southern Africa, the therocephalians Tetracynodon, Moschorhinus and Ictidosuchoides survived, but do not appear to have been abundant in the Triassic.[78]

Archosaurs (which included the ancestors of dinosaurs and crocodilians) were initially rarer than therapsids, but they began to displace therapsids in the mid-Triassic. In the mid to late Triassic, the dinosaurs evolved from one group of archosaurs, and went on to dominate terrestrial ecosystems during the Jurassic and Cretaceous.[79] This "Triassic Takeover" may have contributed to the evolution of mammals by forcing the surviving therapsids and their mammaliform successors to live as small, mainly nocturnal insectivores; nocturnal life probably forced at least the mammaliforms to develop fur and higher metabolic rates,[80] while losing some of the color-sensitive retinal receptors that reptiles and birds retained.

Some temnospondyl amphibians made a relatively quick recovery, in spite of nearly becoming extinct. Mastodonsaurus and trematosaurians were the main aquatic and semiaquatic predators during most of the Triassic, some preying on tetrapods and others on fish.[81]

Land vertebrates took an unusually long time to recover from the P–Tr extinction; writer M. J. Benton estimated the recovery was not complete until 30 million years after the extinction, i.e. not until the Late Triassic, in which dinosaurs, pterosaurs, crocodiles, archosaurs, amphibians, and mammaliforms were abundant and diverse.[5]

Causes of the extinction event

Pinpointing the exact cause or causes of the Permian–Triassic extinction event is difficult, mostly because the catastrophe occurred over 250 million years ago, and much of the evidence that would have pointed to the cause either has been destroyed by now or is concealed deep within the Earth under many layers of rock. The sea floor is also completely recycled every 200 million years by the ongoing process of plate tectonics and seafloor spreading, leaving no useful indications beneath the ocean. Nevertheless, on the basis of the evidence accumulated so far, several mechanisms have been proposed for the extinction event, including both catastrophic and gradual processes (similar to those theorized for the Cretaceous–Paleogene extinction event). The former group includes one or more large bolide impact events, increased volcanism, and sudden release of methane from the sea floor, either due to dissociation of methane hydrate deposits or metabolism of organic carbon deposits by methanogenic microbes. The latter group includes sea level change, increasing anoxia, and increasing aridity. Any hypothesis about the cause must explain the selectivity of the event, which affected organisms with calcium carbonate skeletons most severely; the long period (4 to 6 million years) before recovery started; and the minimal extent of biological mineralization (despite inorganic carbonates being deposited) once the recovery began.[45]

Impact event

Artist's impression of a major impact event: A collision between Earth and an asteroid a few kilometres in diameter would release as much energy as several million nuclear weapons detonating.

Evidence that an impact event may have caused the Cretaceous–Paleogene extinction event has led to speculation that similar impacts may have been the cause of other extinction events, including the P–Tr extinction, and therefore to a search for evidence of impacts at the times of other extinctions and for large impact craters of the appropriate age.

Reported evidence for an impact event from the P–Tr boundary level includes rare grains of shocked quartz in Australia and Antarctica;[82][83] fullerenes trapping extraterrestrial noble gases;[84] meteorite fragments in Antarctica;[85] and grains rich in iron, nickel and silicon, which may have been created by an impact.[86] However, the accuracy of most of these claims has been challenged.[87][88][89][90] Quartz from Graphite Peak in Antarctica, for example, once considered "shocked", has been re-examined by optical and transmission electron microscopy. The observed features were concluded to be not due to shock, but rather to plastic deformation, consistent with formation in a tectonic environment such as volcanism.[91]

An impact crater on the sea floor would be evidence of a possible cause of the P–Tr extinction, but such a crater would by now have disappeared. As 70% of the Earth's surface is currently sea, an asteroid or comet fragment is now perhaps more than twice as likely to hit ocean as it is to hit land. However, Earth has no ocean-floor crust more than 200 million years old, because the "conveyor belt" process of seafloor spreading and subduction destroys it within that time. Craters produced by very large impacts may be masked by extensive flood basalting from below after the crust is punctured or weakened.[92] Subduction should not, however, be entirely accepted as an explanation of why no firm evidence can be found: as with the K-T event, an ejecta blanket stratum rich in siderophilic elements (e.g. iridium) would be expected to be seen in formations from the time.

One attraction of large-impact theories is that, in principle, an impact could trigger other phenomena that have been proposed as causes of the extinction,[93] such as the Siberian Traps eruptions (see below), with the Traps being either an impact site[94] or the antipode of an impact site.[93][95] The abruptness of an impact would also explain why more species did not rapidly evolve to survive, as would be expected if the Permian–Triassic event had been slower and less global than a meteorite impact.

Possible impact sites

Several possible impact craters have been proposed as the site of an impact causing the P–Tr extinction, including the Bedout structure off the northwest coast of Australia[83] and the hypothesized Wilkes Land crater of East Antarctica.[96][97] In each of these cases, the idea that an impact was responsible has not been proven, and has been widely criticized. In the case of Wilkes Land, the age of this sub-ice geophysical feature is very uncertain; it may be later than the Permian–Triassic extinction.

The Araguainha crater has been most recently dated to 254.7 ± 2.5 million years ago, overlapping with estimates for the Permo-Triassic boundary.[98] Much of the local rock was oil shale. The estimated energy released by the Araguainha impact is insufficient to be a direct cause of the global mass extinction, but the colossal local earth tremors would have released huge amounts of oil and gas from the shattered rock. The resulting sudden global warming might have precipitated the Permian–Triassic extinction event.[99]

Volcanism

The final stages of the Permian had two flood basalt events. The smaller one, the Emeishan Traps in China, occurred at the same time as the end-Guadalupian extinction pulse, in an area close to the equator at the time.[100][101] The flood basalt eruptions that produced the Siberian Traps constituted one of the largest known volcanic events on Earth and covered over 2,000,000 square kilometres (770,000 sq mi) with lava.[102][103][104] The Siberian Traps eruptions were formerly thought to have lasted for millions of years, but recent research dates them to 251.2 ± 0.3 Ma, immediately before the end of the Permian.[11][105]

The Emeishan and Siberian Traps eruptions may have caused dust clouds and acid aerosols, which would have blocked out sunlight and thus disrupted photosynthesis both on land and in the photic zone of the ocean, causing food chains to collapse. These eruptions may also have caused acid rain when the aerosols washed out of the atmosphere. This may have killed land plants, molluscs, and planktonic organisms with calcium carbonate shells. The eruptions would also have emitted carbon dioxide, causing global warming. When all of the dust clouds and aerosols washed out of the atmosphere, the excess carbon dioxide would have remained and the warming would have proceeded without any mitigating effects.[93]

The Siberian Traps had unusual features that made them even more dangerous. Pure flood basalts produce fluid, low-viscosity lava and do not hurl debris into the atmosphere. It appears, however, that 20% of the output of the Siberian Traps eruptions was pyroclastic, i.e. consisted of ash and other debris thrown high into the atmosphere, increasing the short-term cooling effect.[106] The basalt lava erupted or intruded into carbonate rocks and into sediments that were in the process of forming large coal beds, both of which would have emitted large amounts of carbon dioxide, leading to stronger global warming after the dust and aerosols settled.[93]

There is doubt, however, about whether these eruptions were enough on their own to cause a mass extinction as severe as the end-Permian. Equatorial eruptions are necessary to produce sufficient dust and aerosols to affect life worldwide, whereas the much larger Siberian Traps eruptions were inside or near the Arctic Circle. Furthermore, if the Siberian Traps eruptions occurred within a period of 200,000 years, the atmosphere's carbon dioxide content would have doubled. Recent climate models suggest such a rise in CO2 would have raised global temperatures by 1.5 to 4.5 °C (2.7 to 8.1 °F), which is unlikely to cause a catastrophe as great as the P–Tr extinction.[93]
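The step from a doubling of CO2 to 1.5 to 4.5 °C of warming rests on the common simplification that equilibrium warming grows with the logarithm of CO2 concentration, so each doubling adds the same amount, the climate sensitivity S: ΔT = S × log2(C / C0). The Python sketch below applies that relation. The logarithmic form is an assumption of this illustration rather than a statement of how the cited climate models work, the function name is hypothetical, and the 1.5 and 4.5 °C values are simply the bounds quoted above.

```python
import math


def equilibrium_warming(c_final: float, c_initial: float, sensitivity_per_doubling: float) -> float:
    """Equilibrium warming (°C) for a CO2 change, assuming dT = S * log2(C / C0)."""
    if c_final <= 0.0 or c_initial <= 0.0:
        raise ValueError("CO2 concentrations must be positive")
    return sensitivity_per_doubling * math.log2(c_final / c_initial)


# A doubling (only the ratio matters) evaluated at both ends of the quoted 1.5-4.5 °C range.
for s in (1.5, 4.5):
    print(f"S = {s} °C per doubling -> warming {equilibrium_warming(2.0, 1.0, s):.1f} °C")
```

Because only the ratio C / C0 enters, a doubling gives ΔT = S whatever the Permian baseline concentration was, so the estimate in the paragraph above does not depend on knowing that baseline.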

In January 2011, a team led by Stephen Grasby of the Geological Survey of Canada—Calgary, reported evidence that volcanism caused massive coal beds to ignite, possibly releasing more than 3 trillion tons of carbon. The team found ash deposits in deep rock layers near what is now Buchanan Lake. According to their article, "... coal ash dispersed by the explosive Siberian Trap eruption would be expected to have an associated release of toxic elements in impacted water bodies where fly ash slurries developed ...", and "Mafic megascale eruptions are long-lived events that would allow significant build-up of global ash clouds".[107][108] In a statement, Grasby said, "In addition to these volcanoes causing fires through coal, the ash it spewed was highly toxic and was released in the land and water, potentially contributing to the worst extinction event in earth history."[109]

Methane hydrate gasification

Scientists have found worldwide evidence of a swift decrease of about 1% in the 13C/12C isotope ratio in carbonate rocks from the end-Permian.[50][110] This is the first, largest, and most rapid of a series of negative and positive excursions (decreases and increases in the 13C/12C ratio) that continued until the isotope ratio abruptly stabilised in the Middle Triassic, followed soon afterwards by the recovery of calcifying life forms (organisms that use calcium carbonate to build hard parts such as shells).[16] A variety of factors may have contributed to this drop in the 13C/12C ratio, but most turn out to be insufficient to account fully for the observed amount:[111]

- Gases from volcanic eruptions have a 13C/12C ratio about 0.5 to 0.8% below standard (δ13C about −5 to −8 ‰), but producing a reduction of about 1.0% worldwide would require eruptions orders of magnitude larger than any for which evidence has been found.[112]

- A reduction in organic activity would extract 12C more slowly from the environment and leave more of it to be incorporated into sediments, thus reducing the 13C/12C ratio. Biochemical processes preferentially use the lighter isotopes, since chemical reactions are ultimately driven by electromagnetic forces between atoms and lighter isotopes respond more quickly to these forces. But a study of a smaller drop of 0.3 to 0.4% in 13C/12C (δ13C −3 to −4 ‰) at the Paleocene–Eocene Thermal Maximum (PETM) concluded that even transferring all the organic carbon (in organisms, soils, and dissolved in the ocean) into sediments would be insufficient: such a large burial of 12C-rich material would still not have produced the 'smaller' drop in the 13C/12C ratio of the rocks around the PETM.[112]

- Buried sedimentary organic matter has a 13C/12C ratio 2.0 to 2.5% below normal (δ13C −20 to −25 ‰). Theoretically, if the sea level fell sharply, shallow marine sediments would be exposed to oxidation. But 6,500–8,400 gigatons (1 gigaton = 10⁹ metric tons) of organic carbon would have to be oxidized and returned to the ocean-atmosphere system in less than a few hundred thousand years to reduce the 13C/12C ratio by 1.0%. This is not thought to be a realistic possibility.[48]

- Rather than a sudden decline in sea level, intermittent periods of ocean-bottom hyperoxia and anoxia (high-oxygen and low- or zero-oxygen conditions) may have caused the 13C/12C ratio fluctuations in the Early Triassic,[16] and global anoxia may have been responsible for the end-Permian blip. The continents of the end-Permian and Early Triassic were more clustered in the tropics than they are now, and large tropical rivers would have dumped sediment into smaller, partially enclosed ocean basins at low latitudes. Such conditions favor alternating oxic and anoxic episodes: oxic conditions would cause rapid release, and anoxic conditions rapid burial, of large amounts of organic carbon, which has a low 13C/12C ratio because biochemical processes use the lighter isotopes more.[113] This mechanism, or another organic one, may explain both this pattern and a similar pattern of fluctuating 13C/12C ratios in the late Proterozoic and Cambrian.[16]

The only proposed mechanism sufficient to cause a global 1.0% reduction in the 13C/12C ratio is the release of methane from methane clathrates.[48] Carbon-cycle models confirm it would have had enough effect to produce the observed reduction.[111][114] Methane clathrates, also known as methane hydrates, consist of methane molecules trapped in cages of water molecules. The methane, produced by methanogens (microscopic single-celled organisms), has a 13C/12C ratio about 6.0% below normal (δ13C −60 ‰). At the right combination of pressure and temperature, it becomes trapped in clathrates fairly close to the surface of permafrost and, in much larger quantities, at continental margins (continental shelves and the deeper seabed close to them). Oceanic methane hydrates are usually found buried in sediments where the seawater is at least 300 m (980 ft) deep. They can occur as deep as about 2,000 m (6,600 ft) below the sea floor, but usually extend only to about 1,100 m (3,600 ft) below it.[115]
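The argument running through the list of candidate sources and the methane paragraph is a mass balance. The lighter (more negative) a source's δ13C, the less of it must be added to shift the whole ocean-atmosphere carbon reservoir by a given amount. The minimal two-component mixing sketch below makes this concrete, but it rests on several assumptions. It treats the reservoir as well mixed, starting at δ13C = 0 ‰, and targets a 1.0% (about −10 ‰) shift. It also ignores fluxes, burial, and timescales. For these reasons it gives the added carbon only as a fraction of an unspecified reservoir mass and does not attempt to reproduce the gigaton figures from the cited carbon-cycle models. The function names are hypothetical.

```python
def mix_delta(m_res: float, d_res: float, m_add: float, d_add: float) -> float:
    """δ13C (per mil) of a reservoir after mixing in carbon of another composition.

    Simple mass-weighted mixing: (m_res*d_res + m_add*d_add) / (m_res + m_add).
    """
    return (m_res * d_res + m_add * d_add) / (m_res + m_add)

def required_mass_fraction(d_source: float, shift: float, d_initial: float = 0.0) -> float:
    """Carbon to add, as a fraction of the reservoir mass, to move the
    reservoir's δ13C by `shift` (per mil, negative for a decrease).

    Solving mix_delta(1, d_initial, f, d_source) == d_initial + shift for f:
        f = shift / (d_source - (d_initial + shift))
    """
    target = d_initial + shift
    if shift >= 0:
        raise ValueError("this sketch only handles decreases (shift < 0)")
    if d_source >= target:
        # A source no lighter than the target composition can never drag
        # the mixture down to the target, however much of it is added.
        raise ValueError("source is not isotopically light enough")
    return shift / (d_source - target)

if __name__ == "__main__":
    shift = -10.0  # a 1.0% drop in 13C/12C, i.e. about -10 per mil
    sources = {
        "buried organic matter (-22.5 per mil, midpoint)": -22.5,
        "biogenic methane (-60 per mil)": -60.0,
    }
    for name, d in sources.items():
        f = required_mass_fraction(d, shift)
        check = mix_delta(1.0, 0.0, f, d)  # should equal the target, -10
        print(f"{name}: add {f:.2f} x reservoir mass -> {check:.1f} per mil")
```

In this toy, methane needs a quarter as much added carbon as buried organic matter does (0.2 versus 0.8 of the reservoir mass). This illustrates why only a very light source such as clathrate methane is considered sufficient. The result is sensitive to the assumed starting δ13C, so the error the sketch raises for volcanic gas (about −5 to −8 ‰) should not be read as contradicting the cited studies.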

The area covered by lava from the Siberian Traps eruptions is about twice as large as was originally thought, and most of the additional area was shallow sea at the time. The seabed probably contained methane hydrate deposits, and the lava caused the deposits to dissociate, releasing vast quantities of methane.[116] Such a release could have caused significant global warming, since methane is a very powerful greenhouse gas. Strong evidence suggests that global temperatures rose by about 6 °C (10.8 °F) near the equator, and therefore by more at higher latitudes. This evidence includes a sharp decrease in oxygen isotope ratios (18O/16O).[117] It also includes the extinction of the Glossopteris flora (Glossopteris and plants that grew in the same areas), which needed a cold climate, and its replacement by floras typical of lower paleolatitudes.[118]

However, the pattern of isotope shifts expected to result from a massive release of methane does not match the patterns seen throughout the early Triassic. Not only would a methane cause require the release of five times as much methane as postulated for the PETM,[16] but it would also have to be reburied at an unrealistically high rate to account for the rapid increases in the 13C/12C ratio (episodes of high positive δ13C) throughout the early Triassic, before being released again several times.[16]

Methanosarcina

A 2014 paper suggested a microbial source of the carbon-cycle disruption: the methanogenic archaeal genus Methanosarcina. Three lines of chronology converge at 250 mya, supporting a scenario in which a single-gene transfer created a metabolic pathway for efficient methane production in these archaea, nourished by volcanic nickel. According to the theory, the resultant super-exponential archaeal bloom suddenly freed carbon from ocean-bottom organic sediments into the water and air.[119]

Anoxia

Evidence for widespread ocean anoxia (severe deficiency of oxygen) and euxinia (presence of hydrogen sulfide) is found from the Late Permian to the Early Triassic. Throughout most of the Tethys and Panthalassic Oceans, evidence for anoxia appears at the extinction event.[120] This evidence includes fine laminations in sediments, small pyrite framboids, high uranium/thorium ratios, and biomarkers for green sulfur bacteria. However, at some sites, including Meishan, China, and eastern Greenland, evidence for anoxia precedes the extinction.[121][122] Biomarkers for green sulfur bacteria, such as isorenieratane (the diagenetic product of isorenieratene), are widely used as indicators of photic zone euxinia, because green sulfur bacteria require both sunlight and hydrogen sulfide to survive. Their abundance in sediments from the P–Tr boundary indicates that hydrogen sulfide was present even in shallow waters.

This spread of toxic, oxygen-depleted water would have been devastating for marine life, causing widespread die-offs. Models of ocean chemistry show that anoxia and euxinia would have been closely associated with high levels of carbon dioxide.[123] This suggests that hydrogen sulfide poisoning, anoxia, and hypercapnia acted together as a killing mechanism. Hypercapnia best explains the selectivity of the extinction, but anoxia and euxinia probably contributed to the event's high mortality. The persistence of anoxia through the Early Triassic may explain the slow recovery of marine life after the extinction. Models also show that anoxic events can cause catastrophic hydrogen sulfide emissions into the atmosphere (see below).[124]

The sequence of events leading to anoxic oceans may have been triggered by carbon dioxide emissions from the eruption of the Siberian Traps.[124] In this scenario, warming from the enhanced greenhouse effect would have reduced the solubility of oxygen in seawater, causing oxygen concentrations to decline. Increased weathering of the continents, driven by warming and an accelerated water cycle, would have increased the riverine flux of phosphate to the ocean. This phosphate would have supported greater primary productivity in the surface oceans. The increased production of organic matter would have caused more of it to sink into the deep ocean, where its respiration would further decrease oxygen concentrations. Once anoxia became established, it would have been sustained by a positive feedback loop, because deep-water anoxia tends to increase the recycling efficiency of phosphate, leading to even higher productivity.

Hydrogen sulfide emissions

A severe anoxic event at the end of the Permian would have allowed sulfate-reducing bacteria to thrive, causing the production of large amounts of hydrogen sulfide in the anoxic ocean. Upwelling of this water may have released massive hydrogen sulfide emissions into the atmosphere. This would have poisoned terrestrial plants and animals and severely weakened the ozone layer, exposing much of the remaining life to fatal levels of UV radiation.[124] Indeed, biomarker evidence for anaerobic photosynthesis by Chlorobiaceae (green sulfur bacteria) from the Late Permian into the Early Triassic indicates that hydrogen sulfide did upwell into shallow waters, because these bacteria are restricted to the photic zone and use sulfide as an electron donor.

This hypothesis has the advantage of explaining the mass extinction of plants, which should otherwise have thrived in an atmosphere with a high level of carbon dioxide, and whose die-off would have added to methane levels. Fossil spores from the end-Permian further support the theory:[citation needed] many show deformities that could have been caused by ultraviolet radiation, which would have been more intense after hydrogen sulfide emissions weakened the ozone layer.

The supercontinent Pangaea

About halfway through the Permian (in the Kungurian age of the Permian's Cisuralian epoch), all the continents joined to form the supercontinent Pangaea, surrounded by the superocean Panthalassa, although blocks that are now parts of Asia did not join the supercontinent until very late in the Permian.[125] This configuration severely decreased the extent of shallow aquatic environments, the most productive part of the seas, and exposed formerly isolated organisms of the rich continental shelves to competition from invaders. Pangaea's formation would also have altered both oceanic circulation and atmospheric weather patterns, creating seasonal monsoons near the coasts and an arid climate in the vast continental interior.[citation needed]

Marine life suffered very high but not catastrophic rates of extinction after the formation of Pangaea (see the diagram "Marine genus biodiversity" at the top of this article), almost as high as in some of the "Big Five" mass extinctions. The formation of Pangaea seems not to have caused a significant rise in extinction levels on land. In fact, most of the advance of the therapsids and the increase in their diversity seem to have occurred in the late Permian, after Pangaea was almost complete. It therefore seems likely that Pangaea initiated a long period of increased marine extinctions, but was not directly responsible for the "Great Dying" at the end of the Permian.

Microbes

According to a theory published in 2014 (see also above), a genus of anaerobic methanogenic archaea known as Methanosarcina may have been largely responsible for the event.[126] Evidence suggests that these microbes acquired a new metabolic pathway via gene transfer at about that time, enabling them to efficiently metabolize acetate into methane. This would have led to their exponential reproduction, allowing them to rapidly consume vast deposits of organic carbon that had accumulated in marine sediment. The result would have been a sharp buildup of methane and carbon dioxide in the Earth's oceans and atmosphere. Massive volcanism facilitated this process by releasing large amounts of nickel, a scarce metal which is a cofactor for one of the enzymes involved in producing methane.[119]

Combination of causes

Possible causes supported by strong evidence appear to describe a sequence of catastrophes, each one worse than the last. The Siberian Traps eruptions were bad enough in their own right, but because they occurred near coal beds and the continental shelf, they also triggered very large releases of carbon dioxide and methane.[63] The resultant global warming may have caused perhaps the most severe anoxic event in the oceans' history. According to this theory, the oceans became so anoxic that anaerobic sulfate-reducing organisms dominated ocean chemistry and caused massive emissions of toxic hydrogen sulfide.[63] However, there may be some weak links in this chain of events. The changes in the 13C/12C ratio expected to result from a massive release of methane do not match the patterns seen throughout the Early Triassic.[16] In addition, the types of oceanic thermohaline circulation that may have existed at the end of the Permian are unlikely to have supported deep-sea anoxia.[127]