The Second Industrial Revolution, also known as the Technological Revolution, was a phase of rapid industrialization in the final third of the 19th century and the beginning of the 20th. The First Industrial Revolution, which ended in the early-to-mid 1800s, was followed by a slowdown in macroinventions before the Second Industrial Revolution began around 1870. Though a number of its characteristic events can be traced to earlier innovations in manufacturing, such as the establishment of a machine tool industry, the development of methods for manufacturing interchangeable parts and the invention of the Bessemer process to produce steel, the Second Industrial Revolution is generally dated between 1870 and 1914 (the start of World War I).

Advancements in manufacturing and production technology enabled the widespread adoption of preexisting technological systems such as telegraph and railroad networks, gas and water supply, and sewage systems, which had earlier been confined to a few select cities. The enormous expansion of rail and telegraph lines after 1870 allowed unprecedented movement of people and ideas, culminating in a new wave of globalization. In the same period, new technological systems were introduced, most significantly electric power and telephones. The Second Industrial Revolution continued into the 20th century with early factory electrification and the production line, and ended at the start of World War I.

Overview

The concept was introduced by Patrick Geddes in Cities in Evolution (1910), but David Landes' use of the term in a 1966 essay and in The Unbound Prometheus (1972) standardized scholarly definitions of the term, which was most intensely promoted by Alfred Chandler (1918–2007). However, some scholars continue to express reservations about its use.[3]

Landes (2003) stresses the importance of new technologies, especially the internal combustion engine and petroleum; new materials and substances, including alloys and chemicals; and electricity and communication technologies (such as the telegraph, telephone and radio).

Vaclav Smil called the period 1867–1914 "The Age of Synergy", during which most of the great innovations were developed. Unlike those of the First Industrial Revolution, its inventions and innovations were based on engineering and science.[4]

Industry and technology

A synergy between iron and steel, railroads and coal developed at the beginning of the Second Industrial Revolution. Railroads allowed cheap transportation of materials and products, which in turn led to cheap rails for building more lines. Railroads also benefited from cheap coal for their steam locomotives. This synergy led to the laying of 75,000 miles of track in the U.S. in the 1880s, the largest amount anywhere in world history.[5]

Iron

The hot blast technique, in which the hot flue gas from a blast furnace is used to preheat the combustion air blown into it, was invented and patented by James Beaumont Neilson in 1828 at Wilsontown Ironworks in Scotland. Hot blast was the single most important advance in the fuel efficiency of the blast furnace, as it greatly reduced the fuel consumed in making pig iron, and it was one of the most important technologies developed during the Industrial Revolution.[6] Falling costs for producing wrought iron coincided with the emergence of the railway in the 1830s.

The early hot blast technique used iron as the regenerative heating medium. Iron's repeated expansion and contraction stressed the metal and caused it to fail. Edward Alfred Cowper developed the Cowper stove in 1857.[7] This stove used firebrick as a storage medium, solving the expansion and cracking problem. The Cowper stove could also reach high temperatures, which resulted in very high blast furnace throughput. The Cowper stove is still used in today's blast furnaces.

With the greatly reduced cost of producing pig iron with coke using hot blast, demand grew dramatically and so did the size of blast furnaces.[8][9]

Steel

A diagram of the Bessemer converter. Air blown through holes in the converter bottom creates a violent reaction in the molten pig iron that oxidizes the excess carbon, converting the pig iron to pure iron or steel, depending on the residual carbon.

The Bessemer process, invented by Sir Henry Bessemer, allowed the mass production of steel, increasing the scale and speed of production of this vital material and decreasing the labor requirements. The key principle was the removal of excess carbon and other impurities from pig iron by oxidation with air blown through the molten iron. The oxidation also raises the temperature of the iron mass and keeps it molten.
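In simplified form, the main oxidation reactions during the blow can be written as below. This is a textbook-level summary, not a full account: it omits slag chemistry and the later burning of carbon monoxide at the converter mouth. All three reactions release heat, which is why the charge stays molten without external fuel.

```latex
\begin{aligned}
\mathrm{Si} + \mathrm{O_2} &\rightarrow \mathrm{SiO_2} \\
2\,\mathrm{Mn} + \mathrm{O_2} &\rightarrow 2\,\mathrm{MnO} \\
2\,\mathrm{C} + \mathrm{O_2} &\rightarrow 2\,\mathrm{CO}
\end{aligned}
```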

The "acid" Bessemer process had a serious limitation in that it required relatively scarce hematite ore,[10] which is low in phosphorus. Sidney Gilchrist Thomas developed a more sophisticated process to eliminate phosphorus from iron. Collaborating with his cousin Percy Gilchrist, a chemist at the Blaenavon Ironworks, Wales, he patented his process in 1878;[11] Bolckow Vaughan & Co. in Yorkshire was the first company to use it.[12] His process was especially valuable on the continent of Europe, where the proportion of phosphoric iron was much greater than in England, and in both Belgium and Germany the inventor's name became more widely known than in his own country. In America, although non-phosphoric iron largely predominated, an immense interest was taken in the invention.[12]

The Barrow Hematite Steel Company operated 18 Bessemer converters and owned the largest steelworks in the world at the turn of the 20th century.

The next great advance in steel making was the Siemens-Martin process. Sir Charles William Siemens developed his regenerative furnace in the 1850s, for which he claimed in 1857 to be able to recover enough heat to save 70–80% of the fuel. The furnace operated at a high temperature by regeneratively preheating the fuel and air for combustion. Through this method, an open-hearth furnace can reach temperatures high enough to melt steel, but Siemens did not initially use it in that manner.

French engineer Pierre-Émile Martin was the first to take out a license for the Siemens furnace and apply it to the production of steel in 1865. The Siemens-Martin process complemented rather than replaced the Bessemer process. Its main advantages were that it did not expose the steel to excessive nitrogen (which would make the steel brittle), that it was easier to control, and that it permitted the melting and refining of large amounts of scrap steel, lowering steel production costs and recycling an otherwise troublesome waste material. It became the leading steelmaking process by the early 20th century.

The availability of cheap steel allowed the building of larger bridges, railroads, skyscrapers, and ships.[13] Other important steel products, also made using the open-hearth process, were steel cable, steel rod and sheet steel, which enabled large, high-pressure boilers, and high-tensile-strength steel for machinery, which enabled much more powerful engines, gears and axles than were previously possible. With large amounts of steel it became possible to build much more powerful guns and gun carriages, tanks, armored fighting vehicles and naval ships.

Rail

A rail rolling mill in Donetsk, 1887.

The increase in steel production from the 1860s meant that rails could finally be made from steel at a competitive cost. Being a much more durable material, steel steadily replaced iron as the standard for railway rail, and because of its greater strength, longer rails could now be rolled. Wrought iron was soft and contained flaws caused by included dross. Iron rails also could not support heavy locomotives and were damaged by hammer blow. The first to make durable rails of steel rather than wrought iron was Robert Forester Mushet at the Darkhill Ironworks, Gloucestershire, in 1857.

The first of his steel rails was sent to Derby Midland railway station. It was laid in part of the station approach where the iron rails had to be renewed at least every six months, and occasionally every three. Six years later, in 1863, the rail seemed as perfect as ever, although some 700 trains had passed over it daily.[14] This provided the basis for the accelerated construction of rail transportation throughout the world in the late nineteenth century. Steel rails lasted over ten times longer than iron ones,[15] and with the falling cost of steel, heavier rails were used. This allowed the use of more powerful locomotives, which could pull longer trains, and longer rail cars, all of which greatly increased the productivity of railroads.[16] Rail became the dominant form of transport infrastructure throughout the industrialized world,[17] driving the steady decrease in shipping costs seen for the rest of the century.[18]

Electrification

The theoretical and practical basis for the harnessing of electric power was laid by the scientist and experimentalist Michael Faraday. Through his research on the magnetic field around a conductor carrying a direct current, Faraday established the basis for the concept of the electromagnetic field in physics.[19][20] His inventions of electromagnetic rotary devices were the foundation of the practical use of electricity in technology.

U.S. Patent #223,898: Electric Lamp. Issued January 27, 1880.

In 1881, Sir Joseph Swan, inventor of the first feasible incandescent light bulb, supplied about 1,200 Swan incandescent lamps to the Savoy Theatre in the City of Westminster, London, which was the first theatre, and the first public building in the world, to be lit entirely by electricity.[21][22] Swan's lightbulb had already been used in 1879 to light Mosley Street, in Newcastle upon Tyne, the first electrical street lighting installation in the world.[23][24] This set the stage for the electrification of industry and the home. The first large-scale central distribution plant opened at Holborn Viaduct in London in 1882,[25] followed by one at Pearl Street Station in New York City.[26]

Three-phase rotating magnetic field of an AC motor. The three poles are each connected to a separate wire, and each wire carries current 120 degrees apart in phase. Arrows show the resulting magnetic force vectors. Three-phase current is used in commerce and industry.
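The caption's point about currents 120 degrees apart can be checked numerically. The minimal sketch below assumes idealized coils whose magnetic fields are proportional to their currents and whose axes sit at 0, 120 and 240 degrees; under that assumption the three currents always sum to zero, while their combined field keeps a constant magnitude (1.5 times one coil's peak) and rotates with the supply. The function names are hypothetical, chosen only for this illustration.

```python
import math

def phase_currents(theta: float, amplitude: float = 1.0) -> list[float]:
    """Instantaneous currents of a balanced three-phase supply at electrical
    angle theta (radians); each phase lags the previous one by 120 degrees."""
    return [amplitude * math.cos(theta - k * 2 * math.pi / 3) for k in range(3)]

def resultant_field(theta: float) -> tuple[float, float]:
    """Vector sum of the three coil fields, assuming each coil's field is
    proportional to its current and points along its axis (0, 120, 240 deg)."""
    bx = by = 0.0
    for k, current in enumerate(phase_currents(theta)):
        axis = k * 2 * math.pi / 3
        bx += current * math.cos(axis)
        by += current * math.sin(axis)
    return bx, by

for deg in (0, 45, 90, 135):
    theta = math.radians(deg)
    bx, by = resultant_field(theta)
    print(f"{deg:3d} deg: sum of currents {sum(phase_currents(theta)):+.3f}, "
          f"field magnitude {math.hypot(bx, by):.3f}")
```

Because the field direction follows the electrical angle while its strength stays fixed, a rotor placed in it is pulled around steadily, which is the principle behind the AC induction motor mentioned in this section.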

The first modern power station in the world was built by the English electrical engineer Sebastian de Ferranti at Deptford. Built on an unprecedented scale and pioneering the use of high-voltage (10,000 V) alternating current, it generated 800 kilowatts and supplied central London. On its completion in 1891, it delivered high-voltage AC power that was then "stepped down" by transformers for consumer use on each street. Electrification allowed the final major developments in manufacturing methods of the Second Industrial Revolution, namely the assembly line and mass production.[27]
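Why high voltage mattered can be shown with the standard resistive-loss relation: for a fixed power P = VI, raising the voltage lowers the current, and the heat lost in the line grows as I²R. The sketch below takes the 800 kW figure from the text but assumes a hypothetical 1-ohm line and unity power factor; the resistance is illustrative only, not Deptford's actual value, and `line_loss_watts` is a name invented for this example.

```python
def line_loss_watts(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Resistive (I^2 * R) loss when delivering power_w at voltage_v through a
    line of resistance_ohm, assuming unity power factor (a simplification)."""
    current_a = power_w / voltage_v
    return current_a ** 2 * resistance_ohm

POWER_W = 800_000           # Deptford's output, as stated in the text
LINE_RESISTANCE_OHM = 1.0   # hypothetical line resistance, for illustration only

for volts in (10_000, 1_000, 100):
    loss = line_loss_watts(POWER_W, volts, LINE_RESISTANCE_OHM)
    print(f"{volts:>6} V: current {POWER_W / volts:>6.0f} A, "
          f"line loss {loss / 1000:>8.1f} kW")
```

Each tenfold increase in voltage cuts the loss a hundredfold; at 100 V the computed loss would exceed the power being sent, meaning such a line could not deliver it at all. Transformers then lower the voltage to safe levels near the consumer.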

Electrification was called "the most important engineering achievement of the 20th century" by the National Academy of Engineering.[28] Electric lighting in factories greatly improved working conditions, eliminating the heat and pollution caused by gas lighting and reducing the fire hazard so much that the cost of electricity for lighting was often offset by lower fire insurance premiums. Frank J. Sprague developed the first successful DC motor in 1886. By 1889, 110 electric street railways were either using his equipment or planning to do so. The electric street railway became a major form of infrastructure before 1920. The AC induction motor was developed in the 1890s and soon began to be used in the electrification of industry.[29] Household electrification did not become common until the 1920s, and then only in cities. Fluorescent lighting was commercially introduced at the 1939 World's Fair.

Electrification also allowed the inexpensive production of electro-chemicals, such as aluminium, chlorine, sodium hydroxide, and magnesium.[30]

Machine tools

A graphic representation of formulas for the pitches of threads of screw bolts.

The use of machine tools began with the onset of the First Industrial Revolution. The increase in mechanization required more metal parts, which were usually made of cast iron or wrought iron; hand working lacked precision and was a slow and expensive process. One of the first machine tools was John Wilkinson's boring machine, which bored a precise hole in James Watt's first steam engine in 1774. Advances in the accuracy of machine tools can be traced to Henry Maudslay and were refined by Joseph Whitworth. Standardization of screw threads began with Henry Maudslay around 1800, when the modern screw-cutting lathe made interchangeable V-thread machine screws a practical commodity.

In 1841, Joseph Whitworth created a design that, through its adoption by many British railroad companies, became the world's first national machine tool standard, British Standard Whitworth.[31] From the 1840s through the 1860s, this standard was often used in the United States and Canada as well, alongside myriad intra- and inter-company standards.

The importance of machine tools to mass production is shown by the fact that production of the Ford Model T used 32,000 machine tools, most of which were powered by electricity.[32] Henry Ford is quoted as saying that mass production would not have been possible without electricity because it allowed placement of machine tools and other equipment in the order of the work flow.[33]

Paper making

The first paper making machine was the Fourdrinier machine, built by Sealy and Henry Fourdrinier, stationers in London. In 1800, Matthias Koops, working in London, investigated the idea of using wood to make paper, and he began his printing business a year later. However, his enterprise was unsuccessful due to the prohibitive cost at the time.[34][35][36] In the 1840s, Charles Fenerty in Nova Scotia and Friedrich Gottlob Keller in Saxony each invented a successful machine that extracted the fibres from wood (as had been done with rags) and made paper from them. This started a new era for paper making.[37] Together with the invention of the fountain pen and the mass-produced pencil of the same period, and in conjunction with the advent of the steam-driven rotary printing press, wood-based paper caused a major transformation of 19th-century economy and society in industrialized countries. With the introduction of cheaper paper, schoolbooks, fiction, non-fiction, and newspapers gradually became available by 1900. Cheap wood-based paper also allowed people to keep personal diaries and write letters, and so, by 1850, the clerk, or writer, ceased to be a high-status job. By the 1880s chemical processes for paper manufacture were in use, becoming dominant by 1900.

Petroleum

The petroleum industry, both production and refining, began in 1848 with the first oil works in Scotland. The chemist James Young set up a small business refining crude oil in 1848. Young found that by slow distillation he could obtain a number of useful liquids from it, one of which he named "paraffine oil" because at low temperatures it congealed into a substance resembling paraffin wax.[38] In 1850 Young built the first truly commercial oil-works and oil refinery in the world at Bathgate, using oil extracted from locally mined torbanite, shale, and bituminous coal to manufacture naphtha and lubricating oils; paraffin for fuel use and solid paraffin were not sold until 1856.

Cable tool drilling was developed in ancient China and was used for drilling brine wells. The salt domes also held natural gas, which some wells produced and which was used to evaporate the brine. Chinese well drilling technology was introduced to Europe in 1828.[39]

Although there were many efforts in the mid-19th century to drill for oil, Edwin Drake's 1859 well near Titusville, Pennsylvania, is considered the first "modern oil well".[40] Drake's well touched off a major boom in oil production in the United States.[41] Drake learned of cable tool drilling from Chinese laborers in the U.S.[42] The first primary product was kerosene for lamps and heaters.[30][43] Similar developments around Baku fed the European market.

Kerosene lighting was much more efficient and less expensive than vegetable oils, tallow and whale oil. Although town gas lighting was available in some cities, kerosene produced a brighter light until the invention of the gas mantle. Both were replaced by electricity for street lighting following the 1890s and for households during the 1920s. Gasoline was an unwanted byproduct of oil refining until automobiles were mass-produced after 1914, and gasoline shortages appeared during World War I. The invention of the Burton process for thermal cracking doubled the yield of gasoline, which helped alleviate the shortages.[43]

Chemical

The BASF-chemical factories in Ludwigshafen, Germany, 1881

Synthetic dye was discovered by English chemist William Henry Perkin in 1856. At the time, chemistry was still in a quite primitive state: determining the arrangement of the elements in compounds was difficult, and the chemical industry was in its infancy. Perkin accidentally discovered that aniline could be partly transformed into a crude mixture which, when extracted with alcohol, produced a substance with an intense purple colour. He scaled up production of the new "mauveine" and commercialized it as the world's first synthetic dye.[44]

After the discovery of mauveine, many new aniline dyes appeared (some discovered by Perkin himself), and factories producing them were constructed across Europe. Towards the end of the century, Perkin's firm and other British companies found their research and development efforts increasingly eclipsed by the German chemical industry, which became world dominant by 1914.

Maritime technology

The launch of Great Britain, which was advanced for her time, 1843.

This era saw the birth of the modern ship as disparate technological advances came together.

The screw propeller was introduced in 1835 by Francis Pettit Smith, who discovered a new way of building propellers by accident. Up to that time, propellers were literally screws of considerable length. But during the testing of a boat propelled by one, the screw snapped off, leaving a fragment shaped much like a modern boat propeller, and the boat moved faster with the broken propeller.[45] Navies took up the screw's superiority over paddles. Trials with Smith's SS Archimedes, the first screw-driven steamship, led to the famous tug-of-war competition in 1845 between the screw-driven HMS Rattler and the paddle steamer HMS Alecto, in which the former pulled the latter backward at 2.5 knots (4.6 km/h).

The first seagoing iron steamboat was built by Horseley Ironworks and named the Aaron Manby. It also used an innovative oscillating engine for power. The boat was built at Tipton using temporary bolts, disassembled for transportation to London, and reassembled on the Thames in 1822, this time using permanent rivets.

Other technological developments followed, including the invention of the surface condenser, which allowed boilers to run on purified water rather than salt water, eliminating the need to stop to clean them on long sea journeys. The Great Western,[46][47][48] built by engineer Isambard Kingdom Brunel, was the longest ship in the world at 236 ft (72 m) with a 250-foot (76 m) keel and was the first to prove that transatlantic steamship services were viable. The ship was constructed mainly from wood, but Brunel added bolts and iron diagonal reinforcements to maintain the keel's strength. In addition to its steam-powered paddle wheels, the ship carried four masts for sails.

Brunel followed this up with the Great Britain, launched in 1843 and considered the first modern ship built of metal rather than wood, powered by an engine rather than wind or oars, and driven by propeller rather than paddle wheel.[49] Brunel's vision and engineering innovations made the building of large-scale, propeller-driven, all-metal steamships a practical reality, but the prevailing economic and industrial conditions meant that it would be several decades before transoceanic steamship travel emerged as a viable industry.

Highly efficient multiple-expansion steam engines began to be used on ships, allowing them to carry less coal and more freight.[50] The oscillating engine was first built by Aaron Manby and Joseph Maudslay in the 1820s as a type of direct-acting engine designed to achieve further reductions in engine size and weight. Oscillating engines had the piston rods connected directly to the crankshaft, dispensing with the need for connecting rods. To achieve this, the engine cylinders were not immobile as in most engines but were secured in the middle by trunnions, which allowed the cylinders themselves to pivot back and forth as the crankshaft rotated, hence the term oscillating.

It was John Penn, engineer for the Royal Navy, who perfected the oscillating engine. One of his earliest engines was the grasshopper beam engine. In 1844 he replaced the engines of the Admiralty yacht HMS Black Eagle with oscillating engines of double the power, without increasing either the weight or the space occupied, an achievement which broke the naval supply dominance of Boulton & Watt and Maudslay, Son & Field. Penn also introduced the trunk engine for driving screw propellers in vessels of war. HMS Encounter (1846) and HMS Arrogant (1848) were the first ships to be fitted with such engines, and such was their efficacy that by the time of Penn's death in 1878, the engines had been fitted in 230 ships and were the first mass-produced, high-pressure and high-revolution marine engines.[51]

The revolution in naval design led to the first modern battleships in the 1870s, evolved from the ironclad design of the 1860s. The Devastation-class turret ships were built for the British Royal Navy as the first class of ocean-going capital ship that did not carry sails, and the first whose entire main armament was mounted on top of the hull rather than inside it.

Rubber

The vulcanization of rubber by American Charles Goodyear and Englishman Thomas Hancock in the 1840s paved the way for a growing rubber industry, especially the manufacture of rubber tyres.[52] John Boyd Dunlop developed the first practical pneumatic tyre in 1887 in South Belfast. Willie Hume demonstrated the supremacy of Dunlop's newly invented pneumatic tyres in 1889, winning the tyre's first-ever races in Ireland and then England.[53][54] Dunlop's development of the pneumatic tyre arrived at a crucial time in the development of road transport, and commercial production began in late 1890.

Bicycles

The modern bicycle was designed by the English engineer Harry John Lawson in 1876, although it was John Kemp Starley who produced the first commercially successful safety bicycle a few years later.[55] Its popularity soon grew, causing the bike boom of the 1890s.

Road networks improved greatly in the period, using the Macadam method pioneered by Scottish engineer John Loudon McAdam, and hard-surfaced roads were built around the time of the bicycle craze of the 1890s. Modern tarmac was patented by British civil engineer Edgar Purnell Hooley in 1901.[56]

Automobile

German inventor Karl Benz patented the world's first automobile in 1886. It featured wire wheels (unlike carriages' wooden ones)[57] and a four-stroke engine of his own design between the rear wheels, with a very advanced coil ignition[58] and evaporative cooling rather than a radiator.[58] Power was transmitted by means of two roller chains to the rear axle. It was the first automobile designed entirely as such to generate its own power, not simply a motorized stagecoach or horse carriage. Benz began to sell the vehicle (advertising it as the Benz Patent Motorwagen) in the late summer of 1888, making it the first commercially available automobile in history.

Henry Ford built his first car in 1896 and worked as a pioneer in the industry, with others who would eventually form their own companies, until the founding of Ford Motor Company in 1903.[27] Ford and others at the company struggled to scale up production in keeping with Henry Ford's vision of a car designed and manufactured on a scale that would make it affordable to the average worker.[27] The solution that Ford Motor developed was a completely redesigned factory with machine tools and special-purpose machines systematically positioned in the work sequence. All unnecessary human motions were eliminated by placing all work and tools within easy reach and, where practical, on conveyors, forming the assembly line; the complete process was called mass production. This was the first time in history that a large, complex product consisting of 5,000 parts had been produced at a rate of hundreds of thousands per year.[27][32] The savings from mass production methods allowed the price of the Model T to decline from $780 in 1910 to $360 in 1916. In 1924, 2 million Model Ts were produced, retailing at $290 each.[59]

Applied science

Applied science opened many opportunities. By the middle of the 19th century there was a scientific understanding of chemistry and a fundamental understanding of thermodynamics, and by the last quarter of the century both of these sciences were near their present-day basic form. Thermodynamic principles were used in the development of physical chemistry. Understanding chemistry greatly aided the development of basic inorganic chemical manufacturing and the aniline dye industries.

The science of metallurgy was advanced through the work of Henry Clifton Sorby and others. Sorby pioneered the study of iron and steel under the microscope, which paved the way for a scientific understanding of metal and the mass production of steel. In 1863 he used etching with acid to study the microscopic structure of metals and was the first to understand that a small but precise quantity of carbon gave steel its strength.[60] This paved the way for Henry Bessemer and Robert Forester Mushet to develop the method for mass-producing steel.

Other processes were developed for purifying various elements such as chromium, molybdenum, titanium, vanadium and nickel, which could be used for making alloys with special properties, especially with steel. Vanadium steel, for example, is strong and fatigue resistant, and was used in half of all automotive steel.[61] Alloy steels were used for ball bearings, which were used in large-scale bicycle production in the 1880s. Ball and roller bearings also began to be used in machinery. Other important alloys are used at high temperatures, such as in steam turbine blades, and stainless steels are used for corrosion resistance.

The work of Justus von Liebig and August Wilhelm von Hofmann laid the groundwork for modern industrial chemistry. Liebig is considered the "father of the fertilizer industry" for his discovery of nitrogen as an essential plant nutrient, and he went on to establish Liebig's Extract of Meat Company, which produced the Oxo meat extract. Hofmann headed a school of practical chemistry in London, under the style of the Royal College of Chemistry, introduced modern conventions for molecular modeling and taught Perkin, who discovered the first synthetic dye.

The science of thermodynamics was developed into its modern form by Sadi Carnot, William Rankine, Rudolf Clausius, William Thomson, James Clerk Maxwell, Ludwig Boltzmann and J. Willard Gibbs. These scientific principles were applied to a variety of industrial concerns, including improving the efficiency of boilers and steam turbines. The work of Michael Faraday and others was pivotal in laying the foundations of the modern scientific understanding of electricity.
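Carnot's central result, which underlies the efficiency work described above, is that no engine running between a hot reservoir at absolute temperature T_h and a cold one at T_c can exceed an efficiency of 1 − T_c/T_h. The sketch below evaluates that bound; the function name and the temperatures are hypothetical examples, not figures for any particular boiler, turbine or engine.

```python
def carnot_efficiency(t_hot_k: float, t_cold_k: float) -> float:
    """Upper bound on the efficiency of any heat engine working between two
    reservoirs at the given absolute temperatures (kelvin), per Carnot."""
    if not 0 < t_cold_k < t_hot_k:
        raise ValueError("require 0 < t_cold_k < t_hot_k (kelvin)")
    return 1.0 - t_cold_k / t_hot_k

# Hypothetical operating points, chosen only for illustration.
print(f"cooler steam: {carnot_efficiency(450.0, 300.0):.1%}")
print(f"hotter steam: {carnot_efficiency(800.0, 300.0):.1%}")
```

The bound rises with the hot-side temperature, which is one reason higher steam pressures and temperatures, and later the diesel engine's high compression temperatures, were pursued as routes to greater efficiency.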

Scottish scientist James Clerk Maxwell was particularly influential—his discoveries ushered in the era of modern physics.[62] His most prominent achievement was to formulate a set of equations that described electricity, magnetism, and optics as manifestations of the same phenomenon, namely the electromagnetic field.[63] The unification of light and electrical phenomena led to the prediction of the existence of radio waves and was the basis for the future development of radio technology by Hughes, Marconi and others.[64]
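Maxwell's equations imply that electromagnetic disturbances travel through empty space at a speed of 1/√(μ₀ε₀), where μ₀ and ε₀ are constants measurable in magnetic and electrostatic experiments. The short calculation below uses modern CODATA 2018 values rather than the 19th-century measurements Maxwell had available; it shows that the predicted speed matches the speed of light, the agreement that identified light as an electromagnetic wave. The helper name `wave_speed` is hypothetical.

```python
import math

# CODATA 2018 SI values; mu_0 is very close to the classical 4*pi*1e-7 H/m.
MU_0 = 1.25663706212e-6       # vacuum permeability, H/m
EPSILON_0 = 8.8541878128e-12  # vacuum permittivity, F/m

def wave_speed(mu: float, epsilon: float) -> float:
    """Propagation speed 1/sqrt(mu * epsilon) implied by Maxwell's wave equation."""
    return 1.0 / math.sqrt(mu * epsilon)

c = wave_speed(MU_0, EPSILON_0)
print(f"predicted speed: {c:.6e} m/s")  # compare with the measured speed of light
```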

Maxwell himself developed the first durable colour photograph in 1861 and published the first scientific treatment of control theory.[65][66] Control theory is the basis for process control, which is widely used in automation, particularly for process industries, and for controlling ships and airplanes.[67] Control theory was developed to analyze the functioning of centrifugal governors on steam engines. These governors came into use in the late 18th century on wind and water mills to correctly position the gap between mill stones, and were adapted to steam engines by James Watt. Improved versions were used to stabilize automatic tracking mechanisms of telescopes and to control speed of ship propellers and rudders. However, those governors were sluggish and oscillated about the set point. James Clerk Maxwell wrote a paper mathematically analyzing the actions of governors, which marked the beginning of the formal development of control theory. The science was continually improved and evolved into an engineering discipline.
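The sluggishness and oscillation of early governors can be illustrated with a deliberately simplified model: treat the engine and governor together as a damped second-order feedback loop, where the damping ratio `zeta` is a hypothetical stand-in for friction in the governor linkage. This is a textbook linearization chosen for illustration, not a reconstruction of Maxwell's own analysis, and all names and parameter values are assumptions.

```python
def simulate_governor(setpoint: float = 1.0, omega: float = 1.0, zeta: float = 0.2,
                      dt: float = 0.01, t_end: float = 60.0) -> list[float]:
    """Simulate engine speed x under a governor modelled, as a simplifying
    assumption, by the loop x'' + 2*zeta*omega*x' + omega**2 * (x - setpoint) = 0.
    Small zeta means little damping, so the speed overshoots and oscillates
    about the set point before settling. Integrated with semi-implicit Euler."""
    x, v = 0.0, 0.0  # start at rest, below the set point
    speeds = [x]
    for _ in range(int(t_end / dt)):
        a = -2 * zeta * omega * v - omega ** 2 * (x - setpoint)
        v += a * dt  # update the rate of change first (semi-implicit Euler)
        x += v * dt
        speeds.append(x)
    return speeds

lightly_damped = simulate_governor(zeta=0.2)
well_damped = simulate_governor(zeta=1.0)
print(f"peak speed, zeta=0.2: {max(lightly_damped):.3f}")
print(f"peak speed, zeta=1.0: {max(well_damped):.3f}")
```

With light damping the speed swings well past the set point before settling, the behaviour engineers observed; with more damping the overshoot disappears. Relating such settings to stable behaviour is the kind of question formal control theory addresses.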

Fertilizer

Justus von Liebig was the first to understand the importance of ammonia as fertilizer, and promoted the importance of inorganic minerals to plant nutrition. In England, he attempted to implement his theories commercially through a fertilizer created by treating phosphate of lime in bone meal with sulfuric acid. Another pioneer was John Bennet Lawes, who began to experiment on the effects of various manures on plants growing in pots in 1837, leading to a manure formed by treating phosphates with sulphuric acid; this was to be the first product of the nascent artificial manure industry.[68]

The discovery of coprolites in commercial quantities in East Anglia led Fisons and Edward Packard to develop one of the first large-scale commercial fertilizer plants at Bramford and Snape in the 1850s. By the 1870s, superphosphates produced in those factories were being shipped around the world from the port of Ipswich.[69][70]

The Birkeland–Eyde process was developed by Norwegian industrialist and scientist Kristian Birkeland along with his business partner Sam Eyde in 1903,[71] but was soon replaced by the much more efficient Haber process,[72] developed by the Nobel Prize-winning chemists Carl Bosch of BASF and Fritz Haber in Germany.[73] The process combines atmospheric nitrogen (N2) with hydrogen (H2), which today is mostly derived from methane (CH4), in an economically viable synthesis of ammonia (NH3). The ammonia produced by the Haber process is the main raw material for the production of nitric acid.
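In simplified form, the chemistry is the reversible synthesis of ammonia from nitrogen and hydrogen, with the hydrogen in modern plants typically produced from methane by steam reforming followed by the water-gas shift. The summary below omits catalysts, pressures and temperatures; early plants obtained their hydrogen by other routes.

```latex
\begin{aligned}
\mathrm{N_2} + 3\,\mathrm{H_2} &\rightleftharpoons 2\,\mathrm{NH_3} \\
\mathrm{CH_4} + \mathrm{H_2O} &\rightarrow \mathrm{CO} + 3\,\mathrm{H_2} \\
\mathrm{CO} + \mathrm{H_2O} &\rightarrow \mathrm{CO_2} + \mathrm{H_2}
\end{aligned}
```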

Engines and turbines

The steam turbine was developed by Sir Charles Parsons in 1884. His first model was connected to a dynamo that generated 7.5 kW (10 hp) of electricity.[74] The invention of Parsons's steam turbine made cheap and plentiful electricity possible and revolutionized marine transport and naval warfare.[75] By the time of Parsons's death, his turbine had been adopted by all major world power stations.[76] Unlike earlier steam engines, the turbine produced rotary power directly rather than reciprocating power, which required a crank and a heavy flywheel. The turbine's large number of stages allowed high efficiency and reduced its size by 90%. The turbine's first application was in shipping, followed by electric generation in 1903.

The first widely used internal combustion engine was the Otto type of 1876. From the 1880s until electrification, it was successful in small shops because small steam engines were inefficient and required too much operator attention.[4] The Otto engine soon began to be used to power automobiles and remains today's common gasoline engine.

The diesel engine was independently designed by Rudolf Diesel and Herbert Akroyd Stuart in the 1890s using thermodynamic principles with the specific intention of being highly efficient. It took several years to perfect and become popular, but found application in shipping before powering locomotives. It remains the world's most efficient prime mover.[4]

Telecommunications

Major telegraph lines in 1891.

The first commercial telegraph system was installed by Sir William Fothergill Cooke and Charles Wheatstone in May 1837 between Euston railway station and Camden Town in London.[77]

Telegraph networks expanded rapidly throughout the century; the first undersea cable was built by John Watkins Brett between France and England. The Atlantic Telegraph Company was formed in London in 1856 to construct a commercial telegraph cable across the Atlantic Ocean. This was successfully completed on 18 July 1866 by the ship SS Great Eastern, captained by Sir James Anderson, after many mishaps along the way.[78] From the 1850s until 1911, British submarine cable systems dominated the world system. This dominance was set out as a formal strategic goal, which became known as the All Red Line.[79]

The telephone was patented in 1876 by Alexander Graham Bell, and like the early telegraph, it was used mainly to speed business transactions.[80]

As mentioned above, one of the most important scientific advancements in all of history was the unification of light, electricity and magnetism through Maxwell's electromagnetic theory. A scientific understanding of electricity was necessary for the development of efficient electric generators, motors and transformers. David Edward Hughes and Heinrich Hertz both demonstrated and confirmed the phenomenon of electromagnetic waves that had been predicted by Maxwell.[4]

It was the Italian inventor Guglielmo Marconi who successfully commercialized radio at the turn of the century.[81] He founded The Wireless Telegraph & Signal Company in Britain in 1897,[82][83] and in the same year he transmitted Morse code across Salisbury Plain and sent the first wireless communication over open sea.[84] In 1901 he made the first transatlantic transmission, from Poldhu, Cornwall, to Signal Hill, Newfoundland. Marconi built high-powered stations on both sides of the Atlantic and in 1904 began a commercial service transmitting nightly news summaries to subscribing ships.[85]

Sir John Ambrose Fleming's invention of the vacuum tube in 1904 laid the foundation for modern electronics and radio broadcasting. Lee De Forest's subsequent invention of the triode allowed electronic signals to be amplified, which paved the way for radio broadcasting in the 1920s.

Modern business management

Scholars such as Alfred Chandler credit railroads with creating the modern business enterprise. Previously, most businesses had been managed by individual owners or groups of partners, some of whom had little day-to-day involvement in operations. Centralized expertise in the home office was not enough: a railroad required expertise along the whole length of its trackage to deal with daily crises, breakdowns and bad weather. A collision in Massachusetts in 1841 led to calls for safety reform, which in turn led railroads to reorganize into separate departments with clear lines of management authority. When the telegraph became available, companies built telegraph lines along the railroads to keep track of trains.[86]

Railroads involved complex operations, employed extremely large amounts of capital and ran more complicated businesses than anything before them. Consequently, they needed better ways to track costs. For example, to calculate rates they needed to know the cost of a ton-mile of freight. They also needed to keep track of cars, which could go missing for months at a time. This led to what was called "railroad accounting", which was later adopted by steel and other industries and eventually became modern accounting.[87]

Later in the Second Industrial Revolution, Frederick Winslow Taylor and others in America developed the concept of scientific management or Taylorism. Scientific management initially concentrated on reducing the steps taken in performing work (such as bricklaying or shoveling) by using analysis such as time-and-motion studies, but the concepts evolved into fields such as industrial engineering, manufacturing engineering, and business management that helped to completely restructure[citation needed] the operations of factories, and later entire segments of the economy.

Taylor's core principles included:[citation needed]

- replacing rule-of-thumb work methods with methods based on a scientific study of the tasks

- scientifically selecting, training, and developing each employee rather than passively leaving them to train themselves

- providing "detailed instruction and supervision of each worker in the performance of that worker's discrete task"

- dividing work nearly equally between managers and workers, such that the managers apply scientific-management principles to planning the work and the workers actually perform the tasks

Socio-economic impacts

The period from 1870 to 1890 saw the greatest economic growth in so short a period in all previous history. Living standards improved significantly in the newly industrialized countries as the prices of goods fell dramatically due to increases in productivity. These productivity gains also caused unemployment and great upheavals in commerce and industry, with many laborers displaced by machines and many factories, ships and other forms of fixed capital becoming obsolete in a very short time.[50] As a contemporary account put it:

"The economic changes that have occurred during the last quarter of a century, or during the present generation of living men, have unquestionably been more important and more varied than during any period of the world's history".[50]

Crop failures no longer resulted in starvation in areas connected to large markets through transport infrastructure.[50]

Massive improvements in public health and sanitation resulted from public health initiatives, such as the construction of the London sewerage system in the 1860s and the passage of laws that regulated filtered water supplies (the Metropolis Water Act of 1852 introduced regulation of London's water supply companies, including, for the first time, minimum standards of water quality). These measures greatly reduced infection and death rates from many diseases.

By 1870 the work done by steam engines exceeded that done by animal and human power. Horses and mules remained important in agriculture until the development of the internal combustion tractor near the end of the Second Industrial Revolution.[88]

Improvements in steam efficiency, such as triple-expansion steam engines, allowed ships to devote much more of their capacity to freight rather than coal, greatly increasing the volume of international trade. Higher steam engine efficiency also caused the number of steam engines to increase severalfold, raising total coal usage; this phenomenon is called the Jevons paradox.[89]

By 1890 there was an international telegraph network allowing orders to be placed by merchants in England or the US to suppliers in India and China for goods to be transported in efficient new steamships. This, plus the opening of the Suez Canal, led to the decline of the great warehousing districts in London and elsewhere, and the elimination of many middlemen.[50]

The tremendous growth in productivity, transportation networks, industrial production and agricultural output lowered the prices of almost all goods. This led to many business failures and to periods called depressions, which occurred even as the world economy grew.[50] See also: Long Depression.

The factory system centralized production in separate buildings funded and directed by specialists (as opposed to work at home). The division of labor made both unskilled and skilled labor more productive and led to rapid population growth in industrial centers. The shift away from agriculture toward industry had occurred in Britain by the 1730s, when the share of the working population engaged in agriculture fell below 50%, a development that occurred elsewhere (in the Low Countries) only in the 1830s and 1840s. By 1890, the figure had fallen below 10% and the vast majority of the British population was urbanized. The Low Countries and the US reached this milestone only in the 1950s.[90]

Like the First Industrial Revolution, the Second supported population growth and saw most governments protect their national economies with tariffs, although Britain retained its belief in free trade throughout this period. The wide-ranging social impact of both revolutions included the remaking of the working class as new technologies appeared. The changes resulted in the creation of a larger, increasingly professional middle class, the decline of child labor and the dramatic growth of a consumer-based, material culture.[91]

By 1900, the leader in industrial production was Britain, with 24% of the world total, followed by the US (19%), Germany (13%), Russia (9%) and France (7%). Europe as a whole accounted for 62%.[92]

The great inventions and innovations of the Second Industrial Revolution are part of modern life, and they continued to drive the economy until after World War II. Relatively few major innovations occurred in the post-war era; among them are computers, semiconductors, the fiber optic network and the Internet, cellular telephones, combustion turbines (jet engines) and the Green Revolution.[93] Although commercial aviation existed before World War II, it became a major industry only after the war.

United Kingdom

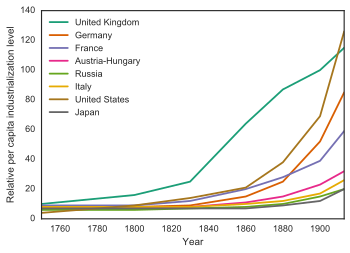

Relative per capita levels of industrialization, 1750–1910.[94]

New products and services were introduced that greatly increased international trade. Improvements in steam engine design and the wide availability of cheap steel meant that slow sailing ships were replaced with faster steamships, which could handle more trade with smaller crews. The chemical industries also moved to the forefront. Britain invested less in technological research than the U.S. and Germany, which caught up with it.

The development of more intricate and efficient machines, along with mass production techniques (after 1910), greatly expanded output and lowered production costs. As a result, production often exceeded domestic demand. Among the new conditions, most evident in Britain, the forerunner of Europe's industrial states, were the long-term effects of the severe Long Depression of 1873–1896, which followed fifteen years of great economic instability. Businesses in practically every industry suffered lengthy periods of low and falling profit rates and price deflation after 1873.

United States

The U.S. had its highest economic growth rate in the last two decades of the Second Industrial Revolution;[95] however, population growth slowed while productivity growth peaked around the mid-20th century. The Gilded Age in America was based on heavy industry such as factories, railroads and coal mining. An iconic event was the opening of the First Transcontinental Railroad in 1869, which provided six-day service between the East Coast and San Francisco.[96]

During the Gilded Age, American railroad mileage tripled between 1860 and 1880, and tripled again by 1920, opening new areas to commercial farming, creating a truly national marketplace and inspiring a boom in coal mining and steel production. The great trunk railroads' voracious appetite for capital facilitated the consolidation of the nation's financial market on Wall Street. By 1900, economic concentration had extended into most branches of industry: a few large corporations, some organized as "trusts" (e.g. Standard Oil), dominated steel, oil, sugar, meatpacking and the manufacture of agricultural machinery. Another major component of this industrial expansion was the new methods for manufacturing steel, especially the Bessemer process. The first billion-dollar corporation was United States Steel, formed in 1901 by financier J. P. Morgan, who purchased and consolidated steel firms built by Andrew Carnegie and others.[97]

Increased mechanization of industry and improvements in worker efficiency raised the productivity of factories while undercutting the need for skilled labor. Mechanical innovations such as batch and continuous processing became much more prominent in factories. This mechanization made some factories an assemblage of unskilled laborers performing simple and repetitive tasks under the direction of skilled foremen and engineers. In some cases, mechanization replaced low-skilled workers altogether. Nevertheless, the numbers of both unskilled and skilled workers increased, and their wage rates grew.[98] Engineering colleges were established to feed the enormous demand for expertise. Together with the rapid growth of small business, this produced a rapidly growing new middle class, especially in northern cities.[99]

Employment distribution

In the early 1900s there was a disparity between the levels of employment in the northern and southern United States. On average, states in the North had both a larger population and a higher rate of employment than states in the South. The higher rate of employment can be seen by comparing 1909 employment figures with each state's population in the 1910 census. The difference was most notable in the states with the largest populations, such as New York and Pennsylvania, each of which had roughly 5 percent more of the total US workforce than would be expected from its population. Conversely, the southern states with the best actual rates of employment, North Carolina and Georgia, had roughly 2 percent less of the workforce than their populations would suggest. When all southern and all northern states are averaged, the trend holds, with the North over-performing by about 2 percent and the South under-performing by about 1 percent.[100]

Germany

The German Empire came to rival Britain as Europe's primary industrial nation during this period. Because Germany industrialized later, it was able to model its factories on those of Britain, making more efficient use of its capital and avoiding legacy methods in its leap to the technological frontier. Germany invested more heavily than Britain in research, especially in chemistry, motors and electricity. The German concern system (Konzerne), being highly concentrated, was able to make more efficient use of capital. Germany was also not weighed down by an expensive worldwide empire that needed defense. Following its annexation of Alsace-Lorraine in 1871, it absorbed parts of what had been France's industrial base.[101]

By 1900 the German chemical industry dominated the world market for synthetic dyes. The three major firms, BASF, Bayer and Hoechst, produced several hundred different dyes, along with five smaller firms. In 1913 these eight firms produced almost 90 percent of the world supply of dyestuffs and sold about 80 percent of their production abroad. The three major firms had also integrated upstream into the production of essential raw materials, and they began to expand into other areas of chemistry such as pharmaceuticals, photographic film, agricultural chemicals and electrochemicals. Top-level decision-making was in the hands of professional salaried managers, leading Chandler to call the German dye companies "the world's first truly managerial industrial enterprises".[102] Research produced many spin-offs, such as the pharmaceutical industry, which emerged from chemical research.[103]

Belgium

Belgium during the Belle Époque showed the value of railways in speeding the Second Industrial Revolution. After 1830, when it broke away from the Netherlands and became a new nation, it decided to stimulate industry. It planned and funded a simple cruciform system that connected major cities, ports and mining areas and linked to neighboring countries. Belgium thus became the railway center of the region. The system was soundly built along British lines, so that profits were low but the infrastructure necessary for rapid industrial growth was put in place.[104]

Alternative uses

Other periods have also been called a "second industrial revolution". Industrial revolutions may be renumbered by counting earlier developments, such as the rise of medieval technology in the 12th century, ancient Chinese technology during the Tang dynasty, or ancient Roman technology, as the first. "Second industrial revolution" has been used in the popular press and by technologists or industrialists to refer to the changes that followed the spread of new technology after World War I. Excitement and debate over the dangers and benefits of the Atomic Age were more intense and lasting than those over the Space Age, but both were predicted to lead to another industrial revolution. At the start of the 21st century,[105] the term "second industrial revolution" was used to describe the anticipated effects of hypothetical molecular nanotechnology systems on society. In this more recent scenario, such systems would render most of today's manufacturing processes obsolete, transforming all facets of the modern economy.