https://en.wikipedia.org/wiki/Theories_of_second-language_acquisition

The main purpose of theories of second-language acquisition (SLA) is to shed light on how people who already know one language learn a second language. The field draws on contributions from various disciplines, such as linguistics, sociolinguistics, psychology, cognitive science, neuroscience, and education. These contributions can be grouped into four major research strands: (a) linguistic dimensions of SLA, (b) cognitive (but not linguistic) dimensions of SLA, (c) socio-cultural dimensions of SLA, and (d) instructional dimensions of SLA. While the orientation of each research strand is distinct, they have in common that they can help identify conditions that facilitate successful language learning. Acknowledging the contributions of each perspective and the interdisciplinarity among the fields, more and more second-language researchers are now trying to take a broader view of the complexities of second-language acquisition.

History

As second-language acquisition began as an interdisciplinary field, it is hard to pin down a precise starting date. However, there are two publications in particular that are seen as instrumental to the development of the modern study of SLA: (1) Corder's 1967 essay "The Significance of Learners' Errors", and (2) Selinker's 1972 article "Interlanguage". Corder's essay rejected a behaviorist account of SLA and suggested that learners made use of intrinsic internal linguistic processes; Selinker's article argued that second-language learners possess their own individual linguistic systems that are independent from both the first and second languages.

In the 1970s the general trend in SLA was toward research that explored the ideas of Corder and Selinker and refuted behaviorist theories of language acquisition. Examples include research into error analysis, studies of transitional stages of second-language ability, and the "morpheme studies" investigating the order in which learners acquired linguistic features. The decade was dominated by naturalistic studies of people learning English as a second language.

By the 1980s, the theories of Stephen Krashen had become the prominent paradigm in SLA. In his theories, often collectively known as the Input Hypothesis, Krashen suggested that language acquisition is driven solely by comprehensible input, that is, language input that learners can understand. Krashen's model was influential in the field of SLA and also had a large influence on language teaching, but it left some important processes in SLA unexplained. Research in the 1980s was characterized by the attempt to fill in these gaps. Some approaches included White's descriptions of learner competence, and Pienemann's use of speech processing models and lexical functional grammar to explain learner output. This period also saw the beginning of approaches based in other disciplines, such as the psychological approach of connectionism.

The 1990s saw a host of new theories introduced to the field, such as Michael Long's interaction hypothesis, Merrill Swain's output hypothesis, and Richard Schmidt's noticing hypothesis. However, the two main areas of research interest were linguistic theories of SLA based upon Noam Chomsky's universal grammar, and psychological approaches such as skill acquisition theory and connectionism. The latter category also saw the new theories of processability and input processing in this time period. The 1990s also saw the introduction of sociocultural theory, an approach to explain second-language acquisition in terms of the social environment of the learner.

In the 2000s research was focused on much the same areas as in the 1990s, with research split into two main camps of linguistic and psychological approaches. VanPatten and Benati do not see this state of affairs as changing in the near future, pointing to the support both areas of research have in the wider fields of linguistics and psychology, respectively.

Universal grammar

From the field of linguistics, the most influential theory by far has been Chomsky's theory of Universal Grammar (UG). The core of this theory lies in the existence of an innate universal grammar, an assumption grounded in the poverty of the stimulus. The UG model of principles (basic properties which all languages share) and parameters (properties which can vary between languages) has been the basis for much second-language research.

From a UG perspective, learning the grammar of a second language is simply a matter of setting the correct parameters. Take the pro-drop parameter, which dictates whether or not sentences must have a subject in order to be grammatically correct. This parameter can have two values: positive, in which case sentences do not necessarily need a subject, and negative, in which case subjects must be present. In German the sentence "Er spricht" (he speaks) is grammatical, but the sentence "Spricht" (speaks) is ungrammatical. In Italian, however, the sentence "Parla" (speaks) is perfectly normal and grammatically correct.

A German speaker learning Italian would only need to deduce that subjects are optional from the language he hears, and then set his pro-drop parameter for Italian accordingly. Once he has set all the parameters in the language correctly, then from a UG perspective he can be said to have learned Italian, i.e. he will always produce perfectly correct Italian sentences.

Universal Grammar also provides a succinct explanation for much of the phenomenon of language transfer. Spanish learners of English who make the mistake "Is raining" instead of "It is raining" have not yet set their pro-drop parameters correctly and are still using the same setting as in Spanish.
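The parameter-setting idea and the transfer example above can be illustrated with a toy sketch. It is not a model from the UG literature: the `Grammar` class, the `is_acceptable` function, and the reduction of a whole grammar to a single boolean are all assumptions made purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Grammar:
    """Hypothetical one-parameter grammar (illustration only)."""
    name: str
    pro_drop: bool  # True: subjects may be omitted; False: subjects are required


def is_acceptable(grammar: Grammar, has_overt_subject: bool) -> bool:
    """A sentence is accepted if it has a subject, or if the grammar allows dropping it."""
    return has_overt_subject or grammar.pro_drop


german = Grammar("German", pro_drop=False)
italian = Grammar("Italian", pro_drop=True)

# A Spanish speaker's early English, still using the Spanish (positive) setting.
learner_english = Grammar("L2 English with L1 Spanish setting", pro_drop=True)

print(is_acceptable(german, has_overt_subject=False))           # "Spricht"
print(is_acceptable(italian, has_overt_subject=False))          # "Parla"
print(is_acceptable(learner_english, has_overt_subject=False))  # "Is raining"
```

In this sketch, "learning" the parameter amounts to flipping `pro_drop` for the new language, which also makes the criticism below concrete: the sketch says nothing about how the learner arrives at the new value.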

The main shortcoming of Universal Grammar in describing second-language acquisition is that it does not deal at all with the psychological processes involved with learning a language. UG scholarship is only concerned with whether parameters are set or not, not with how they are set. Schachter (1988) is a useful critique of research testing the role of Universal Grammar in second language acquisition.

Input hypothesis

Learners' most direct source of information about the target language is the target language itself. When they come into direct contact with the target language, this is referred to as "input". When learners process that language in a way that can contribute to learning, this is referred to as "intake". However, the input must be at a level that is comprehensible to them. In his monitor theory, Krashen advanced the concept that language input should be at the "i+1" level, just beyond what the learner can fully understand; this input is comprehensible, but contains structures that are not yet fully understood. This has been criticized on the basis that there is no clear definition of i+1, and that factors other than structural difficulty (such as interest or presentation) can affect whether input is actually turned into intake. The concept has been quantified, however, in vocabulary acquisition research; Nation reviews various studies which indicate that about 98% of the words in running text should be previously known in order for extensive reading to be effective.
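The 98% figure refers to lexical coverage, the share of running words in a text that the reader already knows. A minimal sketch of that calculation follows; the function name `lexical_coverage`, the simple regular-expression tokenizer, and the sample sentence are assumptions for illustration, and real studies typically count word families rather than raw word forms.

```python
import re


def lexical_coverage(text: str, known_words: set[str]) -> float:
    """Return the fraction of running words (tokens) in `text` found in `known_words`."""
    # Naive tokenizer: lowercase runs of letters and apostrophes (an assumption).
    tokens = re.findall(r"[a-z']+", text.lower())
    if not tokens:
        return 0.0
    known = {word.lower() for word in known_words}
    hits = sum(1 for token in tokens if token in known)
    return hits / len(tokens)


sample = "the cat sat on the mat"
vocabulary = {"the", "cat", "sat", "on"}
coverage = lexical_coverage(sample, vocabulary)
print(f"coverage: {coverage:.1%}")
print("meets 98% threshold:", coverage >= 0.98)
```

Here five of the six running words are known, so coverage falls well short of the threshold even though only one word is unfamiliar, which shows how demanding 98% is for short texts.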

In his Input Hypothesis, Krashen proposes that language acquisition takes place only when learners receive input just beyond their current level of L2 competence. He termed this level of input “i+1.” However, in contrast to emergentist and connectionist theories, he follows the innate approach by applying Chomsky's Government and binding theory and concept of Universal grammar (UG) to second-language acquisition. He does so by proposing a Language Acquisition Device that uses L2 input to define the parameters of the L2, within the constraints of UG, and to increase the L2 proficiency of the learner. In addition, Krashen's (1982) Affective Filter Hypothesis holds that the acquisition of a second language is halted if the learner has a high degree of anxiety when receiving input. According to this concept, a part of the mind filters out L2 input and prevents intake by the learner if the learner feels that the process of SLA is threatening. Since input is essential in Krashen’s model, this filtering action prevents acquisition from progressing.

A great deal of research has taken place on input enhancement, the ways in which input may be altered so as to direct learners' attention to linguistically important areas. Input enhancement might include bold-faced vocabulary words or marginal glosses in a reading text. Research here is closely linked to research on pedagogical effects, and comparably diverse.

Monitor model

Other concepts have also been influential in the speculation about the processes of building internal systems of second-language information. Some thinkers hold that language processing handles distinct types of knowledge. For instance, one component of the Monitor Model, propounded by Krashen, posits a distinction between “acquisition” and “learning.” According to Krashen, L2 acquisition is a subconscious process of incidentally “picking up” a language, as children do when becoming proficient in their first languages. Language learning, on the other hand, is studying, consciously and intentionally, the features of a language, as is common in traditional classrooms. Krashen sees these two processes as fundamentally different, with little or no interface between them. In common with connectionism, Krashen sees input as essential to language acquisition.

Further, Bialystok and Smith make another distinction in explaining how learners build and use L2 and interlanguage knowledge structures. They argue that the concept of interlanguage should include a distinction between two specific kinds of language processing ability. On the one hand is learners’ knowledge of L2 grammatical structure and their ability to analyze the target language objectively using that knowledge, which they term “representation.” On the other hand is the ability to use their L2 linguistic knowledge, under time constraints, to accurately comprehend input and produce output in the L2, which they call “control.” They point out that non-native speakers of a language often have higher levels of representation than their native-speaking counterparts, yet have a lower level of control. Finally, Bialystok has framed the acquisition of language in terms of the interaction between what she calls “analysis” and “control.” Analysis is what learners do when they attempt to understand the rules of the target language. Through this process, they acquire these rules and can use them to gain greater control over their own production.

Monitoring is another important concept in some theoretical models of learner use of L2 knowledge. According to Krashen, the Monitor is a component of an L2 learner's language processing device that uses knowledge gained from language learning to observe and regulate the learner's own L2 production, checking for accuracy and adjusting language production when necessary.

Interaction hypothesis

Long's interaction hypothesis proposes that language acquisition is strongly facilitated by the use of the target language in interaction. Similarly to Krashen's Input Hypothesis, the Interaction Hypothesis claims that comprehensible input is important for language learning. In addition, it claims that the effectiveness of comprehensible input is greatly increased when learners have to negotiate for meaning.

Interactions often result in learners receiving negative evidence. That is, if learners say something that their interlocutors do not understand, after negotiation the interlocutors may model the correct language form. In this way, learners can receive feedback on their production and on grammar that they have not yet mastered. The process of interaction may also result in learners receiving more input from their interlocutors than they would otherwise. Furthermore, if learners stop to clarify things that they do not understand, they may have more time to process the input they receive. This can lead to better understanding and possibly the acquisition of new language forms. Finally, interactions may serve as a way of focusing learners' attention on a difference between their knowledge of the target language and the reality of what they are hearing; it may also focus their attention on a part of the target language of which they are not yet aware.

Output hypothesis

In the 1980s, Canadian SLA researcher Merrill Swain advanced the output hypothesis, that meaningful output is as necessary to language learning as meaningful input. However, most studies have shown little if any correlation between learning and quantity of output. Today, most scholars contend that small amounts of meaningful output are important to language learning, but primarily because the experience of producing language leads to more effective processing of input.

Critical Period Hypothesis

In 1967, Eric Lenneberg argued for the existence of a critical period (approximately 2 to 13 years old) for the acquisition of a first language. This has attracted much attention in the realm of second language acquisition. For instance, Newport (1990) extended the critical period hypothesis by pointing to the possibility that the age at which a learner is first exposed to an L2 might also affect their second language acquisition. Indeed, she reported a correlation between age of arrival and second language performance. In this regard, second language learning might be affected by a learner's maturational state.

Competition model

Some of the major cognitive theories of how learners organize language knowledge are based on analyses of how speakers of various languages analyze sentences for meaning. MacWhinney, Bates, and Kliegl found that speakers of English, German, and Italian showed varying patterns in identifying the subjects of transitive sentences containing more than one noun. English speakers relied heavily on word order; German speakers used morphological agreement, the animacy status of noun referents, and stress; and speakers of Italian relied on agreement and stress. MacWhinney et al. interpreted these results as supporting the Competition Model, which states that individuals use linguistic cues to get meaning from language, rather than relying on linguistic universals. According to this theory, when acquiring an L2, learners sometimes receive competing cues and must decide which cues are most relevant for determining meaning.
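The idea of competing cues can be sketched as a weighted vote. The following is only an illustration: the `CUE_WEIGHTS` values are hypothetical numbers chosen to mirror the qualitative pattern reported above (they are not estimates from MacWhinney, Bates, and Kliegl), and `pick_subject` is an assumed helper name.

```python
# Hypothetical cue weights per language (illustration only, not empirical values).
CUE_WEIGHTS = {
    "English": {"word_order": 0.80, "agreement": 0.10, "animacy": 0.05, "stress": 0.05},
    "German": {"word_order": 0.10, "agreement": 0.40, "animacy": 0.30, "stress": 0.20},
    "Italian": {"word_order": 0.10, "agreement": 0.60, "animacy": 0.00, "stress": 0.30},
}


def pick_subject(language: str, candidates: dict[str, set[str]]) -> str:
    """Return the noun whose supporting cues carry the most weight in `language`.

    `candidates` maps each noun to the set of cues that favor it as the subject.
    """
    weights = CUE_WEIGHTS[language]
    scores = {
        noun: sum(weights[cue] for cue in cues)
        for noun, cues in candidates.items()
    }
    return max(scores, key=scores.get)


# A sentence with conflicting cues: word order favors the first noun,
# while agreement and animacy favor the second.
conflict = {"noun_1": {"word_order"}, "noun_2": {"agreement", "animacy"}}

for language in CUE_WEIGHTS:
    print(language, "->", pick_subject(language, conflict))
```

Under these assumed weights, the same sentence gets a different subject depending on the language, and an L2 learner who carries over L1 weights would at first resolve the conflict the L1 way.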

Connectionism and second-language acquisition

Connectionism

These findings also relate to connectionism. Connectionism attempts to model the cognitive language processing of the human brain, using computer architectures that make associations between elements of language, based on frequency of co-occurrence in the language input. Frequency has been found to be a factor in various linguistic domains of language learning. Connectionism posits that learners form mental connections between items that co-occur, using exemplars found in language input. From this input, learners extract the rules of the language through cognitive processes common to other areas of cognitive skill acquisition. Since connectionism denies both innate rules and the existence of any innate language-learning module, L2 input is of greater importance than it is in processing models based on innate approaches, since, in connectionism, input is the source of both the units and the rules of language.

Noticing hypothesis

Attention is another characteristic that some believe to have a role in determining the success or failure of language processing. Richard Schmidt states that although explicit metalinguistic knowledge of a language is not always essential for acquisition, the learner must be aware of L2 input in order to gain from it. In his “noticing hypothesis,” Schmidt posits that learners must notice the ways in which their interlanguage structures differ from target norms. This noticing of the gap allows the learner's internal language processing to restructure the learner's internal representation of the rules of the L2 in order to bring the learner's production closer to the target. In this respect, Schmidt's understanding is consistent with the ongoing process of rule formation found in emergentism and connectionism.

Processability

Some theorists and researchers have contributed to the cognitive approach to second-language acquisition by increasing understanding of the ways L2 learners restructure their interlanguage knowledge systems to be in greater conformity to L2 structures. Processability theory states that learners restructure their L2 knowledge systems in an order of which they are capable at their stage of development. For instance, in order to acquire the correct morphological and syntactic forms for English questions, learners must transform declarative English sentences. They do so through a series of stages that is consistent across learners. Clahsen proposed that certain processing principles determine this order of restructuring. Specifically, he stated that learners first maintain declarative word order while changing other aspects of the utterances, second, move words to the beginning and end of sentences, and third, move elements within main clauses before subordinate clauses.

Automaticity

Thinkers have produced several theories concerning how learners use their internal L2 knowledge structures to comprehend L2 input and produce L2 output. One idea is that learners acquire proficiency in an L2 in the same way that people acquire other complex cognitive skills. Automaticity is the performance of a skill without conscious control. It results from the gradated process of proceduralization. In the field of cognitive psychology, Anderson expounds a model of skill acquisition, according to which persons use procedures to apply their declarative knowledge about a subject in order to solve problems. With repeated practice, these procedures develop into production rules that the individual can use to solve the problem without accessing long-term declarative memory. Performance speed and accuracy improve as the learner implements these production rules. DeKeyser tested the application of this model to L2 language automaticity. He found that subjects developed increasing proficiency in performing tasks related to the morphosyntax of an artificial language, Autopractan, and performed on a learning curve typical of the acquisition of non-language cognitive skills. This evidence conforms to Anderson's general model of cognitive skill acquisition, supports the idea that declarative knowledge can be transformed into procedural knowledge, and tends to undermine Krashen's idea that knowledge gained through language “learning” cannot be used to initiate speech production.

Declarative/procedural model

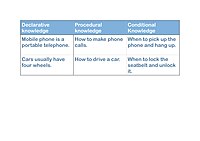

An example of declarative knowledge, procedural knowledge, and conditional knowledge

Michael T. Ullman has used a declarative/procedural model to understand how language information is stored. This model is consistent with a distinction made in general cognitive science between the storage and retrieval of facts, on the one hand, and understanding of how to carry out operations, on the other. It states that declarative knowledge consists of arbitrary linguistic information, such as irregular verb forms, that is stored in the brain's declarative memory. In contrast, knowledge about the rules of a language, such as grammatical word order, is procedural knowledge and is stored in procedural memory. Ullman reviews several psycholinguistic and neurolinguistic studies that support the declarative/procedural model.

Memory and second-language acquisition

Perhaps certain psychological characteristics constrain language processing. One area of research is the role of memory. Williams conducted a study in which he found some positive correlation between verbatim memory functioning and grammar learning success for his subjects. This suggests that individuals with less short-term memory capacity might have a limitation in performing cognitive processes for organization and use of linguistic knowledge.

Semantic theory

For the second-language learner, the acquisition of meaning is arguably the most important task. Meaning is at the heart of a language, not the exotic sounds or elegant sentence structure. There are several types of meaning: lexical, grammatical, semantic, and pragmatic. All of these contribute to the acquisition of meaning, resulting in integrated possession of the second language:

Lexical meaning – meaning that is stored in our mental lexicon;

Grammatical meaning – comes into consideration when calculating the meaning of a sentence; usually encoded in inflectional morphology (e.g. -ed for past simple, -'s for third person possessive);

Semantic meaning – word meaning;

Pragmatic meaning – meaning that depends on context and requires knowledge of the world to decipher; for example, when someone asks on the phone, “Is Mike there?” he doesn’t want to know if Mike is physically there; he wants to know if he can talk to Mike.

Sociocultural theory

The term sociocultural theory was originally coined by Wertsch in 1985 and derived from the work of Lev Vygotsky and the Vygotsky Circle in Moscow from the 1920s onwards. Sociocultural theory is the notion that human mental functioning arises from participation in cultural mediation integrated into social activities. The central thread of sociocultural theory focuses on the diverse social, historical, cultural, and political contexts where language learning occurs and on how learners negotiate or resist the diverse options that surround them. More recently, in accordance with this sociocultural thread, Larsen-Freeman (2011) created the triangle form that shows the interplay of four important concepts in language learning and education: (a) teacher, (b) learner, (c) language or culture and (d) context.

In this regard, what makes sociocultural theory different from other theories is that it argues that second-language acquisition is not a universal process. On the contrary, it views learners as active participants who interact with others and with the culture of their environment.

Complex Dynamic Systems Theory

Second language acquisition has usually been investigated with traditional cross-sectional studies, typically using a pre-test post-test design. However, in the 2000s a novel angle emerged in the field of second language research. These studies mainly adopt a Dynamic systems theory perspective to analyse longitudinal time-series data. Scientists such as Larsen-Freeman, Verspoor, de Bot, Lowie, and van Geert claim that second language acquisition can be best captured by a longitudinal case study research design rather than by cross-sectional designs. In these studies variability is seen as a key indicator of development, or of self-organization in Dynamic systems parlance. The interconnectedness of the systems is usually analysed by moving correlations.
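A moving correlation computes a correlation coefficient over a sliding window of consecutive measurement points, so that the changing relationship between two learner variables can be traced over time. The sketch below is a minimal illustration using Pearson's r; the function names, the window size, and the two short series are assumptions, not data or procedures from any particular study.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation of two equal-length series (NaN if either is constant)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return float("nan")
    return cov / sqrt(var_x * var_y)


def moving_correlation(xs: list[float], ys: list[float], window: int) -> list[float]:
    """Correlation of xs and ys within each window of `window` consecutive points."""
    if len(xs) != len(ys):
        raise ValueError("series must have the same length")
    if window < 2:
        raise ValueError("window must cover at least two points")
    return [
        pearson(xs[i:i + window], ys[i:i + window])
        for i in range(len(xs) - window + 1)
    ]


# Hypothetical scores for two measures (e.g. lexical and syntactic complexity)
# collected at five successive points in time.
lexical = [1.0, 2.0, 3.0, 2.0, 1.0]
syntactic = [1.0, 2.0, 3.0, 4.0, 5.0]
print(moving_correlation(lexical, syntactic, window=3))
```

In this made-up example the two measures start out rising together and end up moving in opposite directions, a shift that a single correlation over the whole series would hide.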