From Wikipedia, the free encyclopedia

One definition of Open science holds that it is the movement to make scientific research (including publications, data, physical samples, and software) and its dissemination accessible to all levels of an inquiring society, amateur or professional. Open science is transparent and accessible knowledge that is shared and developed through collaborative networks. It encompasses practices such as publishing open research, campaigning for open access, encouraging scientists to practice open-notebook science, and generally making it easier to publish and communicate scientific knowledge.

Usage of the term Open science varies substantially across disciplines, with a notable prevalence in the STEM disciplines. Open research is often used quasi-synonymously to address the gap that the denotation of "science" might leave regarding the inclusion of the Arts, Humanities and Social Sciences. The primary focus connecting all disciplines is the widespread uptake of new technologies and tools, and the underlying ecology of the production, dissemination and reception of knowledge from a research-based point of view.

As Tennant et al. (2020) note, the term Open science "implicitly seems only to regard ‘scientific’ disciplines, whereas Open Scholarship can be considered to include research from the Arts and Humanities (Eve 2014; Knöchelmann 2019), as well as the different roles and practices that researchers perform as educators and communicators, and an underlying open philosophy of sharing knowledge beyond research communities."

Open Science can be seen as a continuation of, rather than a revolution in, practices begun in the 17th century with the advent of the academic journal, when the societal demand for access to scientific knowledge reached a point at which it became necessary for groups of scientists to share resources with each other so that they could collectively do their work. In modern times there is debate about the extent to which scientific information should be shared.

The conflict that led to the Open Science movement is between the desire of scientists to have access to shared resources versus the desire of individual entities to profit when other entities partake of their resources. Additionally, the status of open access and resources that are available for its promotion are likely to differ from one field of academic inquiry to another.

Principles

Open science elements based on UNESCO presentation of 17 February 2021

The six principles of open science are:

- Open methodology

- Open source

- Open data

- Open access

- Open peer review

- Open educational resources

The accompanying figure shows a breakdown of elements based on a UNESCO presentation in early 2021. This depiction includes indigenous science.

Open science involves the principles of transparency, accessibility, authorization, and participation that underlie scientific practice.

Background

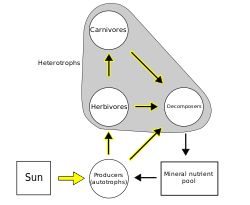

Science is broadly understood as collecting, analyzing, publishing, reanalyzing, critiquing, and reusing data. Proponents of open science identify a number of barriers that impede or dissuade the broad dissemination of scientific data. These include financial paywalls of for-profit research publishers, restrictions on usage applied by publishers of data, poor formatting of data or use of proprietary software that makes it difficult to re-purpose, and cultural reluctance to publish data for fear of losing control of how the information is used.

According to the FOSTER taxonomy, Open science can often include aspects of Open access, Open data and the open source movement, since modern science requires software to process data and information. Open research computation also addresses the problem of reproducibility of scientific results.

Types

The term "open science" does not have any one fixed definition or operationalization. On the one hand, it has been referred to as a "puzzling phenomenon". On the other hand, the term has been used to encapsulate a series of principles that aim to foster scientific growth and its complementary access to the public. Two influential sociologists, Benedikt Fecher and Sascha Friesike, have created multiple "schools of thought" that describe the different interpretations of the term.

According to Fecher and Friesike, ‘Open Science’ is an umbrella term for various assumptions about the development and dissemination of knowledge. To show the term's multitudinous perceptions, they differentiate between five Open Science schools of thought:

Infrastructure school

The infrastructure school is founded on the assumption that "efficient" research depends on the availability of tools and applications. Therefore, the "goal" of the school is to promote the creation of openly available platforms, tools, and services for scientists. Hence, the infrastructure school is concerned with the technical infrastructure that promotes the development of emerging and developing research practices through the use of the internet, including the use of software and applications, in addition to conventional computing networks. In that sense, the infrastructure school regards open science as a technological challenge. The infrastructure school is tied closely with the notion of "cyberscience", which describes the trend of applying information and communication technologies to scientific research, a trend that has favored the development of the infrastructure school. Specific elements of this development include increasing collaboration and interaction between scientists, as well as the development of "open-source science" practices. The sociologists discuss two central trends in the infrastructure school:

1. Distributed computing: This trend encapsulates practices that outsource complex, process-heavy scientific computing to a network of volunteer computers around the world. The example that the sociologists cite in their paper is that of the Open Science Grid, which enables the development of large-scale projects that require high-volume data management and processing, accomplished through a distributed computer network. Moreover, the grid provides the necessary tools that scientists can use to facilitate this process.

2. Social and Collaboration Networks of Scientists: This trend encapsulates the development of software that makes interaction with other researchers and scientific collaborations much easier than traditional, non-digital practices. Specifically, the trend is focused on implementing newer Web 2.0 tools to facilitate research-related activities on the internet. De Roure and colleagues (2008) list a series of four key capabilities which they believe define a Social Virtual Research Environment (SVRE):

- The SVRE should primarily aid the management and sharing of research objects. The authors define these to be a variety of digital commodities that are used repeatedly by researchers.

- Second, the SVRE should have inbuilt incentives for researchers to make their research objects available on the online platform.

- Third, the SVRE should be "open" as well as "extensible", implying that different types of digital artifacts composing the SVRE can be easily integrated.

- Fourth, the authors propose that the SVRE is more than a simple storage tool for research information. Instead, the researchers propose that the platform should be "actionable". That is, the platform should be built in such a way that research objects can be used in the conduct of research as opposed to simply being stored.

Measurement school

The measurement school, in the view of the authors, deals with developing alternative methods to determine scientific impact. This school acknowledges that measurements of scientific impact are crucial to a researcher's reputation, funding opportunities, and career development. Hence, the authors argue that any discourse about Open Science pivots around developing a robust measure of scientific impact in the digital age. The authors then discuss other research indicating support for the measurement school. The three key currents of previous literature discussed by the authors are:

- Peer review is described as being time-consuming.

- The impact of an article, tied to the name of the authors of the article, is related more to the circulation of the journal than to the overall quality of the article itself.

- New publishing formats that are closely aligned with the philosophy of Open Science are rarely found in the format of a journal that allows for the assignment of the impact factor.

Hence, this school argues that there are faster impact measurement technologies that can account for a range of publication types as well as social media web coverage of a scientific contribution to arrive at a complete evaluation of how impactful the scientific contribution was. The gist of the argument for this school is that hidden uses like reading, bookmarking, sharing, discussing and rating are traceable activities, and these traces can and should be used to develop a newer measure of scientific impact. The umbrella term for this new type of impact measurement is altmetrics, coined in a 2011 article by Priem et al. Notably, the authors discuss evidence that altmetrics differ from traditional webometrics, which are slow and unstructured. Altmetrics are proposed to rely upon a greater set of measures that account for tweets, blogs, discussions, and bookmarks. The authors claim that the existing literature has often proposed that altmetrics should also encapsulate the scientific process, and measure the process of research and collaboration to create an overall metric. However, the authors are explicit in their assessment that few papers offer methodological details as to how to accomplish this. The authors use this and the general dearth of evidence to conclude that research in the area of altmetrics is still in its infancy.
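As a rough illustration of the idea that traceable activities could be combined into a single measure, the following Python sketch computes a weighted sum over the signal types named above (tweets, blogs, discussions, bookmarks). It is not a method taken from Fecher and Friesike or from Priem et al.; the `Traces` class, the `altmetric_score` function, and the weights are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass

# Hypothetical weights, chosen only for illustration. The literature
# summarized above names these signal types but prescribes no weighting.
WEIGHTS = {
    "tweets": 0.25,
    "blog_posts": 2.0,
    "discussions": 1.0,
    "bookmarks": 0.5,
}


@dataclass
class Traces:
    """Counts of online traces recorded for one scientific contribution."""
    tweets: int = 0
    blog_posts: int = 0
    discussions: int = 0
    bookmarks: int = 0


def altmetric_score(traces: Traces) -> float:
    """Return the weighted sum of all trace counts (an assumed scoring rule)."""
    return sum(weight * getattr(traces, name) for name, weight in WEIGHTS.items())


paper = Traces(tweets=40, blog_posts=3, discussions=5, bookmarks=12)
print(altmetric_score(paper))  # 27.0
```

A simple weighted sum like this ignores the research process itself, which is one of the gaps the authors say the existing literature has not yet addressed methodologically.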

Public school

According to the authors, the central concern of the school is to make science accessible to a wider audience. The inherent assumption of this school, as described by the authors, is that the newer communication technologies such as Web 2.0 allow scientists to open up the research process and also allow scientists to better prepare their "products of research" for interested non-experts. Hence, the school is characterized by two broad streams: one argues for opening the research process to the masses, whereas the other argues for increased public access to the scientific product.

- Accessibility to the Research Process: Communication technology allows not only for the constant documentation of research but also promotes the inclusion of many different external individuals in the process itself. The authors cite citizen science, the participation of non-scientists and amateurs in research. The authors discuss instances in which gaming tools allow scientists to harness the brain power of a volunteer workforce to run through several permutations of protein-folded structures. This allows scientists to eliminate many more plausible protein structures while also "enriching" the citizens about science. The authors also discuss a common criticism of this approach: the amateur nature of the participants threatens to compromise the scientific rigor of experimentation.

- Comprehensibility of the Research Result: This stream of research concerns itself with making research understandable for a wider audience. The authors describe a host of authors that promote the use of specific tools for scientific communication, such as microblogging services, to direct users to relevant literature. The authors claim that this school proposes that it is the obligation of every researcher to make their research accessible to the public. The authors then proceed to discuss if there is an emerging market for brokers and mediators of knowledge that is otherwise too complicated for the public to grasp effortlessly.

Democratic school

The democratic school concerns itself with the concept of access to knowledge. As opposed to focusing on the accessibility of research and its understandability, advocates of this school focus on public access to the products of research. The central concern of the school is with the legal and other obstacles that hinder public access to research publications and scientific data. The authors argue that proponents of this school assert that any research product should be freely available. The authors argue that the underlying notion of this school is that everyone has the same, equal right of access to knowledge, especially in the instances of state-funded experiments and data. The authors identify two central currents that characterize this school: Open Access and Open Data.

- Open Data: The authors discuss existing attitudes in the field that rebel against the notion that publishing journals should claim copyright over experimental data, which prevents the re-use of data and therefore lowers the overall efficiency of science in general. The claim is that journals have no use for the experimental data and that allowing other researchers to use this data will be fruitful. The authors cite other literature streams that discovered that only a quarter of researchers agree to share their data with other researchers because of the effort required for compliance.

- Open Access to Research Publication: According to this school, there is a gap between the creation and sharing of knowledge. Proponents argue, as the authors describe, that even though scientific knowledge doubles every 5 years, access to this knowledge remains limited. These proponents consider access to knowledge as a necessity for human development, especially in the economic sense.

Pragmatic school

The pragmatic school considers Open Science as the possibility to make knowledge creation and dissemination more efficient by increasing the collaboration throughout the research process. Proponents argue that science could be optimized by modularizing the process and opening up the scientific value chain. ‘Open’ in this sense follows very much the concept of open innovation: it transfers, for instance, the outside-in (including external knowledge in the production process) and inside-out (spillovers from the formerly closed production process) principles to science. Web 2.0 is considered a set of helpful tools that can foster collaboration (sometimes also referred to as Science 2.0). Further, citizen science is seen as a form of collaboration that includes knowledge and information from non-scientists. Fecher and Friesike describe data sharing as an example of the pragmatic school as it enables researchers to use other researchers’ data to pursue new research questions or to conduct data-driven replications.

History

The widespread adoption of the institution of the scientific journal marks the beginning of the modern concept of open science. Before this time societies pressured scientists into secretive behaviors.

Before journals

Before the advent of scientific journals, scientists had little to gain and much to lose by publicizing scientific discoveries. Many scientists, including Galileo, Kepler, Isaac Newton, Christiaan Huygens, and Robert Hooke, laid claim to their discoveries by describing them in papers coded in anagrams or cyphers and then distributing the coded text. Their intent was to develop their discovery into something from which they could profit, then reveal their discovery to prove ownership when they were prepared to make a claim on it.

The system of not publicizing discoveries caused problems because discoveries were not shared quickly and because it sometimes was difficult for the discoverer to prove priority. Newton and Gottfried Leibniz both claimed priority in discovering calculus. Newton said that he wrote about calculus in the 1660s and 1670s, but did not publish until 1693. Leibniz published "Nova Methodus pro Maximis et Minimis", a treatise on calculus, in 1684. Debates over priority are inherent in systems where science is not published openly, and this was problematic for scientists who wanted to benefit from priority.

These cases are representative of a system of aristocratic patronage in which scientists received funding either to develop immediately useful things or to entertain. In this sense, funding of science gave prestige to the patron in the same way that funding of artists, writers, architects, and philosophers did. Because of this, scientists were under pressure to satisfy the desires of their patrons, and discouraged from being open with research which would bring prestige to persons other than their patrons.

Emergence of academies and journals

Eventually the individual patronage system ceased to provide the scientific output which society began to demand. Single patrons could not sufficiently fund scientists, who had unstable careers and needed consistent funding.

The development which changed this was a trend to pool research by multiple scientists into an academy funded by multiple patrons. In 1660 England established the Royal Society and in 1666 the French established the French Academy of Sciences. Between the 1660s and 1793, governments gave official recognition to 70 other scientific organizations modeled after those two academies. In 1665, Henry Oldenburg became the editor of Philosophical Transactions of the Royal Society, the first academic journal devoted to science, and the foundation for the growth of scientific publishing. By 1699 there were 30 scientific journals; by 1790 there were 1052. Since then publishing has expanded at even greater rates.

Popular science writing

The first popular science periodical of its kind was published in 1872, under a suggestive name that is still a modern portal for offering science journalism: Popular Science. The magazine claims to have documented the invention of the telephone, the phonograph, the electric light and the onset of automobile technology. The magazine goes so far as to claim that the "history of Popular Science is a true reflection of humankind's progress over the past 129+ years".

Discussions of popular science writing most often center their arguments on some type of "Science Boom". A recent historiographic account of popular science traces mentions of the term "science boom" to Daniel Greenberg's Science and Government Reports in 1979, which posited that "Scientific magazines are bursting out all over." Similarly, this account discusses the publication Time, and its cover story of Carl Sagan in 1980, as propagating the claim that popular science has "turned into enthusiasm".

Crucially, this secondary account asks the important question of what was considered popular "science" to begin with. The paper claims that any account of how popular science writing bridged the gap between the informed masses and the expert scientists must first consider who was considered a scientist to begin with.

Collaboration among academies

In modern times many academies have pressured researchers at publicly funded universities and research institutions to engage in a mix of sharing research and making some technological developments proprietary. Some research products have the potential to generate commercial revenue, and in hope of capitalizing on these products, many research institutions withhold information and technology which otherwise would lead to overall scientific advancement if other research institutions had access to these resources. It is difficult to predict the potential payouts of technology or to assess the costs of withholding it, but there is general agreement that the benefit to any single institution of holding technology is not as great as the cost of withholding it from all other research institutions.

Coining of phrase "Open Science"

The exact phrase "Open Science" was coined by Steve Mann in 1998, at which time he also registered the domain names openscience.com and openscience.org, which he sold to degruyter.com in 2011.

Politics

In many countries, governments fund some science research. Scientists often publish the results of their research by writing articles and donating them to be published in scholarly journals, which frequently are commercial. Public entities such as universities and libraries subscribe to these journals. Michael Eisen, a founder of the Public Library of Science, has described this system by saying that "taxpayers who already paid for the research would have to pay again to read the results."

In December 2011, some United States legislators introduced a bill called the Research Works Act, which would prohibit federal agencies from issuing grants with any provision requiring that articles reporting on taxpayer-funded research be published for free to the public online. Darrell Issa, a co-sponsor of the bill, explained the bill by saying that "Publicly funded research is and must continue to be absolutely available to the public. We must also protect the value added to publicly funded research by the private sector and ensure that there is still an active commercial and non-profit research community." One response to this bill was protests from various researchers; among them was a boycott of commercial publisher Elsevier called The Cost of Knowledge.

The Dutch Presidency of the Council of the European Union called for action in April 2016 to migrate European Commission funded research to Open Science. European Commissioner Carlos Moedas introduced the Open Science Cloud at the Open Science Conference in Amsterdam on 4–5 April. The Amsterdam Call for Action on Open Science, a living document outlining concrete actions for the European Community to move to Open Science, was also presented during this meeting.

Standard setting instruments

There is currently no global normative framework covering all aspects of Open Science. In November 2019, UNESCO was tasked by its 193 Member States, during their 40th General Conference, with leading a global dialogue on Open Science to identify globally-agreed norms and to create a standard-setting instrument. The multistakeholder, consultative, inclusive and participatory process to define a new global normative instrument on Open Science is expected to take two years and to lead to the adoption of a UNESCO Recommendation on Open Science by Member States in 2021.

Two UN frameworks set out some common global standards for application of Open Science and closely related concepts: the UNESCO Recommendation on Science and Scientific Researchers, approved by the General Conference at its 39th session in 2017, and the UNESCO Strategy on Open Access to scientific information and research, approved by the General Conference at its 36th session in 2011.

Advantages and disadvantages

Arguments in favor of open science generally focus on the value of increased transparency in research, and in the public ownership of science, particularly that which is publicly funded. In January 2014 J. Christopher Bare published a comprehensive "Guide to Open Science". Likewise, in 2017, a group of scholars known for advocating open science published a "manifesto" for open science in the journal Nature.

Advantages

Open access publication of research reports and data allows for rigorous peer-review

An article published by a team of NASA astrobiologists in 2010 in Science reported a bacterium known as GFAJ-1 that could purportedly metabolize arsenic (unlike any previously known species of lifeform). This finding, along with NASA's claim that the paper "will impact the search for evidence of extraterrestrial life", met with criticism within the scientific community.

Much of the scientific commentary and critique around this issue took place in public forums, most notably on Twitter, where hundreds of scientists and non-scientists created a hashtag community around the hashtag #arseniclife. University of British Columbia astrobiologist Rosie Redfield, one of the most vocal critics of the NASA team's research, also submitted a draft of a research report of a study that she and colleagues conducted which contradicted the NASA team's findings; the draft report appeared in arXiv, an open-research repository, and Redfield called in her lab's research blog for peer review both of their research and of the NASA team's original paper. Researcher Jeff Rouder defined Open Science as "endeavoring to preserve the rights of others to reach independent conclusions about your data and work".

Publicly funded science will be publicly available

Public funding of research has long been cited as one of the primary reasons for providing Open Access to research articles. Because there is significant value in other parts of the research, such as code, data, protocols, and research proposals, a similar argument is made that since these are publicly funded, they should be publicly available under a Creative Commons Licence.

Open science will make science more reproducible and transparent

Increasingly the reproducibility of science is being questioned and the term "reproducibility crisis" has been coined. For example, psychologist Stuart Vyse notes that "(r)ecent research aimed at previously published psychology studies has demonstrated--shockingly--that a large number of classic phenomena cannot be reproduced, and the popularity of p-hacking is thought to be one of the culprits." Open Science approaches are proposed as one way to help increase the reproducibility of work as well as to help mitigate manipulation of data.

Open science has more impact

There are several components to impact in research, many of which are hotly debated. However, under traditional scientific metrics, parts of Open science such as Open Access and Open Data have been shown to outperform traditional versions.

Open science will help answer uniquely complex questions

Recent arguments in favor of Open Science have maintained that Open Science is a necessary tool to begin answering immensely complex questions, such as the neural basis of consciousness. The typical argument holds that these types of investigations are too complex to be carried out by any one individual, and therefore must rely on a network of open scientists to be accomplished. By default, the nature of these investigations also makes this "open science" a form of "big science".

Disadvantages

The open sharing of research data is not widely practiced

Arguments against open science tend to focus on the advantages of data ownership and concerns about the misuse of data.

- Potential misuse

In 2011, Dutch researchers announced their intention to publish a research paper in the journal Science describing the creation of a strain of H5N1 influenza which can be easily passed between ferrets, the mammals which most closely mimic the human response to the flu. The announcement triggered a controversy in both political and scientific circles about the ethical implications of publishing scientific data which could be used to create biological weapons. These events are examples of how science data could potentially be misused. Scientists have collaboratively agreed to limit their own fields of inquiry on occasions such as the Asilomar conference on recombinant DNA in 1975, and a proposed 2015 worldwide moratorium on a human-genome-editing technique.

- The public may misunderstand science data

In 2009 NASA launched the Kepler spacecraft and promised that they would release collected data in June 2010. Later they decided to postpone release so that their scientists could look at it first. Their rationale was that non-scientists might unintentionally misinterpret the data, and NASA scientists thought it would be preferable for them to be familiar with the data in advance so that they could report on it with their level of accuracy.

- Low-quality science

Post-publication peer review, a staple of open science, has been criticized as promoting the production of lower quality papers that are extremely voluminous. Specifically, critics assert that as quality is not guaranteed by preprint servers, the veracity of papers will be difficult for individual readers to assess. This will lead to rippling effects of false science, akin to the recent epidemic of false news, propagated with ease on social media websites. A commonly cited solution to this problem is a new format in which everything is allowed to be published but a subsequent filter-curator model is imposed to ensure that all publications meet some basic standards of quality.

- Entrapment by platform capitalism

For Philip Mirowski, open science runs the risk of continuing a trend of commodification of science which ultimately serves the interests of capital in the guise of platform capitalism.

Actions and initiatives

Open-science projects

Different projects conduct, advocate, develop tools for, or fund open science.

The Allen Institute for Brain Science conducts numerous open science projects while the Center for Open Science has projects to conduct, advocate, and create tools for open science. Other workgroups have been created in different fields, such as the Decision Analysis in R for Technologies in Health (DARTH) workgroup, which is a multi-institutional, multi-university collaborative effort by researchers who have a common goal to develop transparent and open-source solutions to decision analysis in health.

Organizations have extremely diverse sizes and structures. The Open Knowledge Foundation (OKF) is a global organization sharing large data catalogs, running face to face conferences, and supporting open source software projects. In contrast, Blue Obelisk is an informal group of chemists and associated cheminformatics projects. The tableau of organizations is dynamic with some organizations becoming defunct, e.g., Science Commons, and new organizations trying to grow, e.g., the Self-Journal of Science. Common organizing forces include the knowledge domain, type of service provided, and even geography, e.g., OCSDNet's concentration on the developing world.

The Allen Brain Atlas maps gene expression in human and mouse brains; the Encyclopedia of Life documents all the terrestrial species; the Galaxy Zoo classifies galaxies; the International HapMap Project maps the haplotypes of the human genome; the Monarch Initiative makes available integrated public model organism and clinical data; and the Sloan Digital Sky Survey regularizes and publishes data sets from many sources. All these projects accrete information provided by many different researchers with different standards of curation and contribution.

Mathematician Timothy Gowers launched the open science journal Discrete Analysis in 2016 to demonstrate that a high-quality mathematics journal could be produced outside the traditional academic publishing industry. The launch followed a boycott of scientific journals that he initiated. The journal is published by a nonprofit which is owned by a team of scholars.

Other projects are organized around completion of projects that require extensive collaboration. For example, OpenWorm seeks to make a cellular level simulation of a roundworm, a multidisciplinary project. The Polymath Project seeks to solve difficult mathematical problems by enabling faster communications within the discipline of mathematics. The Collaborative Replications and Education project recruits undergraduate students as citizen scientists by offering funding. Each project defines its needs for contributors and collaboration.

Another practical example of an open science project was the first "open" doctoral thesis, started in 2012. It was made publicly available as a self-experiment right from the start to examine whether this kind of dissemination is possible even during the productive stage of scientific studies. The goal of the dissertation project was to publish everything related to the doctoral study and research process as soon as possible, as comprehensively as possible, and under an open license, available online at all times for everyone. The experiment was successfully completed at the end of 2017 and published in early 2018 as an open access book.

The ideas of open science have also been applied to recruitment with jobRxiv, a free and international job board that aims to mitigate imbalances in what different labs can afford to spend on hiring.

Advocacy

Numerous documents, organizations, and social movements advocate wider adoption of open science. Statements of principles include the Budapest Open Access Initiative from a December 2001 conference and the Panton Principles. New statements are constantly developed, such as the Amsterdam Call for Action on Open Science to be presented to the Dutch Presidency of the Council of the European Union in late May 2016. These statements often try to regularize licenses and disclosure for data and scientific literature.

Other advocates concentrate on educating scientists about appropriate open science software tools. Education is available as training seminars, e.g., the Software Carpentry project; as domain-specific training materials, e.g., the Data Carpentry project; and as materials for teaching graduate classes, e.g., the Open Science Training Initiative. Many organizations also provide education in the general principles of open science.

Within scholarly societies there are also sections and interest groups that promote open science practices. The Ecological Society of America has an Open Science Section. Similarly, the Society for American Archaeology has an Open Science Interest Group.

Journal support

Many individual journals are experimenting with the open access model: the Public Library of Science, or PLOS, is creating a library of open access journals and scientific literature. Other publishing experiments include delayed and hybrid models. There are experiments in different fields.

Journal support for open science does not conflict with preprint servers: figshare archives and shares images, readings, and other data; and Open Science Framework preprints, arXiv, and HAL Archives Ouvertes provide electronic preprints across many fields.

Software

A variety of computer resources support open science. These include software like the Open Science Framework from the Center for Open Science to manage project information, data archiving and team coordination; distributed computing services like Ibercivis to use unused CPU time for computationally intensive tasks; and services like Experiment.com to provide crowdsourced funding for research projects.

Blockchain platforms for open science have been proposed. The first such platform is the Open Science Organization, which aims to solve urgent problems with fragmentation of the scientific ecosystem and difficulties of producing validated, quality science. The initiatives of the Open Science Organization include the Interplanetary Idea System (IPIS), Researcher Index (RR-index), Unique Researcher Identity (URI), and Research Network. The Interplanetary Idea System is a blockchain-based system that tracks the evolution of scientific ideas over time. It serves to quantify ideas based on uniqueness and importance, thus allowing the scientific community to identify pain points with current scientific topics and preventing unnecessary re-invention of previously conducted science. The Researcher Index aims to establish a data-driven statistical metric for quantifying researcher impact. The Unique Researcher Identity is a blockchain-based solution for creating a single unifying identity for each researcher, which is connected to the researcher's profile, research activities, and publications. The Research Network is a social networking platform for researchers.

A scientific paper from November 2019 examined the suitability of blockchain technology to support open science. Its results showed that the technology is well suited for open science and can provide advantages, for example, in data security, trust, and collaboration. However, the authors state that the widespread use of the technology depends on whether the scientific community accepts it and adapts its processes accordingly.
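The data security and trust properties mentioned above rest largely on hash linking: each record stores a hash of the record before it, so any later alteration becomes detectable. The following is a minimal sketch of that idea only, not the design of any platform named in this article. It omits the distribution and consensus that a real blockchain requires, and the names `record_hash`, `append_entry`, and `verify` are hypothetical, chosen for illustration.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry (assumption)


def record_hash(record: dict) -> str:
    """Hash a record deterministically via its sorted-key JSON form."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_entry(chain: list[dict], data: dict) -> None:
    """Append a research record that is linked to the previous entry's hash."""
    prev = chain[-1]["hash"] if chain else GENESIS
    body = {"index": len(chain), "data": data, "prev_hash": prev}
    chain.append({**body, "hash": record_hash(body)})


def verify(chain: list[dict]) -> bool:
    """Return True only if every entry is unmodified and correctly linked."""
    prev = GENESIS
    for i, entry in enumerate(chain):
        body = {
            "index": entry["index"],
            "data": entry["data"],
            "prev_hash": entry["prev_hash"],
        }
        if (
            entry["index"] != i
            or entry["prev_hash"] != prev
            or entry["hash"] != record_hash(body)
        ):
            return False
        prev = entry["hash"]
    return True
```

In this sketch, editing the data of an earlier entry changes its recomputed hash, so `verify` returns `False` for the whole log. That tamper evidence is the property the paper's discussion of trust relies on.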

Preprint servers

Preprint servers come in many varieties, but they share a common aim: they seek to create a quick, free mode of communicating scientific knowledge to the public. Preprint servers act as a venue to quickly disseminate research and vary in their policies concerning when articles may be submitted relative to journal acceptance.

Preprint servers also typically lack a peer-review process: most have some type of quality check in place to ensure a minimum standard of publication, but this mechanism is not the same as peer review. Some preprint servers have explicitly partnered with the broader open science movement. Preprint servers can offer services similar to those of journals, and Google Scholar indexes many preprint servers and collects information about citations to preprints. The case for preprint servers is often made based on the slow pace of conventional publication formats.

The motivation to start SocArXiv, an open-access preprint server for social science research, is the claim that valuable research submitted to traditional venues often takes several months to years to be published, which slows down the process of science significantly. Another argument made in favor of preprint servers like SocArXiv is the quality and speed of the feedback offered to scientists on their pre-published work.

The founders of SocArXiv claim that their platform allows researchers to gain easy feedback from their colleagues on the platform, thereby allowing scientists to develop their work to the highest possible quality before formal publication and circulation. The founders further claim that their platform affords authors the greatest level of flexibility in updating and editing their work to ensure that the latest version is available for rapid dissemination. They claim that this is not traditionally the case with formal journals, which impose formal procedures for making updates to published articles.

Perhaps the strongest advantage of some preprint servers is their seamless compatibility with open science software such as the Open Science Framework. The founders of SocArXiv claim that their preprint server connects all aspects of the research life cycle in OSF with the article being published on the preprint server. According to the founders, this allows for greater transparency and minimal work on the authors' part.

One criticism of preprint servers is their potential to foster a culture of plagiarism. For example, the popular physics preprint server arXiv had to withdraw 22 papers when it came to light that they were plagiarized. In June 2002, Watanabe, a high-energy physicist in Japan, was contacted by Ramy Naboulsi, a mathematical physicist without an institutional affiliation. Naboulsi asked Watanabe to upload his papers to arXiv, as he was not able to do so himself because of his lack of an institutional affiliation. Later, the papers were found to have been copied from the proceedings of a physics conference.

Preprint servers are increasingly developing measures to counter this plagiarism problem. In developing nations like India and China, explicit measures are being taken to combat it. These measures usually involve creating some type of central repository for all available preprints, allowing the use of traditional plagiarism-detection algorithms to detect the fraud. Nonetheless, this is a pressing issue in the discussion of preprint servers, and consequently for open science.
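As an illustration of how a central repository makes such checks possible, the sketch below compares a new submission against every stored document using overlapping word sequences ("shingles") and Jaccard similarity. This is one common textbook approach, offered as an assumption; the article does not say which algorithms any particular server uses. The function names, the shingle length of three words, and the 0.5 threshold are all hypothetical choices.

```python
import re


def shingles(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Return the set of overlapping n-word sequences in the text."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: size of the intersection over size of the union."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def find_similar(
    submission: str,
    repository: dict[str, str],
    threshold: float = 0.5,
) -> list[tuple[str, float]]:
    """Score a submission against each repository document.

    Returns (document id, similarity) pairs at or above the threshold,
    most similar first. The threshold value is an illustrative assumption.
    """
    sub = shingles(submission)
    scored = [
        (doc_id, jaccard(sub, shingles(text)))
        for doc_id, text in repository.items()
    ]
    flagged = [pair for pair in scored if pair[1] >= threshold]
    return sorted(flagged, key=lambda pair: pair[1], reverse=True)
```

A check of this kind only works if the repository actually holds the earlier text, which is why the measures described above centre on collecting all available preprints in one place. A flagged match would still need human review before any paper is withdrawn.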