Synthetic biology (SynBio) is a multidisciplinary area of research that seeks to create new biological parts, devices, and systems, or to redesign systems that are already found in nature.

It is a branch of science that encompasses a broad range of methodologies from various disciplines, such as biotechnology, genetic engineering, molecular biology, molecular engineering, systems biology, membrane science, biophysics, chemical and biological engineering, electrical and computer engineering, control engineering and evolutionary biology.

Due to more powerful genetic engineering capabilities and decreased DNA synthesis and sequencing costs, the field of synthetic biology is rapidly growing. In 2016, more than 350 companies across 40 countries were actively engaged in synthetic biology applications; together, these companies had an estimated net worth of $3.9 billion in the global market.

Definition

Synthetic biology currently has no generally accepted definition. Here are a few examples:

- "the use of a mixture of physical engineering and genetic engineering to create new (and, therefore, synthetic) life forms"

- "an emerging field of research that aims to combine the knowledge and methods of biology, engineering and related disciplines in the design of chemically synthesized DNA to create organisms with novel or enhanced characteristics and traits"

- "designing and constructing biological modules, biological systems, and biological machines or, re-design of existing biological systems for useful purposes"

- “applying the engineering paradigm of systems design to biological systems in order to produce predictable and robust systems with novel functionalities that do not exist in nature” (The European Commission, 2005). This can include the possibility of a molecular assembler based on biomolecular systems such as the ribosome.

Synthetic biology has traditionally been divided into two different approaches: top down and bottom up.

- The top down approach involves using metabolic and genetic engineering techniques to impart new functions to living cells.

- The bottom up approach involves creating new biological systems in vitro by bringing together 'non-living' biomolecular components, often with the aim of constructing an artificial cell.

Biological systems are thus assembled module-by-module. Cell-free protein expression systems are often employed, as are membrane-based molecular machinery. There are increasing efforts to bridge the divide between these approaches by forming hybrid living/synthetic cells, and engineering communication between living and synthetic cell populations.

History

1910: First identifiable use of the term "synthetic biology" in Stéphane Leduc's publication Théorie physico-chimique de la vie et générations spontanées. He also noted this term in another publication, La Biologie Synthétique in 1912.

1961: Jacob and Monod postulate cellular regulation by molecular networks from their study of the lac operon in E. coli and envision the ability to assemble new systems from molecular components.

1973: The first molecular cloning and amplification of DNA in a plasmid is published in P.N.A.S. by Cohen, Boyer et al., constituting the dawn of synthetic biology.

1978: Arber, Nathans and Smith win the Nobel Prize in Physiology or Medicine for the discovery of restriction enzymes, leading Szybalski to offer an editorial comment in the journal Gene:

The work on restriction nucleases not only permits us easily to construct recombinant DNA molecules and to analyze individual genes, but also has led us into the new era of synthetic biology where not only existing genes are described and analyzed but also new gene arrangements can be constructed and evaluated.

1988: First DNA amplification by the polymerase chain reaction (PCR) using a thermostable DNA polymerase is published in Science by Mullis et al. This obviated adding new DNA polymerase after each PCR cycle, thus greatly simplifying DNA mutagenesis and assembly.

2000: Two papers in Nature report synthetic biological circuits, a genetic toggle switch and a biological clock, by combining genes within E. coli cells.

2003: The most widely used standardized DNA parts, BioBrick plasmids, are invented by Tom Knight. These parts will become central to the international Genetically Engineered Machine competition (iGEM) founded at MIT in the following year.

2003: Researchers engineer an artemisinin precursor pathway in E. coli.

2004: First international conference for synthetic biology, Synthetic Biology 1.0 (SB1.0) is held at the Massachusetts Institute of Technology, USA.

2005: Researchers develop a light-sensing circuit in E. coli. Another group designs circuits capable of multicellular pattern formation.

2006: Researchers engineer a synthetic circuit that promotes bacterial invasion of tumour cells.

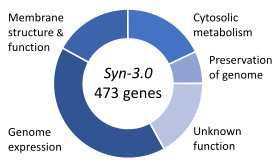

2010: Researchers publish in Science the first synthetic bacterial genome, called M. mycoides JCVI-syn1.0. The genome is made from chemically-synthesized DNA using yeast recombination.

2011: Functional synthetic chromosome arms are engineered in yeast.

2012: Charpentier and Doudna labs publish in Science the programming of CRISPR-Cas9 bacterial immunity for targeting DNA cleavage. This technology greatly simplified and expanded eukaryotic gene editing.

2019: Scientists at ETH Zurich report the creation of the first bacterial genome, named Caulobacter ethensis-2.0, made entirely by a computer, although a related viable form of C. ethensis-2.0 does not yet exist.

2019: Researchers report the production of a new synthetic (possibly artificial) form of viable life, a variant of the bacterium Escherichia coli, by reducing the natural number of 64 codons in the bacterial genome to 59 codons in order to encode 20 amino acids.

Perspectives

Engineers view biology as a technology (in other words, a given system includes biotechnology or its biological engineering). Synthetic biology includes the broad redefinition and expansion of biotechnology, with the ultimate goals of being able to design and build engineered live biological systems that process information, manipulate chemicals, fabricate materials and structures, produce energy, provide food, and maintain and enhance human health, as well as advance fundamental knowledge of biological systems and our environment.

Studies in synthetic biology can be subdivided into broad classifications according to the approach they take to the problem at hand: standardization of biological parts, biomolecular engineering, genome engineering, metabolic engineering.

Biomolecular engineering includes approaches that aim to create a toolkit of functional units that can be introduced to present new technological functions in living cells. Genome engineering includes approaches to construct synthetic chromosomes or minimal organisms like Mycoplasma laboratorium.

Biomolecular design refers to the general idea of de novo design and additive combination of biomolecular components. Each of these approaches shares a similar task: to develop a more synthetic entity at a higher level of complexity by inventively manipulating a simpler part at the preceding level.

On the other hand, "re-writers" are synthetic biologists interested in testing the irreducibility of biological systems. Due to the complexity of natural biological systems, it would be simpler to rebuild the natural systems of interest from the ground up in order to provide engineered surrogates that are easier to comprehend, control and manipulate. Re-writers draw inspiration from refactoring, a process sometimes used to improve computer software.

Enabling technologies

Several novel enabling technologies were critical to the success of synthetic biology. Concepts include standardization of biological parts and hierarchical abstraction to permit using those parts in synthetic systems. Basic technologies include reading and writing DNA (sequencing and fabrication). Measurements under multiple conditions are needed for accurate modeling and computer-aided design (CAD).

DNA and gene synthesis

Driven by dramatic decreases in costs of oligonucleotide ("oligos") synthesis and the advent of PCR, the sizes of DNA constructions from oligos have increased to the genomic level. In 2000, researchers reported synthesis of the 9.6 kbp (kilo bp) Hepatitis C virus genome from chemically synthesized 60 to 80-mers. In 2002, researchers at Stony Brook University succeeded in synthesizing the 7741 bp poliovirus genome from its published sequence, producing the second synthetic genome in a project that spanned two years. In 2003, the 5386 bp genome of the bacteriophage Phi X 174 was assembled in about two weeks. In 2006, the same team, at the J. Craig Venter Institute, constructed and patented a synthetic genome of a novel minimal bacterium, Mycoplasma laboratorium, and was working on getting it functioning in a living cell.

In 2007 it was reported that several companies were offering synthesis of genetic sequences up to 2000 base pairs (bp) long, for a price of about $1 per bp and a turnaround time of less than two weeks. Oligonucleotides harvested from a photolithographic- or inkjet-manufactured DNA chip, combined with PCR and DNA mismatch error-correction, allow inexpensive large-scale changes of codons in genetic systems to improve gene expression or incorporate novel amino acids (see George M. Church's and Anthony Forster's synthetic cell projects). This favors a synthesis-from-scratch approach.

Additionally, the CRISPR/Cas system has emerged as a promising technique for gene editing. It was described as "the most important innovation in the synthetic biology space in nearly 30 years". While other methods take months or years to edit gene sequences, CRISPR shortens that time to weeks. Due to its ease of use and accessibility, however, it has raised ethical concerns, especially surrounding its use in biohacking.

Sequencing

DNA sequencing determines the order of nucleotide bases in a DNA molecule. Synthetic biologists use DNA sequencing in their work in several ways. First, large-scale genome sequencing efforts continue to provide information on naturally occurring organisms. This information provides a rich substrate from which synthetic biologists can construct parts and devices. Second, sequencing can verify that the fabricated system is as intended. Third, fast, cheap, and reliable sequencing can facilitate rapid detection and identification of synthetic systems and organisms.
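The second use above, checking that a fabricated construct matches its design, can be illustrated with a minimal sketch. It assumes the sequenced read is already aligned to the design and has the same length; real verification pipelines align reads first and handle insertions and deletions. The function name `find_mismatches` and the example sequences are hypothetical.

```python
def find_mismatches(designed: str, sequenced: str) -> list[tuple[int, str, str]]:
    """Return (position, expected_base, observed_base) for each differing position.

    Assumes both sequences are already aligned and of equal length.
    """
    if len(designed) != len(sequenced):
        raise ValueError("this simple comparison needs sequences of equal length")
    designed, sequenced = designed.upper(), sequenced.upper()
    return [
        (i, d, s)
        for i, (d, s) in enumerate(zip(designed, sequenced))
        if d != s
    ]


# Hypothetical design and sequencing read with one substitution.
designed = "ATGGCTAGCAAAGGA"
sequenced = "ATGGCTAGCAAGGGA"
print(find_mismatches(designed, sequenced))  # [(11, 'A', 'G')]
```

An empty list means the read agrees with the design at every position; any entry points to a base that should be inspected or re-synthesized.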

Microfluidics

Microfluidics, in particular droplet microfluidics, is an emerging tool used to construct new components, and to analyse and characterize them. It is widely employed in screening assays.

Modularity

The most used standardized DNA parts are BioBrick plasmids, invented by Tom Knight in 2003. BioBricks are stored at the Registry of Standard Biological Parts in Cambridge, Massachusetts. The BioBrick standard has been used by thousands of students worldwide in the international Genetically Engineered Machine (iGEM) competition.

While DNA is most important for information storage, a large fraction of the cell's activities are carried out by proteins. Tools can send proteins to specific regions of the cell and link different proteins together. The interaction strength between protein partners should be tunable between a lifetime of seconds (desirable for dynamic signaling events) and an irreversible interaction (desirable for device stability or resilience to harsh conditions). Interactions such as coiled coils, SH3 domain-peptide binding or SpyTag/SpyCatcher offer such control. In addition, it is necessary to regulate protein-protein interactions in cells, such as with light (using light-oxygen-voltage-sensing domains) or with cell-permeable small molecules by chemically induced dimerization.

In a living cell, molecular motifs are embedded in a bigger network with upstream and downstream components. These components may alter the signaling capability of the modeling module. In the case of ultrasensitive modules, the sensitivity contribution of a module can differ from the sensitivity that the module sustains in isolation.
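The point about ultrasensitive modules can be made concrete with a small numerical sketch. It assumes, purely for illustration, that each module follows a Hill function and measures sensitivity as the logarithmic gain d ln(output) / d ln(input). The parameter values and the function names `hill`, `log_gain`, `upstream`, `module` and `cascade` are assumptions, not taken from any specific study.

```python
import math


def hill(x: float, K: float, n: float) -> float:
    """Hill activation function: fraction of maximal output at input x."""
    return x**n / (K**n + x**n)


def log_gain(f, x: float, eps: float = 1e-6) -> float:
    """Numerical estimate of d ln f / d ln x at x (central difference)."""
    hi, lo = x * (1 + eps), x * (1 - eps)
    return (math.log(f(hi)) - math.log(f(lo))) / (math.log(hi) - math.log(lo))


def upstream(u: float) -> float:
    """Hypothetical upstream component feeding the module."""
    return 2.0 * hill(u, K=1.0, n=1.0)


def module(x: float) -> float:
    """Hypothetical ultrasensitive module (Hill coefficient 4)."""
    return hill(x, K=1.0, n=4.0)


def cascade(u: float) -> float:
    """The module embedded downstream of the upstream component."""
    return module(upstream(u))


u = 3.0
isolated = log_gain(module, 1.0)           # module probed alone at its half-maximal input
embedded = log_gain(module, upstream(u))   # module at the operating point set upstream
overall = log_gain(cascade, u)             # sensitivity of the whole cascade

print(f"isolated module gain: {isolated:.3f}")
print(f"embedded module gain: {embedded:.3f}")
print(f"overall cascade gain: {overall:.3f}")
```

In this sketch the same module shows a lower gain once the upstream component pushes its input away from the half-maximal point, and the overall gain is the product of the local gains, which is one way a module's contribution in a network can differ from its behavior in isolation.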

Modeling

Models inform the design of engineered biological systems by better predicting system behavior prior to fabrication. Synthetic biology benefits from better models of how biological molecules bind substrates and catalyze reactions, how DNA encodes the information needed to specify the cell and how multi-component integrated systems behave. Multiscale models of gene regulatory networks focus on synthetic biology applications. Simulations can model all biomolecular interactions in transcription, translation, regulation and induction of gene regulatory networks.
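As a minimal sketch of such a model, the following simulates a two-gene mutual-repression circuit (a toggle switch) with a commonly used dimensionless form, du/dt = α/(1 + vⁿ) − u and dv/dt = α/(1 + uⁿ) − v, integrated with a simple Euler scheme. The parameter values (α = 10, n = 2), the step size and the function name `simulate_toggle` are illustrative assumptions rather than values from a particular publication.

```python
def simulate_toggle(u0: float, v0: float, alpha: float = 10.0, n: float = 2.0,
                    dt: float = 0.01, steps: int = 5000) -> tuple[float, float]:
    """Integrate a two-repressor toggle switch with forward Euler steps.

    u and v are the (dimensionless) concentrations of two repressors,
    each of which inhibits synthesis of the other.
    """
    u, v = u0, v0
    for _ in range(steps):
        du = alpha / (1.0 + v**n) - u   # synthesis repressed by v, first-order decay
        dv = alpha / (1.0 + u**n) - v   # synthesis repressed by u, first-order decay
        u, v = u + dt * du, v + dt * dv
    return u, v


# Two nearby starting points end in opposite stable states (bistability).
print(simulate_toggle(2.0, 1.0))  # u ends high, v ends low
print(simulate_toggle(1.0, 2.0))  # v ends high, u ends low
```

Running a model like this before fabrication gives a rough indication of whether a design can hold two stable states; quantitative predictions would require measured parameters.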

Synthetic transcription factors

Studies have considered the components of the DNA transcription mechanism. One desire of scientists creating synthetic biological circuits is to be able to control the transcription of synthetic DNA in both prokaryotes and eukaryotes. One study tested the adjustability of synthetic transcription factors (sTFs) in areas of transcription output and cooperative ability among multiple transcription factor complexes. Researchers were able to mutate functional regions called zinc fingers, the DNA-specific component of sTFs, to decrease their affinity for specific operator DNA sequence sites, and thus decrease the associated site-specific activity of the sTF (usually transcriptional regulation). They further used the zinc fingers as components of complex-forming sTFs, which are the eukaryotic translation mechanisms.

Applications

Biological computers

A biological computer refers to an engineered biological system that can perform computer-like operations, which is a dominant paradigm in synthetic biology. Researchers have built and characterized a variety of logic gates in a number of organisms, and have demonstrated that living cells, including bacteria, can be engineered to perform both analog and digital computation. In 2007, research demonstrated a universal logic evaluator that operates in mammalian cells. Subsequently, in 2011, researchers used this paradigm to demonstrate a proof-of-concept therapy that uses biological digital computation to detect and kill human cancer cells. Another group of researchers demonstrated in 2016 that principles of computer engineering can be used to automate digital circuit design in bacterial cells. In 2017, researchers demonstrated the 'Boolean logic and arithmetic through DNA excision' (BLADE) system to engineer digital computation in human cells.
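The idea of a genetic logic gate can be sketched with a toy model. It assumes a promoter that requires two activators, each acting through a Hill function, so that the continuous (analog) output is the product of the two responses; thresholding that output gives a digital AND gate. The input levels, threshold and function names are hypothetical and chosen only for illustration.

```python
def hill_activation(x: float, K: float = 1.0, n: float = 2.0) -> float:
    """Fractional promoter activation by an inducer at concentration x."""
    return x**n / (K**n + x**n)


def and_gate(a: float, b: float) -> float:
    """Analog output of a promoter that needs both inputs to be active."""
    return hill_activation(a) * hill_activation(b)


LOW, HIGH = 0.1, 10.0   # assumed inducer concentrations for logical 0 and 1
THRESHOLD = 0.5         # assumed cutoff separating OFF from ON output


def truth_table() -> list[tuple[bool, bool, bool]]:
    """Evaluate the gate for all four input combinations."""
    rows = []
    for a in (LOW, HIGH):
        for b in (LOW, HIGH):
            analog = and_gate(a, b)
            rows.append((a == HIGH, b == HIGH, analog > THRESHOLD))
    return rows


for in_a, in_b, out in truth_table():
    print(f"A={int(in_a)} B={int(in_b)} -> output={int(out)}")
```

The same function returns a graded value between 0 and 1 before thresholding, which is the sense in which one circuit can be read as either an analog or a digital computation.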

Biosensors

A biosensor refers to an engineered organism, usually a bacterium, that is capable of reporting some ambient phenomenon such as the presence of heavy metals or toxins. One such system is the Lux operon of Aliivibrio fischeri, which codes for the enzyme that is the source of bacterial bioluminescence, and can be placed after a responsive promoter to express the luminescence genes in response to a specific environmental stimulus. One such sensor consisted of a bioluminescent bacterial coating on a photosensitive computer chip to detect certain petroleum pollutants. When the bacteria sense the pollutant, they luminesce. Another example of a similar mechanism is the detection of landmines by an engineered E. coli reporter strain capable of detecting TNT and its main degradation product DNT, and consequently producing a green fluorescent protein (GFP).

Modified organisms can sense environmental signals and send output signals that can be detected and serve diagnostic purposes. Microbe cohorts have been used.

Cell transformation

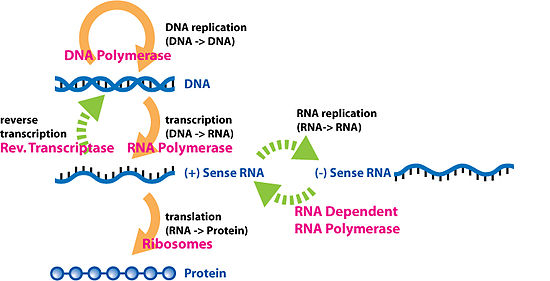

Cells use interacting genes and proteins, which are called gene circuits, to implement diverse functions, such as responding to environmental signals, decision making and communication. Three key components are involved: DNA, RNA and protein. Synthetic biologists design gene circuits that can control gene expression at several levels, including the transcriptional, post-transcriptional and translational levels.

Traditional metabolic engineering has been bolstered by the introduction of combinations of foreign genes and optimization by directed evolution. This includes engineering E. coli and yeast for commercial production of a precursor of the antimalarial drug artemisinin.

Entire organisms have yet to be created from scratch, although living cells can be transformed with new DNA. Several ways allow constructing synthetic DNA components and even entire synthetic genomes, but once the desired genetic code is obtained, it is integrated into a living cell that is expected to manifest the desired new capabilities or phenotypes while growing and thriving. Cell transformation is used to create biological circuits, which can be manipulated to yield desired outputs.

By integrating synthetic biology with materials science, it would be possible to use cells as microscopic molecular foundries to produce materials whose properties are genetically encoded. Re-engineering has produced Curli fibers, the amyloid component of extracellular material of biofilms, as a platform for programmable nanomaterial. These nanofibers were genetically constructed for specific functions, including adhesion to substrates, nanoparticle templating and protein immobilization.

Designed proteins

Natural proteins can be engineered, for example by directed evolution, and novel protein structures that match or improve on the functionality of existing proteins can be produced. One group generated a helix bundle that was capable of binding oxygen with similar properties as hemoglobin, yet did not bind carbon monoxide. A similar protein structure was generated to support a variety of oxidoreductase activities, while another formed a structurally and sequentially novel ATPase. Another group generated a family of G-protein coupled receptors that could be activated by the inert small molecule clozapine N-oxide but were insensitive to the native ligand, acetylcholine; these receptors are known as DREADDs. Novel functionalities or protein specificity can also be engineered using computational approaches. One study was able to use two different computational methods: a bioinformatics and molecular modeling method to mine sequence databases, and a computational enzyme design method to reprogram enzyme specificity. Both methods resulted in designed enzymes with greater than 100-fold specificity for production of longer chain alcohols from sugar.

Another common investigation is expansion of the natural set of 20 amino acids. Excluding stop codons, 61 codons have been identified, but only 20 amino acids are coded generally in all organisms. Certain codons are engineered to code for alternative amino acids including: nonstandard amino acids such as O-methyl tyrosine; or exogenous amino acids such as 4-fluorophenylalanine. Typically, these projects make use of re-coded nonsense suppressor tRNA-Aminoacyl tRNA synthetase pairs from other organisms, though in most cases substantial engineering is required.
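The codon arithmetic above can be checked with a short enumeration. The sketch assumes the standard genetic code, written in the DNA alphabet, in which TAA, TAG and TGA are the three stop codons.

```python
from itertools import product

BASES = "ACGT"
STOP_CODONS = {"TAA", "TAG", "TGA"}  # stop codons of the standard genetic code

# Every ordered triplet of the four bases: 4 ** 3 = 64 codons.
all_codons = ["".join(triplet) for triplet in product(BASES, repeat=3)]
sense_codons = [codon for codon in all_codons if codon not in STOP_CODONS]

print(len(all_codons))    # 64
print(len(sense_codons))  # 61
```

Because 61 sense codons encode only 20 amino acids, most amino acids are specified by several synonymous codons, and that redundancy is what leaves room for reassigning some codons to alternative amino acids.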

Other researchers investigated protein structure and function by reducing the normal set of 20 amino acids. Limited protein sequence libraries are made by generating proteins where groups of amino acids may be replaced by a single amino acid. For instance, several non-polar amino acids within a protein can all be replaced with a single non-polar amino acid. One project demonstrated that an engineered version of chorismate mutase still had catalytic activity when only 9 amino acids were used.
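The replacement of amino-acid groups by a single representative can be sketched as a simple mapping over one-letter codes. The grouping below is a hypothetical illustration (it is not the alphabet used in the chorismate mutase project), and the names `GROUPS` and `reduce_sequence` are assumptions.

```python
# Hypothetical grouping: each key is the representative residue that
# replaces every amino acid (one-letter code) listed in its value.
GROUPS = {
    "L": "AVLIMC",  # non-polar, aliphatic-like
    "F": "FWY",     # aromatic
    "S": "STNQ",    # polar, uncharged
    "K": "KRH",     # basic
    "E": "DE",      # acidic
    "G": "G",
    "P": "P",
}

# Invert the grouping into a residue -> representative lookup table.
REPRESENTATIVE = {
    residue: rep for rep, members in GROUPS.items() for residue in members
}


def reduce_sequence(protein: str) -> str:
    """Rewrite a protein sequence using only the representative residues."""
    return "".join(REPRESENTATIVE[residue] for residue in protein.upper())


print(reduce_sequence("MKVLAAGDE"))  # LKLLLLGEE
print(len(GROUPS))                   # 7 letters instead of 20
```

A reduced sequence produced this way is only a design candidate; whether the resulting protein still folds and functions has to be tested experimentally.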

Researchers and companies practice synthetic biology to synthesize industrial enzymes with high activity, optimal yields and effectiveness. These synthesized enzymes aim to improve products such as detergents and lactose-free dairy products, as well as make them more cost effective. The improvement of metabolic engineering by synthetic biology is an example of a biotechnological technique utilized in industry to discover pharmaceuticals and fermentative chemicals. Synthetic biology may investigate modular pathway systems in biochemical production and increase yields of metabolic production. Artificial enzymatic activity and subsequent effects on metabolic reaction rates and yields may develop "efficient new strategies for improving cellular properties ... for industrially important biochemical production".

Designed nucleic acid systems

Scientists can encode digital information onto a single strand of synthetic DNA. In 2012, George M. Church encoded one of his books about synthetic biology in DNA. The 5.3 Mb of data was more than 1000 times greater than the previous largest amount of information to be stored in synthesized DNA. A similar project encoded the complete sonnets of William Shakespeare in DNA. More generally, algorithms such as NUPACK, ViennaRNA, Ribosome Binding Site Calculator, Cello, and Non-Repetitive Parts Calculator enable the design of new genetic systems.
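How digital data can map onto a DNA sequence is sketched below with the simplest possible scheme, two bits per base. This mapping is an illustrative assumption and not necessarily the encoding used in the projects mentioned above; practical schemes also add addressing and error tolerance and avoid long runs of a single base. The names `encode` and `decode` are hypothetical.

```python
BITS_TO_BASE = {"00": "A", "01": "C", "10": "G", "11": "T"}
BASE_TO_BITS = {base: bits for bits, base in BITS_TO_BASE.items()}


def encode(data: bytes) -> str:
    """Convert bytes to a DNA string, two bits per nucleotide."""
    bits = "".join(f"{byte:08b}" for byte in data)
    return "".join(BITS_TO_BASE[bits[i:i + 2]] for i in range(0, len(bits), 2))


def decode(dna: str) -> bytes:
    """Convert a DNA string produced by encode() back to bytes."""
    bits = "".join(BASE_TO_BITS[base] for base in dna)
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))


message = b"Hi"
dna = encode(message)
print(dna)          # CAGACGGC
print(decode(dna))  # b'Hi'
```

Each byte becomes four nucleotides under this scheme, so the strand length grows linearly with the amount of data stored.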

Many technologies have been developed for incorporating unnatural nucleotides and amino acids into nucleic acids and proteins, both in vitro and in vivo. For example, in May 2014, researchers announced that they had successfully introduced two new artificial nucleotides into bacterial DNA. By including individual artificial nucleotides in the culture media, they were able to exchange the bacteria 24 times; they did not generate mRNA or proteins able to use the artificial nucleotides.

Space exploration

Synthetic biology raised NASA's interest as it could help to produce resources for astronauts from a restricted portfolio of compounds sent from Earth. On Mars, in particular, synthetic biology could lead to production processes based on local resources, making it a powerful tool in the development of manned outposts with less dependence on Earth. Work has gone into developing plant strains that are able to cope with the harsh Martian environment, using similar techniques to those employed to increase resilience to certain environmental factors in agricultural crops.

Synthetic life

One important topic in synthetic biology is synthetic life, which is concerned with hypothetical organisms created in vitro from biomolecules and/or chemical analogues thereof. Synthetic life experiments attempt to either probe the origins of life, study some of the properties of life, or more ambitiously to recreate life from non-living (abiotic) components. Synthetic life biology attempts to create living organisms capable of carrying out important functions, from manufacturing pharmaceuticals to detoxifying polluted land and water. In medicine, it offers prospects of using designer biological parts as a starting point for new classes of therapies and diagnostic tools.

A living "artificial cell" has been defined as a completely synthetic cell that can capture energy, maintain ion gradients, contain macromolecules as well as store information and have the ability to mutate. Nobody has been able to create such a cell.

A completely synthetic bacterial chromosome was produced in 2010 by Craig Venter, and his team introduced it to genomically emptied bacterial host cells. The host cells were able to grow and replicate. Mycoplasma laboratorium is the only living organism with a completely engineered genome.

The first living organism with 'artificial' expanded DNA code was presented in 2014; the team used E. coli that had its genome extracted and replaced with a chromosome with an expanded genetic code. The nucleosides added are d5SICS and dNaM.

In May 2019, researchers, in a milestone effort, reported the creation of a new synthetic (possibly artificial) form of viable life, a variant of the bacterium Escherichia coli, by reducing the natural number of 64 codons in the bacterial genome to 59 codons in order to encode 20 amino acids.

In 2017 the international Build-a-Cell large-scale research collaboration for the construction of a synthetic living cell was started, followed by national synthetic cell organizations in several countries, including FabriCell, MaxSynBio and BaSyC. The European synthetic cell efforts were unified in 2019 as the SynCellEU initiative.

Drug delivery platforms

Engineered bacteria-based platform

Bacteria have long been used in cancer treatment. Bifidobacterium and Clostridium selectively colonize tumors and reduce their size. Recently, synthetic biologists reprogrammed bacteria to sense and respond to a particular cancer state. Most often bacteria are used to deliver a therapeutic molecule directly to the tumor to minimize off-target effects. To target the tumor cells, peptides that can specifically recognize a tumor were expressed on the surfaces of bacteria. Peptides used include an affibody molecule that specifically targets human epidermal growth factor receptor 2 and a synthetic adhesin. The other way is to allow bacteria to sense the tumor microenvironment, for example hypoxia, by building an AND logic gate into bacteria. The bacteria then only release target therapeutic molecules to the tumor through either lysis or the bacterial secretion system. Lysis has the advantage that it can stimulate the immune system and control growth. Multiple types of secretion systems, as well as other strategies, can be used. The system is inducible by external signals. Inducers include chemicals and electromagnetic or light waves.

Multiple species and strains are applied in these therapeutics. The most commonly used bacteria are Salmonella typhimurium, Escherichia coli, Bifidobacteria, Streptococcus, Lactobacillus, Listeria and Bacillus subtilis. Each of these species has its own properties and is unique to cancer therapy in terms of tissue colonization, interaction with the immune system and ease of application.

Cell-based platform

The immune system plays an important role in cancer and can be harnessed to attack cancer cells. Cell-based therapies focus on immunotherapies, mostly by engineering T cells.

T cell receptors were engineered and ‘trained’ to detect cancer epitopes. Chimeric antigen receptors (CARs) are composed of a fragment of an antibody fused to intracellular T cell signaling domains that can activate and trigger proliferation of the cell. A second-generation CAR-based therapy was approved by the FDA.

Gene switches were designed to enhance safety of the treatment. Kill switches were developed to terminate the therapy should the patient show severe side effects. Mechanisms can more finely control the system and stop and reactivate it. Since the number of T cells is important for therapy persistence and severity, growth of T cells is also controlled to tune the effectiveness and safety of therapeutics.

Although several mechanisms can improve safety and control, limitations include the difficulty of introducing large DNA circuits into the cells and risks associated with introducing foreign components, especially proteins, into cells.

Ethics

The creation of new life and the tampering with existing life have raised ethical concerns in the field of synthetic biology, and these concerns are actively being discussed.

Common ethical questions include:

- Is it morally right to tamper with nature?

- Is one playing God when creating new life?

- What happens if a synthetic organism accidentally escapes?

- What if an individual misuses synthetic biology and creates a harmful entity (e.g., a biological weapon)?

- Who will have control of and access to the products of synthetic biology?

- Who will gain from these innovations? Investors? Medical patients? Industrial farmers?

- Does the patent system allow patents on living organisms? What about parts of organisms, like HIV resistance genes in humans?

- What if a new creation is deserving of moral or legal status?

The ethical aspects of synthetic biology have three main features: biosafety, biosecurity, and the creation of new life forms. Other ethical issues mentioned include the regulation of new creations, patent management of new creations, benefit distribution, and research integrity.

Ethical issues have surfaced for recombinant DNA and genetically modified organism (GMO) technologies, and extensive regulations of genetic engineering and pathogen research are in place in many jurisdictions. Amy Gutmann, former head of the Presidential Bioethics Commission, argued that we should avoid the temptation to over-regulate synthetic biology in general, and genetic engineering in particular. According to Gutmann, "Regulatory parsimony is especially important in emerging technologies...where the temptation to stifle innovation on the basis of uncertainty and fear of the unknown is particularly great. The blunt instruments of statutory and regulatory restraint may not only inhibit the distribution of new benefits, but can be counterproductive to security and safety by preventing researchers from developing effective safeguards."

The "creation" of life

One ethical question is whether or not it is acceptable to create new life forms, sometimes known as "playing God". Currently, the creation of new life forms not present in nature is at a small scale, the potential benefits and dangers remain unknown, and careful consideration and oversight are ensured for most studies. Many advocates express the great potential value (to agriculture, medicine, and academic knowledge, among other fields) of creating artificial life forms. Creation of new entities could expand scientific knowledge well beyond what is currently known from studying natural phenomena. Yet there is concern that artificial life forms may reduce nature's "purity" (i.e., nature could be somehow corrupted by human intervention and manipulation) and potentially influence the adoption of more engineering-like principles instead of biodiversity- and nature-focused ideals. Some are also concerned that if an artificial life form were to be released into nature, it could hamper biodiversity by beating out natural species for resources (similar to how algal blooms kill marine species). Another concern involves the ethical treatment of newly created entities if they happen to feel pain or possess sentience and self-perception. Should such life be given moral or legal rights? If so, how?

Biosafety and biocontainment

What is most ethically appropriate when considering biosafety measures? How can accidental introduction of synthetic life in the natural environment be avoided? Much ethical consideration and critical thought has been given to these questions. Biosafety not only refers to biological containment; it also refers to strides taken to protect the public from potentially hazardous biological agents. Even though such concerns are important and remain unanswered, not all products of synthetic biology present concern for biological safety or negative consequences for the environment. It is argued that most synthetic technologies are benign and are incapable of flourishing in the outside world due to their "unnatural" characteristics as there is yet to be an example of a transgenic microbe conferred with a fitness advantage in the wild.

In general, existing hazard controls, risk assessment methodologies, and regulations developed for traditional genetically modified organisms (GMOs) are considered to be sufficient for synthetic organisms. "Extrinsic" biocontainment methods in a laboratory context include physical containment through biosafety cabinets and gloveboxes, as well as personal protective equipment. In an agricultural context they include isolation distances and pollen barriers, similar to methods for biocontainment of GMOs. Synthetic organisms may offer increased hazard control because they can be engineered with "intrinsic" biocontainment methods that limit their growth in an uncontained environment, or prevent horizontal gene transfer to natural organisms. Examples of intrinsic biocontainment include auxotrophy, biological kill switches, inability of the organism to replicate or to pass modified or synthetic genes to offspring, and the use of xenobiological organisms using alternative biochemistry, for example using artificial xeno nucleic acids (XNA) instead of DNA. Regarding auxotrophy, bacteria and yeast can be engineered to be unable to produce histidine, an important amino acid for all life. Such organisms can thus only be grown on histidine-rich media in laboratory conditions, nullifying fears that they could spread into undesirable areas.

Biosecurity

Some ethical issues relate to biosecurity, where biosynthetic technologies could be deliberately used to cause harm to society and/or the environment. Since synthetic biology raises ethical issues and biosecurity issues, humanity must consider and plan on how to deal with potentially harmful creations, and what kinds of ethical measures could possibly be employed to deter nefarious biosynthetic technologies. With the exception of regulating synthetic biology and biotechnology companies, however, the issues are not seen as new because they were raised during the earlier recombinant DNA and genetically modified organism (GMO) debates and extensive regulations of genetic engineering and pathogen research are already in place in many jurisdictions.

European Union

The European Union-funded project SYNBIOSAFE has issued reports on how to manage synthetic biology. A 2007 paper identified key issues in safety, security, ethics and the science-society interface, which the project defined as public education and ongoing dialogue among scientists, businesses, government and ethicists. The key security issues that SYNBIOSAFE identified involved engaging companies that sell synthetic DNA and the biohacking community of amateur biologists. Key ethical issues concerned the creation of new life forms.

A subsequent report focused on biosecurity, especially the so-called dual-use challenge. For example, while synthetic biology may lead to more efficient production of medical treatments, it may also lead to synthesis or modification of harmful pathogens (e.g., smallpox). The biohacking community remains a source of special concern, as the distributed and diffuse nature of open-source biotechnology makes it difficult to track, regulate or mitigate potential concerns over biosafety and biosecurity.

COSY, another European initiative, focuses on public perception and communication. To better communicate synthetic biology and its societal ramifications to a broader public, COSY and SYNBIOSAFE published SYNBIOSAFE, a 38-minute documentary film, in October 2009.

The International Association Synthetic Biology has proposed self-regulation, setting out specific measures that the synthetic biology industry, especially DNA synthesis companies, should implement. In 2007, a group led by scientists from leading DNA-synthesis companies published a "practical plan for developing an effective oversight framework for the DNA-synthesis industry".

United States

In January 2009, the Alfred P. Sloan Foundation funded the Woodrow Wilson Center, the Hastings Center, and the J. Craig Venter Institute to examine the public perception, ethics and policy implications of synthetic biology.

On July 9–10, 2009, the National Academies' Committee of Science, Technology & Law convened a symposium on "Opportunities and Challenges in the Emerging Field of Synthetic Biology".

After the publication of the first synthetic genome and the accompanying media coverage about "life" being created, President Barack Obama established the Presidential Commission for the Study of Bioethical Issues to study synthetic biology. The commission convened a series of meetings and issued a report in December 2010 titled "New Directions: The Ethics of Synthetic Biology and Emerging Technologies". The commission stated that "while Venter's achievement marked a significant technical advance in demonstrating that a relatively large genome could be accurately synthesized and substituted for another, it did not amount to the 'creation of life'". It noted that synthetic biology is an emerging field, which creates potential risks and rewards. The commission did not recommend policy or oversight changes and called for continued funding of the research and new funding for monitoring, study of emerging ethical issues and public education.

Synthetic biology, as a major tool for biological advances, results in the "potential for developing biological weapons, possible unforeseen negative impacts on human health ... and any potential environmental impact". These security issues may be avoided by regulating industry uses of biotechnology through policy legislation. Federal guidelines on genetic manipulation have been proposed: "the President's Bioethics Commission ... in response to the announced creation of a self-replicating cell from a chemically synthesized genome, put forward 18 recommendations not only for regulating the science ... for educating the public".

Opposition

On March 13, 2012, over 100 environmental and civil society groups, including Friends of the Earth, the International Center for Technology Assessment and the ETC Group, issued the manifesto The Principles for the Oversight of Synthetic Biology. This manifesto calls for a worldwide moratorium on the release and commercial use of synthetic organisms until more robust regulations and rigorous biosafety measures are established. The groups specifically call for an outright ban on the use of synthetic biology on the human genome or human microbiome. Richard Lewontin wrote that some of the safety tenets for oversight discussed in The Principles for the Oversight of Synthetic Biology are reasonable, but that the main problem with the recommendations in the manifesto is that "the public at large lacks the ability to enforce any meaningful realization of those recommendations".

Health and safety

The hazards of synthetic biology include biosafety hazards to workers and the public, biosecurity hazards stemming from deliberate engineering of organisms to cause harm, and environmental hazards. The biosafety hazards are similar to those for existing fields of biotechnology, mainly exposure to pathogens and toxic chemicals, although novel synthetic organisms may have novel risks. For biosecurity, there is concern that synthetic or redesigned organisms could theoretically be used for bioterrorism. Potential risks include recreating known pathogens from scratch, engineering existing pathogens to be more dangerous, and engineering microbes to produce harmful biochemicals. Lastly, environmental hazards include adverse effects on biodiversity and ecosystem services, including potential changes to land use resulting from agricultural use of synthetic organisms.

Existing risk analysis systems for GMOs are generally considered sufficient for synthetic organisms, although there may be difficulties for an organism built "bottom-up" from individual genetic sequences. Synthetic biology generally falls under existing regulations for GMOs and biotechnology in general, and any regulations that exist for downstream commercial products, although there are generally no regulations in any jurisdiction that are specific to synthetic biology.