Biotechnology is the application of scientific and engineering principles to the processing of materials by biological agents to provide goods and services. From its inception, biotechnology has maintained a close relationship with society. Although now most often associated with the development of drugs, historically biotechnology has been principally associated with food, addressing such issues as malnutrition and famine. The history of biotechnology begins with zymotechnology, which commenced with a focus on brewing techniques for beer. By World War I, however, zymotechnology would expand to tackle larger industrial issues, and the potential of industrial fermentation gave rise to biotechnology. However, two later projects that applied fermentation to world hunger and the energy crisis, single-cell protein and gasohol, both failed to progress due to varying issues including public resistance, a changing economic scene, and shifts in political power.

Yet the formation of a new field, genetic engineering, would soon bring biotechnology to the forefront of science in society, and an intimate relationship between the scientific community, the public, and the government would ensue. Debates over the new field gained exposure in 1975 at the Asilomar Conference, where Joshua Lederberg was the most outspoken supporter of this emerging field in biotechnology. As early as 1978, with the development of synthetic human insulin, Lederberg's claims would prove valid, and the biotechnology industry grew rapidly. Each new scientific advance became a media event designed to capture public support, and by the 1980s, biotechnology had grown into a promising real industry. In 1988, only five proteins from genetically engineered cells had been approved as drugs by the United States Food and Drug Administration (FDA), but this number would skyrocket to over 125 by the end of the 1990s.

The field of genetic engineering remains a heated topic of discussion in today's society with the advent of gene therapy, stem cell research, cloning, and genetically modified food. While it seems only natural nowadays to link pharmaceutical drugs as solutions to health and societal problems, this relationship of biotechnology serving social needs began centuries ago.

Origins of biotechnology

Biotechnology arose from the field of zymotechnology or zymurgy, which began as a search for a better understanding of industrial fermentation, particularly beer. Beer was an important industrial, and not just social, commodity. In late 19th-century Germany, brewing contributed as much to the gross national product as steel, and taxes on alcohol proved to be significant sources of revenue to the government. In the 1860s, institutes and remunerative consultancies were dedicated to the technology of brewing. The most famous was the private Carlsberg Institute, founded in 1875, which employed Emil Christian Hansen, who pioneered the pure yeast process for the reliable production of consistent beer. Less well known were private consultancies that advised the brewing industry. One of these, the Zymotechnic Institute, was established in Chicago by the German-born chemist John Ewald Siebel.

The heyday and expansion of zymotechnology came in World War I in response to industrial needs to support the war. Max Delbrück grew yeast on an immense scale during the war to meet 60 percent of Germany's animal feed needs. Compounds of another fermentation product, lactic acid, made up for a lack of the hydraulic fluid glycerol. On the Allied side, the Russian chemist Chaim Weizmann eliminated Britain's shortage of acetone, a key raw material for cordite, by fermenting maize starch to acetone. The industrial potential of fermentation was outgrowing its traditional home in brewing, and "zymotechnology" soon gave way to "biotechnology."

With food shortages spreading and resources fading, some dreamed of a new industrial solution. The Hungarian Károly Ereky coined the word "biotechnology" in Hungary during 1919 to describe a technology based on converting raw materials into a more useful product. He built a slaughterhouse for a thousand pigs and also a fattening farm with space for 50,000 pigs, raising over 100,000 pigs a year. The enterprise was enormous, becoming one of the largest and most profitable meat and fat operations in the world. In a book entitled Biotechnologie, Ereky further developed a theme that would be reiterated through the 20th century: biotechnology could provide solutions to societal crises, such as food and energy shortages. For Ereky, the term "biotechnologie" indicated the process by which raw materials could be biologically upgraded into socially useful products.

This catchword spread quickly after the First World War, as "biotechnology" entered German dictionaries and was taken up abroad by business-hungry private consultancies as far away as the United States. In Chicago, for example, the coming of prohibition at the end of World War I encouraged biological industries to create opportunities for new fermentation products, in particular a market for nonalcoholic drinks. Emil Siebel, the son of the founder of the Zymotechnic Institute, broke away from his father's company to establish his own called the "Bureau of Biotechnology," which specifically offered expertise in fermented nonalcoholic drinks.

The belief that the needs of an industrial society could be met by fermenting agricultural waste was an important ingredient of the "chemurgic movement." Fermentation-based processes generated products of ever-growing utility. In the 1940s, penicillin was the most dramatic. While it was discovered in England, it was produced industrially in the U.S. using a deep fermentation process originally developed in Peoria, Illinois. The enormous profits and the public expectations penicillin engendered caused a radical shift in the standing of the pharmaceutical industry. Doctors used the phrase "miracle drug", and the historian of its wartime use, David Adams, has suggested that to the public penicillin represented the perfect health that went together with the car and the dream house of wartime American advertising. Beginning in the 1950s, fermentation technology also became advanced enough to produce steroids on industrially significant scales. Of particular importance was the improved semisynthesis of cortisone, which simplified the old 31-step synthesis to 11 steps. This advance was estimated to reduce the cost of the drug by 70%, making the medicine inexpensive and available. Today biotechnology still plays a central role in the production of these compounds and likely will for years to come.

Single-cell protein and gasohol projects

Even greater expectations of biotechnology were raised during the 1960s by a process that grew single-cell protein. When the so-called protein gap threatened world hunger, producing food locally by growing it from waste seemed to offer a solution. It was the possibility of growing microorganisms on oil that captured the imagination of scientists, policy makers, and commerce. Major companies such as British Petroleum (BP) staked their futures on it. In 1962, BP built a pilot plant at Cap de Lavera in Southern France to publicize its product, Toprina. Initial research work at Lavera was done by Alfred Champagnat. In 1963, construction started on BP's second pilot plant at Grangemouth Oil Refinery in Britain.

As there was no well-accepted term to describe the new foods, in 1966 the term "single-cell protein" (SCP) was coined at MIT to provide an acceptable and exciting new title, avoiding the unpleasant connotations of microbial or bacterial.

The "food from oil" idea became quite popular by the 1970s, when facilities for growing yeast fed by n-paraffins were built in a number of countries. The Soviets were particularly enthusiastic, opening large "BVK" (belkovo-vitaminny kontsentrat, i.e., "protein-vitamin concentrate") plants next to their oil refineries in Kstovo (1973) and Kirishi (1974).

By the late 1970s, however, the cultural climate had completely changed, as the growth in SCP interest had taken place against a shifting economic and cultural scene. First, the price of oil rose catastrophically in 1974, so that its cost per barrel was five times greater than it had been two years earlier. Second, despite continuing hunger around the world, anticipated demand also began to shift from humans to animals. The program had begun with the vision of growing food for Third World people, yet the product was instead launched as an animal food for the developed world. The rapidly rising demand for animal feed made that market appear economically more attractive. The ultimate downfall of the SCP project, however, came from public resistance.

This was particularly vocal in Japan, where production came closest to fruition. For all their enthusiasm for innovation and traditional interest in microbiologically produced foods, the Japanese were the first to ban the production of single-cell proteins. The Japanese ultimately were unable to separate the idea of their new "natural" foods from the far from natural connotation of oil. These arguments were made against a background of suspicion of heavy industry in which anxiety over minute traces of petroleum was expressed. Thus, public resistance to an unnatural product led to the end of the SCP project as an attempt to solve world hunger.

Also, in 1989 in the USSR, public environmental concerns led the government to close down (or convert to different technologies) all eight paraffin-fed yeast plants that the Soviet Ministry of Microbiological Industry had by that time.

In the late 1970s, biotechnology offered another possible solution to a societal crisis. The escalation in the price of oil in 1974 increased the cost of the Western world's energy tenfold. In response, the U.S. government promoted the production of gasohol, gasoline with 10 percent alcohol added, as an answer to the energy crisis. In 1979, when the Soviet Union sent troops to Afghanistan, the Carter administration cut off its supplies of agricultural produce in retaliation, creating an agricultural surplus in the U.S. As a result, fermenting the agricultural surpluses to synthesize fuel seemed to be an economical solution to the shortage of oil threatened by the Iran–Iraq War. Before the new direction could be taken, however, the political wind changed again: the Reagan administration came to power in January 1981 and, with the declining oil prices of the 1980s, ended support for the gasohol industry before it was born.

Biotechnology seemed to be the solution for major social problems, including world hunger and energy crises. In the 1960s, it seemed that radical measures would be needed to combat world starvation, and biotechnology appeared to provide an answer. However, the solutions proved to be too expensive and socially unacceptable, and solving world hunger through SCP food was dismissed. In the 1970s, the food crisis was succeeded by the energy crisis, and here too, biotechnology seemed to provide an answer. But once again, costs proved prohibitive as oil prices slumped in the 1980s. Thus, in practice, the implications of biotechnology were not fully realized in these situations. But this would soon change with the rise of genetic engineering.

Genetic engineering

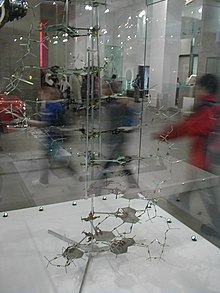

The origins of biotechnology culminated with the birth of genetic engineering. There were two key events that have come to be seen as scientific breakthroughs beginning the era that would unite genetics with biotechnology. One was the 1953 discovery of the structure of DNA, by Watson and Crick, and the other was the 1973 discovery by Cohen and Boyer of a recombinant DNA technique by which a section of DNA was cut from the plasmid of an E. coli bacterium and transferred into the DNA of another. This approach could, in principle, enable bacteria to adopt the genes and produce proteins of other organisms, including humans. Popularly referred to as "genetic engineering," it came to be defined as the basis of new biotechnology.

Genetic engineering proved to be a topic that thrust biotechnology into the public scene, and the interaction between scientists, politicians, and the public defined the work that was accomplished in this area. Technical developments during this time were revolutionary and at times frightening. In December 1967, the first heart transplant by Christiaan Barnard reminded the public that the physical identity of a person was becoming increasingly problematic. While poetic imagination had always seen the heart at the center of the soul, now there was the prospect of individuals being defined by other people's hearts. During the same month, Arthur Kornberg announced that he had managed to biochemically replicate a viral gene. "Life had been synthesized," said the head of the National Institutes of Health. Genetic engineering was now on the scientific agenda, as it was becoming possible to identify genetic characteristics with diseases such as beta thalassemia and sickle-cell anemia.

Responses to scientific achievements were colored by cultural skepticism. Scientists and their expertise were looked upon with suspicion. In 1968, an immensely popular work, The Biological Time Bomb, was written by the British journalist Gordon Rattray Taylor. The author's preface saw Kornberg's discovery of replicating a viral gene as a route to lethal doomsday bugs. The publisher's blurb for the book warned that within ten years, "You may marry a semi-artificial man or woman…choose your children's sex…tune out pain…change your memories…and live to be 150 if the scientific revolution doesn’t destroy us first." The book ended with a chapter called "The Future – If Any." While it is rare for current science to be represented in the movies, in this period of "Star Trek", science fiction and science fact seemed to be converging. "Cloning" became a popular word in the media. Woody Allen satirized the cloning of a person from a nose in his 1973 movie Sleeper, and cloning Adolf Hitler from surviving cells was the theme of the 1976 novel by Ira Levin, The Boys from Brazil.

In response to these public concerns, scientists, industry, and governments increasingly linked the power of recombinant DNA to the immensely practical functions that biotechnology promised. One of the key scientific figures who attempted to highlight the promising aspects of genetic engineering was Joshua Lederberg, a Stanford professor and Nobel laureate. While in the 1960s "genetic engineering" described eugenics and work involving the manipulation of the human genome, Lederberg stressed research that would involve microbes instead. Lederberg emphasized the importance of focusing on curing living people. Lederberg's 1963 paper, "Biological Future of Man," suggested that, while molecular biology might one day make it possible to change the human genotype, "what we have overlooked is euphenics, the engineering of human development." Lederberg constructed the word "euphenics" to emphasize changing the phenotype after conception rather than the genotype, which would affect future generations.

With the discovery of recombinant DNA by Cohen and Boyer in 1973, the idea that genetic engineering would have major human and societal consequences was born. In July 1974, a group of eminent molecular biologists headed by Paul Berg wrote to Science suggesting that the consequences of this work were so potentially destructive that there should be a pause until its implications had been thought through. This suggestion was explored at a meeting in February 1975 at California's Monterey Peninsula, forever immortalized by the location, Asilomar. Its historic outcome was an unprecedented call for a halt in research until it could be regulated in such a way that the public need not be anxious, and it led to a 16-month moratorium until National Institutes of Health (NIH) guidelines were established.

Joshua Lederberg was the leading exception in emphasizing, as he had for years, the potential benefits. At Asilomar, in an atmosphere favoring control and regulation, he circulated a paper countering the pessimism and fears of misuses with the benefits conferred by successful use. He described "an early chance for a technology of untold importance for diagnostic and therapeutic medicine: the ready production of an unlimited variety of human proteins. Analogous applications may be foreseen in fermentation process for cheaply manufacturing essential nutrients, and in the improvement of microbes for the production of antibiotics and of special industrial chemicals." In June 1976, the 16-month moratorium on research expired with the Director's Advisory Committee (DAC) publication of the NIH guidelines of good practice. They defined the risks of certain kinds of experiments and the appropriate physical conditions for their pursuit, as well as a list of things too dangerous to perform at all. Moreover, modified organisms were not to be tested outside the confines of a laboratory or allowed into the environment.

Atypical as Lederberg was at Asilomar, his optimistic vision of genetic engineering would soon lead to the development of the biotechnology industry. Over the next two years, as public concern over the dangers of recombinant DNA research grew, so too did interest in its technical and practical applications. Curing genetic diseases remained in the realms of science fiction, but it appeared that producing simple human proteins could be good business. Insulin, one of the smaller, best characterized and understood proteins, had been used in treating type 1 diabetes for a half century. It had been extracted from animals in a chemically slightly different form from the human product. Yet, if one could produce synthetic human insulin, one could meet an existing demand with a product whose approval would be relatively easy to obtain from regulators. In the period 1975 to 1977, synthetic "human" insulin represented the aspirations for new products that could be made with the new biotechnology. Microbial production of synthetic human insulin was finally announced in September 1978 and was achieved by a startup company, Genentech. That company did not commercialize the product itself; instead, it licensed the production method to Eli Lilly and Company. 1978 also saw the first application for a patent on a gene, the gene which produces human growth hormone, by the University of California, thus introducing the legal principle that genes could be patented. Since that filing, almost 20% of the more than 20,000 genes in the human genome have been patented.

The radical shift in the connotation of "genetic engineering" from an emphasis on the inherited characteristics of people to the commercial production of proteins and therapeutic drugs was nurtured by Joshua Lederberg. His broad concerns since the 1960s had been stimulated by enthusiasm for science and its potential medical benefits. Countering calls for strict regulation, he expressed a vision of potential utility. Against a belief that new techniques would entail unmentionable and uncontrollable consequences for humanity and the environment, a growing consensus on the economic value of recombinant DNA emerged.

Biosensor technology

The MOSFET (metal-oxide-semiconductor field-effect transistor, or MOS transistor) was invented by Mohamed M. Atalla and Dawon Kahng in 1959, and demonstrated in 1960. Two years later, in 1962, L.C. Clark and C. Lyons invented the biosensor. Biosensor MOSFETs (BioFETs) were later developed, and they have since been widely used to measure physical, chemical, biological and environmental parameters.

The first BioFET was the ion-sensitive field-effect transistor (ISFET), invented by Piet Bergveld for electrochemical and biological applications in 1970. The adsorption FET (ADFET) was patented by P.F. Cox in 1974, and a hydrogen-sensitive MOSFET was demonstrated by I. Lundstrom, M.S. Shivaraman, C.S. Svenson and L. Lundkvist in 1975. The ISFET is a special type of MOSFET with a gate at a certain distance, in which the metal gate is replaced by an ion-sensitive membrane, electrolyte solution and reference electrode. The ISFET is widely used in biomedical applications, such as the detection of DNA hybridization, biomarker detection from blood, antibody detection, glucose measurement, pH sensing, and genetic technology.

By the mid-1980s, other BioFETs had been developed, including the gas sensor FET (GASFET), pressure sensor FET (PRESSFET), chemical field-effect transistor (ChemFET), reference ISFET (REFET), enzyme-modified FET (ENFET) and immunologically modified FET (IMFET). By the early 2000s, BioFETs such as the DNA field-effect transistor (DNAFET), gene-modified FET (GenFET) and cell-potential BioFET (CPFET) had been developed.

Biotechnology and industry

With ancestral roots in industrial microbiology that date back centuries, the new biotechnology industry grew rapidly beginning in the mid-1970s. Each new scientific advance became a media event designed to capture investment confidence and public support. Although market expectations and social benefits of new products were frequently overstated, many people were prepared to see genetic engineering as the next great advance in technological progress. By the 1980s, biotechnology had come to denote a nascent real industry, providing titles for emerging trade organizations such as the Biotechnology Industry Organization (BIO).

The main focus of attention after insulin was the potential profit makers in the pharmaceutical industry: human growth hormone and what promised to be a miraculous cure for viral diseases, interferon. Cancer was a central target in the 1970s because increasingly the disease was linked to viruses. By 1980, a new company, Biogen, had produced interferon through recombinant DNA. The emergence of interferon and the possibility of curing cancer raised money in the community for research and increased the enthusiasm of an otherwise uncertain and tentative society. Moreover, to the 1970s plight of cancer was added AIDS in the 1980s, offering an enormous potential market for a successful therapy, and more immediately, a market for diagnostic tests based on monoclonal antibodies. By 1988, only five proteins from genetically engineered cells had been approved as drugs by the United States Food and Drug Administration (FDA): synthetic insulin, human growth hormone, hepatitis B vaccine, alpha-interferon, and tissue plasminogen activator (tPA), for lysis of blood clots. By the end of the 1990s, however, 125 more genetically engineered drugs would be approved.

The 2007–2008 global financial crisis led to several changes in the way the biotechnology industry was financed and organized. First, it led to a decline in overall financial investment in the sector, globally; and second, in some countries like the UK it led to a shift from business strategies focused on going for an initial public offering (IPO) to seeking a trade sale instead. By 2011, financial investment in the biotechnology industry started to improve again and by 2014 the global market capitalization reached $1 trillion.

Genetic engineering also reached the agricultural front. Progress was tremendous after the market introduction of the genetically engineered Flavr Savr tomato in 1994. Ernst and Young reported that in 1998, 30% of the U.S. soybean crop was expected to be from genetically engineered seeds. In 1998, about 30% of the U.S. cotton and corn crops were also expected to be products of genetic engineering.

Genetic engineering in biotechnology stimulated hopes for therapeutic proteins and drugs as well as for biological organisms themselves, such as seeds, pesticides, engineered yeasts, and modified human cells for treating genetic diseases. From the perspective of its commercial promoters, scientific breakthroughs, industrial commitment, and official support were finally coming together, and biotechnology became a normal part of business. No longer were the proponents of the economic and technological significance of biotechnology the iconoclasts. Their message had finally become accepted and incorporated into the policies of governments and industry.

Global trends

According to Burrill and Company, an industry investment bank, over $350 billion has been invested in biotech since the emergence of the industry, and global revenues rose from $23 billion in 2000 to more than $50 billion in 2005. The greatest growth has been in Latin America but all regions of the world have shown strong growth trends. By 2007 and into 2008, though, a downturn in the fortunes of biotech emerged, at least in the United Kingdom, as the result of declining investment in the face of failure of biotech pipelines to deliver and a consequent downturn in return on investment.