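In information theory, the entropy of a random variable is the average level of "information", "surprise", or "uncertainty" inherent to the variable's possible outcomes. Given a discrete random variable $X$, which takes values in the alphabet $\mathcal{X}$ and is distributed according to $p\colon \mathcal{X} \to [0, 1]$, the entropy is

$$\mathrm{H}(X) := -\sum_{x \in \mathcal{X}} p(x) \log p(x),$$

where $\Sigma$ denotes the sum over the variable's possible values.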

The concept of information entropy was introduced by Claude Shannon in his 1948 paper "A Mathematical Theory of Communication", and is also referred to as Shannon entropy. Shannon's theory defines a data communication system composed of three elements: a source of data, a communication channel, and a receiver. The "fundamental problem of communication" – as expressed by Shannon – is for the receiver to be able to identify what data was generated by the source, based on the signal it receives through the channel. Shannon considered various ways to encode, compress, and transmit messages from a data source, and proved in his famous source coding theorem that the entropy represents an absolute mathematical limit on how well data from the source can be losslessly compressed onto a perfectly noiseless channel. Shannon strengthened this result considerably for noisy channels in his noisy-channel coding theorem.

Entropy in information theory is directly analogous to the entropy in statistical thermodynamics. The analogy results when the values of the random variable designate energies of microstates, so that Gibbs's formula for the entropy is formally identical to Shannon's formula. Entropy has relevance to other areas of mathematics such as combinatorics and machine learning. The definition can be derived from a set of axioms establishing that entropy should be a measure of how informative the average outcome of a variable is. For a continuous random variable, differential entropy is analogous to entropy.

Introduction

The core idea of information theory is that the "informational value" of a communicated message depends on the degree to which the content of the message is surprising. If a highly likely event occurs, the message carries very little information. On the other hand, if a highly unlikely event occurs, the message is much more informative. For instance, the knowledge that some particular number will not be the winning number of a lottery provides very little information, because any particular chosen number will almost certainly not win. However, knowledge that a particular number will win a lottery has high informational value because it communicates the outcome of a very low probability event.

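The information content, also called the surprisal or self-information, of an event $E$ is a function which increases as the probability $p(E)$ of the event decreases. When $p(E)$ is close to 1, the surprisal of the event is low, but if $p(E)$ is close to 0, the surprisal of the event is high. This relationship is described by the function

$$\log\left(\frac{1}{p(E)}\right),$$

which gives 0 surprise when the probability of the event is 1.

Hence, we can define the information, or surprisal, of an event $E$ by

$$\operatorname{I}(E) = \log\left(\frac{1}{p(E)}\right) = -\log(p(E)).$$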

Entropy measures the expected (i.e., average) amount of information conveyed by identifying the outcome of a random trial. This implies that casting a die has higher entropy than tossing a coin because each outcome of a die toss has smaller probability ($p = 1/6$) than each outcome of a coin toss ($p = 1/2$).

Consider a coin with probability $p$ of landing on heads and probability $1 - p$ of landing on tails. The maximum surprise is when $p = 1/2$, for which one outcome is not expected over the other. In this case a coin flip has an entropy of one bit. (Similarly, one trit with equiprobable values contains $\log_2 3$ (about 1.58496) bits of information because it can have one of three values.) The minimum surprise is when $p = 0$ or $p = 1$, when the event outcome is known ahead of time, and the entropy is zero bits. When the entropy is zero bits, this is sometimes referred to as unity, where there is no uncertainty at all – no freedom of choice – no information. Other values of $p$ give entropies between zero and one bits.

Information theory is useful to calculate the smallest amount of information required to convey a message, as in data compression. For example, consider the transmission of sequences comprising the 4 characters 'A', 'B', 'C', and 'D' over a binary channel. If all 4 letters are equally likely (25%), one cannot do better than using two bits to encode each letter. 'A' might code as '00', 'B' as '01', 'C' as '10', and 'D' as '11'. However, if the probabilities of each letter are unequal, say 'A' occurs with 70% probability, 'B' with 26%, and 'C' and 'D' with 2% each, one could assign variable length codes. In this case, 'A' would be coded as '0', 'B' as '10', 'C' as '110', and 'D' as '111'. With this representation, 70% of the time only one bit needs to be sent, 26% of the time two bits, and only 4% of the time 3 bits. On average, fewer than 2 bits are required since the entropy is lower (owing to the high prevalence of 'A' followed by 'B' – together 96% of characters). The calculation of the sum of probability-weighted log probabilities measures and captures this effect.

English text, treated as a string of characters, has fairly low entropy; i.e. it is fairly predictable. We can be fairly certain that, for example, 'e' will be far more common than 'z', that the combination 'qu' will be much more common than any other combination with a 'q' in it, and that the combination 'th' will be more common than 'z', 'q', or 'qu'. After the first few letters one can often guess the rest of the word. English text has between 0.6 and 1.3 bits of entropy per character of the message.
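The four-letter example can be checked numerically. The following is a minimal sketch, not a reference implementation; the helper name `entropy_bits` is an assumption made for illustration, while the probabilities and code words are those given in the text.

```python
import math

def entropy_bits(probs):
    """Shannon entropy in bits; zero-probability terms contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Letter probabilities and code words taken from the example in the text.
probs = {"A": 0.70, "B": 0.26, "C": 0.02, "D": 0.02}
codes = {"A": "0", "B": "10", "C": "110", "D": "111"}

# Expected number of bits sent per letter with the variable-length code.
avg_len = sum(probs[s] * len(codes[s]) for s in probs)
h = entropy_bits(probs.values())

print(f"entropy        = {h:.3f} bits/letter")
print(f"average length = {avg_len:.2f} bits/letter")
```

The average code length lies between the entropy and the 2 bits per letter of the fixed-length code, which is the effect the paragraph describes.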

Definition

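Named after Boltzmann's Η-theorem, Shannon defined the entropy Η (Greek capital letter eta) of a discrete random variable $X$, which takes values in the alphabet $\mathcal{X}$ and is distributed according to $p\colon \mathcal{X} \to [0, 1]$ such that $p(x) := \mathbb{P}[X = x]$:

$$\mathrm{H}(X) = \mathbb{E}[\operatorname{I}(X)] = \mathbb{E}[-\log p(X)].$$

Here $\mathbb{E}$ is the expected value operator, and $\operatorname{I}$ is the information content of $X$. $\operatorname{I}(X)$ is itself a random variable.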

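The entropy can explicitly be written as:

$$\mathrm{H}(X) = -\sum_{x \in \mathcal{X}} p(x) \log_b p(x),$$

where $b$ is the base of the logarithm used.

In the case of $p(x) = 0$ for some $x \in \mathcal{X}$, the value of the corresponding summand $0 \log_b(0)$ is taken to be 0, which is consistent with the limit:

$$\lim_{p \to 0^{+}} p \log(p) = 0.$$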

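One may also define the conditional entropy of two variables $X$ and $Y$ taking values from sets $\mathcal{X}$ and $\mathcal{Y}$ respectively, as:

$$\mathrm{H}(X|Y) = -\sum_{x, y \in \mathcal{X} \times \mathcal{Y}} p_{X,Y}(x, y) \log \frac{p_{X,Y}(x, y)}{p_Y(y)},$$

where $p_{X,Y}(x, y) := \mathbb{P}[X = x, Y = y]$ and $p_Y(y) = \mathbb{P}[Y = y]$.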
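As an illustration of the conditional entropy, the sketch below evaluates it for a small joint distribution. The function name `conditional_entropy` and the joint table are hypothetical choices made for this example.

```python
import math
from collections import defaultdict

def conditional_entropy(joint):
    """H(X|Y) in bits from a dict {(x, y): p(x, y)}."""
    p_y = defaultdict(float)
    for (_, y), p in joint.items():
        p_y[y] += p  # marginal distribution of Y
    return -sum(p * math.log2(p / p_y[y])
                for (_, y), p in joint.items() if p > 0)

# Hypothetical joint distribution: X is a fair bit and Y agrees with X
# with probability 0.9.
joint = {(0, 0): 0.45, (0, 1): 0.05, (1, 0): 0.05, (1, 1): 0.45}
print(conditional_entropy(joint))
```

When $Y$ determines $X$ the result is zero, and when the two are independent it equals the entropy of $X$.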

Measure theory

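Entropy can be formally defined in the language of measure theory as follows:[11] Let $(X, \Sigma, \mu)$ be a probability space. Let $A \in \Sigma$ be an event. The surprisal of $A$ is

$$\sigma_\mu(A) = -\ln \mu(A).$$

The expected surprisal of $A$ is

$$h_\mu(A) = \mu(A)\, \sigma_\mu(A).$$

A $\mu$-almost partition is a set family $P \subseteq \mathcal{P}(X)$ such that $\mu(\cup P) = 1$ and $\mu(A \cap B) = 0$ for all distinct $A, B \in P$. (This is a relaxation of the usual conditions for a partition.) The entropy of $P$ is

$$\mathrm{H}_\mu(P) = \sum_{A \in P} h_\mu(A).$$

Let $M$ be a sigma-algebra on $X$. The entropy of $M$ is

$$\mathrm{H}_\mu(M) = \sup_{P \subseteq M} \mathrm{H}_\mu(P).$$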

Ellerman definition

David Ellerman wanted to explain why conditional entropy and other functions had properties similar to functions in probability theory. He claimed that previous definitions based on measure theory only worked with powers of 2.

Ellerman created a "logic of partitions" that is the dual of subsets of a universal set. Information is quantified as "dits" (distinctions), a measure on partitions. "Dits" can be converted into Shannon's bits to obtain the formulas for conditional entropy and related quantities.

Example

For a coin flip (2 possible values) the entropy is at most 1 bit, so communicating the outcome requires an average of at most 1 bit (exactly 1 bit for a fair coin). The result of a fair die (6 possible values) would have entropy $\log_2 6$ bits.

Consider tossing a coin with known, not necessarily fair, probabilities of coming up heads or tails; this can be modelled as a Bernoulli process.

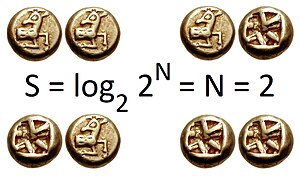

The entropy of the unknown result of the next toss of the coin is maximized if the coin is fair (that is, if heads and tails both have equal probability 1/2). This is the situation of maximum uncertainty as it is most difficult to predict the outcome of the next toss; the result of each toss of the coin delivers one full bit of information. This is because

$$\mathrm{H}(X) = -\sum_{i=1}^{n} p(x_i) \log_b p(x_i) = -\sum_{i=1}^{2} \frac{1}{2} \log_2 \frac{1}{2} = -\sum_{i=1}^{2} \frac{1}{2} \cdot (-1) = 1.$$

However, if we know the coin is not fair, but comes up heads or tails with probabilities $p$ and $q$, where $p \neq q$, then there is less uncertainty. Every time it is tossed, one side is more likely to come up than the other. The reduced uncertainty is quantified in a lower entropy: on average each toss of the coin delivers less than one full bit of information. For example, if $p = 0.7$, then

$$\mathrm{H}(X) = -p \log_2 p - q \log_2 q = -0.7 \log_2(0.7) - 0.3 \log_2(0.3) \approx 0.7 \cdot 0.5146 + 0.3 \cdot 1.7370 \approx 0.8813 < 1.$$

Uniform probability yields maximum uncertainty and therefore maximum entropy. Entropy, then, can only decrease from the value associated with uniform probability. The extreme case is that of a double-headed coin that never comes up tails, or a double-tailed coin that never results in a head. Then there is no uncertainty. The entropy is zero: each toss of the coin delivers no new information as the outcome of each coin toss is always certain.
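The behaviour of the coin's entropy across different biases can be tabulated with a few lines of code. This is a minimal sketch; the function name `binary_entropy` is chosen here for illustration.

```python
import math

def binary_entropy(p):
    """Entropy in bits of a coin that lands heads with probability p."""
    if p in (0.0, 1.0):
        return 0.0  # the summand 0 * log(0) is taken to be 0
    q = 1.0 - p
    return -p * math.log2(p) - q * math.log2(q)

for p in (0.0, 0.1, 0.3, 0.5, 0.7, 1.0):
    print(f"p = {p:.1f}  H = {binary_entropy(p):.4f} bits")
```

The values are symmetric about $p = 1/2$, peak there at one bit, and fall to zero at the two certain cases.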

Entropy can be normalized by dividing it by information length. This ratio is called metric entropy and is a measure of the randomness of the information.

Characterization

To understand the meaning of $-\sum p_i \log(p_i)$, first define an information function $\operatorname{I}$ in terms of an event $i$ with probability $p_i$. The amount of information acquired due to the observation of event $i$ follows from Shannon's solution of the fundamental properties of information:

- $\operatorname{I}(p)$ is monotonically decreasing in $p$: an increase in the probability of an event decreases the information from an observed event, and vice versa.

- $\operatorname{I}(1) = 0$: events that always occur do not communicate information.

- $\operatorname{I}(p_1 \cdot p_2) = \operatorname{I}(p_1) + \operatorname{I}(p_2)$: the information learned from independent events is the sum of the information learned from each event.

Given two independent events, if the first event can yield one of $n$ equiprobable outcomes and another has one of $m$ equiprobable outcomes then there are $mn$ equiprobable outcomes of the joint event. This means that if $\log_2(n)$ bits are needed to encode the first value and $\log_2(m)$ to encode the second, one needs $\log_2(mn) = \log_2(m) + \log_2(n)$ to encode both.

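Shannon discovered that a suitable choice of $\operatorname{I}$ is given by:

$$\operatorname{I}(p) = \log\left(\frac{1}{p}\right) = -\log(p).$$

In fact, the only possible values of $\operatorname{I}$ are $\operatorname{I}(u) = k \log u$ for $k < 0$. Additionally, choosing a value for $k$ is equivalent to choosing a value $x > 1$ for $k = -1/\log x$, so that $x$ corresponds to the base for the logarithm. Thus, entropy is characterized by the above properties.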


The different units of information (bits for the binary logarithm $\log_2$, nats for the natural logarithm $\ln$, bans for the decimal logarithm $\log_{10}$ and so on) are constant multiples of each other. For instance, in case of a fair coin toss, heads provides $\log_2(2) = 1$ bit of information, which is approximately 0.693 nats or 0.301 decimal digits. Because of additivity, $n$ tosses provide $n$ bits of information, which is approximately $0.693n$ nats or $0.301n$ decimal digits.

The meaning of the events observed (the meaning of messages) does not matter in the definition of entropy. Entropy only takes into account the probability of observing a specific event, so the information it encapsulates is information about the underlying probability distribution, not the meaning of the events themselves.

Alternative characterization

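Another characterization of entropy uses the following properties. We denote $p_i = \Pr(X = x_i)$ and $\mathrm{H}_n(p_1, \ldots, p_n) = \mathrm{H}(X)$.

- Continuity: $\mathrm{H}$ should be continuous, so that changing the values of the probabilities by a very small amount should only change the entropy by a small amount.

- Symmetry: $\mathrm{H}$ should be unchanged if the outcomes $x_i$ are re-ordered. That is, $\mathrm{H}_n(p_1, p_2, \ldots, p_n) = \mathrm{H}_n(p_{i_1}, p_{i_2}, \ldots, p_{i_n})$ for any permutation $\{i_1, \ldots, i_n\}$ of $\{1, \ldots, n\}$.

- Maximum: $\mathrm{H}_n$ should be maximal if all the outcomes are equally likely, i.e. $\mathrm{H}_n(p_1, \ldots, p_n) \le \mathrm{H}_n\left(\frac{1}{n}, \ldots, \frac{1}{n}\right)$.

- Increasing number of outcomes: for equiprobable events, the entropy should increase with the number of outcomes, i.e. $\mathrm{H}_n\left(\frac{1}{n}, \ldots, \frac{1}{n}\right) < \mathrm{H}_{n+1}\left(\frac{1}{n+1}, \ldots, \frac{1}{n+1}\right)$.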

- Additivity: given an ensemble of $n$ uniformly distributed elements that are divided into $k$ boxes (sub-systems) with $b_1, \ldots, b_k$ elements each, the entropy of the whole ensemble should be equal to the sum of the entropy of the system of boxes and the individual entropies of the boxes, each weighted with the probability of being in that particular box.

The rule of additivity has the following consequences: for positive integers $b_i$ where $b_1 + \cdots + b_k = n$,

$$\mathrm{H}_n\left(\frac{1}{n}, \ldots, \frac{1}{n}\right) = \mathrm{H}_k\left(\frac{b_1}{n}, \ldots, \frac{b_k}{n}\right) + \sum_{i=1}^{k} \frac{b_i}{n}\, \mathrm{H}_{b_i}\left(\frac{1}{b_i}, \ldots, \frac{1}{b_i}\right).$$

Choosing $k = n$, $b_1 = \cdots = b_n = 1$ this implies that the entropy of a certain outcome is zero: $\mathrm{H}_1(1) = 0$. This implies that the efficiency of a source alphabet with $n$ symbols can be defined simply as being equal to its $n$-ary entropy. See also Redundancy (information theory).

Alternative characterization via additivity and subadditivity

Another succinct axiomatic characterization of Shannon entropy was given by Aczél, Forte and Ng, via the following properties:

- Subadditivity: $\mathrm{H}(X, Y) \le \mathrm{H}(X) + \mathrm{H}(Y)$ for jointly distributed random variables $X, Y$.

- Additivity: $\mathrm{H}(X, Y) = \mathrm{H}(X) + \mathrm{H}(Y)$ when the random variables $X, Y$ are independent.

- Expansibility: $\mathrm{H}_{n+1}(p_1, \ldots, p_n, 0) = \mathrm{H}_n(p_1, \ldots, p_n)$, i.e., adding an outcome with probability zero does not change the entropy.

- Symmetry: $\mathrm{H}_n(p_1, \ldots, p_n)$ is invariant under permutation of $p_1, \ldots, p_n$.

- Small for small probabilities: $\lim_{q \to 0^{+}} \mathrm{H}_2(1 - q, q) = 0$.

It was shown that any function $\mathrm{H}$ satisfying the above properties must be a constant multiple of Shannon entropy, with a non-negative constant. Compared to the previously mentioned characterizations of entropy, this characterization focuses on the properties of entropy as a function of random variables (subadditivity and additivity), rather than the properties of entropy as a function of the probability vector $p_1, \ldots, p_n$.

It is worth noting that if we drop the "small for small probabilities" property, then $\mathrm{H}$ must be a non-negative linear combination of the Shannon entropy and the Hartley entropy.

Further properties

The Shannon entropy satisfies the following properties, for some of which it is useful to interpret entropy as the expected amount of information learned (or uncertainty eliminated) by revealing the value of a random variable X:

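- Adding or removing an event with probability zero does not contribute to the entropy: $\mathrm{H}_{n+1}(p_1, \ldots, p_n, 0) = \mathrm{H}_n(p_1, \ldots, p_n)$.

- It can be confirmed using the Jensen inequality (the logarithm is concave) that $\mathrm{H}(X) = \mathbb{E}\left[\log_b \frac{1}{p(X)}\right] \le \log_b\left(\mathbb{E}\left[\frac{1}{p(X)}\right]\right) = \log_b(n)$. This maximal entropy of $\log_b(n)$ is effectively attained by a source alphabet having a uniform probability distribution: uncertainty is maximal when all possible events are equiprobable.

- The entropy or the amount of information revealed by evaluating $(X, Y)$ (that is, evaluating $X$ and $Y$ simultaneously) is equal to the information revealed by conducting two consecutive experiments: first evaluating the value of $Y$, then revealing the value of $X$ given that you know the value of $Y$. This may be written as: $\mathrm{H}(X, Y) = \mathrm{H}(X|Y) + \mathrm{H}(Y) = \mathrm{H}(Y|X) + \mathrm{H}(X)$.

- If $Y = f(X)$ where $f$ is a function, then $\mathrm{H}(f(X)|X) = 0$. Applying the previous formula to $\mathrm{H}(X, f(X))$ yields $\mathrm{H}(X) + \mathrm{H}(f(X)|X) = \mathrm{H}(f(X)) + \mathrm{H}(X|f(X))$, so $\mathrm{H}(f(X)) \le \mathrm{H}(X)$: the entropy of a variable can only decrease when the latter is passed through a function.

- If $X$ and $Y$ are two independent random variables, then knowing the value of $Y$ doesn't influence our knowledge of the value of $X$ (since the two don't influence each other by independence): $\mathrm{H}(X|Y) = \mathrm{H}(X)$.

- More generally, for any random variables $X$ and $Y$, we have $\mathrm{H}(X|Y) \le \mathrm{H}(X)$.

- The entropy of two simultaneous events is no more than the sum of the entropies of each individual event, i.e., $\mathrm{H}(X, Y) \le \mathrm{H}(X) + \mathrm{H}(Y)$, with equality if and only if the two events are independent.

- The entropy $\mathrm{H}(p)$ is concave in the probability mass function $p$, i.e. $\mathrm{H}(\lambda p_1 + (1 - \lambda) p_2) \ge \lambda \mathrm{H}(p_1) + (1 - \lambda) \mathrm{H}(p_2)$ for all probability mass functions $p_1, p_2$ and $0 \le \lambda \le 1$.

- Accordingly, the negative entropy (negentropy) function is convex, and its convex conjugate is LogSumExp.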
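Several of the properties listed above can be checked numerically on a small example. The sketch below is illustrative only: the helper names `H`, `marginal` and `pushforward`, as well as the joint distribution, are assumptions made for this example.

```python
import math
from collections import Counter

def H(dist):
    """Entropy in bits of a dict {outcome: probability}."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)

def marginal(joint, axis):
    """Marginal distribution of one coordinate of a joint distribution."""
    out = Counter()
    for xy, p in joint.items():
        out[xy[axis]] += p
    return dict(out)

def pushforward(dist, f):
    """Distribution of f(X) when X has distribution dist."""
    out = Counter()
    for x, p in dist.items():
        out[f(x)] += p
    return dict(out)

# Hypothetical joint distribution of two dependent bits.
joint = {(0, 0): 0.4, (0, 1): 0.1, (1, 0): 0.2, (1, 1): 0.3}
p_x, p_y = marginal(joint, 0), marginal(joint, 1)

# Conditional entropy computed directly from its definition.
h_x_given_y = -sum(p * math.log2(p / p_y[y]) for (x, y), p in joint.items())

print(H(joint), h_x_given_y + H(p_y))   # chain rule: the two values agree
print(H(joint) <= H(p_x) + H(p_y))      # subadditivity

# Passing a fair die through the parity function cannot increase entropy.
die = {k: 1 / 6 for k in range(1, 7)}
parity = pushforward(die, lambda k: k % 2)
print(H(parity), "<=", H(die))
```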

Aspects

Relationship to thermodynamic entropy

The inspiration for adopting the word entropy in information theory came from the close resemblance between Shannon's formula and very similar known formulae from statistical mechanics.

In statistical thermodynamics the most general formula for the thermodynamic entropy $S$ of a thermodynamic system is the Gibbs entropy,

$$S = -k_{\text{B}} \sum_i p_i \ln p_i,$$

where $k_{\text{B}}$ is the Boltzmann constant, and $p_i$ is the probability of a microstate. The Gibbs entropy was defined by J. Willard Gibbs in 1878 after earlier work by Boltzmann (1872).

The Gibbs entropy translates over almost unchanged into the world of quantum physics to give the von Neumann entropy, introduced by John von Neumann in 1927,

$$S = -k_{\text{B}}\, \mathrm{Tr}(\rho \ln \rho),$$

where $\rho$ is the density matrix of the quantum mechanical system and Tr is the trace.

At an everyday practical level, the links between information entropy and thermodynamic entropy are not evident. Physicists and chemists are apt to be more interested in changes in entropy as a system spontaneously evolves away from its initial conditions, in accordance with the second law of thermodynamics, rather than an unchanging probability distribution. As the minuteness of the Boltzmann constant kB indicates, the changes in S / kB for even tiny amounts of substances in chemical and physical processes represent amounts of entropy that are extremely large compared to anything in data compression or signal processing. In classical thermodynamics, entropy is defined in terms of macroscopic measurements and makes no reference to any probability distribution, which is central to the definition of information entropy.

The connection between thermodynamics and what is now known as information theory was first made by Ludwig Boltzmann and expressed by his famous equation:

$$S = k_{\text{B}} \ln W,$$

where $S$ is the thermodynamic entropy of a particular macrostate (defined by thermodynamic parameters such as temperature, volume, energy, etc.), $W$ is the number of microstates (various combinations of particles in various energy states) that can yield the given macrostate, and $k_{\text{B}}$ is the Boltzmann constant. It is assumed that each microstate is equally likely, so that the probability of a given microstate is $p_i = 1/W$. When these probabilities are substituted into the above expression for the Gibbs entropy (or equivalently $k_{\text{B}}$ times the Shannon entropy), Boltzmann's equation results. In information theoretic terms, the information entropy of a system is the amount of "missing" information needed to determine a microstate, given the macrostate.
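The substitution described in this paragraph can be written out in one line. With $W$ equally likely microstates, each of probability $p_i = 1/W$, the Gibbs sum has $W$ identical terms:

$$
\begin{aligned}
S &= -k_{\text{B}} \sum_{i=1}^{W} \frac{1}{W} \ln \frac{1}{W} \\
  &= -k_{\text{B}} \cdot W \cdot \frac{1}{W} \cdot (-\ln W) \\
  &= k_{\text{B}} \ln W.
\end{aligned}
$$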

In the view of Jaynes (1957), thermodynamic entropy, as explained by statistical mechanics, should be seen as an application of Shannon's information theory: the thermodynamic entropy is interpreted as being proportional to the amount of further Shannon information needed to define the detailed microscopic state of the system, that remains uncommunicated by a description solely in terms of the macroscopic variables of classical thermodynamics, with the constant of proportionality being just the Boltzmann constant. Adding heat to a system increases its thermodynamic entropy because it increases the number of possible microscopic states of the system that are consistent with the measurable values of its macroscopic variables, making any complete state description longer. (See article: maximum entropy thermodynamics). Maxwell's demon can (hypothetically) reduce the thermodynamic entropy of a system by using information about the states of individual molecules; but, as Landauer (from 1961) and co-workers have shown, to function the demon himself must increase thermodynamic entropy in the process, by at least the amount of Shannon information he proposes to first acquire and store; and so the total thermodynamic entropy does not decrease (which resolves the paradox). Landauer's principle imposes a lower bound on the amount of heat a computer must generate to process a given amount of information, though modern computers are far less efficient.

Data compression

Shannon's definition of entropy, when applied to an information source, can determine the minimum channel capacity required to reliably transmit the source as encoded binary digits. Shannon's entropy measures the information contained in a message as opposed to the portion of the message that is determined (or predictable). Examples of the latter include redundancy in language structure or statistical properties relating to the occurrence frequencies of letter or word pairs, triplets etc. The minimum channel capacity can be realized in theory by using the typical set or in practice using Huffman, Lempel–Ziv or arithmetic coding. (See also Kolmogorov complexity.) In practice, compression algorithms deliberately include some judicious redundancy in the form of checksums to protect against errors. The entropy rate of a data source is the average number of bits per symbol needed to encode it. Shannon's experiments with human predictors show an information rate between 0.6 and 1.3 bits per character in English; the PPM compression algorithm can achieve a compression ratio of 1.5 bits per character in English text.

If a compression scheme is lossless – one in which you can always recover the entire original message by decompression – then a compressed message has the same quantity of information as the original but communicated in fewer characters. It has more information (higher entropy) per character. A compressed message has less redundancy. Shannon's source coding theorem states a lossless compression scheme cannot compress messages, on average, to have more than one bit of information per bit of message, but that any value less than one bit of information per bit of message can be attained by employing a suitable coding scheme. The entropy of a message per bit multiplied by the length of that message is a measure of how much total information the message contains. Shannon's theorem also implies that no lossless compression scheme can shorten all messages. If some messages come out shorter, at least one must come out longer due to the pigeonhole principle. In practical use, this is generally not a problem, because one is usually only interested in compressing certain types of messages, such as a document in English, as opposed to gibberish text, or digital photographs rather than noise, and it is unimportant if a compression algorithm makes some unlikely or uninteresting sequences larger.
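The contrast between compressible and incompressible inputs can be observed with any general-purpose lossless compressor. The sketch below uses Python's standard `zlib` module purely as a convenient stand-in (it is not one of the schemes analysed in the text); the helper `byte_entropy`, the sample sentence and the fixed random seed are assumptions made for illustration.

```python
import math
import random
import zlib
from collections import Counter

def byte_entropy(data):
    """Empirical order-0 entropy of a byte string, in bits per byte."""
    n = len(data)
    return -sum(c / n * math.log2(c / n) for c in Counter(data).values())

rng = random.Random(0)  # fixed seed so the run is repeatable
text = b"the quick brown fox jumps over the lazy dog. " * 200
noise = bytes(rng.getrandbits(8) for _ in range(len(text)))

for name, data in (("text", text), ("noise", noise)):
    packed = zlib.compress(data, 9)
    print(f"{name}: {len(data)} -> {len(packed)} bytes, "
          f"order-0 entropy {byte_entropy(data):.2f} bits/byte")
```

The redundant text shrinks substantially, while the pseudo-random bytes come out no shorter than they went in, in line with the pigeonhole argument above.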

A 2011 study in Science estimates the world's technological capacity to store and communicate optimally compressed information normalized on the most effective compression algorithms available in the year 2007, therefore estimating the entropy of the technologically available sources.

All figures are in entropically compressed exabytes.

| Type of information | 1986 | 2007 |
|---|---|---|
| Storage | 2.6 | 295 |
| Broadcast | 432 | 1900 |
| Telecommunications | 0.281 | 65 |

The authors estimate humankind's technological capacity to store information (fully entropically compressed) in 1986 and again in 2007. They break the information into three categories: storing information on a medium, receiving information through one-way broadcast networks, and exchanging information through two-way telecommunication networks.

Entropy as a measure of diversity

Entropy is one of several ways to measure biodiversity, and is applied in the form of the Shannon index. A diversity index is a quantitative statistical measure of how many different types exist in a dataset, such as species in a community, accounting for ecological richness, evenness, and dominance. Specifically, Shannon entropy is the logarithm of $^1D$, the true diversity index with parameter equal to 1. The Shannon index is related to the proportional abundances of types.
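The relationship between the Shannon index and the true diversity of order 1 can be sketched as follows. The function names and the two species-count vectors are hypothetical and chosen only for illustration.

```python
import math

def shannon_index(counts):
    """Shannon index (natural logarithm) from raw abundance counts."""
    total = sum(counts)
    return -sum(c / total * math.log(c / total) for c in counts if c > 0)

def true_diversity_order1(counts):
    """Effective number of types: the exponential of the Shannon index."""
    return math.exp(shannon_index(counts))

even = [25, 25, 25, 25]   # hypothetical community, four equally common species
skewed = [97, 1, 1, 1]    # hypothetical community dominated by one species
print(true_diversity_order1(even), true_diversity_order1(skewed))
```

For the perfectly even community the effective number of types equals the actual number of species; for the dominated community it is much closer to one.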

Limitations of entropy

There are a number of entropy-related concepts that mathematically quantify information content in some way:

- the self-information of an individual message or symbol taken from a given probability distribution,

- the entropy of a given probability distribution of messages or symbols, and

- the entropy rate of a stochastic process.

(The "rate of self-information" can also be defined for a particular sequence of messages or symbols generated by a given stochastic process: this will always be equal to the entropy rate in the case of a stationary process.) Other quantities of information are also used to compare or relate different sources of information.

It is important not to confuse the above concepts. Often it is only clear from context which one is meant. For example, when someone says that the "entropy" of the English language is about 1 bit per character, they are actually modeling the English language as a stochastic process and talking about its entropy rate. Shannon himself used the term in this way.

If very large blocks are used, the estimate of per-character entropy rate may become artificially low because the probability distribution of the sequence is not known exactly; it is only an estimate. If one considers the text of every book ever published as a sequence, with each symbol being the text of a complete book, and if there are N published books, and each book is only published once, the estimate of the probability of each book is 1/N, and the entropy (in bits) is −log2(1/N) = log2(N). As a practical code, this corresponds to assigning each book a unique identifier and using it in place of the text of the book whenever one wants to refer to the book. This is enormously useful for talking about books, but it is not so useful for characterizing the information content of an individual book, or of language in general: it is not possible to reconstruct the book from its identifier without knowing the probability distribution, that is, the complete text of all the books. The key idea is that the complexity of the probabilistic model must be considered. Kolmogorov complexity is a theoretical generalization of this idea that allows the consideration of the information content of a sequence independent of any particular probability model; it considers the shortest program for a universal computer that outputs the sequence. A code that achieves the entropy rate of a sequence for a given model, plus the codebook (i.e. the probabilistic model), is one such program, but it may not be the shortest.

The Fibonacci sequence is 1, 1, 2, 3, 5, 8, 13, .... Treating the sequence as a message and each number as a symbol, there are almost as many symbols as there are characters in the message, giving an entropy of approximately $\log_2(n)$. The first 128 symbols of the Fibonacci sequence have an entropy of approximately 7 bits/symbol, but the sequence can be expressed using a formula [$F(n) = F(n-1) + F(n-2)$ for $n = 3, 4, 5, \ldots$, $F(1) = 1$, $F(2) = 1$] and this formula has a much lower entropy and applies to any length of the Fibonacci sequence.
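The Fibonacci figure can be reproduced directly from symbol frequencies. This is a small sketch with illustrative helper names; it treats each number as one symbol, as the paragraph does.

```python
import math
from collections import Counter

def empirical_entropy(symbols):
    """Entropy in bits per symbol of the empirical symbol frequencies."""
    n = len(symbols)
    return -sum(c / n * math.log2(c / n) for c in Counter(symbols).values())

def fibonacci(n):
    """First n Fibonacci numbers, starting 1, 1, 2, ..."""
    a, b, out = 1, 1, []
    for _ in range(n):
        out.append(a)
        a, b = b, a + b
    return out

seq = fibonacci(128)
print(empirical_entropy(seq))  # slightly below log2(128) = 7
```

Only the value 1 repeats, so the result falls just short of 7 bits per symbol even though a two-line recurrence generates the whole sequence.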

Limitations of entropy in cryptography

In cryptanalysis, entropy is often roughly used as a measure of the unpredictability of a cryptographic key, though its real uncertainty is unmeasurable. For example, a 128-bit key that is uniformly and randomly generated has 128 bits of entropy. It also takes (on average) $2^{127}$ guesses to break by brute force. Entropy fails to capture the number of guesses required if the possible keys are not chosen uniformly. Instead, a measure called guesswork can be used to measure the effort required for a brute force attack.
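The gap between entropy and guessing effort can be made concrete on a toy key space. The sketch below takes guesswork to be the expected number of guesses when keys are tried in order of decreasing probability; the 16-bit key space and the skewed distribution are hypothetical and chosen to keep the computation small.

```python
import math

def entropy_bits(probs):
    return -sum(p * math.log2(p) for p in probs if p > 0)

def guesswork(probs):
    """Expected number of guesses when keys are tried most-likely first."""
    ordered = sorted(probs, reverse=True)
    return sum(rank * p for rank, p in enumerate(ordered, start=1))

n = 2 ** 16
uniform = [1 / n] * n
# Hypothetical skewed source: one key is chosen half the time,
# the remaining keys share the other half equally.
skewed = [0.5] + [0.5 / (n - 1)] * (n - 1)

for name, dist in (("uniform", uniform), ("skewed", skewed)):
    print(f"{name}: {entropy_bits(dist):.2f} bits, "
          f"{guesswork(dist):.1f} expected guesses")
```

The skewed source has only about 9 bits of entropy, yet an attacker still needs thousands of guesses on average, far more than a 9-bit uniform key would require.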

Other problems may arise from non-uniform distributions used in cryptography. Consider, for example, a 1,000,000-digit binary one-time pad using exclusive or. If the pad has 1,000,000 bits of entropy, it is perfect. If the pad has 999,999 bits of entropy, evenly distributed (each individual bit of the pad having 0.999999 bits of entropy), it may provide good security. But if the pad has 999,999 bits of entropy, where the first bit is fixed and the remaining 999,999 bits are perfectly random, the first bit of the ciphertext will not be encrypted at all.

Data as a Markov process

A common way to define entropy for text is based on the Markov model of text. For an order-0 source (each character is selected independent of the last characters), the binary entropy is:

$$\mathrm{H}(\mathcal{S}) = -\sum_i p_i \log p_i,$$

where $p_i$ is the probability of $i$. For a first-order Markov source (one in which the probability of selecting a character is dependent only on the immediately preceding character), the entropy rate is:

$$\mathrm{H}(\mathcal{S}) = -\sum_i p_i \sum_j p_i(j) \log p_i(j),$$

where $i$ is a state (certain preceding characters) and $p_i(j)$ is the probability of $j$ given $i$ as the previous character.

For a second order Markov source, the entropy rate is

$$\mathrm{H}(\mathcal{S}) = -\sum_i p_i \sum_j p_i(j) \sum_k p_{i,j}(k) \log p_{i,j}(k).$$
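The order-0 and first-order formulas can be estimated from a sample string by replacing the probabilities with observed frequencies. The following is a rough sketch under that assumption; the function names are illustrative, and the state probabilities $p_i$ are approximated by how often each character occurs as a predecessor.

```python
import math
from collections import Counter, defaultdict

def order0_entropy(text):
    """Bits per character, treating characters as independent draws."""
    n = len(text)
    return -sum(c / n * math.log2(c / n) for c in Counter(text).values())

def order1_entropy_rate(text):
    """Bits per character given the immediately preceding character."""
    transitions = defaultdict(Counter)
    for prev, cur in zip(text, text[1:]):
        transitions[prev][cur] += 1
    total = len(text) - 1
    rate = 0.0
    for nxt in transitions.values():
        n_i = sum(nxt.values())
        p_i = n_i / total  # empirical probability of state i
        rate -= p_i * sum(c / n_i * math.log2(c / n_i) for c in nxt.values())
    return rate

sample = "abababababababab"
print(order0_entropy(sample), order1_entropy_rate(sample))
```

For the alternating sample the order-0 estimate is one bit per character, while the first-order estimate is zero because each character is fully determined by its predecessor.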

Efficiency (normalized entropy)

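A source alphabet with non-uniform distribution will have less entropy than if those symbols had uniform distribution (i.e. the "optimized alphabet"). This deficiency in entropy can be expressed as a ratio called efficiency:

$$\eta(X) = \frac{H}{H_{\max}} = -\sum_{i=1}^{n} \frac{p(x_i) \log_b(p(x_i))}{\log_b(n)}.$$

Applying the basic properties of the logarithm, this quantity can also be expressed as:

$$\eta(X) = -\sum_{i=1}^{n} \frac{p(x_i) \log_b(p(x_i))}{\log_b(n)} = \sum_{i=1}^{n} \frac{\log_b\left(p(x_i)^{-p(x_i)}\right)}{\log_b(n)} = \sum_{i=1}^{n} \log_n\left(p(x_i)^{-p(x_i)}\right) = \log_n\left(\prod_{i=1}^{n} p(x_i)^{-p(x_i)}\right).$$

Efficiency has utility in quantifying the effective use of a communication channel. This formulation is also referred to as the normalized entropy, as the entropy is divided by the maximum entropy $\log_b(n)$. Furthermore, the efficiency is indifferent to the choice of (positive) base $b$, as indicated by the absence of $b$ from the final logarithm above.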
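The base-independence of the efficiency is easy to confirm numerically. The sketch below uses an illustrative function name and reuses the four-letter probabilities from the introduction; it assumes an alphabet of at least two symbols so that the maximum entropy is non-zero.

```python
import math

def efficiency(probs, base=2):
    """Normalized entropy H / H_max for a distribution over len(probs) symbols."""
    n = len(probs)
    h = -sum(p * math.log(p, base) for p in probs if p > 0)
    return h / math.log(n, base)

probs = [0.70, 0.26, 0.02, 0.02]
print(efficiency(probs, 2), efficiency(probs, math.e), efficiency(probs, 10))
```

All three values agree up to floating-point rounding, and a uniform distribution gives an efficiency of exactly one.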

Entropy for continuous random variables

Differential entropy

The Shannon entropy is restricted to random variables taking discrete values. The corresponding formula for a continuous random variable with probability density function $f(x)$ with finite or infinite support $\mathbb{X}$ on the real line is defined by analogy, using the above form of the entropy as an expectation:

$$h[f] = \mathbb{E}[-\log f(X)] = -\int_{\mathbb{X}} f(x) \log f(x)\, dx.$$

This is the differential entropy (or continuous entropy). A precursor of the continuous entropy h[f] is the expression for the functional Η in the H-theorem of Boltzmann.

Although the analogy between both functions is suggestive, the following question must be set: is the differential entropy a valid extension of the Shannon discrete entropy? Differential entropy lacks a number of properties that the Shannon discrete entropy has – it can even be negative – and corrections have been suggested, notably limiting density of discrete points.

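To answer this question, a connection must be established between the two functions in order to obtain a generally finite measure as the bin size goes to zero. In the discrete case, the bin size is the (implicit) width of each of the $n$ (finite or infinite) bins whose probabilities are denoted by $p_n$. As the continuous domain is generalized, the width must be made explicit.

To do this, start with a continuous function $f$ discretized into bins of size $\Delta$. By the mean-value theorem there exists a value $x_i$ in each bin such that

$$f(x_i)\, \Delta = \int_{i\Delta}^{(i+1)\Delta} f(x)\, dx.$$

We will denote

$$\mathrm{H}^{\Delta} := -\sum_{i=-\infty}^{\infty} f(x_i)\, \Delta \log\left(f(x_i)\, \Delta\right),$$

and expanding the logarithm gives

$$\mathrm{H}^{\Delta} = -\sum_{i=-\infty}^{\infty} f(x_i)\, \Delta \log(f(x_i)) - \sum_{i=-\infty}^{\infty} f(x_i)\, \Delta \log(\Delta).$$

As $\Delta \to 0$, we have

$$\sum_{i=-\infty}^{\infty} f(x_i)\, \Delta \to \int_{-\infty}^{\infty} f(x)\, dx = 1, \qquad \sum_{i=-\infty}^{\infty} f(x_i)\, \Delta \log(f(x_i)) \to \int_{-\infty}^{\infty} f(x) \log f(x)\, dx.$$

Note that $\log(\Delta) \to -\infty$ as $\Delta \to 0$, which requires a special definition of the differential or continuous entropy:

$$h[f] = \lim_{\Delta \to 0} \left(\mathrm{H}^{\Delta} + \log \Delta\right) = -\int_{-\infty}^{\infty} f(x) \log f(x)\, dx,$$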

which is, as said before, referred to as the differential entropy. This means that the differential entropy is not a limit of the Shannon entropy for n → ∞. Rather, it differs from the limit of the Shannon entropy by an infinite offset (see also the article on information dimension).
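The infinite offset can be seen numerically by discretizing a density with ever smaller bins. The sketch below does this for a standard normal density, whose differential entropy has the known closed form $\tfrac{1}{2}\ln(2\pi e)$ nats. The function names, the truncation of the real line to $[-10, 10]$, and the use of bin midpoints in place of the mean-value points $x_i$ are simplifying assumptions.

```python
import math

def gaussian_pdf(x):
    return math.exp(-0.5 * x * x) / math.sqrt(2 * math.pi)

def discretized_entropy(delta, lo=-10.0, hi=10.0):
    """H^Delta in nats, with bin probabilities approximated by f(midpoint) * delta."""
    n_bins = round((hi - lo) / delta)
    h = 0.0
    for i in range(n_bins):
        p = gaussian_pdf(lo + (i + 0.5) * delta) * delta
        h -= p * math.log(p)
    return h

exact = 0.5 * math.log(2 * math.pi * math.e)  # differential entropy of N(0, 1)
for delta in (1.0, 0.1, 0.01):
    h = discretized_entropy(delta)
    print(f"delta={delta}: H^delta={h:.4f}, "
          f"H^delta + log(delta)={h + math.log(delta):.4f}")
print(f"closed form: {exact:.4f}")
```

The discrete entropy grows without bound as the bins shrink, while the corrected quantity settles at the differential entropy.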

Limiting density of discrete points

It turns out as a result that, unlike the Shannon entropy, the differential entropy is not in general a good measure of uncertainty or information. For example, the differential entropy can be negative; also it is not invariant under continuous co-ordinate transformations. This problem may be illustrated by a change of units when $x$ is a dimensioned variable. $f(x)$ will then have the units of $1/x$. The argument of the logarithm must be dimensionless, otherwise it is improper, so that the differential entropy as given above will be improper. If $\Delta$ is some "standard" value of $x$ (i.e. "bin size") and therefore has the same units, then a modified differential entropy may be written in proper form as:

$$\mathrm{H} = -\int_{-\infty}^{\infty} f(x) \log\left(f(x)\, \Delta\right) dx,$$

and the result will be the same for any choice of units for $x$. In fact, the limit of discrete entropy as $N \to \infty$ would also include a term of $\log(N)$, which would in general be infinite. This is expected: continuous variables would typically have infinite entropy when discretized. The limiting density of discrete points is really a measure of how much easier a distribution is to describe than a distribution that is uniform over its quantization scheme.

Relative entropy

Another useful measure of entropy that works equally well in the discrete and the continuous case is the relative entropy of a distribution. It is defined as the Kullback–Leibler divergence from the distribution to a reference measure $m$ as follows. Assume that a probability distribution $p$ is absolutely continuous with respect to a measure $m$, i.e. is of the form $p(dx) = f(x)\, m(dx)$ for some non-negative $m$-integrable function $f$ with $m$-integral 1, then the relative entropy can be defined as

$$D_{\mathrm{KL}}(p \| m) = \int \log(f(x))\, p(dx) = \int f(x) \log(f(x))\, m(dx).$$

In this form the relative entropy generalizes (up to change in sign) both the discrete entropy, where the measure m is the counting measure, and the differential entropy, where the measure m is the Lebesgue measure. If the measure m is itself a probability distribution, the relative entropy is non-negative, and zero if p = m as measures. It is defined for any measure space, hence coordinate independent and invariant under co-ordinate reparameterizations if one properly takes into account the transformation of the measure m. The relative entropy, and (implicitly) entropy and differential entropy, do depend on the "reference" measure m.
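For a finite outcome set these statements reduce to short sums. In the sketch below (function names and distributions are illustrative), the reference measure $m$ is passed as a list of weights; it must be positive wherever $p$ is, which is the discrete form of absolute continuity.

```python
import math

def kl_divergence(p, m):
    """D_KL(p || m) in nats for weights p and m on the same finite outcome set."""
    return sum(pi * math.log(pi / mi) for pi, mi in zip(p, m) if pi > 0)

def entropy_nats(p):
    return -sum(pi * math.log(pi) for pi in p if pi > 0)

p = [0.70, 0.26, 0.02, 0.02]
counting = [1.0] * 4   # counting measure: weight 1 on every outcome
uniform = [0.25] * 4   # a probability distribution used as reference

print(kl_divergence(p, counting), -entropy_nats(p))              # equal: entropy up to sign
print(kl_divergence(p, uniform), math.log(4) - entropy_nats(p))  # non-negative
```

With the counting measure the result is the negative of the Shannon entropy, and with a probability distribution as reference it is non-negative and vanishes when the two coincide.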

Use in combinatorics

Entropy has become a useful quantity in combinatorics.

Loomis–Whitney inequality

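A simple example of this is an alternative proof of the Loomis–Whitney inequality: for every finite subset $A \subseteq \mathbb{Z}^d$, we have

$$|A|^{d-1} \leq \prod_{i=1}^{d} |P_i(A)|,$$

where $P_i$ is the orthogonal projection in the $i$th coordinate:

$$P_i(A) = \{(x_1, \ldots, x_{i-1}, x_{i+1}, \ldots, x_d) : (x_1, \ldots, x_d) \in A\}.$$

The proof follows as a simple corollary of Shearer's inequality: if $X_1, \ldots, X_d$ are random variables and $S_1, \ldots, S_n$ are subsets of $\{1, \ldots, d\}$ such that every integer between 1 and $d$ lies in exactly $r$ of these subsets, then

$$\mathrm{H}[(X_1, \ldots, X_d)] \leq \frac{1}{r} \sum_{i=1}^{n} \mathrm{H}[(X_j)_{j \in S_i}],$$

where $(X_j)_{j \in S_i}$ is the Cartesian product of random variables $X_j$ with indexes $j$ in $S_i$ (so the dimension of this vector is equal to the size of $S_i$).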

We sketch how Loomis–Whitney follows from this. Let $X$ be a uniformly distributed random variable with values in $A$, so that each point in $A$ occurs with equal probability. Then (by the further properties of entropy mentioned above) $\mathrm{H}(X) = \log|A|$, where $|A|$ denotes the cardinality of $A$. Let $S_i = \{1, 2, \ldots, i-1, i+1, \ldots, d\}$, so that $n = d$ and every index lies in exactly $r = d - 1$ of these subsets. The range of $(X_j)_{j \in S_i}$ is contained in $P_i(A)$ and hence $\mathrm{H}[(X_j)_{j \in S_i}] \leq \log|P_i(A)|$. Now use this to bound the right side of Shearer's inequality, giving $(d-1)\log|A| \leq \sum_{i=1}^{d} \log|P_i(A)|$, and exponentiate both sides of the resulting inequality.
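
The inequality is easy to test on small point sets. The sketch below assumes points are given as equal-length integer tuples and that the set is non-empty; `projection` and `loomis_whitney_holds` are hypothetical helper names introduced only for this illustration.

```python
import itertools
import random
from math import prod  # available in Python 3.8+

def projection(points, i):
    """Drop the i-th coordinate (0-based) from every point and deduplicate."""
    return {pt[:i] + pt[i + 1:] for pt in points}

def loomis_whitney_holds(points):
    """Check |A|^(d-1) <= product over i of |P_i(A)| for a non-empty finite set A."""
    points = set(points)
    d = len(next(iter(points)))
    lhs = len(points) ** (d - 1)
    rhs = prod(len(projection(points, i)) for i in range(d))
    return lhs <= rhs

# A full 3 x 3 x 3 cube attains equality: 27**2 == 9 * 9 * 9 == 729.
cube = set(itertools.product(range(3), repeat=3))
print(loomis_whitney_holds(cube))

# A random 10-point subset of the cube (seeded only for reproducibility).
random.seed(0)
sample = set(random.sample(sorted(cube), 10))
print(loomis_whitney_holds(sample))
```

A numerical check of this kind is of course not a proof; it only illustrates what the inequality asserts about a set and its coordinate projections.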

Approximation to binomial coefficient

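For integers 0 < k < n let q = k/n. Then

$$\frac{2^{n\mathrm{H}(q)}}{n+1} \leq \binom{n}{k} \leq 2^{n\mathrm{H}(q)},$$

where

$$\mathrm{H}(q) = -q\log_2(q) - (1-q)\log_2(1-q).$$

Proof (sketch)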
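
One standard argument runs as follows. It is offered as a sketch, under the assumption that the claim is the two-sided bound $2^{n\mathrm{H}(q)}/(n+1) \leq \binom{n}{k} \leq 2^{n\mathrm{H}(q)}$ with $\mathrm{H}$ the binary entropy in bits and $q = k/n$.

```latex
\begin{align*}
1 &= \sum_{i=0}^{n} \binom{n}{i} q^{i}(1-q)^{n-i}
  && \text{(binomial theorem applied to } (q + (1-q))^{n}\text{)} \\
\binom{n}{k} q^{k}(1-q)^{n-k} &= \binom{n}{k}\, 2^{-n\mathrm{H}(q)}
  && \text{(since } k = qn \text{, so } q^{qn}(1-q)^{(1-q)n} = 2^{-n\mathrm{H}(q)}\text{)} \\
\binom{n}{k}\, 2^{-n\mathrm{H}(q)} &\leq 1
  && \text{(a single term of a sum of non-negative terms)} \\
\binom{n}{k}\, 2^{-n\mathrm{H}(q)} &\geq \frac{1}{n+1}
  && \text{(the } i = k \text{ term is the largest of the } n+1 \text{ terms)}
\end{align*}
```

The last step uses the fact that the binomial distribution with parameters n and q = k/n has its mode at k. Multiplying the final two lines by $2^{n\mathrm{H}(q)}$ gives the upper and lower bounds respectively.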

A nice interpretation of this is that the number of binary strings of length n with exactly k ones is approximately $2^{n\mathrm{H}(k/n)}$.
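
The quality of this approximation can be inspected numerically. The sketch below works with base-2 logarithms to avoid overflow and assumes the bounds $2^{n\mathrm{H}(q)}/(n+1) \leq \binom{n}{k} \leq 2^{n\mathrm{H}(q)}$ with q = k/n; `binomial_log2_bounds` is an illustrative helper name.

```python
import math

def binary_entropy(q):
    """Binary entropy in bits; defined as 0 at the endpoints q = 0 and q = 1."""
    if q in (0.0, 1.0):
        return 0.0
    return -q * math.log2(q) - (1.0 - q) * math.log2(1.0 - q)

def binomial_log2_bounds(n, k):
    """Return (lower, exact, upper) for log2 of the binomial coefficient C(n, k)."""
    upper = n * binary_entropy(k / n)       # log2 of 2^(n H(q))
    lower = upper - math.log2(n + 1)        # log2 of 2^(n H(q)) / (n + 1)
    exact = math.log2(math.comb(n, k))      # math.comb requires Python 3.8+
    return lower, exact, upper

for n, k in [(10, 3), (100, 30), (1000, 500)]:
    lower, exact, upper = binomial_log2_bounds(n, k)
    print(n, k, lower, exact, upper)
```

The gap between the two bounds is log2(n + 1) bits, which is small compared with n H(q) when n is large.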

Use in machine learning

Machine learning techniques draw largely on statistics and information theory. In general, entropy is a measure of uncertainty, and many machine learning objectives can be framed as minimizing uncertainty.

Decision tree learning algorithms use relative entropy to determine the decision rules that govern the data at each node. The information gain in decision trees $IG(Y, X)$, which is equal to the difference between the entropy of $Y$ and the conditional entropy of $Y$ given $X$, quantifies the expected information, or the reduction in entropy, from additionally knowing the value of an attribute $X$. The information gain is used to identify which attributes of the dataset provide the most information and should be used to split the nodes of the tree optimally.
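
The information gain described above can be computed directly from empirical frequencies. The following is a minimal sketch, assuming a categorical attribute and categorical labels stored as parallel lists; the function names and toy data are illustrative and do not correspond to any particular library.

```python
import math
from collections import Counter, defaultdict

def entropy(labels):
    """Empirical Shannon entropy (bits) of a list of labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def information_gain(attribute_values, labels):
    """IG(Y, X) = H(Y) - H(Y | X), estimated from paired samples of X and Y."""
    groups = defaultdict(list)
    for x, y in zip(attribute_values, labels):
        groups[x].append(y)
    n = len(labels)
    # H(Y | X) is the entropy within each attribute value, weighted by its frequency.
    conditional = sum(len(g) / n * entropy(g) for g in groups.values())
    return entropy(labels) - conditional

labels = ["yes", "yes", "no", "no"]
perfect_split = ["a", "a", "b", "b"]   # attribute determines the label
useless_split = ["a", "b", "a", "b"]   # attribute is independent of the label

print(information_gain(perfect_split, labels))  # 1.0 bit
print(information_gain(useless_split, labels))  # 0.0 bits
```

A tree learner following this criterion would prefer the first attribute, since it removes all of the one bit of uncertainty in the label.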

Bayesian inference models often apply the principle of maximum entropy to obtain prior probability distributions. The idea is that the distribution that best represents the current state of knowledge of a system is the one with the largest entropy among all distributions consistent with that knowledge, and is therefore suitable to be the prior.

Classification in machine learning performed by logistic regression or artificial neural networks often employs a standard loss function, called cross-entropy loss, and training minimizes the average cross-entropy between the ground-truth and predicted distributions. In general, cross-entropy is a measure of the difference between two probability distributions, similar to the KL divergence (also known as relative entropy).
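
The relationship between cross-entropy and the KL divergence can be made explicit: for distributions p and q on the same finite set, the cross-entropy equals the entropy of p plus the KL divergence from q to p. The sketch below assumes natural logarithms and that q is strictly positive wherever p is; the function names and example vectors are illustrative.

```python
import math

def entropy(p):
    """Shannon entropy in nats."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)

def cross_entropy(p, q):
    """Cross-entropy H(p, q) = -sum of p*log(q); assumes q[i] > 0 wherever p[i] > 0."""
    return -sum(pi * math.log(qi) for pi, qi in zip(p, q) if pi > 0)

def kl_divergence(p, q):
    """KL divergence D(p || q) = sum of p*log(p/q); same assumption on q."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# One-hot ground truth against a predicted class distribution.
truth = [1.0, 0.0, 0.0]
predicted = [0.7, 0.2, 0.1]
print(cross_entropy(truth, predicted), -math.log(0.7))  # the two values coincide

# For a general p, cross-entropy decomposes as entropy plus KL divergence.
p = [0.5, 0.3, 0.2]
print(cross_entropy(p, predicted), entropy(p) + kl_divergence(p, predicted))
```

Because the entropy of the ground-truth distribution does not depend on the model, minimizing the cross-entropy over predictions is equivalent to minimizing the KL divergence.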

$$p : \mathcal{X} \to [0,1]$$

$$\mathrm{H}(X) := -\sum_{x \in \mathcal{X}} p(x) \log p(x) = \mathbb{E}[-\log p(X)],$$

$$p(x) := \mathbb{P}[X = x]$$

$$\mathrm{H}(X) = \mathbb{E}[\operatorname{I}(X)] = \mathbb{E}[-\log p(X)].$$

$$p_{X,Y}(x,y) := \mathbb{P}[X = x, Y = y]$$

$$p_{Y}(y) = \mathbb{P}[Y = y]$$

$$\mathrm{H}(X) = \mathbb{E}[-\log_{b} p(X)] \leq -\log_{b}\left(\mathbb{E}[p(X)]\right) \leq \log_{b} n$$

$$\mathrm{H}(X) = \mathbb{E}[-\log f(X)] = -\int_{\mathbb{X}} f(x) \log f(x)\,\mathrm{d}x.$$

$$h[f] = \lim_{\Delta \to 0}\left(\mathrm{H}^{\Delta} + \log \Delta\right) = -\int_{-\infty}^{\infty} f(x) \log f(x)\,dx,$$

$$\mathrm{H}[(X_{1}, \ldots, X_{d})] \leq \frac{1}{r} \sum_{i=1}^{n} \mathrm{H}[(X_{j})_{j \in S_{i}}]$$

$$\mathrm{H}[(X_{j})_{j \in S_{i}}] \leq \log |P_{i}(A)|$$