In mathematics, factorization (or factorisation, see English spelling differences) or factoring consists of writing a number or another mathematical object as a product of several factors, usually smaller or simpler objects of the same kind. For example, 3 × 5 is an integer factorization of 15, and (x – 2)(x + 2) is a polynomial factorization of x2 – 4.

Factorization is not usually considered meaningful within number systems possessing division, such as the real or complex numbers, since any can be trivially written as whenever is not zero. However, a meaningful factorization for a rational number or a rational function can be obtained by writing it in lowest terms and separately factoring its numerator and denominator.

Factorization was first considered by ancient Greek mathematicians in the case of integers. They proved the fundamental theorem of arithmetic, which asserts that every positive integer may be factored into a product of prime numbers, which cannot be further factored into integers greater than 1. Moreover, this factorization is unique up to the order of the factors. Although integer factorization is a sort of inverse to multiplication, it is much more difficult algorithmically, a fact which is exploited in the RSA cryptosystem to implement public-key cryptography.

Polynomial factorization has also been studied for centuries. In elementary algebra, factoring a polynomial reduces the problem of finding its roots to finding the roots of the factors. Polynomials with coefficients in the integers or in a field possess the unique factorization property, a version of the fundamental theorem of arithmetic with prime numbers replaced by irreducible polynomials. In particular, a univariate polynomial with complex coefficients admits a unique (up to ordering) factorization into linear polynomials: this is a version of the fundamental theorem of algebra. In this case, the factorization can be done with root-finding algorithms. The case of polynomials with integer coefficients is fundamental for computer algebra. There are efficient computer algorithms for computing (complete) factorizations within the ring of polynomials with rational number coefficients (see factorization of polynomials).

A commutative ring possessing the unique factorization property is called a unique factorization domain. There are number systems, such as certain rings of algebraic integers, which are not unique factorization domains. However, rings of algebraic integers satisfy the weaker property of Dedekind domains: ideals factor uniquely into prime ideals.

Factorization may also refer to more general decompositions of a mathematical object into the product of smaller or simpler objects. For example, every function may be factored into the composition of a surjective function with an injective function. Matrices possess many kinds of matrix factorizations. For example, every matrix has a unique LUP factorization as a product of a lower triangular matrix L with all diagonal entries equal to one, an upper triangular matrix U, and a permutation matrix P; this is a matrix formulation of Gaussian elimination.

Integers

By the fundamental theorem of arithmetic, every integer greater than 1 has a unique (up to the order of the factors) factorization into prime numbers, which are those integers which cannot be further factorized into the product of integers greater than one.

For computing the factorization of an integer n, one needs an algorithm for finding a divisor q of n or deciding that n is prime. When such a divisor is found, the repeated application of this algorithm to the factors q and n / q gives eventually the complete factorization of n.

For finding a divisor q of n, if any, it suffices to test all values of q such that 1 < q and q2 ≤ n. In fact, if r is a divisor of n such that r2 > n, then q = n / r is a divisor of n such that q2 ≤ n.

If one tests the values of q in increasing order, the first divisor that is found is necessarily a prime number, and the cofactor r = n / q cannot have any divisor smaller than q. For getting the complete factorization, it suffices thus to continue the algorithm by searching a divisor of r that is not smaller than q and not greater than √r.

There is no need to test all values of q for applying the method. In principle, it suffices to test only prime divisors. This needs to have a table of prime numbers that may be generated for example with the sieve of Eratosthenes. As the method of factorization does essentially the same work as the sieve of Eratosthenes, it is generally more efficient to test for a divisor only those numbers for which it is not immediately clear whether they are prime or not. Typically, one may proceed by testing 2, 3, 5, and the numbers > 5, whose last digit is 1, 3, 7, 9 and the sum of digits is not a multiple of 3.

This method works well for factoring small integers, but is inefficient for larger integers. For example, Pierre de Fermat was unable to discover that the 6th Fermat number

is not a prime number. In fact, applying the above method would require more than 10000 divisions, for a number that has 10 decimal digits.

There are more efficient factoring algorithms. However they remain relatively inefficient, as, with the present state of the art, one cannot factorize, even with the more powerful computers, a number of 500 decimal digits that is the product of two randomly chosen prime numbers. This ensures the security of the RSA cryptosystem, which is widely used for secure internet communication.

Example

For factoring n = 1386 into primes:

- Start with division by 2: the number is even, and n = 2 · 693. Continue with 693, and 2 as a first divisor candidate.

- 693 is odd (2 is not a divisor), but is a multiple of 3: one has 693 = 3 · 231 and n = 2 · 3 · 231. Continue with 231, and 3 as a first divisor candidate.

- 231 is also a multiple of 3: one has 231 = 3 · 77, and thus n = 2 · 32 · 77. Continue with 77, and 3 as a first divisor candidate.

- 77 is not a multiple of 3, since the sum of its digits is 14, not a multiple of 3. It is also not a multiple of 5 because its last digit is 7. The next odd divisor to be tested is 7. One has 77 = 7 · 11, and thus n = 2 · 32 · 7 · 11. This shows that 7 is prime (easy to test directly). Continue with 11, and 7 as a first divisor candidate.

- As 72 > 11, one has finished. Thus 11 is prime, and the prime factorization is

- 1386 = 2 · 32 · 7 · 11.

Expressions

Manipulating expressions is the basis of algebra. Factorization is one of the most important methods for expression manipulation for several reasons. If one can put an equation in a factored form E⋅F = 0, then the problem of solving the equation splits into two independent (and generally easier) problems E = 0 and F = 0. When an expression can be factored, the factors are often much simpler, and may thus offer some insight on the problem. For example,

having 16 multiplications, 4 subtractions and 3 additions, may be factored into the much simpler expression

with only two multiplications and three subtractions. Moreover, the factored form immediately gives roots x = a,b,c as the roots of the polynomial.

On the other hand, factorization is not always possible, and when it is possible, the factors are not always simpler. For example, can be factored into two irreducible factors and .

Various methods have been developed for finding factorizations; some are described below.

Solving algebraic equations may be viewed as a problem of polynomial factorization. In fact, the fundamental theorem of algebra can be stated as follows: every polynomial in x of degree n with complex coefficients may be factorized into n linear factors for i = 1, ..., n, where the ais are the roots of the polynomial. Even though the structure of the factorization is known in these cases, the ais generally cannot be computed in terms of radicals (nth roots), by the Abel–Ruffini theorem. In most cases, the best that can be done is computing approximate values of the roots with a root-finding algorithm.

History of factorization of expressions

The systematic use of algebraic manipulations for simplifying expressions (more specifically equations)) may be dated to 9th century, with al-Khwarizmi's book The Compendious Book on Calculation by Completion and Balancing, which is titled with two such types of manipulation.

However, even for solving quadratic equations, the factoring method was not used before Harriot's work published in 1631, ten years after his death. In his book Artis Analyticae Praxis ad Aequationes Algebraicas Resolvendas, Harriot drew tables for addition, subtraction, multiplication and division of monomials, binomials, and trinomials. Then, in a second section, he set up the equation aa − ba + ca = + bc, and showed that this matches the form of multiplication he had previously provided, giving the factorization (a − b)(a + c).

General methods

The following methods apply to any expression that is a sum, or that may be transformed into a sum. Therefore, they are most often applied to polynomials, though they also may be applied when the terms of the sum are not monomials, that is, the terms of the sum are a product of variables and constants.

Common factor

It may occur that all terms of a sum are products and that some factors are common to all terms. In this case, the distributive law allows factoring out this common factor. If there are several such common factors, it is preferable to divide out the greatest such common factor. Also, if there are integer coefficients, one may factor out the greatest common divisor of these coefficients.

For example,

since 2 is the greatest common divisor of 6, 8, and 10, and divides all terms.

Grouping

Grouping terms may allow using other methods for getting a factorization.

For example, to factor

one may remark that the first two terms have a common factor x, and the last two terms have the common factor y. Thus

Then a simple inspection shows the common factor x + 5, leading to the factorization

In general, this works for sums of 4 terms that have been obtained as the product of two binomials. Although not frequently, this may work also for more complicated examples.

Adding and subtracting terms

Sometimes, some term grouping reveals part of a recognizable pattern. It is then useful to add and subtract terms to complete the pattern.

A typical use of this is the completing the square method for getting the quadratic formula.

Another example is the factorization of If one introduces the non-real square root of –1, commonly denoted i, then one has a difference of squares

However, one may also want a factorization with real number coefficients. By adding and subtracting and grouping three terms together, one may recognize the square of a binomial:

Subtracting and adding also yields the factorization:

These factorizations work not only over the complex numbers, but also over any field, where either –1, 2 or –2 is a square. In a finite field, the product of two non-squares is a square; this implies that the polynomial which is irreducible over the integers, is reducible modulo every prime number. For example,

- since

- since

- since

Recognizable patterns

Many identities provide an equality between a sum and a product. The above methods may be used for letting the sum side of some identity appear in an expression, which may therefore be replaced by a product.

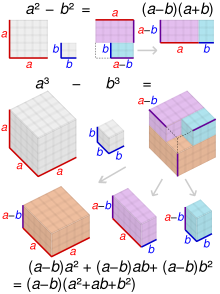

Below are identities whose left-hand sides are commonly used as patterns (this means that the variables E and F that appear in these identities may represent any subexpression of the expression that has to be factorized).

- For example,

- Sum/difference of two cubes

- Difference of two fourth powers

- Sum/difference of two nth powers

- In the following identities, the factors may often be further factorized:

- Difference, even exponent

- Difference, even or odd exponent

- This is an example showing that the factors may be much larger than the sum that is factorized.

- Sum, odd exponent

- (obtained by changing F by –F in the preceding formula)

- Sum, even exponent

- If the exponent is a power of two then the expression cannot, in general, be factorized without introducing complex numbers (if E and F contain complex numbers, this may be not the case). If n has an odd divisor, that is if n = pq with p odd, one may use the preceding formula (in "Sum, odd exponent") applied to

- Trinomials and cubic formulas

- Binomial expansions

- The binomial theorem supplies patterns that can easily be recognized from the integers that appear in them

- In low degree:

- More generally, the coefficients of the expanded forms of and are the binomial coefficients, that appear in the nth row of Pascal's triangle.

Roots of unity

The nth roots of unity are the complex numbers each of which is a root of the polynomial They are thus the numbers

for

It follows that for any two expressions E and F, one has:

If E and F are real expressions, and one wants real factors, one has to replace every pair of complex conjugate factors by its product. As the complex conjugate of is and

one has the following real factorizations (one passes from one to the other by changing k into n – k or n + 1 – k, and applying the usual trigonometric formulas:

The cosines that appear in these factorizations are algebraic numbers, and may be expressed in terms of radicals (this is possible because their Galois group is cyclic); however, these radical expressions are too complicated to be used, except for low values of n. For example,

Often one wants a factorization with rational coefficients. Such a factorization involves cyclotomic polynomials. To express rational factorizations of sums and differences or powers, we need a notation for the homogenization of a polynomial: if its homogenization is the bivariate polynomial Then, one has

where the products are taken over all divisors of n, or all divisors of 2n that do not divide n, and is the nth cyclotomic polynomial.

For example,

since the divisors of 6 are 1, 2, 3, 6, and the divisors of 12 that do not divide 6 are 4 and 12.

Polynomials

For polynomials, factorization is strongly related with the problem of solving algebraic equations. An algebraic equation has the form

where P(x) is a polynomial in x with A solution of this equation (also called a root of the polynomial) is a value r of x such that

If is a factorization of P(x) = 0 as a product of two polynomials, then the roots of P(x) are the union of the roots of Q(x) and the roots of R(x). Thus solving P(x) = 0 is reduced to the simpler problems of solving Q(x) = 0 and R(x) = 0.

Conversely, the factor theorem asserts that, if r is a root of P(x) = 0, then P(x) may be factored as

where Q(x) is the quotient of Euclidean division of P(x) = 0 by the linear (degree one) factor x – r.

If the coefficients of P(x) are real or complex numbers, the fundamental theorem of algebra asserts that P(x) has a real or complex root. Using the factor theorem recursively, it results that

where are the real or complex roots of P, with some of them possibly repeated. This complete factorization is unique up to the order of the factors.

If the coefficients of P(x) are real, one generally wants a factorization where factors have real coefficients. In this case, the complete factorization may have some quadratic (degree two) factors. This factorization may easily be deduced from the above complete factorization. In fact, if r = a + ib is a non-real root of P(x), then its complex conjugate s = a - ib is also a root of P(x). So, the product

is a factor of P(x) with real coefficients. Repeating this for all non-real factors gives a factorization with linear or quadratic real factors.

For computing these real or complex factorizations, one needs the roots of the polynomial, which may not be computed exactly, and only approximated using root-finding algorithms.

In practice, most algebraic equations of interest have integer or rational coefficients, and one may want a factorization with factors of the same kind. The fundamental theorem of arithmetic may be generalized to this case, stating that polynomials with integer or rational coefficients have the unique factorization property. More precisely, every polynomial with rational coefficients may be factorized in a product

where q is a rational number and are non-constant polynomials with integer coefficients that are irreducible and primitive; this means that none of the may be written as the product two polynomials (with integer coefficients) that are neither 1 nor –1 (integers are considered as polynomials of degree zero). Moreover, this factorization is unique up to the order of the factors and the signs of the factors.

There are efficient algorithms for computing this factorization, which are implemented in most computer algebra systems. See Factorization of polynomials. Unfortunately, these algorithms are too complicated to use for paper-and-pencil computations. Besides the heuristics above, only a few methods are suitable for hand computations, which generally work only for polynomials of low degree, with few nonzero coefficients. The main such methods are described in next subsections.

Primitive-part & content factorization

Every polynomial with rational coefficients, may be factorized, in a unique way, as the product of a rational number and a polynomial with integer coefficients, which is primitive (that is, the greatest common divisor of the coefficients is 1), and has a positive leading coefficient (coefficient of the term of the highest degree). For example:

In this factorization, the rational number is called the content, and the primitive polynomial is the primitive part. The computation of this factorization may be done as follows: firstly, reduce all coefficients to a common denominator, for getting the quotient by an integer q of a polynomial with integer coefficients. Then one divides out the greater common divisor p of the coefficients of this polynomial for getting the primitive part, the content being Finally, if needed, one changes the signs of p and all coefficients of the primitive part.

This factorization may produce a result that is larger than the original polynomial (typically when there are many coprime denominators), but, even when this is the case, the primitive part is generally easier to manipulate for further factorization.

Using the factor theorem

The factor theorem states that, if r is a root of a polynomial

meaning P(r) = 0, then there is a factorization

where

with . Then polynomial long division or synthetic division give:

This may be useful when one knows or can guess a root of the polynomial.

For example, for one may easily see that the sum of its coefficients is 0, so r = 1 is a root. As r + 0 = 1, and one has

Rational roots

For polynomials with rational number coefficients, one may search for roots which are rational numbers. Primitive part-content factorization (see above) reduces the problem of searching for rational roots to the case of polynomials with integer coefficients having no non-trivial common divisor.

If is a rational root of such a polynomial

the factor theorem shows that one has a factorization

where both factors have integer coefficients (the fact that Q has integer coefficients results from the above formula for the quotient of P(x) by ).

Comparing the coefficients of degree n and the constant coefficients in the above equality shows that, if is a rational root in reduced form, then q is a divisor of and p is a divisor of Therefore, there is a finite number of possibilities for p and q, which can be systematically examined.

For example, if the polynomial

has a rational root with q > 0, then p must divide 6; that is and q must divide 2, that is Moreover, if x < 0, all terms of the polynomial are negative, and, therefore, a root cannot be negative. That is, one must have

A direct computation shows that only is a root, so there can be no other rational root. Applying the factor theorem leads finally to the factorization

Quadratic ac method

The above method may be adapted for quadratic polynomials, leading to the ac method of factorization.

Consider the quadratic polynomial

with integer coefficients. If it has a rational root, its denominator must divide a evenly and it may be written as a possibly reducible fraction By Vieta's formulas, the other root is

with Thus the second root is also rational, and Vieta's second formula gives

that is

Checking all pairs of integers whose product is ac gives the rational roots, if any.

In summary, if has rational roots there are integers r and s such and (a finite number of cases to test), and the roots are and In other words, one has the factorization

For example, let consider the quadratic polynomial

Inspection of the factors of ac = 36 leads to 4 + 9 = 13 = b, giving the two roots

and the factorization

Using formulas for polynomial roots

Any univariate quadratic polynomial can be factored using the quadratic formula:

where and are the two roots of the polynomial.

If a, b, c are all real, the factors are real if and only if the discriminant is non-negative. Otherwise, the quadratic polynomial cannot be factorized into non-constant real factors.

The quadratic formula is valid when the coefficients belong to any field of characteristic different from two, and, in particular, for coefficients in a finite field with an odd number of elements.

There are also formulas for roots of cubic and quartic polynomials, which are, in general, too complicated for practical use. The Abel–Ruffini theorem shows that there are no general root formulas in terms of radicals for polynomials of degree five or higher.

Using relations between roots

It may occur that one knows some relationship between the roots of a polynomial and its coefficients. Using this knowledge may help factoring the polynomial and finding its roots. Galois theory is based on a systematic study of the relations between roots and coefficients, that include Vieta's formulas.

Here, we consider the simpler case where two roots and of a polynomial satisfy the relation

where Q is a polynomial.

This implies that is a common root of and It is therefore a root of the greatest common divisor of these two polynomials. It follows that this greatest common divisor is a non constant factor of Euclidean algorithm for polynomials allows computing this greatest common factor.

For example, if one know or guess that: has two roots that sum to zero, one may apply Euclidean algorithm to and The first division step consists in adding to giving the remainder of

Then, dividing by gives zero as a new remainder, and x – 5 as a quotient, leading to the complete factorization

Unique factorization domains

The integers and the polynomials over a field share the property of unique factorization, that is, every nonzero element may be factored into a product of an invertible element (a unit, ±1 in the case of integers) and a product of irreducible elements (prime numbers, in the case of integers), and this factorization is unique up to rearranging the factors and shifting units among the factors. Integral domains which share this property are called unique factorization domains (UFD).

Greatest common divisors exist in UFDs, and conversely, every integral domain in which greatest common divisors exist is an UFD. Every principal ideal domain is an UFD.

A Euclidean domain is an integral domain on which is defined a Euclidean division similar to that of integers. Every Euclidean domain is a principal ideal domain, and thus a UFD.

In a Euclidean domain, Euclidean division allows defining a Euclidean algorithm for computing greatest common divisors. However this does not imply the existence of a factorization algorithm. There is an explicit example of a field F such that there cannot exist any factorization algorithm in the Euclidean domain F[x] of the univariate polynomials over F.

Ideals

In algebraic number theory, the study of Diophantine equations led mathematicians, during 19th century, to introduce generalizations of the integers called algebraic integers. The first ring of algebraic integers that have been considered were Gaussian integers and Eisenstein integers, which share with usual integers the property of being principal ideal domains, and have thus the unique factorization property.

Unfortunately, it soon appeared that most rings of algebraic integers are not principal and do not have unique factorization. The simplest example is in which

and all these factors are irreducible.

This lack of unique factorization is a major difficulty for solving Diophantine equations. For example, many wrong proofs of Fermat's Last Theorem (probably including Fermat's "truly marvelous proof of this, which this margin is too narrow to contain") were based on the implicit supposition of unique factorization.

This difficulty was resolved by Dedekind, who proved that the rings of algebraic integers have unique factorization of ideals: in these rings, every ideal is a product of prime ideals, and this factorization is unique up the order of the factors. The integral domains that have this unique factorization property are now called Dedekind domains. They have many nice properties that make them fundamental in algebraic number theory.

Matrices

Matrix rings are non-commutative and have no unique factorization: there are, in general, many ways of writing a matrix as a product of matrices. Thus, the factorization problem consists of finding factors of specified types. For example, the LU decomposition gives a matrix as the product of a lower triangular matrix by an upper triangular matrix. As this is not always possible, one generally considers the "LUP decomposition" having a permutation matrix as its third factor.

See Matrix decomposition for the most common types of matrix factorizations.

A logical matrix represents a binary relation, and matrix multiplication corresponds to composition of relations. Decomposition of a relation through factorization serves to profile the nature of the relation, such as a difunctional relation.

![\mathbb {Z} [{\sqrt {-5}}],](https://wikimedia.org/api/rest_v1/media/math/render/svg/643ef2c1e3b8b8e684a0ddf73d649cb0202cc0c1)