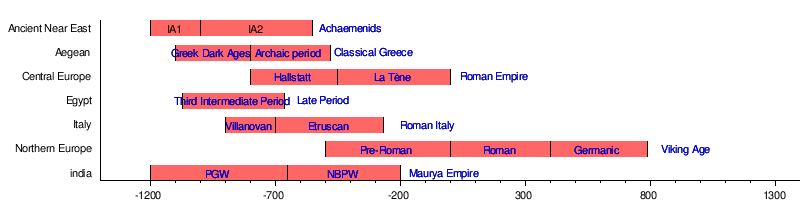

Although meteoric iron has been used for millennia in many regions, the beginning of the Iron Age is defined locally around the world by archaeological convention when the production of smelted iron (especially steel tools and weapons) replaces their bronze equivalents in common use.

In Anatolia and the Caucasus, or Southeast Europe, the Iron Age began during the late 2nd millennium BC (c. 1300 BC). In the Ancient Near East, this transition occurred simultaneously with the Late Bronze Age collapse, during the 12th century BC (1200–1100 BC). The technology soon spread throughout the Mediterranean Basin region and to South Asia between the 12th and 11th century BC. Its further spread to Central Asia, Eastern Europe, and Central Europe was somewhat delayed, and Northern Europe was not reached until about the start of the 5th century BC (500 BC).

The Iron Age in India is stated as beginning with the ironworking Painted Grey Ware culture, dating from the 15th century BC, through to the reign of Ashoka in the 3rd century BC. The term "Iron Age" in the archaeology of South, East, and Southeast Asia is more recent and less common than for Western Eurasia. Africa did not have a universal "Bronze Age", and many areas transitioned directly from stone to iron. Some archaeologists believe that iron metallurgy was developed in sub-Saharan Africa independently from Eurasia and neighbouring parts of Northeast Africa as early as 2000 BC.

The concept of the Iron Age ending with the beginning of the written historiographical record has not generalized well, as written language and steel use have developed at different times in different areas across the archaeological record. For instance, in China, written history started before iron smelting began, so the term is used infrequently for the archaeology of China. For the Ancient Near East, the establishment of the Achaemenid Empire c. 550 BC is used traditionally and still usually as an end date; later dates are considered historical according to the record by Herodotus despite considerable written records now being known from well back into the Bronze Age. In Central and Western Europe, the Roman conquests of the 1st century BC serve as marking the end of the Iron Age. The Germanic Iron Age of Scandinavia is considered to end c. AD 800, with the beginning of the Viking Age.

History of the concept

The three-age method of Stone, Bronze, and Iron Ages was first used for the archaeology of Europe during the first half of the 19th century, and by the latter half of the 19th century, it had been extended to the archaeology of the Ancient Near East. Its name harks back to the mythological "Ages of Man" of Hesiod. As an archaeological era, it was first introduced to Scandinavia by Christian Jürgensen Thomsen during the 1830s. By the 1860s, it was embraced as a useful division of the "earliest history of mankind" in general and began to be applied in Assyriology. The now-conventional periodization in the archaeology of the Ancient Near East was developed during the 1920s and 1930s.

Definition of "iron"

Meteoric iron, a natural iron–nickel alloy, was used by various ancient peoples thousands of years before the Iron Age. The earliest-known meteoric iron artifacts are nine small beads dated to 3200 BC, which were found in burials at Gerzeh in Lower Egypt, having been shaped by careful hammering.

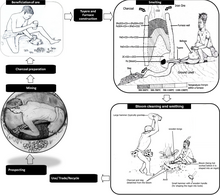

The characteristic of an Iron Age culture is the mass production of tools and weapons made not just of found iron, but from smelted steel alloys with an added carbon content. Only with the capability of the production of carbon steel does ferrous metallurgy result in tools or weapons that are harder and lighter than bronze.

Smelted iron appears sporadically in the archaeological record from the middle Bronze Age. Whilst terrestrial iron is abundant naturally, temperatures above 1,250 °C (2,280 °F) are required to smelt it, which was impractical to achieve with the technology commonly available until the end of the second millennium BC. In contrast, the components of bronze, tin with a melting point of 231.9 °C (449.4 °F) and copper with a relatively moderate melting point of 1,085 °C (1,985 °F), were within the capabilities of Neolithic kilns, which date back to 6000 BC and were able to produce temperatures greater than 900 °C (1,650 °F).

In addition to specially designed furnaces, ancient iron production required the development of complex procedures for the removal of impurities, the regulation of the admixture of carbon, and the invention of hot-working to achieve a useful balance of hardness and strength in steel. The use of steel has also been regulated by the economics of the metallurgical advancements.

Chronology

Earliest evidence

The earliest tentative evidence for iron-making is a small number of iron fragments with the appropriate amounts of carbon admixture found in the Proto-Hittite layers at Kaman-Kalehöyük in modern-day Turkey, dated to 2200–2000 BC. Akanuma (2008) concludes that "The combination of carbon dating, archaeological context, and archaeometallurgical examination indicates that it is likely that the use of ironware made of steel had already begun in the third millennium BC in Central Anatolia". Souckova-Siegolová (2001) shows that iron implements were made in Central Anatolia in very limited quantities about 1800 BC and were in general use by elites, though not by commoners, during the New Hittite Empire (≈1400–1200 BC).

Similarly, recent archaeological remains of iron-working in the Ganges Valley in India have been dated tentatively to 1800 BC. Tewari (2003) concludes that "knowledge of iron smelting and manufacturing of iron artifacts was well known in the Eastern Vindhyas and iron had been in use in the Central Ganga Plain, at least from the early second millennium BC". By the Middle Bronze Age increasing numbers of smelted iron objects (distinguishable from meteoric iron by the lack of nickel in the product) appeared in the Middle East, Southeast Asia and South Asia.

African sites are revealing dates as early as 2000–1200 BC. However, some recent studies date the inception of iron metallurgy in Africa between 3000 and 2500 BC, with evidence existing for early iron metallurgy in parts of Nigeria, Cameroon, and Central Africa from as early as around 2000 BC. The Nok culture of Nigeria may have practiced iron smelting from as early as 1000 BC, while the nearby Djenné-Djenno culture of the Niger Valley in Mali shows evidence of iron production from c. 250 BC. Iron technology across much of sub-Saharan Africa has an African origin dating to before 2000 BC. These findings confirm the independent invention of iron smelting in sub-Saharan Africa.

Beginning

Modern archaeological evidence identifies the start of large-scale global iron production about 1200 BC, marking the end of the Bronze Age. The Iron Age in Europe is often considered as a part of the Bronze Age collapse in the ancient Near East.

Anthony Snodgrass suggests that a shortage of tin and trade disruptions in the Mediterranean about 1300 BC forced metalworkers to seek an alternative to bronze. Many bronze implements were recycled into weapons during that time, and more widespread use of iron resulted in improved steel-making technology and lower costs. When tin became readily available again, iron was cheaper, stronger and lighter, and forged iron implements superseded cast bronze tools permanently.

In Central and Western Europe, the Iron Age lasted from c. 800 BC to c. 1 BC, beginning in pre-Roman Iron Age Northern Europe c. 600 BC, and reaching Northern Scandinavian Europe c. 500 BC.

The Iron Age in the Ancient Near East is considered to last from c. 1200 BC (the Bronze Age collapse) to c. 550 BC (or 539 BC), roughly the beginning of historiography with Herodotus, marking the end of the proto-historical period.

In China, because writing was developed first, there is no recognizable prehistoric period characterized by ironworking, and Bronze Age China transitions almost directly into the Qin dynasty of imperial China. "Iron Age" in the context of China is sometimes used for the transitional period of c. 900 BC to 100 BC, during which ferrous metallurgy was present even if not dominant.

Ancient Near East

The Iron Age in the Ancient Near East is believed to have begun after the discovery of iron smelting and smithing techniques in Anatolia, the Caucasus or Southeast Europe during the late 2nd millennium BC (c. 1300 BC). The earliest bloomery smelting of iron is found at Tell Hammeh, Jordan about 930 BC (determined from 14C dating).

The Early Iron Age in the Caucasus area is divided conventionally into two periods, Early Iron I, dated to about 1100 BC, and the Early Iron II phase from the tenth to ninth centuries BC. Many of the material culture traditions of the Late Bronze Age continued into the Early Iron Age. Thus, there is a sociocultural continuity during this transitional period.

In Iran, the earliest actual iron artifacts were unknown until the 9th century BC. For Iran, the best studied archaeological site during this time period is Teppe Hasanlu.

West Asia

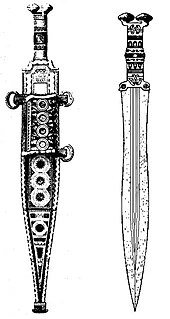

In the Mesopotamian states of Sumer, Akkad and Assyria, the initial use of iron reaches far back, to perhaps 3000 BC. One of the earliest smelted iron artifacts known is a dagger with an iron blade found in a Hattic tomb in Anatolia, dating from 2500 BC. The widespread use of iron weapons which replaced bronze weapons rapidly disseminated throughout the Near East (North Africa, southwest Asia) by the beginning of the 1st millennium BC.

The development of iron smelting was once attributed to the Hittites of Anatolia during the Late Bronze Age. As part of the Late Bronze Age-Early Iron Age, the Bronze Age collapse saw the slow, comparatively continuous spread of iron-working technology in the region. It was long believed that the success of the Hittite Empire during the Late Bronze Age had been based on the advantages entailed by the "monopoly" on ironworking at the time. Accordingly, the invading Sea Peoples would have been responsible for spreading the knowledge through that region. The idea of such a "Hittite monopoly" has been examined more thoroughly and no longer represents a scholarly consensus. While there are some iron objects from Bronze Age Anatolia, the number is comparable to iron objects found in Egypt and other places of the same time period; and only a small number of these objects are weapons.

| Date | Crete | Aegean | Greece | Cyprus | Sub-totals | Anatolia | Totals |
|---|---|---|---|---|---|---|---|
| 1300–1200 BC | 5 | 2 | 9 | 0 | 16 | 33 | 49 |
| Total Bronze Age | 5 | 2 | 9 | 0 | 16 | 33 | 49 |
| 1200–1100 BC | 1 | 2 | 8 | 26 | 37 | N/A | 37 |
| 1100–1000 BC | 13 | 3 | 31 | 33 | 80 | N/A | 80 |
| 1000–900 BC | 37+ | 30 | 115 | 29 | 211 | N/A | 211 |
| Total Iron Age [Columns don't sum precisely] | 51 | 35 | 163 | 88 | 328 | N/A | 328 |

Egypt

Iron metal is singularly scarce in collections of Egyptian antiquities. Bronze remained the primary material there until the conquest by the Neo-Assyrian Empire in 671 BC. The explanation seems to be that the relics are in most cases the paraphernalia of tombs, such as funeral vessels and vases; because the ancient Egyptians considered iron an impure metal, it was never used in the manufacture of these objects or for any religious purposes. It was attributed to Seth, the spirit of evil who according to Egyptian tradition governed the central deserts of Africa. In the Black Pyramid of Abusir, dating before 2000 BC, Gaston Maspero found some pieces of iron. In the funeral text of Pepi I, the metal is mentioned. A sword bearing the name of pharaoh Merneptah as well as a battle axe with an iron blade and gold-decorated bronze shaft were both found in the excavation of Ugarit. A dagger with an iron blade found in Tutankhamun's tomb, 14th century BC, was examined recently and found to be of meteoric origin.

Europe

In Europe, the Iron Age is the last stage of prehistoric Europe and the first of the protohistoric periods, which initially means descriptions of a particular area by Greek and Roman writers. For much of Europe, the period came to an abrupt local end after conquest by the Romans, though ironworking remained the dominant technology until recent times. Elsewhere it may last until the early centuries AD, and either Christianization or a new conquest during the Migration Period.

Iron working was introduced to Europe during the late 11th century BC, probably from the Caucasus, and slowly spread northwards and westwards over the succeeding 500 years. The Iron Age did not start when iron first appeared in Europe, but when it began to replace bronze in the preparation of tools and weapons. This did not happen at the same time throughout Europe; local cultural developments played a role in the transition to the Iron Age. For example, the Iron Age of Prehistoric Ireland begins about 500 BC (when the Greek Iron Age had already ended) and finishes about 400 AD. Iron technology came into widespread use in Europe at about the same time as in Asia. The prehistoric Iron Age in Central Europe is divided into two periods based on the Hallstatt (early Iron Age) and La Tène (late Iron Age) cultures. The material cultures of Hallstatt and La Tène are each divided into four phases (A, B, C, D).

| Culture | Phase A | Phase B | Phase C | Phase D |
|---|---|---|---|---|
| Hallstatt | 1200–700 BC: Flat graves | 1200–700 BC: Pottery made of polychrome | 700–600 BC: Heavy iron and bronze swords | 600–475 BC: Dagger swords, brooches, and ring ornaments, girdle mounts |
| La Tène | 450–390 BC: S-shaped, spiral and round designs | 390–300 BC: Iron swords, heavy knives, lanceheads | 300–100 BC: Iron chains, iron swords, belts, heavy spearheads | 100–15 BC: Iron reaping-hooks, saws, scythes and hammers |

The Iron Age in Europe is characterized by an elaboration of designs of weapons, implements, and utensils. These are no longer cast but hammered into shape, and decoration is elaborate and curvilinear rather than simple rectilinear; the forms and character of the ornamentation of the northern European weapons resemble in some respects Roman arms, while in other respects they are peculiar and evidently representative of northern art.

Citânia de Briteiros, located in Guimarães, Portugal, is one example of an Iron Age archaeological site. This settlement (a fortified village) covered an area of 3.8 hectares (9.4 acres), and served as a Celtiberian stronghold against Roman invasions. It dates back more than 2,500 years. The site was researched by Francisco Martins Sarmento starting from 1874. A number of amphoras (containers usually for wine or olive oil), coins, fragments of pottery, weapons, pieces of jewelry, as well as ruins of a bath and its pedra formosa (lit. 'handsome stone') were found here.

Asia

Central Asia

The Iron Age in Central Asia began when iron objects appeared among the Indo-European Saka in present-day Xinjiang (China) between the 10th century BC and the 7th century BC, such as those found at the cemetery site of Chawuhukou.

The Pazyryk culture is an Iron Age archaeological culture (c. 6th to 3rd centuries BC) identified by excavated artifacts and mummified humans found in the Siberian permafrost in the Altay Mountains.

East Asia

In China, Chinese bronze inscriptions are found around 1200 BC, preceding the development of iron metallurgy, which was known by the 9th century BC. The large seal script is identified with a group of characters from a book entitled Shǐ Zhòu Piān (c. 800 BC). Therefore, in China prehistory had given way to history periodized by ruling dynasties by the start of iron use, so "Iron Age" is not typically used to describe a period of Chinese history. Iron metallurgy reached the Yangtse Valley toward the end of the 6th century BC. A few objects were found at Changsha and Nanjing. The mortuary evidence suggests that the initial use of iron in Lingnan belongs to the mid-to-late Warring States period (from about 350 BC). Important non-precious husi style metal finds include iron tools found at the tomb at Guwei-cun of the 4th century BC.

The techniques used in Lingnan combine bivalve moulds of a distinct southern tradition with piece-mould technology from the Zhongyuan. The products of this combination include bells, vessels, weapons and ornaments, and sophisticated castings.

An Iron Age culture of the Tibetan Plateau has been associated tentatively with the Zhang Zhung culture described by early Tibetan writings.

Iron items, such as tools, weapons, and decorative objects, are postulated to have entered Japan during the late Yayoi period (c. 300 BC – 300 AD) or the succeeding Kofun period (c. 250–538 AD), most likely from the Korean Peninsula and China.

Distinguishing characteristics of the Yayoi period include the appearance of new pottery styles and the start of intensive rice agriculture in paddy fields. Yayoi culture flourished in a geographic area from southern Kyūshū to northern Honshū. The Kofun and the subsequent Asuka periods are sometimes referred to collectively as the Yamato period. The word kofun is Japanese for the type of burial mounds dating from that era.

Iron objects were introduced to the Korean peninsula through trade with chiefdoms and state-level societies in the Yellow Sea area during the 4th century BC, just at the end of the Warring States Period but prior to the beginning of the Western Han dynasty. Yoon proposes that iron was first introduced to chiefdoms located along North Korean river valleys that flow into the Yellow Sea, such as the Cheongcheon and Taedong Rivers. Iron production quickly followed during the 2nd century BC, and iron implements came to be used by farmers by the 1st century in southern Korea. The earliest known cast-iron axes in southern Korea are found in the Geum River basin. Iron production began at the same time that the complex chiefdoms of Proto-historic Korea emerged. These complex chiefdoms were the precursors of early states such as Silla, Baekje, Goguryeo, and Gaya. Iron ingots were an important mortuary item and indicated the wealth or prestige of the deceased during this period.

South Asia

The earliest evidence of iron smelting predates the emergence of the Iron Age proper by several centuries. Iron was being used in Mundigak to manufacture some items in the 3rd millennium BC, such as a small copper/bronze bell with an iron clapper, a copper/bronze rod with two iron decorative buttons, and a copper/bronze mirror handle with a decorative iron button. Artefacts including small knives and blades have been discovered in the Indian state of Telangana which have been dated between 2400 BC and 1800 BC. The history of metallurgy in the Indian subcontinent began prior to the 3rd millennium BC. Archaeological sites in India, such as Malhar, Dadupur, Raja Nala Ka Tila, Lahuradewa, Kosambi and Jhusi, Allahabad in present-day Uttar Pradesh show iron implements in the period 1800–1200 BC. Evidence from the sites of Raja Nala Ka Tila and Malhar suggests the use of iron c. 1800/1700 BC. The most extensive evidence of iron smelting comes from Malhar and its surrounding area. This site is assumed to have been the center for smelted bloomery iron in this area because of its location by the Karamnasa and Ganga rivers. The site shows agricultural technology in the form of iron implements (sickles, nails, clamps, spearheads, etc.) by at least c. 1500 BC. Archaeological excavations in Hyderabad show an Iron Age burial site.

The beginning of the 1st millennium BC saw extensive developments in iron metallurgy in India. Technological advancement and mastery of iron metallurgy were achieved during this period of peaceful settlements. One ironworking centre in East India has been dated to the first millennium BC. In Southern India (present-day Mysore) iron appeared as early as the 12th to 11th centuries BC; these developments were too early for any significant close contact with the northwest of the country. The Indian Upanishads mention metallurgy, and the Indian Mauryan period saw advances in metallurgy. As early as 300 BC, certainly by 200 AD, high-quality steel was produced in southern India by what would later be called the crucible technique. In this system, high-purity wrought iron, charcoal, and glass were mixed in a crucible and heated until the iron melted and absorbed the carbon.

The protohistoric Early Iron Age in Sri Lanka lasted from 1000 BC to 600 BC. Radiocarbon evidence has been collected from Anuradhapura and Aligala shelter in Sigiriya. The Anuradhapura settlement is recorded to extend 10 ha (25 acres) by 800 BC and grew to 50 ha (120 acres) by 700–600 BC to become a town. The skeletal remains of an Early Iron Age chief were excavated in Anaikoddai, Jaffna. The name "Ko Veta" is engraved in Brahmi script on a seal buried with the skeleton and is assigned by the excavators to the 3rd century BC. Ko, meaning "King" in Tamil, is comparable to such names as Ko Atan and Ko Putivira occurring in contemporary Brahmi inscriptions in south India. It is also speculated that Early Iron Age sites may exist in Kandarodai, Matota, Pilapitiya and Tissamaharama.

The earliest undisputed deciphered epigraphy found in the Indian subcontinent are the Edicts of Ashoka of the 3rd century BC, in the Brahmi script. Several inscriptions were thought to be pre-Ashokan by earlier scholars; these include the Piprahwa relic casket inscription, the Badli pillar inscription, the Bhattiprolu relic casket inscription, the Sohgaura copper plate inscription, the Mahasthangarh Brahmi inscription, the Eran coin legend, the Taxila coin legends, and the inscription on the silver coins of Sophytes. However, more recent scholars have dated them to later periods.

Southeast Asia

Archaeological work in Thailand at the sites of Ban Don Ta Phet and Khao Sam Kaeo, which yielded metallic, stone, and glass artifacts stylistically associated with the Indian subcontinent, suggests Indianization of Southeast Asia beginning in the 4th to 2nd centuries BC during the late Iron Age.

In the Philippines and Vietnam, the Sa Huynh culture showed evidence of an extensive trade network. Sa Huynh beads were made from glass, carnelian, agate, olivine, zircon, gold and garnet; most of these materials were not local to the region and were most likely imported. Han-dynasty-style bronze mirrors were also found in Sa Huynh sites. Conversely, ear ornaments produced by the Sa Huynh have been found in archaeological sites in Central Thailand, as well as on Orchid Island.

Africa

Early evidence for iron technology in Sub-Saharan Africa can be found at sites such as KM2 and KM3 in northwest Tanzania and parts of Nigeria and the Central African Republic. Nubia was one of the relatively few places in Africa to have a sustained Bronze Age along with Egypt and much of the rest of North Africa.

Archaeometallurgical scientific knowledge and technological development originated in numerous centers of Africa, located in West Africa, Central Africa, and East Africa. As these centers of origin lie within inner Africa, these archaeometallurgical developments are native African technologies. Iron metallurgical development occurred 2631–2458 BC at Lejja, in Nigeria, 2136–1921 BC at Obui, in the Central African Republic, 1895–1370 BC at Tchire Ouma 147, in Niger, and 1297–1051 BC at Dekpassanware, in Togo.

Very early copper and bronze working sites in Niger may date to as early as 1500 BC. There is also evidence of iron metallurgy in Termit, Niger from around this period. Nubia was a major manufacturer and exporter of iron after the expulsion of the Nubian dynasty from Egypt by the Assyrians in the 7th century BC.

Though there is some uncertainty, some archaeologists believe that iron metallurgy was developed independently in sub-Saharan West Africa, separately from Eurasia and neighboring parts of North and Northeast Africa.

Iron smelting furnaces and slag have also been excavated at sites in the Nsukka region of southeast Nigeria in what is now Igboland, dating to 2000 BC at the site of Lejja (Eze-Uzomaka 2009) and to 750 BC at the site of Opi (Holl 2009). The site of Gbabiri (in the Central African Republic) has yielded evidence of iron metallurgy from a reduction furnace and a blacksmith workshop, with earliest dates of 896–773 BC and 907–796 BC, respectively. Similarly, smelting in bloomery-type furnaces appears in the Nok culture of central Nigeria by about 550 BC and possibly a few centuries earlier.

Iron and copper working in Sub-Saharan Africa spread south and east from Central Africa in conjunction with the Bantu expansion, from the Cameroon region to the African Great Lakes in the 3rd century BC, reaching the Cape around 400 AD. However, iron working may have been practiced in central Africa as early as the 3rd millennium BC. Instances of carbon steel based on complex preheating principles were found to be in production around the 1st century AD in northwest Tanzania.
