Speech perception is the process by which the sounds of language are heard, interpreted and understood. The study of speech perception is closely linked to the fields of phonology and phonetics in linguistics and cognitive psychology and perception in psychology.

Research in speech perception seeks to understand how human listeners recognize speech sounds and use this information to understand spoken language. Speech perception research has applications in building computer systems that can recognize speech, in improving speech recognition for hearing- and language-impaired listeners, and in foreign-language teaching.

The process of perceiving speech begins at the level of the sound signal and the process of audition. (For a complete description of the process of audition see Hearing.) After processing the initial auditory signal, speech sounds are further processed to extract acoustic cues and phonetic information. This speech information can then be used for higher-level language processes, such as word recognition.

Acoustic cues

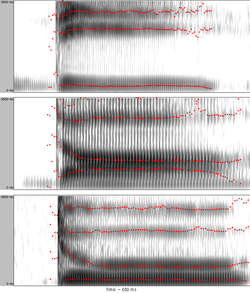

Figure 1: Spectrograms of syllables "dee" (top), "dah" (middle), and "doo" (bottom) showing how the onset formant transitions that define perceptually the consonant [d] differ depending on the identity of the following vowel. (Formants are highlighted by red dotted lines; transitions are the bending beginnings of the formant trajectories.)

Acoustic cues are sensory cues contained in the speech sound signal which are used in speech perception to differentiate speech sounds belonging to different phonetic categories. For example, one of the most studied cues in speech is voice onset time or VOT. VOT is a primary cue signaling the difference between voiced and voiceless plosives, such as "b" and "p". Other cues differentiate sounds that are produced at different places of articulation or manners of articulation.

The speech system must also combine these cues to determine the category of a specific speech sound. This is often thought of in terms of abstract representations of phonemes. These representations can then be combined for use in word recognition and other language processes.

It is not easy to identify what acoustic cues listeners are sensitive to when perceiving a particular speech sound:

At first glance, the solution to the problem of how we perceive speech seems deceptively simple. If one could identify stretches of the acoustic waveform that correspond to units of perception, then the path from sound to meaning would be clear. However, this correspondence or mapping has proven extremely difficult to find, even after some forty-five years of research on the problem.

If a specific aspect of the acoustic waveform indicated one linguistic unit, a series of tests using speech synthesizers would be sufficient to determine such a cue or cues. However, there are two significant obstacles:

- One acoustic aspect of the speech signal may cue different linguistically relevant dimensions. For example, the duration of a vowel in English can indicate whether or not the vowel is stressed, or whether it is in a syllable closed by a voiced or a voiceless consonant, and in some cases (like American English /ɛ/ and /æ/) it can distinguish the identity of vowels.[2] Some researchers even argue that duration can help distinguish what are traditionally called short and long vowels in English.

- One linguistic unit can be cued by several acoustic properties. For example, in a classic experiment, Alvin Liberman (1957) showed that the onset formant transitions of /d/ differ depending on the following vowel (see Figure 1) but they are all interpreted as the phoneme /d/ by listeners.

Linearity and the segmentation problem

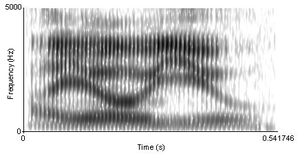

Figure 2: A spectrogram of the phrase "I owe you". There are no clearly distinguishable boundaries between speech sounds.

Although listeners perceive speech as a stream of discrete units (phonemes, syllables, and words), this linearity is difficult to see in the physical speech signal (see Figure 2 for an example). Speech sounds do not strictly follow one another; rather, they overlap.

A speech sound is influenced by the ones that precede and the ones that follow. This influence can even be exerted at a distance of two or more segments (and across syllable- and word-boundaries).

Because the speech signal is not linear, there is a problem of segmentation. It is difficult to delimit a stretch of speech signal as belonging to a single perceptual unit. As an example, the acoustic properties of the phoneme /d/ will depend on the production of the following vowel (because of coarticulation).

Lack of invariance

The research and application of speech perception must deal with several problems which result from what has been termed the lack of invariance. Reliable constant relations between a phoneme of a language and its acoustic manifestation in speech are difficult to find. There are several reasons for this:

Context-induced variation

Phonetic environment affects the acoustic properties of speech sounds. For example, /u/ in English is fronted when surrounded by coronal consonants. Similarly, the voice onset times marking the boundary between voiced and voiceless plosives differ for labial, alveolar and velar plosives, and they shift under stress or depending on the position within a syllable.

Variation due to differing speech conditions

One important factor that causes variation is differing speech rate. Many phonemic contrasts are constituted by temporal characteristics (short vs. long vowels or consonants, affricates vs. fricatives, plosives vs. glides, voiced vs. voiceless plosives, etc.), and they are certainly affected by changes in speaking tempo.

Another major source of variation is articulatory carefulness vs. sloppiness, which is typical for connected speech (articulatory "undershoot" is reflected in the acoustic properties of the sounds produced).

Variation due to different speaker identity

The resulting acoustic structure of concrete speech productions depends on the physical and psychological properties of individual speakers. Men, women, and children generally produce voices having different pitch. Because speakers have vocal tracts of different sizes (due to sex and age especially), the resonant frequencies (formants), which are important for recognition of speech sounds, will vary in their absolute values across individuals. Research shows that infants at the age of 7.5 months cannot recognize information presented by speakers of different genders; however, by the age of 10.5 months, they can detect the similarities. Dialect and foreign accent can also cause variation, as can the social characteristics of the speaker and listener.

Perceptual constancy and normalization

Figure 3: The left panel shows the 3 peripheral American English vowels /i/, /ɑ/, and /u/ in a standard F1 by F2 plot (in Hz). The mismatch between male, female, and child values is apparent. In the right panel, formant distances (in Bark) rather than absolute values are plotted using the normalization procedure proposed by Syrdal and Gopal in 1986. Formant values are taken from Hillenbrand et al. (1995).

Despite the great variety of different speakers and different conditions, listeners perceive vowels and consonants as constant categories. It has been proposed that this is achieved by means of the perceptual normalization process, in which listeners filter out the noise (i.e. variation) to arrive at the underlying category. Vocal-tract-size differences result in formant-frequency variation across speakers; therefore a listener has to adjust his/her perceptual system to the acoustic characteristics of a particular speaker. This may be accomplished by considering the ratios of formants rather than their absolute values.
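Speaker-relative formant distances of the kind plotted in Figure 3 can be sketched in a few lines of Python. The sketch assumes Traunmüller's (1990) Hz-to-Bark approximation (not necessarily the conversion Syrdal and Gopal used) and a 3-Bark critical distance for separating high from non-high and front from back vowels; the function names and all formant values are hypothetical. It shows an adult and a child /i/ with very different absolute formants receiving the same label once the differences Z1 - Z0 and Z3 - Z2 are used instead of raw frequencies.

```python
# Sketch of Bark-difference vowel normalization. The Hz-to-Bark formula
# (Traunmüller 1990, without its edge corrections), the 3-Bark threshold
# and all formant values below are illustrative assumptions.


def hz_to_bark(f_hz: float) -> float:
    """Approximate critical-band (Bark) value for a frequency in Hz."""
    return 26.81 * f_hz / (1960.0 + f_hz) - 0.53


def bark_differences(f0, f1, f2, f3):
    """Return speaker-relative distances (Z1 - Z0, Z3 - Z2) in Bark."""
    z0, z1, z2, z3 = (hz_to_bark(f) for f in (f0, f1, f2, f3))
    return z1 - z0, z3 - z2


def classify(f0, f1, f2, f3, critical=3.0):
    """Toy height/backness decision from the two Bark differences."""
    d_height, d_back = bark_differences(f0, f1, f2, f3)
    height = "high" if d_height < critical else "non-high"
    backness = "front" if d_back < critical else "back"
    return height, backness


# Hypothetical (F0, F1, F2, F3) values in Hz.
adult_i = (130, 270, 2290, 3010)
child_i = (270, 370, 3200, 3730)
adult_a = (124, 730, 1090, 2440)

for label, token in [("adult /i/", adult_i), ("child /i/", child_i), ("adult /ɑ/", adult_a)]:
    print(label, classify(*token))
```

Differences rather than ratios are used here because Bark is already a roughly logarithmic scale above about 500 Hz, so a difference in Bark behaves much like a frequency ratio.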

This process has been called vocal tract normalization (see Figure 3 for an example). Similarly, listeners are believed to adjust the perception of duration to the current tempo of the speech they are listening to; this has been referred to as speech rate normalization.
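Speech rate normalization can be illustrated with a deliberately simple Python sketch: a segment's duration is judged relative to the mean syllable duration of its context rather than in absolute milliseconds. The relative-duration measure, the 0.6 threshold and the durations are hypothetical choices for illustration, not an established model.

```python
# Toy sketch of speech rate normalization: the same vowel duration is
# judged "long" or "short" depending on the surrounding tempo.
# The threshold and all durations are invented for illustration.


def relative_duration(segment_ms: float, syllable_durations_ms: list[float]) -> float:
    """Segment duration divided by the mean syllable duration of the
    surrounding utterance, a simple proxy for local speaking rate."""
    mean_syllable = sum(syllable_durations_ms) / len(syllable_durations_ms)
    return segment_ms / mean_syllable


def is_long_vowel(segment_ms: float, syllable_durations_ms: list[float],
                  threshold: float = 0.6) -> bool:
    """Hypothetical rule: a vowel counts as long when it fills more than
    `threshold` of an average syllable at the current tempo."""
    return relative_duration(segment_ms, syllable_durations_ms) > threshold


fast_context = [140, 150, 160]  # ms per syllable in fast speech (made up)
slow_context = [280, 300, 320]  # ms per syllable in slow speech (made up)

print(is_long_vowel(120, fast_context))  # True:  120 / 150 = 0.8
print(is_long_vowel(120, slow_context))  # False: 120 / 300 = 0.4
```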

Whether or not normalization actually takes place, and what its exact nature is, is a matter of theoretical controversy (see theories below). Perceptual constancy is not specific to speech perception; it exists in other types of perception too.

Categorical perception

Figure 4: Example identification (red) and discrimination (blue) functions

Categorical perception is involved in processes of perceptual differentiation. People perceive speech sounds categorically; that is to say, they are more likely to notice the differences between categories (phonemes) than within categories. The perceptual space between categories is therefore warped, with the centers of categories (or "prototypes") working like a sieve or like magnets for incoming speech sounds.

In an artificial continuum between a voiced and a voiceless bilabial plosive, each new step differs from the preceding one in the amount of VOT. The first sound is a pre-voiced [b], i.e. it has a negative VOT. As the VOT increases, it reaches zero, i.e. the plosive is a plain unaspirated voiceless [p]. Adding the same amount of VOT at each step, the continuum eventually reaches a strongly aspirated voiceless bilabial [pʰ]. (Such a continuum was used in an experiment by Lisker and Abramson in 1970. The sounds they used are available online.) In this continuum of, for example, seven sounds, native English listeners will identify the first three sounds as /b/ and the last three sounds as /p/, with a clear boundary between the two categories.

A two-alternative identification (or categorization) test will yield a discontinuous categorization function (see red curve in Figure 4).

In tests of the ability to discriminate between two sounds with varying VOT values but having a constant VOT distance from each other (20 ms for instance), listeners are likely to perform at chance level if both sounds fall within the same category and at nearly 100% level if each sound falls in a different category (see the blue discrimination curve in Figure 4).
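The link between the identification and discrimination curves in Figure 4 can be sketched in Python. The identification function below is a hypothetical logistic curve over VOT with a made-up boundary and slope, and the discrimination prediction uses the classic label-based formula for ABX tasks commonly attributed to the Haskins group, P(correct) = 0.5 * (1 + (pA - pB)^2), which assumes listeners can compare only the category labels they assign. With these parameters the boundary falls between 0 and 20 ms, so only the pair straddling it is predicted to be discriminated well above chance.

```python
import math

# Hypothetical identification function: a logistic curve over VOT (ms).
# The boundary and slope are illustrative, not fitted to any data.
def p_voiceless(vot_ms: float, boundary_ms: float = 10.0, slope: float = 0.5) -> float:
    """Probability that a listener labels a token as /p/ rather than /b/."""
    return 1.0 / (1.0 + math.exp(-slope * (vot_ms - boundary_ms)))


def predicted_abx(p_a: float, p_b: float) -> float:
    """Predicted ABX proportion correct if listeners could only compare
    category labels: 0.5 * (1 + (p_a - p_b) ** 2)."""
    return 0.5 * (1.0 + (p_a - p_b) ** 2)


continuum = [-40, -20, 0, 20, 40, 60, 80]  # seven steps, 20 ms apart
labels = [p_voiceless(v) for v in continuum]

# Adjacent pairs share the same 20 ms VOT distance, as in the text.
for a, b in zip(continuum, continuum[1:]):
    score = predicted_abx(p_voiceless(a), p_voiceless(b))
    print(f"{a:>4} vs {b:>3} ms: predicted ABX = {score:.2f}")
```

Observed discrimination is often somewhat better than this label-only prediction, which is one reason strict categorical accounts have been questioned.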

The conclusion to draw from both the identification and the discrimination test is that listeners have different sensitivity to the same relative increase in VOT depending on whether or not the boundary between categories is crossed. Similar perceptual adjustment is attested for other acoustic cues as well.

Top-down influences

In a classic experiment, Richard M. Warren (1970) replaced one phoneme of a word with a cough-like sound. Perceptually, his subjects restored the missing speech sound without any difficulty and could not accurately identify which phoneme had been disturbed, a phenomenon known as the phonemic restoration effect. Therefore, the process of speech perception is not necessarily uni-directional.

Another basic experiment compared recognition of naturally spoken words within a phrase versus the same words in isolation, finding that perception accuracy usually drops in the latter condition. To probe the influence of semantic knowledge on perception, Garnes and Bond (1976) similarly used carrier sentences where target words only differed in a single phoneme (bay/day/gay, for example) whose quality changed along a continuum. When put into different sentences that each naturally led to one interpretation, listeners tended to judge ambiguous words according to the meaning of the whole sentence. That is, higher-level language processes connected with morphology, syntax, or semantics may interact with basic speech perception processes to aid in recognition of speech sounds.
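One common way to formalize such context effects is Bayes' rule: the acoustic evidence for each word is weighted by how expected that word is in the sentence. The Python sketch below is a generic illustration of that idea, not the model Garnes and Bond proposed; the likelihoods, the two sentence contexts and their prior probabilities are all invented.

```python
# Generic Bayesian sketch of context effects: every number is made up,
# and this is not the model Garnes and Bond (1976) proposed.

def posterior(likelihoods: dict[str, float], priors: dict[str, float]) -> dict[str, float]:
    """P(word | signal, context) is proportional to
    P(signal | word) * P(word | context); renormalize to sum to 1."""
    unnormalized = {w: likelihoods[w] * priors[w] for w in likelihoods}
    total = sum(unnormalized.values())
    return {w: p / total for w, p in unnormalized.items()}


# An ambiguous token acoustically halfway between "bay" and "day".
acoustic = {"bay": 0.45, "day": 0.45, "gay": 0.10}

# Hypothetical sentence contexts expressed as prior expectations.
harbor_context = {"bay": 0.80, "day": 0.15, "gay": 0.05}    # "The boats sailed into the ..."
greeting_context = {"bay": 0.10, "day": 0.85, "gay": 0.05}  # "Have a nice ..."

for name, prior in [("harbor", harbor_context), ("greeting", greeting_context)]:
    post = posterior(acoustic, prior)
    best = max(post, key=post.get)
    print(name, best, round(post[best], 2))
```

The same acoustic token is resolved as "bay" in one context and "day" in the other, mirroring the direction of the effect described above.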

It may be the case that it is not necessary, and perhaps not even possible, for a listener to recognize phonemes before recognizing higher units, like words for example. After obtaining at least a fundamental piece of information about the phonemic structure of the perceived entity from the acoustic signal, listeners can compensate for missing or noise-masked phonemes using their knowledge of the spoken language. Compensatory mechanisms might even operate at the sentence level, such as in learned songs, phrases and verses, an effect backed up by neural coding patterns consistent with the missed continuous speech fragments, despite the lack of all relevant bottom-up sensory input.

Acquired language impairment

The first hypothesis of speech perception was applied to patients who had acquired an auditory comprehension deficit, also known as receptive aphasia. Since then, many disabilities have been classified, which has resulted in a more precise definition of "speech perception".

The term 'speech perception' describes the process of interest, which is probed using sublexical contexts. It involves many different linguistic and grammatical levels, such as features, segments (phonemes), syllabic structure (units of pronunciation), phonological word forms (how sounds are grouped together), grammatical features, morphemic information (prefixes and suffixes), and semantic information (the meaning of the words).

In the early years, researchers were more interested in the acoustics of speech; for instance, they looked at the differences between /ba/ and /da/. Research has since been directed toward the brain's response to such stimuli. In recent years, a model has been developed to explain how speech perception works; this model is known as the dual stream model, and it has drastically changed how psychologists look at perception. The first part of the dual stream model is the ventral pathway. This pathway incorporates the middle temporal gyrus, the inferior temporal sulcus and perhaps the inferior temporal gyrus. The ventral pathway maps phonological representations onto lexical or conceptual representations, that is, the meaning of the words. The second part of the dual stream model is the dorsal pathway. This pathway includes the Sylvian parietotemporal area, the inferior frontal gyrus, the anterior insula, and the premotor cortex. Its primary function is to take the sensory or phonological stimuli and transform them into an articulatory-motor representation (formation of speech).

Aphasia

Aphasia is an impairment of language processing caused by damage to the brain. Different parts of language processing are impacted depending on the area of the brain that is damaged, and aphasia is further classified based on the location of injury or constellation of symptoms. Damage to Broca's area of the brain often results in expressive aphasia, which manifests as impairment in speech production. Damage to Wernicke's area often results in receptive aphasia, where speech processing is impaired.

Aphasia with impaired speech perception typically shows lesions or damage located in the left temporal or parietal lobes. Lexical and semantic difficulties are common, and comprehension may be affected.

Agnosia

Agnosia is "the loss or diminution of the ability to recognize familiar objects or stimuli usually as a result of brain damage".

There are several different kinds of agnosia that affect every one of our senses, but the two most common related to speech are speech agnosia and phonagnosia.

Speech agnosia: Pure word deafness, or speech agnosia, is an impairment in which a person maintains the ability to hear, produce speech, and even read speech, yet is unable to understand or properly perceive speech. These patients seem to have all of the skills necessary to properly process speech, yet they appear to have no experience associated with speech stimuli. Patients have reported, "I can hear you talking, but I can't translate it".

Even though they are physically receiving and processing the stimuli of speech, without the ability to determine the meaning of the speech they are essentially unable to perceive the speech at all. No treatments are known, but case studies and experiments indicate that speech agnosia is related to lesions in the left hemisphere or in both hemispheres, specifically right temporoparietal dysfunctions.

Phonagnosia: Phonagnosia is associated with the inability to recognize any familiar voices. In these cases, speech stimuli can be heard and even understood, but the association of the speech with a certain voice is lost. This can be due to "abnormal processing of complex vocal properties (timbre, articulation, and prosody—elements that distinguish an individual voice)".

There is no known treatment; however, there is a case report of an epileptic woman who began to experience phonagnosia along with other impairments. Her EEG and MRI results showed "a right cortical parietal T2-hyperintense lesion without gadolinium enhancement and with discrete impairment of water molecule diffusion". So although no treatment has been discovered, phonagnosia can be correlated with postictal parietal cortical dysfunction.

Infant speech perception

Infants begin the process of language acquisition by being able to detect very small differences between speech sounds. They can discriminate all possible speech contrasts (phonemes). Gradually, as they are exposed to their native language, their perception becomes language-specific, i.e. they learn how to ignore the differences within phonemic categories of the language (differences that may well be contrastive in other languages: for example, English distinguishes two voicing categories of plosives, whereas Thai has three. Infants must learn which differences are distinctive in their native language and which are not). As infants learn how to sort incoming speech sounds into categories, ignoring irrelevant differences and reinforcing the contrastive ones, their perception becomes categorical.

Infants learn to contrast different vowel phonemes of their native language by approximately 6 months of age. The native consonantal contrasts are acquired by 11 or 12 months of age.

Some researchers have proposed that infants may be able to learn the sound categories of their native language through passive listening, using a process called statistical learning. Others even claim that certain sound categories are innate, that is, they are genetically specified (see discussion about innate vs. acquired categorical distinctiveness).
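One version of the statistical-learning idea holds that the number of categories along a continuum is reflected in the shape of the input distribution: tokens clustered around two values suggest two categories, tokens clustered around one value suggest one. The Python sketch below is a toy illustration of that assumption; the peak-counting "learner", the eight-step continuum and the token frequencies are all hypothetical, and realistic models are considerably more sophisticated.

```python
from collections import Counter

# Toy distributional-learning sketch: a "learner" infers how many
# categories a continuum has by counting peaks in the exposure histogram.
# Token frequencies are invented; plateaus (equal neighboring peaks)
# are not handled.

def count_modes(tokens: list[int]) -> int:
    """Count strict local maxima in the frequency histogram of the tokens."""
    counts = Counter(tokens)
    freq = [counts[step] for step in range(min(tokens), max(tokens) + 1)]
    modes = 0
    for i, f in enumerate(freq):
        left = freq[i - 1] if i > 0 else -1
        right = freq[i + 1] if i < len(freq) - 1 else -1
        if f > left and f > right:
            modes += 1
    return modes


def expand(freqs: list[int]) -> list[int]:
    """Turn per-step frequencies into a list of tokens on steps 1..N."""
    return [step for step, n in enumerate(freqs, start=1) for _ in range(n)]


bimodal = expand([1, 4, 8, 4, 1, 4, 8, 4])   # two clusters of tokens
unimodal = expand([1, 2, 4, 6, 8, 6, 4, 2])  # one cluster of tokens

print(count_modes(bimodal), count_modes(unimodal))
```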

If day-old babies are presented with their mother's voice speaking normally, their mother's voice speaking abnormally (in monotone), and a stranger's voice, they react only to their mother's voice speaking normally. When a human and a non-human sound are played, babies turn their heads only toward the source of the human sound. It has been suggested that auditory learning already begins in the prenatal period.

One of the techniques used to examine how infants perceive speech, besides the head-turn procedure mentioned above, is measuring their sucking rate. In such an experiment, a baby sucks on a special nipple while being presented with sounds. First, the baby's normal sucking rate is established. Then a stimulus is played repeatedly. When the baby hears the stimulus for the first time, the sucking rate increases, but as the baby becomes habituated to the stimulation, the sucking rate decreases and levels off. Then, a new stimulus is played to the baby. If the baby perceives the newly introduced stimulus as different from the background stimulus, the sucking rate will show an increase.
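The logic of the sucking procedure can be expressed as two decision rules: one for when habituation has been reached and one for whether the rate recovers after the stimulus changes. The Python sketch below uses a hypothetical 80% habituation criterion, a hypothetical 20% recovery threshold and invented sucking rates; actual studies define their criteria in various ways.

```python
# Hypothetical decision rules for a high-amplitude sucking experiment.
# The 80% habituation criterion, the 20% recovery threshold and the rates
# (sucks per minute) are illustrative assumptions, not a standard protocol.

def mean(xs: list[float]) -> float:
    return sum(xs) / len(xs)


def reached_habituation(rates: list[float], criterion: float = 0.8, window: int = 2) -> bool:
    """True once the mean of the last `window` minutes falls below
    `criterion` times the peak rate observed so far."""
    if len(rates) < window:
        return False
    return mean(rates[-window:]) < criterion * max(rates)


def dishabituated(pre_switch: list[float], post_switch: list[float],
                  min_increase: float = 0.2) -> bool:
    """True if the post-switch mean rate exceeds the pre-switch mean
    by at least `min_increase` (as a proportion)."""
    return mean(post_switch) > mean(pre_switch) * (1 + min_increase)


familiar = [40, 55, 60, 52, 45, 40]  # rate rises, then declines
minute = next(m for m in range(1, len(familiar) + 1) if reached_habituation(familiar[:m]))
print("habituated after minute", minute)

print(dishabituated(familiar[-2:], [55, 50]))  # novel stimulus
print(dishabituated(familiar[-2:], [43, 41]))  # same stimulus again
```

Comparing against a control condition in which the stimulus does not change, as in the last line, is what allows a rate increase to be attributed to the new sound rather than to general arousal.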

The sucking-rate and the head-turn method are some of the more traditional, behavioral methods for studying speech perception. Among the new methods (see Research methods below) that help us to study speech perception, near-infrared spectroscopy is widely used in infants.

It has also been discovered that even though infants' ability to distinguish between the different phonetic properties of various languages begins to decline around the age of nine months, it is possible to reverse this process by exposing them to a new language in a sufficient way. In a research study by Patricia K. Kuhl, Feng-Ming Tsao, and Huei-Mei Liu, it was discovered that if infants are spoken to and interacted with by a native speaker of Mandarin Chinese, they can actually be conditioned to retain their ability to distinguish speech sounds within Mandarin that are very different from speech sounds found within the English language. This shows that, given the right conditions, it is possible to prevent infants from losing the ability to distinguish speech sounds of languages other than their native language.

Cross-language and second-language

A large amount of research has studied how users of a language perceive foreign speech (referred to as cross-language speech perception) or second-language speech (second-language speech perception). The latter falls within the domain of second language acquisition.

Languages differ in their phonemic inventories. Naturally, this creates difficulties when a foreign language is encountered. For example, if two foreign-language sounds are assimilated to a single mother-tongue category, the difference between them will be very difficult to discern. A classic example of this situation is the observation that Japanese learners of English will have problems with identifying or distinguishing English liquid consonants /l/ and /r/ (see Perception of English /r/ and /l/ by Japanese speakers).
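The assimilation idea can be sketched as nearest-prototype classification along a single acoustic cue. The Python example below uses the onset frequency of the third formant (F3), commonly cited as the main cue separating English /r/ and /l/; the prototype and token values are invented, and real listeners combine many cues. A listener with two liquid categories maps the two English sounds apart, while a listener with a single liquid category maps both onto the same category, the pattern Best's Perceptual Assimilation Model calls single-category assimilation.

```python
# Toy nearest-prototype sketch of perceptual assimilation along one cue,
# F3 onset frequency (Hz). All prototype and token values are invented.

def assimilate(cue_hz: float, prototypes: dict[str, float]) -> str:
    """Return the native category whose prototype is closest to the token."""
    return min(prototypes, key=lambda cat: abs(prototypes[cat] - cue_hz))


two_liquids = {"/r/": 1600.0, "/l/": 2700.0}  # listener with an /r/-/l/ contrast
one_liquid = {"/ɾ/": 2200.0}                   # listener with a single liquid category

tokens = {"English /r/": 1650.0, "English /l/": 2650.0}

for name, f3 in tokens.items():
    print(name, "->", assimilate(f3, two_liquids), "|", assimilate(f3, one_liquid))
```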

Best (1995) proposed a Perceptual Assimilation Model which describes possible cross-language category assimilation patterns and predicts their consequences.

Flege (1995) formulated a Speech Learning Model which combines several hypotheses about second-language (L2) speech acquisition and which predicts, in simple words, that an L2 sound that is not too similar to a native-language (L1) sound will be easier to acquire than an L2 sound that is relatively similar to an L1 sound (because it will be perceived as more obviously "different" by the learner).

In language or hearing impairment

Research in how people with language or hearing impairment perceive speech is not only intended to discover possible treatments. It can provide insight into the principles underlying non-impaired speech perception. Two areas of research can serve as an example:

Listeners with aphasia

Aphasia affects both the expression and reception of language. Both of the two most common types, expressive aphasia and receptive aphasia, affect speech perception to some extent. Expressive aphasia causes moderate difficulties for language understanding. The effect of receptive aphasia on understanding is much more severe. It is generally agreed that aphasics suffer from perceptual deficits. They usually cannot fully distinguish place of articulation and voicing.

As for other features, the difficulties vary. It has not yet been proven whether low-level speech-perception skills are affected in aphasia sufferers or whether their difficulties are caused by higher-level impairment alone.

Listeners with cochlear implants

Cochlear implantation restores access to the acoustic signal in individuals with sensorineural hearing loss. The acoustic information conveyed by an implant is usually sufficient for implant users to properly recognize speech of people they know even without visual cues.

For cochlear implant users, it is more difficult to understand unknown speakers and sounds. The perceptual abilities of children who received an implant after the age of two are significantly better than those of people who were implanted in adulthood. A number of factors have been shown to influence perceptual performance, specifically: duration of deafness prior to implantation, age of onset of deafness, age at implantation (such age effects may be related to the Critical period hypothesis) and the duration of using an implant. There are differences between children with congenital and acquired deafness. Postlingually deaf children have better results than the prelingually deaf and adapt to a cochlear implant faster.

In both children with cochlear implants and children with normal hearing, the perception of vowels and voice onset time develops before the ability to discriminate place of articulation. Several months after implantation, children with cochlear implants can normalize speech perception.

Noise

One of the fundamental problems in the study of speech is how to deal with noise. This is shown by the difficulty that computer recognition systems have in recognizing human speech. While they can do well at recognizing speech if trained on a specific speaker's voice and under quiet conditions, these systems often do poorly in more realistic listening situations where humans would understand speech with relatively little difficulty. To emulate the processing patterns the brain shows under normal conditions, prior knowledge is a key neural factor, since a robust learning history may, to an extent, override even the extreme masking effects that arise when continuous speech signals are completely absent.
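Studies of listening in noise, whether with human listeners or recognition systems, usually control the signal-to-noise ratio (SNR) of the test material. The Python sketch below shows one common way to do this: scale the noise so that 20 * log10(rms(signal) / rms(noise)) equals the target SNR in decibels, then add it to the signal. The function names are hypothetical, and the sine tone and Gaussian noise are stand-ins for real speech and noise recordings.

```python
import math
import random

# Sketch: mix a signal with noise at a chosen signal-to-noise ratio (dB).
# The sine "speech" and Gaussian noise are stand-ins, not real recordings.

def rms(x: list[float]) -> float:
    """Root-mean-square level of a list of samples."""
    return math.sqrt(sum(v * v for v in x) / len(x))


def mix_at_snr(signal: list[float], noise: list[float], snr_db: float):
    """Scale `noise` so 20*log10(rms(signal) / rms(scaled)) == snr_db,
    then add it sample by sample. Returns (mixture, scaled_noise)."""
    if len(noise) < len(signal):
        raise ValueError("noise must be at least as long as the signal")
    noise = noise[: len(signal)]
    gain = rms(signal) / (rms(noise) * 10 ** (snr_db / 20))
    scaled = [gain * n for n in noise]
    mixture = [s + n for s, n in zip(signal, scaled)]
    return mixture, scaled


sample_rate = 16000
speech = [math.sin(2 * math.pi * 220 * n / sample_rate) for n in range(1600)]
rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in range(1600)]

mixture, scaled = mix_at_snr(speech, noise, snr_db=-5.0)
print(round(20 * math.log10(rms(speech) / rms(scaled)), 2))
```

A negative SNR, as in the example, means the noise is louder than the target, a condition in which listeners can often still follow speech.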

Music-language connection

Research into the relationship between music and cognition is an emerging field related to the study of speech perception. Originally, it was theorized that the neural signals for music were processed in a specialized "module" in the right hemisphere of the brain, whereas the neural signals for language were processed by a similar "module" in the left hemisphere.

However, using technologies such as fMRI, research has shown that two regions of the brain traditionally considered to process speech exclusively, Broca's and Wernicke's areas, also become active during musical activities such as listening to a sequence of musical chords.

Other studies, such as one performed by Marques et al. in 2006, showed that 8-year-olds who were given six months of musical training showed an increase in both their pitch detection performance and their electrophysiological measures when made to listen to an unknown foreign language.

Conversely, some research has revealed that, rather than music affecting our perception of speech, our native speech can affect our perception of music. One example is the tritone paradox.

The tritone paradox occurs when a listener is presented with two computer-generated tones (such as C and F-sharp) that are half an octave (or a tritone) apart and is then asked to determine whether the pitch of the sequence is descending or ascending. One such study, performed by Diana Deutsch, found that the listener's interpretation of ascending or descending pitch was influenced by the listener's language or dialect, showing variation between those raised in the south of England and those in California, and between those from Vietnam and those in California whose native language was English.
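The tones used in tritone-paradox studies are Shepard tones: sums of sinusoids spaced exactly an octave apart under a fixed spectral envelope, so the pitch class (C, F-sharp, and so on) is clear while the octave height is ambiguous. The Python sketch below computes the partial frequencies and amplitudes of such a tone. The lowest partial (about C1, 32.70 Hz), the number of octaves and the Gaussian envelope's center and width are assumptions for illustration, not the parameters of any particular study.

```python
import math

PITCH_CLASSES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def shepard_partials(pitch_class: str, lowest_c_hz: float = 32.70,
                     n_octaves: int = 6, center_hz: float = 260.0,
                     width_octaves: float = 1.5) -> list[tuple[float, float]]:
    """Return (frequency_hz, amplitude) pairs for a Shepard-like tone:
    octave-spaced partials weighted by a Gaussian envelope on a log2
    frequency axis. Envelope center and width are assumptions."""
    semitone = PITCH_CLASSES.index(pitch_class)
    partials = []
    for k in range(n_octaves):
        f = lowest_c_hz * 2 ** (k + semitone / 12)
        octaves_from_center = math.log2(f / center_hz)
        amp = math.exp(-0.5 * (octaves_from_center / width_octaves) ** 2)
        partials.append((f, amp))
    return partials


c_tone = shepard_partials("C")
f_sharp_tone = shepard_partials("F#")

# Each F# partial lies exactly half an octave (a factor of sqrt(2)) above its C partner.
for (fc, _), (ffs, _) in zip(c_tone, f_sharp_tone):
    print(f"{fc:8.1f} Hz  {ffs:8.1f} Hz  ratio {ffs / fc:.4f}")
```

Because both tones share the same envelope, nothing in the spectrum says whether F-sharp is "above" or "below" C, which is what leaves room for the listener's language background to tip the judgment.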

A second study, performed in 2006 on a group of English speakers and 3 groups of East Asian students at the University of Southern California, discovered that English speakers who had begun musical training at or before age 5 had an 8% chance of having perfect pitch.

Speech phenomenology

The experience of speech

Casey O'Callaghan, in his article Experiencing Speech, analyzes whether "the perceptual experience of listening to speech differs in phenomenal character" with regard to understanding the language being heard. He argues that an individual's experience when hearing a language they comprehend, as opposed to their experience when hearing a language they have no knowledge of, displays a difference in phenomenal features, which he defines as "aspects of what an experience is like" for an individual.

If a subject who is a monolingual native English speaker is presented with a stimulus of speech in German, the string of phonemes will appear as mere sounds and will produce a very different experience than if exactly the same stimulus was presented to a subject who speaks German.

He also examines how speech perception changes when one is learning a language. If a subject with no knowledge of the Japanese language were presented with a stimulus of Japanese speech, and then given the exact same stimulus after being taught Japanese, this same individual would have an extremely different experience.

Research methods

The methods used in speech perception research can be roughly divided into three groups: behavioral, computational, and, more recently, neurophysiological methods.

Behavioral methods

Behavioral experiments are based on an active role of a participant, i.e. subjects are presented with stimuli and asked to make conscious decisions about them. This can take the form of an identification test, a discrimination test, similarity rating, etc. These types of experiments help to provide a basic description of how listeners perceive and categorize speech sounds.
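Discrimination results are often summarized with the signal-detection sensitivity index d', which separates true sensitivity from a listener's bias toward answering "different". The Python sketch below computes d' = z(hit rate) - z(false-alarm rate) with the standard library's `statistics.NormalDist`. It treats the task as a simple yes/no design (same-different designs strictly call for other decision models), applies a log-linear correction so that perfect scores stay finite, and uses invented response counts.

```python
from statistics import NormalDist

# Sketch of the sensitivity index d' for a discrimination test, treating
# "different" responses as a simple yes/no design. Same-different designs
# strictly call for other decision models; the counts below are invented.

def d_prime(hits: int, misses: int, false_alarms: int, correct_rejections: int) -> float:
    """d' = z(hit rate) - z(false-alarm rate), with a log-linear correction
    (add 0.5 to each count, 1 to each total) so rates of 0 or 1 stay finite."""
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(fa_rate)


# Hypothetical counts: "different" trials give hits/misses,
# "same" trials give false alarms/correct rejections.
across_boundary = d_prime(hits=46, misses=4, false_alarms=5, correct_rejections=45)
within_category = d_prime(hits=28, misses=22, false_alarms=22, correct_rejections=28)
print(round(across_boundary, 2), round(within_category, 2))
```

In a categorical-perception study, a much larger d' for pairs straddling the category boundary than for within-category pairs is the pattern the blue curve in Figure 4 summarizes.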

Sinewave Speech

Speech perception has also been analyzed through sinewave speech, a form of synthetic speech where the human voice is replaced by sine waves that mimic the frequencies and amplitudes present in the original speech. When subjects are first presented with this speech, the sinewave speech is interpreted as random noises. But when the subjects are informed that the stimuli are actually speech and are told what is being said, "a distinctive, nearly immediate shift occurs" in how the sinewave speech is perceived.
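The construction of sinewave speech can be sketched in Python: each formant track is replaced by a single sinusoid that follows the track's frequency and amplitude over time. In this sketch, values are held constant within each 10 ms frame (real implementations typically interpolate), the phase is accumulated so that frequency changes do not cause discontinuities, and the three formant-like tracks are invented rather than measured from a recording.

```python
import math

# Sketch of sinewave-speech synthesis: one sinusoid per formant track.
# Frequencies and amplitudes are held constant within each frame (real
# implementations typically interpolate); the tracks below are made up.

def sinewave_speech(freq_tracks, amp_tracks, frame_rate=100, sample_rate=16000):
    """freq_tracks/amp_tracks: one list of per-frame values per track
    (Hz and linear amplitude). Returns a list of float samples."""
    samples_per_frame = sample_rate // frame_rate
    n_frames = len(freq_tracks[0])
    out = [0.0] * (n_frames * samples_per_frame)
    for freqs, amps in zip(freq_tracks, amp_tracks):
        phase = 0.0  # accumulated phase keeps each sinusoid continuous across frames
        for frame in range(n_frames):
            step = 2 * math.pi * freqs[frame] / sample_rate
            for i in range(samples_per_frame):
                out[frame * samples_per_frame + i] += amps[frame] * math.sin(phase)
                phase += step
    return out


# Three hypothetical formant-like tracks over 10 frames (100 ms in total).
f1 = [300 + 40 * k for k in range(10)]
f2 = [2200 - 60 * k for k in range(10)]
f3 = [2900] * 10
amps = [[0.5] * 10, [0.3] * 10, [0.1] * 10]

samples = sinewave_speech([f1, f2, f3], amps)
print(len(samples), max(abs(s) for s in samples) <= 0.9)
```

The result contains no harmonics, no voicing and no noise bursts, only three gliding whistles, which is why listeners can at first hear it as non-speech.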

Computational methods

Computational modeling has also been used to simulate how speech may be processed by the brain to produce behaviors that are observed. Computer models have been used to address several questions in speech perception, including how the sound signal itself is processed to extract the acoustic cues used in speech, and how speech information is used for higher-level processes, such as word recognition.

Neurophysiological methods

Neurophysiological methods rely on utilizing information stemming from more direct and not necessarily conscious (pre-attentive) processes. Subjects are presented with speech stimuli in different types of tasks and the responses of the brain are measured. The brain itself can be more sensitive than it appears to be through behavioral responses. For example, the subject may not show sensitivity to the difference between two speech sounds in a discrimination test, but brain responses may reveal sensitivity to these differences. Methods used to measure neural responses to speech include event-related potentials, magnetoencephalography, and near infrared spectroscopy. One important response used with event-related potentials is the mismatch negativity, which occurs when speech stimuli are acoustically different from a stimulus that the subject heard previously.
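Mismatch negativity studies typically use an oddball design: a frequent "standard" sound is interrupted now and then by a rare "deviant", and the MMN is sought in the difference between the averaged responses to deviants and standards. The Python sketch below generates such a sequence and computes the deviant-minus-standard difference wave. The 15% deviant probability, the rule forbidding two deviants in a row, and the three-sample toy epochs are illustrative assumptions, not a specific published protocol.

```python
import random

# Sketch of an oddball design and the deviant-minus-standard difference
# wave in which the mismatch negativity (MMN) is sought. The deviant
# probability, the no-repeat rule and the toy epochs are assumptions.

def oddball_sequence(n_trials: int, p_deviant: float = 0.15, seed: int = 0) -> list[str]:
    """Return 'S' (standard) / 'D' (deviant) labels, never two 'D' in a row.
    The no-repeat rule lowers the realized deviant rate to about
    p_deviant / (1 + p_deviant)."""
    rng = random.Random(seed)
    seq: list[str] = []
    for _ in range(n_trials):
        if seq and seq[-1] == "D":
            seq.append("S")
        else:
            seq.append("D" if rng.random() < p_deviant else "S")
    return seq


def difference_wave(epochs: list[list[float]], labels: list[str]) -> list[float]:
    """Average the epochs of each condition, then subtract standard from deviant."""
    def average(condition: str) -> list[float]:
        chosen = [e for e, lab in zip(epochs, labels) if lab == condition]
        return [sum(points) / len(chosen) for points in zip(*chosen)]
    return [d - s for d, s in zip(average("D"), average("S"))]


sequence = oddball_sequence(1000)
print(sequence.count("D"), "deviants;", "DD" in "".join(sequence))

# Toy epochs (microvolts at three time points) for two standards and two deviants.
toy_epochs = [[0.0, 0.0, 0.0], [0.0, -1.0, -2.0], [0.0, 0.0, 0.0], [0.0, -3.0, -2.0]]
print(difference_wave(toy_epochs, ["S", "D", "S", "D"]))  # [0.0, -2.0, -2.0]
```

Because the difference wave is computed without asking the listener to respond, the same design can be used with inattentive adults or with infants.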

Neurophysiological methods were introduced into speech perception research for several reasons:

Behavioral responses may reflect late, conscious processes and be affected by other systems such as orthography, and thus they may mask the listener's ability to recognize sounds based on lower-level acoustic distributions.

Without the necessity of taking an active part in the test, even infants can be tested; this feature is crucial in research into acquisition processes. The possibility of observing low-level auditory processes independently from higher-level ones makes it possible to address long-standing theoretical issues, such as whether or not humans possess a specialized module for perceiving speech, or whether or not some complex acoustic invariance (see lack of invariance above) underlies the recognition of a speech sound.

Theories

Motor theory

Some of the earliest work in the study of how humans perceive speech sounds was conducted by Alvin Liberman and his colleagues at Haskins Laboratories. Using a speech synthesizer, they constructed speech sounds that varied in place of articulation along a continuum from /bɑ/ to /dɑ/ to /ɡɑ/.

Listeners were asked to identify which sound they heard and to discriminate between two different sounds. The results of the experiment showed that listeners grouped sounds into discrete categories, even though the sounds they were hearing were varying continuously. Based on these results, they proposed the notion of categorical perception as a mechanism by which humans can identify speech sounds.
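
Categorical identification results of this kind are often summarized by an identification function that stays near 0 or 1 within each category and changes steeply at the category boundary. The sketch below models a hypothetical seven-step continuum from /bɑ/ to /dɑ/ with a logistic curve; the function names, the boundary, and the slope are invented for illustration and are not taken from the Haskins experiments.

```python
import math
from typing import List


def identification_curve(n_steps: int = 7, boundary: float = 4.0, slope: float = 3.0) -> List[float]:
    """Probability of a '/dɑ/' response at each step of a hypothetical continuum.

    Steps are numbered 1..n_steps; a larger slope gives a sharper, more
    categorical boundary. Parameter values are illustrative only.
    """
    return [
        1.0 / (1.0 + math.exp(-slope * (step - boundary)))
        for step in range(1, n_steps + 1)
    ]


def labels(curve: List[float]) -> List[str]:
    """Assign each step the more likely response (ties go to '/dɑ/')."""
    return ["/dɑ/" if p >= 0.5 else "/bɑ/" for p in curve]


curve = identification_curve()
for step, (p, label) in enumerate(zip(curve, labels(curve)), start=1):
    print(f"step {step}: P(/dɑ/) = {p:.3f} -> {label}")
```

The acoustic steps are equal, yet the responses jump abruptly around step 4, which is the "discrete categories despite continuous variation" pattern. The curve says nothing about discrimination within a category, which is what the later work mentioned below addresses.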

More recent research using different tasks and methods suggests that listeners are highly sensitive to acoustic differences within a single phonetic category, contrary to a strict categorical account of speech perception.

To provide a theoretical account of the categorical perception data, Liberman and colleagues worked out the motor theory of speech perception, in which "the complicated articulatory encoding was assumed to be decoded in the perception of speech by the same processes that are involved in production" (this is referred to as analysis-by-synthesis). For instance, the English consonant /d/ may vary in its acoustic details across different phonetic contexts (see above), yet listeners perceive all of these /d/ tokens as members of a single category (voiced alveolar plosive), because "linguistic representations are abstract, canonical, phonetic segments or the gestures that underlie these segments".

In describing the units of perception, Liberman later abandoned articulatory movements in favor of the neural commands to the articulators, and later still moved to intended articulatory gestures; thus "the neural representation of the utterance that determines the speaker's production is the distal object the listener perceives". The theory is closely related to the modularity hypothesis, which proposes a special-purpose module that is supposed to be innate and probably human-specific.

The theory has been criticized for not being able to "provide an account of just how acoustic signals are translated into intended gestures" by listeners. Furthermore, it is unclear how indexical information (e.g., talker identity) is encoded and decoded along with linguistically relevant information.

Exemplar theory

Exemplar models of speech perception differ from the four theories mentioned above, which suppose that there is no connection between word recognition and talker recognition and that the variation across talkers is "noise" to be filtered out.

Exemplar-based approaches claim that listeners store information for both word and talker recognition. According to this theory, particular instances of speech sounds are stored in the listener's memory. During speech perception, the remembered instances of, for example, a syllable are compared with the incoming stimulus so that the stimulus can be categorized. Similarly, when a talker is recognized, all the memory traces of utterances produced by that talker are activated and the talker's identity is determined.
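
The exemplar account can be sketched as summed-similarity classification: every stored token keeps its acoustic detail together with both a word label and a talker label, and the same memory is queried for either kind of recognition. This is a generic sketch, not Johnson's implementation; the exponential similarity function, the `sensitivity` parameter, and the two-formant (F1, F2) token values are assumptions chosen for illustration.

```python
import math
from collections import defaultdict
from typing import Dict, List, NamedTuple, Tuple


class Exemplar(NamedTuple):
    features: Tuple[float, float]  # hypothetical (F1, F2) values in Hz
    word: str
    talker: str


def similarity(x: Tuple[float, float], y: Tuple[float, float], sensitivity: float = 0.01) -> float:
    """Similarity decays exponentially with Euclidean distance (assumed form)."""
    return math.exp(-sensitivity * math.dist(x, y))


def categorize(stimulus: Tuple[float, float], memory: List[Exemplar], by: str = "word",
               sensitivity: float = 0.01) -> str:
    """Return the label ('word' or 'talker') whose stored tokens best match the stimulus."""
    activation: Dict[str, float] = defaultdict(float)
    for exemplar in memory:
        # Every stored token is activated in proportion to its similarity.
        activation[getattr(exemplar, by)] += similarity(stimulus, exemplar.features, sensitivity)
    return max(activation, key=activation.get)


MEMORY = [
    Exemplar((300, 2300), "heed", "talker_A"),
    Exemplar((320, 2250), "heed", "talker_A"),
    Exemplar((370, 2700), "heed", "talker_B"),
    Exemplar((750, 1100), "hod", "talker_A"),
    Exemplar((850, 1250), "hod", "talker_B"),
]

print(categorize((360, 2650), MEMORY, by="word"))    # -> heed
print(categorize((360, 2650), MEMORY, by="talker"))  # -> talker_B
```

Because talker labels are stored with every token, this model needs no separate stage for discarding talker variation; the same traces serve both word and talker recognition.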

Supporting this theory are several experiments reported by Johnson that suggest that our signal identification is more accurate when we are familiar with the talker or when we have a visual representation of the talker's gender. When the talker is unpredictable or the talker's sex is misidentified, the error rate in word identification is much higher.

The exemplar models face several objections, two of which are (1) insufficient memory capacity to store every utterance ever heard and, concerning the ability to produce what was heard, (2) whether the talker's own articulatory gestures are also stored, or are instead computed, when producing utterances that would sound like the auditory memories.

Acoustic landmarks and distinctive features

Kenneth N. Stevens proposed acoustic landmarks and distinctive features as a relation between phonological features and auditory properties. According to this view, listeners inspect the incoming signal for so-called acoustic landmarks, which are particular events in the spectrum carrying information about the gestures that produced them. Since these gestures are limited by the capacities of human articulators, and listeners are sensitive to their auditory correlates, the lack of invariance simply does not exist in this model. The acoustic properties of the landmarks constitute the basis for establishing the distinctive features. Bundles of these features uniquely specify phonetic segments (phonemes, syllables, words).

In this model, the incoming acoustic signal is believed to be processed first to determine the so-called landmarks, which are special spectral events in the signal. For example, vowels are typically marked by a higher frequency of the first formant, while consonants can be specified as discontinuities in the signal and have lower amplitudes in the low and middle regions of the spectrum. These acoustic features result from articulation. In fact, secondary articulatory movements may be used to enhance the landmarks when external conditions such as noise require it. Stevens claims that coarticulation causes only limited, systematic, and therefore predictable variation in the signal, which the listener is able to deal with. Within this model, therefore, the lack of invariance is simply claimed not to exist.
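
The landmark-detection stage can be illustrated with a toy detector that works on per-frame measurements. Everything concrete here is an assumption: the input is a hypothetical low/mid-band energy contour in dB plus an F1 track in Hz, frame-to-frame energy jumps of at least `jump_db` are treated as consonantal landmarks, and local F1 maxima are treated as vowel landmarks. Stevens's own landmark detection is considerably richer than this.

```python
from typing import List, Sequence, Tuple


def find_landmarks(energy_db: Sequence[float], f1_hz: Sequence[float],
                   jump_db: float = 9.0) -> List[Tuple[int, str]]:
    """Return (frame_index, kind) pairs from hypothetical per-frame measurements."""
    landmarks: List[Tuple[int, str]] = []
    # Consonantal landmarks: abrupt falls (closure) or rises (release) in band energy.
    for i in range(1, len(energy_db)):
        delta = energy_db[i] - energy_db[i - 1]
        if abs(delta) >= jump_db:
            landmarks.append((i, "consonant_release" if delta > 0 else "consonant_closure"))
    # Vowel landmarks: local maxima of the first formant.
    for i in range(1, len(f1_hz) - 1):
        if f1_hz[i - 1] < f1_hz[i] >= f1_hz[i + 1]:
            landmarks.append((i, "vowel"))
    return sorted(landmarks)


# A made-up vowel-consonant-vowel stretch: energy drops during the closure.
energy = [60, 61, 60, 40, 40, 62, 61, 60]
f1 = [500, 650, 700, 600, 300, 550, 700, 650]
print(find_landmarks(energy, f1))
```

Cue extraction in the model would then take measurements in the vicinity of each returned frame index rather than across the whole signal.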

Landmarks are analyzed to determine certain articulatory events (gestures) that are connected with them. In the next stage, acoustic cues are extracted from the signal in the vicinity of the landmarks by mentally measuring certain parameters, such as the frequencies of spectral peaks, amplitudes in the low-frequency region, or timing.

The next processing stage comprises the consolidation of acoustic cues and the derivation of distinctive features. These are binary categories related to articulation (for example [+/- high], [+/- back], [+/- round lips] for vowels; [+/- sonorant], [+/- lateral], or [+/- nasal] for consonants).
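
Cue consolidation can be sketched as thresholding measured cues into binary features and looking the resulting bundle up in an inventory. The sketch covers only [high] and [back] for vowels and relies on two well-known acoustic correlates: high vowels have a low first formant, and back vowels have a low second formant. The threshold values, the function names, and the four-vowel inventory are hypothetical, and [round] is omitted because it is not separately cued here.

```python
from typing import Dict, Tuple

# Hypothetical inventory: ([high], [back]) bundle -> vowel
VOWELS: Dict[Tuple[bool, bool], str] = {
    (True, False): "i",
    (True, True): "u",
    (False, False): "æ",
    (False, True): "ɑ",
}


def vowel_features(f1_hz: float, f2_hz: float,
                   high_max_f1: float = 450.0, back_max_f2: float = 1500.0) -> Dict[str, bool]:
    """Turn two continuous cues into binary features (thresholds are assumptions)."""
    return {
        "high": f1_hz < high_max_f1,  # low F1 cues a raised tongue body
        "back": f2_hz < back_max_f2,  # low F2 cues a retracted tongue body
    }


def identify_vowel(f1_hz: float, f2_hz: float) -> str:
    """Look up the feature bundle derived from the cues in the inventory."""
    features = vowel_features(f1_hz, f2_hz)
    return VOWELS[(features["high"], features["back"])]


print(identify_vowel(300, 2300), identify_vowel(750, 1100))
```

Fixed thresholds are the crudest possible consolidation; talkers differ in their formant ranges, so a practical system would need more than a single cut-off per feature.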

Bundles of these features uniquely identify speech segments (phonemes, syllables, words). These segments are part of the lexicon stored in the listener's memory. Its units are activated in the process of lexical access and mapped onto the original signal to determine whether they match. If not, another attempt is made with a different candidate pattern. In this iterative fashion, listeners reconstruct the articulatory events that were necessary to produce the perceived speech signal. This process can therefore be described as analysis-by-synthesis.
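
The iterative lexical matching can be sketched as a loop over candidate words: each candidate's stored segments predict a sequence of feature bundles, that prediction is compared with the bundles observed in the signal, and the next candidate is tried when they disagree. Here "synthesis" only means predicting a feature pattern, not generating audio. The segment definitions, the four-word lexicon, the function names, and the mismatch `tolerance` are all hypothetical; the features are a subset of those listed above.

```python
from typing import Dict, List, Optional

FeatureBundle = Dict[str, bool]

# Hypothetical segment definitions using features named in the text.
SEGMENTS: Dict[str, FeatureBundle] = {
    "b": {"sonorant": False, "nasal": False, "lateral": False},
    "m": {"sonorant": True, "nasal": True, "lateral": False},
    "l": {"sonorant": True, "nasal": False, "lateral": True},
    "i": {"high": True, "back": False, "round": False},
    "u": {"high": True, "back": True, "round": True},
}

# Hypothetical lexicon: word -> segments it is stored as.
LEXICON: Dict[str, List[str]] = {"bee": ["b", "i"], "me": ["m", "i"], "lee": ["l", "i"], "moo": ["m", "u"]}


def mismatches(predicted: List[FeatureBundle], observed: List[FeatureBundle]) -> int:
    """Count predicted feature values that the observed bundles do not show."""
    return sum(
        1
        for pred, obs in zip(predicted, observed)
        for feature, value in pred.items()
        if obs.get(feature) != value
    )


def analysis_by_synthesis(observed: List[FeatureBundle], tolerance: int = 0) -> Optional[str]:
    """Try candidates one at a time; return the first whose prediction fits."""
    for word, segments in LEXICON.items():
        predicted = [SEGMENTS[s] for s in segments]  # expected pattern for this candidate
        if len(predicted) == len(observed) and mismatches(predicted, observed) <= tolerance:
            return word
    return None  # no candidate matched


print(analysis_by_synthesis([SEGMENTS["m"], SEGMENTS["u"]]))  # -> moo
```

Raising `tolerance` lets a degraded observation (for example, one where a feature could not be measured) still settle on the closest stored candidate, at the cost of possibly accepting the wrong word if the candidates are tried in an unlucky order.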

This theory thus posits that the distal objects of speech perception are the articulatory gestures underlying speech. Listeners make sense of the speech signal by referring to them.

Fuzzy-logical model

The fuzzy logical theory of speech perception developed by Dominic Massaro proposes that people remember speech sounds in a probabilistic, or graded, way. It suggests that people remember descriptions of the perceptual units of language, called prototypes. Within each prototype, various features may combine. However, features are not just binary (true or false); instead, there is a fuzzy value corresponding to how likely it is that a sound belongs to a particular speech category. Thus, when perceiving a speech signal, our decision about what we actually hear is based on the relative goodness of the match between the stimulus information and the values of particular prototypes. The final decision is based on multiple features or sources of information, including visual information; this is how the model accounts for the McGurk effect.
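
In Massaro's formulation, each source of information contributes a fuzzy truth value for each response alternative; the values are integrated multiplicatively, and the decision follows a relative goodness rule: an alternative's combined support divided by the summed support of all alternatives. The sketch below follows that scheme. The function name and all truth values are hypothetical, chosen only to show how an auditory /ba/ paired with a visual /ga/ can yield a /da/ response.

```python
from math import prod
from typing import Dict, List


def flmp(truth_values: Dict[str, List[float]]) -> Dict[str, float]:
    """Fuzzy-logical integration and decision.

    truth_values maps each response alternative to its fuzzy truth values
    (0..1), one per information source (e.g. auditory, visual).
    """
    support = {alt: prod(values) for alt, values in truth_values.items()}  # integration
    total = sum(support.values())
    return {alt: s / total for alt, s in support.items()}  # relative goodness rule


# Hypothetical values: the audio favors /ba/, the face favors /ga/,
# and /da/ is moderately consistent with both.
responses = flmp({
    "ba": [0.6, 0.05],   # [auditory, visual]
    "da": [0.4, 0.5],
    "ga": [0.05, 0.6],
})
print(max(responses, key=responses.get))  # -> da
```

With two alternatives and two sources, this reduces to the familiar form P = a*v / (a*v + (1 - a)*(1 - v)), where a and v are the auditory and visual truth values for one alternative.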

Computer models of the fuzzy logical theory have been used to demonstrate that the theory's predictions of how speech sounds are categorized correspond to the behavior of human listeners.

Speech mode hypothesis

The speech mode hypothesis is the idea that the perception of speech requires the use of specialized mental processing. It is an offshoot of Fodor's modularity theory (see modularity of mind). It posits a vertical processing mechanism in which a limited class of stimuli is processed by special-purpose, stimulus-specific areas of the brain.

There are two versions of the speech mode hypothesis:

- Weak version – listening to speech engages previous knowledge of language.

- Strong version – listening to speech engages specialized speech mechanisms for perceiving speech.

Three important experimental paradigms have evolved in the search to find evidence for the speech mode hypothesis. These are dichotic listening, categorical perception, and duplex perception.

Research using these paradigms has suggested that there may not be a specific speech mode, but instead one for auditory codes that require complex auditory processing. It also seems that modularity is learned in perceptual systems. Despite this, the evidence and counter-evidence for the speech mode hypothesis remain unclear and need further research.

Direct realist theory

The direct realist theory of speech perception (mostly associated with Carol Fowler) is a part of the more general theory of direct realism, which postulates that perception allows us to have direct awareness of the world because it involves direct recovery of the distal source of the event that is perceived. For speech perception, the theory asserts that the objects of perception are actual vocal tract movements, or gestures, and not abstract phonemes or (as in the Motor Theory) events that are causally antecedent to these movements, i.e. intended gestures. Listeners perceive gestures not by means of a specialized decoder (as in the Motor Theory) but because information in the acoustic signal specifies the gestures that form it.

By claiming that the actual articulatory gestures that produce different speech sounds are themselves the units of speech perception, the theory bypasses the problem of lack of invariance.