Whole brain emulation (

WBE) or

mind uploading (sometimes called

"mind copying" or "

mind transfer") is the hypothetical process of copying mental content (including long-term memory and "self") from a particular brain substrate to a computational device, such as a digital, analog, quantum-based or software-based

artificial neural network. The computational device could then run a

simulation model of the brain's information processing, such that it responds in essentially the same way as the original brain (i.e., indistinguishable from the brain for all relevant purposes) and experiences having a

conscious mind.

[1][2][3]

Mind uploading might be accomplished by either of two methods: copy-and-transfer or gradual replacement of neurons. In the case of the former method, mind uploading would be achieved by

scanning and

mapping the salient features of a biological brain, and then by copying, transferring, and storing that information state into a computer system or another computational device. The simulated mind could be within a

virtual reality or

simulated world, supported by an anatomic 3D body simulation model. Alternatively, the simulated mind could reside in a computer that's inside (or connected to) a

humanoid robot or a biological body.

[4]

Among some futurists and within the

transhumanist movement, mind uploading is treated as an important proposed

life extension technology. Another aim of mind uploading is to provide a permanent backup of our "mind-file", and a means for functional copies of human minds to survive a global disaster or interstellar space travel. Whole brain emulation is discussed by some

futurists as a "logical endpoint"

[4] of the topical

computational neuroscience and

neuroinformatics fields, both about

brain simulation for medical research purposes. It is discussed in

artificial intelligence research publications as an approach to

strong AI. Computer-based intelligence such as an upload could think much faster than a biological human even if it were no more intelligent. A large-scale society of uploads might, according to futurists, give rise to a

technological singularity, meaning a sudden decrease in the time constant of the exponential development of technology.

[5] Mind uploading is a central conceptual feature of

numerous science fiction novels and films.

Substantial mainstream research in related areas is being conducted in animal

brain mapping and simulation, development of faster

supercomputers,

virtual reality,

brain-computer interfaces,

connectomics and information extraction from dynamically functioning brains.

[6] According to supporters, many of the tools and ideas needed to achieve mind uploading already exist or are currently under active development; however, they acknowledge that others remain very speculative, though still within the realm of engineering possibility. Neuroscientist

Randal Koene has formed a nonprofit organization called Carbon Copies to promote mind uploading research.

Overview

The human

brain contains about 86 billion nerve cells called

neurons, each individually linked to other neurons by way of connectors called

axons and

dendrites. Signals at the junctures (

synapses) of these connections are transmitted by the release and

detection of chemicals known as

neurotransmitters. The established neuroscientific consensus is that the human

mind is largely an

emergent property of the information processing of this

neural network.

Neuroscientists have stated that important functions performed by the mind, such as learning, memory, and consciousness, are due to purely physical and electrochemical processes in the brain and are governed by applicable laws. For example,

Christof Koch and

Giulio Tononi wrote in

IEEE Spectrum:

"Consciousness is part of the natural world. It depends, we believe, only on mathematics and logic and on the imperfectly known laws of physics, chemistry, and biology; it does not arise from some magical or otherworldly quality."[7]

The concept of mind uploading is based on this

mechanistic view of the mind, and denies the

vitalist view of human life and consciousness.

Eminent computer scientists and neuroscientists have predicted that specially programmed computers will be capable of thought and even attain consciousness, including Koch and Tononi,

[7] Douglas Hofstadter,

[8] Jeff Hawkins,

[8] Marvin Minsky,

[9] Randal A. Koene,

[10] and

Rodolfo Llinas.

[11]

Such a

machine intelligence capability might provide a computational substrate necessary for uploading.

However, even though uploading is dependent upon such a general capability, it is conceptually distinct from general forms of AI in that it results from dynamic reanimation of information derived from a specific human mind so that the mind retains a sense of historical identity (other forms are possible but would compromise or eliminate the life-extension feature generally associated with uploading). The transferred and reanimated information would become a form of

artificial intelligence, sometimes called an

infomorph or

"noömorph."

Many theorists have presented models of the brain and have established a range of estimates of the amount of computing power needed for partial and complete simulations.

[4][citation needed] Using these models, some have estimated that uploading may become possible within decades if trends such as

Moore's Law continue.

[12]

Theoretical benefits

"Immortality"/backup

In theory, if the information and processes of the mind can be disassociated from the biological body, they are no longer tied to the individual limits and lifespan of that body. Furthermore, information within a brain could be partly or wholly copied or transferred to one or more other substrates (including digital storage or another brain), thereby, from a purely mechanistic perspective, reducing or eliminating the "mortality risk" of such information. This general proposal appears to have been first made in the biomedical literature in 1971 by

biogerontologist George M. Martin of the

University of Washington.

[13]

Speedup

If

Moore's law holds, within a few decades a supercomputer might be able to simulate a human brain at the neural level at a faster perceived speed than a biological brain. However, the exact date is difficult to estimate due to limited understanding of the required accuracy, and computational speed is not the only requirement for making full human brain simulation possible. Several contradictory predictions

[citation needed] have been made about when a whole human brain can be emulated; for example, 2045 has been suggested by

Ray Kurzweil; some of the predicted dates have already passed.

Given that the electrochemical signals that brains use to achieve thought travel at about 150 meters per second, while electronic signals in computers can approach the speed of light (three hundred million meters per second), a massively parallel electronic counterpart of a human biological brain might in theory be able to think thousands to millions of times faster than our naturally evolved systems.

[14] Also, neurons can generate a maximum of about 200 to 1000

action potentials or "spikes" per second, whereas the

clock speed of microprocessors reached 5.5 GHz in 2013,

[15] which is about five million times faster.
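The ratios quoted above follow from simple division. The sketch below reproduces them from the figures given in the text; the constant and variable names are illustrative assumptions. It also shows that the "five million" figure corresponds to the upper end of the quoted firing-rate range, while the lower end gives a larger ratio.

```python
# Figures quoted in the text; names are illustrative, not from any source.
NEURAL_SIGNAL_SPEED_M_S = 150.0      # electrochemical signals in the brain
LIGHT_SPEED_M_S = 3.0e8              # upper bound for electronic signals
MAX_SPIKE_RATE_HZ = (200.0, 1000.0)  # range of maximum neuronal firing rates
CLOCK_RATE_HZ = 5.5e9                # microprocessor clock speed cited for 2013

# Ratio of signal propagation speeds.
signal_speedup = LIGHT_SPEED_M_S / NEURAL_SIGNAL_SPEED_M_S

# Ratio of clock rate to firing rate at both ends of the quoted range.
clock_vs_fastest_neuron = CLOCK_RATE_HZ / MAX_SPIKE_RATE_HZ[1]
clock_vs_slowest_neuron = CLOCK_RATE_HZ / MAX_SPIKE_RATE_HZ[0]

print(f"signal speed ratio:            {signal_speedup:.2e}")
print(f"clock rate / 1000 spikes per s: {clock_vs_fastest_neuron:.2e}")
print(f"clock rate / 200 spikes per s:  {clock_vs_slowest_neuron:.2e}")
```

These are ratios of raw signal and switching rates only; they say nothing about how many clock cycles an emulated neuron would need per spike.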

However, the human brain contains roughly eighty-six billion neurons with eighty-six trillion synapses connecting them.

[16] Replicating each of these as separate electronic components using

microchip-based semiconductor technology would require a computer that is enormous in comparison with today's supercomputers. In a less futuristic implementation,

time-sharing would allow several neurons to be emulated sequentially by the same computational unit. Thus the size of the computer would be restricted, but the speedup would be lower. Assuming that

cortical minicolumns organized into

hypercolumns are the computational units, mammal brains can be emulated by today's

supercomputers, but at a slower speed than a biological brain.

[17]

Relevant technologies and techniques

The focus of mind uploading, in the case of copy-and-transfer, is on data acquisition, rather than data maintenance of the brain. A set of approaches known as Loosely-Coupled Off-Loading (LCOL) may be used in the attempt to characterize and copy the mental contents of a brain.

[18] The LCOL approach may take advantage of self-reports, life-logs and video recordings that can be analyzed by artificial intelligence. A bottom-up approach may focus on the specific resolution and morphology of neurons and on their spike times, that is, the times at which neurons produce action potential responses.

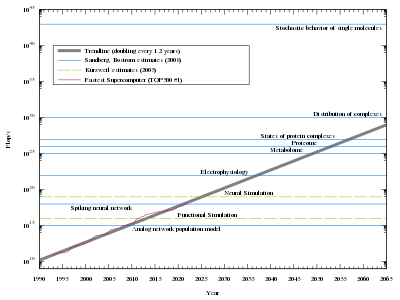

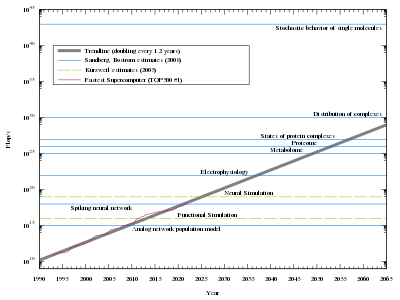

Computational complexity

Estimates of how much processing power is needed to emulate a human brain at various levels (from

Ray Kurzweil and the chart to the left), along with the fastest supercomputer from

TOP500 mapped by year. Note the logarithmic scale and exponential trendline, which assumes the computational capacity doubles every 1.1 years. Kurzweil believes that mind uploading will be possible at the level of neural simulation, while the Sandberg and Bostrom report is less certain about where

consciousness arises.

[4]

Advocates of mind uploading point to

Moore's law to support the notion that the necessary computing power is expected to become available within a few decades. However, the actual computational requirements for running an uploaded human mind are very difficult to quantify, potentially rendering such an argument specious.

Regardless of the techniques used to capture or recreate the function of a human mind, the processing demands are likely to be immense, due to the large number of neurons in the human brain along with the considerable complexity of each neuron.

In 2004,

Henry Markram, lead researcher of the "

Blue Brain Project", stated that "it is not [their] goal to build an intelligent neural network", based solely on the computational demands such a project would have.

[19]

It will be very difficult because, in the brain, every molecule is a powerful computer and we would need to simulate the structure and function of trillions upon trillions of molecules as well as all the rules that govern how they interact. You would literally need computers that are trillions of times bigger and faster than anything existing today.[20]

Five years later, after the successful simulation of part of a rat brain, Markram was much bolder and more optimistic. In 2009, when he was director of the

Blue Brain Project, he claimed that

A detailed, functional artificial human brain can be built within the next 10 years [21]

The required computational capacity depends strongly on the chosen level of simulation model scale:

[4]

| Level | CPU demand (FLOPS) | Memory demand (Tb) | $1 million supercomputer (earliest year of making) |
|---|---|---|---|
| Analog network population model | 10^15 | 10^2 | 2008 |
| Spiking neural network | 10^18 | 10^4 | 2019 |
| Electrophysiology | 10^22 | 10^4 | 2033 |
| Metabolome | 10^25 | 10^6 | 2044 |
| Proteome | 10^26 | 10^7 | 2048 |
| States of protein complexes | 10^27 | 10^8 | 2052 |
| Distribution of complexes | 10^30 | 10^9 | 2063 |
| Stochastic behavior of single molecules | 10^43 | 10^14 | 2111 |

Estimates from Sandberg, Bostrom, 2008
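The years in the table are roughly what a simple exponential trend would give. The sketch below is an illustrative extrapolation, not the method used by Sandberg and Bostrom: it reads the FLOPS column as powers of ten, takes the first row (10^15 FLOPS in 2008) as a baseline, and assumes the 1.1-year doubling time quoted in the figure caption also applies to $1 million machines. The function and constant names are hypothetical.

```python
import math

BASE_YEAR = 2008           # first table row: 10^15 FLOPS for about $1 million
BASE_FLOPS = 1e15
DOUBLING_TIME_YEARS = 1.1  # from the figure caption; assumed to apply here

def earliest_year(flops_required: float) -> float:
    """Year at which the assumed exponential trend reaches flops_required."""
    doublings = math.log2(flops_required / BASE_FLOPS)
    return BASE_YEAR + doublings * DOUBLING_TIME_YEARS

# (level, FLOPS demand, year given in the table)
TABLE = [
    ("Spiking neural network", 1e18, 2019),
    ("Electrophysiology", 1e22, 2033),
    ("Metabolome", 1e25, 2044),
    ("Proteome", 1e26, 2048),
    ("States of protein complexes", 1e27, 2052),
    ("Distribution of complexes", 1e30, 2063),
    ("Stochastic behavior of single molecules", 1e43, 2111),
]

for level, flops, table_year in TABLE:
    print(f"{level}: extrapolated {earliest_year(flops):.1f}, table {table_year}")
```

Under these assumptions each extrapolated year falls within one year of the table value, which suggests the table's dates rest on a similar constant-doubling assumption rather than on independent forecasts.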

Simulation model scale

A high-level cognitive AI model of the brain architecture is not required for brain emulation

Simple neuron model: Black-box dynamic non-linear signal processing system

Metabolism model: The movement of positively-charged ions through the ion channels controls the membrane electrical

action potential in an axon.

Since the function of the human

mind, and how it might arise from the working of the brain's

neural network, are poorly understood issues, mind uploading relies on the idea of neural network

emulation. Rather than having to understand the high-level psychological processes and large-scale structures of the brain, and model them using classical

artificial intelligence methods and

cognitive psychology models, the low-level structure of the underlying neural network is captured, mapped and emulated with a computer system. In computer science terminology,

rather than analyzing and

reverse engineering the behavior of the algorithms and data structures that reside in the brain, a blueprint of its source code is translated to another programming language. The human mind and the personal identity are then, theoretically, generated by the emulated neural network in the same way as they are generated by the biological neural network.

On the other hand, a molecule-scale simulation of the brain is not expected to be required, provided that the functioning of the neurons is not affected by

quantum mechanical processes. The neural network emulation approach only requires that the functioning and interaction of

neurons and

synapses are understood. It is expected to be sufficient to use a

black-box signal processing model of how the neurons respond to

nerve impulses (

electrical as well as

chemical synaptic transmission).

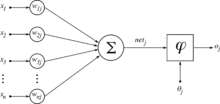

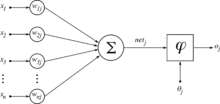

A sufficiently complex and accurate model of the neurons is required. A traditional

artificial neural network model, for example a

multi-layer perceptron network model, is not considered sufficient. A dynamic

spiking neural network model is required, which reflects that the neuron fires only when a membrane potential reaches a certain level. It is likely that the model must include delays, non-linear functions and differential equations describing the relation between electrophysical parameters such as electrical currents, voltages, membrane states (

ion channel states) and

neuromodulators.
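As a rough illustration of the threshold behavior described above, the following sketch implements a leaky integrate-and-fire neuron, one of the simplest spiking models. It is chosen here for brevity and is not the model used by any project mentioned in this article. All parameter values (membrane time constant, resistance, threshold, input currents) are hypothetical, and the sketch omits delays, ion-channel states and neuromodulators.

```python
def simulate_lif(input_current, dt=1e-4, tau=0.02, v_rest=-0.065,
                 v_threshold=-0.050, v_reset=-0.065, resistance=1e7):
    """Integrate dV/dt = (-(V - v_rest) + R*I) / tau with forward Euler.

    input_current: sequence of currents in amperes, one per time step.
    Returns the list of spike times in seconds.
    """
    v = v_rest
    spike_times = []
    for step, current in enumerate(input_current):
        dv_dt = (-(v - v_rest) + resistance * current) / tau
        v += dv_dt * dt
        if v >= v_threshold:      # the neuron fires only at threshold
            spike_times.append(step * dt)
            v = v_reset           # membrane potential resets after a spike
    return spike_times

STEPS = 2000  # 0.2 s of simulated time at dt = 0.1 ms
strong_spikes = simulate_lif([2e-9] * STEPS)  # drives V above threshold
weak_spikes = simulate_lif([1e-9] * STEPS)    # settles below threshold

print("spikes with strong input:", len(strong_spikes))
print("spikes with weak input:  ", len(weak_spikes))
```

The weak input never produces a spike because the membrane potential settles below the threshold, which is the qualitative behavior a multi-layer perceptron unit does not capture.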

Since learning and

long-term memory are believed to result from strengthening or weakening the synapses via a mechanism known as

synaptic plasticity or synaptic adaptation, the model should include this mechanism. The response of

sensory receptors to various stimuli must also be modelled.
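One common textbook formalization of synaptic plasticity is pair-based spike-timing-dependent plasticity (STDP), in which a synapse is strengthened when the presynaptic spike precedes the postsynaptic one and weakened otherwise. The sketch below is an assumption introduced for illustration; the article does not say which plasticity rule an emulation would need, and the function name and all constants are hypothetical.

```python
import math

def stdp_update(weight, t_pre, t_post, a_plus=0.01, a_minus=0.012,
                tau=0.02, w_max=1.0):
    """Return the new synaptic weight after one pre/post spike pair.

    t_pre, t_post: spike times in seconds.
    """
    delta_t = t_post - t_pre
    if delta_t > 0:
        # Pre before post: strengthen, more so for closely timed spikes.
        change = a_plus * math.exp(-delta_t / tau)
    elif delta_t < 0:
        # Post before pre: weaken.
        change = -a_minus * math.exp(delta_t / tau)
    else:
        change = 0.0
    # Keep the weight within its allowed range.
    return min(w_max, max(0.0, weight + change))

strengthened = stdp_update(0.5, t_pre=0.010, t_post=0.015)
weakened = stdp_update(0.5, t_pre=0.015, t_post=0.010)
print(strengthened, weakened)
```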

Furthermore, the model may have to include

metabolism, i.e. how the neurons are affected by

hormones and other chemical substances that may cross the blood–brain barrier. It is considered likely that the model must include currently unknown

neuromodulators,

neurotransmitters and

ion channels. It is considered unlikely that the simulation model has to include

protein interaction, which would make it computationally complex.

[4]

A digital computer simulation model of an analog system such as the brain is an approximation that introduces random

quantization errors and

distortion. However, the biological neurons also suffer from randomness and limited precision, for example due to

background noise. The errors of the discrete model can be made smaller than the randomness of the biological brain by choosing a sufficiently high variable resolution and sample rate, and sufficiently accurate models of non-linearities. The

computational power and computer memory must however be sufficient to run such large simulations, preferably in

real time.
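The claim that discretization error can be pushed below biological noise can be illustrated numerically. The sketch below quantizes a test signal at several bit depths and compares the resulting error with a noise level; the noise standard deviation, the test signal and the function names are all hypothetical choices, not measured properties of neurons.

```python
import math
import random

def quantize(value, bits, full_scale=1.0):
    """Round value to the nearest level of a uniform quantizer."""
    step = 2 * full_scale / (2 ** bits)
    return round(value / step) * step

def rms(values):
    return math.sqrt(sum(v * v for v in values) / len(values))

random.seed(0)
NOISE_STD = 0.01  # hypothetical background-noise level, in signal units

# A sine wave stands in for an analog quantity such as membrane voltage.
signal = [0.9 * math.sin(2 * math.pi * k / 200) for k in range(2000)]
noise_rms = rms([random.gauss(0.0, NOISE_STD) for _ in signal])

errors = {}
for bits in (4, 8, 12, 16):
    errors[bits] = rms([quantize(s, bits) - s for s in signal])
    verdict = "below" if errors[bits] < noise_rms else "above"
    print(f"{bits:2d} bits: error {errors[bits]:.2e} ({verdict} the noise level)")
```

In this toy setting the quantization error drops below the assumed noise level once the resolution is high enough, at the cost of more memory per stored variable.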

Scanning and mapping scale of an individual

When modelling and simulating the brain of a specific individual, a brain map or connectivity database showing the connections between the neurons must be extracted from an anatomic model of the brain. For whole brain simulation, this network map should show the connectivity of the whole

nervous system, including the

spinal cord,

sensory receptors, and

muscle cells. Destructive scanning of a small sample of tissue from a mouse brain including synaptic details is possible as of 2010.

[22]

However, if

short-term memory and

working memory include prolonged or repeated firing of neurons, as well as intra-neural dynamic processes, the electrical and chemical signal state of the synapses and neurons may be hard to extract. The uploaded mind may then perceive a

memory loss of the events and mental processes immediately before the time of brain scanning.

[4]

A full brain map has been estimated to occupy less than 2 × 10^16 bytes (20,000 TB) and would store the addresses of the connected neurons, the synapse type and the synapse "weight" for each of the brain's 10^15 synapses.

[4][not in citation given] However, the biological complexities of true brain function (e.g. the epigenetic states of neurons, protein components with multiple functional states, etc.) may preclude an accurate prediction of the volume of binary data required to faithfully represent a functioning human mind.
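The storage estimate above implies a fixed budget per synapse, which the following sketch works out. The total size and synapse count are the figures quoted in the text; the neuron count of 86 billion is the figure given earlier in the article, and using it to size a neuron address is an illustrative assumption rather than part of the cited estimate.

```python
import math

MAP_BYTES = 2e16       # upper estimate for a full brain map
SYNAPSES = 1e15        # synapse count used in the estimate
NEURONS = 86e9         # neuron count quoted earlier in the article
BYTES_PER_TB = 1e12

bytes_per_synapse = MAP_BYTES / SYNAPSES
map_terabytes = MAP_BYTES / BYTES_PER_TB

# Bits needed to give every neuron a unique address (assumption: flat addressing).
address_bits = math.ceil(math.log2(NEURONS))

print(f"bytes available per synapse: {bytes_per_synapse:.0f}")
print(f"total size: {map_terabytes:,.0f} TB")
print(f"bits per neuron address: {address_bits}")
```

On these figures a target-neuron address takes about 5 of the 20 bytes available per synapse, leaving the remainder for the synapse type and weight.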

Serial sectioning

Serial sectioning of a brain

A possible method for mind uploading is serial sectioning, in which the brain tissue and perhaps other parts of the nervous system are frozen and then scanned and analyzed layer by layer, which for frozen samples at nano-scale requires a cryo-

ultramicrotome, thus capturing the structure of the neurons and their interconnections.

[23] The exposed surface of frozen nerve tissue would be scanned and recorded, and then the surface layer of tissue removed. While this would be a very slow and labor-intensive process, research is currently underway to automate the collection and microscopy of serial sections.

[24] The scans would then be analyzed, and a model of the neural net recreated in the system that the mind was being uploaded into.

There are uncertainties with this approach using current microscopy techniques. If it is possible to replicate neuron function from its visible structure alone, then the resolution afforded by a

scanning electron microscope would suffice for such a technique.

[24] However, as the function of brain tissue is partially determined by molecular events (particularly at

synapses, but also at

other places on the neuron's cell

membrane), this may not suffice for capturing and simulating neuron functions. It may be possible to extend the techniques of serial sectioning and to capture the internal molecular makeup of neurons, through the use of sophisticated

immunohistochemistry staining methods that could then be read via

confocal laser scanning microscopy. However, as the physiological genesis of 'mind' is not currently known, this method may not be able to access all of the necessary biochemical information to recreate a human brain with sufficient fidelity.

Brain imaging

Process from MRI acquisition to whole brain structural network [25]

It may also be possible to create functional 3D maps of the brain activity, using advanced

neuroimaging technology, such as

functional MRI (fMRI, for mapping change in blood flow),

magnetoencephalography (MEG, for mapping of electrical currents), or combinations of multiple methods, to build a detailed three-dimensional model of the brain using non-invasive and non-destructive methods. Today, fMRI is often combined with MEG for creating functional maps of human cortex during more complex cognitive tasks, as the methods complement each other. Even though current imaging technology lacks the spatial resolution required to gather the information needed for such a scan, important recent and future developments are predicted to substantially improve both spatial and temporal resolutions of existing technologies.

[26]

Current research

Several animal brains have been mapped and at least partly simulated.

Simulation of C. elegans roundworm neural system

The connectivity of the neural circuit for touch sensitivity of the simple

C. elegans nematode (roundworm) was mapped in 1985,

[27] and partly simulated in 1993.

[28] Several software simulation models of the complete neural and muscular system, and to some extent the worm's physical environment, have been presented since 2004, and are in some cases available for downloading.

[29][30] However, we still lack understanding of how the neurons and the connections between them generate the surprisingly complex range of behaviors that are observed in this relatively simple organism.

[31]

The

OpenWorm Project, an open-source project dedicated to creating a virtual C. elegans nematode in a computer by reverse-engineering its biology, has developed software that replicates the worm's muscle movement.

[32]

Simulation of Drosophila fruit fly neural system

The brain of the fruit fly

Drosophila has also been thoroughly studied, and to some extent simulated.

[33] The

Drosophila connectome, a complete list of the neurons and connections of the brain of

Drosophila, is likely to be available in the near future.

Rodent brain simulation

An

artificial neural network described as being "as big and as complex as half of a mouse brain" was run on an IBM

Blue Gene supercomputer by a University of Nevada research team in 2007. A simulated time of one second took ten seconds of computer time. The researchers said they had seen "biologically consistent" nerve impulses flowing through the virtual cortex. However, the simulation lacked the structures seen in real mouse brains, and the researchers intended to improve the accuracy of the neuron model.

[34]

Blue Brain is a project, launched in May 2005 by

IBM and the

Swiss Federal Institute of Technology in

Lausanne, with the aim of creating a

computer simulation of a mammalian cortical column, down to the molecular level.

[35] The project uses a

supercomputer based on IBM's

Blue Gene design to simulate the electrical behavior of neurons based upon their synaptic connectivity and complement of intrinsic membrane currents. The initial goal of the project, completed in December 2006,

[36] was the simulation of a rat

neocortical column, which can be considered the smallest functional unit of the

neocortex (the part of the brain thought to be responsible for higher functions such as conscious thought), containing 10,000 neurons (and 10^8 synapses). Between 1995 and 2005,

Henry Markram mapped the types of neurons and their connections in such a column. In November 2007,

[37] the project reported the end of the first phase, delivering a data-driven process for creating, validating, and researching the neocortical column. The project seeks to eventually reveal aspects of human cognition and various psychiatric disorders caused by malfunctioning neurons, such as

autism, and to understand how pharmacological agents affect network behavior.

An organization called the

Brain Preservation Foundation was founded in 2010 and is offering a Brain Preservation Technology prize to promote exploration of brain preservation technology in service of humanity. The Prize, currently $106,000, will be awarded in two parts, 25% to the first international team to preserve a whole mouse brain, and 75% to the first team to preserve a whole large animal brain in a manner that could also be adopted for humans in a hospital or hospice setting immediately upon clinical death. Ultimately the goal of this prize is to generate a whole brain map which may be used in support of separate efforts to upload and possibly 'reboot' a mind in virtual space.

Issues

Possibility of strong artificial intelligence

The concept and possibility of mind uploading may be subject to many of the same criticisms as

functionalism and

strong artificial intelligence.

Several of the better-known objections are the

Chinese Room argument (advanced by

John Searle) and the

knowledge argument.

Thomas Nagel has also advanced several criticisms of the philosophical basis of this concept, possibly most famously in his article

What is it like to be a bat?

According to Searle, accomplishing true strong AI isn't simply a matter of getting "better" or "faster" computers or software; rather, anything equivalent to a

Turing machine (or, equivalently,

lambda calculus) is intrinsically incapable of producing this type of result. He gives the example of

Deep Blue defeating

World Chess Champion Garry Kasparov; Searle has argued that, in a sense, Deep Blue didn't actually play chess because it didn't

know that it was playing chess; all it was doing was manipulating symbols.

Others argue that even an exhaustive physical description of something doesn't necessarily reveal all facts about it. For example, according to Nagel, an exhaustive physical description of a bat wouldn't reveal what it's subjectively like to be a bat. Similarly, according to proponents of this view, exhaustive physical descriptions of the brain don't account for all properties of the mind. Thus, according to proponents of these arguments, materialist (including functionalist) explanations fail to truly explain consciousness, which would imply that merely simulating brain processes with a computer may be inadequate to generate consciousness.

Several common alternative solutions to classical materialist accounts include

property dualism,

substance dualism, and

biological naturalism.

Philosophical issues

Underlying the concept of "mind uploading" (more accurately "mind transferring") is the broad philosophy that consciousness lies within the brain's information processing and is in essence an

emergent feature that arises from large neural network high-level patterns of organization, and that the same patterns of organization can be realized in other processing devices. Mind uploading also relies on the idea that the human mind (the "self" and the long-term memory), just like animal minds, is represented by the current neural network paths and the weights of the brain synapses rather than by a

dualistic and mystic soul and spirit. The mind or "soul" can be defined as the information state of the brain, and is immaterial only in the same sense as the information content of a data file or the state of computer software currently residing in the work-space memory of the computer. Data specifying the information state of the neural network can be captured and copied as a "computer file" from the brain and re-implemented into a different physical form.

[38] This is not to deny that minds are richly adapted to their substrates.

[39] An analogy to the idea of mind uploading is to copy the temporary information state (the variable values) of a computer program from the computer memory to another computer and continue its execution. The other computer may perhaps have different hardware architecture but

emulates the hardware of the first computer.

These issues have a long history. In 1775

Thomas Reid wrote:

[40]

I would be glad to know... whether when my brain has lost its original structure, and when some hundred years after the same materials are fabricated so curiously as to become an intelligent being, whether, I say that being will be me; or, if, two or three such beings should be formed out of my brain; whether they will all be me, and consequently one and the same intelligent being.

A considerable portion of

transhumanists and

singularitarians place great hope in the belief that they may become immortal by creating one or many non-biological functional copies of their brains, thereby leaving their "biological shell". However, the philosopher and transhumanist Susan Schneider claims that uploading would instead create a creature that is a computational copy of the original person's mind.

[41] Susan Schneider agrees that consciousness has a computational basis, but this doesn't mean we can upload and survive. According to her views, "uploading" would probably result in the death of the original person's brain, while only outside observers can maintain the illusion of the original person still being alive. For it is implausible to think that one's consciousness would leave one's brain and travel to a remote location; ordinary physical objects in the macroscopic world do not behave this way.

At best, a computational duplicate of the original is created.

[42] Others have argued against such conclusions. For example, Buddhist transhumanist James Hughes has pointed out that this consideration only goes so far: if one believes the self is an illusion, worries about survival are not reasons to avoid uploading,

[43] and Keith Wiley has presented an argument wherein all resulting minds of an uploading procedure are granted equal primacy in their claim to the original identity, such that survival of the self is determined retroactively from a strictly subjective position.

[44][45]

Another potential consequence of mind uploading is that the decision to "upload" may then create a mindless symbol manipulator instead of a conscious mind (see

philosophical zombie).

[46][47] Are we to assume that an Upload is conscious if it displays behaviors that are highly indicative of consciousness? Are we to assume that an Upload is conscious if it verbally insists that it is conscious?

[48] Could there be an absolute upper limit in processing speed above which consciousness cannot be sustained? The mystery of consciousness precludes a definitive answer to this question.

[49] Numerous scientists, including Kurzweil, strongly believe that whether a separate entity is conscious cannot be determined with 100% confidence, since consciousness is inherently subjective (see

solipsism). Regardless, some scientists strongly believe consciousness is the consequence of computational processes which are independent of substrate. In contrast, numerous scientists

[citation needed] believe consciousness may be the result of some form of quantum computation dependent on substrate (see

quantum mind).

[50][51][52]

In light of uncertainty on whether to regard uploads as conscious, Sandberg proposes a cautious approach:

[53]

Principle of assuming the most (PAM): Assume that any emulated system could have the same mental properties as the original system and treat it correspondingly.

Copying vs. moving

A philosophical issue with mind uploading is whether the newly generated digital mind is really the "same" sentience, or simply an exact copy with the same memories and personality. This issue is especially obvious when the original remains essentially unchanged by the procedure, thereby resulting in a copy which could potentially have rights separate from the unaltered, obvious original.

Most projected brain scanning technologies, such as serial sectioning of the brain, would necessarily be destructive, and the original brain would not survive the brain scanning procedure. But if it can be kept intact, the computer-based consciousness could be a copy of the still-living biological person. It is in that case implicit that copying a consciousness could be as feasible as literally moving it into one or several copies, since these technologies generally involve simulation of a human brain in a computer of some sort, and digital files such as computer programs can be copied precisely. It is assumed that once the versions are exposed to different sensory inputs, their experiences would begin to diverge, but all their memories up until the moment of the copying would remain the same.

The problem is made even more apparent through the possibility of creating a potentially infinite number of initially identical copies of the original person, which would of course all exist simultaneously as distinct beings with their own emotions and thoughts. The most parsimonious view of this phenomenon is that the two (or more) minds would share memories of their past but from the point of duplication would simply be distinct minds.

Toward the goal of resolving the copy-vs-move debate, some have argued for a third way of conceptualizing the process, which is described by such terms as split[45] and divergence. The distinguishing feature of this third terminological option is that while moving implies that a single instance relocates in space and while copying invokes problematic connotations (a copy is often denigrated in status relative to its original), the notion of a split better illustrates that some kinds of entities might become two separate instances, but without the imbalanced associations assigned to originals and copies, and that such equality may apply to minds.

Depending on computational capacity, the simulation's subjective time may run faster or slower than elapsed physical time. The simulated mind would then perceive the physical world as running in slow motion or fast motion, respectively, while biological persons would see the simulated mind in fast or slow motion, respectively.
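The relationship between subjective and physical time can be sketched as a simple ratio. The following is a minimal illustration, not a model from the cited sources: the `speedup` parameter (simulated seconds per physical second) and both function names are assumptions introduced here.

```python
def subjective_seconds(physical_seconds: float, speedup: float) -> float:
    """Subjective time the emulation experiences during a span of physical time.

    `speedup` is a hypothetical ratio: simulated seconds per physical second.
    """
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return physical_seconds * speedup


def apparent_world_rate(speedup: float) -> float:
    """Rate at which the physical world appears to run from inside the emulation.

    A value below 1 means the world looks like slow motion to the emulation;
    a value above 1 means it looks like fast motion.
    """
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return 1.0 / speedup


# An emulation running at twice real time experiences two hours per physical
# hour and sees the physical world at half speed.
hours_experienced = subjective_seconds(3600.0, 2.0) / 3600.0
world_rate = apparent_world_rate(2.0)
```

Under this sketch, an observer outside the simulation sees the reverse: the emulation appears sped up by the same factor by which the world appears slowed down to it.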

A brain simulation can be started, paused, backed up and rerun from a saved backup state at any time. When rerun from a backup, the simulated mind would have forgotten everything that happened after the instant of backup, and might not even be aware that it is repeating itself. An older version of a simulated mind may meet a younger version and share experiences with it.
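The backup-and-rerun behavior is the same as checkpointing in any stateful program. The toy sketch below illustrates only that idea; the `ToySimulation` class, its fields, and the `restore` helper are hypothetical names introduced here, and a list of strings stands in for the state of a real emulation.

```python
import copy
from dataclasses import dataclass, field
from typing import List


@dataclass
class ToySimulation:
    """Hypothetical stand-in for an emulation: state is just a list of events."""
    memories: List[str] = field(default_factory=list)
    running: bool = False

    def start(self) -> None:
        self.running = True

    def pause(self) -> None:
        self.running = False

    def step(self, event: str) -> None:
        # A paused simulation cannot accumulate new experience.
        if not self.running:
            raise RuntimeError("simulation is paused")
        self.memories.append(event)

    def backup(self) -> "ToySimulation":
        # A deep copy, so later steps do not alter the saved state.
        return copy.deepcopy(self)


def restore(snapshot: ToySimulation) -> ToySimulation:
    """Return a fresh instance resumed from a saved state."""
    return copy.deepcopy(snapshot)


sim = ToySimulation()
sim.start()
sim.step("breakfast")
saved = sim.backup()      # state at the instant of backup
sim.step("lunch")         # happens after the backup

rerun = restore(saved)    # remembers "breakfast" but not "lunch"
```

Here `sim` plays the older version and `rerun` the younger one: both hold the same memories up to the backup, and only `sim` holds what came after.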

One proposed route for mind uploading is gradual transfer of functions from an "aging biological brain"[3] into an exocortex.

Ethical and legal implications

The process of developing emulation technology raises ethical issues related to animal welfare and artificial consciousness.[53] The neuroscience required to develop brain emulation would require animal experimentation, first on invertebrates and then on small mammals before moving on to humans. Sometimes the animals would just need to be euthanized in order to extract, slice, and scan their brains, but sometimes behavioral and in vivo measures would be required, which might cause pain to living animals.[53]

In addition, the resulting animal emulations themselves might suffer, depending on one's views about consciousness.[53] Bancroft argues for the plausibility of consciousness in brain simulations on the basis of the "fading qualia" thought experiment of David Chalmers. He then concludes:[54]

If, as I argue above, a sufficiently detailed computational simulation of the brain is potentially operationally equivalent to an organic brain, it follows that we must consider extending protections against suffering to simulations.

It might help reduce emulation suffering to develop virtual equivalents of anaesthesia, as well as to omit processing related to pain and/or consciousness. However, some experiments might require a fully functioning and suffering animal emulation. Animals might also suffer by accident due to flaws and lack of insight into what parts of their brains are suffering.[53] Questions also arise regarding the moral status of partial brain emulations, as well as creating neuromorphic emulations that draw inspiration from biological brains but are built somewhat differently.[54]

Brain emulations could be erased by computer viruses or malware, without any need to destroy the underlying hardware. This may make assassination easier than for physical humans. The attacker might also take the computing power for its own use.[55]

Many questions arise regarding the legal personhood of emulations.[56] Would they be given the rights of biological humans? If a person makes an emulated copy of himself and then dies, does the emulation inherit his property and official positions? Could the emulation ask to "pull the plug" when its biological version was terminally ill or in a coma? Would it help to treat emulations as adolescents for a few years so that the biological creator would maintain temporary control? Would criminal emulations receive the death penalty, or would they be given forced data modification as a form of "rehabilitation"? Could an upload have marriage and child-care rights?[56]

If simulated minds became a reality and were assigned rights of their own, it might be difficult to ensure the protection of "digital human rights". For example, social science researchers might be tempted to secretly expose simulated minds, or whole isolated societies of simulated minds, to controlled experiments in which many copies of the same minds are exposed (serially or simultaneously) to different test conditions.[citation needed]

Political and economic implications

The only limited physical resource to be expected in a simulated world is computational capacity, and thus the speed and complexity of the simulation. Wealthy or privileged individuals in a society of uploads might thus experience more subjective time than others in the same real time, or may be able to run multiple copies of themselves or others, and thus provide more services and become even wealthier. Others may suffer from computational resource starvation and exhibit slow-motion behavior. Eventually, the most powerful beings may attempt to eliminate all other beings in competition for resources.[citation needed]

Emulations could create a number of conditions that might increase the risk of war, including inequality, changes of power dynamics, a possible technological arms race to build emulations first, first-strike advantages, strong loyalty and willingness to "die" among emulations, and triggers for racist, xenophobic, and religious prejudice.[55] If emulations run much faster than humans, there might not be enough time for human leaders to make wise decisions or negotiate. It is possible that humans would react violently against the growing power of emulations, especially if they depress human wages. Alternatively, emulations might not trust each other, and even well-intentioned defensive measures might be interpreted as offense.[55]

Emulation timelines and AI risk

There are very few feasible technologies that humans have refrained from developing. The neuroscience and computer-hardware technologies that may make brain emulation possible are widely desired for other reasons, so cutting off funding doesn't seem to be an option. If we assume that emulation technology will arrive, a question becomes whether we should accelerate or slow its advance.[55]

Arguments for speeding up brain-emulation research:

- If neuroscience is the bottleneck on brain emulation rather than computing power, emulation advances may be more erratic and unpredictable based on when new scientific discoveries happen.[55][57][58] Limited computing power would mean the first emulations would run slower and so would be easier to adapt to, and there would be more time for the technology to transition through society.[58]

- Improvements in manufacturing, 3D printing, and nanotechnology may accelerate hardware production,[55] which could increase the "computing overhang"[59] from excess hardware relative to neuroscience.

- If one AI-development group had a lead in emulation technology, it would have more subjective time to win an arms race to build the first superhuman AI. Because it would be less rushed, it would have more freedom to consider AI risks.[60][61]

Arguments for slowing down brain-emulation research:

- Greater investment in brain emulation and associated cognitive science might enhance the ability of artificial intelligence (AI) researchers to create "neuromorphic" (brain-inspired) algorithms, such as neural networks, reinforcement learning, and hierarchical perception. This could accelerate risks from uncontrolled AI.[55][61] Participants at a 2011 AI workshop estimated an 85% probability that neuromorphic AI would arrive before brain emulation. This was based on the idea that brain emulation would require understanding some brain components, and it would be easier to tinker with these than to reconstruct the entire brain in its original form. By a very narrow margin, the participants on balance leaned toward the view that accelerating brain emulation would increase expected AI risk.[60]

- Waiting might give society more time to think about the consequences of brain emulation and develop institutions to improve cooperation.[55][61]

Emulation research would also speed up neuroscience as a whole, which might accelerate medical advances, cognitive enhancement, lie detectors, and capability for psychological manipulation.[61]

Emulations might be easier to control than de novo AI because:

- We better understand human abilities, behavioral tendencies, and vulnerabilities, so control measures might be more intuitive and easier to plan for.[60][61]

- Emulations could more easily inherit human motivations.[61]

- Emulations are harder to manipulate than de novo AI, because brains are messy and complicated; this could reduce risks of their rapid takeoff.[55][61] Also, emulations may be bulkier and require more hardware than AI, which would also slow the speed of a transition.[61] Unlike AI, an emulation wouldn't be able to rapidly expand beyond the size of a human brain.[61] Emulations running at digital speeds would have less intelligence differential vis-à-vis AI and so might more easily control AI.[61]

As counterpoint to these considerations, Bostrom notes some downsides:

- Even if we better understand human behavior, the evolution of emulation behavior under self-improvement might be much less predictable than the evolution of safe de novo AI under self-improvement.[61]

- Emulations may not inherit all human motivations. Perhaps they would inherit our darker motivations or would behave abnormally in the unfamiliar environment of cyberspace.[61]

- Even if there's a slow takeoff toward emulations, there would still be a second transition to de novo AI later on. Two intelligence explosions may mean more total risk.[61]

Mind uploading advocates

Ray Kurzweil, director of engineering at Google, has predicted that people will be able to "upload" their entire brains to computers and become "digitally immortal" by 2045. Kurzweil has made this claim for many years, for example during his 2013 speech at the Global Futures 2045 International Congress in New York, which claims to subscribe to a similar set of beliefs.[62][63][64]

Mind uploading is also advocated by a number of researchers in neuroscience and artificial intelligence, such as Marvin Minsky. In 1993, Joe Strout created a small web site called the Mind Uploading Home Page, and began advocating the idea in cryonics circles and elsewhere on the net. That site has not been actively updated in recent years, but it has spawned other sites including MindUploading.org, run by Randal A. Koene, Ph.D., who also moderates a mailing list on the topic. These advocates see mind uploading as a medical procedure which could eventually save countless lives.

Many transhumanists look forward to the development and deployment of mind uploading technology, with transhumanists such as Nick Bostrom predicting that it will become possible within the 21st century due to technological trends such as Moore's Law.[4]

The book Beyond Humanity: CyberEvolution and Future Minds by Gregory S. Paul and Earl D. Cox is about the eventual (and, to the authors, almost inevitable) evolution of computers into sentient beings, but also deals with human mind transfer.

Richard Doyle's Wetwares: Experiments in PostVital Living deals extensively with uploading from the perspective of distributed embodiment, arguing for example that humans are currently part of the "artificial life phenotype." Doyle's vision reverses the polarity on uploading, with artificial life forms such as uploads actively seeking out biological embodiment as part of their reproductive strategy.

Raymond Kurzweil, a prominent advocate of transhumanism and the likelihood of a technological singularity, has suggested that the easiest path to human-level artificial intelligence may lie in "reverse-engineering the human brain". He usually uses this phrase to refer to the creation of a new intelligence based on the general cognitive process of the brain, but he sometimes also uses it to refer to the notion of uploading individual human minds based on highly detailed scans and simulations. This idea is discussed on pp. 198–203 of his book The Singularity is Near, for example.