From Wikipedia, the free encyclopedia

Thorium-based nuclear power generation is fueled primarily by the nuclear fission of the isotope uranium-233 produced from the fertile element thorium. According to proponents, a thorium fuel cycle offers several potential advantages over a uranium fuel cycle, including much greater abundance of thorium on Earth, superior physical and nuclear fuel properties, and reduced nuclear waste production. However, development of thorium power has significant start-up costs. Proponents also cite the lack of weaponization potential as an advantage of thorium, while critics say that development of breeder reactors in general (including thorium reactors, which are breeders by nature) increases proliferation concerns.

Since about 2008, nuclear energy experts have become more interested in thorium to supply nuclear fuel in place of uranium to generate nuclear power. This renewed interest has been highlighted in a number of scientific conferences, the latest of which, ThEC13, was held at CERN by iThEC and attracted over 200 scientists from 32 countries.

A nuclear reactor consumes certain specific fissile isotopes to produce energy. The three most practical types of nuclear reactor fuel are:

- Uranium-235, purified (i.e. "enriched") by reducing the amount of uranium-238 in natural mined uranium. Most nuclear power has been generated using low-enriched uranium (LEU), whereas high-enriched uranium (HEU) is necessary for weapons.

- Plutonium-239, transmuted from uranium-238 obtained from natural mined uranium.

- Uranium-233, transmuted from thorium-232, derived from natural mined thorium, which is the subject of this article.
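In the thorium route, uranium-233 is bred rather than mined: thorium-232 captures a neutron to become thorium-233, which beta-decays to protactinium-233, which in turn beta-decays to uranium-233. As a rough illustration of why this conversion takes weeks to months, the Python sketch below estimates how much of a batch has converted after a given time. It assumes the commonly cited half-lives of about 21.8 minutes for Th-233 and about 27 days for Pa-233 (neither figure appears in this article) and ignores neutron capture on Pa-233; the function names are hypothetical.

```python
import math

# Assumed half-lives (commonly cited values; not given in this article).
TH233_HALF_LIFE_DAYS = 21.83 / (60 * 24)  # Th-233: about 21.8 minutes, in days
PA233_HALF_LIFE_DAYS = 26.97              # Pa-233: about 27 days


def fraction_decayed(t_days: float, half_life_days: float) -> float:
    """Fraction of an initial population of nuclei that has decayed after t_days."""
    return 1.0 - math.exp(-math.log(2) * t_days / half_life_days)


def pa233_converted_to_u233(t_days: float) -> float:
    """Fraction of a Pa-233 batch that has become U-233 after t_days.

    Assumes the Pa-233 captures no further neutrons (for example, because it
    has been taken out of the neutron flux) and that its only fate is beta decay.
    """
    return fraction_decayed(t_days, PA233_HALF_LIFE_DAYS)


if __name__ == "__main__":
    # Th-233 is essentially gone within a day, so Pa-233 decay sets the pace.
    print(f"Th-233 decayed after 1 day: {fraction_decayed(1, TH233_HALF_LIFE_DAYS):.4f}")
    for days in (27, 90, 180):
        print(f"Pa-233 -> U-233 after {days} days: {pa233_converted_to_u233(days):.1%}")
```

Because the Pa-233 step is roughly a thousand times slower than the Th-233 step, it dominates the time needed to accumulate U-233.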

Some believe thorium is key to developing a new generation of cleaner, safer nuclear power. According to a 2011 opinion piece by a group of scientists at the Georgia Institute of Technology, considering its overall potential, thorium-based power "can mean a 1000+ year solution or a quality low-carbon bridge to truly sustainable energy sources solving a huge portion of mankind’s negative environmental impact."

After studying the feasibility of using thorium, nuclear scientists Ralph W. Moir and Edward Teller suggested that thorium nuclear research should be restarted after a three-decade shutdown and that a small prototype plant should be built.

Background and brief history

After World War II, uranium-based nuclear reactors were built to

produce electricity. These were similar to the reactor designs that

produced material for nuclear weapons. During that period, the

government of the United States also built

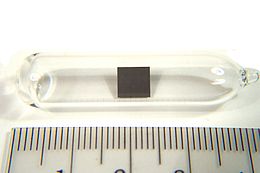

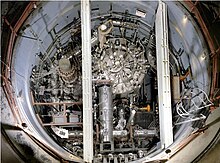

an experimental molten salt reactor using U-233 fuel, the fissile material created by bombarding thorium with neutrons. The MSRE reactor, built at

Oak Ridge National Laboratory, operated

critical for roughly 15,000 hours from 1965 to 1969. In 1968, Nobel laureate and discoverer of

plutonium,

Glenn Seaborg, publicly announced to the

Atomic Energy Commission, of which he was chairman, that the thorium-based reactor had been successfully developed and tested.

In 1973, however, the US government settled on uranium technology and largely discontinued thorium-related nuclear research. The reasons were that uranium-fueled reactors were more efficient, the research was proven, and thorium's breeding ratio was thought insufficient to produce enough fuel to support development of a commercial nuclear industry.

As Moir and Teller later wrote, "The competition came down to a liquid metal fast breeder reactor (LMFBR) on the uranium-plutonium cycle and a thermal reactor on the thorium-233U cycle, the molten salt breeder reactor. The LMFBR had a larger breeding rate ... and won the competition." In their opinion, the decision to stop development of thorium reactors, at least as a backup option, “was an excusable mistake.”

Science writer Richard Martin states that nuclear physicist Alvin Weinberg, who was director at Oak Ridge and primarily responsible for the new reactor, lost his job as director because he championed development of the safer thorium reactors. Weinberg himself recalls this period:

[Congressman] Chet Holifield was clearly exasperated with me, and he finally blurted out, "Alvin, if you are concerned about the safety of reactors, then I think it may be time for you to leave nuclear energy." I was speechless. But it was apparent to me that my style, my attitude, and my perception of the future were no longer in tune with the powers within the AEC.

Martin explains that Weinberg's unwillingness to sacrifice potentially safe nuclear power for the benefit of military uses forced him to retire:

Weinberg realized that you could use thorium in an entirely new kind of reactor, one that would have zero risk of meltdown. . . . his team built a working reactor . . . . and he spent the rest of his 18-year tenure trying to make thorium the heart of the nation’s atomic power effort. He failed. Uranium reactors had already been established, and Hyman Rickover, de facto head of the US nuclear program, wanted the plutonium from uranium-powered nuclear plants to make bombs. Increasingly shunted aside, Weinberg was finally forced out in 1973.

Despite the documented history of thorium nuclear power, many of today’s nuclear experts were nonetheless unaware of it. According to Chemical & Engineering News, "most people—including scientists—have hardly heard of the heavy-metal element and know little about it...," noting a comment by a conference attendee that "it's possible to have a Ph.D. in nuclear reactor technology and not know about thorium energy." Nuclear physicist Victor J. Stenger, for one, first learned of it in 2012:

It came as a surprise to me to learn recently that such an alternative has been available to us since World War II, but not pursued because it lacked weapons applications.

Others, including former NASA scientist and thorium expert Kirk Sorensen, agree that "thorium was the alternative path that was not taken … "

In a documentary interview, Sorensen stated that if the US had not discontinued its research in 1974, it could have "probably achieved energy independence by around 2000."

Possible benefits

The thorium fuel cycle offers enormous long-term energy security benefits due to its potential for being a self-sustaining fuel without the need for fast neutron reactors. It is therefore an important and potentially viable technology that seems able to contribute to building credible, long-term nuclear energy scenarios.

Moir and Teller agree, noting that the possible advantages of thorium include "utilization of an abundant fuel, inaccessibility of that fuel to terrorists or for diversion to weapons use, together with good economics and safety features … "

Thorium is considered the "most abundant, most readily available, cleanest, and safest energy source on Earth," adds science writer Richard Martin.

- Thorium is three times as abundant as uranium and nearly as abundant as lead and gallium in the Earth's crust. The Thorium Energy Alliance estimates "there is enough thorium in the United States alone to power the country at its current energy level for over 1,000 years." "America has buried tons as a by-product of rare earth metals mining," notes Evans-Pritchard. Almost all thorium is fertile Th-232, whereas natural uranium is composed of 99.3% fertile U-238 and only 0.7% of the more valuable fissile U-235.

- It is difficult to make a practical nuclear bomb from a thorium reactor's byproducts. According to Alvin Radkowsky, designer of the world's first full-scale atomic electric power plant, "a thorium reactor's plutonium production rate would be less than 2 percent of that of a standard reactor, and the plutonium's isotopic content would make it unsuitable for a nuclear detonation." Several uranium-233 bombs have been tested, but the presence of uranium-232 tended to "poison" the uranium-233 in two ways: intense radiation from the uranium-232 made the material difficult to handle, and the uranium-232 led to possible pre-detonation. Separating the uranium-232 from the uranium-233 proved very difficult, although newer laser techniques could facilitate that process.

- There is much less nuclear waste (up to two orders of magnitude less, state Moir and Teller), eliminating the need for large-scale or long-term storage; "Chinese scientists claim that hazardous waste will be a thousand times less than with uranium." The radioactivity of the resulting waste also drops to safe levels after just one or a few hundred years, compared to the tens of thousands of years needed for current nuclear waste to cool off.

- According to Moir and Teller, "once started up [it] needs no other fuel except thorium because it makes most or all of its own fuel." This applies only to breeder reactors, which produce at least as much fissile material as they consume. Other reactors require additional fissile material, such as uranium-235 or plutonium.

- The thorium fuel cycle is a potential way to produce long-term nuclear energy with low-radiotoxicity waste. In addition, the transition to thorium could be done through the incineration of weapons-grade plutonium (WPu) or civilian plutonium.

- Since all natural thorium can be used as fuel, no expensive fuel enrichment is needed. However, the same is true for U-238 as fertile fuel in the uranium-plutonium cycle.

- Comparing the amount of thorium needed with coal, Nobel laureate Carlo Rubbia of CERN (European Organization for Nuclear Research) estimates that one ton of thorium can produce as much energy as 200 tons of uranium, or 3,500,000 tons of coal.

- Liquid fluoride thorium reactors are designed to be meltdown-proof. A plug at the bottom of the reactor melts in the event of a power failure or if temperatures exceed a set limit, draining the fuel into an underground tank for safe storage.

- Mining thorium is safer and more efficient than mining uranium. Thorium's ore, monazite, generally contains higher concentrations of thorium than the percentage of uranium found in its respective ore. This makes thorium a more cost-efficient and less environmentally damaging fuel source. Thorium mining is also easier and less dangerous than uranium mining, as the mine is an open pit that requires no ventilation, unlike underground uranium mines, where radon levels can be potentially harmful.
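Rubbia's energy equivalence in the list above can be turned into simple conversion ratios. The Python sketch below uses only his quoted figures (1 ton of thorium ≈ 200 tons of uranium ≈ 3,500,000 tons of coal) to derive the implied coal equivalent of a ton of uranium (17,500 tons) and how much thorium or uranium would replace a given tonnage of coal. The results inherit whatever assumptions lie behind Rubbia's estimate; the helper names and the one-billion-ton example are hypothetical.

```python
# Figures quoted in the list above (Rubbia's estimate); not measured values.
COAL_TONS_PER_TON_THORIUM = 3_500_000.0
URANIUM_TONS_PER_TON_THORIUM = 200.0


def coal_tons_per_ton_uranium() -> float:
    """Tons of coal implied to release as much energy as one ton of uranium."""
    return COAL_TONS_PER_TON_THORIUM / URANIUM_TONS_PER_TON_THORIUM


def thorium_tons_to_replace(coal_tons: float) -> float:
    """Tons of thorium implied to replace a given tonnage of coal."""
    return coal_tons / COAL_TONS_PER_TON_THORIUM


def uranium_tons_to_replace(coal_tons: float) -> float:
    """Tons of uranium implied to replace a given tonnage of coal."""
    return coal_tons / coal_tons_per_ton_uranium()


if __name__ == "__main__":
    print(f"Coal per ton of uranium: {coal_tons_per_ton_uranium():,.0f} t")
    # Hypothetical example: one billion tons of coal.
    coal = 1_000_000_000
    print(f"Thorium to replace {coal:,} t of coal: {thorium_tons_to_replace(coal):,.1f} t")
    print(f"Uranium to replace {coal:,} t of coal: {uranium_tons_to_replace(coal):,.0f} t")
```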

Summarizing some of the potential benefits, Martin offers his general opinion: "Thorium could provide a clean and effectively limitless source of power while allaying all public concern—weapons proliferation, radioactive pollution, toxic waste, and fuel that is both costly and complicated to process." From an economics viewpoint, UK business editor Ambrose Evans-Pritchard has suggested that "Obama could kill fossil fuels overnight with a nuclear dash for thorium," proposing a "new Manhattan Project," and adding, "If it works, Manhattan II could restore American optimism and strategic leadership at a stroke …"

Moir and Teller estimated in 2004 that the cost for their recommended prototype would be "well under $1 billion with operation costs likely on the order of $100 million per year," and as a result a "large-scale nuclear power plan" usable by many countries could be set up within a decade.

A report by the Bellona Foundation in 2013 concluded that the economics are quite speculative. Thorium nuclear reactors are unlikely to produce cheaper energy, but the management of spent fuel is likely to be cheaper than for uranium nuclear reactors.

Possible disadvantages

Some experts note possible specific disadvantages of thorium nuclear power:

- Breeding in a thermal neutron spectrum is slow and requires extensive reprocessing. The feasibility of reprocessing is still open.

- Significant and expensive testing, analysis and licensing work is required first, which needs business and government support. In a 2012 report on the use of thorium fuel with existing water-cooled reactors, the Bulletin of the Atomic Scientists suggested that it would "require too great an investment and provide no clear payoff", and that "from the utilities’ point of view, the only legitimate driver capable of motivating pursuit of thorium is economics".

- Fuel fabrication and reprocessing cost more than in plants using traditional solid fuel rods.

- Thorium, when irradiated for use in reactors, produces uranium-232, which is very dangerous due to the gamma rays it emits. This irradiation process may be altered slightly by removing protactinium-233. The irradiation would then produce uranium-233, which can be used in nuclear weapons, in lieu of uranium-232, making thorium a dual-purpose fuel.

Thorium-based nuclear power projects

Research and development of thorium-based nuclear reactors, primarily the liquid fluoride thorium reactor (LFTR), an MSR design, has been or is now being done in the United States, United Kingdom, Germany, Brazil, India, China, France, the Czech Republic, Japan, Russia, Canada, Israel, and the Netherlands. Conferences with experts from as many as 32 countries are held, including one by the European Organization for Nuclear Research (CERN) in 2013, which focused on thorium as an alternative nuclear technology that does not require production of nuclear waste. Recognized experts, such as Hans Blix, former head of the International Atomic Energy Agency, call for expanded support of new nuclear power technology; Blix states, "the thorium option offers the world not only a new sustainable supply of fuel for nuclear power but also one that makes better use of the fuel's energy content."

Canada

CANDU reactors are capable of using thorium, and in 2013 Thorium Power Canada planned and proposed developing thorium power projects for Chile and Indonesia.

The proposed 10 MW demonstration reactor in Chile could be used to power a 20 million litre/day desalination plant. All land and regulatory approvals are currently in process.

Thorium Power Canada's proposal for the development of a 25 MW thorium reactor in Indonesia is meant to be a "demonstration power project" which could provide electrical power to the country’s power grid.

In 2018, the New Brunswick Energy Solutions Corporation announced the participation of Moltex Energy in the nuclear research cluster that will work on research and development on small modular reactor technology.

China

At the 2011 annual conference of the Chinese Academy of Sciences, it was announced that "China has initiated a research and development project in thorium MSR technology." In addition, Dr. Jiang Mianheng, son of China's former leader Jiang Zemin, led a thorium delegation in non-disclosure talks at Oak Ridge National Laboratory, Tennessee, and by late 2013 China had officially partnered with Oak Ridge to aid China in its own development. The World Nuclear Association notes that the Chinese Academy of Sciences in January 2011 announced its R&D program, "claiming to have the world's largest national effort on it, hoping to obtain full intellectual property rights on the technology."

According to Martin, "China has made clear its intention to go it alone," adding that China already has a monopoly over most of the world's rare earth minerals.

In March 2014, with China's reliance on coal-fired power having become a major cause of its current "smog crisis," the country reduced its original goal of creating a working reactor from 25 years to 10. "In the past, the government was interested in nuclear power because of the energy shortage. Now they are more interested because of smog," said Professor Li Zhong, a scientist working on the project. "This is definitely a race," he added.

In early 2012, it was reported that China, using components produced by the West and Russia, planned to build two prototype thorium MSRs by 2015, and had budgeted the project at $400 million, requiring 400 workers. China also finalized an agreement with a Canadian nuclear technology company to develop improved CANDU reactors using thorium and uranium as a fuel.

Germany, 1980s

The German THTR-300 was a prototype commercial power station using thorium as fertile and highly enriched U-235 as fissile fuel. Though named thorium high-temperature reactor, it fissioned mostly U-235. The THTR-300 was a helium-cooled high-temperature reactor with a pebble-bed reactor core consisting of approximately 670,000 spherical fuel compacts, each 6 centimetres (2.4 in) in diameter, with particles of uranium-235 and thorium-232 fuel embedded in a graphite matrix. It fed power to Germany's grid for 432 days in the late 1980s, before it was shut down for cost, mechanical and other reasons.

India

India has one of the largest supplies of thorium in the world, with comparatively small quantities of uranium. India has projected meeting as much as 30% of its electrical demands through thorium by 2050.

In February 2014, Bhabha Atomic Research Centre (BARC), in Mumbai, India, presented its latest design for a "next-generation nuclear reactor" that will burn thorium as its fuel ore, calling it the Advanced Heavy Water Reactor (AHWR). BARC estimated the reactor could function without an operator for 120 days. Validation of its core reactor physics was underway by late 2017.

According to Dr R K Sinha, chairman of India's Atomic Energy Commission, "This will reduce our dependence on fossil fuels, mostly imported, and will be a major contribution to global efforts to combat climate change." Because of its inherent safety, they expect that similar designs could be set up "within" populated cities, like Mumbai or Delhi.

India's government is also developing up to 62 reactors, mostly thorium-fueled, which it expects to be operational by 2025. It is the "only country in the world with a detailed, funded, government-approved plan" to focus on thorium-based nuclear power. The country currently gets under 2% of its electricity from nuclear power, with the rest coming from coal (60%), hydroelectricity (16%), other renewable sources (12%) and natural gas (9%). It expects to produce around 25% of its electricity from nuclear power.

In 2009 the chairman of the Indian Atomic Energy Commission said that India has a "long-term objective goal of becoming energy-independent based on its vast thorium resources."

In late June 2012, India announced that its "first commercial fast reactor" was near completion, making India the most advanced country in thorium research. "We have huge reserves of thorium. The challenge is to develop technology for converting this to fissile material," stated a former chairman of India's Atomic Energy Commission. That vision of using thorium in place of uranium was set out in the 1950s by physicist Homi Bhabha. India's first commercial fast breeder reactor, the 500 MWe Prototype Fast Breeder Reactor (PFBR), is approaching completion at the Indira Gandhi Centre for Atomic Research, Kalpakkam, Tamil Nadu.

As of July 2013 the major equipment of the PFBR had been erected and the loading of "dummy" fuels in peripheral locations was in progress. The reactor was expected to go critical by September 2014. The Centre had sanctioned Rs. 5,677 crore for building the PFBR and “we will definitely build the reactor within that amount,” Mr. Kumar asserted. The original cost of the project was Rs. 3,492 crore, revised to Rs. 5,677 crore. Electricity generated from the PFBR would be sold to the State Electricity Boards at Rs. 4.44 a unit. BHAVINI builds breeder reactors in India.

In 2013 India's 300 MWe AHWR (pressurized heavy water reactor) was slated to be built at an undisclosed location. The design envisages a start-up with reactor-grade plutonium that will breed U-233 from Th-232. Thereafter thorium is to be the only fuel. As of 2017, the design is in the final stages of validation.

By Nov 2015 the PFBR was built and expected to

Delays have since postponed the commissioning [criticality?] of the PFBR to Sept 2016, but India's commitment to long-term nuclear energy production is underscored by the approval in 2015 of ten new sites for reactors of unspecified types, though procurement of primary fissile material (preferably plutonium) may be problematic due to India's low uranium reserves and capacity for production.

Israel

In May 2010, researchers from Ben-Gurion University of the Negev in Israel and Brookhaven National Laboratory in New York began to collaborate on the development of thorium reactors aimed at being self-sustaining, "meaning one that will produce and consume about the same amounts of fuel," which is not possible with uranium in a light water reactor.

Japan

In June 2012, Japanese utility Chubu Electric Power wrote that it regards thorium as "one of future possible energy resources."

Norway

In late 2012, Norway's privately owned Thor Energy, in collaboration with the government and Westinghouse, announced a four-year trial using thorium in an existing nuclear reactor. In 2013, Aker Solutions purchased patents from Nobel Prize-winning physicist Carlo Rubbia for the design of a proton accelerator-based thorium nuclear power plant.

United Kingdom

In Britain, one organisation promoting or examining research on thorium-based nuclear plants is The Alvin Weinberg Foundation. House of Lords member Bryony Worthington is promoting thorium, calling it “the forgotten fuel” that could alter Britain’s energy plans. However, in 2010, the UK’s National Nuclear Laboratory (NNL) concluded that for the short to medium term, "...the thorium fuel cycle does not currently have a role to play," in that it is "technically immature, and would require a significant financial investment and risk without clear benefits," and concluded that the benefits have been "overstated."

Friends of the Earth UK considers research into it "useful" as a fallback option.

United States

In its January 2012 report to the United States Secretary of Energy, the Blue Ribbon Commission on America's Nuclear Future notes that a "molten-salt reactor using thorium [has] also been proposed." That same month it was reported that the US Department of Energy is "quietly collaborating with China" on thorium-based nuclear power designs using an MSR.

Some experts and politicians want thorium to be "the pillar of the U.S. nuclear future." Senators Harry Reid and Orrin Hatch have supported using $250 million in federal research funds to revive ORNL research. In 2009, Congressman Joe Sestak unsuccessfully attempted to secure funding for research and development of a destroyer-sized reactor [reactor of a size to power a destroyer] using thorium-based liquid fuel.

Alvin Radkowsky, chief designer of the world’s second full-scale atomic electric power plant in Shippingport, Pennsylvania, founded a joint US and Russian project in 1997 to create a thorium-based reactor, considered a "creative breakthrough." In 1992, while a resident professor in Tel Aviv, Israel, he founded the US company, Thorium Power Ltd., near Washington, D.C., to build thorium reactors.

The primary fuel of the proposed HT3R research project near Odessa, Texas, United States, will be ceramic-coated thorium beads. The earliest the reactor would become operational was 2015.

World sources of thorium

World thorium reserves (2007)

| Country | Tons | % |
|---|---:|---:|
| Australia | 489,000 | 18.7% |
| USA | 400,000 | 15.3% |
| Turkey | 344,000 | 13.2% |
| India | 319,000 | 12.2% |
| Brazil | 302,000 | 11.6% |
| Venezuela | 300,000 | 11.5% |
| Norway | 132,000 | 5.1% |
| Egypt | 100,000 | 3.8% |
| Russia | 75,000 | 2.9% |
| Greenland (Denmark) | 54,000 | 2.1% |
| Canada | 44,000 | 1.7% |
| South Africa | 18,000 | 0.7% |
| Other countries | 33,000 | 1.2% |
| World Total | 2,610,000 | 100.0% |
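The percentage column of the 2007 table above can be checked against its own tonnages. The Python sketch below recomputes each country's share of the listed total; under this calculation the total is 2,610,000 tons and every share rounds to the printed value except "Other countries", which comes to about 1.3% rather than 1.2% (the printed column sums to exactly 100%, so it may have been rounded to force that sum). The dictionary simply transcribes the table; the function name is hypothetical.

```python
# 2007 world thorium reserves in tons, transcribed from the table above.
RESERVES_2007 = {
    "Australia": 489_000,
    "USA": 400_000,
    "Turkey": 344_000,
    "India": 319_000,
    "Brazil": 302_000,
    "Venezuela": 300_000,
    "Norway": 132_000,
    "Egypt": 100_000,
    "Russia": 75_000,
    "Greenland (Denmark)": 54_000,
    "Canada": 44_000,
    "South Africa": 18_000,
    "Other countries": 33_000,
}


def shares(reserves: dict[str, int]) -> tuple[int, dict[str, float]]:
    """Return the total tonnage and each entry's percentage share of that total."""
    total = sum(reserves.values())
    return total, {country: 100.0 * tons / total for country, tons in reserves.items()}


if __name__ == "__main__":
    total, pct = shares(RESERVES_2007)
    print(f"World total: {total:,} tons")
    # List countries from largest to smallest share, to one decimal place.
    for country, share in sorted(pct.items(), key=lambda item: item[1], reverse=True):
        print(f"{country:<22} {share:5.1f}%")
```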

Thorium is mostly found with the rare earth phosphate mineral monazite, which contains up to about 12% thorium phosphate, but 6-7% on average. World monazite resources are estimated to be about 12 million tons, two-thirds of which are in heavy mineral sands deposits on the south and east coasts of India. There are substantial deposits in several other countries (see table "World thorium reserves").

Monazite is a good source of rare earth elements (REEs), but monazites are currently not economical to produce because the radioactive thorium that is produced as a byproduct would have to be stored indefinitely. However, if thorium-based power plants were adopted on a large scale, virtually all the world's thorium requirements could be supplied simply by refining monazites for their more valuable REEs.

Another estimate of reasonably assured reserves (RAR) and estimated additional reserves (EAR) of thorium comes from OECD/NEA, Nuclear Energy, "Trends in Nuclear Fuel Cycle", Paris, France (2001).

IAEA Estimates in tons (2005)

| Country | Tons | % |
|---|---:|---:|
| India | 519,000 | 21% |
| Australia | 489,000 | 19% |
| USA | 400,000 | 13% |
| Turkey | 344,000 | 11% |
| Venezuela | 302,000 | 10% |
| Brazil | 302,000 | 10% |
| Norway | 132,000 | 4% |
| Egypt | 100,000 | 3% |
| Russia | 75,000 | 2% |
| Greenland | 54,000 | 2% |
| Canada | 44,000 | 2% |
| South Africa | 18,000 | 1% |
| Other countries | 33,000 | 2% |
| World Total | 2,810,000 | 100% |

The preceding figures are reserves and as such refer to the amount of thorium in high-concentration deposits inventoried so far and estimated to be extractable at current market prices; millions of times more in total exists in Earth's 3×10¹⁹-tonne crust, around 120 trillion tons of thorium, and lesser but vast quantities of thorium exist at intermediate concentrations. Proved reserves are a poor indicator of the total future supply of a mineral resource.
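The crustal figures in the paragraph above imply an average thorium concentration and a ratio of total resource to inventoried reserves. The Python sketch below uses only numbers from this section and the two reserve tables; it gives an average of about 4 parts per million and a crustal resource roughly 40 to 50 million times the tabulated world reserves, consistent with "millions of times more". These are order-of-magnitude estimates built on the article's rounded figures, and the function names are hypothetical.

```python
# Figures from this section and the reserve tables above.
CRUST_MASS_TONS = 3e19            # mass of Earth's crust
CRUSTAL_THORIUM_TONS = 120e12     # "around 120 trillion tons of thorium"
RESERVES_2007_TONS = 2_610_000    # world total, 2007 table
RESERVES_2005_TONS = 2_810_000    # world total, IAEA 2005 estimate


def average_concentration_ppm(element_tons: float, host_tons: float) -> float:
    """Average mass concentration of an element in its host, in parts per million."""
    return element_tons / host_tons * 1e6


def resource_to_reserve_ratio(resource_tons: float, reserve_tons: float) -> float:
    """How many times larger the total resource is than the inventoried reserves."""
    return resource_tons / reserve_tons


if __name__ == "__main__":
    ppm = average_concentration_ppm(CRUSTAL_THORIUM_TONS, CRUST_MASS_TONS)
    print(f"Implied average crustal thorium: {ppm:.1f} ppm")
    for label, reserves in (("2007", RESERVES_2007_TONS), ("IAEA 2005", RESERVES_2005_TONS)):
        ratio = resource_to_reserve_ratio(CRUSTAL_THORIUM_TONS, reserves)
        print(f"Crustal thorium / {label} reserves: {ratio:.2e}")
```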

Types of thorium-based reactors

According to the World Nuclear Association, there are seven types of reactors that can be designed to use thorium as a nuclear fuel. Six of these have all entered into operational service at some point. The seventh is still conceptual, although currently in development by many countries:

- Heavy water reactors (PHWRs)

- High-temperature gas-cooled reactors (HTRs)

- Boiling (light) water reactors (BWRs)

- Pressurized (light) water reactors (PWRs)

- Fast neutron reactors (FNRs)

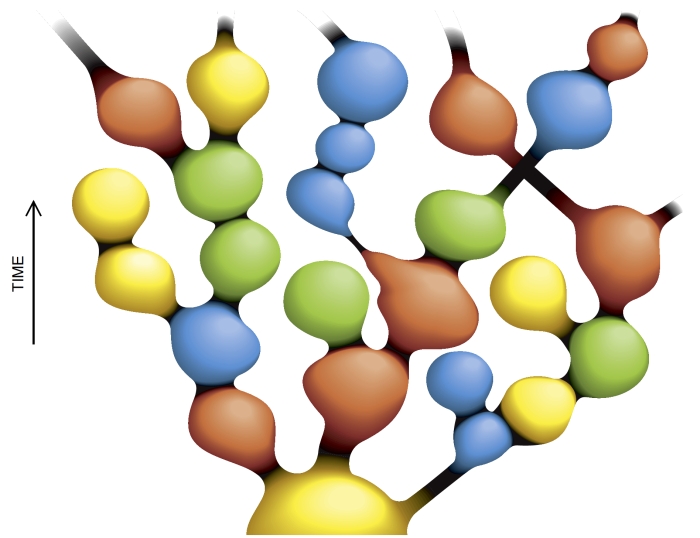

- Molten salt reactors (MSRs), including liquid fluoride thorium reactors (LFTRs). Instead of using fuel rods, molten salt reactors use compounds of fissile materials, in the form of molten salts, which are cycled through the core to undergo fission. The molten salt then carries the heat produced by the reactions away from the core, and exchanges it with a secondary medium.

Molten salt breeder reactors, or MSBRs, are another type of molten salt reactor that use thorium to breed more fissile material. Currently, India's nuclear program is looking into using MSBRs, in order to take advantage of their efficiency and India's own available thorium reserves.

- Aqueous homogeneous reactors (AHRs) have been proposed as a fluid-fueled design that could accept naturally occurring uranium and thorium suspended in a heavy water solution. AHRs have been built and, according to the IAEA reactor database, 7 are currently in operation as research reactors.

- Accelerator driven reactors (ADS)