Metascience (also known as meta-research) is the use of scientific methodology to study science itself. Metascience seeks to increase the quality of scientific research while reducing waste. It is also known as "research on research" and "the science of science", as it uses research methods to study how research is done and where improvements can be made. Metascience concerns itself with all fields of research and has been described as "a bird's eye view of science." In the words of John Ioannidis, "Science is the best thing that has happened to human beings ... but we can do it better."

In 1966, an early meta-research paper examined the statistical methods of 295 papers published in ten high-profile medical journals. It found that, "in almost 73% of the reports read ... conclusions were drawn when the justification for these conclusions was invalid." Meta-research in the following decades found many methodological flaws, inefficiencies, and poor practices in research across numerous scientific fields. Many scientific studies could not be reproduced, particularly in medicine and the soft sciences. The term "replication crisis" was coined in the early 2010s as part of a growing awareness of the problem.

Measures have been implemented to address the issues revealed by metascience. These measures include the pre-registration of scientific studies and clinical trials as well as the founding of organizations such as CONSORT and the EQUATOR Network that issue guidelines for methodology and reporting. There are continuing efforts to reduce the misuse of statistics, to eliminate perverse incentives from academia, to improve the peer review process, to combat bias in scientific literature, and to increase the overall quality and efficiency of the scientific process.

History

In 1966, an early meta-research paper examined the statistical methods of 295 papers published in ten high-profile medical journals. It found that, "in almost 73% of the reports read ... conclusions were drawn when the justification for these conclusions was invalid." In 2005, John Ioannidis published a paper titled "Why Most Published Research Findings Are False", which argued that a majority of papers in the medical field produce conclusions that are wrong. The paper went on to become the most downloaded paper in the Public Library of Science and is considered foundational to the field of metascience. In a related study with Jeremy Howick and Despina Koletsi, Ioannidis showed that only a minority of medical interventions are supported by 'high quality' evidence according to the Grading of Recommendations Assessment, Development and Evaluation (GRADE) approach. Later meta-research identified widespread difficulty in replicating results in many scientific fields, including psychology and medicine. This problem was termed "the replication crisis". Metascience has grown as a reaction to the replication crisis and to concerns about waste in research.

Many prominent publishers are interested in meta-research and in improving the quality of their publications. Top journals such as Science, The Lancet, and Nature provide ongoing coverage of meta-research and problems with reproducibility. In 2012, PLOS ONE launched a Reproducibility Initiative. In 2015, BioMed Central introduced a minimum-standards-of-reporting checklist to four titles.

The first international conference in the broad area of meta-research was the Research Waste/EQUATOR conference held in Edinburgh in 2015; the first international conference on peer review was the Peer Review Congress held in 1989. In 2016, Research Integrity and Peer Review was launched. The journal's opening editorial called for "research that will increase our understanding and suggest potential solutions to issues related to peer review, study reporting, and research and publication ethics".

Areas of meta-research

Metascience can be categorized into five major areas of interest: Methods, Reporting, Reproducibility, Evaluation, and Incentives. These correspond, respectively, with how to perform, communicate, verify, evaluate, and reward research.

Methods

Metascience seeks to identify poor research practices, including biases in research, poor study design, and abuse of statistics, and to find methods to reduce these practices. Meta-research has identified numerous biases in scientific literature. Of particular note is the widespread misuse of p-values and abuse of statistical significance.

Reporting

Meta-research has identified poor practices in reporting, explaining, disseminating and popularizing research, particularly within the social and health sciences. Poor reporting makes it difficult to accurately interpret the results of scientific studies, to replicate studies, and to identify biases and conflicts of interest in the authors. Solutions include the implementation of reporting standards, and greater transparency in scientific studies (including better requirements for disclosure of conflicts of interest). There is an attempt to standardize reporting of data and methodology through the creation of guidelines by reporting agencies such as CONSORT and the larger EQUATOR Network.

Reproducibility

The replication crisis is an ongoing methodological crisis in which it has been found that many scientific studies are difficult or impossible to replicate. While the crisis has its roots in the meta-research of the mid- to late-1900s, the phrase "replication crisis" was not coined until the early 2010s as part of a growing awareness of the problem. The replication crisis particularly affects psychology (especially social psychology) and medicine. Replication is an essential part of the scientific process, and the widespread failure of replication puts into question the reliability of affected fields.

Moreover, replication of research (or failure to replicate) is considered less influential than original research, and is less likely to be published in many fields. This discourages both attempts to replicate studies and the reporting of the results of such attempts.

Evaluation

Metascience seeks to create a scientific foundation for peer review. Meta-research evaluates peer review systems including pre-publication peer review, post-publication peer review, and open peer review. It also seeks to develop better research funding criteria.

Incentives

Metascience seeks to promote better research through better incentive systems. This includes studying the accuracy, effectiveness, costs, and benefits of different approaches to ranking and evaluating research and those who perform it. Critics argue that perverse incentives have created a publish-or-perish environment in academia which promotes the production of junk science, low quality research, and false positives. According to Brian Nosek, “The problem that we face is that the incentive system is focused almost entirely on getting research published, rather than on getting research right.” Proponents of reform seek to structure the incentive system to favor higher-quality results.

Reforms

Meta-research identifying flaws in scientific practice has inspired reforms in science. These reforms seek to address and fix problems in scientific practice which lead to low-quality or inefficient research.

Pre-registration

The practice of registering a scientific study before it is conducted is called pre-registration. It arose as a means to address the replication crisis. Pre-registration requires the submission of a registered report, which is then accepted for publication or rejected by a journal based on theoretical justification, experimental design, and the proposed statistical analysis. Pre-registration of studies serves to prevent publication bias, reduce data dredging, and increase replicability.

Reporting standards

Studies showing poor consistency and quality of reporting have demonstrated the need for reporting standards and guidelines in science, which has led to the rise of organisations that produce such standards, such as CONSORT (Consolidated Standards of Reporting Trials) and the EQUATOR Network.

The EQUATOR (Enhancing the QUAlity and Transparency Of health Research) Network is an international initiative aimed at promoting transparent and accurate reporting of health research studies to enhance the value and reliability of medical research literature. The EQUATOR Network was established with the goals of raising awareness of the importance of good reporting of research, assisting in the development, dissemination and implementation of reporting guidelines for different types of study designs, monitoring the status of the quality of reporting of research studies in the health sciences literature, and conducting research relating to issues that impact the quality of reporting of health research studies. The Network acts as an "umbrella" organisation, bringing together developers of reporting guidelines, medical journal editors and peer reviewers, research funding bodies, and other key stakeholders with a mutual interest in improving the quality of research publications and research itself.

Applications

Medicine

Clinical research in medicine is often of low quality, and many studies cannot be replicated. An estimated 85% of research funding is wasted. Additionally, the presence of bias affects research quality. The pharmaceutical industry exerts substantial influence on the design and execution of medical research. Conflicts of interest are common among authors of medical literature and among editors of medical journals. While almost all medical journals require their authors to disclose conflicts of interest, editors are not required to do so. Financial conflicts of interest have been linked to higher rates of positive study results. In antidepressant trials, pharmaceutical sponsorship is the best predictor of trial outcome.

Blinding is another focus of meta-research, as error caused by poor blinding is a source of experimental bias. Blinding is not well reported in medical literature, and widespread misunderstanding of the subject has resulted in poor implementation of blinding in clinical trials. Furthermore, failure of blinding is rarely measured or reported. Research showing the failure of blinding in antidepressant trials has led some scientists to argue that antidepressants are no better than placebo. In light of meta-research showing failures of blinding, CONSORT standards recommend that all clinical trials assess and report the quality of blinding.

Studies have shown that systematic reviews of existing research evidence are sub-optimally used in planning new research or summarizing the results. Cumulative meta-analyses of studies evaluating the effectiveness of medical interventions have shown that many clinical trials could have been avoided if a systematic review of existing evidence had been done prior to conducting a new trial. For example, Lau et al. analyzed 33 clinical trials (involving 36,974 patients) evaluating the effectiveness of intravenous streptokinase for acute myocardial infarction. Their cumulative meta-analysis demonstrated that 25 of 33 trials could have been avoided if a systematic review had been conducted prior to conducting a new trial. In other words, randomizing 34,542 patients was potentially unnecessary. One study analyzed 1,523 clinical trials included in 227 meta-analyses and concluded that "less than one quarter of relevant prior studies" were cited. They also confirmed earlier findings that most clinical trial reports do not present a systematic review to justify the research or summarize the results.
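The idea behind a cumulative meta-analysis can be sketched in a few lines of Python. This is a minimal illustration, not the method used in any particular published analysis: it assumes a fixed-effect, inverse-variance pooling of log odds ratios, re-computed each time a new trial is added in chronological order. The function names and the five trials are hypothetical, and the numbers are invented for illustration only.

```python
import math

def cumulative_meta_analysis(trials, z_crit=1.96):
    """Pool trials one at a time, in the order given (assumed chronological).

    trials: list of (label, log_odds_ratio, standard_error).
    Returns a list of (label, pooled_estimate, ci_low, ci_high), where each
    entry reflects all trials up to and including that label.
    """
    results = []
    sum_w = 0.0   # running sum of inverse-variance weights
    sum_wy = 0.0  # running sum of weight * effect
    for label, effect, se in trials:
        weight = 1.0 / se ** 2
        sum_w += weight
        sum_wy += weight * effect
        pooled = sum_wy / sum_w
        pooled_se = math.sqrt(1.0 / sum_w)
        results.append((label, pooled,
                        pooled - z_crit * pooled_se,
                        pooled + z_crit * pooled_se))
    return results

def first_conclusive(results):
    """Index of the first step whose confidence interval excludes 0, or None."""
    for i, (_label, _est, lo, hi) in enumerate(results):
        if hi < 0 or lo > 0:
            return i
    return None

# Invented example data: (label, log odds ratio, standard error).
hypothetical_trials = [
    ("T1", -0.40, 0.50),
    ("T2", -0.10, 0.40),
    ("T3", -0.35, 0.30),
    ("T4", -0.35, 0.20),
    ("T5", -0.25, 0.10),
]

results = cumulative_meta_analysis(hypothetical_trials)
stop = first_conclusive(results)
trials_after_answer = len(results) - 1 - stop if stop is not None else 0
```

In this toy data set the pooled confidence interval first excludes zero once the fourth trial is added, so any trial run after that point addresses a question the accumulated evidence had, under this simple model, already answered. Real analyses typically also consider heterogeneity between trials and may use random-effects models.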

Many treatments used in modern medicine have been proven to be ineffective, or even harmful. A 2007 study by John Ioannidis found that it took an average of ten years for the medical community to stop referencing popular practices after their efficacy was unequivocally disproven.

Psychology

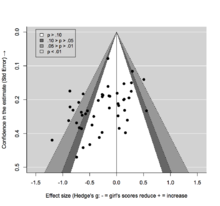

Metascience has revealed significant problems in psychological research. The field suffers from high bias, low reproducibility, and widespread misuse of statistics. The replication crisis affects psychology more strongly than any other field; as many as two-thirds of highly publicized findings may be impossible to replicate. Meta-research finds that 80-95% of psychological studies support their initial hypotheses, which strongly implies the existence of publication bias.
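A rough back-of-the-envelope model shows why such a high share of confirmed hypotheses points to selective publication. The sketch below is illustrative only: the prior probability that a tested hypothesis is true, the statistical power, the significance threshold, and the chance that a null result gets published are all assumed values rather than estimates from the literature, and the function names are hypothetical. It also assumes that every positive result is published.

```python
def expected_positive_rate(prior_true, power, alpha):
    """Share of conducted studies expected to reach significance.

    True hypotheses are detected with probability `power`; false ones
    produce a false positive with probability `alpha`.
    """
    return prior_true * power + (1.0 - prior_true) * alpha

def published_positive_share(positive_rate, null_publication_prob):
    """Share of *published* studies that are positive.

    Assumes all positive results are published and null results are
    published with probability `null_publication_prob`.
    """
    published_positive = positive_rate
    published_null = (1.0 - positive_rate) * null_publication_prob
    return published_positive / (published_positive + published_null)

# Assumed inputs: half of tested hypotheses true, 50% power, alpha = 0.05.
rate = expected_positive_rate(prior_true=0.5, power=0.5, alpha=0.05)

# Without any filter the published share equals the underlying rate.
share_unfiltered = published_positive_share(rate, null_publication_prob=1.0)

# If only 1 in 10 null results is published, the literature looks far rosier.
share_filtered = published_positive_share(rate, null_publication_prob=0.1)
```

Under these assumed inputs only about 27.5% of conducted studies would yield a positive result, yet roughly 79% of the published ones would, simply because most null results never appear in print.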

The replication crisis has led to renewed efforts to re-test important findings. In response to concerns about publication bias and p-hacking, more than 140 psychology journals have adopted result-blind peer review, in which studies are pre-registered and published without regard for their outcome. An analysis of these reforms estimated that 61 percent of result-blind studies produce null results, in contrast with 5 to 20 percent in earlier research. This analysis shows that result-blind peer review substantially reduces publication bias.

Psychologists routinely confuse statistical significance with practical importance, enthusiastically reporting great certainty in unimportant facts. Some psychologists have responded with an increased use of effect size statistics, rather than sole reliance on p-values.
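The gap between statistical significance and practical importance can be made concrete with a small calculation. The sketch below is an illustration under simplifying assumptions: two equal-sized groups, a common and known standard deviation, and a two-sided z-test. The function name and all input values are hypothetical. It reports Cohen's d (the mean difference in standard-deviation units) alongside the p-value.

```python
import math

def two_sample_z(mean_a, mean_b, sd, n_per_group):
    """Return (cohens_d, two_sided_p) for two equal-sized groups.

    Assumes a common, known standard deviation `sd`, so the standard
    error of the mean difference is sd * sqrt(2 / n_per_group).
    """
    d = (mean_a - mean_b) / sd
    z = d / math.sqrt(2.0 / n_per_group)
    # Two-sided p-value of a standard normal: 2 * (1 - Phi(|z|)).
    p = math.erfc(abs(z) / math.sqrt(2.0))
    return d, p

# Very large sample, tiny difference: 0.3 points on a scale with SD 15.
d_large, p_large = two_sample_z(100.3, 100.0, sd=15.0, n_per_group=100_000)

# Small sample, moderate difference: 7.5 points on the same scale.
d_small, p_small = two_sample_z(107.5, 100.0, sd=15.0, n_per_group=50)
```

In the first case the effect is about 0.02 standard deviations, negligible for most practical purposes, yet the p-value falls well below 0.001 because of the sample size. In the second case the effect is 0.5 standard deviations, but the p-value is far less extreme. Reporting the effect size next to the p-value makes this difference visible.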

Physics

Richard Feynman noted that estimates of physical constants were closer to published values than would be expected by chance. This was believed to be the result of confirmation bias: results that agreed with existing literature were more likely to be believed, and therefore published. Physicists now implement blinding to prevent this kind of bias.

Associated fields

Journalology

Journalology, also known as publication science, is the scholarly study of all aspects of the academic publishing process. The field seeks to improve the quality of scholarly research by implementing evidence-based practices in academic publishing. The term "journalology" was coined by Stephen Lock, the former editor-in-chief of the BMJ. The first Peer Review Congress, held in 1989 in Chicago, Illinois, is considered a pivotal moment in the founding of journalology as a distinct field. The field of journalology has been influential in pushing for study pre-registration in science, particularly in clinical trials. Clinical-trial registration is now expected in most countries.

Scientometrics

Scientometrics concerns itself with measuring bibliographic data in scientific publications. Major research issues include the measurement of the impact of research papers and academic journals, the understanding of scientific citations, and the use of such measurements in policy and management contexts.

Scientific data science

Scientific data science is the use of data science to analyse research papers. It encompasses both qualitative and quantitative methods. Research in scientific data science includes fraud detection and citation network analysis.