A Medley of Potpourri is just what it says; various thoughts, opinions, ruminations, and contemplations on a variety of subjects.

Thursday, November 17, 2022

Neuromorphic engineering

Neuromorphic engineering, also known as neuromorphic computing, is the use of electronic circuits to mimic neuro-biological architectures present in the nervous system. A neuromorphic computer/chip is any device that uses physical artificial neurons (made from silicon) to do computations. In recent times, the term neuromorphic has been used to describe analog, digital, mixed-mode analog/digital VLSI, and software systems that implement models of neural systems (for perception, motor control, or multisensory integration). At the hardware level, neuromorphic computing can be implemented with oxide-based memristors, spintronic memories, threshold switches, and transistors, among others. Software-based neuromorphic systems of spiking neural networks can be trained using error backpropagation, e.g., with Python-based frameworks such as snnTorch, or using canonical learning rules from the biological learning literature, e.g., with BindsNet.

A key aspect of neuromorphic engineering is understanding how the morphology of individual neurons, circuits, applications, and overall architectures creates desirable computations, affects how information is represented, influences robustness to damage, incorporates learning and development, adapts to local change (plasticity), and facilitates evolutionary change.

Neuromorphic engineering is an interdisciplinary subject that takes inspiration from biology, physics, mathematics, computer science, and electronic engineering to design artificial neural systems, such as vision systems, head-eye systems, auditory processors, and autonomous robots, whose physical architecture and design principles are based on those of biological nervous systems. One of the first applications for neuromorphic engineering was proposed by Carver Mead in the late 1980s.

Neurological inspiration

Neuromorphic engineering is for now set apart by the inspiration it takes from what we know about the structure and operations of the brain. Neuromorphic engineering translates what we know about the brain's function into computer systems. Work has mostly focused on replicating the analog nature of biological computation and the role of neurons in cognition.

The biological processes of neurons and their synapses are dauntingly complex, and thus very difficult to artificially simulate. A key feature of biological brains is that all of the processing in neurons uses analog chemical signals. This makes it hard to replicate brains in computers because the current generation of computers is completely digital. However, the characteristics of these parts can be abstracted into mathematical functions that closely capture the essence of the neuron's operations.
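As an illustration of abstracting a neuron into a mathematical function, the following is a minimal sketch of a leaky integrate-and-fire neuron, a common textbook abstraction. The model choice, the function name `simulate_lif`, and all parameter values are assumptions made for this example and do not come from any specific neuromorphic system described here.

```python
def simulate_lif(input_current, dt=1.0, tau=20.0, v_rest=0.0,
                 v_threshold=1.0, v_reset=0.0):
    """Return the step indices at which a leaky integrate-and-fire neuron spikes.

    All parameters are illustrative and dimensionless, not measured values.
    """
    v = v_rest
    spike_steps = []
    for step, current in enumerate(input_current):
        # Euler step: the potential leaks toward rest and is driven by the input.
        v += (dt / tau) * (-(v - v_rest) + current)
        if v >= v_threshold:
            spike_steps.append(step)  # emit a discrete spike
            v = v_reset               # reset the potential after the spike
    return spike_steps


weak = simulate_lif([0.5] * 200)    # drive too small to ever reach threshold
strong = simulate_lif([2.0] * 200)  # drive large enough to spike repeatedly
print(len(weak), len(strong))
```

A constant weak input settles below the threshold and produces no spikes, while a stronger input yields a regular spike train, which is the basic analog-in, pulse-out behavior the abstraction is meant to capture.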

The goal of neuromorphic computing is not to perfectly mimic the brain and all of its functions, but instead to extract what is known of its structure and operations to be used in a practical computing system. No neuromorphic system claims or attempts to reproduce every element of neurons and synapses, but all adhere to the idea that computation is highly distributed throughout a series of small computing elements analogous to a neuron. While this view is standard, researchers pursue the goal with different methods.

Examples

As early as 2006, researchers at Georgia Tech published a field programmable neural array. This chip was the first in a line of increasingly complex arrays of floating gate transistors that allowed programmability of charge on the gates of MOSFETs to model the channel-ion characteristics of neurons in the brain and was one of the first cases of a silicon programmable array of neurons.

In November 2011, a group of MIT researchers created a computer chip that mimics the analog, ion-based communication in a synapse between two neurons using 400 transistors and standard CMOS manufacturing techniques.

In June 2012, spintronic researchers at Purdue University presented a paper on the design of a neuromorphic chip using lateral spin valves and memristors. They argue that the architecture works similarly to neurons and can therefore be used to test methods of reproducing the brain's processing. In addition, these chips are significantly more energy-efficient than conventional ones.

Research at HP Labs on Mott memristors has shown that while they can be non-volatile, the volatile behavior exhibited at temperatures significantly below the phase transition temperature can be exploited to fabricate a neuristor, a biologically-inspired device that mimics behavior found in neurons. In September 2013, they presented models and simulations that show how the spiking behavior of these neuristors can be used to form the components required for a Turing machine.

Neurogrid, built by Brains in Silicon at Stanford University, is an example of hardware designed using neuromorphic engineering principles. The circuit board is composed of 16 custom-designed chips, referred to as NeuroCores. Each NeuroCore's analog circuitry is designed to emulate neural elements for 65536 neurons, maximizing energy efficiency. The emulated neurons are connected using digital circuitry designed to maximize spiking throughput.

A research project with implications for neuromorphic engineering is the Human Brain Project, which is attempting to simulate a complete human brain in a supercomputer using biological data. It is made up of a group of researchers in neuroscience, medicine, and computing. Henry Markram, the project's co-director, has stated that the project proposes to establish a foundation to explore and understand the brain and its diseases, and to use that knowledge to build new computing technologies. The three primary goals of the project are to better understand how the pieces of the brain fit and work together, to understand how to objectively diagnose and treat brain diseases, and to use the understanding of the human brain to develop neuromorphic computers. Simulating a complete human brain will require a supercomputer a thousand times more powerful than today's, which encourages the current focus on neuromorphic computers. The European Commission has allocated $1.3 billion to the project.

Other research with implications for neuromorphic engineering includes the BRAIN Initiative and the TrueNorth chip from IBM. Neuromorphic devices have also been demonstrated using nanocrystals, nanowires, and conducting polymers. A memristive device for quantum neuromorphic architectures is also under development. In 2022, researchers at MIT reported the development of brain-inspired artificial synapses that use protons (H+) for 'analog deep learning'.

Intel unveiled its neuromorphic research chip, called “Loihi”, in October 2017. The chip uses an asynchronous spiking neural network (SNN) to implement adaptive self-modifying event-driven fine-grained parallel computations used to implement learning and inference with high efficiency.

IMEC, a Belgium-based nanoelectronics research center, demonstrated the world's first self-learning neuromorphic chip. The brain-inspired chip, based on OxRAM technology, has the capability of self-learning and has been demonstrated to have the ability to compose music. IMEC released the 30-second tune composed by the prototype. The chip was sequentially loaded with songs in the same time signature and style. The songs were old Belgian and French flute minuets, from which the chip learned the rules at play and then applied them.

The Blue Brain Project, led by Henry Markram, aims to build biologically detailed digital reconstructions and simulations of the mouse brain. The Blue Brain Project has created in silico models of rodent brains, while attempting to replicate as many details about its biology as possible. The supercomputer-based simulations offer new perspectives on understanding the structure and functions of the brain.

The European Union funded a series of projects at the University of Heidelberg, which led to the development of BrainScaleS (brain-inspired multiscale computation in neuromorphic hybrid systems), a hybrid analog neuromorphic supercomputer located at Heidelberg University, Germany. It was developed as part of the Human Brain Project neuromorphic computing platform and is the complement to the SpiNNaker supercomputer (which is based on digital technology). The architecture used in BrainScaleS mimics biological neurons and their connections on a physical level; additionally, since the components are made of silicon, these model neurons operate on average 864 times faster than their biological counterparts (24 hours of real time, or 86,400 seconds, takes 100 seconds in the machine simulation).

Neuromorphic sensors

The concept of neuromorphic systems can be extended to sensors (not just to computation). An example of this applied to detecting light is the retinomorphic sensor or, when employed in an array, the event camera.

Ethical considerations

While the interdisciplinary concept of neuromorphic engineering is relatively new, many of the same ethical considerations apply to neuromorphic systems as apply to human-like machines and artificial intelligence in general. However, the fact that neuromorphic systems are designed to mimic a human brain gives rise to unique ethical questions surrounding their usage.

In practical terms, however, neuromorphic hardware and artificial "neural networks" are immensely simplified models of how the brain operates or processes information: they are far less complex in size and functional technology, and far more regular in connectivity. Comparing neuromorphic chips to the brain is very crude, similar to comparing a plane to a bird just because both have wings and a tail. Neural cognitive systems are many orders of magnitude more energy- and compute-efficient than current state-of-the-art AI, and neuromorphic engineering is an attempt to narrow this gap by drawing inspiration from the brain's mechanisms, just as many engineering designs have bio-inspired features.

Democratic concerns

Significant ethical limitations may be placed on neuromorphic engineering due to public perception. Special Eurobarometer 382: Public Attitudes Towards Robots, a survey conducted by the European Commission, found that 60% of European Union citizens wanted a ban of robots in the care of children, the elderly, or the disabled. Furthermore, 34% were in favor of a ban on robots in education, 27% in healthcare, and 20% in leisure. The European Commission classifies these areas as notably “human.” The report cites increased public concern with robots that are able to mimic or replicate human functions. Neuromorphic engineering, by definition, is designed to replicate the function of the human brain.

The democratic concerns surrounding neuromorphic engineering are likely to become even more profound in the future. The European Commission found that EU citizens between the ages of 15 and 24 are more likely to think of robots as human-like (as opposed to instrument-like) than EU citizens over the age of 55. When presented an image of a robot that had been defined as human-like, 75% of EU citizens aged 15–24 said it corresponded with the idea they had of robots while only 57% of EU citizens over the age of 55 responded the same way. The human-like nature of neuromorphic systems, therefore, could place them in the categories of robots many EU citizens would like to see banned in the future.

Personhood

As neuromorphic systems have become increasingly advanced, some scholars have advocated for granting personhood rights to these systems. If the brain is what grants humans their personhood, to what extent does a neuromorphic system have to mimic the human brain to be granted personhood rights? Critics of technology development in the Human Brain Project, which aims to advance brain-inspired computing, have argued that advancement in neuromorphic computing could lead to machine consciousness or personhood. If these systems are to be treated as people, critics argue, then many tasks humans perform using neuromorphic systems, including the act of termination of neuromorphic systems, may be morally impermissible as these acts would violate the autonomy of the neuromorphic systems.

Dual use (military applications)

The Joint Artificial Intelligence Center, a branch of the U.S. military, is a center dedicated to the procurement and implementation of AI software and neuromorphic hardware for combat use. Specific applications include smart headsets/goggles and robots. JAIC intends to rely heavily on neuromorphic technology to connect "every sensor (to) every shooter" within a network of neuromorphic-enabled units.

Legal considerations

Skeptics have argued that there is no way to legally apply electronic personhood, the concept of personhood that would apply to neuromorphic technology. In a letter signed by 285 experts in law, robotics, medicine, and ethics opposing a European Commission proposal to recognize “smart robots” as legal persons, the authors write, “A legal status for a robot can’t derive from the Natural Person model, since the robot would then hold human rights, such as the right to dignity, the right to its integrity, the right to remuneration or the right to citizenship, thus directly confronting the Human rights. This would be in contradiction with the Charter of Fundamental Rights of the European Union and the Convention for the Protection of Human Rights and Fundamental Freedoms.”

Ownership and property rights

There is significant legal debate around property rights and artificial intelligence. In Acohs Pty Ltd v. Ucorp Pty Ltd, Justice Christopher Jessup of the Federal Court of Australia found that the source code for Material Safety Data Sheets could not be copyrighted as it was generated by a software interface rather than a human author. The same question may apply to neuromorphic systems: if a neuromorphic system successfully mimics a human brain and produces a piece of original work, who, if anyone, should be able to claim ownership of the work?

Neuromemristive systems

Neuromemristive systems are a subclass of neuromorphic computing systems that focuses on the use of memristors to implement neuroplasticity. While neuromorphic engineering focuses on mimicking biological behavior, neuromemristive systems focus on abstraction. For example, a neuromemristive system may replace the details of a cortical microcircuit's behavior with an abstract neural network model.

Several neuron-inspired threshold logic functions have been implemented with memristors for use in high-level pattern recognition. Recently reported applications include speech recognition, face recognition, and object recognition. They can also replace conventional digital logic gates.

For ideal passive memristive circuits there is an exact equation (the Caravelli-Traversa-Di Ventra equation) for the internal memory of the circuit as a function of the properties of the physical memristive network and the external sources.

Neurorobotics

Neurorobotics is the combined study of neuroscience, robotics, and artificial intelligence. It is the science and technology of embodied autonomous neural systems. Neural systems include brain-inspired algorithms (e.g. connectionist networks), computational models of biological neural networks (e.g. artificial spiking neural networks, large-scale simulations of neural microcircuits) and actual biological systems (e.g. in vivo and in vitro neural nets). Such neural systems can be embodied in machines with mechanic or any other forms of physical actuation. This includes robots, prosthetic or wearable systems but also, at smaller scale, micro-machines and, at the larger scales, furniture and infrastructures.

Neurorobotics is the branch of neuroscience and robotics that deals with the study and application of the science and technology of embodied autonomous neural systems, such as brain-inspired algorithms. It is based on the idea that the brain is embodied and the body is embedded in the environment. Therefore, most neurorobots are required to function in the real world, as opposed to a simulated environment.

Beyond brain-inspired algorithms for robots, neurorobotics may also involve the design of brain-controlled robot systems.

Major classes of neurorobotic models

Neurorobots can be divided into various major classes based on the robot's purpose. Each class is designed to implement a specific mechanism of interest for study. Common types of neurorobots are those used to study motor control, memory, action selection, and perception.

Locomotion and motor control

Neurorobots are often used to study motor feedback and control systems, and have proved their merit in developing controllers for robots. Locomotion is modeled by a number of neurologically inspired theories on the action of motor systems. Locomotion control has been mimicked using models of central pattern generators, clumps of neurons capable of driving repetitive behavior, to make four-legged walking robots. Other groups have expanded the idea of combining rudimentary control systems into a hierarchical set of simple autonomous systems. These systems can formulate complex movements from a combination of these rudimentary subsets. This theory of motor action is based on the organization of cortical columns, which progressively integrate from simple sensory input into complex afferent signals, or from complex motor programs to simple controls for each muscle fiber in efferent signals, forming a similar hierarchical structure.
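To make the idea of a central pattern generator concrete, here is a minimal sketch using two coupled phase oscillators that settle into alternating (antiphase) rhythm, as a left and right leg might. The phase-oscillator model, the function name `simulate_cpg`, and all parameter values are assumptions chosen for illustration; they are not taken from any of the robots mentioned here.

```python
import math


def simulate_cpg(steps=2000, dt=0.01, frequency=1.0, coupling=2.0):
    """Integrate two coupled phase oscillators and return their final phases.

    The coupling pulls each oscillator toward being pi radians away from
    the other, so the pair ends up alternating. Illustrative values only.
    """
    phase_left, phase_right = 0.0, 0.5  # arbitrary, nearly in-phase start
    omega = 2.0 * math.pi * frequency   # shared intrinsic angular frequency
    for _ in range(steps):
        d_left = omega + coupling * math.sin(phase_right - phase_left - math.pi)
        d_right = omega + coupling * math.sin(phase_left - phase_right - math.pi)
        phase_left += dt * d_left
        phase_right += dt * d_right
    return phase_left, phase_right


phase_left, phase_right = simulate_cpg()
phase_gap = (phase_right - phase_left) % (2.0 * math.pi)
# Rhythmic drive signals that could be sent to two opposing actuators.
drive_left, drive_right = math.sin(phase_left), math.sin(phase_right)
print(round(phase_gap, 3))
```

The phase gap converges toward pi regardless of the small initial offset, so the two drive signals alternate without any external timing input, which is the defining property of a pattern generator.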

Another method for motor control uses learned error correction and predictive controls to form a sort of simulated muscle memory. In this model, awkward, random, and error-prone movements are corrected for using error feedback to produce smooth and accurate movements over time. The controller learns to create the correct control signal by predicting the error. Using these ideas, robots have been designed which can learn to produce adaptive arm movements or to avoid obstacles in a course.
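The error-correction idea can be sketched with a single adjustable gain that is tuned from the observed error. In this minimal example the "plant" (the simulated limb) simply scales the command by an unknown gain; that plant model, the delta-rule update, and the names `train_controller` and `plant_gain` are assumptions made for illustration and are much simpler than the predictive controllers used in the studies described.

```python
def train_controller(targets, plant_gain=2.0, learning_rate=0.05, epochs=200):
    """Learn a command gain so that plant_gain * command matches the target.

    Returns the learned weight and the mean absolute error per epoch.
    """
    weight = 0.0  # the controller starts with no knowledge of the plant
    mean_errors = []
    for _ in range(epochs):
        total_error = 0.0
        for target in targets:
            command = weight * target        # controller output
            outcome = plant_gain * command   # what the simulated limb does
            error = target - outcome         # feedback signal
            weight += learning_rate * error * target  # delta-rule correction
            total_error += abs(error)
        mean_errors.append(total_error / len(targets))
    return weight, mean_errors


weight, mean_errors = train_controller([0.2, 0.5, 1.0, -0.8])
print(round(weight, 4), mean_errors[0], mean_errors[-1])
```

Early movements miss the target badly, and the error shrinks over repeated attempts as the weight approaches the inverse of the plant gain.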

Learning and memory systems

Robots have also been designed to test theories of animal memory systems. Many studies examine the memory system of rats, particularly the rat hippocampus, dealing with place cells, which fire for a specific location that has been learned. Systems modeled after the rat hippocampus are generally able to learn mental maps of the environment, including recognizing landmarks and associating behaviors with them, allowing them to predict upcoming obstacles and landmarks.
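A place cell's location-specific firing is often idealized as a bell-shaped tuning curve over position. The sketch below assumes a Gaussian tuning curve and a nearest-peak decoder; both choices, along with the names `place_cell_rate` and `decode_position` and all numeric values, are illustrative assumptions rather than the models used in the studies mentioned.

```python
import math


def place_cell_rate(position, center, width=0.2, max_rate=20.0):
    """Firing rate of an idealized place cell with a Gaussian place field."""
    dx = position[0] - center[0]
    dy = position[1] - center[1]
    return max_rate * math.exp(-(dx * dx + dy * dy) / (2.0 * width * width))


def decode_position(position, centers):
    """Estimate location as the field center of the most active cell."""
    rates = [place_cell_rate(position, center) for center in centers]
    return centers[rates.index(max(rates))]


# Four hypothetical cells with fields at the corners of a unit square.
centers = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
estimate = decode_position((0.9, 0.1), centers)
print(estimate)
```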

Another study has produced a robot based on the proposed learning paradigm of barn owls for orientation and localization based on primarily auditory, but also visual stimuli. The hypothesized method involves synaptic plasticity and neuromodulation, a mostly chemical effect in which reward neurotransmitters such as dopamine or serotonin affect the firing sensitivity of a neuron to be sharper. The robot used in the study adequately matched the behavior of barn owls. Furthermore, the close interaction between motor output and auditory feedback proved to be vital in the learning process, supporting active sensing theories that are involved in many of the learning models.

Neurorobots in these studies are presented with simple mazes or patterns to learn. Some of the problems presented to the neurorobot include recognizing symbols, colors, or other patterns and executing simple actions based on the pattern. In the case of the barn owl simulation, the robot had to determine its location and direction to navigate in its environment.

Action selection and value systems

Action selection studies deal with negative or positive weighting to an action and its outcome. Neurorobots can and have been used to study simple ethical interactions, such as the classical thought experiment where there are more people than a life raft can hold, and someone must leave the boat to save the rest. However, more neurorobots used in the study of action selection contend with much simpler persuasions such as self-preservation or perpetuation of the population of robots in the study. These neurorobots are modeled after the neuromodulation of synapses to encourage circuits with positive results.

In biological systems, neurotransmitters such as dopamine or acetylcholine positively reinforce neural signals that are beneficial. One study of such interaction involved the robot Darwin VII, which used visual, auditory, and simulated taste input to "eat" conductive metal blocks. The arbitrarily chosen good blocks had a striped pattern on them while the bad blocks had a circular shape on them. The taste sense was simulated by the conductivity of the blocks. The robot received positive or negative feedback on the taste based on the block's level of conductivity. The researchers observed the robot to see how it learned its action selection behaviors based on the inputs it had. Other studies have used herds of small robots which feed on batteries strewn about the room and communicate their findings to other robots.
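The value-learning loop in this kind of experiment can be sketched as a running estimate of how rewarding each visual pattern has been. This is not the Darwin VII model itself: the update rule, the names `learn_preferences` and `choose_action`, and the encoding of taste as +1 or -1 are all assumptions made for a minimal illustration.

```python
def learn_preferences(trials, learning_rate=0.2):
    """Update a value estimate per visual pattern from taste feedback.

    Each trial is (pattern, taste), where taste is +1.0 for a "good"
    block and -1.0 for a "bad" one (a hypothetical encoding).
    """
    value = {"striped": 0.0, "circular": 0.0}
    for pattern, taste in trials:
        # Move the stored value a fraction of the way toward the feedback.
        value[pattern] += learning_rate * (taste - value[pattern])
    return value


def choose_action(value, pattern):
    """Approach patterns with positive learned value, avoid the rest."""
    return "approach" if value[pattern] > 0.0 else "avoid"


trials = [("striped", 1.0), ("circular", -1.0)] * 10
value = learn_preferences(trials)
print(choose_action(value, "striped"), choose_action(value, "circular"))
```

Before any trials both patterns have zero value; after repeated tasting, the visual pattern alone is enough to select the action.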

Sensory perception

Neurorobots have also been used to study sensory perception, particularly vision. These are primarily systems that result from embedding neural models of sensory pathways in automata. This approach gives exposure to the sensory signals that occur during behavior and also enables a more realistic assessment of the degree of robustness of the neural model. It is well known that changes in the sensory signals produced by motor activity provide useful perceptual cues that are used extensively by organisms. For example, researchers have used the depth information that emerges during replication of human head and eye movements to establish robust representations of the visual scene.

Biological robots

Biological robots are not officially neurorobots in that they are not neurologically inspired AI systems, but actual neuron tissue wired to a robot. They use cultured neural networks to study brain development or neural interactions. These typically consist of a neural culture raised on a multielectrode array (MEA), which is capable of both recording the neural activity and stimulating the tissue. In some cases, the MEA is connected to a computer which presents a simulated environment to the brain tissue and translates brain activity into actions in the simulation, as well as providing sensory feedback. The ability to record neural activity gives researchers a window into a brain, which they can use to learn about a number of the same issues neurorobots are used for.

An area of concern with the biological robots is ethics. Many questions are raised about how to treat such experiments. The central question concerns consciousness and whether or not the rat brain experiences it. There are many theories about how to define consciousness.

Implications for neuroscience

Neuroscientists benefit from neurorobotics because it provides a blank slate to test various possible methods of brain function in a controlled and testable environment. While robots are more simplified versions of the systems they emulate, they are more specific, allowing more direct testing of the issue at hand. They also have the benefit of being accessible at all times, while it is more difficult to monitor large portions of a brain while the animal is active, especially individual neurons.

The development of neuroscience has produced neural treatments. These include pharmaceuticals and neural rehabilitation. Progress is dependent on an intricate understanding of the brain and how exactly it functions. It is difficult to study the brain, especially in humans, due to the danger associated with cranial surgeries. Neurorobots can improve the range of tests and experiments that can be performed in the study of neural processes.

Artificial neuron

From Wikipedia, the free encyclopedia

https://en.wikipedia.org/wiki/Artificial_neuron

An artificial neuron is a mathematical function conceived as a model of biological neurons in a neural network. Artificial neurons are elementary units in an artificial neural network. The artificial neuron receives one or more inputs (representing excitatory postsynaptic potentials and inhibitory postsynaptic potentials at neural dendrites) and sums them to produce an output (or activation, representing a neuron's action potential which is transmitted along its axon). Usually each input is separately weighted, and the sum is passed through a non-linear function known as an activation function or transfer function. The transfer functions usually have a sigmoid shape, but they may also take the form of other non-linear functions, piecewise linear functions, or step functions. They are also often monotonically increasing, continuous, differentiable and bounded. Non-monotonic, unbounded and oscillating activation functions with multiple zeros that outperform sigmoidal and ReLU-like activation functions on many tasks have also been recently explored. The thresholding function has inspired building logic gates referred to as threshold logic, applicable to building logic circuits resembling brain processing. For example, new devices such as memristors have been extensively used to develop such logic in recent times.

The artificial neuron transfer function should not be confused with a linear system's transfer function.

Artificial neurons can also refer to artificial cells in neuromorphic engineering (see below) that are similar to natural physical neurons.

Basic structure

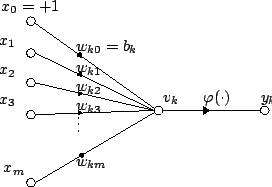

For a given artificial neuron k, let there be m + 1 inputs with signals x0 through xm and weights wk0 through wkm. Usually, the x0 input is assigned the value +1, which makes it a bias input with wk0 = bk. This leaves only m actual inputs to the neuron: from x1 to xm.

The output of the kth neuron is:

$$y_k = \varphi\left(\sum_{j=0}^{m} w_{kj} x_j\right)$$

where $\varphi$ is the transfer function.

The output is analogous to the axon of a biological neuron, and its value propagates to the input of the next layer, through a synapse. It may also exit the system, possibly as part of an output vector.

It has no learning process as such. Its transfer function weights are calculated and its threshold value is predetermined.
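The computation described in this section, a weighted sum over the inputs (with x0 = +1 carrying the bias) passed through a transfer function, can be sketched as follows. The function name `neuron_output` and the default choice of `math.tanh` as the transfer function are assumptions made for the example.

```python
import math


def neuron_output(inputs, weights, bias, transfer=math.tanh):
    """Output of one artificial neuron for the m actual inputs x1..xm.

    The bias is handled as weight wk0 on a constant input x0 = +1.
    """
    x = [1.0] + list(inputs)      # x0 = +1 is the bias input
    w = [bias] + list(weights)    # wk0 = bk
    weighted_sum = sum(w_j * x_j for w_j, x_j in zip(w, x))
    return transfer(weighted_sum)


# Identity transfer function used here only to expose the weighted sum.
linear_out = neuron_output([1.0, 2.0], [0.5, -0.25], bias=1.0,
                           transfer=lambda u: u)
squashed_out = neuron_output([1.0, 2.0], [0.5, -0.25], bias=0.0)
print(linear_out, squashed_out)
```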

Types

Depending on the specific model used they may be called a semi-linear unit, Nv neuron, binary neuron, linear threshold function, or McCulloch–Pitts (MCP) neuron.

Simple artificial neurons, such as the McCulloch–Pitts model, are sometimes described as "caricature models", since they are intended to reflect one or more neurophysiological observations, but without regard to realism.

Biological models

Artificial neurons are designed to mimic aspects of their biological counterparts. However, a significant performance gap exists between biological and artificial neural networks. In particular, single biological neurons in the human brain with an oscillating activation function, capable of learning the XOR function, have been discovered.

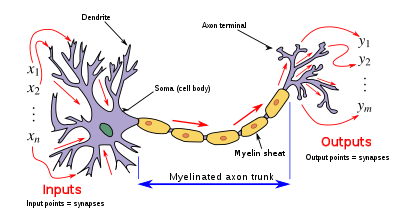

- Dendrites – In a biological neuron, the dendrites act as the input vector. These dendrites allow the cell to receive signals from a large (>1000) number of neighboring neurons. As in the above mathematical treatment, each dendrite is able to perform "multiplication" by that dendrite's "weight value." The multiplication is accomplished by increasing or decreasing the ratio of synaptic neurotransmitters to signal chemicals introduced into the dendrite in response to the synaptic neurotransmitter. A negative multiplication effect can be achieved by transmitting signal inhibitors (i.e. oppositely charged ions) along the dendrite in response to the reception of synaptic neurotransmitters.

- Soma – In a biological neuron, the soma acts as the summation function, seen in the above mathematical description. As positive and negative signals (exciting and inhibiting, respectively) arrive in the soma from the dendrites, the positive and negative ions are effectively added in summation, by simple virtue of being mixed together in the solution inside the cell's body.

- Axon – The axon gets its signal from the summation behavior which occurs inside the soma. The opening to the axon essentially samples the electrical potential of the solution inside the soma. Once the soma reaches a certain potential, the axon will transmit an all-or-nothing signal pulse down its length. In this regard, the axon corresponds to the connection from our artificial neuron's output to other artificial neurons.

Unlike most artificial neurons, however, biological neurons fire in discrete pulses. Each time the electrical potential inside the soma reaches a certain threshold, a pulse is transmitted down the axon. This pulsing can be translated into continuous values. The rate (activations per second, etc.) at which an axon fires converts directly into the rate at which neighboring cells get signal ions introduced into them. The faster a biological neuron fires, the faster nearby neurons accumulate electrical potential (or lose electrical potential, depending on the "weighting" of the dendrite that connects to the neuron that fired). It is this conversion that allows computer scientists and mathematicians to simulate biological neural networks using artificial neurons which can output distinct values (often from −1 to 1).
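The conversion from discrete pulses to a continuous value can be sketched by counting spikes in a time window and rescaling the resulting rate. The function names, the window-counting approach, and the linear mapping onto the range −1 to 1 are assumptions for illustration; other rate estimates and mappings are equally possible.

```python
def firing_rate(spike_times, window_start, window_end):
    """Spikes per second within the half-open window [start, end)."""
    count = sum(1 for t in spike_times if window_start <= t < window_end)
    return count / (window_end - window_start)


def rate_to_activation(rate, max_rate):
    """Map a firing rate in [0, max_rate] linearly onto [-1, 1]."""
    clipped = min(max(rate, 0.0), max_rate)
    return 2.0 * clipped / max_rate - 1.0


spikes = [0.1, 0.2, 0.3, 0.9, 1.5]          # hypothetical spike times (seconds)
rate = firing_rate(spikes, 0.0, 1.0)         # four spikes fall in the first second
activation = rate_to_activation(50.0, 100.0) # a half-maximal rate maps to 0
print(rate, activation)
```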

Encoding

Research has shown that unary coding is used in the neural circuits responsible for birdsong production. The use of unary in biological networks is presumably due to the inherent simplicity of the coding. Another contributing factor could be that unary coding provides a certain degree of error correction.
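For readers unfamiliar with unary coding, the sketch below shows the conventional textbook form, in which a non-negative integer n is written as n ones followed by a terminating zero. How this maps onto activity in the birdsong circuits is not specified here, so the example illustrates only the code itself; the function names are hypothetical.

```python
def unary_encode(n):
    """Encode a non-negative integer as n ones followed by a single zero."""
    if n < 0:
        raise ValueError("unary coding is defined for non-negative integers")
    return [1] * n + [0]


def unary_decode(bits):
    """Count the ones that precede the first zero."""
    count = 0
    for bit in bits:
        if bit == 0:
            return count
        count += 1
    raise ValueError("missing terminating zero")


encoded = unary_encode(3)
decoded = unary_decode(encoded)
print(encoded, decoded)
```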

Physical artificial cells

There is research and development into physical artificial neurons – organic and inorganic.

For example, some artificial neurons can receive and release dopamine (chemical signals rather than electrical signals) and communicate with natural rat muscle and brain cells, with potential for use in BCIs/prosthetics.

Low-power biocompatible memristors may enable construction of artificial neurons which function at voltages of biological action potentials and could be used to directly process biosensing signals, for neuromorphic computing and/or direct communication with biological neurons.

Organic neuromorphic circuits made out of polymers, coated with an ion-rich gel to enable a material to carry an electric charge like real neurons, have been built into a robot, enabling it to learn sensorimotorically within the real world, rather than via simulations or virtually. Moreover, artificial spiking neurons made of soft matter (polymers) can operate in biologically relevant environments and enable the synergetic communication between the artificial and biological domains.

History

The first artificial neuron was the Threshold Logic Unit (TLU), or Linear Threshold Unit, first proposed by Warren McCulloch and Walter Pitts in 1943. The model was specifically targeted as a computational model of the "nerve net" in the brain. As a transfer function, it employed a threshold, equivalent to using the Heaviside step function. Initially, only a simple model was considered, with binary inputs and outputs, some restrictions on the possible weights, and a more flexible threshold value. It was noticed from the beginning that any Boolean function could be implemented by networks of such devices, which is easily seen from the fact that one can implement the AND and OR functions and use them in the disjunctive or the conjunctive normal form. Researchers also soon realized that cyclic networks, with feedback through neurons, could define dynamical systems with memory, but most of the research concentrated (and still does) on strictly feed-forward networks because of the lesser difficulty they present.
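The claim that networks of threshold units can implement Boolean functions can be checked with a short sketch. The weights and thresholds below are one possible choice, and the NOT unit with a negative weight is an assumption of this example (needed to build a normal form); it is not a statement about the restrictions in the original 1943 model.

```python
def threshold_unit(inputs, weights, threshold):
    """Fire (1) when the weighted sum of binary inputs meets the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


def AND(a, b):
    return threshold_unit([a, b], [1, 1], 2)


def OR(a, b):
    return threshold_unit([a, b], [1, 1], 1)


def NOT(a):
    # Assumed inhibitory unit: a negative weight with threshold 0.
    return threshold_unit([a], [-1], 0)


def XOR(a, b):
    # Disjunctive normal form: (a AND NOT b) OR (NOT a AND b).
    return OR(AND(a, NOT(b)), AND(NOT(a), b))


truth_table = [XOR(a, b) for a in (0, 1) for b in (0, 1)]
print(truth_table)
```

XOR cannot be computed by a single threshold unit, but a two-level network built from the normal form handles it.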

One important and pioneering artificial neural network that used the linear threshold function was the perceptron, developed by Frank Rosenblatt. This model already considered more flexible weight values in the neurons, and was used in machines with adaptive capabilities. The representation of the threshold values as a bias term was introduced by Bernard Widrow in 1960 – see ADALINE.

In the late 1980s, when research on neural networks regained strength, neurons with more continuous shapes started to be considered. The possibility of differentiating the activation function allows the direct use of gradient descent and other optimization algorithms for the adjustment of the weights. Neural networks also started to be used as a general function approximation model. The best-known training algorithm, backpropagation, has been rediscovered several times, but its first development goes back to the work of Paul Werbos.

Types of transfer functions

The transfer function (activation function) of a neuron is chosen to have a number of properties which either enhance or simplify the network containing the neuron. Crucially, for instance, any multilayer perceptron using a linear transfer function has an equivalent single-layer network; a non-linear function is therefore necessary to gain the advantages of a multi-layer network.
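The collapse of a linear multilayer network into a single layer can be checked numerically. The following is a minimal sketch in Python, assuming an identity transfer function and no bias terms; the weight matrices W1 and W2 and the input x are made-up illustrative values, not taken from any particular network.

```python
def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def matmul(A, B):
    """Multiply matrix A (m x k) by matrix B (k x n), both lists of rows."""
    columns = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in columns] for row in A]


# Hypothetical weights for a two-layer network with a linear transfer function.
W1 = [[1.0, 2.0], [0.0, -1.0], [3.0, 1.0]]  # 2 inputs -> 3 hidden units
W2 = [[1.0, 0.0, 2.0], [-1.0, 1.0, 0.0]]    # 3 hidden units -> 2 outputs
x = [0.5, -2.0]

# Passing x through both layers ...
two_layer = matvec(W2, matvec(W1, x))
# ... gives the same result as one layer whose weights are the product W2 * W1.
one_layer = matvec(matmul(W2, W1), x)

print(two_layer, one_layer)  # both are [-4.5, 5.5]
```

Because the composition of linear maps is itself linear, adding layers gains nothing here; only a non-linear transfer function between the layers breaks this equivalence.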

Below, u refers in all cases to the weighted sum of all the inputs to the neuron, i.e. for n inputs,

$$u = \sum_{i=1}^{n} w_i x_i$$

where w is a vector of synaptic weights and x is a vector of inputs.

Step function

The output y of this transfer function is binary, depending on whether the input meets a specified threshold, θ. The "signal" is sent, i.e. the output is set to one, if the activation meets the threshold:

$$y = \begin{cases} 1 & \text{if } u \ge \theta \\ 0 & \text{if } u < \theta \end{cases}$$

This function is used in perceptrons and often shows up in many other models. It performs a division of the space of inputs by a hyperplane. It is especially useful in the last layer of a network intended to perform binary classification of the inputs. It can be approximated from other sigmoidal functions by assigning large values to the weights.
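The approximation by sigmoidal functions can be illustrated with a short Python sketch. It assumes the logistic function as the sigmoid and models "large weights" with a single multiplier, here called gain (a hypothetical parameter name); the threshold and input values are arbitrary.

```python
import math


def step(u, theta):
    """Step transfer function: 1 if the activation meets the threshold, else 0."""
    return 1 if u >= theta else 0


def logistic(z):
    """Logistic sigmoid. Very large negative z would overflow math.exp."""
    return 1.0 / (1.0 + math.exp(-z))


def steep_logistic(u, theta, gain):
    """Logistic unit whose weights (and bias) are scaled by `gain`.

    As gain grows, the output approaches the step function everywhere
    except exactly at u == theta, where it stays at 0.5.
    """
    return logistic(gain * (u - theta))


theta = 0.5
for gain in (1, 10, 100):
    above = steep_logistic(0.6, theta, gain)  # tends towards step(0.6, theta) == 1
    below = steep_logistic(0.4, theta, gain)  # tends towards step(0.4, theta) == 0
    print(gain, round(above, 4), round(below, 4))
```

Scaling the weights steepens the transition around the threshold, which is why the two functions agree more closely as the gain increases.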

Linear combination

In this case, the output unit is simply the weighted sum of its inputs plus a bias term. A number of such linear neurons perform a linear transformation of the input vector. This is usually more useful in the first layers of a network. A number of analysis tools exist based on linear models, such as harmonic analysis, and they can all be used in neural networks with this linear neuron. The bias term allows us to make affine transformations to the data.
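As a rough illustration of the role of the bias term, the Python sketch below builds a small layer of linear neurons. The function names and the weight and bias values are invented for the example.

```python
def linear_neuron(weights, inputs, bias=0.0):
    """Output of a linear neuron: the weighted sum of its inputs plus a bias."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias


def linear_layer(weight_rows, inputs, biases):
    """Apply one linear neuron per row of weights to the same input vector."""
    return [linear_neuron(w, inputs, b) for w, b in zip(weight_rows, biases)]


# Hypothetical layer: two neurons, two inputs.
weights = [[1.0, 0.0], [0.0, 2.0]]
biases = [0.5, -1.0]

# Without biases the origin always maps to the origin (a linear map);
# with biases the origin is shifted, making the map affine.
print(linear_layer(weights, [0.0, 0.0], [0.0, 0.0]))  # [0.0, 0.0]
print(linear_layer(weights, [0.0, 0.0], biases))      # [0.5, -1.0]
```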

See: Linear transformation, Harmonic analysis, Linear filter, Wavelet, Principal component analysis, Independent component analysis, Deconvolution.

Sigmoid

A fairly simple non-linear function, the sigmoid function (such as the logistic function) also has an easily calculated derivative, which can be important when calculating the weight updates in the network. It thus makes the network more easily manipulable mathematically, and was attractive to early computer scientists who needed to minimize the computational load of their simulations. It was previously commonly seen in multilayer perceptrons. However, recent work has shown sigmoid neurons to be less effective than rectified linear neurons. The reason is that the gradients computed by the backpropagation algorithm tend to diminish towards zero as they propagate back through layers of sigmoidal neurons, making it difficult to optimize neural networks using multiple layers of sigmoidal neurons.
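Both points, the cheap derivative and the shrinking gradients, can be sketched in a few lines of Python. The example assumes the logistic function as the sigmoid and, to keep things simple, a chain of single neurons with weights of magnitude 1, so that the backpropagated gradient is just the product of the per-layer derivatives. The helper name gradient_factor is made up for this illustration.

```python
import math


def logistic(u):
    """Logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-u))


def logistic_derivative(u):
    """Derivative of the logistic function, reusing its own output: s * (1 - s)."""
    s = logistic(u)
    return s * (1.0 - s)


def gradient_factor(pre_activations):
    """Product of logistic derivatives along a chain of neurons.

    Simplifying assumption: every weight on the path has magnitude 1,
    so this product is the factor by which a gradient is scaled.
    """
    factor = 1.0
    for u in pre_activations:
        factor *= logistic_derivative(u)
    return factor


# The derivative never exceeds 0.25 (its value at u = 0), so even in the
# best case the factor shrinks geometrically with depth.
for depth in (1, 5, 10):
    print(depth, gradient_factor([0.0] * depth))
```

With ten such layers the factor is already below one in a million, which gives a sense of why deep stacks of sigmoidal neurons are hard to train.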

Rectifier

In the context of artificial neural networks, the rectifier or ReLU (Rectified Linear Unit) is an activation function defined as the positive part of its argument:

$$f(x) = x^{+} = \max(0, x)$$

where x is the input to a neuron. This is also known as a ramp function and is analogous to half-wave rectification in electrical engineering. This activation function was first introduced to a dynamical network by Hahnloser et al. in a 2000 paper in Nature with strong biological motivations and mathematical justifications. It was first demonstrated in 2011 to enable better training of deeper networks than the activation functions widely used before then, i.e., the logistic sigmoid (which is inspired by probability theory; see logistic regression) and its more practical counterpart, the hyperbolic tangent.

A commonly used variant of the ReLU activation function is the Leaky ReLU, which allows a small, positive gradient when the unit is not active:

$$f(x) = \begin{cases} x & \text{if } x > 0 \\ a x & \text{otherwise} \end{cases}$$

where x is the input to the neuron and a is a small positive constant (in the original paper the value 0.01 was used for a).
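Both rectifier variants are short enough to write out directly. The following Python sketch follows the definitions given in the text; the function names are chosen for illustration, and the default a = 0.01 is the value mentioned above.

```python
def relu(x):
    """Rectifier: the positive part of x."""
    return max(0.0, x)


def leaky_relu(x, a=0.01):
    """Leaky ReLU: identity for positive x, a small slope `a` otherwise."""
    return x if x > 0 else a * x


for x in (-2.0, 0.0, 3.0):
    print(x, relu(x), leaky_relu(x))
```

For negative inputs relu returns zero, so its gradient there is zero as well, whereas leaky_relu keeps a slope of a and still passes a small gradient.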

Pseudocode algorithm

The following is a simple pseudocode implementation of a single TLU which takes boolean inputs (true or false), and returns a single boolean output when activated. An object-oriented model is used. No method of training is defined, since several exist. If a purely functional model were used, the class TLU below would be replaced with a function TLU with input parameters threshold, weights, and inputs that returned a boolean value.

class TLU defined as:
    data member threshold : number
    data member weights : list of numbers of size X

    function member fire(inputs : list of booleans of size X) : boolean defined as:
        variable T : number
        T ← 0
        for each i in 1 to X do
            if inputs(i) is true then
                T ← T + weights(i)
            end if
        end for each
        if T > threshold then
            return true
        else
            return false
        end if
    end function
end class
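The pseudocode above translates almost line for line into Python. The version below is one possible rendering, not a canonical implementation: the use of a dataclass, the length check, and the example gates and_gate and or_gate (with hand-picked weights and thresholds) are additions made for illustration.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class TLU:
    """Threshold Logic Unit with boolean inputs and a boolean output."""

    threshold: float
    weights: Sequence[float]

    def fire(self, inputs: Sequence[bool]) -> bool:
        """Return True if the summed weights of the active inputs exceed the threshold."""
        if len(inputs) != len(self.weights):
            raise ValueError("inputs and weights must have the same length")
        # Only inputs that are true contribute their weight, as in the pseudocode.
        total = sum(w for w, active in zip(self.weights, inputs) if active)
        # Strict comparison, matching "if T > threshold" above.
        return total > self.threshold


# Hand-picked parameters (assumptions) that realize AND and OR on two inputs.
and_gate = TLU(threshold=1.5, weights=[1.0, 1.0])
or_gate = TLU(threshold=0.5, weights=[1.0, 1.0])

for a in (False, True):
    for b in (False, True):
        print(a, b, and_gate.fire([a, b]), or_gate.fire([a, b]))
```

The two gates differ only in their thresholds, which shows how the same unit can realize both the AND and OR functions mentioned in the History section.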

Epigenome editing

Epigenome editing or epigenome engineering is a type of genetic engineering in which the epigenome is modified at specific sites using engineered molecules targeted to those sites (as opposed to whole-genome modifications). Whereas gene editing involves changing the actual DNA sequence itself, epigenetic editing involves modifying and presenting DNA sequences to proteins and other DNA binding factors that influence DNA function. By "editing" epigenomic features in this manner, researchers can determine the exact biological role of an epigenetic modification at the site in question.

The engineered proteins used for epigenome editing are composed of a DNA binding domain that targets specific sequences and an effector domain that modifies epigenomic features. Currently, three major groups of DNA binding proteins have been predominantly used for epigenome editing: Zinc finger proteins, Transcription Activator-Like Effectors (TALEs) and nuclease deficient Cas9 fusions (CRISPR).

General concept

Comparing genome-wide epigenetic maps with gene expression has allowed researchers to assign either activating or repressing roles to specific modifications. The importance of DNA sequence in regulating the epigenome has been demonstrated by using DNA motifs to predict epigenomic modification. Further insights into mechanisms behind epigenetics have come from in vitro biochemical and structural analyses. Using model organisms, researchers have been able to describe the role of many chromatin factors through knockout studies. However, knocking out an entire chromatin modifier has massive effects on the entire genome, which may not be an accurate representation of its function in a specific context. As one example of this, DNA methylation occurs at repeat regions, promoters, enhancers, and gene bodies. Although DNA methylation at gene promoters typically correlates with gene repression, methylation at gene bodies is correlated with gene activation, and DNA methylation may also play a role in gene splicing. The ability to directly target and edit individual methylation sites is critical to determining the exact function of DNA methylation at a specific site. Epigenome editing is a powerful tool that allows this type of analysis. For site-specific DNA methylation editing as well as for histone editing, genome editing systems have been adapted into epigenome editing systems. In short, genome homing proteins with engineered or naturally occurring nuclease functions for gene editing can be mutated and adapted into pure delivery systems. An epigenetic modifying enzyme or domain can be fused to the homing protein, and local epigenetic modifications can be altered upon protein recruitment.

Targeting proteins

TALE

The Transcription Activator-Like Effector (TALE) protein recognizes specific DNA sequences based on the composition of its DNA binding domain. This allows the researcher to construct different TALE proteins to recognize a target DNA sequence by editing the TALE's primary protein structure. The binding specificity of this protein is then typically confirmed using Chromatin Immunoprecipitation (ChIP) and Sanger sequencing of the resulting DNA fragment. This confirmation is still required on all TALE sequence recognition research. When used for epigenome editing, these DNA binding proteins are attached to an effector protein. Effector proteins that have been used for this purpose include Ten-eleven translocation methylcytosine dioxygenase 1 (TET1), Lysine (K)-specific demethylase 1A (LSD1) and Calcium and integrin binding protein 1 (CIB1).

Zinc finger proteins

The use of zinc finger-fusion proteins to recognize sites for epigenome editing has been explored as well. Maeder et al. have constructed a ZF-TET1 protein for use in DNA demethylation. These zinc finger proteins work similarly to TALE proteins in that they are able to bind to sequence-specific sites on the DNA based on their protein structure, which can be modified. Chen et al. have successfully used a zinc finger DNA binding domain coupled with the TET1 protein to induce demethylation of several previously silenced genes. Kungulovski and Jeltsch successfully used ZFP-guided deposition of DNA methylation to cause gene silencing, but the DNA methylation and silencing were lost when the trigger signal stopped. The authors suggest that for stable epigenetic changes, there must be either multiple depositions of DNA methylation of related epigenetic marks, or long-lasting trigger stimuli. ZFP epigenetic editing has shown potential to treat various neurodegenerative diseases.

CRISPR-Cas

The Clustered Regularly Interspaced Short Palindromic Repeats (CRISPR)-Cas system functions as a DNA site-specific nuclease. In the well-studied type II CRISPR system, the Cas9 nuclease associates with a chimera composed of tracrRNA and crRNA. This chimera is frequently referred to as a guide RNA (gRNA). When the Cas9 protein associates with a DNA region-specific gRNA, the Cas9 cleaves DNA at targeted DNA loci. However, when the D10A and H840A point mutations are introduced, a catalytically dead Cas9 (dCas9) is generated that can bind DNA but will not cleave. The dCas9 system has been utilized for targeted epigenetic reprogramming in order to introduce site-specific DNA methylation. By fusing the DNMT3a catalytic domain with the dCas9 protein, dCas9-DNMT3a is capable of achieving targeted DNA methylation of the region specified by the guide RNA. Similarly, dCas9 has been fused with the catalytic core of the human acetyltransferase p300. dCas9-p300 successfully catalyzes targeted acetylation of histone H3 lysine 27.

A variant of CRISPR epigenome editing (called FIRE-Cas9) allows the changes made to be reversed if something goes wrong.

CRISPRoff is a dead Cas9 fusion protein that can be used to heritably silence the gene expression of "most genes" and allows for reversible modifications.

Commonly used effector proteins

TET1 induces demethylation of cytosine at CpG sites. This protein has been used to activate genes that are repressed by CpG methylation and to determine the role of individual CpG methylation sites. LSD1 induces the demethylation of H3K4me1/2, which also causes an indirect effect of deacetylation on H3K27. This effector can be used on histones in enhancer regions, which can change the expression of neighboring genes. CIB1 is a light-sensitive cryptochrome; this cryptochrome is fused to the TALE protein. A second protein contains an interaction partner (CRY2) fused with a chromatin/DNA modifier (e.g. SID4X). CRY2 is able to interact with CIB1 when the cryptochrome has been activated by illumination with blue light. The interaction allows the chromatin modifier to act on the desired location. This means that the modification can be performed in an inducible and reversible manner, which reduces long-term secondary effects that would be caused by constitutive epigenetic modification.

Applications

Studying enhancer function and activity

Editing of gene enhancer regions in the genome through targeted epigenetic modification has been demonstrated by Mendenhall et al. (2013). This study utilized a TALE-LSD1 effector fusion protein in order to target enhancers of genes, to induce enhancer silencing in order to deduce enhancer activity and gene control. Targeting specific enhancers followed by locus specific RT-qPCR allows for the genes affected by the silenced enhancer to be determined. Alternatively, inducing enhancer silencing in regions upstream of genes allows for gene expression to be altered. RT-qPCR can then be utilized to study effects of this on gene expression. This allows for enhancer function and activity to be studied in detail.

Determining the function of specific methylation sites

It is important to understand the role specific methylation sites play in regulating gene expression. To study this, one research group used a TALE-TET1 fusion protein to demethylate a single CpG methylation site. Although this approach requires many controls to ensure specific binding to target loci, a properly performed study using this approach can determine the biological function of a specific CpG methylation site.

Determining the role of epigenetic modifications directly

Epigenetic editing using an inducible mechanism offers a wide array of potential uses for studying epigenetic effects in various states. One research group employed an optogenetic two-hybrid system which integrated the sequence-specific TALE DNA-binding domain with a light-sensitive cryptochrome 2 protein (CIB1). Once expressed in the cells, the system was able to inducibly edit histone modifications and determine their function in a specific context.

Functional engineering

Targeted regulation of disease-related genes may enable novel therapies for many diseases, especially in cases where adequate gene therapies are not yet developed or are inappropriate. While transgenerational and population-level consequences are not fully understood, epigenome editing may become a major tool for applied functional genomics and personalized medicine. As with RNA editing, it does not involve genetic changes and their accompanying risks. One example of a potential functional use of epigenome editing was described in 2021: repressing Nav1.7 gene expression via CRISPR-dCas9, which showed therapeutic potential in three mouse models of chronic pain.

In 2022, research assessed its usefulness in reducing tau protein levels, regulating a protein involved in Huntington's disease, targeting an inherited form of obesity, and addressing Dravet syndrome.

Limitations

Sequence specificity is critically important in epigenome editing and must be carefully verified (this can be done using chromatin immunoprecipitation followed by Sanger sequencing to verify the targeted sequence). It is unknown whether the TALE fusion affects the catalytic activity of the epigenome modifier. This could be especially important in effector proteins that require multiple subunits and complexes, such as the Polycomb repressive complex. Proteins used for epigenome editing may obstruct ligands and substrates at the target site. The TALE protein itself may even compete with transcription factors if they are targeted to the same sequence. In addition, DNA repair systems could reverse the alterations on the chromatin and prevent the desired changes from being made. It is therefore necessary for fusion constructs and targeting mechanisms to be optimized for reliable and repeatable epigenome editing.
