Observational cosmology is the study of the structure, the evolution and the origin of the universe through observation, using instruments such as telescopes and cosmic ray detectors.

Early observations

The science of physical cosmology as it is practiced today had its subject material defined in the years following the Shapley-Curtis debate when it was determined that the universe had a larger scale than the Milky Way galaxy. This was precipitated by observations that established the size and the dynamics of the cosmos that could be explained by Albert Einstein's General Theory of Relativity. In its infancy, cosmology was a speculative science based on a very limited number of observations and characterized by a dispute between steady state theorists and promoters of Big Bang cosmology. It was not until the 1990s and beyond that the astronomical observations would be able to eliminate competing theories and drive the science to the "Golden Age of Cosmology" which was heralded by David Schramm at a National Academy of Sciences colloquium in 1992.

Hubble's law and the cosmic distance ladder

Distance measurements in astronomy have historically been, and continue to be, confounded by considerable measurement uncertainty. In particular, while stellar parallax can be used to measure the distance to nearby stars, the difficulty of measuring the minuscule parallaxes of objects beyond our galaxy meant that astronomers had to look for alternative ways to measure cosmic distances. To this end, a standard candle relation for Cepheid variables was discovered by Henrietta Swan Leavitt in 1908, which would provide Edwin Hubble with the rung on the cosmic distance ladder he needed to determine the distance to spiral nebulae. Hubble used the 100-inch Hooker Telescope at Mount Wilson Observatory to identify individual stars in those galaxies and determined the distances to the galaxies by isolating individual Cepheids. This firmly established the spiral nebulae as objects well outside the Milky Way galaxy. Determining the distance to "island universes", as they were dubbed in the popular media, established the scale of the universe and settled the Shapley-Curtis debate once and for all.
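
As a rough illustration of how a standard candle yields a distance, the sketch below applies the standard distance-modulus relation, m − M = 5 log10(d / 10 pc). The function name and the magnitudes used are hypothetical and are not Leavitt's or Hubble's actual data.

```python
import math

def distance_from_magnitudes(apparent_mag, absolute_mag):
    """Distance in parsecs from the distance modulus m - M = 5*log10(d / 10 pc)."""
    distance_modulus = apparent_mag - absolute_mag
    return 10.0 ** ((distance_modulus + 5.0) / 5.0)

# Hypothetical Cepheid: absolute magnitude -4 (from its period), observed at magnitude 19.
d_pc = distance_from_magnitudes(19.0, -4.0)
print(f"distance = {d_pc:.3e} pc")
```

The fainter a Cepheid of known intrinsic brightness appears, the larger the inferred distance: each additional 5 magnitudes in m − M corresponds to a factor of 10 in distance.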

In 1927, by combining various measurements, including Hubble's distance measurements and Vesto Slipher's determinations of redshifts for these objects, Georges Lemaître was the first to estimate a constant of proportionality between galaxies' distances and what were termed their "recessional velocities", finding a value of about 600 km/s/Mpc. He showed that this was theoretically expected in a universe model based on general relativity. Two years later, Hubble showed that distances and velocities were positively correlated, with a slope of about 500 km/s/Mpc. This correlation would come to be known as Hubble's law and would serve as the observational foundation for the expanding universe theories on which cosmology is still based. The publication of the observations by Slipher, Wirtz, Hubble and their colleagues, and the acceptance by theorists of their implications in light of Einstein's general theory of relativity, is considered the beginning of the modern science of cosmology.
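
The proportionality described above can be written as v = H0 · d. The following minimal sketch uses the two historical slopes quoted in the text; the function names are illustrative only.

```python
H0_LEMAITRE = 600.0  # km/s/Mpc, Lemaitre's 1927 estimate quoted in the text
H0_HUBBLE = 500.0    # km/s/Mpc, Hubble's slope quoted in the text

def recession_velocity(distance_mpc, h0):
    """Hubble's law: recessional velocity in km/s for a distance in Mpc."""
    return h0 * distance_mpc

def hubble_distance(velocity_km_s, h0):
    """Inverse of Hubble's law: distance in Mpc for a velocity in km/s."""
    return velocity_km_s / h0

# A galaxy receding at 1000 km/s would be placed at different distances
# depending on which value of the constant is adopted.
for label, h0 in (("Lemaitre", H0_LEMAITRE), ("Hubble", H0_HUBBLE)):
    print(label, hubble_distance(1000.0, h0), "Mpc")
```

Because the inferred distance scales as 1/H0, any revision of the constant rescales every distance derived from a velocity in the same proportion.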

Nuclide abundances

Determination of the cosmic abundance of elements has a history dating back to early spectroscopic measurements of light from astronomical objects and the identification of emission and absorption lines which corresponded to particular electronic transitions in chemical elements identified on Earth. For example, the element helium was first identified through its spectroscopic signature in the Sun before it was isolated as a gas on Earth.

Relative abundances were computed by comparing spectroscopic observations with measurements of the elemental composition of meteorites.

Detection of the cosmic microwave background

A cosmic microwave background was predicted in 1948 by George Gamow and Ralph Alpher, and by Alpher and Robert Herman, as a consequence of the hot Big Bang model. Moreover, Alpher and Herman were able to estimate the temperature, but their results were not widely discussed in the community. Their prediction was rediscovered by Robert Dicke and Yakov Zel'dovich in the early 1960s, and the first published recognition of the CMB radiation as a detectable phenomenon appeared in a brief paper by Soviet astrophysicists A. G. Doroshkevich and Igor Novikov in the spring of 1964. In 1964, David Todd Wilkinson and Peter Roll, Dicke's colleagues at Princeton University, began constructing a Dicke radiometer to measure the cosmic microwave background. In 1965, Arno Penzias and Robert Woodrow Wilson at the Crawford Hill location of Bell Telephone Laboratories in nearby Holmdel Township, New Jersey, had built a Dicke radiometer that they intended to use for radio astronomy and satellite communication experiments. Their instrument had an excess 3.5 K antenna temperature which they could not account for. After receiving a telephone call from Crawford Hill, Dicke famously quipped: "Boys, we've been scooped." A meeting between the Princeton and Crawford Hill groups determined that the antenna temperature was indeed due to the microwave background. Penzias and Wilson received the 1978 Nobel Prize in Physics for their discovery.

Modern observations

Today, observational cosmology continues to test the predictions of theoretical cosmology and has led to the refinement of cosmological models. For example, the observational evidence for dark matter has heavily influenced theoretical modeling of structure and galaxy formation. When trying to calibrate the Hubble diagram with accurate supernova standard candles, observational evidence for dark energy was obtained in the late 1990s. These observations have been incorporated into a six-parameter framework known as the Lambda-CDM model which explains the evolution of the universe in terms of its constituent material. This model has subsequently been verified by detailed observations of the cosmic microwave background, especially through the WMAP experiment.

Included here are the modern observational efforts that have directly influenced cosmology.

Redshift surveys

With the advent of automated telescopes and improvements in spectroscopes, a number of collaborations have been formed to map the universe in redshift space. By combining redshift with angular position data, a redshift survey maps the 3D distribution of matter within a field of the sky. These observations are used to measure properties of the large-scale structure of the universe. The Great Wall, a vast supercluster of galaxies over 500 million light-years wide, provides a dramatic example of a large-scale structure that redshift surveys can detect.
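
The combination of redshift and angular position can be sketched as a coordinate conversion. The example below is a simplified illustration, not the pipeline of any actual survey: it assumes the low-redshift approximation d ≈ cz/H0, adopts the Planck 2015 value of the Hubble constant quoted elsewhere in this article, and ignores peculiar velocities. The function name and the sample galaxy are hypothetical.

```python
import math

C_KM_S = 299792.458  # speed of light in km/s
H0 = 67.74           # km/s/Mpc, assumed value for this sketch

def redshift_to_cartesian(ra_deg, dec_deg, z, h0=H0):
    """Map (right ascension, declination, redshift) to Cartesian (x, y, z) in Mpc.

    Uses d = c*z/h0, which is only a reasonable approximation for z << 1.
    """
    distance = C_KM_S * z / h0
    ra = math.radians(ra_deg)
    dec = math.radians(dec_deg)
    x = distance * math.cos(dec) * math.cos(ra)
    y = distance * math.cos(dec) * math.sin(ra)
    z_cart = distance * math.sin(dec)
    return x, y, z_cart

# Hypothetical galaxy at RA = 150 deg, Dec = 2 deg, z = 0.02.
print(redshift_to_cartesian(150.0, 2.0, 0.02))
```

At higher redshifts the linear relation breaks down and a distance computed from a full cosmological model would be needed instead.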

The first redshift survey was the CfA Redshift Survey, started in 1977 with the initial data collection completed in 1982. More recently, the 2dF Galaxy Redshift Survey determined the large-scale structure of one section of the Universe, measuring z-values for over 220,000 galaxies; data collection was completed in 2002, and the final data set was released 30 June 2003. (In addition to mapping large-scale patterns of galaxies, 2dF established an upper limit on neutrino mass.) Another notable investigation, the Sloan Digital Sky Survey (SDSS), is ongoing as of 2011 and aims to obtain measurements on around 100 million objects. SDSS has recorded redshifts for galaxies as high as 0.4, and has been involved in the detection of quasars beyond z = 6. The DEEP2 Redshift Survey uses the Keck telescopes with the new "DEIMOS" spectrograph; a follow-up to the pilot program DEEP1, DEEP2 is designed to measure faint galaxies with redshifts 0.7 and above, and it is therefore planned to provide a complement to SDSS and 2dF.

Cosmic microwave background experiments

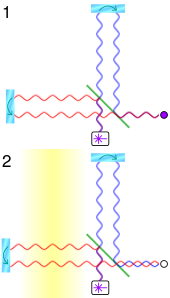

Subsequent to the discovery of the CMB, hundreds of cosmic microwave background experiments have been conducted to measure and characterize the signatures of the radiation. The most famous experiment is probably the NASA Cosmic Background Explorer (COBE) satellite that orbited in 1989–1996 and which detected and quantified the large scale anisotropies at the limit of its detection capabilities. Inspired by the initial COBE results of an extremely isotropic and homogeneous background, a series of ground- and balloon-based experiments quantified CMB anisotropies on smaller angular scales over the next decade. The primary goal of these experiments was to measure the angular scale of the first acoustic peak, for which COBE did not have sufficient resolution. These measurements were able to rule out cosmic strings as the leading theory of cosmic structure formation, and suggested cosmic inflation was the right theory.

During the 1990s, the first peak was measured with increasing sensitivity and by 2000 the BOOMERanG experiment reported that the highest power fluctuations occur at scales of approximately one degree. Together with other cosmological data, these results implied that the geometry of the universe is flat. A number of ground-based interferometers provided measurements of the fluctuations with higher accuracy over the next three years, including the Very Small Array, Degree Angular Scale Interferometer (DASI), and the Cosmic Background Imager (CBI). DASI made the first detection of the polarization of the CMB and the CBI provided the first E-mode polarization spectrum with compelling evidence that it is out of phase with the T-mode spectrum.
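
Angular scales on the sky are often expressed as multipole moments of the power spectrum. A common rule of thumb, used here as an approximation rather than an exact relation, is ℓ ≈ 180°/θ. The sketch below applies it to the one-degree scale mentioned above; the function name is illustrative.

```python
def approx_multipole(theta_deg):
    """Rule-of-thumb multipole for an angular scale in degrees: l ~ 180 / theta."""
    if theta_deg <= 0:
        raise ValueError("angular scale must be positive")
    return 180.0 / theta_deg

# Fluctuations on scales of about one degree correspond to multipoles of order 200.
ell_one_degree = approx_multipole(1.0)
print(ell_one_degree)
```

Smaller angular scales map to larger multipoles, which is why measuring the first peak required finer resolution than COBE could provide.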

In June 2001, NASA launched a second CMB space mission, WMAP, to make much more precise measurements of the large scale anisotropies over the full sky. WMAP used symmetric, rapid-multi-modulated scanning, rapid switching radiometers to minimize non-sky signal noise. The first results from this mission, disclosed in 2003, were detailed measurements of the angular power spectrum at a scale of less than one degree, tightly constraining various cosmological parameters. The results are broadly consistent with those expected from cosmic inflation as well as various other competing theories, and are available in detail at NASA's data bank for Cosmic Microwave Background (CMB). Although WMAP provided very accurate measurements of the large scale angular fluctuations in the CMB (structures about as broad in the sky as the moon), it did not have the angular resolution to measure the smaller scale fluctuations which had been observed by earlier ground-based interferometers.

A third space mission, the ESA (European Space Agency) Planck Surveyor, was launched in May 2009 and performed an even more detailed investigation until it was shut down in October 2013. Planck employed both HEMT radiometers and bolometer technology and measured the CMB at a smaller scale than WMAP. Its detectors were trialled in the Antarctic Viper telescope as the ACBAR (Arcminute Cosmology Bolometer Array Receiver) experiment, which has produced the most precise measurements at small angular scales to date, and in the Archeops balloon telescope.

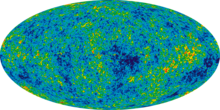

On 21 March 2013, the European-led research team behind the Planck cosmology probe released the mission's all-sky map of the cosmic microwave background. The map suggests the universe is slightly older than researchers expected. According to the map, subtle fluctuations in temperature were imprinted on the deep sky when the cosmos was about 370,000 years old. The imprint reflects ripples that arose as early in the existence of the universe as the first nonillionth of a second. Apparently, these ripples gave rise to the present vast cosmic web of galaxy clusters and dark matter. Based on the 2013 data, the universe contains 4.9% ordinary matter, 26.8% dark matter and 68.3% dark energy. On 5 February 2015, new data was released by the Planck mission, according to which the age of the universe is 13.799±0.021 billion years and the Hubble constant is 67.74±0.46 (km/s)/Mpc.
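
As a consistency check on these figures, the sketch below converts the quoted Hubble constant into a Hubble time, 1/H0, and sums the 2013 composition percentages. The Hubble time is only a characteristic timescale, not the age of the universe itself, which depends on the full expansion history; the unit conversion constants and names are supplied here for illustration.

```python
KM_PER_MPC = 3.0856775814913673e19  # kilometres in one megaparsec
SECONDS_PER_GYR = 3.15576e16        # seconds in one billion Julian years

def hubble_time_gyr(h0_km_s_mpc):
    """Return the Hubble time 1/H0 in billions of years for H0 in km/s/Mpc."""
    h0_per_second = h0_km_s_mpc / KM_PER_MPC
    return 1.0 / h0_per_second / SECONDS_PER_GYR

t_hubble = hubble_time_gyr(67.74)   # Planck 2015 value quoted in the text
print(f"Hubble time: {t_hubble:.2f} Gyr")

# 2013 composition quoted in the text, in percent.
budget = {"ordinary matter": 4.9, "dark matter": 26.8, "dark energy": 68.3}
total_percent = sum(budget.values())
print(f"Total: {total_percent:.1f}%")
```

The Hubble time comes out somewhat longer than the quoted age of 13.799 billion years, as expected for a timescale that does not account for how the expansion rate has changed over time.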

Additional ground-based instruments such as the South Pole Telescope in Antarctica and the proposed Clover Project, Atacama Cosmology Telescope and the QUIET telescope in Chile will provide additional data not available from satellite observations, possibly including the B-mode polarization.

Telescope observations

Radio

The brightest sources of low-frequency radio emission (between 10 MHz and 100 GHz) are radio galaxies, which can be observed out to extremely high redshifts. These are subsets of the active galaxies that have extended features known as lobes and jets, which extend away from the galactic nucleus to distances on the order of megaparsecs. Because radio galaxies are so bright, astronomers have used them to probe extreme distances and early times in the evolution of the universe.

Infrared

Far infrared observations, including submillimeter astronomy, have revealed a number of sources at cosmological distances. With the exception of a few atmospheric windows, most infrared light is blocked by the atmosphere, so the observations generally take place from balloon or space-based instruments. Current observational experiments in the infrared include NICMOS, the Cosmic Origins Spectrograph, the Spitzer Space Telescope, the Keck Interferometer, the Stratospheric Observatory For Infrared Astronomy, and the Herschel Space Observatory. The next large space telescope planned by NASA, the James Webb Space Telescope, will also explore in the infrared.

An additional infrared survey, the Two-Micron All Sky Survey, has also been very useful in revealing the distribution of galaxies, similar to other optical surveys described below.

Optical rays (visible to human eyes)

Optical light is still the primary means by which astronomy occurs, and in the context of cosmology, this means observing distant galaxies and galaxy clusters in order to learn about the large scale structure of the Universe as well as galaxy evolution. Redshift surveys have been a common means by which this has been accomplished with some of the most famous including the 2dF Galaxy Redshift Survey, the Sloan Digital Sky Survey, and the upcoming Large Synoptic Survey Telescope. These optical observations generally use either photometry or spectroscopy to measure the redshift of a galaxy and then, via Hubble's Law, determine its distance modulo redshift distortions due to peculiar velocities. Additionally, the position of the galaxies as seen on the sky in celestial coordinates can be used to gain information about the other two spatial dimensions.

Very deep observations (which is to say sensitive to dim sources) are also useful tools in cosmology. The Hubble Deep Field, Hubble Ultra Deep Field, Hubble Extreme Deep Field, and Hubble Deep Field South are all examples of this.

Ultraviolet

X-rays

See X-ray astronomy.

Gamma-rays

See Gamma-ray astronomy.

Cosmic ray observations

Future observations

Cosmic neutrinos

It is a prediction of the Big Bang model that the universe is filled with a neutrino background radiation, analogous to the cosmic microwave background radiation. The microwave background is a relic from when the universe was about 380,000 years old, but the neutrino background is a relic from when the universe was about two seconds old.

If this neutrino radiation could be observed, it would be a window into very early stages of the universe. Unfortunately, these neutrinos would now be very cold, and so they are effectively impossible to observe directly.
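
To give a sense of how cold these neutrinos would be, the sketch below uses the standard Big Bang result that the neutrino background temperature is (4/11)^(1/3) times the photon temperature. Neither this relation nor the present-day CMB temperature of about 2.7255 K is stated in the text above; both are supplied here as assumptions for the example.

```python
T_CMB_K = 2.7255               # assumed present-day CMB temperature in kelvin
K_B_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K

def neutrino_background_temperature(t_cmb_k=T_CMB_K):
    """Standard-model estimate: T_nu = (4/11)**(1/3) * T_cmb."""
    return (4.0 / 11.0) ** (1.0 / 3.0) * t_cmb_k

t_nu = neutrino_background_temperature()
energy_ev = K_B_EV_PER_K * t_nu  # characteristic thermal energy k*T in eV
print(f"T_nu = {t_nu:.3f} K, kT = {energy_ev:.2e} eV")
```

Under these assumptions the characteristic energy is of order 10^-4 eV, far below the energies at which neutrinos are usually detected, which illustrates why direct observation is so difficult.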