From Wikipedia, the free encyclopedia

https://en.wikipedia.org/wiki/Ensemble_forecasting

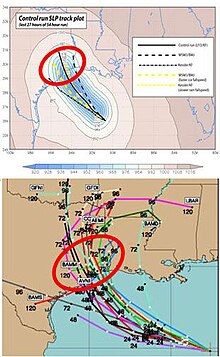

Ensemble forecasting is a method used in numerical weather prediction. Instead of making a single forecast of the most likely weather, a set (or ensemble) of forecasts is produced. This set of forecasts aims to give an indication of the range of possible future states of the atmosphere. Ensemble forecasting is a form of Monte Carlo analysis. The multiple simulations are conducted to account for the two usual sources of uncertainty in forecast models: (1) the errors introduced by the use of imperfect initial conditions, amplified by the chaotic nature of the evolution equations of the atmosphere, which is often referred to as sensitive dependence on initial conditions; and (2) errors introduced because of imperfections in the model formulation, such as the approximate mathematical methods used to solve the equations. Ideally, the verified future atmospheric state should fall within the predicted ensemble spread, and the amount of spread should be related to the uncertainty (error) of the forecast. In general, this approach can be used to make probabilistic forecasts of any dynamical system, not just for weather prediction.

Instances

Today ensemble predictions are commonly made at most of the major operational weather prediction facilities worldwide, including:

- National Centers for Environmental Prediction (NCEP of the US)

- European Centre for Medium-Range Weather Forecasts (ECMWF)

- United Kingdom Met Office

- Météo-France

- Environment Canada

- Japan Meteorological Agency

- Bureau of Meteorology (Australia)

- China Meteorological Administration (CMA)

- Korea Meteorological Administration

- CPTEC (Brazil)

- Ministry of Earth Sciences (IMD, IITM & NCMRWF) (India)

Experimental ensemble forecasts are made at a number of universities, such as the University of Washington, and ensemble forecasts in the US are also generated by the US Navy and Air Force. There are various ways of viewing the data, such as spaghetti plots, ensemble means or postage stamps, in which a number of different results from the model runs can be compared.

History

As proposed by Edward Lorenz in 1963, it is impossible for long-range forecasts (those made more than two weeks in advance) to predict the state of the atmosphere with any degree of skill, owing to the chaotic nature of the fluid dynamics equations involved. Furthermore, existing observation networks have limited spatial and temporal resolution (for example, over large bodies of water such as the Pacific Ocean), which introduces uncertainty into the true initial state of the atmosphere. While a set of equations, known as the Liouville equations, exists to determine the initial uncertainty in the model initialization, the equations are too complex to run in real time, even with the use of supercomputers. The practical importance of ensemble forecasts derives from the fact that in a chaotic and hence nonlinear system, the rate of growth of forecast error is dependent on starting conditions. An ensemble forecast therefore provides a prior estimate of state-dependent predictability, i.e. an estimate of the types of weather that might occur, given inevitable uncertainties in the forecast initial conditions and in the accuracy of the computational representation of the equations. These uncertainties limit forecast model accuracy to about six days into the future. The first operational ensemble forecasts were produced for sub-seasonal timescales in 1985. However, it was realised that the philosophy underpinning such forecasts was also relevant on shorter timescales, where predictions had previously been made by purely deterministic means.

Edward Epstein recognized in 1969 that the atmosphere could not be completely described with a single forecast run due to inherent uncertainty, and proposed a stochastic dynamic model that produced means and variances for the state of the atmosphere. Although these Monte Carlo simulations showed skill, in 1974 Cecil Leith revealed that they produced adequate forecasts only when the ensemble probability distribution was a representative sample of the probability distribution in the atmosphere. It was not until 1992 that ensemble forecasts began being prepared by the European Centre for Medium-Range Weather Forecasts (ECMWF) and the National Centers for Environmental Prediction (NCEP).

Methods for representing uncertainty

There are two main sources of uncertainty that must be accounted for when making an ensemble weather forecast: initial condition uncertainty and model uncertainty.

Initial condition uncertainty

Initial condition uncertainty arises due to errors in the estimate of the starting conditions for the forecast, both due to limited observations of the atmosphere, and uncertainties involved in using indirect measurements, such as satellite data, to measure the state of atmospheric variables. Initial condition uncertainty is represented by perturbing the starting conditions between the different ensemble members. This explores the range of starting conditions consistent with our knowledge of the current state of the atmosphere, together with its past evolution. There are a number of ways to generate these initial condition perturbations. The ECMWF model, the Ensemble Prediction System (EPS), uses a combination of singular vectors and an ensemble of data assimilations (EDA) to simulate the initial probability density. The singular vector perturbations are more active in the extra-tropics, while the EDA perturbations are more active in the tropics. The NCEP ensemble, the Global Ensemble Forecasting System, uses a technique known as vector breeding.
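
The idea of perturbing the starting conditions can be illustrated with a toy system. The sketch below is not the singular-vector, EDA or breeding method used operationally; it assumes simple independent Gaussian perturbations and uses the three-variable Lorenz-63 system as a stand-in for a forecast model. The function names, the perturbation size and the starting state are all illustrative choices.

```python
import random
import statistics

def lorenz63_step(state, dt=0.01, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Advance the Lorenz-63 system by one fourth-order Runge-Kutta step."""
    def tendency(s):
        x, y, z = s
        return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)

    def shifted(s, k, h):
        return tuple(si + h * ki for si, ki in zip(s, k))

    k1 = tendency(state)
    k2 = tendency(shifted(state, k1, dt / 2))
    k3 = tendency(shifted(state, k2, dt / 2))
    k4 = tendency(shifted(state, k3, dt))
    return tuple(
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )

def run_ensemble(analysis, n_members=20, perturbation_std=0.01, n_steps=2000, seed=0):
    """Perturb the analysis once per member, then integrate every member forward."""
    rng = random.Random(seed)
    # Assumption: independent Gaussian noise on each variable of the analysis.
    members = [
        tuple(v + rng.gauss(0.0, perturbation_std) for v in analysis)
        for _ in range(n_members)
    ]
    spread_history = []
    for _ in range(n_steps):
        members = [lorenz63_step(m) for m in members]
        # Spread is measured here as the standard deviation of x across members.
        spread_history.append(statistics.pstdev([m[0] for m in members]))
    return members, spread_history

members, spread = run_ensemble(analysis=(1.0, 1.0, 1.0))
print(f"spread after first step: {spread[0]:.4f}, after last step: {spread[-1]:.4f}")
```

Because the toy system is chaotic, members that start almost identically end up far apart, which is the behaviour an initial-condition ensemble is designed to sample.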

Model uncertainty

Model uncertainty arises due to the limitations of the forecast model. The process of representing the atmosphere in a computer model involves many simplifications such as the development of parametrisation schemes, which introduce errors into the forecast. Several techniques to represent model uncertainty have been proposed.

Perturbed parameter schemes

When developing a parametrisation scheme, many new parameters are introduced to represent simplified physical processes. These parameters may be very uncertain. For example, the 'entrainment coefficient' represents the turbulent mixing of dry environmental air into a convective cloud, and so represents a complex physical process using a single number. In a perturbed parameter approach, uncertain parameters in the model's parametrisation schemes are identified and their value changed between ensemble members. While in probabilistic climate modelling, such as climateprediction.net, these parameters are often held constant globally and throughout the integration, in modern numerical weather prediction it is more common to stochastically vary the value of the parameters in time and space. The degree of parameter perturbation can be guided using expert judgement, or by directly estimating the degree of parameter uncertainty for a given model.
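
A minimal sketch of the perturbed parameter idea follows. It assumes a toy plume in which buoyancy decays exponentially with height at a rate set by a single entrainment coefficient, and it draws one fixed coefficient per member from a uniform range. The plume formula, the parameter range and the function names are illustrative assumptions, not taken from any operational scheme; a scheme that varies the parameter in time and space would redraw it during the integration.

```python
import math
import random

def parcel_buoyancy(height_m, entrainment_per_m, surface_buoyancy=1.0):
    """Toy plume: buoyancy decays exponentially as environmental air is mixed in."""
    return surface_buoyancy * math.exp(-entrainment_per_m * height_m)

def perturbed_parameter_ensemble(n_members, low, high, height_m, seed=0):
    """Give each member its own entrainment coefficient, fixed for the whole run."""
    rng = random.Random(seed)
    results = []
    for _ in range(n_members):
        entrainment = rng.uniform(low, high)  # hypothetical plausible range
        results.append((entrainment, parcel_buoyancy(height_m, entrainment)))
    return results

for entrainment, buoyancy in perturbed_parameter_ensemble(5, 1e-4, 1e-3, 2000.0):
    print(f"entrainment={entrainment:.2e} per m -> buoyancy at 2 km: {buoyancy:.3f}")
```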

Stochastic parametrisations

A traditional parametrisation scheme seeks to represent the average effect of the sub-grid-scale motion (e.g. convective clouds) on the resolved-scale state (e.g. the large-scale temperature and wind fields). A stochastic parametrisation scheme recognises that there may be many sub-grid-scale states consistent with a particular resolved-scale state. Instead of predicting the most likely sub-grid-scale motion, a stochastic parametrisation scheme represents one possible realisation of the sub-grid. It does this by including random numbers in the equations of motion, which samples from the probability distribution assigned to uncertain processes. Stochastic parametrisations have significantly improved the skill of weather forecasting models, and are now used in operational forecasting centres worldwide. Stochastic parametrisations were first developed at the European Centre for Medium-Range Weather Forecasts.
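
One way to picture "including random numbers in the equations of motion" is to multiply a parametrised tendency by a random factor that evolves in time. The sketch below is a toy under stated assumptions: a single temperature variable, a constant parametrised heating, and a red-noise (AR(1)) multiplier. It is loosely in the spirit of schemes that perturb parametrised tendencies, but the model, the coefficients and the function names are all hypothetical.

```python
import math
import random

def ar1_series(n_steps, phi, sigma, rng):
    """Red-noise series with lag-1 autocorrelation phi and stationary std sigma."""
    r = rng.gauss(0.0, sigma)
    series = []
    for _ in range(n_steps):
        series.append(r)
        r = phi * r + math.sqrt(1.0 - phi * phi) * rng.gauss(0.0, sigma)
    return series

def integrate(temp0, n_steps, dt, rng=None, phi=0.95, sigma=0.3):
    """Toy column: relaxation toward 15 (resolved) plus constant heating (parametrised).

    With rng=None the parametrised tendency is used unperturbed (deterministic run).
    """
    if rng is None:
        noise = [0.0] * n_steps
    else:
        noise = ar1_series(n_steps, phi, sigma, rng)
    temp = temp0
    for r in noise:
        resolved = -0.1 * (temp - 15.0)
        parametrised = 0.5
        # The random factor (1 + r) selects one possible sub-grid realisation.
        temp += dt * (resolved + (1.0 + r) * parametrised)
    return temp

rng = random.Random(1)
control = integrate(20.0, 200, 0.1)
members = [integrate(20.0, 200, 0.1, rng=rng) for _ in range(10)]
print("control:", round(control, 3))
print("members:", [round(m, 3) for m in members])
```

Each member sees a different noise sequence, so the ensemble spreads out even though every member starts from the same state.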

Multi-model ensembles

When many different forecast models are used to generate a forecast, the approach is termed multi-model ensemble forecasting. This method of forecasting can improve forecasts when compared to a single model-based approach. When the models within a multi-model ensemble are adjusted for their various biases, this process is known as "superensemble forecasting". This type of forecast significantly reduces errors in model output. When models of different physical processes are combined, such as combinations of atmospheric, ocean and wave models, the multi-model ensemble is called a hyper-ensemble.
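
The bias-adjustment step can be sketched in a few lines. The example below is a deliberate simplification: it removes each model's mean bias over a training period and then averages the corrected forecasts with equal weights, whereas published superensemble methods typically fit regression weights. The model names and numbers are made up for illustration.

```python
from statistics import fmean

def mean_bias(forecasts, observations):
    """Average forecast-minus-observation difference over a training period."""
    return fmean([f - o for f, o in zip(forecasts, observations)])

def bias_corrected_multimodel_mean(training, observations, new_forecasts):
    """Subtract each model's training-period bias, then average across models."""
    corrected = [
        value - mean_bias(training[name], observations)
        for name, value in new_forecasts.items()
    ]
    return fmean(corrected)

# Hypothetical training data: model A runs warm, model B runs cold.
training = {"model_a": [11.0, 12.0, 13.0], "model_b": [9.0, 10.0, 11.0]}
observations = [10.0, 11.0, 12.0]
combined = bias_corrected_multimodel_mean(
    training, observations, {"model_a": 16.0, "model_b": 14.0}
)
print(combined)
```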

Probability assessment

The ensemble forecast is usually evaluated by comparing the ensemble average of the individual forecasts for one forecast variable to the observed value of that variable (the "error"). This is combined with consideration of the degree of agreement between various forecasts within the ensemble system, as represented by their overall standard deviation or "spread". Ensemble spread can be visualised through tools such as spaghetti diagrams, which show the dispersion of one quantity on prognostic charts for specific time steps in the future. Another tool where ensemble spread is used is a meteogram, which shows the dispersion in the forecast of one quantity for one specific location. It is common for the ensemble spread to be too small, such that the observed atmospheric state falls outside of the ensemble forecast. This can lead the forecaster to be overconfident in their forecast. This problem becomes particularly severe for forecasts of the weather about 10 days in advance, particularly if model uncertainty is not accounted for in the forecast.
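
For a single forecast variable, the quantities described above reduce to a few summary statistics. The following sketch assumes the members are a plain list of values for one variable at one place and time; the function name and dictionary keys are illustrative.

```python
from statistics import fmean, stdev

def summarise_ensemble(members, observation):
    """Ensemble mean, spread, error of the mean, and an envelope check."""
    mean = fmean(members)
    return {
        "mean": mean,
        "spread": stdev(members),  # sample standard deviation across members
        "error": mean - observation,
        # True when the observation lies outside the range spanned by the members,
        # which happens too often if the ensemble spread is too small.
        "observation_outside_ensemble": not (min(members) <= observation <= max(members)),
    }

summary = summarise_ensemble([1.0, 2.0, 3.0, 4.0, 5.0], observation=6.5)
print(summary)
```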

Reliability and resolution (calibration and sharpness)

The spread of the ensemble forecast indicates how confident the forecaster can be in the prediction. When ensemble spread is small and the forecast solutions are consistent across multiple model runs, forecasters generally have more confidence in the forecast. When the spread is large, this indicates more uncertainty in the prediction. Ideally, a spread-skill relationship should exist, whereby the spread of the ensemble is a good predictor of the expected error in the ensemble mean. If the forecast is reliable, the observed state will behave as if it is drawn from the forecast probability distribution. Reliability (or calibration) can be evaluated by comparing the standard deviation of the error in the ensemble mean with the forecast spread: for a reliable forecast, the two should match, both at different forecast lead times and for different locations.
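
The spread-error comparison can be sketched as a ratio computed over many past cases. The example below assumes an unbiased ensemble, so the root-mean-square error of the ensemble mean stands in for the standard deviation of the error. The synthetic data generator is purely illustrative: it draws the observation and the members around a common centre, with `spread_factor` (a made-up knob) controlling whether the ensemble is well dispersed (1.0) or too narrow (below 1.0).

```python
import math
import random
from statistics import fmean, variance

def spread_skill_ratio(cases):
    """cases: list of (members, observation) pairs for one lead time.

    Returns RMS spread divided by RMSE of the ensemble mean; a value close
    to 1 is consistent with a reliable ensemble, below 1 with underdispersion.
    """
    squared_errors, variances = [], []
    for members, observation in cases:
        squared_errors.append((fmean(members) - observation) ** 2)
        variances.append(variance(members))
    return math.sqrt(fmean(variances)) / math.sqrt(fmean(squared_errors))

def synthetic_cases(n_cases, n_members, spread_factor, seed=0):
    """Generate toy cases; the observation always has unit uncertainty."""
    rng = random.Random(seed)
    cases = []
    for _ in range(n_cases):
        centre = rng.gauss(0.0, 1.0)
        observation = centre + rng.gauss(0.0, 1.0)
        members = [centre + rng.gauss(0.0, spread_factor) for _ in range(n_members)]
        cases.append((members, observation))
    return cases

reliable = spread_skill_ratio(synthetic_cases(2000, 20, spread_factor=1.0))
too_narrow = spread_skill_ratio(synthetic_cases(2000, 20, spread_factor=0.5))
print(f"well dispersed: {reliable:.2f}, underdispersive: {too_narrow:.2f}")
```

With a finite ensemble the ratio for a perfectly reliable system sits slightly below 1, because the ensemble mean itself carries sampling error.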

The reliability of forecasts of a specific weather event can also be assessed. For example, if 30 of 50 members indicated greater than 1 cm rainfall during the next 24 h, the probability of exceeding 1 cm could be estimated to be 60%. The forecast would be considered reliable if, considering all the situations in the past when a 60% probability was forecast, the rainfall actually exceeded 1 cm on 60% of those occasions. In practice, the probabilities generated from operational weather ensemble forecasts are not highly reliable, though with a set of past forecasts (reforecasts or hindcasts) and observations, the probability estimates from the ensemble can be adjusted to ensure greater reliability.
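
The 30-of-50 example and the reliability check can be written out directly. The sketch below assumes rainfall amounts in centimetres and a list of past forecast probabilities paired with whether the event occurred; the function names and the ten-bin grouping are illustrative choices.

```python
from collections import defaultdict

def event_probability(members, threshold):
    """Fraction of ensemble members exceeding the threshold."""
    return sum(1 for m in members if m > threshold) / len(members)

def reliability_table(forecast_probs, outcomes, n_bins=10):
    """Group past forecasts by probability and compare with observed frequency.

    Returns a list of (mean forecast probability, observed frequency, count),
    one entry per non-empty bin. For a reliable system the first two agree.
    """
    bins = defaultdict(list)
    for p, occurred in zip(forecast_probs, outcomes):
        index = min(int(p * n_bins), n_bins - 1)
        bins[index].append((p, occurred))
    table = []
    for index in sorted(bins):
        pairs = bins[index]
        mean_p = sum(p for p, _ in pairs) / len(pairs)
        frequency = sum(1 for _, occurred in pairs if occurred) / len(pairs)
        table.append((mean_p, frequency, len(pairs)))
    return table

# 30 of 50 members above 1 cm, as in the example in the text.
rain_cm = [2.0] * 30 + [0.5] * 20
probability = event_probability(rain_cm, threshold=1.0)
print(probability)

# Ten hypothetical past occasions on which 60% was forecast; rain fell on six.
table = reliability_table([0.6] * 10, [True] * 6 + [False] * 4)
print(table)
```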

Another desirable property of ensemble forecasts is resolution. This is an indication of how much the forecast deviates from the climatological event frequency; provided that the ensemble is reliable, increasing this deviation will increase the usefulness of the forecast. This forecast quality can also be considered in terms of sharpness, or how small the spread of the forecast is. The key aim of a forecaster should be to maximise sharpness, while maintaining reliability. Forecasts at long leads will inevitably not be particularly sharp (or have particularly high resolution), because the inevitable (albeit usually small) errors in the initial condition will grow with increasing forecast lead until the expected difference between two model states is as large as the difference between two random states from the forecast model's climatology.

Calibration of ensemble forecasts

If ensemble forecasts are to be used for predicting probabilities of observed weather variables they typically need calibration in order to create unbiased and reliable forecasts. For forecasts of temperature one simple and effective method of calibration is linear regression, often known in this context as model output statistics. The linear regression model takes the ensemble mean as a predictor for the real temperature, ignores the distribution of ensemble members around the mean, and predicts probabilities using the distribution of residuals from the regression. In this calibration setup the value of the ensemble in improving the forecast is then that the ensemble mean typically gives a better forecast than any single ensemble member would, and not because of any information contained in the width or shape of the distribution of the members in the ensemble around the mean. However, in 2004, a generalisation of linear regression (now known as Nonhomogeneous Gaussian regression) was introduced that uses a linear transformation of the ensemble spread to give the width of the predictive distribution, and it was shown that this can lead to forecasts with higher skill than those based on linear regression alone. This proved for the first time that information in the shape of the distribution of the members of an ensemble around the mean, in this case summarized by the ensemble spread, can be used to improve forecasts relative to linear regression. Whether or not linear regression can be beaten by using the ensemble spread in this way varies, depending on the forecast system, forecast variable and lead time.
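
The two calibration approaches can be contrasted in a short sketch. The first part fits an ordinary least-squares line from ensemble mean to observation and uses the residual standard deviation as a fixed predictive width, assuming Gaussian residuals. The second part only shows the form of a spread-dependent width, taken here as `c + d * spread` following the wording above (some formulations apply the linear form to the variance instead); its coefficients are hypothetical inputs, since fitting them requires an optimisation step that is not shown. All data values and function names are made up for illustration.

```python
import math
from statistics import NormalDist, fmean

def fit_linear_calibration(ensemble_means, observations):
    """Least-squares fit of observation on ensemble mean; returns (a, b, sigma)."""
    mx, my = fmean(ensemble_means), fmean(observations)
    sxx = sum((x - mx) ** 2 for x in ensemble_means)
    sxy = sum((x - mx) * (y - my) for x, y in zip(ensemble_means, observations))
    slope = sxy / sxx
    intercept = my - slope * mx
    residuals = [y - (intercept + slope * x) for x, y in zip(ensemble_means, observations)]
    # Residual standard deviation with two fitted parameters removed.
    sigma = math.sqrt(sum(r * r for r in residuals) / (len(residuals) - 2))
    return intercept, slope, sigma

def predictive_distribution(ensemble_mean, intercept, slope, sigma):
    """Linear regression: the width is constant and ignores the ensemble spread."""
    return NormalDist(intercept + slope * ensemble_mean, sigma)

def spread_dependent_distribution(ensemble_mean, ensemble_spread, a, b, c, d):
    """Spread-dependent width; c and d are assumed to have been fitted elsewhere."""
    return NormalDist(a + b * ensemble_mean, c + d * ensemble_spread)

def exceedance_probability(dist, threshold):
    """Probability that the calibrated variable exceeds the threshold."""
    return 1.0 - dist.cdf(threshold)

# Hypothetical training set of past ensemble-mean temperatures and observations.
a, b, sigma = fit_linear_calibration(
    [10.0, 12.0, 14.0, 16.0, 18.0], [11.1, 12.9, 15.2, 16.8, 19.0]
)
dist = predictive_distribution(15.0, a, b, sigma)
print(f"P(T > 16) = {exceedance_probability(dist, 16.0):.2f}")
```

In the regression-only version two forecasts with the same ensemble mean always receive the same uncertainty, whereas the spread-dependent version widens the distribution on days when the members disagree more.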

Predicting the size of forecast changes

In addition to being used to improve predictions of uncertainty, the ensemble spread can also be used as a predictor for the likely size of changes in the mean forecast from one forecast to the next. This works because, in some ensemble forecast systems, narrow ensembles tend to precede small changes in the mean, while wide ensembles tend to precede larger changes in the mean. This has applications in the trading industries, for which understanding the likely sizes of future forecast changes can be important.

Co-ordinated research

The Observing System Research and Predictability Experiment (THORPEX) is a 10-year international research and development programme to accelerate improvements in the accuracy of one-day to two-week high-impact weather forecasts for the benefit of society, the economy and the environment. It establishes an organizational framework that addresses weather research and forecast problems whose solutions will be accelerated through international collaboration among academic institutions, operational forecast centres and users of forecast products.

One of its key components is the THORPEX Interactive Grand Global Ensemble (TIGGE), a World Weather Research Programme project to accelerate improvements in the accuracy of one-day to two-week high-impact weather forecasts for the benefit of humanity. Centralized archives of ensemble model forecast data, from many international centers, are used to enable extensive data sharing and research.