The first systematic measurements of global direct irradiance at the Earth's surface began in the 1950s. A decline in irradiance was soon observed, and it was given the name global dimming. It continued from the 1950s until the 1980s, with an observed reduction of 4–5% per decade, even though solar activity did not vary more than usual at the time. Global dimming has instead been attributed to an increase in atmospheric particulate matter, predominantly sulfate aerosols, as the result of rapidly growing air pollution due to post-war industrialization. After the 1980s, global dimming started to reverse, alongside reductions in particulate emissions, in what has been described as global brightening, although this reversal is only considered "partial" for now. The reversal has also been globally uneven, as the dimming trend continued during the 1990s over some mostly developing countries like India, Zimbabwe, Chile and Venezuela. Over China, the dimming trend continued at a slower rate after 1990, and did not begin to reverse until around 2005.

Global dimming has interfered with the hydrological cycle by lowering evaporation, which is likely to have reduced rainfall in certain areas, and may have caused the observed southwards shift of the entire tropical rain belt between 1950 and 1985, with a limited recovery afterwards. Since high evaporation at the tropics is needed to drive the wet season, cooling caused by particulate pollution appears to weaken the Monsoon of South Asia, while reductions in pollution strengthen it. Multiple studies have also connected record levels of particulate pollution in the Northern Hemisphere to the monsoon failure behind the 1984 Ethiopian famine, although the full extent of anthropogenic vs. natural influences on that event is still disputed. On the other hand, global dimming has also counteracted some of the greenhouse gas emissions, effectively "masking" the total extent of global warming experienced to date, with the most-polluted regions even experiencing cooling in the 1970s. Conversely, global brightening has contributed to the acceleration of global warming which began in the 1990s.

In the near future, global brightening is expected to continue, as nations act to reduce the toll of air pollution on the health of their citizens. This also means that less of global warming would be masked in the future. Climate models are broadly capable of simulating the impact of aerosols like sulfates, and in the IPCC Sixth Assessment Report, they are believed to offset around 0.5 °C (0.90 °F) of warming. Likewise, climate change scenarios incorporate reductions in particulates and the cooling they offered into their projections, and this includes the scenarios for climate action required to meet 1.5 °C (2.7 °F) and 2 °C (3.6 °F) targets. It is generally believed that the cooling provided by global dimming is similar to the warming derived from atmospheric methane, meaning that simultaneous reductions in both would effectively cancel each other out. However, uncertainties remain about the models' representation of aerosol impacts on weather systems, especially over the regions with a poorer historical record of atmospheric observations.

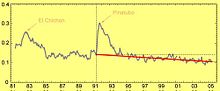

The processes behind global dimming are similar to those which drive reductions in direct sunlight after volcanic eruptions. In fact, the eruption of Mount Pinatubo in 1991 had temporarily reversed the brightening trend. Both processes are considered an analogue for stratospheric aerosol injection, a solar geoengineering intervention which aims to counteract global warming through intentional releases of reflective aerosols, albeit at much higher altitudes, where lower quantities would be needed and the polluting effects would be minimized. However, while that intervention may be very effective at stopping or reversing warming and its main consequences, it would also have substantial effects on the global hydrological cycle, as well as regional weather and ecosystems. Because its effects are only temporary, it would have to be maintained for centuries until the greenhouse gas concentrations are normalized to avoid a rapid and violent return of the warming, sometimes known as termination shock.

History

In the late 1960s, Mikhail Ivanovich Budyko worked with simple two-dimensional energy-balance climate models to investigate the reflectivity of ice. He found that the ice–albedo feedback created a positive feedback loop in the Earth's climate system: the more snow and ice there is, the more solar radiation is reflected back into space, and hence the colder the Earth grows and the more it snows. Other studies suggested that sulfate pollution or a volcanic eruption could provoke the onset of an ice age.

In the 1980s, research in Israel and the Netherlands revealed an apparent reduction in the amount of sunlight, and Atsumu Ohmura, a geography researcher at the Swiss Federal Institute of Technology, found that solar radiation striking the Earth's surface had declined by more than 10% over the three previous decades, even as the global temperature had been generally rising since the 1970s. In the 1990s, this was followed by papers describing multi-decade declines in Estonia, Germany and across the former Soviet Union, which prompted the researcher Gerry Stanhill to coin the term "global dimming". Subsequent research estimated an average reduction in sunlight striking the terrestrial surface of around 4–5% per decade over the late 1950s–1980s, and 2–3% per decade when the 1990s were included. Notably, solar radiation at the top of the atmosphere did not vary by more than 0.1–0.3% in all that time, strongly suggesting that the reasons for the dimming were on Earth. Additionally, only visible light and infrared radiation were dimmed, rather than the ultraviolet part of the spectrum.
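As a rough consistency check on these figures, a per-decade rate can be compounded over three decades. This is only an illustrative sketch: it assumes a constant rate applied multiplicatively over exactly three decades, which the underlying studies do not necessarily do, and the function name `cumulative_decline` is hypothetical.

```python
def cumulative_decline(rate_per_decade, decades):
    """Fractional loss after compounding a fixed per-decade decline.

    Assumption (not from the source): the decline compounds, i.e. each
    decade removes the same fraction of whatever sunlight remained.
    """
    return 1.0 - (1.0 - rate_per_decade) ** decades


# Rates quoted in the text: around 4-5% per decade.
for rate in (0.04, 0.05):
    loss = cumulative_decline(rate, 3)
    print(f"{rate:.0%} per decade over 3 decades -> {loss:.1%} total")
```

Under these assumptions, a decline of 4–5% per decade corresponds to a cumulative loss of roughly 11.5–14.3%, which is compatible with the "more than 10%" over three decades reported by Ohmura.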

Reversal

Starting from 2005, scientific papers began to report that after 1990, the global dimming trend had clearly switched to global brightening. This followed measures taken to combat air pollution by the developed nations, typically through flue-gas desulfurization installations at thermal power plants, such as wet scrubbers or fluidized bed combustion. In the United States, sulfate aerosols have declined significantly since 1970 with the passage of the Clean Air Act, which was strengthened in 1977 and 1990. According to the EPA, from 1970 to 2005, total emissions of the six principal air pollutants, including sulfates, dropped by 53% in the US. By 2010, this reduction in sulfate pollution led to estimated healthcare cost savings valued at $50 billion annually. Similar measures were taken in Europe, such as the 1985 Helsinki Protocol on the Reduction of Sulfur Emissions under the Convention on Long-Range Transboundary Air Pollution, and with similar improvements.

On the other hand, a 2009 review found that dimming continued in China after stabilizing in the 1990s and intensified in India, consistent with their continued industrialization, while the US, Europe, and South Korea continued to brighten. Evidence from Zimbabwe, Chile and Venezuela also pointed to continued dimming during that period, albeit at a lower confidence level due to the lower number of observations. Due to these contrasting trends, no statistically significant change had occurred on a global scale from 2001 to 2012. Post-2010 observations indicate that the global decline in aerosol concentrations, and with it in global dimming, continued, with pollution controls on the global shipping industry playing a substantial role in recent years. Since nearly 90% of the human population lives in the Northern Hemisphere, clouds there are far more affected by aerosols than in the Southern Hemisphere, but these differences have halved in the two decades since 2000, providing further evidence for the ongoing global brightening.

Causes

Global dimming has been widely attributed to the increased presence of aerosol particles in Earth's atmosphere, predominantly those of sulfates. While natural dust is also an aerosol with some impacts on climate, and volcanic eruptions considerably increase sulfate concentrations in the short term, these effects have been dwarfed by increases in sulfate emissions since the start of the Industrial Revolution. According to the IPCC First Assessment Report, the global human-caused emissions of sulfur into the atmosphere were less than 3 million tons per year in 1860, yet they increased to 15 million tons in 1900, 40 million tons in 1940 and about 80 million tons in 1980. This meant that the human-caused emissions became "at least as large" as all natural emissions of sulfur-containing compounds: the largest natural source, emissions of dimethyl sulfide from the ocean, was estimated at 40 million tons per year, while volcanic emissions were estimated at 10 million tons. Moreover, that was the average figure: according to the report, "in the industrialized regions of Europe and North America, anthropogenic emissions dominate over natural emissions by about a factor of ten or even more".
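The emission figures quoted above can be put side by side with a short calculation. The sketch below is illustrative only: it treats the 1860 value as exactly 3 million tons (the report says "less than 3", so the first growth rate is a lower bound), it counts only the two natural sources named in the text, and the helper `annual_growth` is a hypothetical name.

```python
# Anthropogenic sulfur emissions, million tons per year, as quoted in the text.
# 1860 is given as "less than 3", so 3 is used here as an upper bound.
anthropogenic = {1860: 3, 1900: 15, 1940: 40, 1980: 80}

# Only the two natural sources named in the text (million tons per year).
natural = {"dimethyl sulfide (ocean)": 40, "volcanoes": 10}


def annual_growth(start_value, end_value, years):
    """Average compound annual growth rate between two data points."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


years = sorted(anthropogenic)
for y0, y1 in zip(years, years[1:]):
    rate = annual_growth(anthropogenic[y0], anthropogenic[y1], y1 - y0)
    print(f"{y0}-{y1}: {rate:.1%} per year on average")

# Ratio of 1980 anthropogenic emissions to the two listed natural sources.
ratio_1980 = anthropogenic[1980] / sum(natural.values())
print(f"1980 anthropogenic / listed natural sources: {ratio_1980:.1f}")
```

With these inputs, the 1980 anthropogenic figure is about 1.6 times the sum of the two listed natural sources, which agrees with the report's description of human-caused emissions as "at least as large" as natural ones.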

Aerosols and other atmospheric particulates have direct and indirect effects on the amount of sunlight received at the surface. Directly, sulfate particles reflect almost all sunlight, like tiny mirrors. On the other hand, incomplete combustion of fossil fuels (such as diesel) and wood releases particles of black carbon (predominantly soot), which absorb solar energy and heat up, reducing the overall amount of sunlight received on the surface while also contributing to warming. Black carbon is an extremely small component of air pollution at land surface levels, yet it has a substantial heating effect on the atmosphere at altitudes above two kilometers (6,562 ft).

Indirectly, the pollutants affect the climate by acting as nuclei, meaning that water droplets in clouds coalesce around the particles. Increased pollution causes more particulates and thereby creates clouds consisting of a greater number of smaller droplets (that is, the same amount of water is spread over more droplets). The smaller droplets make clouds more reflective, so that more incoming sunlight is reflected back into space and less reaches the Earth's surface. This same effect also reflects radiation from below, trapping it in the lower atmosphere. In models, these smaller droplets also decrease rainfall. In the 1990s, experiments comparing the atmosphere over the northern and southern islands of the Maldives showed that the effect of macroscopic pollutants in the atmosphere at that time (blown south from India) caused about a 10% reduction in sunlight reaching the surface in the area under the Asian brown cloud – a much greater reduction than expected from the presence of the particles themselves. Prior to the research being undertaken, predictions were of a 0.5–1% effect from particulate matter; the variation from prediction may be explained by cloud formation with the particles acting as the focus for droplet creation.
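The statement that the same amount of water spread over more droplets yields a more reflective cloud can be illustrated with a simple scaling argument. The sketch below rests on idealized assumptions that are not taken from the source: all droplets have the same radius, the liquid water content and cloud thickness are fixed, and each droplet's extinction is proportional to its cross-sectional area. Under those assumptions, droplet radius scales as N^(-1/3) and cloud optical depth as N^(1/3), where N is the droplet number concentration. The function name `droplet_scaling` is hypothetical.

```python
def droplet_scaling(n_ratio):
    """Return (radius_ratio, optical_depth_ratio) for a change in droplet number.

    Idealized assumptions (not from the source): equal-sized droplets,
    fixed liquid water content and cloud thickness, and extinction
    proportional to droplet cross-section.

    Fixed water volume: N * r**3 = const     ->  r   ~ N**(-1/3)
    Optical depth:      tau ~ N * r**2       ->  tau ~ N**(1/3)
    """
    radius_ratio = n_ratio ** (-1.0 / 3.0)
    optical_depth_ratio = n_ratio ** (1.0 / 3.0)
    return radius_ratio, optical_depth_ratio


# Example: pollution doubles the number of droplets in the cloud.
radius_ratio, tau_ratio = droplet_scaling(2.0)
print(f"droplet radius changes by a factor of {radius_ratio:.2f}")
print(f"optical depth changes by a factor of {tau_ratio:.2f}")
```

In this idealized picture, doubling the droplet count shrinks each droplet to about 0.79 of its original radius while raising the optical depth by about 26%. Real clouds respond in more complex ways, since water content and thickness also change.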

Relationship to climate change

It has been understood for a long time that any effect on solar irradiance from aerosols would necessarily impact Earth's radiation balance. Reductions in atmospheric temperatures have already been observed after large volcanic eruptions such as the 1963 eruption of Mount Agung in Bali, 1982 El Chichón eruption in Mexico, 1985 Nevado del Ruiz eruption in Colombia and 1991 eruption of Mount Pinatubo in the Philippines. However, even the major eruptions only result in temporary jumps of sulfur particles, unlike the more sustained increases caused by the anthropogenic pollution. In 1990, the IPCC First Assessment Report acknowledged that "Human-made aerosols, from sulphur emitted largely in fossil fuel combustion can modify clouds and this may act to lower temperatures", while "a decrease in emissions of sulphur might be expected to increase global temperatures". However, lack of observational data and difficulties in calculating indirect effects on clouds left the report unable to estimate whether the total impact of all anthropogenic aerosols on the global temperature amounted to cooling or warming. By 1995, the IPCC Second Assessment Report had confidently assessed the overall impact of aerosols as negative (cooling); however, aerosols were recognized as the largest source of uncertainty in future projections in that report and the subsequent ones.

At the peak of global dimming, it was able to counteract the warming trend completely, but by 1975, the continually increasing concentrations of greenhouse gases had overcome the masking effect, and they have dominated ever since. Even then, regions with high concentrations of sulfate aerosols due to air pollution had initially experienced cooling, in contradiction to the overall warming trend. The eastern United States was a prominent example: the temperatures there declined by 0.7 °C (1.3 °F) between 1970 and 1980, and by up to 1 °C (1.8 °F) in Arkansas and Missouri. As the sulfate pollution was reduced, the central and eastern United States experienced warming of 0.3 °C (0.54 °F) between 1980 and 2010, even as sulfate particles still accounted for around 25% of all particulates. By 2021, the northeastern coast of the United States was instead one of the fastest-warming regions of North America, as the slowdown of the Atlantic Meridional Overturning Circulation increased temperatures in that part of the North Atlantic Ocean.

Globally, the emergence of extreme heat beyond the preindustrial records was delayed by aerosol cooling, and hot extremes accelerated as global dimming abated: it has been estimated that since the mid-1990s, peak daily temperatures in northeast Asia and hottest days of the year in Western Europe would have been substantially less hot if aerosol concentrations had stayed the same as before. In Europe, the declines in aerosol concentrations since the 1980s had also reduced the associated fog, mist and haze: altogether, it was responsible for about 10–20% of daytime warming across Europe, and about 50% of the warming over the more polluted Eastern Europe. Because aerosol cooling depends on reflecting sunlight, air quality improvements had a negligible impact on wintertime temperatures, but had increased temperatures from April to September by around 1 °C (1.8 °F) in Central and Eastern Europe. Some of the acceleration of sea level rise, as well as Arctic amplification and the associated Arctic sea ice decline, was also attributed to the reduction in aerosol masking.

Pollution from black carbon, mostly represented by soot, also contributes to global dimming. However, because it absorbs heat instead of reflecting it, it warms the planet instead of cooling it like sulfates. This warming is much weaker than that of greenhouse gases, but it can be regionally significant when black carbon is deposited over ice masses like mountain glaciers and the Greenland ice sheet, where it reduces their albedo and increases their absorption of solar radiation. Even the indirect effect of soot particles acting as cloud nuclei is not strong enough to provide cooling: the "brown clouds" formed around soot particles were known to have a net warming effect since the 2000s. Black carbon pollution is particularly strong over India, and as the result, it is considered to be one of the few regions where cleaning up air pollution would reduce, rather than increase, warming.

Since changes in aerosol concentrations already have an impact on the global climate, they would necessarily influence future projections as well. In fact, it is impossible to fully estimate the warming impact of all greenhouse gases without accounting for the counteracting cooling from aerosols. Climate models started to account for the effects of sulfate aerosols around the IPCC Second Assessment Report; when the IPCC Fourth Assessment Report was published in 2007, every climate model had integrated sulfates, but only 5 were able to account for less impactful particulates like black carbon. By 2021, CMIP6 models estimated total aerosol cooling in the range from 0.1 °C (0.18 °F) to 0.7 °C (1.3 °F); the IPCC Sixth Assessment Report selected the best estimate of a 0.5 °C (0.90 °F) cooling provided by sulfate aerosols, while black carbon amounts to about 0.1 °C (0.18 °F) of warming. While these values are based on combining model estimates with observational constraints, including those on ocean heat content, the matter is not yet fully settled. The difference between model estimates mainly stems from disagreements over the indirect effects of aerosols on clouds. While it is well known that aerosols increase the number of cloud droplets and this makes the clouds more reflective, calculating how liquid water path, an important cloud property, is affected by their presence is far more challenging, as it involves computationally heavy continuous calculations of evaporation and condensation within clouds. Climate models generally assume that aerosols increase liquid water path, which makes the clouds even more reflective.

However, satellite observations taken in the 2010s suggested that aerosols decreased liquid water path instead, and in 2018, this was reproduced in a model which integrated more complex cloud microphysics. Yet, 2019 research found that earlier satellite observations were biased by failing to account for the thickest, most water-heavy clouds naturally raining more and shedding more particulates: very strong aerosol cooling was seen when comparing clouds of the same thickness. Moreover, large-scale observations can be confounded by changes in other atmospheric factors, like humidity: for example, it was found that while post-1980 improvements in air quality would have reduced the number of clouds over the East Coast of the United States by around 20%, this was offset by the increase in relative humidity caused by atmospheric response to AMOC slowdown. Similarly, while the initial research looking at sulfates from the 2014–2015 eruption of Bárðarbunga found that they caused no change in liquid water path, it was later suggested that this finding was confounded by counteracting changes in humidity. To avoid confounders, many observations of aerosol effects focus on ship tracks, but post-2020 research found that visible ship tracks are a poor proxy for other clouds, and estimates derived from them overestimate aerosol cooling by as much as 200%. At the same time, other research found that the majority of ship tracks are "invisible" to satellites, meaning that the earlier research had underestimated aerosol cooling by overlooking them. Finally, 2023 research indicates that all climate models have underestimated sulfur emissions from volcanoes which occur in the background, outside of major eruptions, and so had consequently overestimated the cooling provided by anthropogenic aerosols, especially in the Arctic climate.

Regardless of the current strength of aerosol cooling, all future climate change scenarios project decreases in particulates and this includes the scenarios where 1.5 °C (2.7 °F) and 2 °C (3.6 °F) targets are met: their specific emission reduction targets assume the need to make up for lower dimming. Since models estimate that the cooling caused by sulfates is largely equivalent to the warming caused by atmospheric methane (and since methane is a relatively short-lived greenhouse gas), it is believed that simultaneous reductions in both would effectively cancel each other out. Yet, in recent years, methane concentrations have been increasing at rates exceeding their previous period of peak growth in the 1980s, with wetland methane emissions driving much of the recent growth, while air pollution is getting cleaned up aggressively. These trends are some of the main reasons why 1.5 °C (2.7 °F) warming is now expected around 2030, as opposed to the mid-2010s estimates where it would not occur until 2040.

It has also been suggested that aerosols are not given sufficient attention in regional risk assessments, in spite of being more influential on a regional scale than globally. For instance, a climate change scenario with high greenhouse gas emissions but strong reductions in air pollution would see 0.2 °C (0.36 °F) more global warming by 2050 than the same scenario with little improvement in air quality, but regionally, the difference would add 5 more tropical nights per year in northern China and substantially increase precipitation in northern China and northern India. Likewise, a paper comparing current level of clean air policies with a hypothetical maximum technically feasible action under otherwise the same climate change scenario found that the latter would increase the risk of temperature extremes by 30–50% in China and in Europe. Unfortunately, because historical records of aerosols are sparser in some regions than in others, accurate regional projections of aerosol impacts are difficult. Even the latest CMIP6 climate models can only accurately represent aerosol trends over Europe, but struggle with representing North America and Asia, meaning that their near-future projections of regional impacts are likely to contain errors as well.

Aircraft contrails and lockdowns

In general, aircraft contrails (also called vapor trails) are believed to trap outgoing longwave radiation emitted by the Earth and atmosphere more than they reflect incoming solar radiation, resulting in a net increase in radiative forcing. In 1992, this warming effect was estimated between 3.5 mW/m2 and 17 mW/m2. The global radiative forcing impact of aircraft contrails has been calculated from reanalysis data, climate models, and radiative transfer codes: it was estimated at 12 mW/m2 for 2005, with an uncertainty range of 5 to 26 mW/m2, and with a low level of scientific understanding. Contrail cirrus may be air traffic's largest radiative forcing component, larger than all CO2 accumulated from aviation, and could triple from a 2006 baseline to 160–180 mW/m2 by 2050 without intervention. For comparison, the total radiative forcing from human activities amounted to 2.72 W/m2 (with a range between 1.96 and 3.48 W/m2) in 2019, and the increase from 2011 to 2019 alone amounted to 0.34 W/m2.
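Because the contrail figures are quoted in milliwatts and the total in watts per square metre, a quick unit conversion helps to compare them. The sketch below is only indicative: it divides estimates made for different years (2005 and 2050) by the 2019 total, which is a simplification, and the helper name `share_of_total` is hypothetical.

```python
# Total anthropogenic radiative forcing in 2019, W/m2, as quoted in the text.
TOTAL_FORCING_2019_W_M2 = 2.72


def share_of_total(forcing_mw_m2, total_w_m2=TOTAL_FORCING_2019_W_M2):
    """Express a forcing given in mW/m2 as a fraction of a total given in W/m2."""
    return (forcing_mw_m2 / 1000.0) / total_w_m2


# Contrail-related estimates quoted in the text, in mW/m2.
estimates_mw_m2 = {
    "2005 contrails, best estimate": 12,
    "2005 contrails, low end": 5,
    "2005 contrails, high end": 26,
    "2050 contrail cirrus, low end": 160,
    "2050 contrail cirrus, high end": 180,
}

for label, value in estimates_mw_m2.items():
    print(f"{label}: {share_of_total(value):.2%} of the 2019 total")
```

On this rough comparison, the 2005 best estimate is under half a percent of the 2019 total, while the projected 2050 contrail cirrus forcing would be around 6% of it.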

Contrail effects differ considerably depending on when they are formed, as they decrease the daytime temperature and increase the nighttime temperature, reducing their difference. In 2006, it was estimated that night flights contribute 60 to 80% of contrail radiative forcing while accounting for 25% of daily air traffic, and winter flights contribute half of the annual mean radiative forcing while accounting for 22% of annual air traffic. Starting from the 1990s, it was suggested that contrails during daytime have a strong cooling effect, and when combined with the warming from night-time flights, this would lead to a substantial change in diurnal temperature variation (the difference in the day's highs and lows at a fixed station). When no commercial aircraft flew across the USA following the September 11 attacks, the diurnal temperature variation was widened by 1.1 °C (2.0 °F). Measured across 4,000 weather stations in the continental United States, this increase was the largest recorded in 30 years. Without contrails, the local diurnal temperature range was 1 °C (1.8 °F) higher than immediately before. In the southern US, the difference was diminished by about 3.3 °C (6 °F), and by 2.8 °C (5 °F) in the US midwest. However, follow-up studies found that a natural change in cloud cover can more than explain these findings. The authors of a 2008 study wrote, "The variations in high cloud cover, including contrails and contrail-induced cirrus clouds, contribute weakly to the changes in the diurnal temperature range, which is governed primarily by lower altitude clouds, winds, and humidity."

A 2011 study of British meteorological records taken during World War II identified one event where the temperature was 0.8 °C (1.4 °F) higher than the day's average near airbases used by USAAF strategic bombers after they flew in a formation, although the authors cautioned that it was a single event.

The global response to the 2020 coronavirus pandemic led to a reduction in global air traffic of nearly 70% relative to 2019. Thus, it provided an extended opportunity to study the impact of contrails on regional and global temperature. Multiple studies found "no significant response of diurnal surface air temperature range" as the result of contrail changes, and either "no net significant global ERF" (effective radiative forcing) or a very small warming effect. On the other hand, the decline in sulfate emissions caused by the curtailed road traffic and industrial output during the COVID-19 lockdowns did have a detectable warming impact: it was estimated to have increased global temperatures by 0.01–0.02 °C (0.018–0.036 °F) initially and up to 0.03 °C (0.054 °F) by 2023, before disappearing. Regionally, the lockdowns were estimated to increase temperatures by 0.05–0.15 °C (0.090–0.270 °F) in eastern China over January–March, and then by 0.04–0.07 °C (0.072–0.126 °F) over Europe, eastern United States, and South Asia in March–May, with the peak impact of 0.3 °C (0.54 °F) in some regions of the United States and Russia. In the city of Wuhan, the urban heat island effect was found to have decreased by 0.24 °C (0.43 °F) at night and by 0.12 °C (0.22 °F) overall during the strictest lockdowns.

Relationship to hydrological cycle

On regional and global scales, air pollution can affect the water cycle, in a manner similar to some natural processes. One example is the impact of Sahara dust on hurricane formation: air laden with sand and mineral particles moves over the Atlantic Ocean, where the particles block some of the sunlight from reaching the water surface, slightly cooling it and dampening the development of hurricanes. Likewise, it has been suggested since the early 2000s that since aerosols decrease solar radiation over the ocean and hence reduce evaporation from it, they would be "spinning down the hydrological cycle of the planet." In 2011, it was found that anthropogenic aerosols had been the predominant factor behind 20th century changes in rainfall over the Atlantic Ocean sector, when the entire tropical rain belt shifted southwards between 1950 and 1985, with a limited northwards shift afterwards. Future reductions in aerosol emissions are expected to result in a more rapid northwards shift, with limited impact in the Atlantic but a substantially greater impact in the Pacific.

Most notably, multiple studies connect aerosols from the Northern Hemisphere to the failed monsoon in sub-Saharan Africa during the 1970s and 1980s, which then led to the Sahel drought and the associated famine. However, model simulations of Sahel climate are very inconsistent, so it is difficult to prove that the drought would not have occurred without aerosol pollution, although it would have clearly been less severe. Some research indicates that those models which demonstrate warming alone driving strong precipitation increases in the Sahel are the most accurate, making it more likely that sulfate pollution was to blame for overpowering this response and sending the region into drought.

Another dramatic finding had connected the impact of aerosols with the weakening of the Monsoon of South Asia. It was first advanced in 2006, yet it also remained difficult to prove. In particular, some research suggested that warming itself increases the risk of monsoon failure, potentially pushing it past a tipping point. By 2021, however, it was concluded that global warming consistently strengthened the monsoon, and some strengthening was already observed in the aftermath of lockdown-caused aerosol reductions.

In 2009, an analysis of 50 years of data found that light rains had decreased over eastern China, even though there was no significant change in the amount of water held by the atmosphere. This was attributed to aerosols reducing droplet size within clouds, which led to those clouds retaining water for a longer time without raining. The phenomenon of aerosols suppressing rainfall through reducing cloud droplet size has been confirmed by subsequent studies. Later research found that aerosol pollution over South and East Asia did not just suppress rainfall there, but also resulted in more moisture transferred to Central Asia, where summer rainfall had increased as the result. The IPCC Sixth Assessment Report has also linked changes in aerosol concentrations to altered precipitation in the Mediterranean region.

Solar geoengineering

An increase in planetary albedo of 1% would eliminate most of radiative forcing from anthropogenic greenhouse gas emissions and thereby global warming, while a 2% albedo increase would negate the warming effect of doubling the atmospheric carbon dioxide concentration. This is the theory behind solar geoengineering, and the high reflective potential of sulfate aerosols means that they were considered in this capacity for a long time. In 1974, Mikhail Budyko suggested that if global warming became a problem, the planet could be cooled by burning sulfur in the stratosphere, which would create a haze. This approach would simply send the sulfates to the troposphere – the lowest part of the atmosphere. Using it today would be equivalent to more than reversing the decades of air quality improvements, and the world would face the same issues which prompted the introduction of those regulations in the first place, such as acid rain. The suggestion of relying on tropospheric global dimming to curb warming has been described as a "Faustian bargain" and is not seriously considered by modern research.

Instead, starting with the seminal 2006 paper by Paul Crutzen, the solution advocated is known as stratospheric aerosol injection, or SAI. It would transport sulfates into the next higher layer of the atmosphere, the stratosphere, where they would last for years instead of weeks, so far less sulfur would have to be emitted. It has been estimated that the amount of sulfur needed to offset a warming of around 4 °C (7.2 °F) relative to now (and 5 °C (9.0 °F) relative to the preindustrial), under the highest-emission scenario RCP 8.5, would be less than what is already emitted through air pollution today, and that reductions in sulfur pollution from future air quality improvements already expected under that scenario would offset the sulfur used for geoengineering. The trade-off is increased cost. While there is a popular narrative that stratospheric aerosol injection can be carried out by individuals, small states, or other non-state rogue actors, scientific estimates suggest that cooling the atmosphere by 1 °C (1.8 °F) through stratospheric aerosol injection would cost at least $18 billion annually (at 2020 USD value), meaning that only the largest economies or economic blocs could afford this intervention. Even so, these approaches would still be "orders of magnitude" cheaper than greenhouse gas mitigation, let alone the costs of unmitigated effects of climate change.

The main downside to SAI is that any such cooling would still cease 1–3 years after the last aerosol injection, while the warming from CO2 emissions lasts for hundreds to thousands of years unless they are reversed earlier. This means that neither stratospheric aerosol injection nor other forms of solar geoengineering can be used as a substitute for reducing greenhouse gas emissions, because if solar geoengineering were to cease while greenhouse gas levels remained high, it would lead to "large and extremely rapid" warming and similarly abrupt changes to the water cycle. Many thousands of species would likely go extinct as the result. Instead, any solar geoengineering would act as a temporary measure to limit warming while emissions of greenhouse gases are reduced and carbon dioxide is removed, which may well take hundreds of years.

Other risks include limited knowledge about the regional impacts of solar geoengineering (beyond the certainty that even stopping or reversing the warming entirely would still result in significant changes in weather patterns in many areas) and, correspondingly, the impacts on ecosystems. It is generally believed that relative to now, crop yields and carbon sinks would be largely unaffected or may even increase slightly, because reduced photosynthesis due to lower sunlight would be offset by the CO2 fertilization effect and the reduction in thermal stress, but there is less confidence about how specific ecosystems may be affected. Moreover, stratospheric aerosol injection is likely to somewhat increase mortality from skin cancer due to the weakened ozone layer, but it would also reduce mortality from ground-level ozone, with the net effect unclear. Changes in precipitation are also likely to shift the habitat of mosquitoes and thus substantially affect the distribution and spread of vector-borne diseases, with currently unclear consequences.

$$T(\varphi,\lambda,t)=T_{\infty}\{1+\Delta T_{2}^{0}P_{2}^{0}(\varphi)+\Delta T_{1}^{0}P_{1}^{0}(\varphi)\cos[\omega_{a}(t-t_{a})]+\Delta T_{1}^{1}P_{1}^{1}(\varphi)\cos(\tau-\tau_{d})+\cdots\}$$