From Wikipedia, the free encyclopedia

Computer science is the scientific and practical approach to computation and its applications. It is the systematic study of the feasibility, structure, expression, and mechanization of the methodical procedures (or algorithms) that underlie the acquisition, representation, processing, storage, communication of, and access to information, whether such information is encoded as bits in a computer memory or transcribed in genes and protein structures in a biological cell.[1] An alternate, more succinct definition of computer science is the study of automating algorithmic processes that scale. A computer scientist specializes in the theory of computation and the design of computational systems.[2]

Its subfields can be divided into a variety of theoretical and practical disciplines. Some fields, such as computational complexity theory (which explores the fundamental properties of computational and intractable problems), are highly abstract, while fields such as computer graphics emphasize real-world visual applications. Still other fields focus on the challenges in implementing computation. For example, programming language theory considers various approaches to the description of computation, while the study of computer programming itself investigates various aspects of the use of programming languages and complex systems. Human–computer interaction considers the challenges in making computers and computations useful, usable, and universally accessible to humans.

They typically also teach computer programming, but treat it as a vessel for the support of other fields of computer science rather than a central focus of high-level study. The ACM/IEEE-CS Joint Curriculum Task Force "Computing Curriculum 2005" (and 2008 update)[48] gives a guideline for university curriculum.

Other colleges and universities, as well as secondary schools and vocational programs that teach computer science, emphasize the practice of advanced programming rather than the theory of algorithms and computation in their computer science curricula. Such curricula tend to focus on those skills that are important to workers entering the software industry. The process aspects of computer programming are often referred to as software engineering.

While computer science professions increasingly drive the U.S. economy, computer science education is absent in most American K-12 curricula. A report entitled "Running on Empty: The Failure to Teach K-12 Computer Science in the Digital Age" was released in October 2010 by the Association for Computing Machinery (ACM) and the Computer Science Teachers Association (CSTA), and revealed that only 14 states have adopted significant education standards for high school computer science. The report also found that only nine states count high school computer science courses as a core academic subject in their graduation requirements. In tandem with "Running on Empty", a new non-partisan advocacy coalition, Computing in the Core (CinC), was founded to influence federal and state policy, such as the Computer Science Education Act, which calls for grants to states to develop plans for improving computer science education and supporting computer science teachers.

Within the United States, a gender gap in computer science education has been observed as well. Research conducted by the WGBH Educational Foundation and the Association for Computing Machinery (ACM) revealed that more than twice as many high school boys as high school girls considered computer science to be a "very good" or "good" college major.[49] In addition, the high school Advanced Placement (AP) exam for computer science has displayed a disparity in gender. Compared to other AP subjects it has the lowest number of female participants, with a composition of about 15 percent women.[50] This gender gap in computer science is further witnessed at the college level, where 31 percent of undergraduate computer science degrees are earned by women and only 8 percent of computer science faculty consists of women.[51] According to an article published by the Epistemic Games Group in August 2012, the number of women graduates in the computer science field has declined to 13 percent.[52]

A 2014 Mother Jones article, "We Can Code It", advocates for adding computer literacy and coding to the K-12 curriculum in the United States, and notes that computer science is not incorporated into the requirements for the Common Core State Standards Initiative.[53]

History

The earliest foundations of what would become computer science predate the invention of the modern digital computer. Machines for calculating fixed numerical tasks such as the abacus have existed since antiquity, aiding in computations such as multiplication and division. Further, algorithms for performing computations have existed since antiquity, even before sophisticated computing equipment was created. The ancient Sanskrit treatise Shulba Sutras, or "Rules of the Chord", is a book of algorithms written in 800 BCE for constructing geometric objects like altars using a peg and chord, an early precursor of the modern field of computational geometry.

Blaise Pascal designed and constructed the first working mechanical calculator, Pascal's calculator, in 1642.[3] In 1673, Gottfried Leibniz demonstrated a digital mechanical calculator, called the 'Stepped Reckoner'.[4] He may be considered the first computer scientist and information theorist, for, among other reasons, documenting the binary number system. In 1820, Thomas de Colmar launched the mechanical calculator industry[5] when he released his simplified arithmometer, which was the first calculating machine strong enough and reliable enough to be used daily in an office environment. Charles Babbage started the design of the first automatic mechanical calculator, his difference engine, in 1822, which eventually gave him the idea of the first programmable mechanical calculator, his Analytical Engine.[6] He started developing this machine in 1834 and "in less than two years he had sketched out many of the salient features of the modern computer. A crucial step was the adoption of a punched card system derived from the Jacquard loom"[7] making it infinitely programmable.[8] In 1843, during the translation of a French article on the Analytical Engine, Ada Lovelace wrote, in one of the many notes she included, an algorithm to compute the Bernoulli numbers, which is considered to be the first computer program.[9]

Around 1885, Herman Hollerith invented the tabulator, which used punched cards to process statistical information; eventually his company became part of IBM. In 1937, one hundred years after Babbage's impossible dream, Howard Aiken convinced IBM, which was making all kinds of punched card equipment and was also in the calculator business,[10] to develop his giant programmable calculator, the ASCC/Harvard Mark I, based on Babbage's Analytical Engine, which itself used cards and a central computing unit. When the machine was finished, some hailed it as "Babbage's dream come true".[11]

During the 1940s, as new and more powerful computing machines were developed, the term computer came to refer to the machines rather than their human predecessors.[12] As it became clear that computers could be used for more than just mathematical calculations, the field of computer science broadened to study computation in general. Computer science began to be established as a distinct academic discipline in the 1950s and early 1960s.[13][14] The world's first computer science degree program, the Cambridge Diploma in Computer Science, began at the University of Cambridge Computer Laboratory in 1953. The first computer science degree program in the United States was formed at Purdue University in 1962.[15] Since practical computers became available, many applications of computing have become distinct areas of study in their own right.

Although many initially believed it was impossible that computers themselves could actually be a scientific field of study, in the late fifties it gradually became accepted among the greater academic population.[16] It is the now well-known IBM brand that formed part of the computer science revolution during this time. IBM (short for International Business Machines) released the IBM 704[17] and later the IBM 709[18] computers, which were widely used during the exploration period of such devices. "Still, working with the IBM [computer] was frustrating ... if you had misplaced as much as one letter in one instruction, the program would crash, and you would have to start the whole process over again".[16] During the late 1950s, the computer science discipline was very much in its developmental stages, and such issues were commonplace.

Over time, the usability and effectiveness of computing technology have improved significantly. The user base has shifted as well, from experts and professionals only to a near-ubiquitous one. Initially, computers were quite costly, and some degree of human aid was needed for efficient use, in part from professional computer operators. As computers became more affordable and more widely adopted, less human assistance was needed for common usage.

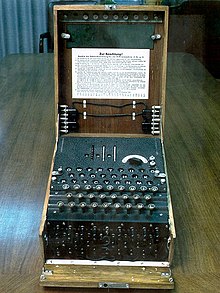

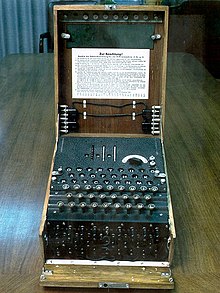

The German military used the Enigma machine (shown here) during World War II for communication they thought to be secret. The large-scale decryption of Enigma traffic at Bletchley Park was an important factor that contributed to Allied victory in WWII.[19]

Despite its short history as a formal academic discipline, computer science has made a number of fundamental contributions to science and society - in fact, along with electronics, it is a founding science of the current epoch of human history called the Information Age and a driver of the Information Revolution, seen as the third major leap in human technological progress after the Industrial Revolution (1750-1850 CE) and the Agricultural Revolution (8000-5000 BCE).

These contributions include:

- The start of the "digital revolution", which includes the current Information Age and the Internet.[20]

- A formal definition of computation and computability, and proof that there are computationally unsolvable and intractable problems.[21]

- The concept of a programming language, a tool for the precise expression of methodological information at various levels of abstraction.[22]

- In cryptography, breaking the Enigma code was an important factor contributing to the Allied victory in World War II.[19]

- Scientific computing enabled practical evaluation of processes and situations of great complexity, as well as experimentation entirely by software. It also enabled advanced study of the mind, and mapping of the human genome became possible with the Human Genome Project.[20] Distributed computing projects such as Folding@home explore protein folding.

- Algorithmic trading has increased the efficiency and liquidity of financial markets by using artificial intelligence, machine learning, and other statistical and numerical techniques on a large scale.[23] High frequency algorithmic trading can also exacerbate volatility.[24]

- Computer graphics and computer-generated imagery have become ubiquitous in modern entertainment, particularly in television, cinema, advertising, animation and video games. Even films that feature no explicit CGI are usually "filmed" now on digital cameras, or edited or postprocessed using a digital video editor.[citation needed]

- Simulation of various processes, including computational fluid dynamics, physical, electrical, and electronic systems and circuits, as well as societies and social situations (notably war games) along with their habitats, among many others. Modern computers enable optimization of such designs as complete aircraft. Notable in electrical and electronic circuit design are SPICE, as well as software for physical realization of new (or modified) designs. The latter includes essential design software for integrated circuits.[citation needed]

- Artificial intelligence is becoming increasingly important as it gets more efficient and complex. There are many applications of AI, some of which can be seen at home, such as robotic vacuum cleaners. It is also present in video games and on the modern battlefield in drones, anti-missile systems, and squad support robots.

Philosophy

A number of computer scientists have argued for the distinction of three separate paradigms in computer science. Peter Wegner argued that those paradigms are science, technology, and mathematics.[25] Peter Denning's working group argued that they are theory, abstraction (modeling), and design.[26] Amnon H. Eden described them as the "rationalist paradigm" (which treats computer science as a branch of mathematics, which is prevalent in theoretical computer science, and mainly employs deductive reasoning), the "technocratic paradigm" (which might be found in engineering approaches, most prominently in software engineering), and the "scientific paradigm" (which approaches computer-related artifacts from the empirical perspective of natural sciences, identifiable in some branches of artificial intelligence).[27]

Name of the field

The term "computer science" appears in a 1959 article in Communications of the ACM,[28] in which Louis Fein argues for the creation of a Graduate School in Computer Sciences analogous to the creation of Harvard Business School in 1921,[29] justifying the name by arguing that, like management science, the subject is applied and interdisciplinary in nature, while having the characteristics typical of an academic discipline.[30] His efforts, and those of others such as numerical analyst George Forsythe, were rewarded: universities went on to create such programs, starting with Purdue in 1962.[31]

Despite its name, a significant amount of computer science does not involve the study of computers themselves. Because of this, several alternative names have been proposed.[32] Certain departments of major universities prefer the term computing science, to emphasize precisely that difference. Danish scientist Peter Naur suggested the term datalogy,[33] to reflect the fact that the scientific discipline revolves around data and data treatment, while not necessarily involving computers. The first scientific institution to use the term was the Department of Datalogy at the University of Copenhagen, founded in 1969, with Peter Naur being the first professor in datalogy. The term is used mainly in the Scandinavian countries. Also, in the early days of computing, a number of terms for the practitioners of the field of computing were suggested in the Communications of the ACM – turingineer, turologist, flow-charts-man, applied meta-mathematician, and applied epistemologist.[34] Three months later in the same journal, comptologist was suggested, followed next year by hypologist.[35] The term computics has also been suggested.[36] In Europe, terms derived from contracted translations of the expression "automatic information" (e.g. "informazione automatica" in Italian) or "information and mathematics" are often used, e.g. informatique (French), Informatik (German), informatica (Italy, The Netherlands), informática (Spain, Portugal), informatika (Slavic languages and Hungarian) or pliroforiki (πληροφορική, which means informatics) in Greek. Similar words have also been adopted in the UK (as in the School of Informatics of the University of Edinburgh).[37]

A folkloric quotation, often attributed to—but almost certainly not first formulated by—Edsger Dijkstra, states that "computer science is no more about computers than astronomy is about telescopes."[note 1] The design and deployment of computers and computer systems is generally considered the province of disciplines other than computer science. For example, the study of computer hardware is usually considered part of computer engineering, while the study of commercial computer systems and their deployment is often called information technology or information systems. However, there has been much cross-fertilization of ideas between the various computer-related disciplines. Computer science research also often intersects other disciplines, such as philosophy, cognitive science, linguistics, mathematics, physics, biology, statistics, and logic.

Computer science is considered by some to have a much closer relationship with mathematics than many scientific disciplines, with some observers saying that computing is a mathematical science.[13] Early computer science was strongly influenced by the work of mathematicians such as Kurt Gödel and Alan Turing, and there continues to be a useful interchange of ideas between the two fields in areas such as mathematical logic, category theory, domain theory, and algebra.

The relationship between computer science and software engineering is a contentious issue, which is further muddied by disputes over what the term "software engineering" means, and how computer science is defined.[38] David Parnas, taking a cue from the relationship between other engineering and science disciplines, has claimed that the principal focus of computer science is studying the properties of computation in general, while the principal focus of software engineering is the design of specific computations to achieve practical goals, making the two separate but complementary disciplines.[39]

The academic, political, and funding aspects of computer science tend to depend on whether a department formed with a mathematical emphasis or with an engineering emphasis. Computer science departments with a mathematics emphasis and with a numerical orientation consider alignment with computational science. Both types of departments tend to make efforts to bridge the field educationally if not across all research.

Areas of computer science

As a discipline, computer science spans a range of topics from theoretical studies of algorithms and the limits of computation to the practical issues of implementing computing systems in hardware and software.[40][41] CSAB, formerly called Computing Sciences Accreditation Board – which is made up of representatives of the Association for Computing Machinery (ACM), and the IEEE Computer Society (IEEE-CS)[42] – identifies four areas that it considers crucial to the discipline of computer science: theory of computation, algorithms and data structures, programming methodology and languages, and computer elements and architecture. In addition to these four areas, CSAB also identifies fields such as software engineering, artificial intelligence, computer networking and telecommunications, database systems, parallel computation, distributed computation, computer-human interaction, computer graphics, operating systems, and numerical and symbolic computation as being important areas of computer science.[40]

Theoretical computer science

The broader field of theoretical computer science encompasses both the classical theory of computation and a wide range of other topics that focus on the more abstract, logical, and mathematical aspects of computing.

Theory of computation

According to Peter J. Denning, the fundamental question underlying computer science is, "What can be (efficiently) automated?"[13] The study of the theory of computation is focused on answering fundamental questions about what can be computed and what amount of resources are required to perform those computations. In an effort to answer the first question, computability theory examines which computational problems are solvable on various theoretical models of computation. The second question is addressed by computational complexity theory, which studies the time and space costs associated with different approaches to solving a multitude of computational problems. The famous "P=NP?" problem, one of the Millennium Prize Problems,[43] is an open problem in the theory of computation.

| Automata theory | Computability theory | Computational complexity theory | Cryptography | Quantum computing theory |
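The gap between checking an answer and finding one, which lies behind the "P=NP?" question, can be illustrated with the subset-sum problem. The following is only a minimal sketch: the function names `verify` and `brute_force_search` and the sample numbers are illustrative assumptions, not part of the source. Checking a proposed subset takes time proportional to its size, whereas the naive search shown here may examine up to 2^n subsets.

```python
from itertools import combinations


def verify(numbers, target, certificate):
    """Check a proposed answer: a tuple of distinct, valid indices whose values sum to target."""
    indices = list(certificate)
    return (
        len(set(indices)) == len(indices)
        and all(0 <= i < len(numbers) for i in indices)
        and sum(numbers[i] for i in indices) == target
    )


def brute_force_search(numbers, target):
    """Try every subset of indices, smallest first; up to 2**n candidates."""
    n = len(numbers)
    for size in range(n + 1):
        for subset in combinations(range(n), size):
            if verify(numbers, target, subset):
                return subset
    return None


numbers = [3, 34, 4, 12, 5, 2]  # illustrative input
print(brute_force_search(numbers, 9))  # indices of a subset summing to 9
```

Whether every problem whose answers can be verified quickly can also be solved quickly is exactly what remains open; the sketch shows only that the obvious search strategy is far more expensive than verification.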

Information and coding theory

Information theory is related to the quantification of information. This was developed by Claude E. Shannon to find fundamental limits on signal processing operations such as compressing data and on reliably storing and communicating data.[44] Coding theory is the study of the properties of codes (systems for converting information from one form to another) and their fitness for a specific application. Codes are used for data compression, cryptography, error detection and correction, and more recently also for network coding. Codes are studied for the purpose of designing efficient and reliable data transmission methods.

Algorithms and data structures

Algorithms and data structures is the study of commonly used computational methods and their computational efficiency.

| Analysis of algorithms | Algorithms | Data structures | Combinatorial optimization | Computational geometry |
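As a small illustration of what "computational efficiency" means in practice, the sketch below counts the comparisons made by two textbook ways of finding a value in a sorted list. The function names and the sample data are assumptions chosen for the example. Linear search may inspect every element, while binary search halves the remaining range at each step.

```python
def linear_search(items, target):
    """Scan left to right; return (index, comparisons), with index -1 if absent."""
    steps = 0
    for index, value in enumerate(items):
        steps += 1
        if value == target:
            return index, steps
    return -1, steps


def binary_search(items, target):
    """Search a sorted list by halving the range; return (index, comparisons)."""
    lo, hi, steps = 0, len(items) - 1, 0
    while lo <= hi:
        steps += 1
        mid = (lo + hi) // 2
        if items[mid] == target:
            return mid, steps
        if items[mid] < target:
            lo = mid + 1
        else:
            hi = mid - 1
    return -1, steps


data = list(range(0, 2000, 2))  # 1000 sorted even numbers (illustrative)
print(linear_search(data, 1998))
print(binary_search(data, 1998))
```

On 1000 elements, the linear scan needs up to 1000 comparisons and the binary search at most 10, which is the kind of difference the analysis of algorithms makes precise.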

Programming language theory

Programming language theory is a branch of computer science that deals with the design, implementation, analysis, characterization, and classification of programming languages and their individual features. It falls within the discipline of computer science, both depending on and affecting mathematics, software engineering and linguistics. It is an active research area, with numerous dedicated academic journals.

| Type theory | Compiler design | Programming languages |

Formal methods

Formal methods are a particular kind of mathematically based technique for the specification, development and verification of software and hardware systems. The use of formal methods for software and hardware design is motivated by the expectation that, as in other engineering disciplines, performing appropriate mathematical analysis can contribute to the reliability and robustness of a design. They form an important theoretical underpinning for software engineering, especially where safety or security is involved. Formal methods are a useful adjunct to software testing since they help avoid errors and can also give a framework for testing. For industrial use, tool support is required. However, the high cost of using formal methods means that they are usually only used in the development of high-integrity and life-critical systems, where safety or security is of utmost importance. Formal methods are best described as the application of a fairly broad variety of theoretical computer science fundamentals, in particular logic calculi, formal languages, automata theory, and program semantics, but also type systems and algebraic data types to problems in software and hardware specification and verification.

Applied computer science

Applied computer science aims at identifying computer science concepts that can be used directly in solving real-world problems.

Artificial intelligence

This branch of computer science aims to synthesise goal-orientated processes such as problem-solving, decision-making, environmental adaptation, learning and communication found in humans and animals. From its origins in cybernetics and in the Dartmouth Conference (1956), artificial intelligence (AI) research has been necessarily cross-disciplinary, drawing on areas of expertise such as applied mathematics, symbolic logic, semiotics, electrical engineering, philosophy of mind, neurophysiology, and social intelligence. AI is associated in the popular mind with robotic development, but the main field of practical application has been as an embedded component in areas of software development that require computational understanding and modeling, such as finance and economics, data mining, and the physical sciences.[citation needed] The starting point in the late 1940s was Alan Turing's question "Can computers think?", and the question remains effectively unanswered, although the "Turing Test" is still used to assess computer output on the scale of human intelligence. But the automation of evaluative and predictive tasks has been increasingly successful as a substitute for human monitoring and intervention in domains of computer application involving complex real-world data.

Computer architecture and engineering

Computer architecture, or digital computer organization, is the conceptual design and fundamental operational structure of a computer system. It focuses largely on how the central processing unit operates internally and accesses addresses in memory.[45] The field often involves disciplines of computer engineering and electrical engineering, selecting and interconnecting hardware components to create computers that meet functional, performance, and cost goals.


| Digital logic | Microarchitecture | Multiprocessing |


| Operating systems | Computer networks | Databases | Information security |


| Ubiquitous computing | Systems architecture | Compiler design | Programming languages |

Computer performance analysis

Computer performance analysis is the study of work flowing through computers with the general goals of improving throughput, controlling response time, using resources efficiently, eliminating bottlenecks, and predicting performance under anticipated peak loads.[46]

Computer graphics and visualization

Computer graphics is the study of digital visual content, and involves the synthesis and manipulation of image data. The study is connected to many other fields in computer science, including computer vision, image processing, and computational geometry, and is heavily applied in the fields of special effects and video games.

Computer security and cryptography

Computer security is a branch of computer technology whose objectives include protecting information from unauthorized access, disruption, or modification while maintaining the accessibility and usability of the system for its intended users. Cryptography is the practice and study of hiding (encryption) and subsequently deciphering (decryption) information. Modern cryptography is closely related to computer science, since many encryption and decryption algorithms are based on computational complexity.

Computational science

Computational science (or scientific computing) is the field of study concerned with constructing mathematical models and quantitative analysis techniques and using computers to analyze and solve scientific problems. In practical use, it is typically the application of computer simulation and other forms of computation to problems in various scientific disciplines.


| Numerical analysis | Computational physics | Computational chemistry | Bioinformatics |

Computer networks

This branch of computer science aims to manage networks between computers worldwide.

Concurrent, parallel and distributed systems

Concurrency is a property of systems in which several computations are executing simultaneously, and potentially interacting with each other. A number of mathematical models have been developed for general concurrent computation, including Petri nets, process calculi and the Parallel Random Access Machine model. A distributed system extends the idea of concurrency to multiple computers connected through a network. Computers within the same distributed system have their own private memory, and they often exchange information to achieve a common goal.

Databases

A database is intended to organize, store, and retrieve large amounts of data easily. Digital databases are managed using database management systems to store, create, maintain, and search data, through database models and query languages.

Health informatics

Health informatics in computer science deals with computational techniques for solving problems in health care.

Information science


| Information retrieval | Knowledge representation | Natural language processing | Human–computer interaction |

Software engineering

Software engineering is the study of designing, implementing, and modifying software in order to ensure it is of high quality, affordable, maintainable, and fast to build. It is a systematic approach to software design, involving the application of engineering practices to software. Software engineering deals with the organizing and analyzing of software; it covers not only the creation or manufacture of new software but also its internal maintenance and arrangement. Both computer applications software engineers and computer systems software engineers are projected to be among the fastest-growing occupations from 2008 to 2018.

The great insights of computer science

The philosopher of computing Bill Rapaport noted three Great Insights of Computer Science:[47]

- Leibniz's, Boole's, Alan Turing's, Shannon's, and Morse's insight: There are only 2 objects that a computer has to deal with in order to represent "anything"

  - All the information about any computable problem can be represented using only 0 and 1 (or any other bistable pair that can flip-flop between two easily distinguishable states, such as "on"/"off", "magnetized"/"de-magnetized", "high-voltage"/"low-voltage", etc.).

See also: digital physics

- Alan Turing's insight: There are only 5 actions that a computer has to perform in order to do "anything"

  - Every algorithm can be expressed in a language for a computer consisting of only 5 basic instructions:

    - move left one location

    - move right one location

    - read symbol at current location

    - print 0 at current location

    - print 1 at current location

See also: Turing machine

- Böhm and Jacopini's insight: There are only 3 ways of combining these actions (into more complex ones) that are needed in order for a computer to do "anything"

  - Only 3 rules are needed to combine any set of basic instructions into more complex ones:

    - sequence: first do this; then do that

    - selection: IF such-and-such is the case, THEN do this, ELSE do that

    - repetition: WHILE such-and-such is the case DO this
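The three insights above can be sketched together in a few lines of Python. This is a toy illustration, not a full Turing machine: the `Tape` class, the `in_bounds` check (needed only because the tape here is finite), and the `text_to_bits` and `invert` helpers are all hypothetical names introduced for this example.

```python
from typing import List


def text_to_bits(text: str) -> List[int]:
    """Insight 1: represent information (here, text) using only 0 and 1."""
    bits = []
    for byte in text.encode("utf-8"):
        for shift in range(7, -1, -1):  # most significant bit first
            bits.append((byte >> shift) & 1)
    return bits


class Tape:
    """A finite tape of bits with a movable head (an assumption of this sketch)."""

    def __init__(self, bits: List[int]) -> None:
        self.cells = list(bits)
        self.head = 0

    # Insight 2: the five basic actions.
    def move_left(self) -> None:
        self.head -= 1

    def move_right(self) -> None:
        self.head += 1

    def read(self) -> int:
        return self.cells[self.head]

    def print_0(self) -> None:
        self.cells[self.head] = 0

    def print_1(self) -> None:
        self.cells[self.head] = 1

    def in_bounds(self) -> bool:
        # Not one of the five actions: a stop condition for the finite tape.
        return 0 <= self.head < len(self.cells)


def invert(tape: Tape) -> None:
    """Insight 3: combine the actions using sequence, selection and repetition."""
    while tape.in_bounds():      # repetition: WHILE ... DO
        if tape.read() == 0:     # selection: IF ... THEN ... ELSE
            tape.print_1()
        else:
            tape.print_0()
        tape.move_right()        # sequence: first write, then move


if __name__ == "__main__":
    tape = Tape(text_to_bits("A"))
    print(tape.cells)
    invert(tape)
    print(tape.cells)
```

The `invert` routine only flips every bit on the tape, but it is built solely from the five tape actions and the three combining rules, which is the point of the insights.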

Academia

Conferences

Conferences are important events for academic research in computer science. During these conferences, researchers from the public and private sectors present their recent work and meet. Proceedings of these conferences are an important part of the computer science literature.

Journals

Education

Some universities teach computer science as a theoretical study of computation and algorithmic reasoning. These programs often feature the theory of computation, analysis of algorithms, formal methods, concurrency theory, databases, computer graphics, and systems analysis, among others. They typically also teach computer programming, but treat it as a vehicle for the support of other fields of computer science rather than a central focus of high-level study. The ACM/IEEE-CS Joint Curriculum Task Force "Computing Curriculum 2005" (and 2008 update)[48] provides guidelines for university curricula.

Other colleges and universities, as well as secondary schools and vocational programs that teach computer science, emphasize the practice of advanced programming rather than the theory of algorithms and computation in their computer science curricula. Such curricula tend to focus on those skills that are important to workers entering the software industry. The process aspects of computer programming are often referred to as software engineering.

While computer science professions increasingly drive the U.S. economy, computer science education is absent in most American K-12 curricula. A report entitled "Running on Empty: The Failure to Teach K-12 Computer Science in the Digital Age" was released in October 2010 by the Association for Computing Machinery (ACM) and the Computer Science Teachers Association (CSTA), and revealed that only 14 states have adopted significant education standards for high school computer science. The report also found that only nine states count high school computer science courses as a core academic subject in their graduation requirements. In tandem with "Running on Empty", a new non-partisan advocacy coalition, Computing in the Core (CinC), was founded to influence federal and state policy, such as the Computer Science Education Act, which calls for grants to states to develop plans for improving computer science education and supporting computer science teachers.

Within the United States, a gender gap in computer science education has been observed as well. Research conducted by the WGBH Educational Foundation and the Association for Computing Machinery (ACM) revealed that more than twice as many high school boys as high school girls considered computer science to be a "very good" or "good" college major.[49] In addition, the high school Advanced Placement (AP) exam for computer science has displayed a gender disparity. Compared to other AP subjects, it has the lowest number of female participants, with a composition of about 15 percent women.[50] This gender gap in computer science is further witnessed at the college level, where 31 percent of undergraduate computer science degrees are earned by women and only 8 percent of computer science faculty are women.[51] According to an article published by the Epistemic Games Group in August 2012, the number of women graduates in the computer science field has declined to 13 percent.[52]

A 2014 Mother Jones article, "We Can Code It", advocates for adding computer literacy and coding to the K-12 curriculum in the United States, and notes that computer science is not incorporated into the requirements for the Common Core State Standards Initiative.[53]