A linear system in three variables determines a collection of planes. The intersection point is the solution.

In mathematics, a system of linear equations (or linear system) is a collection of two or more linear equations involving the same set of variables.[1] For example,

In mathematics, the theory of linear systems is the basis and a fundamental part of linear algebra, a subject which is used in most parts of modern mathematics. Computational algorithms for finding the solutions are an important part of numerical linear algebra, and play a prominent role in engineering, physics, chemistry, computer science, and economics. A system of non-linear equations can often be approximated by a linear system (see linearization), a helpful technique when making a mathematical model or computer simulation of a relatively complex system.

Very often, the coefficients of the equations are real or complex numbers and the solutions are searched in the same set of numbers, but the theory and the algorithms apply for coefficients and solutions in any field. For solutions in an integral domain like the ring of the integers, or in other algebraic structures, other theories have been developed, see Linear equation over a ring. Integer linear programming is a collection of methods for finding the "best" integer solution (when there are many). Gröbner basis theory provides algorithms when coefficients and unknowns are polynomials. Also tropical geometry is an example of linear algebra in a more exotic structure.

Elementary example

The simplest kind of linear system involves two equations and two variables: in terms of

in terms of  :

: . Solving gives

. Solving gives  , and substituting this back into the equation for

, and substituting this back into the equation for  yields

yields  . This method generalizes to systems with additional variables (see "elimination of variables" below, or the article on elementary algebra.)

. This method generalizes to systems with additional variables (see "elimination of variables" below, or the article on elementary algebra.)General form

A general system of m linear equations with n unknowns can be written as are the unknowns,

are the unknowns,  are the coefficients of the system, and

are the coefficients of the system, and  are the constant terms.

are the constant terms.Often the coefficients and unknowns are real or complex numbers, but integers and rational numbers are also seen, as are polynomials and elements of an abstract algebraic structure.

Vector equation

One extremely helpful view is that each unknown is a weight for a column vector in a linear combination.Matrix equation

The vector equation is equivalent to a matrix equation of the formSolution set

The solution set for the equations x − y = −1 and 3x + y = 9 is the single point (2, 3).

A solution of a linear system is an assignment of values to the variables x1, x2, ..., xn such that each of the equations is satisfied. The set of all possible solutions is called the solution set.

A linear system may behave in any one of three possible ways:

- The system has infinitely many solutions.

- The system has a single unique solution.

- The system has no solution.

Geometric interpretation

For a system involving two variables (x and y), each linear equation determines a line on the xy-plane. Because a solution to a linear system must satisfy all of the equations, the solution set is the intersection of these lines, and is hence either a line, a single point, or the empty set.For three variables, each linear equation determines a plane in three-dimensional space, and the solution set is the intersection of these planes. Thus the solution set may be a plane, a line, a single point, or the empty set. For example, as three parallel planes do not have a common point, the solution set of their equations is empty; the solution set of the equations of three planes intersecting at a point is single point; if three planes pass through two points, their equations have at least two common solutions; in fact the solution set is infinite and consists in all the line passing through these points.[2]

For n variables, each linear equation determines a hyperplane in n-dimensional space. The solution set is the intersection of these hyperplanes, and is a flat, which may have any dimension lower than n.

General behavior

The solution set for two equations in three variables is, in general, a line.

In general, the behavior of a linear system is determined by the relationship between the number of equations and the number of unknowns. Here, "in general" means that a different behavior may occur for specific values of the coefficients of the equations.

- In general, a system with fewer equations than unknowns has infinitely many solutions, but it may have no solution. Such a system is known as an underdetermined system.

- In general, a system with the same number of equations and unknowns has a single unique solution.

- In general, a system with more equations than unknowns has no solution. Such a system is also known as an overdetermined system.

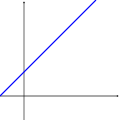

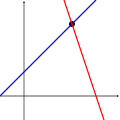

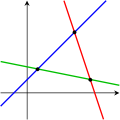

The following pictures illustrate this trichotomy in the case of two variables:

The first system has infinitely many solutions, namely all of the points on the blue line. The second system has a single unique solution, namely the intersection of the two lines. The third system has no solutions, since the three lines share no common point.

It must be kept in mind that the pictures above show only the most common case (the general case). It is possible for a system of two equations and two unknowns to have no solution (if the two lines are parallel), or for a system of three equations and two unknowns to be solvable (if the three lines intersect at a single point).

A system of linear equations behave differently from the general case if the equations are linearly dependent, or if it is inconsistent and has no more equations than unknowns.

Properties

Independence

The equations of a linear system are independent if none of the equations can be derived algebraically from the others. When the equations are independent, each equation contains new information about the variables, and removing any of the equations increases the size of the solution set. For linear equations, logical independence is the same as linear independence.

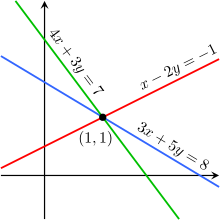

The equations x − 2y = −1, 3x + 5y = 8, and 4x + 3y = 7 are linearly dependent.

For example, the equations

For a more complicated example, the equations

Consistency

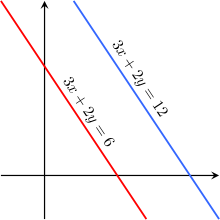

The equations 3x + 2y = 6 and 3x + 2y = 12 are inconsistent.

A linear system is inconsistent if it has no solution, and otherwise it is said to be consistent. When the system is inconsistent, it is possible to derive a contradiction from the equations, that may always be rewritten as the statement 0 = 1.

For example, the equations

It is possible for three linear equations to be inconsistent, even though any two of them are consistent together. For example, the equations

In general, inconsistencies occur if the left-hand sides of the equations in a system are linearly dependent, and the constant terms do not satisfy the dependence relation. A system of equations whose left-hand sides are linearly independent is always consistent.

Putting it another way, according to the Rouché–Capelli theorem, any system of equations (overdetermined or otherwise) is inconsistent if the rank of the augmented matrix is greater than the rank of the coefficient matrix. If, on the other hand, the ranks of these two matrices are equal, the system must have at least one solution. The solution is unique if and only if the rank equals the number of variables. Otherwise the general solution has k free parameters where k is the difference between the number of variables and the rank; hence in such a case there are an infinitude of solutions. The rank of a system of equations (i.e. the rank of the augmented matrix) can never be higher than [the number of variables] + 1, which means that a system with any number of equations can always be reduced to a system that has a number of independent equations that is at most equal to [the number of variables] + 1.

Equivalence

Two linear systems using the same set of variables are equivalent if each of the equations in the second system can be derived algebraically from the equations in the first system, and vice versa. Two systems are equivalent if either both are inconsistent or each equation of each of them is a linear combination of the equations of the other one. It follows that two linear systems are equivalent if and only if they have the same solution set.Solving a linear system

There are several algorithms for solving a system of linear equations.Describing the solution

When the solution set is finite, it is reduced to a single element. In this case, the unique solution is described by a sequence of equations whose left-hand sides are the names of the unknowns and right-hand sides are the corresponding values, for example . When an order on the unknowns has been fixed, for example the alphabetical order the solution may be described as a vector of values, like

. When an order on the unknowns has been fixed, for example the alphabetical order the solution may be described as a vector of values, like  for the previous example.

for the previous example.To describe a set with an infinite number of solutions, typically some of the variables are designated as free (or independent, or as parameters), meaning that they are allowed to take any value, while the remaining variables are dependent on the values of the free variables.

For example, consider the following system:

Each free variable gives the solution space one degree of freedom, the number of which is equal to the dimension of the solution set. For example, the solution set for the above equation is a line, since a point in the solution set can be chosen by specifying the value of the parameter z. An infinite solution of higher order may describe a plane, or higher-dimensional set.

Different choices for the free variables may lead to different descriptions of the same solution set. For example, the solution to the above equations can alternatively be described as follows:

Elimination of variables

The simplest method for solving a system of linear equations is to repeatedly eliminate variables. This method can be described as follows:- In the first equation, solve for one of the variables in terms of the others.

- Substitute this expression into the remaining equations. This yields a system of equations with one fewer equation and one fewer unknown.

- Repeat until the system is reduced to a single linear equation.

- Solve this equation, and then back-substitute until the entire solution is found.

Row reduction

In row reduction (also known as Gaussian elimination), the linear system is represented as an augmented matrix:- Type 1: Swap the positions of two rows.

- Type 2: Multiply a row by a nonzero scalar.

- Type 3: Add to one row a scalar multiple of another.

There are several specific algorithms to row-reduce an augmented matrix, the simplest of which are Gaussian elimination and Gauss-Jordan elimination. The following computation shows Gauss-Jordan elimination applied to the matrix above:

Cramer's rule

Cramer's rule is an explicit formula for the solution of a system of linear equations, with each variable given by a quotient of two determinants. For example, the solution to the systemThough Cramer's rule is important theoretically, it has little practical value for large matrices, since the computation of large determinants is somewhat cumbersome. (Indeed, large determinants are most easily computed using row reduction.) Further, Cramer's rule has very poor numerical properties, making it unsuitable for solving even small systems reliably, unless the operations are performed in rational arithmetic with unbounded precision.[citation needed]

Matrix solution

If the equation system is expressed in the matrix form , the entire solution set can also be expressed in matrix form. If the matrix A is square (has m rows and n=m columns) and has full rank (all m rows are independent), then the system has a unique solution given by

, the entire solution set can also be expressed in matrix form. If the matrix A is square (has m rows and n=m columns) and has full rank (all m rows are independent), then the system has a unique solution given by is the inverse of A. More generally, regardless of whether m=n or not and regardless of the rank of A, all solutions (if any exist) are given using the Moore-Penrose pseudoinverse of A, denoted

is the inverse of A. More generally, regardless of whether m=n or not and regardless of the rank of A, all solutions (if any exist) are given using the Moore-Penrose pseudoinverse of A, denoted  , as follows:

, as follows: is a vector of free parameters that ranges over all possible n×1 vectors. A necessary and sufficient condition for any solution(s) to exist is that the potential solution obtained using

is a vector of free parameters that ranges over all possible n×1 vectors. A necessary and sufficient condition for any solution(s) to exist is that the potential solution obtained using  satisfy

satisfy  — that is, that

— that is, that  If this condition does not hold, the equation system is inconsistent

and has no solution. If the condition holds, the system is consistent

and at least one solution exists. For example, in the above-mentioned

case in which A is square and of full rank,

If this condition does not hold, the equation system is inconsistent

and has no solution. If the condition holds, the system is consistent

and at least one solution exists. For example, in the above-mentioned

case in which A is square and of full rank,  simply equals

simply equals  and the general solution equation simplifies to

and the general solution equation simplifies to  as previously stated, where

as previously stated, where  has completely dropped out of the solution, leaving only a single solution. In other cases, though,

has completely dropped out of the solution, leaving only a single solution. In other cases, though,  remains and hence an infinitude of potential values of the free parameter vector

remains and hence an infinitude of potential values of the free parameter vector  give an infinitude of solutions of the equation.

give an infinitude of solutions of the equation.Other methods

While systems of three or four equations can be readily solved by hand (see Cracovian), computers are often used for larger systems. The standard algorithm for solving a system of linear equations is based on Gaussian elimination with some modifications. Firstly, it is essential to avoid division by small numbers, which may lead to inaccurate results. This can be done by reordering the equations if necessary, a process known as pivoting. Secondly, the algorithm does not exactly do Gaussian elimination, but it computes the LU decomposition of the matrix A. This is mostly an organizational tool, but it is much quicker if one has to solve several systems with the same matrix A but different vectors b.If the matrix A has some special structure, this can be exploited to obtain faster or more accurate algorithms. For instance, systems with a symmetric positive definite matrix can be solved twice as fast with the Cholesky decomposition. Levinson recursion is a fast method for Toeplitz matrices. Special methods exist also for matrices with many zero elements (so-called sparse matrices), which appear often in applications.

A completely different approach is often taken for very large systems, which would otherwise take too much time or memory. The idea is to start with an initial approximation to the solution (which does not have to be accurate at all), and to change this approximation in several steps to bring it closer to the true solution. Once the approximation is sufficiently accurate, this is taken to be the solution to the system. This leads to the class of iterative methods.

There is also a quantum algorithm for linear systems of equations.[3]

Homogeneous systems

A system of linear equations is homogeneous if all of the constant terms are zero:Solution set

Every homogeneous system has at least one solution, known as the zero solution (or trivial solution), which is obtained by assigning the value of zero to each of the variables. If the system has a non-singular matrix (det(A) ≠ 0) then it is also the only solution. If the system has a singular matrix then there is a solution set with an infinite number of solutions. This solution set has the following additional properties:- If u and v are two vectors representing solutions to a homogeneous system, then the vector sum u + v is also a solution to the system.

- If u is a vector representing a solution to a homogeneous system, and r is any scalar, then ru is also a solution to the system.

Relation to nonhomogeneous systems

There is a close relationship between the solutions to a linear system and the solutions to the corresponding homogeneous system:This reasoning only applies if the system Ax = b has at least one solution. This occurs if and only if the vector b lies in the image of the linear transformation A.

![\left[{\begin{array}{rrr|r}1&3&-2&5\\3&5&6&7\\2&4&3&8\end{array}}\right]{\text{.}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/6d99c79eb45b325d779be9693c613d9aec07b6d4)

![{\begin{aligned}\left[{\begin{array}{rrr|r}1&3&-2&5\\3&5&6&7\\2&4&3&8\end{array}}\right]&\sim \left[{\begin{array}{rrr|r}1&3&-2&5\\0&-4&12&-8\\2&4&3&8\end{array}}\right]\sim \left[{\begin{array}{rrr|r}1&3&-2&5\\0&-4&12&-8\\0&-2&7&-2\end{array}}\right]\sim \left[{\begin{array}{rrr|r}1&3&-2&5\\0&1&-3&2\\0&-2&7&-2\end{array}}\right]\\&\sim \left[{\begin{array}{rrr|r}1&3&-2&5\\0&1&-3&2\\0&0&1&2\end{array}}\right]\sim \left[{\begin{array}{rrr|r}1&3&-2&5\\0&1&0&8\\0&0&1&2\end{array}}\right]\sim \left[{\begin{array}{rrr|r}1&3&0&9\\0&1&0&8\\0&0&1&2\end{array}}\right]\sim \left[{\begin{array}{rrr|r}1&0&0&-15\\0&1&0&8\\0&0&1&2\end{array}}\right].\end{aligned}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/5f6367f306a7947555dd25f9b3b29a5903efdabb)