| | |
|---|---|
| Filename extension | .sgml |
| Internet media type | application/sgml, text/sgml |
| Uniform Type Identifier (UTI) | public.xml |
| Developed by | ISO |
| Type of format | Markup Language |
| Extended from | GML |
| Extended to | HTML, XML |
| Standard | ISO 8879 |

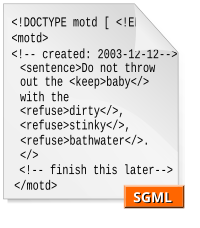

The Standard Generalized Markup Language (SGML; ISO 8879:1986) is a standard for defining generalized markup languages for documents. ISO 8879 Annex A.1 defines generalized markup.

Generalized markup is based on two postulates:

- Markup should be declarative: it should describe a document's structure and other attributes, rather than specify the processing to be performed on it. Declarative markup is less likely to conflict with unforeseen future processing needs and techniques;

- Markup should be rigorous so that the techniques available for processing rigorously-defined objects like programs and databases can be used for processing documents as well.

DocBook SGML and LinuxDoc are examples of SGML-based languages that were used almost exclusively with actual SGML tools.

Standard versions

- Original SGML, which was accepted in October 1986, followed by a minor Technical Corrigendum;

- SGML (ENR), in 1996, resulted from a Technical Corrigendum to add extended naming rules allowing arbitrary-language and -script markup;

- SGML (ENR+WWW or WebSGML), in 1998, resulted from a Technical Corrigendum to better support XML and WWW requirements.

SGML is part of a trio of enabling ISO standards for electronic documents:

- SGML (ISO 8879): Generalized markup language

- DSSSL (ISO/IEC 10179): Document processing and styling language based on Scheme.

  - DSSSL was reworked into W3C XSLT and XSL-FO, which use an XML syntax. Nowadays, DSSSL is rarely used in new projects apart from Linux documentation;

- HyTime: Generalized hypertext and scheduling.

SGML is supported by various technical reports, in particular:

- ISO/IEC TR 9573 – Information processing – SGML support facilities – Techniques for using SGML

  - Part 13: Public entity sets for mathematics and science

    - In 2007, the W3C MathML working group agreed to assume the maintenance of these entity sets.

History

SGML descended from IBM's Generalized Markup Language (GML), which Charles Goldfarb, Edward Mosher, and Raymond Lorie developed in the 1960s. Goldfarb, editor of the international standard, coined the term “GML” from the initials of their surnames. Goldfarb also wrote the definitive work on SGML syntax, "The SGML Handbook". The syntax of SGML is closer to the COCOA format.

As a document markup language, SGML was originally designed to enable the sharing of machine-readable large-project documents in government, law, and industry. Many such documents must remain readable for several decades, a long time in the information technology field. SGML was also applied extensively by the military and by the aerospace, technical reference, and industrial publishing industries. The advent of the XML profile has made SGML suitable for widespread application for small-scale, general-purpose use.

A fragment of the Oxford English Dictionary (1985), showing SGML markup

Document validity

SGML (ENR+WWW) defines two kinds of validity. According to the revised Terms and Definitions of ISO 8879 (from the public draft):

A conforming SGML document must be either a type-valid SGML document, a tag-valid SGML document, or both. Note: A user may wish to enforce additional constraints on a document, such as whether a document instance is integrally-stored or free of entity references.

A type-valid SGML document is defined by the standard as:

An SGML document in which, for each document instance, there is an associated document type declaration (DTD) to whose DTD that instance conforms.

A tag-valid SGML document is defined by the standard as:

An SGML document, all of whose document instances are fully tagged. There need not be a document type declaration associated with any of the instances. Note: If there is a document type declaration, the instance can be parsed with or without reference to it.

Terminology

Tag-validity was introduced in SGML (ENR+WWW) to support XML, which allows documents that have no DOCTYPE declaration but can be parsed without a grammar, as well as documents whose DOCTYPE declaration makes no XML Infoset contributions to the document. The standard calls this fully tagged. Integrally stored reflects the XML requirement that elements end in the same entity in which they started. Reference-free reflects the HTML requirement that entity references are for special characters and do not contain markup. SGML validity commentary, especially commentary that was made before 1997 or that is unaware of SGML (ENR+WWW), covers type-validity only.

The SGML emphasis on validity supports the requirement for generalized markup that markup should be rigorous. (ISO 8879 A.1)
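The notion of a fully tagged instance can be approximated in a few lines of Python. This is only a rough sketch, not the standard's definition: the `TAG` pattern and the `is_fully_tagged` function are hypothetical names, and the check looks only at explicit start and end tags, ignoring comments, declarations, entities, and empty elements.

```python
import re

# Hypothetical pattern: explicit start tags (<name ...>) and end tags (</name>).
TAG = re.compile(r"<(/?)([A-Za-z][A-Za-z0-9.-]*)[^<>]*>")

def is_fully_tagged(instance: str) -> bool:
    """Return True if every start tag has a matching, properly nested end tag."""
    open_elements = []
    for match in TAG.finditer(instance):
        closing = match.group(1)
        name = match.group(2).upper()  # fold case, as the reference syntax does
        if not closing:
            open_elements.append(name)
        elif not open_elements or open_elements.pop() != name:
            return False  # end tag without a matching open element
    return not open_elements  # nothing may be left open


print(is_fully_tagged("<doc><p>Hello</p></doc>"))  # True
print(is_fully_tagged("<doc><p>Hello</doc>"))      # False: </p> was omitted
```

No DTD is consulted here, which mirrors the point that a tag-valid document can be parsed without reference to a grammar.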

Syntax

An SGML document may have three parts:

- the SGML Declaration;

- the Prologue, containing a DOCTYPE declaration with the various markup declarations that together make a Document Type Definition (DTD); and

- the instance itself, containing one top-most element and its contents.

Although full SGML allows implicit markup and some other kinds of tags, the XML specification (s4.3.1) states:

Each XML document has both a logical and a physical structure. Physically, the document is composed of units called entities. An entity may refer to other entities to cause their inclusion in the document. A document begins in a "root" or document entity. Logically, the document is composed of declarations, elements, comments, character references, and processing instructions, all of which are indicated in the document by explicit markup.

For introductory information on a basic, modern SGML syntax, see XML. The following material concentrates on features not in XML and is not a comprehensive summary of SGML syntax.

Optional features

SGML generalizes and supports a wide range of markup languages as found in the mid-1980s. These ranged from terse Wiki-like syntaxes to RTF-like bracketed languages to HTML-like matching-tag languages. SGML did this by a relatively simple default reference concrete syntax augmented with a large number of optional features that could be enabled in the SGML Declaration. Not every SGML parser can necessarily process every SGML document. Because each processor's System Declaration can be compared to the document's SGML Declaration, it is always possible to know whether a document is supported by a particular processor.

Many SGML features relate to markup minimization. Other features relate to concurrent (parallel) markup (CONCUR), to linking processing attributes (LINK), and to embedding SGML documents within SGML documents (SUBDOC).
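The comparison between a document's SGML Declaration and a processor's System Declaration can be pictured as a set difference. The sketch below is a simplification under stated assumptions: real declarations carry far more than a list of feature names, and `unsupported_features`, `document_decl`, and `system_decl` are hypothetical names used only for illustration.

```python
def unsupported_features(document_features, system_features):
    """Return the features the document enables that the processor lacks."""
    return set(document_features) - set(system_features)


# Hypothetical feature sets for one document and one processor.
document_decl = {"OMITTAG", "SHORTTAG", "SUBDOC"}
system_decl = {"OMITTAG", "SHORTTAG"}

missing = unsupported_features(document_decl, system_decl)
print(sorted(missing))  # ['SUBDOC']
```

An empty result would mean the processor supports every optional feature the document relies on.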

The notion of customizable features was not appropriate for Web use, so one goal of XML was to minimize optional features. However, XML's well-formedness rules cannot support Wiki-like languages, leaving them unstandardized and difficult to integrate with non-text information systems.

Concrete and abstract syntaxes

The usual (default) SGML concrete syntax resembles this example, which is the default HTML concrete syntax:

    <QUOTE TYPE="example">
      typically something like <ITALICS>this</ITALICS>
    </QUOTE>

SGML provides an abstract syntax that can be implemented in many different types of concrete syntax. Although the markup norm is using angle brackets as start- and end- tag delimiters in an SGML document (per the standard-defined reference concrete syntax), it is possible to use other characters—provided a suitable concrete syntax is defined in the document's SGML declaration. For example, an SGML interpreter might be programmed to parse GML, wherein the tags are delimited with a left colon and a right full stop, thus, an :e prefix denotes an end tag:

`:xmp.Hello, world:exmp.`

According to the reference syntax, letter case (upper or lower) is not distinguished in tag names, so tags that differ only in letter case, such as `<quote>` and `<QUOTE>`, are equivalent.
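The idea that one abstract syntax can be written with different delimiters can be sketched in Python. This is a toy tokenizer, not an SGML parser: the `ConcreteSyntax` class, its field names, and the `tokenize` function are assumptions made for illustration, and only bare start and end tags without attributes are handled.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class ConcreteSyntax:
    stago: str  # start-tag open delimiter
    etago: str  # end-tag open delimiter
    tagc: str   # tag close delimiter


REFERENCE = ConcreteSyntax(stago="<", etago="</", tagc=">")
GML_LIKE = ConcreteSyntax(stago=":", etago=":e", tagc=".")


def tokenize(text, syntax):
    """Return ("start" | "end" | "data", value) events for a toy instance."""
    esc = re.escape
    name = "[A-Za-z][A-Za-z0-9]*"
    # The end-tag alternative comes first because, in both syntaxes, the
    # end-tag delimiter begins with the start-tag delimiter. In the GML-like
    # syntax this toy would misread a start tag whose name begins with "e".
    pattern = re.compile(
        f"{esc(syntax.etago)}(?P<end>{name}){esc(syntax.tagc)}"
        f"|{esc(syntax.stago)}(?P<start>{name}){esc(syntax.tagc)}"
    )
    events, pos = [], 0
    for m in pattern.finditer(text):
        if m.start() > pos:
            events.append(("data", text[pos:m.start()]))
        kind = "end" if m.group("end") else "start"
        events.append((kind, m.group(kind).upper()))  # names are case-folded
        pos = m.end()
    if pos < len(text):
        events.append(("data", text[pos:]))
    return events


print(tokenize(":xmp.Hello, world:exmp.", GML_LIKE))
print(tokenize("<xmp>Hello, world</XMP>", REFERENCE))
```

Both calls yield the same three events (start, data, end), which is the sense in which the two notations share one abstract syntax.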

Markup minimization

SGML has features for reducing the number of characters required to mark up a document, which must be enabled in the SGML Declaration. SGML processors need not support every available feature, thus allowing applications to tolerate many types of inadvertent markup omissions; however, SGML systems usually are intolerant of invalid structures. XML is intolerant of syntax omissions, and does not require a DTD for checking well-formedness.

OMITTAG

Both start tags and end tags may be omitted from a document instance, provided:

- The OMITTAG feature is enabled in the SGML Declaration;

- The DTD indicates that the tags are permitted to be omitted;

- (for start tags) the element has no associated required (#REQUIRED) attributes; and

- The tag can be unambiguously inferred by context.

For example, if the OMITTAG feature is enabled and the DTD includes the following declarations:

    <!ELEMENT chapter - - (title, section+)>
    <!ELEMENT title o o (#PCDATA)>
    <!ELEMENT section - - (title, subsection+)>

then this excerpt:

    <chapter>Introduction to SGML
    <section>The SGML Declaration
    <subsection>
    ...

which omits two `<title>` tags and two `</title>` tags, would represent valid markup.

Note also that omitting tags is optional; the same excerpt could be tagged like this:

    <chapter><title>Introduction to SGML</title>
    <section><title>The SGML Declaration</title>
    <subsection>
    ...

and would still represent valid markup.

Note: The OMITTAG feature is unrelated to the tagging of elements whose declared content is EMPTY as defined in the DTD:

    <!ELEMENT image - o EMPTY>

Elements defined like this have no end tag, and specifying one in the document instance would result in invalid markup. This is syntactically different from XML empty elements.
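The inference a parser performs when tags are omitted can be approximated in Python. This sketch is not a general OMITTAG implementation: it hard-codes two facts taken from the chapter, title, and section declarations discussed above (character data directly inside a chapter or section implies a title start tag, and an open title is closed by any other tag). The names `imply_title_tags`, `CHILD_IMPLIED_BY_DATA`, and `END_TAG_OMISSIBLE` are hypothetical, and tags with attributes are not handled.

```python
import re

# Character data directly inside these elements implies this child's start tag.
CHILD_IMPLIED_BY_DATA = {"chapter": "title", "section": "title"}
# Elements that hold only character data and whose end tag may be omitted.
END_TAG_OMISSIBLE = {"title"}


def imply_title_tags(instance: str) -> str:
    """Insert the omitted <title> and </title> tags into a toy instance."""
    out, open_elements = [], []
    for token in re.findall(r"<[^<>]+>|[^<>]+", instance):
        is_tag = token.startswith("<")
        name = token.strip("</>").lower() if is_tag else None
        own_end_tag = is_tag and token.startswith("</") and open_elements[-1:] == [name]
        # Any tag other than its own end tag closes an open title first.
        if is_tag and not own_end_tag and open_elements[-1:] and open_elements[-1] in END_TAG_OMISSIBLE:
            out.append(f"</{open_elements.pop()}>")
        if not is_tag:
            parent = open_elements[-1] if open_elements else None
            implied = CHILD_IMPLIED_BY_DATA.get(parent)
            if implied and token.strip():
                out.append(f"<{implied}>")  # imply the omitted start tag
                open_elements.append(implied)
        elif token.startswith("</"):
            if own_end_tag:
                open_elements.pop()
        else:
            open_elements.append(name)
        out.append(token)
    return "".join(out)


minimized = "<chapter>Introduction to SGML<section>The SGML Declaration<subsection>"
print(imply_title_tags(minimized))
```

Running the function on input that already carries explicit title tags leaves it unchanged, which reflects that tag omission is optional.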

SHORTREF

Tags can be replaced with delimiter strings, for a terser markup, via the SHORTREF feature. This markup style is now associated with wiki markup, e.g. wherein two equals-signs (==), at the start of a line, are the “heading start-tag”, and two equals signs (==) after that are the “heading end-tag”.

SHORTTAG

SGML markup languages whose concrete syntax enables the SHORTTAG VALUE feature do not require attribute values containing only alphanumeric characters to be enclosed within quotation marks, either double " " (LIT) or single ' ' (LITA), so that the previous markup example could be written:

    <QUOTE TYPE=example>
      typically something like <ITALICS>this</ITALICS>
    </QUOTE>

One feature of SGML markup languages is "presumptuous empty tagging", such that the empty end tag `</>` in `<ITALICS>this</>` closes the most recently opened element; the expression is thus equivalent to `<ITALICS>this</ITALICS>`.

NET

Another feature is the NET (Null End Tag) construction: `<ITALICS/this/`, which is structurally equivalent to `<ITALICS>this</ITALICS>`.

Other features

Additionally, the SHORTTAG NETENABL IMMEDNET feature allows shortening tags surrounding an empty text value, but forbids shortening full tags:

    <QUOTE></QUOTE>

can be written as

    <QUOTE//

wherein the first slash ( / ) stands for the NET-enabling “start-tag close” (NESTC), and the second slash stands for the NET. NOTE: XML defines NESTC with a /, and NET with a > (angled bracket); hence the corresponding construct in XML appears as `<QUOTE/>`.
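The NET construction can be illustrated with a small rewriting function in Python. This is a sketch only: `NET_FORM` and `expand_net` are hypothetical names, the element names ITALICS and QUOTE are illustrative, and the pattern assumes element content that contains no slashes or nested markup.

```python
import re

# <name/content/ : the first slash is NESTC, the second is NET.
NET_FORM = re.compile(r"<([A-Za-z][A-Za-z0-9.-]*)/([^/<>]*)/")


def expand_net(markup: str) -> str:
    """Rewrite <name/content/ as <name>content</name>."""
    return NET_FORM.sub(r"<\1>\2</\1>", markup)


print(expand_net("<ITALICS/this/"))  # <ITALICS>this</ITALICS>
print(expand_net("<QUOTE//"))        # <QUOTE></QUOTE>
```

An XML-style empty tag such as `<br/>` is left untouched by this pattern, because there the character after the slash is the closing angle bracket rather than a second slash.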

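The third feature is 'text on the same line', allowing a markup item to be ended with a line-end; especially useful for headings and such, requiring using either SHORTREF or DATATAG minimization. For example, if the DTD includes the following declarations:

    <!ELEMENT lines (line*)>
    <!ELEMENT line O - (#PCDATA)>
    <!ENTITY line-tagc "</line>">
    <!SHORTREF one-line "&#RE;&#RS;" line-tagc>
    <!USEMAP one-line line>

(and "&#RE;&#RS;" is a short-reference delimiter in the concrete syntax), then:

    <lines>
    first line
    second line
    </lines>

is equivalent to:

    <lines>
    <line>first line</line>
    <line>second line</line>
    </lines>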
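The effect of the line-end short reference described above can be approximated in Python. This is a crude simulation rather than a short-reference implementation: `expand_lines` is a hypothetical name, the element names come from the `lines` and `line` declarations, and any input line beginning with `<` is assumed to be explicit markup.

```python
def expand_lines(instance: str) -> str:
    """Wrap each text line in <line>...</line>, leaving explicit tags alone."""
    out = []
    for raw in instance.splitlines():
        if raw.startswith("<"):
            out.append(raw)  # explicit markup such as <lines> or </lines>
        elif raw.strip():
            # Start tag implied by tag omission, end tag supplied at line end.
            out.append(f"<line>{raw}</line>")
    return "\n".join(out)


print(expand_lines("<lines>\nfirst line\nsecond line\n</lines>"))
```

The output places each text line inside its own `line` element, which is the structure a parser would recover from the minimized form.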

Formal characterization

SGML has many features that defied convenient description with the popular formal automata theory and the contemporary parser technology of the 1980s and the 1990s. The standard warns in Annex H:

The SGML model group notation was deliberately designed to resemble the regular expression notation of automata theory, because automata theory provides a theoretical foundation for some aspects of the notion of conformance to a content model. No assumption should be made about the general applicability of automata to content models.

A report on an early implementation of a parser for basic SGML, the Amsterdam SGML Parser, notes that "the DTD-grammar in SGML must conform to a notion of unambiguity which closely resembles the LL(1) conditions" and specifies various differences.

There appears to be no definitive classification of full SGML against a known class of formal grammar. Plausible classes may include tree-adjoining grammars and adaptive grammars.

XML is described as being generally parsable like a two-level grammar for non-validated XML and a Conway-style pipeline of coroutines (lexer, parser, validator) for valid XML. The SGML productions in the ISO standard are reported to be LL(3) or LL(4). XML-class subsets are reported to be expressible using a W-grammar. According to one paper, and probably considered at an information set or parse tree level rather than a character or delimiter level:

The class of documents that conform to a given SGML document grammar forms an LL(1) language. … The SGML document grammars by themselves are, however, not LL(1) grammars.

The SGML standard does not define SGML with formal data structures, such as parse trees; however, an SGML document is constructed of a rooted directed acyclic graph (RDAG) of physical storage units known as “entities”, which is parsed into an RDAG of structural units known as “elements”. The physical graph is loosely characterized as an entity tree, but entities might appear multiple times. Moreover, the structure graph is also loosely characterized as an element tree, but the ID/IDREF markup allows arbitrary arcs.

The results of parsing can also be understood as a data tree in different notations; where the document is the root node, and entities in other notations (text, graphics) are child nodes. SGML provides apparatus for linking to and annotating external non-SGML entities.

The SGML standard describes parsing in terms of maps and recognition modes (s9.6.1). Each entity, and each element, can have an associated notation or declared content type, which determines the kinds of references and tags which will be recognized in that entity and element. Also, each element can have an associated delimiter map (and short reference map), which determines which characters are treated as delimiters in context. The SGML standard characterizes parsing as a state machine switching between recognition modes. During parsing, there is a stack of maps that configure the scanner, while the tokenizer relates to the recognition modes.

Parsing involves traversing the dynamically-retrieved entity graph, finding/implying tags and the element structure, and validating those tags against the grammar. An unusual aspect of SGML is that the grammar (DTD) is used both passively — to recognize lexical structures, and actively — to generate missing structures and tags that the DTD has declared optional. End- and start- tags can be omitted, because they can be inferred. Loosely, a series of tags can be omitted only if there is a single, possible path in the grammar to imply them. It was this active use of grammars that made concrete SGML parsing difficult to formally characterize.

SGML uses the term validation for both recognition and generation. XML does not use the grammar (DTD) to change delimiter maps or to inform the parse modes, and does not allow tag omission; consequently, XML validation of elements is not active in the sense that SGML validation is active. SGML without a DTD (e.g. simple XML) is a grammar or a language; SGML with a DTD is a metalanguage. SGML with an SGML declaration is, perhaps, a meta-metalanguage, since it is a metalanguage whose declaration mechanism is a metalanguage.

SGML has an abstract syntax implemented by many possible concrete syntaxes; however, this is not the same usage as in an abstract syntax tree or a concrete syntax tree. In the SGML usage, a concrete syntax is a set of specific delimiters, while the abstract syntax is the set of names for the delimiters. The XML Infoset corresponds more to the programming language notion of abstract syntax introduced by John McCarthy.

Derivatives

XML

The W3C XML (Extensible Markup Language) is a profile (subset) of SGML designed to ease the implementation of the parser compared to a full SGML parser, primarily for use on the World Wide Web. In addition to disabling many SGML options present in the reference syntax (such as omitting tags and nested subdocuments), XML adds a number of additional restrictions on the kinds of SGML syntax. For example, despite enabling SGML shortened tag forms, XML does not allow unclosed start or end tags. It also relied on many of the additions made by the WebSGML Annex. XML currently is more widely used than full SGML. XML has lightweight internationalization based on Unicode. Applications of XML include XHTML, XQuery, XSLT, XForms, XPointer, JSP, SVG, RSS, Atom, XML-RPC, RDF/XML, and SOAP.

HTML

While HTML was developed partially independently and in parallel with SGML, its creator, Tim Berners-Lee, intended it to be an application of SGML. The design of HTML (Hyper Text Markup Language) was therefore inspired by SGML tagging, but, since no clear expansion and parsing guidelines were established, most actual HTML documents are not valid SGML documents. Later, HTML was reformulated (version 2.0) to be more of an SGML application; however, the HTML markup language has many legacy- and exception-handling features that differ from SGML's requirements. HTML 4 is an SGML application that fully conforms to ISO 8879 – SGML.

The charter for the 2006 revival of the World Wide Web Consortium HTML Working Group says, "the Group will not assume that an SGML parser is used for 'classic HTML'". Although HTML syntax closely resembles SGML syntax with the default reference concrete syntax, HTML5 abandons any attempt to define HTML as an SGML application, explicitly defining its own parsing rules, which more closely match existing implementations and documents. It does, however, define an alternative XHTML serialization, which conforms to XML and therefore to SGML as well.

OED

The second edition of the Oxford English Dictionary (OED) is entirely marked up with an SGML-based markup language. The third edition is marked up as XML.

Others

Other document markup languages are partly related to SGML and XML, but, because they cannot be parsed, validated, or otherwise processed using standard SGML and XML tools, they are not considered either SGML or XML languages; the Z Format markup language for typesetting and documentation is an example.

Several modern programming languages support tags as primitive token types, or now support Unicode and regular expression pattern-matching. An example is the Scala programming language.

Applications

Document markup languages defined using SGML are called "applications" by the standard; many pre-XML SGML applications were the proprietary property of the organizations that developed them, and thus unavailable on the World Wide Web. The following list is of pre-XML SGML applications.

- TEI (Text Encoding Initiative) is an academic consortium that designs, maintains, and develops technical standards for digital-format textual representation applications.

- DocBook is a markup language originally created as an SGML application, designed for authoring technical documentation; DocBook currently is an XML application.

- CALS (Continuous Acquisition and Life-cycle Support) is a US Department of Defense (DoD) initiative for electronically capturing military documents and for linking related data and information.

- HyTime defines a set of hypertext-oriented element types that allow SGML document authors to build hypertext and multimedia presentations.

- EDGAR (Electronic Data-Gathering, Analysis, and Retrieval) system effects automated collection, validation, indexing, acceptance, and forwarding of submissions, by companies and others, who are legally required to file data and information forms with the US Securities and Exchange Commission (SEC).

- LinuxDoc. Documentation for Linux packages has used the LinuxDoc SGML DTD and DocBook XML DTD.

- AAP DTD is a document type definition for scientific documents, defined by the Association of American Publishers.

- SGMLguid was an early SGML document type definition created, developed and used at CERN.

Open-source implementations

Significant open-source implementations of SGML have included:

- ASP-SGML

- ARC-SGML, by Standard Generalized Markup Language Users' Group, 1991, C language

- SGMLS, by James Clark, 1993, C language

- Project YAO, by Yuan-ze Institute of Technology, Taiwan, with Charles Goldfarb, 1994, object

- SP by James Clark, C++ language