Information management (IM) concerns a cycle of organizational activity: the acquisition of information from one or more sources, the custodianship and the distribution of that information to those who need it, and its ultimate disposition through archiving or deletion.

This cycle of organisational involvement with information involves a variety of stakeholders, including those who are responsible for assuring the quality, accessibility and utility of acquired information; those who are responsible for its safe storage and disposal; and those who need it for decision making. Stakeholders might have rights to originate, change, distribute or delete information according to organisational information management policies.

Information management embraces all the generic concepts of management, including the planning, organizing, structuring, processing, controlling, evaluation and reporting of information activities, all of which are needed in order to meet the needs of those with organisational roles or functions that depend on information. These generic concepts allow the information to be presented to the correct audience or group of people. Once individuals are able to put that information to use, it gains more value.

Information management is closely related to, and overlaps with, the management of data, systems, technology, processes and – where the availability of information is critical to organisational success – strategy. This broad view of the realm of information management contrasts with the earlier, more traditional view, that the life cycle of managing information is an operational matter that requires specific procedures, organisational capabilities and standards that deal with information as a product or a service.

History

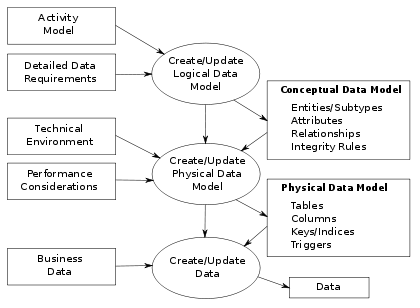

Emergent ideas out of data management

In the 1970s, the management of information largely concerned matters closer to what would now be called data management: punched cards, magnetic tapes and other record-keeping media, involving a life cycle of such formats requiring origination, distribution, backup, maintenance and disposal. At this time the huge potential of information technology began to be recognised: a single chip storing a whole book, for example, or electronic mail moving messages instantly around the world, both remarkable ideas at the time. With the proliferation of information technology and the extending reach of information systems in the 1980s and 1990s, information management took on a new form. Progressive businesses such as British Petroleum transformed the vocabulary of what was then “IT management”, so that “systems analysts” became “business analysts”, “monopoly supply” became a mixture of “insourcing” and “outsourcing”, and the large IT function was transformed into “lean teams” that began to allow some agility in the processes that harness information for business benefit. The scope of senior management interest in information at British Petroleum extended from the creation of value through improved business processes, through the effective management of information on which those improvements were based, to the implementation of appropriate information systems (or “applications”) that were operated on outsourced IT infrastructure.

In this way, information management was no longer a simple job that could be performed by anyone who had nothing else to do; it became highly strategic and a matter for senior management attention. An understanding of the technologies involved, an ability to manage information systems projects and business change well, and a willingness to align technology and business strategies all became necessary.

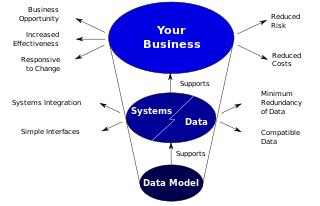

Positioning information management in the bigger picture

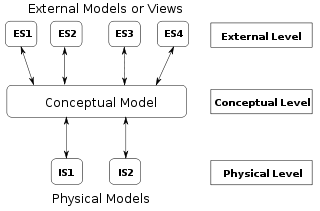

In the transitional period leading up to the strategic view of information management, Venkatraman (a strong advocate of this transition and transformation) proffered a simple arrangement of ideas that succinctly brought together the management of data, information and knowledge (see the figure). He argued that:

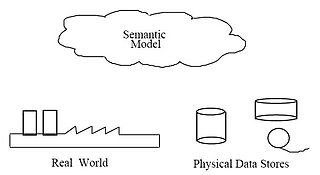

- Data that is maintained in IT infrastructure has to be interpreted in order to render information.

- The information in our information systems has to be understood in order to emerge as knowledge.

- Knowledge allows managers to take effective decisions.

- Effective decisions have to lead to appropriate actions.

- Appropriate actions are expected to deliver meaningful results.

This simple model summarises a presentation by Venkatraman in 1996, as reported by Ward and Peppard (2002, page 207).

This is often referred to as the DIKAR model: Data, Information, Knowledge, Action and Result. It gives a strong clue as to the layers involved in aligning technology and organisational strategies, and it can be seen as a pivotal moment in changing attitudes to information management. The recognition that information management is an investment that must deliver meaningful results is important to all modern organisations that depend on information and good decision-making for their success.

Theoretical background

Behavioural and organisational theories

It is commonly believed that good information management is crucial to the smooth working of organisations, and although there is no commonly accepted theory of information management per se, behavioural and organisational theories help. Following the behavioural science theory of management, mainly developed at Carnegie Mellon University and prominently supported by March and Simon, most of what goes on in modern organizations is actually information handling and decision making. One crucial factor in information handling and decision making is an individual's ability to process information and to make decisions under limitations that might derive from the context: a person's age, the situational complexity, or a lack of requisite quality in the information that is at hand – all of which are exacerbated by the rapid advance of technology and the new kinds of system that it enables, especially as the social web emerges as a phenomenon that business cannot ignore.

And yet, well before there was any general recognition of the importance of information management in organisations, March and Simon argued that organizations have to be considered as cooperative systems, with a high level of information processing and a vast need for decision making at various levels. Instead of using the model of the "economic man", as advocated in classical theory, they proposed "administrative man" as an alternative, based on their argumentation about the cognitive limits of rationality. Additionally, they proposed the notion of satisficing, which entails searching through the available alternatives until an acceptability threshold is met – another idea that still has currency.
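The contrast between satisficing and exhaustive optimisation can be illustrated with a minimal sketch. The function names, the supplier data and the scoring rule below are hypothetical and chosen purely for illustration; they are not drawn from March and Simon's work.

```python
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")


def satisfice(
    alternatives: Iterable[T],
    score: Callable[[T], float],
    threshold: float,
) -> Optional[T]:
    """Return the first alternative whose score meets the threshold.

    The search stops as soon as an acceptable option is found, so later
    (possibly better) alternatives are never evaluated.
    """
    for option in alternatives:
        if score(option) >= threshold:
            return option
    return None  # nothing acceptable was found


# Hypothetical data: (supplier name, reliability score), in the order
# in which a decision maker happens to encounter them.
suppliers = [("A", 0.62), ("B", 0.81), ("C", 0.95)]


def reliability(supplier: tuple) -> float:
    return supplier[1]


# "Administrative man": accept the first supplier that is good enough.
chosen = satisfice(suppliers, reliability, threshold=0.8)

# "Economic man": evaluate every alternative and take the maximum.
best = max(suppliers, key=reliability)
```

Under these assumptions the satisficing search settles on supplier B after two evaluations, whereas the exhaustive search examines all three and selects supplier C. The saving in evaluation effort is the point of the sketch: it stands in for the cost of collecting and assessing information under limited cognitive capacity.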

Economic theory

In addition to the organisational factors mentioned by March and Simon, there are other issues that stem from economic and environmental dynamics. There is the cost of collecting and evaluating the information needed to take a decision, including the time and effort required. The transaction cost associated with information processes can be high. In particular, established organizational rules and procedures can prevent the taking of the most appropriate decision, leading to sub-optimal outcomes. This is an issue that has been presented as a major problem with bureaucratic organizations that lose the economies of strategic change because of entrenched attitudes.

Strategic information management

Background

According to the Carnegie Mellon School, an organization's ability to process information is at the core of organizational and managerial competency, and an organization's strategies must be designed to improve information processing capability. As the information systems that provide that capability became formalised and automated, competencies were severely tested at many levels. It was recognised that organisations needed to be able to learn and adapt in ways that were never so evident before, and academics began to organise and publish definitive works concerning the strategic management of information and information systems. Concurrently, the ideas of business process management and knowledge management emerged, although much of the optimistic early thinking about business process redesign has since been discredited in the information management literature.

In the strategic studies field, understanding the information environment is considered to be of the highest priority. This environment is conceived as the aggregate of individuals, organizations, and systems that collect, process, disseminate, or act on information. It consists of three interrelated dimensions which continuously interact with individuals, organizations, and systems: the physical, the informational, and the cognitive.

Aligning technology and business strategy with information management

Venkatraman has provided a simple view of the requisite capabilities of an organisation that wants to manage information well – the DIKAR model (see above). He also worked with others to understand how technology and business strategies could be appropriately aligned in order to identify specific capabilities that are needed. This work was paralleled by other writers in the world of consulting, practice and academia.

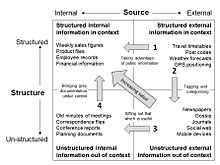

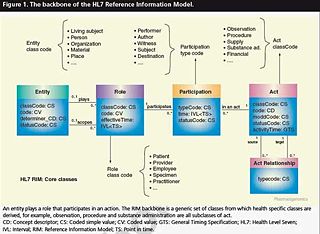

A contemporary portfolio model for information

Bytheway has collected and organised basic tools and techniques for information management in a single volume. At the heart of his view of information management is a portfolio model that takes account of the surging interest in external sources of information and the need to organise unstructured external information so as to make it useful (see the figure).

This portfolio model organizes issues of internal and external sourcing and management of information, which may be either structured or unstructured.

Such an information portfolio as this shows how information can be gathered and usefully organised, in four stages:

Stage 1: Taking advantage of public information: recognise and adopt well-structured external schemes of reference data, such as post codes, weather data, GPS positioning data and travel timetables, exemplified in the personal computing press.

Stage 2: Tagging the noise on the world wide web: use existing schemes such as post codes and GPS data or, more typically, add “tags”; alternatively, construct a formal ontology that provides structure. Shirky provides an overview of these two approaches.

Stage 3: Sifting and analysing: in the wider world the generalised ontologies that are under development extend to hundreds of entities and hundreds of relations between them and provide the means to elicit meaning from large volumes of data. Structured data in databases works best when that structure reflects a higher-level information model – an ontology, or an entity-relationship model.

Stage 4: Structuring and archiving: with the large volume of data available from sources such as the social web and from the miniature telemetry systems used in personal health management, new ways to archive and then trawl data for meaningful information are needed. Map-reduce methods, originating from functional programming, are a more recent way of eliciting information from large archival datasets. The approach is becoming interesting to regular businesses that have very large data resources to work with, but it requires advanced multi-processor resources.
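The functional-programming roots of map-reduce can be seen in a small single-process sketch. The archive contents and the function names `map_phase` and `reduce_phase` are hypothetical, and the sketch deliberately omits the distribution of work across many processors that production map-reduce systems provide.

```python
from collections import Counter
from functools import reduce


def map_phase(record: str) -> Counter:
    """Map step: turn one archived record into partial word counts."""
    return Counter(word.lower() for word in record.split())


def reduce_phase(left: Counter, right: Counter) -> Counter:
    """Reduce step: merge two sets of partial counts into one."""
    return left + right


# Hypothetical archive of free-text customer feedback records.
archive = [
    "late delivery complaint",
    "delivery praised",
    "complaint about late invoice",
]

# Each record is mapped independently, then the partial results are
# folded together. Because the map step has no shared state, a real
# system could run it on many processors at once.
totals = reduce(reduce_phase, map(map_phase, archive), Counter())
```

In this sketch `totals` records that "late", "delivery" and "complaint" each occur twice across the archive. The design point is that the map step treats every record in isolation, which is what allows large archival datasets to be partitioned across machines before the partial results are combined.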

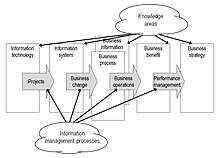

Competencies to manage information well

The Information Management Body of Knowledge (IMBOK) was made available on the world wide web in 2004 and sets out to show that the required management competencies to derive real benefits from an investment in information are complex and multi-layered. The framework model that is the basis for understanding competencies comprises six “knowledge” areas and four “process” areas:

This framework is the basis of organising the "Information Management Body of Knowledge" first made available in 2004. This version is adapted by the addition of "Business information" in 2014.

- The information management knowledge areas

The IMBOK is based on the argument that there are six areas of required management competency, two of which (“business process management” and “business information management”) are very closely related.

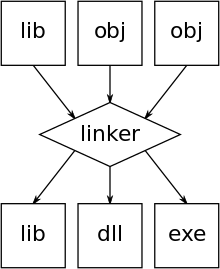

- Information technology: The pace of change of technology and the pressure to constantly acquire the newest technological products can undermine the stability of the infrastructure that supports systems, and thereby optimises business processes and delivers benefits. It is necessary to manage the “supply side” and recognise that technology is, increasingly, becoming a commodity.

- Information system: While historically information systems were developed in-house, over the years it has become possible to acquire most of the software systems that an organisation needs from the software package industry. However, there is still the potential for competitive advantage from the implementation of new systems ideas that deliver to the strategic intentions of organisations.

- Business processes and Business information: Information systems are applied to business processes in order to improve them, and they bring data to the business that becomes useful as business information. Business process management is still seen as a relatively new idea because it is not universally adopted, and it has been difficult in many cases; business information management is even more of a challenge.

- Business benefit: What are the benefits that we are seeking? It is necessary not only to be brutally honest about what can be achieved, but also to ensure the active management and assessment of benefit delivery. Since the emergence and popularisation of the Balanced scorecard there has been huge interest in business performance management, but not much serious effort was made to relate business performance management to the benefits of information technology investments and the introduction of new information systems until the turn of the millennium.

- Business strategy: Although a long way from the workaday issues of managing information in organisations, strategy in most organisations simply has to be informed by information technology and information systems opportunities, whether to address poor performance or to improve differentiation and competitiveness. Strategic analysis tools such as the value chain and critical success factor analysis are directly dependent on proper attention to the information that is (or could be) managed.

- The information management processes

Even with full capability and competency within the six knowledge areas, it is argued that things can still go wrong. The problem lies in the migration of ideas and information management value from one area of competency to another. Summarising what Bytheway explains in some detail (and supported by selected secondary references):

- Projects: Information technology is without value until it is engineered into information systems that meet the needs of the business by means of good project management.

- Business change: The best information systems succeed in delivering benefits through the achievement of change within the business systems, but people do not appreciate change that makes new demands upon their skills in the ways that new information systems often do. Contrary to common expectations, there is some evidence that the public sector has succeeded with information technology induced business change.

- Business operations: With new systems in place, with business processes and business information improved, and with staff finally ready and able to work with new processes, then the business can get to work, even when new systems extend far beyond the boundaries of a single business.

- Performance management: Investments are no longer solely about financial results: financial success must be balanced with internal efficiency, customer satisfaction, and organisational learning and development.

Summary

There are always many ways to see a business, and the information management viewpoint is only one way. It is important to remember that other areas of business activity will also contribute to strategy – it is not only good information management that moves a business forwards. Corporate governance, human resource management, product development and marketing will all have an important role to play in strategic ways, and we must not see one domain of activity alone as the sole source of strategic success. On the other hand, corporate governance, human resource management, product development and marketing are all dependent on effective information management, and so in the final analysis our competency to manage information well, on the broad basis that is offered here, can be said to be predominant.

Operationalising information management

Managing requisite change

Organizations are often confronted with many information management challenges and issues at the operational level, especially when organisational change is engendered. The novelty of new systems architectures and a lack of experience with new styles of information management require a level of organisational change management that is notoriously difficult to deliver. As a result of a general organisational reluctance to change in order to enable new forms of information management, there might be (for example): a shortfall in the requisite resources, a failure to acknowledge new classes of information and the new procedures that use them, a lack of support from senior management leading to a loss of strategic vision, and even political manoeuvring that undermines the operation of the whole organisation. However, the implementation of new forms of information management should normally lead to operational benefits.

The early work of Galbraith

In early work, taking an information processing view of organisation design, Jay Galbraith has identified five tactical areas to increase information processing capacity and reduce the need for information processing.

- Developing, implementing, and monitoring all aspects of the “environment” of an organization.

- Creation of slack resources so as to decrease the load on the overall hierarchy of resources and to reduce information processing relating to overload.

- Creation of self-contained tasks with defined boundaries and that can achieve proper closure, and with all the resources at hand required to perform the task.

- Recognition of lateral relations that cut across functional units, so as to move decision power to the process instead of fragmenting it within the hierarchy.

- Investment in vertical information systems that route information flows for a specific task (or set of tasks) in accordance to the applied business logic.

The matrix organisation

The lateral relations concept leads to an organizational form that is different from the simple hierarchy: the “matrix organization”. This brings together the vertical (hierarchical) view of an organisation and the horizontal (product or project) view of the work that it does, which is visible to the outside world. The creation of a matrix organization is one management response to a persistent fluidity of external demand, avoiding multifarious and spurious responses to episodic demands that tend to be dealt with individually.