The Old Fire burning in the San Bernardino Mountains (image taken from the International Space Station)

Fire ecology is a scientific discipline concerned with the natural processes involving fire in an ecosystem and their ecological effects, the interactions between fire and the abiotic and biotic components of an ecosystem, and the role of fire as an ecosystem process. Many ecosystems, particularly prairie, savanna, chaparral and coniferous forests, have evolved with fire as an essential contributor to habitat vitality and renewal. Many plant species in fire-affected environments require fire to germinate, establish, or reproduce. Wildfire suppression can therefore eliminate not only these species but also the animals that depend on them.

Campaigns in the United States have historically molded public opinion to believe that wildfires are always harmful to nature. This view is based on the outdated belief that ecosystems progress toward an equilibrium and that any disturbance, such as fire, disrupts the harmony of nature. More recent ecological research has shown, however, that fire is an integral component in the function and biodiversity of many natural habitats, and that the organisms within these communities have adapted to withstand, and even to exploit, natural wildfire. More generally, fire is now regarded as a 'natural disturbance', similar to flooding, wind-storms, and landslides, that has driven the evolution of species and controls the characteristics of ecosystems.

Fire suppression, in combination with other human-caused environmental changes, may have resulted in unforeseen consequences for natural ecosystems. Some large wildfires in the United States have been blamed on years of fire suppression and the continuing expansion of people into fire-adapted ecosystems, but climate change is more likely responsible. Land managers are faced with tough questions regarding how to restore a natural fire regime, but allowing wildfires to burn is the least expensive and likely the most effective method.

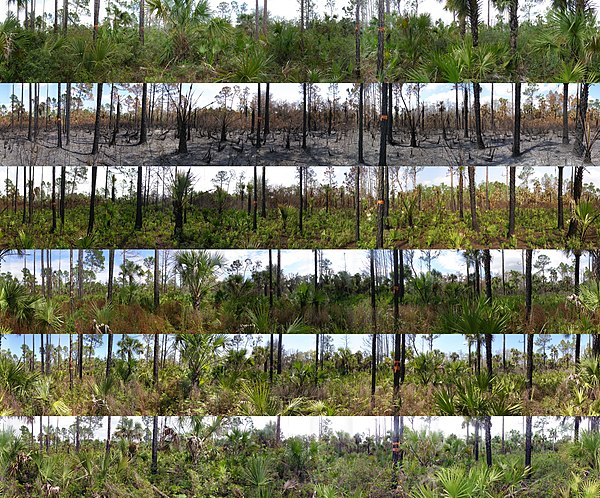

A combination of photos taken at a photo point at Florida Panther National Wildlife Refuge (NWR). The photos are panoramic and cover a 360-degree view from a monitoring point. They range from pre-burn to two years post-burn.

Fire components

A fire regime describes the characteristics of fire and how it interacts with a particular ecosystem. Fire "severity" is a term that ecologists use to refer to the impact that a fire has on an ecosystem. Ecologists can define it in many ways, but one way is through an estimate of plant mortality. Fire can burn at three levels. Ground fires burn through soil that is rich in organic matter. Surface fires burn through dead plant material lying on the ground. Crown fires burn in the tops of shrubs and trees. Ecosystems generally experience a mix of all three.

Fires often break out during a dry season, but in some areas wildfires may also commonly occur during the time of year when lightning is prevalent. Fire frequency, the rate at which fire recurs at a particular location over a span of years, is a measure of how common wildfires are in a given ecosystem. It is defined either as the average interval between fires at a given site or as the average interval between fires in an equivalent specified area.
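As a worked illustration of the site-based definition, the mean fire interval is simply the average gap between successive fire years recorded at one site, for example from dated fire scars. The Python sketch below assumes such a list of fire years. The function name `mean_fire_interval` and the sample record are hypothetical and are not drawn from any study cited here.

```python
from statistics import mean


def mean_fire_interval(fire_years: list[int]) -> float:
    """Average number of years between successive fires at one site.

    Hypothetical helper: expects at least two distinct fire years.
    """
    years = sorted(set(fire_years))  # order the record and drop duplicate entries
    if len(years) < 2:
        raise ValueError("need at least two fire years to compute an interval")
    # Gaps between each fire and the next one in the record
    intervals = [later - earlier for earlier, later in zip(years, years[1:])]
    return mean(intervals)


# Hypothetical fire-scar record for a single site
record = [1850, 1862, 1871, 1889, 1895]
print(mean_fire_interval(record))  # 11.25
```

For a record of n fires, this value equals the span from first to last fire divided by n − 1. It therefore depends on how long the record is and on how completely fires were detected.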

Defined as the energy released per unit length of fireline (kW m−1), wildfire intensity can be estimated either as

- the product of
  - the linear spread rate (m s−1),
  - the low heat of combustion (kJ kg−1),
  - and the combusted fuel mass per unit area (kg m−2),
- or from the flame length.
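The product in the first bullet is Byram's fireline intensity, I = H w r. Here H is the low heat of combustion, w is the combusted fuel mass per unit area, and r is the linear spread rate. The units multiply out as kJ kg−1 × kg m−2 × m s−1 = kJ s−1 m−1 = kW m−1. The Python sketch below computes this product. For the flame-length route, it assumes Byram's commonly cited empirical relation L = 0.0775 I^0.46, with L in metres. That relation, the function names, and the example fuel values are illustrative assumptions. Flame-length models and their coefficients vary with fuel type.

```python
"""Minimal sketch: Byram-style fireline intensity and a flame-length relation.

Assumptions (not from the article): the flame-length coefficients 0.0775 and
0.46 are Byram's commonly cited empirical values; the example inputs are
hypothetical.
"""


def fireline_intensity(heat_of_combustion_kj_per_kg: float,
                       fuel_consumed_kg_per_m2: float,
                       spread_rate_m_per_s: float) -> float:
    """Return intensity in kW per metre of fireline.

    kJ/kg * kg/m^2 * m/s = kJ/(m*s) = kW/m
    """
    return heat_of_combustion_kj_per_kg * fuel_consumed_kg_per_m2 * spread_rate_m_per_s


def flame_length_from_intensity(intensity_kw_per_m: float) -> float:
    """Flame length (m) from intensity via the assumed L = 0.0775 * I**0.46."""
    return 0.0775 * intensity_kw_per_m ** 0.46


def intensity_from_flame_length(flame_length_m: float) -> float:
    """Invert the same assumed relation: I = (L / 0.0775) ** (1 / 0.46)."""
    return (flame_length_m / 0.0775) ** (1.0 / 0.46)


if __name__ == "__main__":
    # Hypothetical surface fire: 18 000 kJ/kg, 0.5 kg/m^2 consumed, 0.05 m/s spread
    i = fireline_intensity(18_000, 0.5, 0.05)
    print(f"intensity: {i:.0f} kW/m")
    print(f"flame length: {flame_length_from_intensity(i):.2f} m")
```

Multiplying the three inputs directly keeps the unit bookkeeping visible. Inverting the flame-length relation gives a rough intensity estimate when only flame length was observed in the field.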

Radiata pine plantation burnt during the 2003 Eastern Victorian alpine bushfires, Australia

Abiotic responses

Fires can affect soils through heating and combustion processes. Different effects occur depending on the soil temperatures reached during combustion. At the lower temperature ranges, water evaporates; at higher temperatures, soil organic matter combusts and pyrogenic organic matter, such as charcoal, forms.

Fires can cause changes in soil nutrients through a variety of mechanisms, including oxidation, volatilization, erosion, and leaching by water, but temperatures must usually be high for significant nutrient loss to occur. However, the quantity of nutrients available in soils usually increases because of the ash that is generated. These nutrients become available quickly, as opposed to the slow release of nutrients by decomposition. Rock spalling (or thermal exfoliation) accelerates the weathering of rock and potentially the release of some nutrients.

An increase in soil pH following a fire is commonly observed. This is most likely due to the formation of calcium carbonate and its subsequent decomposition to calcium oxide when temperatures get even higher. It could also be due to the increased cation content that the ash adds to the soil, which temporarily increases soil pH. Microbial activity in the soil might also increase because of the heating of the soil and its increased nutrient content, although studies have also found complete loss of microbes in the top layer of soil after a fire. Overall, soils become more basic (higher pH) following fires because acids are consumed by combustion. By driving novel chemical reactions at high temperatures, fire can even alter the texture and structure of soils by affecting the clay content and the soil's porosity.
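The calcium pathway described above can be written as two standard reactions. They are shown here as an illustrative sketch, not a complete account of post-fire soil chemistry. First, heating decomposes calcium carbonate to calcium oxide and carbon dioxide. Calcium oxide then reacts with soil water to form calcium hydroxide, which releases hydroxide ions and so raises pH.

$$
\mathrm{CaCO_3} \xrightarrow{\text{heat}} \mathrm{CaO} + \mathrm{CO_2}
$$

$$
\mathrm{CaO} + \mathrm{H_2O} \longrightarrow \mathrm{Ca(OH)_2} \longrightarrow \mathrm{Ca^{2+}} + 2\,\mathrm{OH^{-}}
$$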

Removal of vegetation following a fire can have several effects on the soil. More solar radiation reaches the soil surface, raising soil temperatures during the day, and radiative heat loss causes greater cooling at night. With fewer leaves to intercept rain, more rain reaches the soil surface, and with fewer plants to absorb the water, soil water content might increase. However, ash can be water-repellent when dry, so water content and availability might not actually increase.

Biotic responses and adaptations

Plants

Lodgepole pine cones

Plants have evolved many adaptations to cope with fire. Of these, one of the best known is likely pyriscence, in which the maturation and release of seeds is triggered, in whole or in part, by fire or smoke. This behaviour is often erroneously called serotiny, although that term properly denotes the much broader category of seed release activated by any stimulus. All pyriscent plants are serotinous, but not all serotinous plants are pyriscent (some are necriscent, hygriscent, xeriscent, soliscent, or some combination thereof). Germination of seed activated by a trigger, on the other hand, is not to be confused with pyriscence; it is known as physiological dormancy.

In chaparral communities in Southern California, for example, some plants have leaves coated in flammable oils that encourage an intense fire. The heat causes their fire-activated seeds to germinate (an example of dormancy), and the young plants can then capitalize on the lack of competition in a burnt landscape. Other plants have smoke-activated seeds or fire-activated buds. The cones of the lodgepole pine (Pinus contorta), by contrast, are pyriscent: they are sealed with a resin that a fire melts away, releasing the seeds. Many plant species, including the shade-intolerant giant sequoia (Sequoiadendron giganteum), require fire to make gaps in the vegetation canopy that let in light. These gaps allow their seedlings to compete with the more shade-tolerant seedlings of other species and so establish themselves.

Because plants are stationary and cannot avoid fire, plant species can only be fire-intolerant, fire-tolerant, or fire-resistant.

Fire intolerance

Fire-intolerant plant species tend to be highly flammable and are destroyed completely by fire. Some of these plants and their seeds may simply fade from the community after a fire and not return; others have adapted to ensure that their offspring survives into the next generation. "Obligate seeders" are plants with large, fire-activated seed banks that germinate, grow, and mature rapidly following a fire, in order to reproduce and renew the seed bank before the next fire.

Seeds may contain the receptor protein KAI2, which is activated by karrikins, germination-stimulating compounds found in the smoke of burning plant material.

Typical regrowth after an Australian bushfire

Fire tolerance

Fire-tolerant species are able to withstand a degree of burning and continue growing despite damage from fire. These plants are sometimes referred to as "resprouters". Ecologists have shown that some species of resprouters store extra energy in their roots to aid recovery and regrowth following a fire. For example, after an Australian bushfire, the mountain grey gum (Eucalyptus cypellocarpa) starts producing a mass of leafy shoots from the base of the tree all the way up the trunk towards the top. This makes it look like a black stick completely covered with young, green leaves.

Fire resistance

Fire-resistant plants suffer little damage during a characteristic fire regime. These include large trees whose flammable parts are high above surface fires. Mature ponderosa pine (Pinus ponderosa) is an example of a tree species that suffers virtually no crown damage under a naturally mild fire regime, because it sheds its lower, vulnerable branches as it matures.

Animals, birds and microbes

A mixed flock of hawks hunting in and around a bushfire

Like plants, animals display a range of abilities to cope with fire, but they differ from most plants in that they must avoid the actual fire to survive. Although birds are vulnerable when nesting, they are generally able to escape a fire. Indeed, they often profit from being able to take prey fleeing from a fire and to recolonize burned areas quickly afterwards. Some anthropological and ethno-ornithological evidence suggests that certain species of fire-foraging raptors may engage in intentional fire propagation to flush out prey. Mammals are often capable of fleeing a fire, or of seeking cover if they can burrow. Amphibians and reptiles may avoid flames by burrowing into the ground or using the burrows of other animals. Amphibians in particular are able to take refuge in water or very wet mud.

Some arthropods also take shelter during a fire, although the heat and smoke may actually attract some of them, to their peril. Microbial organisms in the soil vary in their heat tolerance but are more likely to survive a fire the deeper they are in the soil. A low fire intensity, a quick passing of the flames, and a dry soil will also help. An increase in available nutrients after the fire has passed may result in larger microbial communities than before the fire.

Bacteria generally tolerate heat better than fungi, so the diversity of soil microbial populations can change following a fire. The extent of this change depends on the severity of the fire, the depth of the microbes in the soil, and the presence of plant cover. Certain species of fungi, such as Cylindrocarpon destructans, appear to be unaffected by combustion contaminants that can inhibit the repopulation of burnt soil by other microorganisms. These fungi therefore have a higher chance of surviving fire disturbance and then recolonizing and outcompeting other fungal species afterwards.

Fire and ecological succession

Fire behavior is different in every ecosystem, and the organisms in those ecosystems have adapted accordingly. One sweeping generality is that in all ecosystems, fire creates a mosaic of different habitat patches, with areas ranging from those having just been burned to those that have been untouched by fire for many years. This is a form of ecological succession in which a freshly burned site will progress through continuous and directional phases of colonization following the destruction caused by the fire.

Ecologists usually characterize succession through the changes in vegetation that successively arise. After a fire, the first species to recolonize will be those whose seeds are already present in the soil or whose seeds are able to travel into the burned area quickly. These are generally fast-growing herbaceous plants that require light and are intolerant of shading. As time passes, more slowly growing, shade-tolerant woody species will suppress some of the herbaceous plants.

Conifers are often early successional species, while broadleaf trees frequently replace them in the absence of fire. Hence, many conifer forests are themselves dependent upon recurring fire.

Different species of plants, animals, and microbes specialize in exploiting different stages in this process of succession, and by creating these different types of patches, fire allows a greater number of species to exist within a landscape. Soil characteristics will be a factor in determining the specific nature of a fire-adapted ecosystem, as will climate and topography.

Some examples of fire in different ecosystems

Forests

Mild to moderate fires burn in the forest understory, removing small trees and herbaceous groundcover. High-severity fires will burn into the crowns of the trees and kill most of the dominant vegetation. Crown fires may require support from ground fuels to maintain the fire in the forest canopy (passive crown fires), or the fire may burn in the canopy independently of any ground fuel support (an active crown fire). High-severity fire creates complex early seral forest habitat, or snag forest, with high levels of biodiversity. When a forest burns frequently and thus has less plant litter build-up, below-ground soil temperatures rise only slightly and will not be lethal to roots that lie deep in the soil. Although other characteristics of a forest will influence the impact of fire upon it, factors such as climate and topography play an important role in determining fire severity and fire extent. Fires spread most widely during drought years, are most severe on upper slopes, and are influenced by the type of vegetation that is growing.

Forests in British Columbia

In Canada, forests cover about 10% of the land area and yet harbor 70% of the country's bird and terrestrial mammal species. Natural fire regimes are important in maintaining a diverse assemblage of vertebrate species in up to twelve different forest types in British Columbia.

Different species have adapted to exploit the different stages of succession, regrowth, and habitat change that occur following an episode of burning, such as downed trees and debris. The characteristics of the initial fire, such as its size and intensity, cause the habitat to evolve differently afterwards and influence how vertebrate species are able to use the burned areas.

Shrublands

Lightning-sparked wildfires are frequent occurrences on shrublands and grasslands in Nevada.

Shrub fires typically concentrate in the canopy and spread continuously if the shrubs are close enough together. Shrublands are typically dry and are prone to accumulations of highly volatile fuels, especially on hillsides. Fires will follow the path of least moisture and the greatest amount of dead fuel material. Surface and below-ground soil temperatures during a burn are generally higher than those of forest fires because the centers of combustion lie closer to the ground, although this can vary greatly. Common plants in shrubland or chaparral include manzanita, chamise, and coyote brush.

California shrublands

California shrubland, commonly known as chaparral, is a widespread plant community of low growing species, typically on arid sloping areas of the California Coast Ranges or western foothills of the Sierra Nevada. There are a number of common shrubs and tree shrub forms in this association, including salal, toyon, coffeeberry and Western poison oak. Regeneration following a fire is usually a major factor in the association of these species.

South African Fynbos shrublands

Fynbos shrublands occur in a small belt across South Africa. The plant species in this ecosystem are highly diverse, yet the majority of them are obligate seeders: a fire causes their seeds to germinate, and the plants begin a new life cycle. These plants may have evolved as obligate seeders in response to fire and nutrient-poor soils.

Because fire is common in this ecosystem and the soil has limited nutrients, it is most efficient for plants to produce many seeds and then die in the next fire. Investing a lot of energy in roots to survive the next fire, when those roots would be able to extract little extra benefit from the nutrient-poor soil, would be less efficient. The rapid generation time of these obligate seeders may have led to more rapid evolution and speciation in this ecosystem, resulting in its highly diverse plant community.

Grasslands

Grasslands burn more readily than forest and shrub ecosystems. The fire moves through the stems and leaves of herbaceous plants and only lightly heats the underlying soil, even in cases of high intensity. In most grassland ecosystems, fire is the primary mode of decomposition, making it crucial in the recycling of nutrients.

In some grassland systems, fire only became the primary mode of decomposition after the disappearance of large migratory herds of browsing or grazing megafauna driven by predator pressure. In the absence of functional communities of large migratory herds of herbivorous megafauna and attendant predators, overuse of fire to maintain grassland ecosystems may lead to excessive oxidation, loss of carbon, and desertification in susceptible climates. Some grassland ecosystems respond poorly to fire.

North American grasslands

In North America, fire-adapted invasive grasses such as Bromus tectorum contribute to increased fire frequency, which exerts selective pressure against native species. This is a concern for grasslands in the Western United States.

In less arid grasslands, presettlement fires worked in concert with grazing to create a healthy grassland ecosystem, as indicated by the accumulation of soil organic matter significantly altered by fire.

The tallgrass prairie ecosystem in the Flint Hills of eastern Kansas and Oklahoma is responding positively to the current use of fire in combination with grazing.

South African savanna

In the savanna of South Africa, recently burned areas have new growth that provides more palatable and nutritious forage than older, tougher grasses. This new forage attracts large herbivores away from areas of unburned grassland that have been kept short by constant grazing. On these unburned "lawns", only plant species adapted to heavy grazing are able to persist. However, while the herbivores are drawn to the newly burned areas, grazing-intolerant grasses can grow back into the temporarily abandoned lawns, allowing these species to persist within the ecosystem.

Longleaf pine savannas

Yellow pitcher plant is dependent upon recurring fire in coastal plain savannas and flatwoods.

Much of the southeastern United States was once open longleaf pine forest with a rich understory of grasses, sedges, carnivorous plants, and orchids. The maps above show that these ecosystems (coded as pale blue) had the highest fire frequency of any habitat, with fires recurring at intervals of a decade or less. Without fire, deciduous forest trees invade, and their shade eliminates both the pines and the understory. Some of the typical plants associated with fire include yellow pitcher plant and rose pogonia. The abundance and diversity of such plants are closely related to fire frequency. Rare animals such as gopher tortoises and indigo snakes also depend upon these open grasslands and flatwoods. Hence, the restoration of fire is a priority to maintain species composition and biological diversity.

Fire in wetlands

Although it may seem strange, many kinds of wetlands are also influenced by fire. This usually occurs during periods of drought. In landscapes with peat soils, such as bogs, the peat substrate itself may burn, leaving holes that refill with water as new ponds. Fires that are less intense will remove accumulated litter and allow other wetland plants to regenerate from buried seeds or from rhizomes. Wetlands that are influenced by fire include coastal marshes, wet prairies, peat bogs, floodplains, prairie marshes, and flatwoods.

Since wetlands can store large amounts of carbon in peat, the fire frequency of vast northern peatlands is linked to processes controlling the carbon dioxide levels of the atmosphere and to the phenomenon of global warming.

Dissolved organic carbon (DOC) is abundant in wetlands and plays a critical role in their ecology. In the Florida Everglades, a significant portion of the DOC is "dissolved charcoal" indicating that fire can play a critical role in wetland ecosystems.

Fire suppression

Fire serves many important functions within fire-adapted ecosystems. Fire plays an important role in nutrient cycling, diversity maintenance, and habitat structure. The suppression of fire can lead to unforeseen changes in ecosystems that often adversely affect the plants, animals, and humans that depend upon that habitat. Wildfires that deviate from a historical fire regime because of fire suppression are called "uncharacteristic fires".

Chaparral communities

In 2003, southern California witnessed powerful chaparral wildfires. Hundreds of homes and hundreds of thousands of acres of land went up in flames. Extreme fire weather (low humidity, low fuel moisture, and high winds) and the accumulation of dead plant material from eight years of drought contributed to a catastrophic outcome. Although some have maintained that fire suppression contributed to an unnatural buildup of fuel loads, a detailed analysis of historical fire data has shown that this may not have been the case.

Fire suppression activities had failed to exclude fire from the southern California chaparral. Research showing differences in fire size and frequency between southern California and Baja California has been used to imply that the larger fires north of the border are the result of fire suppression. This opinion has been challenged by numerous investigators and is no longer supported by the majority of fire ecologists.

One consequence of the fires in 2003 has been the increased density of invasive and non-native plant species that have quickly colonized burned areas, especially those that had already been burned in the previous 15 years. Because shrubs in these communities are adapted to a particular historical fire regime, altered fire regimes may change the selective pressures on plants and favor invasive and non-native species that are better able to exploit the novel post-fire conditions.

Fish impacts

The Boise National Forest is a US national forest located north and east of the city of Boise, Idaho.

Following several uncharacteristically large wildfires, an immediate negative impact on fish populations was observed, posing particular danger to small and isolated fish populations. In the long term, however, fire appears to rejuvenate fish habitats by causing hydraulic changes that increase flooding and lead to silt removal and the deposition of a favorable habitat substrate. This leads to larger post-fire populations of the fish that are able to recolonize these improved areas.

But although fire generally appears favorable for fish populations in these ecosystems, the more intense effects of uncharacteristic wildfires, in combination with the fragmentation of populations by human barriers to dispersal such as weirs and dams, will pose a threat to fish populations.

Fire as a management tool

Restoration ecology is the name given to an attempt to reverse or mitigate some of the changes that humans have caused to an ecosystem. Controlled burning is one tool that is currently receiving considerable attention as a means of restoration and management. Applying fire to an ecosystem may create habitats for species that have been negatively impacted by fire suppression, or fire may be used as a way of controlling invasive species without resorting to herbicides or pesticides. However, there is debate over what state managers should aim to restore their ecosystems to, especially whether "natural" means pre-human or pre-European. Native American use of fire, not natural fires, historically maintained the diversity of the savannas of North America. When, how, and where managers should use fire as a management tool is a subject of debate.

The Great Plains shortgrass prairie

A combination of heavy livestock grazing and fire suppression has drastically altered the structure, composition, and diversity of the shortgrass prairie ecosystem on the Great Plains, allowing woody species to dominate many areas and promoting fire-intolerant invasive species. In semi-arid ecosystems where the decomposition of woody material is slow, fire is crucial for returning nutrients to the soil and allowing the grasslands to maintain their high productivity.

Although fire can occur during the growing or the dormant season, managed fire during the dormant season is most effective at increasing grass and forb cover, biodiversity, and plant nutrient uptake in shortgrass prairies.

Managers must also take into account, however, how invasive and non-native species respond to fire if they want to restore the integrity of a native ecosystem. For example, fire can control the invasive spotted knapweed (Centaurea maculosa) on the Michigan tallgrass prairie only in the summer, because this is the time in the knapweed's life cycle that is most important to its reproductive growth.

Mixed conifer forests in the US Sierra Nevada

Mixed conifer forests in the United States Sierra Nevada used to have fire return intervals that ranged from 5 years up to 300 years, depending on the local climate. Lower elevations had shorter fire return intervals, whilst higher and wetter elevations saw much longer intervals between fires. Native Americans tended to set fires during fall and winter, and land at higher elevations was generally occupied by Native Americans only during the summer.

Finnish boreal forests

The decline of habitat area and quality has caused many species populations to be red-listed by the International Union for Conservation of Nature. According to a study on forest management of Finnish boreal forests, improving the habitat quality of areas outside reserves can help in conservation efforts for endangered deadwood-dependent beetles. These beetles and various types of fungi both need dead trees in order to survive. Old-growth forests can provide this particular habitat. However, most Fennoscandian boreal forested areas are used for timber and are therefore unprotected. The study examined how controlled burning and tree retention in a forested area with deadwood affected the endangered beetles. It found that after the first year of management, beetle species abundance and richness had increased compared with pre-treatment levels. The abundance of beetles continued to increase the following year in sites where tree retention was high and deadwood was abundant. This correlation between forest fire management and increased beetle populations suggests that fire management could be key to conserving these red-listed species.

Australian eucalypt forests

Much of the old-growth eucalypt forest in Australia is designated for conservation. Management of these forests is important because species like Eucalyptus grandis rely on fire to survive. A few eucalypt species do not have a lignotuber, a swollen root structure containing buds from which new shoots can sprout. During a fire, a lignotuber helps the plant re-establish itself. Because some eucalypts lack this mechanism, forest fire management can help them by creating rich soil, killing competitors, and allowing seeds to be released.

Management policies

United States

Fire policy in the United States involves the federal government, individual state governments, tribal governments, interest groups, and the general public. The new federal outlook on fire policy parallels advances in ecology and is moving towards the view that many ecosystems depend on disturbance for their diversity and for the proper maintenance of their natural processes. Although human safety is still the number one priority in fire management, new US government objectives include a long-term view of ecosystems. The newest policy allows managers to gauge the relative values of private property and resources in particular situations and to set their priorities accordingly.

One of the primary goals in fire management is to improve public education in order to counter the "Smokey Bear" fire-suppression mentality and introduce the public to the benefits of regular natural fires.