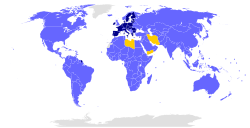

World map of countries by their raw ecological footprint, relative to the world average biocapacity (2007).

National ecological surplus or deficit, measured as a country's biocapacity per person (in global hectares) minus its ecological footprint per person (also in global hectares). Data from 2013. The map legend runs from −9 to 8.

The ecological footprint measures human demand on nature, i.e., the quantity of nature it takes to support people or an economy. It tracks this demand through an ecological accounting system. The accounts contrast the biologically productive area people use for their consumption with the biologically productive area available within a region or the world (biocapacity, the productive area that can regenerate what people demand from nature). In short, it is a measure of human impact on Earth's ecosystem and reveals the dependence of the human economy on natural capital.

Footprint and biocapacity can be compared at the individual, regional, national or global scale. Both footprint and biocapacity change every year with the number of people, per-person consumption, efficiency of production, and productivity of ecosystems. At a global scale, footprint assessments show how big humanity's demand is compared to what planet Earth can renew. Since 2003, Global Footprint Network has calculated the ecological footprint from UN data sources for the world as a whole and for over 200 nations (known as the National Footprint Accounts). Every year the calculations are updated with the newest data. The time series are recalculated with every update, since UN statistics also change historical data sets. As shown in Lin et al. (2018), the time trends for countries and the world have stayed consistent despite data updates. Also, a recent study by the Swiss Ministry of Environment independently recalculated the Swiss trends and reproduced them within 1–4% for the time period that they studied (1996–2015). Global Footprint Network estimates that, as of 2014, humanity has been using natural capital 1.7 times as fast as Earth can renew it. This means humanity's ecological footprint corresponds to 1.7 planet Earths.

Ecological footprint analysis is widely used around the world in support of sustainability assessments. It enables people to measure and manage the use of resources throughout the economy and explore the sustainability of individual lifestyles, goods and services, organizations, industry sectors, neighborhoods, cities, regions and nations. Since 2006, a first set of ecological footprint standards has existed that details both communication and calculation procedures. The latest version is the updated standards from 2009.

Footprint measurements and methodology

The natural resources of Earth are finite, and unsustainably strained by current levels of human activity.

For 2014, Global Footprint Network estimated humanity's ecological footprint as 1.7 planet Earths. This means that, according to their calculations, humanity used resources 1.7 times as fast as the planet's ecosystems could renew them.
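
The "number of Earths" figure is a ratio of demand to regeneration. The following is a minimal Python sketch of that ratio, assuming the global totals quoted elsewhere in this article from the 2018 National Footprint Accounts: a footprint of 20.6 billion gha (2014) and a biocapacity of 12.2 billion gha (which the article dates to 2016, so the pairing is approximate). The function name `number_of_earths` is illustrative and not part of any published tool.

```python
def number_of_earths(footprint_gha: float, biocapacity_gha: float) -> float:
    """Return demand on nature divided by what ecosystems can renew."""
    if biocapacity_gha <= 0:
        raise ValueError("biocapacity must be positive")
    return footprint_gha / biocapacity_gha


# Global totals in billions of global hectares, as quoted in this article.
earths = number_of_earths(footprint_gha=20.6, biocapacity_gha=12.2)
print(f"{earths:.1f} Earths")  # prints "1.7 Earths"
```

A value above 1 indicates that demand exceeds what ecosystems renewed in that year.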

Ecological footprints can be calculated at any scale: for an activity, a person, a community, a city, a town, a region, a nation, or humanity as a whole. Cities, due to their population concentration, have large ecological footprints and have become ground zero for footprint reduction.

The ecological footprint accounting method at the national level is described on the web page of Global Footprint Network or in greater detail in academic papers, including Borucke et al.

The National Accounts Review Committee has also published a research agenda on how to improve the accounts.

Overview

The first academic publication about ecological footprints was by William Rees in 1992. The ecological footprint concept and calculation method were developed as the PhD dissertation of Mathis Wackernagel, under Rees' supervision at the University of British Columbia in Vancouver, Canada, from 1990 to 1994. Originally, Wackernagel and Rees called the concept "appropriated carrying capacity". To make the idea more accessible, Rees came up with the term "ecological footprint", inspired by a computer technician who praised his new computer's "small footprint on the desk". In early 1996, Wackernagel and Rees published the book Our Ecological Footprint: Reducing Human Impact on the Earth with illustrations by Phil Testemale.

Footprint values at the end of a survey are categorized for Carbon, Food, Housing, and Goods and Services, and are reported together with the total number of Earths needed to sustain the world's population at that level of consumption. This approach can also be applied to an activity such as the manufacturing of a product or driving of a car. This resource accounting is similar to life-cycle analysis, wherein the consumption of energy, biomass (food, fiber), building material, water and other resources is converted into a normalized measure of land area called global hectares (gha).

The focus of Ecological Footprint accounting is biological resources. Rather than non-renewable resources like oil or minerals, it is the biological resources that are materially the most limiting resources for the human enterprise. For instance, while the amount of fossil fuel still underground is limited, even more limiting is the biosphere's ability to cope with the CO2 emitted when burning it. This ability is one of the competing uses of the planet's biocapacity. Similarly, minerals are limited by the energy available to extract them from the lithosphere and concentrate them. The limits of ecosystems' ability to renew biomass are set by factors such as water availability, climate, soil fertility, solar energy, technology and management practices. This capacity to renew, driven by photosynthesis, is called biocapacity.

Per capita ecological footprint (EF), or ecological footprint analysis (EFA), is a means of comparing consumption and lifestyles, and checking this against biocapacity, nature's ability to provide for this consumption. The tool can inform policy by examining to what extent a nation uses more (or less) than is available within its territory, or to what extent the nation's lifestyle would be replicable worldwide. The footprint can also be a useful tool to educate people about carrying capacity and overconsumption, with the aim of altering personal behavior. Ecological footprints may be used to argue that many current lifestyles are not sustainable.

Such a global comparison also clearly shows the inequalities of resource use on this planet at the beginning of the twenty-first century.

In 2007, the average biologically productive area per person worldwide was approximately 1.8 global hectares (gha) per capita. The U.S. footprint per capita was 9.0 gha, and that of Switzerland was 5.6 gha, while China's was 1.8 gha. The WWF claims that the human footprint has exceeded the biocapacity (the available supply of natural resources) of the planet by 20%. Wackernagel and Rees originally estimated that the available biological capacity for the 6 billion people on Earth at that time was about 1.3 hectares per person, which is smaller than the 1.8 global hectares published for 2006, because the initial studies neither used global hectares nor included bioproductive marine areas.
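
The question of whether a nation's lifestyle would be replicable worldwide can be illustrated by dividing its per capita footprint by the world-average biocapacity per person. The sketch below uses only the 2007 figures quoted above; the function and variable names are hypothetical and chosen for illustration.

```python
# World-average biologically productive area per person in 2007 (gha),
# as quoted in the text.
WORLD_BIOCAPACITY_PER_PERSON = 1.8

# Per capita footprints in gha for 2007, as quoted in the text.
footprints_2007 = {"United States": 9.0, "Switzerland": 5.6, "China": 1.8}


def earths_if_everyone_lived_like(
    footprint_per_person: float,
    biocapacity_per_person: float = WORLD_BIOCAPACITY_PER_PERSON,
) -> float:
    """Planets needed if the whole world had this per capita footprint."""
    return footprint_per_person / biocapacity_per_person


for country, footprint in footprints_2007.items():
    earths = earths_if_everyone_lived_like(footprint)
    print(f"{country}: {earths:.1f} Earths")
```

With these inputs, the U.S. lifestyle corresponds to about 5 Earths, the Swiss lifestyle to about 3, and China's to about 1.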

A number of NGOs offer ecological footprint calculators.

Global trends in humanity's ecological footprint

According to the 2018 edition of the National Footprint Accounts, humanity's total ecological footprint has exhibited an increasing trend since 1961, growing an average of 2.1% per year (SD = 1.9). Humanity's ecological footprint was 7.0 billion gha in 1961 and increased to 20.6 billion gha in 2014. The world-average ecological footprint in 2014 was 2.8 global hectares per person.
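
As a rough consistency check on these figures, the compound annual growth rate implied by the 1961 and 2014 totals can be computed directly. This is a sketch under the assumption of steady compound growth, which is not how the Accounts derive the quoted average (that figure is a mean of yearly rates with its own standard deviation); the function name is illustrative.

```python
def compound_annual_growth_rate(
    start_value: float, end_value: float, years: int
) -> float:
    """Constant yearly growth rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1 / years) - 1


# Humanity's footprint in billions of gha, as quoted in the text.
rate = compound_annual_growth_rate(7.0, 20.6, 2014 - 1961)
print(f"{rate:.1%}")  # prints "2.1%"
```

The implied rate agrees with the reported average of 2.1% per year to one decimal place.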

The carbon footprint is the fastest growing part of the ecological footprint and currently accounts for about 60% of humanity's total ecological footprint.

The Earth's biocapacity has not increased at the same rate as the ecological footprint. The increase of biocapacity averaged only 0.5% per year (SD = 0.7). Because of agricultural intensification, biocapacity grew from 9.6 billion gha in 1961 to 12.2 billion gha in 2016.

Therefore, the Earth has been in ecological overshoot (where humanity is using resources and generating waste faster than ecosystems can renew and absorb them) since the 1970s. In 2018, Earth Overshoot Day, the date by which humanity has used more from nature than the planet can renew in the entire year, was estimated to be August 1. Now more than 85% of humanity lives in countries that run an ecological deficit. This means their ecological footprint for consumption exceeds the biocapacity of that country.
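
One simple way to estimate an overshoot date is to scale the length of the year by the ratio of biocapacity to footprint. The sketch below assumes that formula (it is not stated in this article) and uses the rounded ratio of 1.7 quoted for 2014 rather than the data behind the published 2018 date, so its result should be read as an approximation only. The function name `overshoot_date` is hypothetical.

```python
from datetime import date, timedelta
from typing import Optional


def overshoot_date(
    year: int, biocapacity: float, footprint: float
) -> Optional[date]:
    """Estimate the day on which a year's biocapacity budget is used up.

    Assumes demand is spread evenly over the year. Returns None when
    the footprint does not exceed biocapacity (no overshoot).
    """
    days_in_year = (date(year + 1, 1, 1) - date(year, 1, 1)).days
    day_of_year = int(days_in_year * biocapacity / footprint)
    if day_of_year >= days_in_year:
        return None
    # Day 1 is January 1, so offset by day_of_year - 1 (at least 0).
    return date(year, 1, 1) + timedelta(days=max(day_of_year, 1) - 1)


# Footprint of 1.7 Earths against a biocapacity of 1 Earth.
print(overshoot_date(2018, biocapacity=1.0, footprint=1.7))  # 2018-08-02
```

With these rounded inputs the estimate lands on August 2, one day from the August 1 date given above.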

Studies in the United Kingdom

The UK's average ecological footprint is 5.45 global hectares per capita (gha) with variations between regions ranging from 4.80 gha (Wales) to 5.56 gha (East England).

Two recent studies have examined relatively low-impact small communities. BedZED, a 96-home mixed-income housing development in South London, was designed by Bill Dunster Architects and sustainability consultants BioRegional for the Peabody Trust. Despite being populated by relatively "mainstream" home-buyers, BedZED was found to have a footprint of 3.20 gha due to on-site renewable energy production, energy-efficient architecture, and an extensive green lifestyles program that included London's first carsharing club, located on-site. The report did not measure the added footprint of the 15,000 visitors who have toured BedZED since its completion in 2002. Findhorn Ecovillage, a rural intentional community in Moray, Scotland, had a total footprint of 2.56 gha, including both the many guests and visitors who travel to the community to undertake residential courses there and the nearby campus of Cluny Hill College. However, the residents alone have a footprint of 2.71 gha, about half the UK national average and one of the lowest ecological footprints of any community measured so far in the industrialized world.

Keveral Farm, an organic farming community in Cornwall, was found to have a footprint of 2.4 gha, though with substantial differences in footprints among community members.

Ecological footprint at the individual level

A 2012 study compared consumers acting "green" with those acting "brown" (where green consumers are "expected to have significantly lower ecological impact than 'brown' consumers"). It concluded that "the research found no significant difference between the carbon footprints of green and brown consumers". A 2013 study concluded the same.

A 2017 study published in Environmental Research Letters posited that the most significant way individuals could reduce their own carbon footprint is to have fewer children, followed by living without a vehicle, forgoing air travel and adopting a plant-based diet. The 2017 World Scientists' Warning to Humanity: A Second Notice, co-signed by over 15,000 scientists around the globe, urges human beings to "re-examine and change our individual behaviors, including limiting our own reproduction (ideally to replacement level at most) and drastically diminishing our per capita consumption of fossil fuels, meat, and other resources." According to a 2018 study published in Science, avoiding animal products altogether, including meat and dairy, is the most significant way individuals can reduce their overall ecological impact on earth, meaning "not just greenhouse gases, but global acidification, eutrophication, land use and water use".

Reviews and critiques

Early criticism was published by van den Bergh and Verbruggen in 1999, which was updated in 2014. Another criticism was published in 2008. A more complete review commissioned by the Directorate-General for the Environment (European Commission) was published in June 2008. The review found Ecological Footprint "a useful indicator for assessing progress on the EU’s Resource Strategy"; the authors noted that Ecological Footprint analysis was unique "in its ability to relate resource use to the concept of carrying capacity." The review noted that further improvements in data quality, methodologies and assumptions were needed.

A recent critique of the concept is due to Blomqvist et al., 2013a, with a reply from Rees and Wackernagel, 2013, and a rejoinder by Blomqvist et al., 2013b.

An additional strand of critique is due to Giampietro and Saltelli (2014a), with a reply from Goldfinger et al., 2014, a rejoinder by Giampietro and Saltelli (2014b), and additional comments from van den Bergh and Grazi (2015).

A number of countries have engaged in research collaborations to test the validity of the method. These include Switzerland, Germany, United Arab Emirates, and Belgium.

Grazi et al. (2007) have performed a systematic comparison of the ecological footprint method with spatial welfare analysis that includes environmental externalities, agglomeration effects and trade advantages. They find that the two methods can lead to very distinct, and even opposite, rankings of different spatial patterns of economic activity. However, this should not be surprising, since the two methods address different research questions.

Newman (2006) has argued that the ecological footprint concept may have an anti-urban bias, as it does not consider the opportunities created by urban growth.

Calculating the ecological footprint for densely populated areas, such as a city or a small country with a comparatively large population (e.g. New York and Singapore respectively), may lead to the perception of these populations as "parasitic". This is because these communities have little intrinsic biocapacity, and instead must rely upon large hinterlands. Critics argue that this is a dubious characterization since mechanized rural farmers in developed nations may easily consume more resources than urban inhabitants, due to transportation requirements and the unavailability of economies of scale. Furthermore, such moral conclusions seem to be an argument for autarky. Some even take this train of thought a step further, claiming that the Footprint denies the benefits of trade. Therefore, the critics argue that the Footprint can only be applied globally.

The method seems to reward the replacement of original ecosystems with high-productivity agricultural monocultures by assigning a higher biocapacity to such regions. For example, replacing ancient woodlands or tropical forests with monoculture forests or plantations may improve the ecological footprint. Similarly, if organic farming yields were lower than those of conventional methods, this could result in the former being "penalized" with a larger ecological footprint.

Of course, this insight, while valid, stems from the idea of using the footprint as one's only metric. If the use of ecological footprints is complemented with other indicators, such as one for biodiversity, the problem might be solved. Indeed, WWF's Living Planet Report complements the biennial Footprint calculations with the Living Planet Index of biodiversity.

Manfred Lenzen and Shauna Murray have created a modified Ecological Footprint that takes biodiversity into account for use in Australia.

Although the ecological footprint model prior to 2008 treated nuclear power in the same manner as coal power, the real-world effects of the two are radically different. A life cycle analysis centered on the Swedish Forsmark Nuclear Power Plant estimated carbon dioxide emissions at 3.10 g/kW⋅h, and emissions for the Torness Nuclear Power Station were estimated at 5.05 g/kW⋅h in 2002. This compares to 11 g/kW⋅h for hydroelectric power, 950 g/kW⋅h for installed coal, 900 g/kW⋅h for oil and 600 g/kW⋅h for natural gas generation in the United States in 1999. Figures released by Mark Hertsgaard, however, show that because of the delays in building nuclear plants and the costs involved, investments in energy efficiency and renewable energies have seven times the return on investment of investments in nuclear energy.

The Swedish utility Vattenfall did a study of full life-cycle greenhouse-gas emissions of the energy sources the utility uses to produce electricity, namely nuclear, hydro, coal, gas, solar cell, peat and wind. The net result of the study was that nuclear power produced 3.3 grams of carbon dioxide per kW⋅h of produced power. This compares to 400 g/kW⋅h for natural gas and 700 g/kW⋅h for coal (according to this study). The study also concluded that nuclear power produced the smallest amount of CO2 of any of their electricity sources.

Claims exist that the problems of nuclear waste do not come anywhere close to approaching the problems of fossil fuel waste. A 2004 article from the BBC states: "The World Health Organization (WHO) says 3 million people are killed worldwide by outdoor air pollution annually from vehicles and industrial emissions, and 1.6 million indoors through using solid fuel." In the U.S. alone, fossil fuel waste kills 20,000 people each year. A coal power plant releases 100 times as much radiation as a nuclear power plant of the same wattage. It is estimated that during 1982, US coal burning released 155 times as much radioactivity into the atmosphere as the Three Mile Island incident. In addition, fossil fuel waste causes global warming, which leads to increased deaths from hurricanes, flooding, and other weather events. The World Nuclear Association provides a comparison of deaths due to accidents among different forms of energy production. In their comparison, deaths per TW-yr of electricity produced (in UK and USA) from 1970 to 1992 are quoted as 885 for hydropower, 342 for coal, 85 for natural gas, and 8 for nuclear.

The Western Australian government State of the Environment Report included an Ecological Footprint measure for the average Western Australian of about 15 hectares, seven times the average footprint per person on the planet in 2007.

Footprint by country

Ecological footprint for different nations compared to their Human Development Index.

The world-average ecological footprint in 2013 was 2.8 global hectares per person. The average per country ranges from over 10 to under 1 global hectare per person. There is also a high variation within countries, based on individual lifestyle and economic possibilities.

The GHG footprint, or the narrower carbon footprint, is a component of the ecological footprint. Often, when only the carbon footprint is reported, it is expressed in weight of CO2 (or CO2e representing GHG warming potential (GGWP)), but it can also be expressed in land areas like ecological footprints. Both can be applied to products, people or whole societies.

Implications

. . . the average world citizen has an eco-footprint of about 2.7 global average hectares while there are only 2.1 global hectare of bioproductive land and water per capita on earth. This means that humanity has already overshot global biocapacity by 30% and now lives unsustainably by depleting stocks of "natural capital"
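
The overshoot percentage in the passage above follows from the two per capita figures it quotes. A minimal sketch, with an illustrative function name, shows the arithmetic; the quoted 30% appears to be a rounding of the result.

```python
def overshoot_fraction(
    footprint_per_person: float, biocapacity_per_person: float
) -> float:
    """Share by which demand exceeds available biocapacity (0.3 = 30%)."""
    return footprint_per_person / biocapacity_per_person - 1


# Per capita figures in global hectares, as quoted in the passage above.
overshoot = overshoot_fraction(2.7, 2.1)
print(f"{overshoot:.0%}")  # prints "29%"
```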