| | |
|---|---|
| Description | Around 50–100 million vertebrate animals are used in experiments annually. |
| Subjects | Animal testing, science, medicine, animal welfare, animal rights, ethics |

Animal testing, also known as animal experimentation, animal research and in vivo testing, is the use of non-human animals in experiments that seek to control the variables that affect the behavior or biological system under study. This approach can be contrasted with field studies in which animals are observed in their natural environments or habitats. Experimental research with animals is usually conducted in universities, medical schools, pharmaceutical companies, defense establishments and commercial facilities that provide animal-testing services to industry. The focus of animal testing varies on a continuum from pure research, focusing on developing fundamental knowledge of an organism, to applied research, which may focus on answering some question of great practical importance, such as finding a cure for a disease. Examples of applied research include testing disease treatments, breeding, defense research and toxicology, including cosmetics testing. In education, animal testing is sometimes a component of biology or psychology courses. The practice is regulated to varying degrees in different countries.

It is estimated that the annual use of vertebrate animals—from zebrafish to non-human primates—ranges from tens to more than 100 million. In the European Union, vertebrate species represent 93% of animals used in research, and 11.5 million animals were used there in 2011. By one estimate the number of mice and rats used in the United States alone in 2001 was 80 million. Mice, rats, fish, amphibians and reptiles together account for over 85% of research animals.

Most animals are euthanized after being used in an experiment. Sources of laboratory animals vary between countries and species; most animals are purpose-bred, while a minority are caught in the wild or supplied by dealers who obtain them from auctions and pounds. Supporters of the use of animals in experiments, such as the British Royal Society, argue that virtually every medical achievement in the 20th century relied on the use of animals in some way. The Institute for Laboratory Animal Research of the United States National Academy of Sciences has argued that animal research cannot be replaced by even sophisticated computer models, which are unable to deal with the extremely complex interactions between molecules, cells, tissues, organs, organisms and the environment. Animal rights organizations—such as PETA and BUAV—question the need for and legitimacy of animal testing, arguing that it is cruel and poorly regulated, that medical progress is actually held back by misleading animal models that cannot reliably predict effects in humans, that some of the tests are outdated, that the costs outweigh the benefits, or that animals have the intrinsic right not to be used or harmed in experimentation.

Definitions

The terms animal testing, animal experimentation, animal research, in vivo testing, and vivisection have similar denotations but different connotations. Literally, "vivisection" means "live sectioning" of an animal, and historically referred only to experiments that involved the dissection of live animals. The term is occasionally used to refer pejoratively to any experiment using living animals; for example, the Encyclopædia Britannica defines "vivisection" as: "Operation on a living animal for experimental rather than healing purposes; more broadly, all experimentation on live animals", although dictionaries point out that the broader definition is "used only by people who are opposed to such work". The word has a negative connotation, implying torture, suffering, and death. The word "vivisection" is preferred by those opposed to this research, whereas scientists typically use the term "animal experimentation".

History

The earliest references to animal testing are found in the writings of the Greeks in the 2nd and 4th centuries BC. Aristotle and Erasistratus were among the first to perform experiments on living animals. Galen, a 2nd-century Roman physician, dissected pigs and goats; he is known as the "father of vivisection". Avenzoar, a 12th-century Arab physician in Moorish Spain, also practiced dissection; he introduced animal testing as an experimental method of testing surgical procedures before applying them to human patients.

Animals have repeatedly been used through the history of biomedical research. The Dublin Zoo was founded in 1831 by members of the medical profession who were interested in studying animals while they were alive and when they were dead. In the 1880s, Louis Pasteur convincingly demonstrated the germ theory of medicine by inducing anthrax in sheep. In the same decade, Robert Koch infected mice and guinea pigs with anthrax and tuberculosis. In the 1890s, Ivan Pavlov famously used dogs to describe classical conditioning. In World War I, German agents infected sheep bound for Russia with anthrax, and inoculated mules and horses of the French cavalry with the equine glanders disease. Between 1917 and 1918, the Germans infected mules in Argentina bound for American forces, resulting in the death of 200 mules. Insulin was first isolated from dogs in 1922, and revolutionized the treatment of diabetes. On 3 November 1957, a Soviet dog, Laika, became the first of many animals to orbit the Earth. In the 1970s, antibiotic treatments and vaccines for leprosy were developed using armadillos, then given to humans. The ability of humans to change the genetics of animals took a large step forward in 1974 when Rudolf Jaenisch produced the first transgenic mammal by integrating DNA from the SV40 virus into the genome of mice. This genetic research progressed rapidly and, in 1996, Dolly the sheep was born, the first mammal to be cloned from an adult cell.

Toxicology testing became important in the 20th century. In the 19th century, laws regulating drugs were more relaxed. For example, in the US, the government could only ban a drug after a company had been prosecuted for selling products that harmed customers. However, in response to the Elixir Sulfanilamide disaster of 1937, in which the eponymous drug killed more than 100 users, the US Congress passed laws that required safety testing of drugs on animals before they could be marketed. Other countries enacted similar legislation. In the 1960s, in reaction to the thalidomide tragedy, further laws were passed requiring safety testing on pregnant animals before a drug could be sold.

Historical debate

As the experimentation on animals increased, especially the practice of vivisection, so did criticism and controversy. In 1655, the advocate of Galenic physiology Edmund O'Meara said that "the miserable torture of vivisection places the body in an unnatural state". O'Meara and others argued that animal physiology could be affected by pain during vivisection, rendering results unreliable. There were also objections on an ethical basis, contending that the benefit to humans did not justify the harm to animals. Early objections to animal testing also came from another angle—many people believed that animals were inferior to humans and so different that results from animals could not be applied to humans.

On the other side of the debate, those in favor of animal testing held that experiments on animals were necessary to advance medical and biological knowledge. Claude Bernard—who is sometimes known as the "prince of vivisectors" and the father of physiology, and whose wife, Marie Françoise Martin, founded the first anti-vivisection society in France in 1883—famously wrote in 1865 that "the science of life is a superb and dazzlingly lighted hall which may be reached only by passing through a long and ghastly kitchen". Arguing that "experiments on animals ... are entirely conclusive for the toxicology and hygiene of man...the effects of these substances are the same on man as on animals, save for differences in degree", Bernard established animal experimentation as part of the standard scientific method.

In 1896, the physiologist and physician Dr. Walter B. Cannon said "The antivivisectionists are the second of the two types Theodore Roosevelt described when he said, 'Common sense without conscience may lead to crime, but conscience without common sense may lead to folly, which is the handmaiden of crime.'" These divisions between pro- and anti-animal testing groups first came to public attention during the Brown Dog affair in the early 1900s, when hundreds of medical students clashed with anti-vivisectionists and police over a memorial to a vivisected dog.

In 1822, the first animal protection law was enacted in the British parliament, followed by the Cruelty to Animals Act (1876), the first law specifically aimed at regulating animal testing. The legislation was promoted by Charles Darwin, who wrote to Ray Lankester in March 1871: "You ask about my opinion on vivisection. I quite agree that it is justifiable for real investigations on physiology; but not for mere damnable and detestable curiosity. It is a subject which makes me sick with horror, so I will not say another word about it, else I shall not sleep to-night." In response to the lobbying by anti-vivisectionists, several organizations were set up in Britain to defend animal research: The Physiological Society was formed in 1876 to give physiologists "mutual benefit and protection", the Association for the Advancement of Medicine by Research was formed in 1882 and focused on policy-making, and the Research Defence Society (now Understanding Animal Research) was formed in 1908 "to make known the facts as to experiments on animals in this country; the immense importance to the welfare of mankind of such experiments and the great saving of human life and health directly attributable to them".

Opposition to the use of animals in medical research first arose in the United States during the 1860s, when Henry Bergh founded the American Society for the Prevention of Cruelty to Animals (ASPCA). America's first specifically anti-vivisection organization was the American Anti-Vivisection Society (AAVS), founded in 1883. Antivivisectionists of the era generally believed that the spread of mercy was the great cause of civilization and that vivisection was cruel. However, in the United States the antivivisectionists' efforts were defeated in every legislature, overwhelmed by the superior organization and influence of the medical community. Overall, this movement had little legislative success until the passing of the Laboratory Animal Welfare Act in 1966.

Care and use of animals

Regulations and laws

[Map legend: nationwide ban on all cosmetic testing on animals; partial ban on cosmetic testing on animals; ban on the sale of cosmetics tested on animals; no ban on any cosmetic testing on animals; unknown]

The regulations that apply to animals in laboratories vary across species. In the U.S., under the provisions of the Animal Welfare Act and the Guide for the Care and Use of Laboratory Animals (the Guide), published by the National Academy of Sciences, any procedure can be performed on an animal if it can be successfully argued that it is scientifically justified. In general, researchers are required to consult with the institution's veterinarian and its Institutional Animal Care and Use Committee (IACUC), which every research facility is obliged to maintain. The IACUC must ensure that alternatives, including non-animal alternatives, have been considered, that the experiments are not unnecessarily duplicative, and that pain relief is given unless it would interfere with the study. The IACUCs regulate all vertebrates in testing at institutions receiving federal funds in the USA. Although the provisions of the Animal Welfare Act do not include purpose-bred rodents and birds, these species are equally regulated under Public Health Service policies that govern the IACUCs. The Public Health Service policy oversees the Food and Drug Administration (FDA) and the Centers for Disease Control and Prevention (CDC). The CDC conducts infectious disease research on nonhuman primates, rabbits, mice, and other animals, while FDA requirements cover use of animals in pharmaceutical research. Animal Welfare Act (AWA) regulations are enforced by the USDA, whereas Public Health Service regulations are enforced by OLAW and in many cases by AAALAC.

According to a 2014 report by the U.S. Department of Agriculture Office of the Inspector General (OIG), which looked at the oversight of animal use during a three-year period, "some Institutional Animal Care and Use Committees ... did not adequately approve, monitor, or report on experimental procedures on animals". The OIG found that "as a result, animals are not always receiving basic humane care and treatment and, in some cases, pain and distress are not minimized during and after experimental procedures". According to the report, within a three-year period, nearly half of all American laboratories with regulated species were cited for AWA violations relating to improper IACUC oversight. The USDA OIG made similar findings in a 2005 report. With only about 120 inspectors, the United States Department of Agriculture (USDA) oversees more than 12,000 facilities involved in research, exhibition, breeding, or dealing of animals. Others have criticized the composition of IACUCs, asserting that the committees are predominantly made up of animal researchers and university representatives who may be biased against animal welfare concerns.

Larry Carbone, a laboratory animal veterinarian, writes that, in his experience, IACUCs take their work very seriously regardless of the species involved, though the use of non-human primates always raises what he calls a "red flag of special concern". A study published in Science magazine in July 2001 confirmed the low reliability of IACUC reviews of animal experiments. Funded by the National Science Foundation, the three-year study found that animal-use committees that do not know the specifics of the university and personnel do not make the same approval decisions as those made by animal-use committees that do know the university and personnel. Specifically, blinded committees more often ask for more information rather than approving studies.

Scientists in India are protesting a recent guideline issued by the University Grants Commission to ban the use of live animals in universities and laboratories.

Numbers

Accurate global figures for animal testing are difficult to obtain; it has been estimated that 100 million vertebrates are experimented on around the world every year, 10–11 million of them in the EU. The Nuffield Council on Bioethics reports that global annual estimates range from 50 to 100 million animals. None of the figures include invertebrates such as shrimp and fruit flies.

The USDA/APHIS has published the 2016 animal research statistics. Overall, the number of animals (covered by the Animal Welfare Act) used in research in the US rose 6.9% from 767,622 (2015) to 820,812 (2016). This includes both public and private institutions. By comparing with EU data, where all vertebrate species are counted, Speaking of Research estimated that around 12 million vertebrates were used in research in the US in 2016. A 2015 article published in the Journal of Medical Ethics argued that the use of animals in the US has dramatically increased in recent years. The researchers found that this increase is largely the result of an increased reliance on genetically modified mice in animal studies.
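As a quick arithmetic check, the year-on-year change can be recomputed from the two USDA totals quoted above. This is a minimal sketch; the function name `percent_change` and the variable names are illustrative choices, not anything taken from the USDA statistics.

```python
def percent_change(old: int, new: int) -> float:
    """Return the relative change from `old` to `new`, in percent."""
    return (new - old) / old * 100


# Totals of AWA-covered animals as quoted in the text (hypothetical variable names).
usda_2015 = 767_622
usda_2016 = 820_812

change = percent_change(usda_2015, usda_2016)
print(f"{change:.1f}%")  # prints 6.9%
```

The result agrees with the 6.9% rise reported for AWA-covered species; it says nothing about purpose-bred mice and rats, which these totals exclude.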

In 1995, researchers at Tufts University Center for Animals and Public Policy estimated that 14–21 million animals were used in American laboratories in 1992, a reduction from a high of 50 million used in 1970. In 1986, the U.S. Congress Office of Technology Assessment reported that estimates of the animals used in the U.S. range from 10 million to upwards of 100 million each year, and that their own best estimate was at least 17 million to 22 million. In 2016, the Department of Agriculture listed 60,979 dogs, 18,898 cats, 71,188 non-human primates, 183,237 guinea pigs, 102,633 hamsters, 139,391 rabbits, 83,059 farm animals, and 161,467 other mammals, a total of 820,812, a figure that includes all mammals except purpose-bred mice and rats. The use of dogs and cats in research in the U.S. decreased from 1973 to 2016 from 195,157 to 60,979, and from 66,165 to 18,898, respectively.

In the UK, Home Office figures show that 3.79 million procedures were carried out in 2017. Of these, 2,960 procedures used non-human primates, down over 50% since 1988. A "procedure" refers here to an experiment that might last minutes, several months, or years. Most animals are used in only one procedure: animals are frequently euthanized after the experiment, and in some procedures death is itself the endpoint. The procedures conducted on animals in the UK in 2017 were categorised as follows:

- 43% (1.61 million) were assessed as sub-threshold

- 4% (0.14 million) were assessed as non-recovery

- 36% (1.35 million) were assessed as mild

- 15% (0.55 million) were assessed as moderate

- 4% (0.14 million) were assessed as severe

A 'severe' procedure would be, for instance, any test where death is the end-point or fatalities are expected, whereas a 'mild' procedure would be something like a blood test or an MRI scan.

The Three Rs

The Three Rs (3Rs) are guiding principles for more ethical use of animals in testing. These were first described by W.M.S. Russell and R.L. Burch in 1959. The 3Rs state:

- Replacement which refers to the preferred use of non-animal methods over animal methods whenever it is possible to achieve the same scientific aims. These methods include computer modeling.

- Reduction which refers to methods that enable researchers to obtain comparable levels of information from fewer animals, or to obtain more information from the same number of animals.

- Refinement which refers to methods that alleviate or minimize potential pain, suffering or distress, and enhance animal welfare for the animals used. These methods include non-invasive techniques.

The 3Rs have a broader scope than simply encouraging alternatives to animal testing: they also aim to improve animal welfare and scientific quality where the use of animals cannot be avoided. The 3Rs are now implemented in many testing establishments worldwide and have been adopted in various pieces of legislation and regulation.

Despite the widespread acceptance of the 3Rs, many countries—including Canada, Australia, Israel, South Korea, and Germany—have reported rising experimental use of animals in recent years with increased use of mice and, in some cases, fish while reporting declines in the use of cats, dogs, primates, rabbits, guinea pigs, and hamsters. Along with other countries, China has also escalated its use of GM animals, resulting in an increase in overall animal use.

Invertebrates

Although many more invertebrates than vertebrates are used in animal testing, these studies are largely unregulated by law. The most frequently used invertebrate species are Drosophila melanogaster, a fruit fly, and Caenorhabditis elegans, a nematode worm. In the case of C. elegans, the worm's body is completely transparent and the precise lineage of all the organism's cells is known, while studies in the fly D. melanogaster can use an amazing array of genetic tools. These invertebrates offer some advantages over vertebrates in animal testing, including their short life cycle and the ease with which large numbers may be housed and studied. However, the lack of an adaptive immune system and their simple organs prevent worms from being used in several aspects of medical research such as vaccine development. Similarly, the fruit fly immune system differs greatly from that of humans, and diseases in insects can be different from diseases in vertebrates; however, fruit flies and waxworms can be useful in studies to identify novel virulence factors or pharmacologically active compounds.

Several invertebrate systems are considered acceptable alternatives to vertebrates in early-stage discovery screens. Because of similarities between the innate immune system of insects and mammals, insects can replace mammals in some types of studies. Drosophila melanogaster and the Galleria mellonella waxworm have been particularly important for analysis of virulence traits of mammalian pathogens. Waxworms and other insects have also proven valuable for the identification of pharmaceutical compounds with favorable bioavailability. The decision to adopt such models generally involves accepting a lower degree of biological similarity with mammals for significant gains in experimental throughput.

Vertebrates

In the U.S., the number of rats and mice used each year is estimated at anywhere from 11 million to between 20 and 100 million. Other rodents commonly used are guinea pigs, hamsters, and gerbils. Mice are the most commonly used vertebrate species because of their size, low cost, ease of handling, and fast reproduction rate. Mice are widely considered to be the best model of inherited human disease and share 95% of their genes with humans. With the advent of genetic engineering technology, genetically modified mice can be generated to order and can provide models for a range of human diseases. Rats are also widely used for physiology, toxicology and cancer research, but genetic manipulation is much harder in rats than in mice, which limits the use of these rodents in basic science.

Over 500,000 fish and 9,000 amphibians were used in the UK in 2016. The main species used are the zebrafish, Danio rerio, which is translucent during its embryonic stage, and the African clawed frog, Xenopus laevis. Over 20,000 rabbits were used for animal testing in the UK in 2004. Albino rabbits are used in eye irritancy tests (Draize test) because rabbits have less tear flow than other animals, and the lack of eye pigment in albinos makes the effects easier to visualize. The number of rabbits used for this purpose has fallen substantially over the past two decades. In 1996, there were 3,693 procedures on rabbits for eye irritation in the UK, and in 2017 this number was just 63. Rabbits are also frequently used for the production of polyclonal antibodies.

Cats

Cats are most commonly used in neurological research. In 2016, 18,898 cats were used in the United States alone, around a third of which were used in experiments which have the potential to cause "pain and/or distress" though only 0.1% of cat experiments involved potential pain which was not relieved by anesthetics/analgesics. In the UK, just 198 procedures were carried out on cats in 2017. The number has been around 200 for most of the last decade.

Dogs

Dogs are widely used in biomedical research, testing, and education. Beagles are particularly common because they are gentle and easy to handle, and because their use allows comparisons with historical data from beagles (a Reduction technique). They are used as models for human and veterinary diseases in cardiology, endocrinology, and bone and joint studies, research that tends to be highly invasive, according to the Humane Society of the United States. The most common use of dogs is in the safety assessment of new medicines for human or veterinary use as a second species following testing in rodents, in accordance with the regulations set out in the International Conference on Harmonisation of Technical Requirements for Registration of Pharmaceuticals for Human Use. One of the most significant advances in medical science involving dogs was the work that established how insulin is produced in the body and the role of the pancreas in that process. Researchers found that the pancreas is responsible for producing insulin and that removal of the pancreas resulted in the development of diabetes in the dog. After pancreatic extract (insulin) was injected, blood glucose levels were significantly lowered. The advances made in this research have resulted in a definite improvement in the quality of life for both humans and animals.

The U.S. Department of Agriculture's Animal Welfare Report shows that 60,979 dogs were used in USDA-registered facilities in 2016. In the UK, according to the UK Home Office, there were 3,847 procedures on dogs in 2017. Of the other large EU users of dogs, Germany conducted 3,976 procedures on dogs in 2016 and France conducted 4,204 procedures in 2016. In both cases this represents under 0.2% of the total number of procedures conducted on animals in the respective countries.

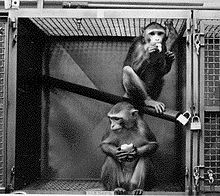

Non-human primates

Non-human primates (NHPs) are used in toxicology tests, studies of AIDS and hepatitis, studies of neurology, behavior and cognition, reproduction, genetics, and xenotransplantation. They are caught in the wild or purpose-bred. In the United States and China, most primates are domestically purpose-bred, whereas in Europe the majority are imported purpose-bred. The European Commission reported that in 2011, 6,012 monkeys were experimented on in European laboratories. According to the U.S. Department of Agriculture, there were 71,188 monkeys in U.S. laboratories in 2016. 23,465 monkeys were imported into the U.S. in 2014 including 929 who were caught in the wild. Most of the NHPs used in experiments are macaques; but marmosets, spider monkeys, and squirrel monkeys are also used, and baboons and chimpanzees are used in the US. As of 2015, there are approximately 730 chimpanzees in U.S. laboratories.

A 2003 survey found that 89% of singly-housed primates exhibited self-injurious or abnormal stereotypical behaviors, including pacing, rocking, hair pulling, and biting.

The first transgenic primate was produced in 2001, with the development of a method that could introduce new genes into a rhesus macaque. This transgenic technology is now being applied in the search for a treatment for the genetic disorder Huntington's disease. Notable studies on non-human primates have been part of the development of the polio vaccine and of deep brain stimulation, and their current heaviest non-toxicological use occurs in the monkey AIDS model, SIV. In 2008, a proposal to ban all primate experiments in the EU sparked a vigorous debate.

Sources

Animals used by laboratories are largely supplied by specialist dealers. Sources differ for vertebrate and invertebrate animals. Most laboratories breed and raise flies and worms themselves, using strains and mutants supplied from a few main stock centers. For vertebrates, sources include breeders and dealers like Covance and Charles River Laboratories who supply purpose-bred and wild-caught animals; businesses that trade in wild animals such as Nafovanny; and dealers who supply animals sourced from pounds, auctions, and newspaper ads. Animal shelters also supply the laboratories directly. Large centers also exist to distribute strains of genetically modified animals; the International Knockout Mouse Consortium, for example, aims to provide knockout mice for every gene in the mouse genome.

In the U.S., Class A breeders are licensed by the U.S. Department of Agriculture (USDA) to sell animals for research purposes, while Class B dealers are licensed to buy animals from "random sources" such as auctions, pound seizure, and newspaper ads. Some Class B dealers have been accused of kidnapping pets and illegally trapping strays, a practice known as bunching. It was in part out of public concern over the sale of pets to research facilities that the 1966 Laboratory Animal Welfare Act was ushered in—the Senate Committee on Commerce reported in 1966 that stolen pets had been retrieved from Veterans Administration facilities, the Mayo Institute, the University of Pennsylvania, Stanford University, and Harvard and Yale Medical Schools. The USDA recovered at least a dozen stolen pets during a raid on a Class B dealer in Arkansas in 2003.

Four states in the U.S.—Minnesota, Utah, Oklahoma, and Iowa—require their shelters to provide animals to research facilities. Fourteen states explicitly prohibit the practice, while the remainder either allow it or have no relevant legislation.

In the European Union, animal sources are governed by Council Directive 86/609/EEC, which requires lab animals to be specially bred, unless the animal has been lawfully imported and is not a wild animal or a stray. The latter requirement may also be exempted by special arrangement. In 2010 the Directive was revised with EU Directive 2010/63/EU. In the UK, most animals used in experiments are bred for the purpose under the 1988 Animal Protection Act, but wild-caught primates may be used if exceptional and specific justification can be established. The United States also allows the use of wild-caught primates; between 1995 and 1999, 1,580 wild baboons were imported into the U.S. Over half the primates imported between 1995 and 2000 were handled by Charles River Laboratories, or by Covance, which is the single largest importer of primates into the U.S.

Pain and suffering

[Map legend: national recognition of animal sentience; partial recognition of animal sentience; national recognition of animal suffering; partial recognition of animal suffering (only includes domestic animals); no official recognition of animal sentience or suffering; unknown]

The extent to which animal testing causes pain and suffering, and the capacity of animals to experience and comprehend them, are the subject of much debate.

According to the USDA, in 2016, 501,560 animals (61%), not including rats, mice, birds, or invertebrates, were used in procedures that did not include more than momentary pain or distress. A further 247,882 animals (30%) were used in procedures in which pain or distress was relieved by anesthesia, while 71,370 (9%) were used in studies that would cause pain or distress that would not be relieved.
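The shares in each pain category can be recomputed from the three counts quoted above. The following is a minimal sketch; the dictionary labels and variable names are illustrative, and whole-number percentages quoted in the text may differ by a point from these values because of rounding.

```python
# USDA 2016 counts by pain category, as quoted in the text
# (labels are paraphrased and hypothetical, not official category names).
counts = {
    "no more than momentary pain or distress": 501_560,
    "pain or distress relieved by anesthesia": 247_882,
    "pain or distress not relieved": 71_370,
}

# The three categories should add up to the AWA-covered total for 2016.
total = sum(counts.values())

# Share of each category as a percentage of the total.
shares = {label: 100 * n / total for label, n in counts.items()}

print(f"total: {total:,}")
for label, share in shares.items():
    print(f"{label}: {share:.1f}%")
```

The three counts sum to 820,812, the same total reported for AWA-covered animals in 2016, and the shares come out at roughly 61.1%, 30.2%, and 8.7%.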

Since 2014, every research procedure in the UK has been retrospectively assessed for severity. The five categories are "sub-threshold", "mild", "moderate", "severe" and "non-recovery", the latter being procedures in which an animal is anesthetized and subsequently killed without recovering consciousness. In 2017, 43% (1.61 million) were assessed as sub-threshold, 4% (0.14 million) were assessed as non-recovery, 36% (1.35 million) were assessed as mild, 15% (0.55 million) were assessed as moderate and 4% (0.14 million) were assessed as severe.

The idea that animals might not feel pain as human beings feel it traces back to the 17th-century French philosopher René Descartes, who argued that animals do not experience pain and suffering because they lack consciousness. Bernard Rollin of Colorado State University, the principal author of two U.S. federal laws regulating pain relief for animals, writes that researchers remained unsure into the 1980s as to whether animals experience pain, and that veterinarians trained in the U.S. before 1989 were simply taught to ignore animal pain. In his interactions with scientists and other veterinarians, he was regularly asked to "prove" that animals are conscious, and to provide "scientifically acceptable" grounds for claiming that they feel pain. Carbone writes that the view that animals feel pain differently is now a minority view. Academic reviews of the topic are more equivocal, noting that although the argument that animals have at least simple conscious thoughts and feelings has strong support, some critics continue to question how reliably animal mental states can be determined. Some canine experts state that, while intelligence differs from animal to animal, dogs have the intelligence of a two- to two-and-a-half-year-old child. This supports the idea that dogs, at the very least, have some form of consciousness. The ability of invertebrates to experience pain and suffering is less clear; however, legislation in several countries (e.g. the U.K., New Zealand, Norway) protects some invertebrate species if they are being used in animal testing.

In the U.S., the defining text on animal welfare regulation in animal testing is the Guide for the Care and Use of Laboratory Animals. This defines the parameters that govern animal testing in the U.S. It states "The ability to experience and respond to pain is widespread in the animal kingdom...Pain is a stressor and, if not relieved, can lead to unacceptable levels of stress and distress in animals." The Guide states that the ability to recognize the symptoms of pain in different species is vital in efficiently applying pain relief and that it is essential for the people caring for and using animals to be entirely familiar with these symptoms. On the subject of analgesics used to relieve pain, the Guide states "The selection of the most appropriate analgesic or anesthetic should reflect professional judgment as to which best meets clinical and humane requirements without compromising the scientific aspects of the research protocol". Accordingly, all issues of animal pain and distress, and their potential treatment with analgesia and anesthesia, are required regulatory issues in receiving animal protocol approval.

In 2019, Katrien Devolder and Matthias Eggel proposed gene editing research animals to remove the ability to feel pain. This would be an intermediate step towards eventually stopping all experimentation on animals and adopting alternatives. However, it would not stop research animals from experiencing psychological harm.

Euthanasia

Regulations require that scientists use as few animals as possible, especially for terminal experiments. However, while policy makers consider suffering to be the central issue and see animal euthanasia as a way to reduce suffering, others, such as the RSPCA, argue that the lives of laboratory animals have intrinsic value. Regulations focus on whether particular methods cause pain and suffering, not whether their death is undesirable in itself. The animals are euthanized at the end of studies for sample collection or post-mortem examination; during studies if their pain or suffering falls into certain categories regarded as unacceptable, such as depression, infection that is unresponsive to treatment, or the failure of large animals to eat for five days; or when they are unsuitable for breeding or unwanted for some other reason.

Methods of euthanizing laboratory animals are chosen to induce rapid unconsciousness and death without pain or distress. The preferred methods are those published by councils of veterinarians. The animal can be made to inhale a gas, such as carbon monoxide or carbon dioxide, by being placed in a chamber, or by use of a face mask, with or without prior sedation or anesthesia. Sedatives or anesthetics such as barbiturates can be given intravenously, or inhalant anesthetics may be used. Amphibians and fish may be immersed in water containing an anesthetic such as tricaine. Physical methods are also used, with or without sedation or anesthesia depending on the method. Recommended methods include decapitation (beheading) for small rodents or rabbits. Cervical dislocation (breaking the neck or spine) may be used for birds, mice, and immature rats and rabbits. High-intensity microwave irradiation of the brain can preserve brain tissue and induce death in less than 1 second, but this is currently only used on rodents. Captive bolts may be used, typically on dogs, ruminants, horses, pigs and rabbits; they cause death by concussion of the brain. Gunshot may be used, but only in cases where a penetrating captive bolt may not be used. Some physical methods are only acceptable after the animal is unconscious. Electrocution may be used for cattle, sheep, swine, foxes, and mink after the animals are unconscious, often by a prior electrical stun. Pithing (inserting a tool into the base of the brain) is usable on animals already unconscious. Slow or rapid freezing, or inducing air embolism, is acceptable only with prior anesthesia to induce unconsciousness.

Research classification

Pure research

Basic or pure research investigates how organisms behave, develop, and function. Those opposed to animal testing object that pure research may have little or no practical purpose, but researchers argue that it forms the necessary basis for the development of applied research, rendering the distinction between pure and applied research—research that has a specific practical aim—unclear. Pure research uses larger numbers and a greater variety of animals than applied research. Fruit flies, nematode worms, mice and rats together account for the vast majority, though small numbers of other species are used, ranging from sea slugs through to armadillos. Examples of the types of animals and experiments used in basic research include:

- Studies on embryogenesis and developmental biology. Mutants are created by adding transposons into the animals' genomes, or specific genes are deleted by gene targeting. By studying the changes in development that these mutations produce, scientists aim to understand both how organisms normally develop, and what can go wrong in this process. These studies are particularly powerful since the basic controls of development, such as the homeobox genes, have similar functions in organisms as diverse as fruit flies and humans.

- Experiments into behavior, to understand how organisms detect and interact with each other and their environment, in which fruit flies, worms, mice, and rats are all widely used. Studies of brain function, such as memory and social behavior, often use rats and birds. For some species, behavioral research is combined with enrichment strategies for animals in captivity because it allows them to engage in a wider range of activities.

- Breeding experiments to study evolution and genetics. Laboratory mice, flies, fish, and worms are inbred through many generations to create strains with defined characteristics. These provide animals of a known genetic background, an important tool for genetic analyses. Larger mammals are rarely bred specifically for such studies due to their slow rate of reproduction, though some scientists take advantage of inbred domesticated animals, such as dog or cattle breeds, for comparative purposes. Scientists studying how animals evolve use many animal species to see how variations in where and how an organism lives (its niche) produce adaptations in its physiology and morphology. As an example, sticklebacks are now being used to study how many and which types of mutations are selected to produce adaptations in animals' morphology during the evolution of new species.

Applied research

Applied research aims to solve specific and practical problems. These may involve the use of animal models of diseases or conditions, which are often discovered or generated by pure research programmes. In turn, such applied studies may be an early stage in the drug discovery process. Examples include:

- Genetic modification of animals to study disease. Transgenic animals have specific genes inserted, modified or removed to mimic specific conditions, including single-gene disorders such as Huntington's disease. Other models mimic complex, multifactorial diseases with genetic components, such as diabetes, and some transgenic mice carry the same mutations that occur during the development of cancer. These models allow investigations into how and why the disease develops, as well as providing ways to develop and test new treatments. The vast majority of these transgenic models of human disease are lines of mice, the mammalian species in which genetic modification is most efficient. Smaller numbers of other animals are also used, including rats, pigs, sheep, fish, birds, and amphibians.

- Studies on models of naturally occurring diseases and conditions. Certain domestic and wild animals have a natural propensity or predisposition for certain conditions that are also found in humans. Cats are used as a model to develop immunodeficiency virus vaccines and to study leukemia because of their natural predisposition to FIV and feline leukemia virus. Certain breeds of dog suffer from narcolepsy, making them the major model used to study the human condition. Armadillos and humans are among only a few animal species that naturally suffer from leprosy; as the bacteria responsible for this disease cannot yet be grown in culture, armadillos are the primary source of bacilli used in leprosy vaccines.

- Studies on induced animal models of human diseases. Here, an animal is treated so that it develops pathology and symptoms that resemble a human disease. Examples include restricting blood flow to the brain to induce stroke, or giving neurotoxins that cause damage similar to that seen in Parkinson's disease. Much animal research into potential treatments for humans is wasted because it is poorly conducted and not evaluated through systematic reviews. For example, although such models are now widely used to study Parkinson's disease, the British anti-vivisection interest group BUAV argues that these models only superficially resemble the disease symptoms, without the same time course or cellular pathology. In contrast, scientists assessing the usefulness of animal models of Parkinson's disease, as well as the medical research charity The Parkinson's Appeal, state that these models were invaluable and that they led to improved surgical treatments such as pallidotomy, new drug treatments such as levodopa, and later deep brain stimulation.

- Animal testing has also included the use of placebo testing. In these cases animals are treated with a substance that produces no pharmacological effect, but is administered in order to determine any biological alterations due to the experience of a substance being administered, and the results are compared with those obtained with an active compound.

Xenotransplantation

Xenotransplantation research involves transplanting tissues or organs from one species to another, as a way to overcome the shortage of human organs for use in organ transplants. Current research involves using primates as the recipients of organs from pigs that have been genetically modified to reduce the primates' immune response against the pig tissue. Although transplant rejection remains a problem, recent clinical trials that involved implanting pig insulin-secreting cells into diabetics did reduce these people's need for insulin.

Documents released to the news media by the animal rights organization Uncaged Campaigns showed that, between 1994 and 2000, wild baboons suffered serious and sometimes fatal injuries in experiments that involved grafting pig tissues. The baboons had been imported to the UK from Africa by Imutran Ltd, a subsidiary of Novartis Pharma AG, in conjunction with Cambridge University and Huntingdon Life Sciences. A scandal occurred when it was revealed that the company had communicated with the British government in an attempt to avoid regulation.

Toxicology testing

Toxicology testing, also known as safety testing, is conducted by pharmaceutical companies testing drugs, or by contract animal testing facilities, such as Huntingdon Life Sciences, on behalf of a wide variety of customers. According to 2005 EU figures, around one million animals are used every year in Europe in toxicology tests, which account for about 10% of all procedures. According to Nature, 5,000 animals are used for each chemical being tested, with 12,000 needed to test pesticides. The tests are conducted without anesthesia, because interactions between drugs can affect how animals detoxify chemicals, and may interfere with the results.

Toxicology tests are used to examine finished products such as pesticides, medications, food additives, packing materials, and air fresheners, or their chemical ingredients. Most tests involve testing ingredients rather than finished products, but according to BUAV, manufacturers believe these tests overestimate the toxic effects of substances; they therefore repeat the tests using their finished products to obtain a less toxic label.

The substances are applied to the skin or dripped into the eyes; injected intravenously, intramuscularly, or subcutaneously; inhaled either by placing a mask over the animals and restraining them, or by placing them in an inhalation chamber; or administered orally, through a tube into the stomach, or simply in the animal's food. Doses may be given once, repeated regularly for many months, or for the lifespan of the animal.

There are several different types of acute toxicity tests. The LD50 ("Lethal Dose 50%") test is used to evaluate the toxicity of a substance by determining the dose required to kill 50% of the test animal population. This test was removed from OECD international guidelines in 2002, replaced by methods such as the fixed dose procedure, which use fewer animals and cause less suffering. Abbott writes that, as of 2005, "the LD50 acute toxicity test ... still accounts for one-third of all animal [toxicity] tests worldwide".
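The arithmetic behind an LD50 figure can be illustrated with a minimal sketch. It assumes mortality counts from a few dose groups and estimates the dose at which 50% mortality would be expected by interpolating linearly against the logarithm of dose. The function name, the interpolation method and the numbers are hypothetical and chosen only for illustration; regulatory guidelines prescribe their own statistical procedures, which this sketch does not reproduce.

```python
import math


def estimate_ld50(doses_mg_per_kg, deaths, group_size):
    """Estimate the LD50 by interpolating mortality against log10(dose).

    Hypothetical helper for illustration only; not a guideline method.
    """
    previous = None  # (dose, mortality fraction) of the previous dose group
    for dose, dead in sorted(zip(doses_mg_per_kg, deaths)):
        fraction = dead / group_size
        if fraction == 0.5:
            # A tested dose killed exactly half of its group.
            return float(dose)
        if previous is not None and previous[1] < 0.5 < fraction:
            low_dose, low_fraction = previous
            # Position of 50% mortality between the two bracketing groups.
            t = (0.5 - low_fraction) / (fraction - low_fraction)
            log_ld50 = math.log10(low_dose) + t * (
                math.log10(dose) - math.log10(low_dose)
            )
            return 10 ** log_ld50
        previous = (dose, fraction)
    raise ValueError("50% mortality is not bracketed by the tested doses")


# Made-up data: three dose groups of 10 animals each.
ld50 = estimate_ld50([10, 100, 1000], [0, 2, 8], group_size=10)
print(f"Estimated LD50: {ld50:.1f} mg/kg")
```

In this made-up example, mortality rises from 20% at 100 mg/kg to 80% at 1000 mg/kg, so the estimate falls midway between the two doses on a logarithmic scale, at roughly 316 mg/kg.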

Irritancy can be measured using the Draize test, where a test substance is applied to an animal's eyes or skin, usually an albino rabbit. For Draize eye testing, the test involves observing the effects of the substance at intervals and grading any damage or irritation, but the test should be halted and the animal killed if it shows "continuing signs of severe pain or distress". The Humane Society of the United States writes that the procedure can cause redness, ulceration, hemorrhaging, cloudiness, or even blindness. This test has also been criticized by scientists for being cruel and inaccurate, subjective, over-sensitive, and failing to reflect human exposures in the real world. Although no accepted in vitro alternatives exist, a modified form of the Draize test called the low volume eye test may reduce suffering and provide more realistic results; it was adopted as the new standard in September 2009. However, the Draize test will still be used for substances that are not severe irritants.

The most stringent tests are reserved for drugs and foodstuffs. For these, a number of tests are performed, lasting less than a month (acute), one to three months (subchronic), and more than three months (chronic) to test general toxicity (damage to organs), eye and skin irritancy, mutagenicity, carcinogenicity, teratogenicity, and reproductive problems. The cost of the full complement of tests is several million dollars per substance and it may take three or four years to complete.

These toxicity tests provide, in the words of a 2006 United States National Academy of Sciences report, "critical information for assessing hazard and risk potential". Animal tests may overestimate risk, with false positive results being a particular problem, but false positives appear not to be prohibitively common. Variability in results arises from using the effects of high doses of chemicals in small numbers of laboratory animals to try to predict the effects of low doses in large numbers of humans. Although relationships do exist, opinion is divided on how to use data on one species to predict the exact level of risk in another.

Scientists face growing pressure to move away from using traditional animal toxicity tests to determine whether manufactured chemicals are safe. Among the various approaches to toxicity evaluation, in vitro cell-based sensing methods that use fluorescence have attracted increasing interest.

Cosmetics testing

Cosmetics testing on animals is particularly controversial. Such tests, which are still conducted in the U.S., involve general toxicity, eye and skin irritancy, phototoxicity (toxicity triggered by ultraviolet light) and mutagenicity.

Cosmetics testing on animals is banned in India, the European Union, Israel and Norway, while similar bans are under consideration in the U.S. and Brazil. In 2002, after 13 years of discussion, the European Union agreed to phase in a near-total ban on the sale of animal-tested cosmetics by 2009, and to ban all cosmetics-related animal testing. France, which is home to the world's largest cosmetics company, L'Oréal, has protested the proposed ban by lodging a case at the European Court of Justice in Luxembourg, asking that the ban be quashed. The ban is also opposed by the European Federation for Cosmetics Ingredients, which represents 70 companies in Switzerland, Belgium, France, Germany, and Italy. In October 2014, India passed stricter laws that also ban the importation of any cosmetic products that are tested on animals.

Drug testing

Before the early 20th century, laws regulating drugs were lax. Currently, all new pharmaceuticals undergo rigorous animal testing before being licensed for human use. Tests on pharmaceutical products involve:

- metabolic tests, investigating pharmacokinetics—how drugs are absorbed, metabolized and excreted by the body when introduced orally, intravenously, intraperitoneally, intramuscularly, or transdermally.

- toxicology tests, which gauge acute, sub-acute, and chronic toxicity. Acute toxicity is studied by using a rising dose until signs of toxicity become apparent. Current European legislation demands that "acute toxicity tests must be carried out in two or more mammalian species" covering "at least two different routes of administration". Sub-acute toxicity is where the drug is given to the animals for four to six weeks in doses below the level at which it causes rapid poisoning, in order to discover if any toxic drug metabolites build up over time. Testing for chronic toxicity can last up to two years and, in the European Union, is required to involve two species of mammals, one of which must be non-rodent.

- efficacy studies, which test whether experimental drugs work. The appropriate illness is first induced in animals, and the drug is then administered in a double-blind controlled trial, which allows researchers to determine the effect of the drug and the dose-response curve.

- specific tests on reproductive function, embryonic toxicity, or carcinogenic potential, all of which can be required by law, depending on the result of other studies and the type of drug being tested.

Education

It is estimated that 20 million animals are used annually for educational purposes in the United States, including classroom observational exercises, dissections and live-animal surgeries. Frogs, fetal pigs, perch, cats, earthworms, grasshoppers, crayfish and starfish are commonly used in classroom dissections. Alternatives to the use of animals in classroom dissections are widely used, with many U.S. states and school districts mandating that students be offered the choice not to dissect. Citing the wide availability of alternatives and the decimation of local frog species, India banned dissections in 2014.

The Sonoran Arthropod Institute hosts an annual Invertebrates in Education and Conservation Conference to discuss the use of invertebrates in education. There also are efforts in many countries to find alternatives to using animals in education. The NORINA database, maintained by Norecopa, lists products that may be used as alternatives or supplements to animal use in education, and in the training of personnel who work with animals. These include alternatives to dissection in schools. InterNICHE has a similar database and a loans system.

In November 2013, the U.S.-based company Backyard Brains released for sale to the public what they call the "Roboroach", an "electronic backpack" that can be attached to cockroaches. The operator is required to amputate a cockroach's antennae, use sandpaper to wear down the shell, insert a wire into the thorax, and then glue the electrodes and circuit board onto the insect's back. A mobile phone app can then be used to control it via Bluetooth. It has been suggested that the use of such a device may be a teaching aid that can promote interest in science. The makers of the "Roboroach" have been funded by the National Institute of Mental Health and state that the device is intended to encourage children to become interested in neuroscience.

Defense

Animals are used by the military to develop weapons, vaccines, battlefield surgical techniques, and defensive clothing. For example, in 2008 the United States Defense Advanced Research Projects Agency used live pigs to study the effects of improvised explosive device explosions on internal organs, especially the brain.

In the US military, goats are commonly used to train combat medics. (Goats have become the main animal species used for this purpose after the Pentagon phased out using dogs for medical training in the 1980s.) While modern mannequins used in medical training are quite efficient in simulating the behavior of a human body, some trainees feel that "the goat exercise provide[s] a sense of urgency that only real life trauma can provide". Nevertheless, in 2014, the U.S. Coast Guard announced that it would reduce the number of animals it uses in its training exercises by half after PETA released video showing Guard members cutting off the limbs of unconscious goats with tree trimmers and inflicting other injuries with a shotgun, pistol, ax and a scalpel. That same year, citing the availability of human simulators and other alternatives, the Department of Defense announced it would begin reducing the number of animals it uses in various training programs. In 2013, several Navy medical centers stopped using ferrets in intubation exercises after complaints from PETA.

Besides the United States, six out of 28 NATO countries, including Poland and Denmark, use live animals for combat medic training.

Ethics

Viewpoints

The moral and ethical questions raised by performing experiments on animals are subject to debate, and viewpoints have shifted significantly over the 20th century. There remain disagreements about which procedures are useful for which purposes, as well as disagreements over which ethical principles apply to which species.

A 2015 Gallup poll found that 67% of Americans were "very concerned" or "somewhat concerned" about animals used in research. A Pew poll taken the same year found 50% of American adults opposed the use of animals in research.

Still, a wide range of viewpoints exists. The view that animals have moral rights (animal rights) is a philosophical position proposed by Tom Regan, among others, who argues that animals are beings with beliefs and desires, and as such are the "subjects of a life" with moral value and therefore moral rights. Regan still sees ethical differences between killing human and non-human animals, and argues that to save the former it is permissible to kill the latter. Likewise, a "moral dilemma" view suggests that avoiding potential benefit to humans is unacceptable on similar grounds, and holds the issue to be a dilemma in balancing such harm to humans against the harm done to animals in research. In contrast, an abolitionist view in animal rights holds that there is no moral justification for any harmful research on animals that is not to the benefit of the individual animal. Bernard Rollin argues that benefits to human beings cannot outweigh animal suffering, and that human beings have no moral right to use an animal in ways that do not benefit that individual. Donald Watson has stated that vivisection and animal experimentation "is probably the cruelest of all Man's attack on the rest of Creation." Another prominent position is that of philosopher Peter Singer, who argues that there are no grounds to include a being's species in considerations of whether their suffering is important in utilitarian moral considerations. Malcolm Macleod and collaborators argue that most controlled animal studies do not employ randomization, allocation concealment, and blinding outcome assessment, and that failure to employ these features exaggerates the apparent benefit of drugs tested in animals, leading to a failure to translate much animal research for human benefit.

Governments such as those of the Netherlands and New Zealand have responded to the public's concerns by outlawing invasive experiments on certain classes of non-human primates, particularly the great apes. In 2015, captive chimpanzees in the U.S. were listed under the Endangered Species Act, adding new roadblocks for those wishing to experiment on them. Similarly, citing ethical considerations and the availability of alternative research methods, the U.S. NIH announced in 2013 that it would dramatically reduce and eventually phase out experiments on chimpanzees.

The British government has required that the cost to animals in an experiment be weighed against the gain in knowledge. Some medical schools and agencies in China, Japan, and South Korea have built cenotaphs for killed animals. In Japan there are also annual memorial services (Ireisai 慰霊祭) for animals sacrificed at medical schools.

Various specific cases of animal testing have drawn attention, including both instances of beneficial scientific research, and instances of alleged ethical violations by those performing the tests. The fundamental properties of muscle physiology were determined with work done using frog muscles (including the force generating mechanism of all muscle, the length-tension relationship, and the force-velocity curve), and frogs are still the preferred model organism due to the long survival of muscles in vitro and the possibility of isolating intact single-fiber preparations (not possible in other organisms). Modern physical therapy and the understanding and treatment of muscular disorders are based on this work and subsequent work in mice (often engineered to express disease states such as muscular dystrophy). In February 1997 a team at the Roslin Institute in Scotland announced the birth of Dolly the sheep, the first mammal to be cloned from an adult somatic cell.

Concerns have been raised over the mistreatment of primates undergoing testing. In 1985 the case of Britches, a macaque monkey at the University of California, Riverside, gained public attention. He had his eyelids sewn shut and a sonar sensor on his head as part of an experiment to test sensory substitution devices for blind people. The laboratory was raided by the Animal Liberation Front in 1985, and Britches and 466 other animals were removed. The National Institutes of Health conducted an eight-month investigation and concluded, however, that no corrective action was necessary. During the 2000s other cases have made headlines, including experiments at the University of Cambridge and Columbia University in 2002. In 2004 and 2005, People for the Ethical Treatment of Animals (PETA) shot undercover footage of staff at the Virginia lab of Covance, a contract research organization that provides animal testing services. Following release of the footage, the U.S. Department of Agriculture fined Covance $8,720 for 16 citations, three of which involved lab monkeys; the other citations involved administrative issues and equipment.

Threats to researchers

Threats of violence to animal researchers are not uncommon.

In 2006, a primate researcher at the University of California, Los Angeles (UCLA) shut down the experiments in his lab after threats from animal rights activists. The researcher had received a grant to use 30 macaque monkeys for vision experiments; each monkey was anesthetized for a single physiological experiment lasting up to 120 hours, and then euthanized. The researcher's name, phone number, and address were posted on the website of the Primate Freedom Project. Demonstrations were held in front of his home. A Molotov cocktail was placed on the porch of what was believed to be the home of another UCLA primate researcher; instead, it was accidentally left on the porch of an elderly woman unrelated to the university. The Animal Liberation Front claimed responsibility for the attack. As a result of the campaign, the researcher sent an email to the Primate Freedom Project stating "you win", and "please don't bother my family anymore". In another incident at UCLA in June 2007, the Animal Liberation Brigade placed a bomb under the car of a UCLA children's ophthalmologist who experiments on cats and rhesus monkeys; the bomb had a faulty fuse and did not detonate.

In 1997, PETA filmed staff from Huntingdon Life Sciences, showing dogs being mistreated. The employees responsible were dismissed, with two given community service orders and ordered to pay £250 costs, the first lab technicians to have been prosecuted for animal cruelty in the UK. The Stop Huntingdon Animal Cruelty campaign used tactics ranging from non-violent protest to the alleged firebombing of houses owned by executives associated with HLS's clients and investors. The Southern Poverty Law Center, which monitors US domestic extremism, has described SHAC's modus operandi as "frankly terroristic tactics similar to those of anti-abortion extremists," and in 2005 an official with the FBI's counter-terrorism division referred to SHAC's activities in the United States as domestic terrorist threats. Thirteen members of SHAC were jailed for between 15 months and eleven years on charges of conspiracy to blackmail or harm HLS and its suppliers.

These attacks—as well as similar incidents that caused the Southern Poverty Law Center to declare in 2002 that the animal rights movement had "clearly taken a turn toward the more extreme"—prompted the US government to pass the Animal Enterprise Terrorism Act and the UK government to add the offense of "Intimidation of persons connected with animal research organisation" to the Serious Organised Crime and Police Act 2005. Such legislation and the arrest and imprisonment of activists may have decreased the incidence of attacks.

Scientific criticism

Systematic reviews have pointed out that animal testing often fails to accurately mirror outcomes in humans. For instance, a 2013 review noted that some 100 vaccines have been shown to prevent HIV in animals, yet none of them have worked on humans. Effects seen in animals may not be replicated in humans, and vice versa. Many corticosteroids cause birth defects in animals, but not in humans. Conversely, thalidomide causes serious birth defects in humans, but not in some animal species, such as mice. A 2004 paper concluded that much animal research is wasted because systematic reviews are not used and because of poor methodology. A 2006 review found multiple studies where there were promising results for new drugs in animals, but human clinical studies did not show the same results. The researchers suggested that this might be due to researcher bias, or simply because animal models do not accurately reflect human biology. Lack of meta-reviews may be partially to blame. Poor methodology is an issue in many studies. A 2009 review noted that many animal experiments were not blinded; blinding, a key element of many scientific studies, reduces bias by withholding from researchers which part of the study they are working on.

Alternatives to animal testing

Most scientists and governments state that animal testing should cause as little suffering to animals as possible, and that animal tests should only be performed where necessary. The "Three Rs" (replacement, reduction and refinement) are guiding principles for the use of animals in research in most countries. Whilst replacement of animals, i.e. alternatives to animal testing, is one of the principles, their scope is much broader. Although such principles have been welcomed as a step forwards by some animal welfare groups, they have also been criticized as both outdated by current research, and of little practical effect in improving animal welfare.

The scientists and engineers at Harvard's Wyss Institute have created "organs-on-a-chip", including the "lung-on-a-chip" and "gut-on-a-chip". Researchers at cellasys in Germany developed a "skin-on-a-chip". These tiny devices contain human cells in a 3-dimensional system that mimics human organs. The chips can be used instead of animals in in vitro disease research, drug testing, and toxicity testing. Researchers have also begun using 3-D bioprinters to create human tissues for in vitro testing.

Another non-animal research method is in silico testing, that is, computer simulation and mathematical modeling, which seeks to investigate and ultimately predict toxicity and drug effects in humans without using animals. This is done by investigating test compounds at the molecular level using recent advances in technological capabilities, with the ultimate goal of creating treatments unique to each patient.

Microdosing is another alternative to the use of animals in experimentation. Microdosing is a process whereby volunteers are administered a small dose of a test compound, allowing researchers to investigate its pharmacological effects without harming the volunteers. Microdosing can replace the use of animals in pre-clinical drug screening and can reduce the number of animals used in safety and toxicity testing.

Additional alternative methods include positron emission tomography (PET), which allows scanning of the human brain in vivo, and comparative epidemiological studies of disease risk factors among human populations.

Simulators and computer programs have also replaced the use of animals in dissection, teaching and training exercises.

Official bodies such as the European Centre for the Validation of Alternative Test Methods of the European Commission, the Interagency Coordinating Committee for the Validation of Alternative Methods in the US, ZEBET in Germany, and the Japanese Center for the Validation of Alternative Methods (among others) also promote and disseminate the 3Rs. The work of these bodies is driven mainly by regulatory requirements; for example, they support the cosmetics testing ban in the EU by validating alternative methods.

The European Partnership for Alternative Approaches to Animal Testing serves as a liaison between the European Commission and industry. The European Consensus Platform for Alternatives coordinates efforts amongst EU member states.

Academic centers also investigate alternatives, including the Center for Alternatives to Animal Testing at the Johns Hopkins University and the NC3Rs in the UK.