In economics, a network effect (also called network externality or demand-side economies of scale) is the phenomenon by which the value or utility a user derives from a good or service depends on the number of users of compatible products. Network effects are typically positive, resulting in a given user deriving more value from a product as more users join the same network. The adoption of a product by an additional user can be broken into two effects: an increase in the value to all other users (the "total effect") and an increase in the motivation of other non-users to adopt the product (the "marginal effect").

Network effects can be direct or indirect. Direct network effects arise when a given user's utility increases with the number of other users of the same product or technology, meaning that adoption of a product by different users is complementary. This effect is separate from effects related to price, such as a benefit to existing users resulting from price decreases as more users join. Direct network effects can be seen with social networking services such as Twitter, Facebook and LinkedIn; telecommunications devices like the telephone; and instant messaging services such as MSN, AIM or QQ. Indirect (or cross-group) network effects arise when there are "at least two different customer groups that are interdependent, and the utility of at least one group grows as the other group(s) grow". For example, hardware may become more valuable to consumers with the growth of compatible software, and platforms such as Airbnb and Uber become more valuable to guests and riders as more hosts and drivers join.

Network effects are commonly mistaken for economies of scale, which describe decreasing average production costs in relation to the total volume of units produced. Economies of scale are a common phenomenon in traditional industries such as manufacturing, whereas network effects are most prevalent in new economy industries, particularly information and communication technologies. Network effects are the demand-side counterpart of economies of scale: they work by increasing customers' willingness to pay as the network grows, rather than by decreasing the supplier's average cost.

Upon reaching critical mass, a bandwagon effect can result. As the network continues to become more valuable with each new adopter, more people are incentivised to adopt, resulting in a positive feedback loop. Multiple equilibria and a market monopoly are two key potential outcomes in markets that exhibit network effects. Consumer expectations are key in determining which outcomes will result.

Origins

Network effects were a central theme in the arguments of Theodore Vail, the first post-patent president of Bell Telephone, in gaining a monopoly on US telephone services. In 1908, when he presented the concept in Bell's annual report, there were over 4,000 local and regional telephone exchanges, most of which were eventually merged into the Bell System.

Network effects were popularized by Robert Metcalfe in the form of Metcalfe's law. Metcalfe was one of the co-inventors of Ethernet and a co-founder of the company 3Com. In selling the product, Metcalfe argued that the number of installed Ethernet cards needed to grow above a certain critical mass for customers to reap the benefits of their network. According to Metcalfe, the rationale behind the sale of networking cards was that the cost of the network was directly proportional to the number of cards installed, but the value of the network was proportional to the square of the number of users. Algebraically, a network of $N$ users has a cost proportional to $N$ and a value proportional to $N^2$. While the actual numbers behind this proposition were never firm, the networks themselves allowed customers to share access to expensive resources like disk drives and printers, send e-mail, and eventually access the Internet.
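Metcalfe's cost-versus-value argument can be sketched numerically. The sketch below is illustrative only: it assumes a fixed, hypothetical cost per card and a fixed, hypothetical value per possible connection, and it counts connections as the number of distinct user pairs, $N(N-1)/2$, which grows on the order of $N^2$. All function names are hypothetical.

```python
def network_cost(n: int, cost_per_card: float) -> float:
    """Cost grows linearly with the number of installed cards."""
    return cost_per_card * n


def possible_connections(n: int) -> int:
    """Distinct pairs of users who could communicate: n(n - 1) / 2."""
    return n * (n - 1) // 2


def network_value(n: int, value_per_connection: float) -> float:
    """Value grows with the number of possible pairwise connections."""
    return value_per_connection * possible_connections(n)


def break_even_size(cost_per_card: float, value_per_connection: float) -> int:
    """Smallest network size at which total value reaches total cost."""
    if value_per_connection <= 0:
        raise ValueError("value_per_connection must be positive")
    n = 1
    while network_value(n, value_per_connection) < network_cost(n, cost_per_card):
        n += 1
    return n


# Hypothetical figures: 1,000 per card, 100 per possible connection.
for size in (2, 10, 21, 50):
    print(size, network_cost(size, 1000), network_value(size, 100))
print("break-even size:", break_even_size(1000, 100))
```

With these assumed figures, value catches up with cost at 21 cards; doubling the value per connection to 200 lowers the break-even size to 11, because the quadratic value term overtakes the linear cost term sooner.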

The economic theory of the network effect was advanced significantly between 1985 and 1995 by researchers Michael L. Katz, Carl Shapiro, Joseph Farrell, and Garth Saloner. Author and high-tech entrepreneur Rod Beckstrom presented a mathematical model for describing networks that are in a state of positive network effect at BlackHat and Defcon in 2009, and also presented the "inverse network effect" together with an economic model defining it. Because of the positive feedback often associated with the network effect, system dynamics can be used as a modelling method to describe the phenomenon. Word of mouth and the Bass diffusion model are also potentially applicable. The next major advance occurred between 2000 and 2003, when researchers Geoffrey G. Parker, Marshall Van Alstyne, Jean-Charles Rochet and Jean Tirole independently developed the two-sided market literature, showing how network externalities that cross distinct groups can lead to free pricing for one of those groups.
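The Bass diffusion model mentioned above splits adoption into innovation, a coefficient $p$ that does not depend on existing users, and imitation through word of mouth, a coefficient $q$ whose pull grows with the share of the market that has already adopted. The following is a minimal discrete-time sketch; the market size and the values of $p$ and $q$ are illustrative assumptions, not estimates for any real product, and the function names are hypothetical.

```python
def bass_diffusion(m: float, p: float, q: float, periods: int) -> list[float]:
    """Cumulative adopters per period under a discrete-time Bass model.

    m: market potential (eventual number of adopters)
    p: coefficient of innovation (adoption independent of the installed base)
    q: coefficient of imitation (word of mouth; pull rises with adopters)
    """
    if not (0 <= p and 0 <= q and p + q <= 1):
        raise ValueError("expect 0 <= p, 0 <= q and p + q <= 1")
    cumulative = 0.0
    history = []
    for _ in range(periods):
        # Probability that a remaining non-adopter adopts this period.
        hazard = p + q * cumulative / m
        cumulative += hazard * (m - cumulative)
        history.append(cumulative)
    return history


def new_adopters(history: list[float]) -> list[float]:
    """Per-period adoptions, recovered from the cumulative series."""
    return [b - a for a, b in zip([0.0] + history[:-1], history)]


# Illustrative parameters only: 1 million potential adopters.
curve = bass_diffusion(m=1_000_000, p=0.03, q=0.38, periods=30)
per_period = new_adopters(curve)
peak = per_period.index(max(per_period))
print("peak adoption period:", peak)
```

Because the imitation term feeds on the installed base, new adoptions first accelerate and then fall off as the market saturates, giving the S-shaped cumulative curve associated with positive feedback.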

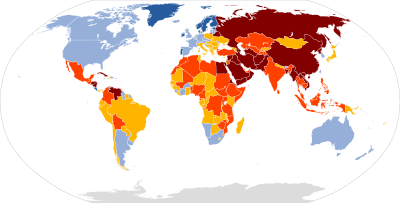

Evidence and consequences

While the diversity of sources is in decline, there is a countervailing force of continually increasing functionality with new services, products and applications, such as music streaming services (Spotify), file sharing programs (Dropbox) and messaging platforms (Messenger, WhatsApp and Snapchat). Another major finding was a dramatic increase in the "infant mortality" rate of websites, with the dominant players in each functional niche, once established, guarding their turf more staunchly than ever.

On the other hand, a growing network does not always bring a proportional increase in returns. Whether additional users bring more value depends on the commoditization of supply, the type of incremental user and the nature of substitutes. For example, social networks can hit an inflection point after which additional users do not bring more value. This could be because, as more people join the network, its users become less willing to share personal content, and the site becomes more focused on news and public content.

Economics

Network economics refers to business economics that benefit from the network effect, that is, when the value of a good or service increases as others buy the same good or service. Examples are websites such as eBay or iVillage, where the community comes together and shares thoughts, helping the website become a better business.

In sustainability, network economics refers to multiple professionals (architects, designers, or related businesses) all working together to develop sustainable products and technologies. The more companies are involved in environmentally friendly production, the easier and cheaper it becomes to produce new sustainable products. For instance, if no one produces sustainable products, it is difficult and expensive to design a sustainable house with custom materials and technology. But due to network economics, the more industries are involved in creating such products, the easier it is to design an environmentally sustainable building.

Another benefit of network economics within a field is the improvement that results from competition and networking within an industry.

Adoption and competition

Critical mass

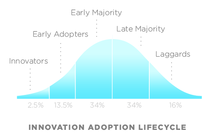

In the early phases of a network technology, incentives to adopt the new technology are low. After a certain number of people have adopted the technology, network effects become significant enough that adoption becomes a dominant strategy. This point is called critical mass. At the critical mass point, the value obtained from the good or service is greater than or equal to the price paid for the good or service.

When a product reaches critical mass, network effects will drive subsequent growth until a stable balance is reached. A key business concern is therefore how to attract users before critical mass is reached. Reaching critical mass is closely related to consumer expectations, which are affected by the price and quality of products or services, the company's reputation and the growth path of the network. One way to attract early users is to rely on extrinsic motivation, such as a payment, a fee waiver, or a request for friends to sign up. A more natural strategy is to build a system that has enough value without network effects, at least to early adopters. Then, as the number of users increases, the system becomes even more valuable and is able to attract a wider user base.
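The critical-mass condition (value to an adopter at least equal to the price) and the effect of standalone value can be sketched with a toy valuation. This assumes, purely for illustration, that an adopter's value is a standalone amount plus a fixed, hypothetical increment for each other user; real valuations need not be linear, and the function names are hypothetical.

```python
import math


def user_value(n_users: int, standalone: float, per_user: float) -> float:
    """Value to one adopter: standalone value plus a linear network term
    in the number of *other* users (n_users - 1)."""
    return standalone + per_user * max(n_users - 1, 0)


def critical_mass(price: float, standalone: float, per_user: float) -> int:
    """Smallest network size at which an adopter's value covers the price."""
    if standalone >= price:
        return 1  # worth adopting even with no other users
    if per_user <= 0:
        raise ValueError("no network size makes adoption worthwhile")
    # Solve standalone + per_user * (n - 1) >= price for the smallest integer n.
    return math.ceil((price - standalone) / per_user) + 1


# Hypothetical numbers: price 100, each other user adds 0.5 of value.
print(critical_mass(100, standalone=0, per_user=0.5))   # pure network good
print(critical_mass(100, standalone=60, per_user=0.5))  # some standalone value
```

Under these assumptions, a product with no standalone value needs 201 users before adoption pays off, while one that is worth 60 on its own needs only 81, which is the logic behind building a system that is useful to early adopters before network effects kick in.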

Limits to growth

Network growth is generally not infinite, and tends to plateau when it reaches market saturation (all customers have already joined) or diminishing returns make acquisition of the last few customers too costly.

Networks can also stop growing or collapse if they do not have enough capacity to handle growth. For example, an overloaded phone network with so many customers that it becomes congested may produce busy signals, fail to provide a dial tone, and offer poor customer support. This creates a risk that customers will defect to a rival network because of the inadequate capacity of the existing system. Beyond this point, each additional user decreases the value obtained by every other user.
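The point at which extra users start to hurt everyone can be illustrated with a toy per-user value function. The sketch assumes, hypothetically, a fixed benefit for each reachable user plus a congestion penalty that only applies above a capacity limit and grows with the square of the excess; the shape of the penalty and all parameter values are assumptions chosen for illustration.

```python
def per_user_value(n: int, benefit: float, capacity: int, congestion: float) -> float:
    """Value each user gets from a network of n users.

    Positive term: benefit per other reachable user (n - 1).
    Negative term: a congestion penalty that only applies once the number
    of users exceeds capacity, growing quadratically with the excess.
    """
    excess = max(0, n - capacity)
    return benefit * (n - 1) - congestion * excess ** 2


def value_maximizing_size(benefit: float, capacity: int, congestion: float, n_max: int) -> int:
    """Network size (1..n_max) at which each user's value is highest."""
    return max(range(1, n_max + 1),
               key=lambda n: per_user_value(n, benefit, capacity, congestion))


# Hypothetical network: capacity for 100 users, modest congestion penalty.
best = value_maximizing_size(benefit=1.0, capacity=100, congestion=0.1, n_max=200)
print("value per user peaks at", best, "users")
for n in (best, best + 10, best + 20):
    print(n, per_user_value(n, 1.0, 100, 0.1))
```

In this toy model value per user keeps rising slightly past nominal capacity and peaks at 105 users; after that, each additional user lowers the value for every existing user, the negative externality described above.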

Peer-to-peer (P2P) systems are networks designed to distribute load among their user pool. This theoretically allows P2P networks to scale indefinitely. The P2P-based telephony service Skype benefited from this effect, and its growth was limited primarily by market saturation.

Market tipping

Network effects give rise to the potential outcome of market tipping, defined as "the tendency of one system to pull away from its rivals in popularity once it has gained an initial edge". Tipping results in a market in which only one good or service dominates and competition is stifled, and can result in a monopoly. This is because network effects tend to incentivise users to coordinate their adoption of a single product. Therefore, tipping can result in a natural form of market concentration in markets that display network effects. However, the presence of network effects does not necessarily imply that a market will tip; the following additional conditions must be met:

- The utility derived by users from network effects must exceed the utility they derive from differentiation

- Users must have high costs of multihoming (i.e. adopting more than one competing network)

- Users must have high switching costs

If any of these three conditions is not satisfied, the market may fail to tip, and multiple products with significant market shares may coexist. One such example is the U.S. instant messaging market, which remained an oligopoly despite significant network effects. This can be attributed to the low multihoming and switching costs faced by users.

Market tipping does not imply permanent success in a given market. Competition can be reintroduced into the market due to shocks such as the development of new technologies. Additionally, if the price is raised above customers' willingness to pay, this may reverse market tipping.

Multiple equilibria and expectations

Network effects often result in multiple potential market equilibrium outcomes. The key determinant of which equilibrium will manifest is the expectations of the market participants, which are self-fulfilling. Because users are incentivised to coordinate their adoption, users will tend to adopt the product that they expect to draw the largest number of users. These expectations may be shaped by path dependence, such as a perceived first-mover advantage, which can result in lock-in. The most commonly cited example of path dependence is the QWERTY keyboard, which owes its ubiquity to establishing an early lead in the keyboard layout industry and to high switching costs, rather than to any inherent advantage over competitors. Expectations of adoption can also be shaped by reputation (e.g. a firm that has previously produced high-quality products may be favoured over a new firm).
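How an early lead can become self-fulfilling can be shown with a toy sequential-adoption model. The sketch is a hypothetical illustration, not a standard model from the literature cited here: it assumes each newcomer expects today's installed base to persist and picks whichever of two incompatible products offers higher intrinsic quality plus a network term, with ties going to product A.

```python
def simulate_adoption(base_a: int, base_b: int, quality_a: float, quality_b: float,
                      network_weight: float, arrivals: int) -> dict[str, int]:
    """Sequential adoption of two incompatible products, A and B.

    Each arriving user expects the current installed base to be the future
    one (a simple, hypothetical expectations rule) and picks the product with
    the higher utility: intrinsic quality + network_weight * installed base.
    Ties go to A.
    """
    base = {"A": base_a, "B": base_b}
    quality = {"A": quality_a, "B": quality_b}
    for _ in range(arrivals):
        utility = {k: quality[k] + network_weight * base[k] for k in base}
        choice = "A" if utility["A"] >= utility["B"] else "B"
        base[choice] += 1
    return base


# B is intrinsically better, but A starts with an installed-base lead.
print(simulate_adoption(20, 0, quality_a=5, quality_b=10, network_weight=1.0, arrivals=1000))
# Same products, no head start: the better product wins instead.
print(simulate_adoption(0, 0, quality_a=5, quality_b=10, network_weight=1.0, arrivals=1000))
```

Both runs end with the whole market on a single product, and which product wins depends only on the starting installed bases. In the first run the market locks in to the intrinsically worse product, mirroring the QWERTY example.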

Markets with network effects may result in inefficient equilibrium outcomes. With simultaneous adoption, users may fail to coordinate towards a single agreed-upon product, resulting in splintering among different networks, or may coordinate to lock in to a different product than the one that is best for them.

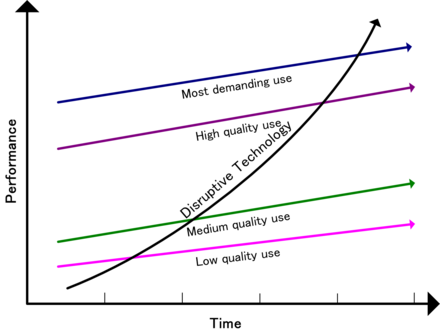

Technology lifecycle

If some existing technology or company whose benefits are largely based on network effects starts to lose market share against a challenger such as a disruptive technology or open standards based competition, the benefits of network effects will reduce for the incumbent, and increase for the challenger. In this model, a tipping point is eventually reached at which the network effects of the challenger dominate those of the former incumbent, and the incumbent is forced into an accelerating decline, whilst the challenger takes over the incumbent's former position.

Sony's Betamax and Victor Company of Japan (JVC)'s Video Home System (VHS) were both videocassette formats for video cassette recorders (VCRs), but the two technologies were not compatible: a VCR designed for one type of cassette could not play the other. VHS gradually overtook Betamax in the competition, and in the end Betamax lost its original market share and was replaced by VHS.

Negative network externalities

Negative network externalities, in the mathematical sense, are those that have a negative effect compared to normal (positive) network effects. Just as positive network externalities (network effects) cause positive feedback and exponential growth, negative network externalities create negative feedback and exponential decay. In nature, negative network externalities are the forces that pull towards equilibrium, are responsible for stability, and represent physical limitations keeping systems bounded.

Four characteristic symptoms of negative network externalities in computer networks are more login retries, longer query times, longer download times and more download attempts. Congestion occurs when the efficiency of a network decreases as more people use it, and this reduces the value to people already using it. Traffic congestion that overloads a freeway and network congestion on connections with limited bandwidth both display negative network externalities.

Braess's paradox suggests that adding paths through a network can have a negative effect on performance of the network.
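The usual textbook illustration of Braess's paradox can be checked with a few lines of arithmetic. The numbers below (4,000 drivers, two congestible roads taking load/100 minutes, two fixed roads taking 45 minutes, and a zero-minute shortcut) are the standard illustrative ones, not data. The sketch assumes every driver picks the fastest route, so a stable outcome is a user (Wardrop) equilibrium; the function names are hypothetical.

```python
def route_times(flows: dict[str, float], shortcut: bool) -> dict[str, float]:
    """Travel time (minutes) on each route of the textbook Braess network.

    Edges: Start->A and B->End take load/100 minutes (congestible);
    A->End and Start->B take a fixed 45 minutes; the optional
    shortcut A->B takes 0 minutes.
    """
    via_a = flows.get("S-A-E", 0.0)
    via_b = flows.get("S-B-E", 0.0)
    via_shortcut = flows.get("S-A-B-E", 0.0) if shortcut else 0.0
    t_start_a = (via_a + via_shortcut) / 100
    t_b_end = (via_b + via_shortcut) / 100
    times = {"S-A-E": t_start_a + 45.0, "S-B-E": 45.0 + t_b_end}
    if shortcut:
        times["S-A-B-E"] = t_start_a + 0.0 + t_b_end
    return times


def is_user_equilibrium(flows: dict[str, float], times: dict[str, float]) -> bool:
    """Wardrop condition: every route in use is as fast as the fastest route."""
    fastest = min(times.values())
    return all(abs(times[r] - fastest) < 1e-9 for r, f in flows.items() if f > 0)


before = {"S-A-E": 2000, "S-B-E": 2000}
t0 = route_times(before, shortcut=False)
print(t0, is_user_equilibrium(before, t0))  # 65 minutes on each route

t1 = route_times(before, shortcut=True)
print(t1, is_user_equilibrium(before, t1))  # shortcut is faster, drivers switch

after = {"S-A-B-E": 4000}
t2 = route_times(after, shortcut=True)
print(t2, is_user_equilibrium(after, t2))   # 80 minutes, nobody gains by switching
```

Adding the shortcut moves the equilibrium from 65 to 80 minutes for every driver: each driver's individually rational switch adds load to both congestible roads.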

Interoperability

Interoperability has the effect of making the network bigger and thus increases the external value of the network to consumers. Interoperability achieves this primarily by increasing potential connections and secondarily by attracting new participants to the network. Other benefits of interoperability include reduced uncertainty, reduced lock-in, commoditization and competition based on price.
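The claim that interoperability works mainly by increasing potential connections can be made concrete by counting user pairs. This sketch assumes, for illustration, that every pair of users is a potential connection; the network sizes and function names are hypothetical.

```python
def connections(n: int) -> int:
    """Possible pairwise connections among n users: n(n - 1) / 2."""
    return n * (n - 1) // 2


def interoperability_gain(a: int, b: int) -> int:
    """Extra possible connections when networks of sizes a and b interoperate."""
    return connections(a + b) - (connections(a) + connections(b))


# Two hypothetical networks of 30 and 20 users.
print(connections(30) + connections(20))  # separate networks
print(connections(50))                    # interoperable networks
print(interoperability_gain(30, 20))      # equals 30 * 20
```

The gain is always $a \cdot b$, since every user of one network can now reach every user of the other. Per user, members of the smaller network gain more (30 new contacts each, against 20 for members of the larger one).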

Interoperability can be achieved through standardization or other cooperation. Companies involved in fostering interoperability face a tension between cooperating with their competitors to grow the potential market for products and competing for market share.

Compatibility and incompatibility

Product compatibility, meaning that two systems can operate together without modification, is closely related to network externalities in competition between companies. Compatible products match customers' needs better, because customers can enjoy all the benefits of the network without having to buy products from the same company. However, compatibility intensifies competition between companies and can cause users who have already bought products to lose their advantages, while proprietary networks may raise barriers to entry into the industry. Compared with large companies with a better reputation or greater strength, weaker companies or small networks are more inclined to choose compatible products.

Compatibility can also help a company increase its market share. For example, the Windows operating system is known for its compatibility, which satisfies consumers' demand for a diverse range of applications. As the supplier of Windows, Microsoft benefits from indirect network effects, which have helped grow the company's market share.

Incompatibility is the opposite of compatibility. Incompatibility of products aggravates market segmentation, reduces efficiency and harms consumer interests, while intensifying competition between networks. The outcome of competition between incompatible networks depends on the complete sequence of adoption and on the early preferences of adopters. Effective competition determines companies' market shares, which is why early history matters: the installed base directly brings more network profit and raises consumers' expectations, which supports the subsequent operation of network effects.

Open versus closed standards

In communication and information technologies, open standards and interfaces are often developed through the participation of multiple companies and are usually perceived to provide mutual benefit. But, in cases in which the relevant communication protocols or interfaces are closed standards, the network effect can give the company controlling those standards monopoly power. The Microsoft corporation is widely seen by computer professionals as maintaining its monopoly through these means. One observed method Microsoft uses to put the network effect to its advantage is called Embrace, extend and extinguish.

Mirabilis was an Israeli start-up that pioneered instant messaging (IM) and was bought by America Online. By giving away its ICQ product for free and preventing interoperability between its client software and other products, it was able to temporarily dominate the market for instant messaging. IM technology spread from the home to the workplace because of its speed and simplicity. Because of the network effect, new IM users gained much more value by choosing the Mirabilis system (and joining its large network of users) than they would have by using a competing system. As was typical for that era, the company never made any attempt to generate profits from its dominant position before it was sold.

Network effect as a competitive advantage

Network effects can significantly influence the competitive landscape of an industry. According to Michael E. Porter, strong network effects may decrease the threat of new entrants, which is one of the five major competitive forces that act on an industry. Persistent barriers to entering a market may help incumbent companies fend off competition and keep or increase their market share, while maintaining profitability and return on capital.

These attractive characteristics are one of the reasons that allowed platform companies like Amazon, Google or Facebook to grow rapidly and create shareholder value. On the other hand, network effect can result in high concentration of power in an industry, or even a monopoly. This often leads to increased scrutiny from regulators that try to restore healthy competition, as is often the case with large technology companies.

Examples

The Telephone

Network effects are the incremental benefit gained by each user for each new user that joins a network. An example of a direct network effect is the telephone. Originally, when only a small number of people owned a telephone, the value it provided was minimal. Not only did other people need to own a telephone for it to be useful, but it also had to be connected to the network through the user's home. As technology advanced, it became more affordable for people to own a telephone, and the growing number of users created more value and utility. Eventually, exponential growth in usage led to the telephone being used by almost every household, adding more value to the network for all users. Without the network effect and technological advances, the telephone would have nowhere near the amount of value or utility it has today.

Financial exchanges

Stock exchanges and derivatives exchanges feature a network effect. Market liquidity is a major determinant of transaction cost in the sale or purchase of a security, as a bid–ask spread exists between the price at which a purchase can be made and the price at which the same security can be sold. As the number of buyers and sellers with symmetric information on the exchange increases, liquidity increases and transaction costs decrease. This then attracts a larger number of buyers and sellers to the exchange.
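The link between participant numbers and the bid–ask spread can be illustrated with a small simulation. The setup is a hypothetical assumption chosen only to show the direction of the effect: $n$ buyers bid prices drawn uniformly from [90, 100], $n$ sellers ask prices drawn uniformly from [100, 110], and the quoted spread is the lowest ask minus the highest bid. Under these assumptions the expected spread works out to $20/(n+1)$.

```python
import random
from statistics import mean


def average_spread(n_traders: int, trials: int = 2000, seed: int = 0) -> float:
    """Average best-ask minus best-bid with n buyers and n sellers.

    Hypothetical setup: each buyer bids a price drawn uniformly from
    [90, 100] and each seller asks a price drawn uniformly from [100, 110].
    The quoted spread is the lowest ask minus the highest bid.
    """
    rng = random.Random(seed)
    spreads = []
    for _ in range(trials):
        best_bid = max(rng.uniform(90, 100) for _ in range(n_traders))
        best_ask = min(rng.uniform(100, 110) for _ in range(n_traders))
        spreads.append(best_ask - best_bid)
    return mean(spreads)


for n in (1, 5, 20, 100):
    # Simulated average next to the analytical expectation 20 / (n + 1).
    print(n, round(average_spread(n), 2), round(20 / (n + 1), 2))
```

With more participants on each side, the best quotes cluster closer together, so the spread, a direct transaction cost, shrinks; that lower cost is what draws still more traders to the exchange.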

The network advantage of financial exchanges is apparent in the difficulty that startup exchanges have in dislodging a dominant exchange. For example, the Chicago Board of Trade has retained overwhelming dominance of trading in US Treasury bond futures despite the startup of Eurex US trading of identical futures contracts. Similarly, the Chicago Mercantile Exchange has maintained dominance in trading of Eurodollar interest rate futures despite a challenge from Euronext.Liffe.

Cryptocurrencies

Cryptocurrencies such as Bitcoin also feature network effects. Bitcoin's unique properties make it an attractive asset to users and investors. The more users join the network, the more valuable and secure it becomes. This creates an incentive for users to join, so that as the network and community grow, a network effect occurs, making it more likely that new people will also join. Bitcoin provides its users with financial value through the network effect, which may attract more investors seeking financial gain. This is an example of an indirect network effect, as the value only increases due to the initial network being created.

Software

Widely used computer software benefits from powerful network effects. Software purchases are easily influenced by the opinions of others, so the customer base of a piece of software is key to realizing a positive network effect. Although customers' motivation for choosing software is related to the product itself, media interaction and word-of-mouth recommendations from existing customers can still increase the likelihood that customers who have not yet bought the software will adopt it, thereby producing network effects.

In 2007 Apple released the iPhone, followed by the App Store. Most iPhone apps rely heavily on the existence of strong network effects. This enables software to grow in popularity very quickly and spread to a large user base with very limited marketing. The freemium business model has evolved to take advantage of these network effects by releasing a free version that does not limit adoption by any users, and then charging for premium features as the primary source of revenue. Furthermore, some software companies launch free trial versions to attract buyers and reduce their uncertainty. The length of the free trial is related to the network effect: the more positive feedback the company receives, the shorter the free trial will be.

Software companies (for example Adobe or Autodesk) often give significant discounts to students. By doing so, they intentionally stimulate the network effect: as more students learn to use a particular piece of software, it becomes more viable for companies and employers to use it as well. And the more employers require a given skill, the higher the benefit that employees will receive from learning it. This creates a self-reinforcing cycle, further strengthening the network effect.

Web sites

Many web sites benefit from a network effect. One example is web marketplaces and exchanges. For example, eBay would not be a particularly useful site if auctions were not competitive. As the number of users grows on eBay, auctions grow more competitive, pushing up the prices of bids on items. This makes it more worthwhile to sell on eBay and brings more sellers onto eBay, which, in turn, drives prices down again due to increased supply. Increased supply brings even more buyers to eBay. Essentially, as the number of users of eBay grows, prices fall and supply increases, and more and more people find the site to be useful.

Network effects were used as justification for the business models of some of the dot-com companies in the late 1990s. These firms operated under the belief that when a new market with strong network effects comes into being, firms should care more about growing their market share than about becoming profitable. The justification was that market share would determine which firm could set technical and marketing standards, giving these companies a first-mover advantage.

Social networking websites are good examples. The more people register onto a social networking website, the more useful the website is to its registrants.

Google uses the network effect in its advertising business with its Google AdSense service. AdSense places ads on many small sites, such as blogs, using Google technology to determine which ads are relevant to which blogs. Thus, the service appears to aim to serve as an exchange (or ad network) for matching many advertisers with many small sites. In general, the more blogs AdSense can reach, the more advertisers it will attract, making it the most attractive option for more blogs.

By contrast, the value of a news site is primarily proportional to the quality of the articles, not to the number of other people using the site. Similarly, the first generation of search engines experienced little network effect, as the value of the site was based on the value of the search results. This allowed Google to win users away from Yahoo! without much trouble, once users believed that Google's search results were superior. Some commentators mistook the value of the Yahoo! brand (which does increase as more people know of it) for a network effect protecting its advertising business.

Rail gauge

There are strong network effects in the initial choice of rail gauge, and in gauge conversion decisions. Even when placing isolated rails not connected to any other lines, track layers usually choose a standard rail gauge so they can use off-the-shelf rolling stock. Although a few manufacturers make rolling stock that can adjust to different rail gauges, most manufacturers make rolling stock that only works with one of the standard rail gauges. This even applies to urban rail systems: historically, tramways and, to a lesser extent, metros came in a wide array of different gauges, but nowadays virtually all new networks are built to a handful of gauges, overwhelmingly standard gauge.

Credit cards

The widespread use of credit cards is closely related to network effects. The credit card, one of the main payment methods in the current economy, originated in 1949. Early research on the circulation of credit cards at the retail level found that credit card interest rates were not affected by macroeconomic interest rates and remained almost unchanged. Later, owing to changes in policy priorities, credit cards gradually came to operate at the network level and became a popular method of payment in the 1980s.

Credit cards benefit from two types of network effects. The first is an external network effect: once credit cards have become a payment method and more people use them, each additional person using the same card increases its value to everyone else who uses it. Second, the credit card system at the network level can be seen as a two-sided market. On one hand, the number of cardholders attracts merchants to accept credit cards as a payment method. On the other hand, a growing number of merchants attracts more new cardholders. In other words, as merchant acceptance of credit cards increases, the cards become more valuable, which in turn increases both the value of the card to cardholders and the number of users. Moreover, credit card services also display a network effect between merchant discounts and credit accessibility: when greater credit accessibility brings merchants higher sales, they are willing to pay higher merchant discount fees to card issuers.
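The two-sided feedback between cardholders and merchants can be sketched as a simple linear model. This is a hypothetical illustration, not an empirical model of any card network: the base adoption levels, the cross-side coefficients `alpha` and `beta`, and the units are all assumptions, and the function names are hypothetical.

```python
def two_sided_adoption(base_cardholders: float, base_merchants: float,
                       alpha: float, beta: float, rounds: int) -> tuple[float, float]:
    """Iterate a linear two-sided market until it settles.

    cardholders_next = base_cardholders + alpha * merchants
    merchants_next   = base_merchants   + beta  * cardholders
    alpha: extra cardholders attracted per accepting merchant (cross-side effect)
    beta:  extra merchants attracted per cardholder (cross-side effect)
    """
    cardholders, merchants = base_cardholders, base_merchants
    for _ in range(rounds):
        cardholders, merchants = (base_cardholders + alpha * merchants,
                                  base_merchants + beta * cardholders)
    return cardholders, merchants


def steady_state(base_cardholders: float, base_merchants: float,
                 alpha: float, beta: float) -> tuple[float, float]:
    """Closed-form fixed point of the iteration above (needs alpha * beta < 1)."""
    if alpha * beta >= 1:
        raise ValueError("cross-side feedback too strong: no finite steady state")
    d = 1 - alpha * beta
    return ((base_cardholders + alpha * base_merchants) / d,
            (base_merchants + beta * base_cardholders) / d)


# Arbitrary units; parameters are illustrative only.
print(two_sided_adoption(10, 5, alpha=0.0, beta=0.0, rounds=200))  # no cross-side effects
print(two_sided_adoption(10, 5, alpha=0.5, beta=0.8, rounds=200))  # with cross-side effects
print(steady_state(10, 5, alpha=0.5, beta=0.8))
```

With cross-side effects switched on, both sides settle well above their standalone levels, because each side's growth feeds the other's. When `alpha * beta` reaches 1 or more, the loop has no finite steady state and adoption keeps growing until something outside this toy model, such as market saturation, stops it.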

Visa has become a leader in the electronic payment industry by using the network effect of credit cards as its competitive advantage. In the four years to 2016, Visa's share of the credit card market rose from a quarter to as much as half. Visa benefits from the network effect because every additional Visa cardholder makes the network more attractive to merchants, and merchants in turn attract more new cardholders to the brand. In other words, Visa's popularity and convenience in the electronic payment market lead more people and merchants to choose Visa, which greatly increases its value.