| United States | |

|---|---|

| |

| Nuclear program start date | 21 October 1939 |

| First nuclear weapon test | 16 July 1945 |

| First thermonuclear weapon test | 1 November 1952 |

| Last nuclear test | 23 September 1992 |

| Largest yield test | 15 Mt (63 PJ) (1 March 1954) |

| Total tests | 1,054 detonations |

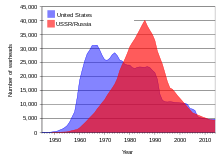

| Peak stockpile | 31,255 warheads (1967) |

| Current stockpile | 3,708 (2023) |

| Maximum missile range | ICBM: 15,000 km (9,321 mi) SLBM: 12,000 km (7,456 mi) |

| NPT party | Yes (1968) |

The United States was the first country to manufacture nuclear weapons and is the only country to have used them in combat, with the bombings of Hiroshima and Nagasaki in World War II. Before and during the Cold War, it conducted 1,054 nuclear tests, and tested many long-range nuclear weapons delivery systems.

Between 1940 and 1996, the U.S. federal government spent at least US$10.9 trillion in present-day terms on nuclear weapons, including platform development (aircraft, rockets, and facilities), command and control, maintenance, waste management, and administrative costs. It is estimated that the United States has produced more than 70,000 nuclear warheads since 1945, more than all other nuclear weapon states combined. Until November 1962, the vast majority of U.S. nuclear tests were above ground. After the acceptance of the Partial Test Ban Treaty, all testing was moved underground to prevent the dispersion of nuclear fallout.

By 1998, at least US$759 million had been paid to the Marshall Islanders in compensation for their exposure to U.S. nuclear testing. By March 2021, over US$2.5 billion in compensation had been paid to U.S. citizens exposed to nuclear hazards as a result of the U.S. nuclear weapons program.

In 2019, the U.S. and Russia possessed comparable numbers of nuclear warheads; together, the two nations held more than 90% of the world's nuclear weapons stockpile. As of 2020, the United States had a stockpile of 3,750 active and inactive nuclear warheads, plus approximately 2,000 retired warheads awaiting dismantlement. In its March 2019 New START declaration, the U.S. stated that 1,365 of its stockpiled warheads were deployed on 656 ICBMs, SLBMs, and strategic bombers.

Development history

Manhattan Project

The United States began developing nuclear weapons during World War II under the order of President Franklin Roosevelt in 1939, motivated by the fear that it was engaged in a race with Nazi Germany to develop such a weapon. After a slow start under the direction of the National Bureau of Standards, and at the urging of British scientists and American administrators, the program was placed under the Office of Scientific Research and Development; in 1942 it was officially transferred to the United States Army and became known as the Manhattan Project, a joint American, British, and Canadian venture. Under the direction of General Leslie Groves, over thirty sites were constructed for the research, production, and testing of bomb components. These included the Los Alamos National Laboratory in Los Alamos, New Mexico, directed by physicist Robert Oppenheimer; the Hanford plutonium production facility in Washington; and the Y-12 National Security Complex in Tennessee.

By investing heavily in breeding plutonium in early nuclear reactors and in the electromagnetic and gaseous diffusion processes for enriching uranium-235, the United States was able to develop three usable weapons by mid-1945. The Trinity test of 16 July 1945 detonated a plutonium implosion-design weapon with a yield of around 20 kilotons.

With an invasion of the Japanese home islands planned to begin on 1 November 1945 and Japan refusing to surrender, President Harry S. Truman ordered the atomic raids on Japan. On 6 August 1945, the U.S. detonated a uranium gun-type bomb, Little Boy, over the Japanese city of Hiroshima with an energy of about 15 kilotons of TNT, killing approximately 70,000 people, among them 20,000 Japanese combatants and 20,000 Korean slave laborers, and destroying nearly 50,000 buildings (including the headquarters of the 2nd General Army and Fifth Division). Three days later, on 9 August, the U.S. attacked Nagasaki with a plutonium implosion-design bomb, Fat Man, whose explosion was equivalent to about 20 kilotons of TNT, destroying 60% of the city and killing approximately 35,000 people, among them 23,200–28,200 Japanese munitions workers, 2,000 Korean slave laborers, and 150 Japanese combatants.

On 1 January 1947, the Atomic Energy Act of 1946 (known as the McMahon Act) took effect, and the Manhattan Project was officially turned over to the United States Atomic Energy Commission (AEC).

On 15 August 1947, the Manhattan Project was abolished.

During the Cold War

The American atomic stockpile was small and grew slowly in the immediate aftermath of World War II, and its size was a closely guarded secret. However, several forces pushed the United States towards greatly increasing the size of the stockpile. Some were international in origin and focused on the increasing tensions of the Cold War, including the "loss of China", the Soviet Union becoming an atomic power, and the onset of the Korean War. Others were domestic: both the Truman and Eisenhower administrations wanted to rein in military spending and avoid budget deficits and inflation. Nuclear weapons were perceived to give more "bang for the buck" and thus to be the most cost-efficient way to respond to the security threat the Soviet Union represented.

As a result, beginning in 1950 the AEC embarked on a massive expansion of its production facilities, an effort that would eventually become one of the largest U.S. government construction projects ever undertaken outside of wartime. This production would soon include the far more powerful hydrogen bomb, which the United States had decided to pursue after an intense debate in 1949–50, as well as much smaller tactical atomic weapons for battlefield use.

By 1990, the United States had produced more than 70,000 nuclear warheads, in over 65 different varieties, ranging in yield from around 0.01 kilotons (such as the man-portable Davy Crockett shell) to the 25-megaton B41 bomb. Between 1940 and 1996, the U.S. spent at least $10.9 trillion in present-day terms on nuclear weapons development. Over half was spent on building delivery mechanisms for the weapons, and $681 billion in present-day terms was spent on nuclear waste management and environmental remediation.

Richland, Washington, was the first city established to support plutonium production at the nearby Hanford nuclear site, which produced plutonium for the American nuclear arsenal during the Cold War.

Throughout the Cold War, the U.S. and USSR threatened each other with all-out nuclear attack in case of war, regardless of whether the conflict was conventional or nuclear. U.S. nuclear doctrine called for mutually assured destruction (MAD), which entailed a massive nuclear attack against strategic targets and major population centers of the Soviet Union and its allies. The term "mutual assured destruction" was coined in 1962 by American strategist Donald Brennan. MAD was implemented by deploying nuclear weapons simultaneously on three different types of platforms: land-based missiles, submarine-launched missiles, and strategic bombers.

Post–Cold War

After the end of the Cold War in 1989 and the dissolution of the Soviet Union in 1991, the U.S. nuclear program was heavily curtailed: it halted nuclear testing, ceased production of new nuclear weapons, and had reduced its stockpile by half by the mid-1990s under President Bill Clinton. Many former nuclear facilities were closed, and their sites became targets of extensive environmental remediation. Efforts were redirected from weapons production to stockpile stewardship, which attempts to predict the behavior of aging weapons without full-scale nuclear testing. Increased funding was directed to nonproliferation programs, such as helping the states of the former Soviet Union eliminate their former nuclear sites and assisting Russia in its efforts to inventory and secure its inherited nuclear stockpile. By February 2006, over $1.2 billion had been paid under the Radiation Exposure Compensation Act of 1990 to U.S. citizens exposed to nuclear hazards as a result of the U.S. nuclear weapons program, and by 1998 at least $759 million had been paid to the Marshall Islanders in compensation for their exposure to U.S. nuclear testing. Over $15 million was paid to the Japanese government following the exposure of its citizens and food supply to nuclear fallout from the 1954 "Bravo" test. In 1998, the country spent an estimated $35.1 billion on its nuclear weapons and weapons-related programs.

In her 2013 book Plutopia: Nuclear Families, Atomic Cities, and the Great Soviet and American Plutonium Disasters (Oxford), Kate Brown explores the health of affected citizens in the United States and the "slow-motion disasters" that still threaten the environments where the plants are located. According to Brown, the plants at Hanford released millions of curies of radioactive isotopes into the surrounding environment over a period of four decades. Brown says that most of this contamination was part of normal operations, but that unforeseen accidents did occur and plant management kept them secret as the pollution continued unabated. She argues that even today, as threats to health and the environment persist, the government withholds knowledge of the associated risks from the public.

During the presidency of George W. Bush, and especially after the 11 September 2001 terrorist attacks, rumors circulated in major news sources that the U.S. was considering designing new nuclear weapons ("bunker-busting nukes") and resuming nuclear testing for reasons of stockpile stewardship. Republicans argued that small nuclear weapons appear more likely to be used than large ones, and thus pose a more credible threat with a greater deterrent effect against hostile behavior. Democrats countered that allowing the weapons could trigger an arms race. In 2003, the Senate Armed Services Committee voted to repeal the 1993 Spratt-Furse ban on the development of small nuclear weapons, a change that was part of the fiscal year 2004 defense authorization. The Bush administration wanted the repeal so that it could develop weapons to address the threat from North Korea. "Low-yield weapons" (those with one-third the force of the bomb dropped on Hiroshima in 1945) were permitted to be developed. The Bush administration did not succeed in developing a guided low-yield nuclear weapon; however, in 2010 President Barack Obama began funding the development of what would become the B61-12, a guided low-yield nuclear bomb derived from the unguided B61 "dumb bomb".

Statements by the U.S. government in 2004 indicated that it planned to decrease the arsenal to around 5,500 total warheads by 2012. Much of that reduction had already been accomplished by January 2008.

According to the Pentagon's June 2019 Doctrine for Joint Nuclear Operations, "Integration of nuclear weapons employment with conventional and special operations forces is essential to the success of any mission or operation."

Nuclear weapons testing

Between 16 July 1945 and 23 September 1992, the United States maintained a program of vigorous nuclear testing, with the exception of a moratorium between November 1958 and September 1961. By official count, a total of 1,054 nuclear tests and two nuclear attacks were conducted, with over 100 tests at sites in the Pacific Ocean, over 900 at the Nevada Test Site, and ten at miscellaneous sites in the United States (Alaska, Colorado, Mississippi, and New Mexico). Until November 1962, the vast majority of U.S. tests were atmospheric (that is, above-ground); after the acceptance of the Partial Test Ban Treaty, all testing was moved underground to prevent the dispersion of nuclear fallout.

The U.S. program of atmospheric nuclear testing exposed many people to the hazards of fallout. Estimating the exact number of people exposed, and the exact consequences, has been medically very difficult, except for the high exposures of Marshall Islanders and Japanese fishers in the Castle Bravo incident of 1954. A number of groups of U.S. citizens, especially farmers and inhabitants of cities downwind of the Nevada Test Site and U.S. military workers at various tests, have sued for compensation and recognition of their exposure, many successfully. The passage of the Radiation Exposure Compensation Act of 1990 allowed compensation claims to be filed systematically, both for exposure from testing and for those employed at nuclear weapons facilities. By June 2009, over $1.4 billion in total had been paid in compensation, with over $660 million going to "downwinders".

A few notable U.S. nuclear tests include:

- Trinity test on 16 July 1945 was the world's first test of a nuclear weapon (yield of around 20 kt).

- Operation Crossroads in July 1946 was the first postwar test series and one of the largest military operations in U.S. history.

- Operation Greenhouse shots of May 1951 included the first boosted fission weapon test ("Item") and a scientific test that proved the feasibility of thermonuclear weapons ("George").

- Ivy Mike shot of 1 November 1952 was the first full test of a Teller-Ulam design "staged" hydrogen bomb, with a yield of 10 megatons. It was not a deployable weapon, however: with its full cryogenic equipment, it weighed some 82 tons.

- Castle Bravo shot of 1 March 1954 was the first test of a deployable (solid-fuel) thermonuclear weapon, and also (accidentally) the largest weapon ever tested by the United States (15 megatons). It was also the single largest U.S. radiological accident in connection with nuclear testing. The unexpectedly high yield, combined with a change in the weather, spread nuclear fallout eastward onto the inhabited Rongelap and Rongerik atolls, which were soon evacuated. Many of the Marshall Islanders have since suffered from birth defects and have received some compensation from the federal government. A Japanese fishing boat, Daigo Fukuryū Maru, was also contaminated by the fallout, which caused many of the crew to fall ill; one eventually died.

- Shot Argus I of Operation Argus, on 27 August 1958, was the first detonation of a nuclear weapon in outer space: a 1.7-kiloton warhead was detonated at an altitude of 200 kilometres (120 mi) during a series of high-altitude nuclear explosions.

- Shot Frigate Bird of Operation Dominic I, on 6 May 1962 at Christmas Island, was the only U.S. test of an operational submarine-launched ballistic missile (SLBM) with a live nuclear warhead (yield of 600 kilotons). In general, missile systems were tested without live warheads, and warheads were tested separately, for safety reasons. In the early 1960s, however, technical questions mounted about how the systems would behave under combat conditions (when they were "mated", in military parlance), and this test was meant to dispel those concerns. However, the warhead had to be somewhat modified before its use, and the missile was an SLBM rather than an ICBM, so the test did not by itself satisfy all concerns.

- Shot Sedan of Operation Storax on 6 July 1962 (yield of 104 kilotons) was an attempt to show the feasibility of using nuclear weapons for "civilian" and "peaceful" purposes as part of Operation Plowshare. In this instance, a crater 1,280 feet (390 m) in diameter and 320 feet (98 m) deep was created at the Nevada Test Site.

A summary table of each of the American operational series may be found at United States' nuclear test series.

Delivery systems

The original Little Boy and Fat Man weapons, developed by the United States during the Manhattan Project, were relatively large (Fat Man had a diameter of 5 feet (1.5 m)) and heavy (around 5 tons each), and they required specially modified bombers for their missions against Japan. Each modified bomber could carry only one such weapon and only over a limited range. After these initial weapons were developed, considerable money and research were devoted to standardizing nuclear warheads, so that they no longer required highly specialized experts to assemble them before use, as the idiosyncratic wartime devices had, and to miniaturizing warheads for use in a wider variety of delivery systems.

With the help of German scientists and engineers brought to the United States through Operation Paperclip at the end of the European theater of World War II, the United States was able to embark on an ambitious rocketry program. One of its first products was the development of rockets capable of carrying nuclear warheads. The MGR-1 Honest John was the first such weapon, developed in 1953 as a surface-to-surface missile with a 15-mile (24 km) maximum range. Because of its limited range, its potential use was heavily constrained (it could not, for example, threaten Moscow with an immediate strike).

Development of long-range bombers, such as the B-29 Superfortress of World War II, continued during the Cold War. In 1946, the Convair B-36 Peacemaker became the first purpose-built nuclear bomber; it served with the USAF until 1959. By the mid-1950s, the Boeing B-52 Stratofortress was able to carry a wide arsenal of nuclear bombs, each with different capabilities and potential uses. Starting in 1946, the U.S. based its initial deterrence force on the Strategic Air Command, which by the late 1950s kept a number of nuclear-armed bombers in the air at all times, ready to receive orders to attack the USSR. This system was, however, tremendously expensive in both natural and human resources, and it raised the possibility of accidental nuclear war.

During the 1950s and 1960s, elaborate computerized early warning systems such as the Defense Support Program were developed to detect incoming Soviet attacks and to coordinate response strategies. During the same period, intercontinental ballistic missile (ICBM) systems were developed that could deliver a nuclear payload across vast distances, allowing the U.S. to base nuclear forces in the American Midwest that were capable of hitting the Soviet Union. Shorter-range weapons, including small tactical weapons, were also fielded in Europe, among them nuclear artillery and the man-portable Special Atomic Demolition Munition. The development of submarine-launched ballistic missile systems allowed concealed nuclear submarines to launch missiles covertly at distant targets as well, making it virtually impossible for the Soviet Union to launch a successful first strike against the United States without receiving a devastating response.

Improvements in warhead miniaturization in the 1970s and 1980s allowed for the development of MIRVs (multiple independently targetable reentry vehicles), which let a single missile carry multiple warheads, each of which could be separately targeted. Whether these missiles should be moved continually along rail lines (to avoid being easily targeted by Soviet missiles) or based in heavily fortified silos (to possibly withstand a Soviet attack) was a major political controversy in the 1980s; the silo deployment method was eventually chosen. MIRVed systems enabled the U.S. to render Soviet missile defenses economically infeasible, as each offensive missile would require between three and ten defensive missiles to counter.

Additional developments in weapons delivery included cruise missile systems, which allowed a plane to fire a long-distance, low-flying nuclear-armed missile at a target from a relatively safe distance.

The current delivery systems of the U.S. put virtually any part of the Earth's surface within reach of its nuclear arsenal. Although its land-based missile systems have a maximum range of 10,000 kilometres (6,200 mi), short of worldwide coverage, its submarine-based forces extend its reach up to 12,000 kilometres (7,500 mi) inland from any coastline. Additionally, in-flight refueling of long-range bombers and the use of aircraft carriers extend the possible range virtually indefinitely.

Command and control

Command and control procedures in case of nuclear war were set out in the Single Integrated Operational Plan (SIOP) until 2003, when it was superseded by Operations Plan 8044.

Since World War II, the President of the United States has had sole authority to launch U.S. nuclear weapons, whether in a first strike or in nuclear retaliation. This arrangement was seen as necessary during the Cold War to present a credible nuclear deterrent: if an attack was detected, the United States would have only minutes to launch a counterstrike before its nuclear capability was severely damaged or national leaders were killed. If the President is killed, command authority follows the presidential line of succession. Changes to this policy have been proposed, but currently the only way to countermand such an order before the strike is launched would be for the Vice President and a majority of the Cabinet to relieve the President under Section 4 of the Twenty-fifth Amendment to the United States Constitution.

Regardless of whether the United States is actually under attack by a nuclear-capable adversary, the President alone has the authority to order nuclear strikes. The President and the Secretary of Defense form the National Command Authority, but the Secretary of Defense has no authority to refuse or disobey such an order. The President's decision must be transmitted to the National Military Command Center, which will then issue the coded orders to nuclear-capable forces.

The President can give a nuclear launch order using the nuclear briefcase (nicknamed the "nuclear football") or from command centers such as the White House Situation Room. The command would be carried out by a Nuclear and Missile Operations Officer (a member of a missile combat crew, also called a "missileer") at a missile launch control center. A two-man rule applies to missile launches: two officers must turn keys simultaneously, and the key switches are placed far enough apart that one person cannot turn both.

When President Reagan was shot in 1981, there was confusion about where the "nuclear football" was, and who was in charge.

In 1975, a launch crew member, Harold Hering, was dismissed from the Air Force for asking how he could know whether the order to launch his missiles came from a sane president. It has been claimed that the system is not foolproof.

Starting with President Eisenhower, authority to launch a full-scale nuclear attack has been delegated to theater commanders and other specific commanders if they believe circumstances warrant it and they are out of communication with the president or the president has been incapacitated. For example, during the Cuban Missile Crisis, on 24 October 1962, General Thomas Power, commander of the Strategic Air Command (SAC), took the country to DEFCON 2, the very precipice of full-scale nuclear war, launching SAC bombers armed with nuclear weapons and ready to strike. Moreover, some of these commanders subdelegated to lower commanders the authority to launch nuclear weapons under similar circumstances. In fact, nuclear weapons were not placed under locks (i.e., permissive action links) until decades later, so pilots or individual submarine commanders had the power to launch nuclear weapons entirely on their own, without higher authority.

Accidents

Since its inception, the United States nuclear program has experienced accidents of varying forms, ranging from single-casualty accidents during research experiments (such as the criticality accident that killed Louis Slotin during the Manhattan Project), to the fallout dispersion of the Castle Bravo shot in 1954, to crashes of aircraft carrying nuclear weapons, nuclear weapons dropped from aircraft, losses of nuclear submarines, and explosions of nuclear-armed missiles (broken arrows). How close any of these accidents came to being major nuclear disasters is a matter of technical and scholarly debate and interpretation.

Incidents in which the United States accidentally dropped nuclear weapons include those off the coast of British Columbia (1950) (see 1950 British Columbia B-36 crash); near Atlantic City, New Jersey (1957); Savannah, Georgia (1958) (see Tybee Bomb); Goldsboro, North Carolina (1961) (see 1961 Goldsboro B-52 crash); off the coast of Okinawa (1965); in the sea near Palomares, Spain (1966) (see 1966 Palomares B-52 crash); and near Thule Air Base, Greenland (1968) (see 1968 Thule Air Base B-52 crash). In some of these cases (such as the 1966 Palomares case), the conventional explosives of the fission weapon detonated without triggering a nuclear chain reaction (safety features make this difficult), but they dispersed hazardous nuclear materials across wide areas, necessitating expensive cleanup efforts. Several U.S. nuclear weapons, partial weapons, or weapon components are thought to be lost and unrecovered, primarily from aircraft accidents. The 1980 Damascus Titan missile explosion in Damascus, Arkansas, threw a warhead from its silo but did not release any radiation.

The nuclear testing program resulted in a number of cases of fallout dispersion onto populated areas. The most significant of these was the Castle Bravo test, which spread radioactive ash over an area of over 100 square miles (260 km²), including a number of populated islands. The islanders were evacuated, but not before suffering radiation burns, and they later suffered long-term effects such as birth defects and increased cancer risk. There are ongoing concerns about the deterioration of the nuclear waste site on Runit Island and a potential radioactive spill. There were also instances during the nuclear testing program in which soldiers were exposed to excessive levels of radiation; this grew into a major scandal in the 1970s and 1980s, as many soldiers later suffered from diseases they claimed were caused by their exposure.

Many former nuclear facilities caused significant environmental damage during their years of activity and since the 1990s have been Superfund sites undergoing cleanup and environmental remediation. Hanford is currently the most contaminated nuclear site in the United States and the focus of the nation's largest environmental cleanup. Radioactive materials are known to be leaking from Hanford into the environment. The Radiation Exposure Compensation Act of 1990 allows U.S. citizens exposed to radiation or other health risks through the U.S. nuclear program to file for compensation and damages.

Deliberate attacks on weapons facilities

In 1972, three hijackers took control of a domestic passenger flight along the east coast of the U.S. and threatened to crash the plane into a U.S. nuclear weapons plant in Oak Ridge, Tennessee. The plane got as close as 8,000 feet above the site before the hijackers' demands were met.

Various acts of civil disobedience since 1980 by the peace group Plowshares have shown how nuclear weapons facilities can be penetrated, and the group's actions represent extraordinary breaches of security at nuclear weapons plants in the United States. The National Nuclear Security Administration has acknowledged the seriousness of the 2012 Plowshares action. Non-proliferation policy experts have questioned "the use of private contractors to provide security at facilities that manufacture and store the government's most dangerous military material". Nuclear weapons materials on the black market are a global concern, and there is concern about the possible detonation of a small, crude nuclear weapon by a militant group in a major city, with significant loss of life and property.

Stuxnet is a computer worm discovered in June 2010 that is believed to have been created by the United States and Israel to attack Iran's nuclear fuel enrichment facilities.

Development agencies

The initial U.S. nuclear program was run by the National Bureau of Standards starting in 1939, at the direction of President Franklin Delano Roosevelt. Its primary purpose was to delegate research and dispense funds. In 1940, the National Defense Research Committee (NDRC) was established, coordinating work under the Committee on Uranium among its other wartime efforts. In June 1941, the Office of Scientific Research and Development (OSRD) was established, with the NDRC as one of its subordinate agencies, and the Uranium Committee was enlarged and renamed the Section on Uranium. In 1941, NDRC research was placed under the direct control of Vannevar Bush as the OSRD S-1 Section, in an attempt to increase the pace of weapons research. In June 1942, the U.S. Army Corps of Engineers took over the project to develop atomic weapons, while the OSRD retained responsibility for scientific research.

This was the beginning of the Manhattan Project, run as the Manhattan Engineer District (MED), an agency under military control that was in charge of developing the first atomic weapons. After World War II, the MED maintained control over the U.S. arsenal and production facilities and coordinated the Operation Crossroads tests. In 1946, after a protracted debate, the Atomic Energy Act of 1946 was passed, creating the Atomic Energy Commission (AEC) as a civilian agency in charge of the production of nuclear weapons and research facilities, funded through Congress, with oversight provided by the Joint Committee on Atomic Energy. The AEC was given vast powers of control over secrecy, research, and money, and could seize lands with suspected uranium deposits. In addition to its duties in the production and regulation of nuclear weapons, it was also in charge of stimulating the development of, and regulating, civilian nuclear power. The transfer of activities was completed in January 1947.

In 1975, following the "energy crisis" of the early 1970s and public and congressional discontent with the AEC (in part because it was seen as impossible for one agency to be both a producer and a regulator), the AEC was split into the Energy Research and Development Administration (ERDA), which assumed most of its former production, coordination, and research roles, and the Nuclear Regulatory Commission, which assumed its civilian regulation activities.

ERDA was short-lived, however: in 1977, U.S. nuclear weapons activities were reorganized under the Department of Energy, which maintains such responsibilities through the semi-autonomous National Nuclear Security Administration. Some functions were taken over or shared by the Department of Homeland Security in 2002. The completed weapons themselves are under the control of U.S. Strategic Command, which is part of the Department of Defense.

In general, these agencies coordinated research and built sites. They generally operated their sites through contractors, however, both private and public (for example, Union Carbide, a private company, ran Oak Ridge National Laboratory for many decades; the University of California, a public educational institution, has run the Los Alamos and Lawrence Livermore laboratories since their inception, and later managed Los Alamos jointly with the private company Bechtel). Funding came both directly through these agencies and from additional outside agencies, such as the Department of Defense. Each branch of the military also maintained its own nuclear-related research agencies (generally related to delivery systems).

Weapons production complex

This table is not comprehensive, as numerous facilities throughout the United States have contributed to its nuclear weapons program. It includes the major sites related to the U.S. weapons program (past and present), their basic site functions, and their current status of activity. It does not list the many bases and facilities at which nuclear weapons have been deployed. Besides deploying weapons on its own soil, the United States also stationed nuclear weapons in 27 foreign countries and territories during the Cold War. These included Okinawa (under U.S. administration until 1972), Japan (during the occupation immediately following World War II), Greenland, Germany, Taiwan, and French Morocco (and later independent Morocco).

| Site name | Location | Function | Status |
|---|---|---|---|
| Los Alamos National Laboratory | Los Alamos, New Mexico | Research, design, pit production | Active |
| Lawrence Livermore National Laboratory | Livermore, California | Research and design | Active |
| Sandia National Laboratories | Livermore, California; Albuquerque, New Mexico | Research and design | Active |
| Hanford Site | Richland, Washington | Material production (plutonium) | Not active, in remediation |
| Oak Ridge National Laboratory | Oak Ridge, Tennessee | Material production (uranium-235, fusion fuel), research | Active to some extent |
| Y-12 National Security Complex | Oak Ridge, Tennessee | Component fabrication, stockpile stewardship, uranium storage | Active |
| Nevada Test Site | Near Las Vegas, Nevada | Nuclear testing and nuclear waste disposal | Active; no tests since 1992, now engaged in waste disposal |
| Yucca Mountain | Nevada Test Site | Waste disposal (primarily power reactor) | Pending |
| Waste Isolation Pilot Plant | East of Carlsbad, New Mexico | Radioactive waste from nuclear weapons production | Active |
| Pacific Proving Grounds | Marshall Islands | Nuclear testing | Not active, last test in 1962 |
| Rocky Flats Plant | Near Denver, Colorado | Component fabrication | Not active, in remediation |
| Pantex | Amarillo, Texas | Weapons assembly, disassembly, pit storage | Active, esp. disassembly |
| Fernald Site | Near Cincinnati, Ohio | Material fabrication (uranium-238) | Not active, in remediation |
| Paducah Plant | Paducah, Kentucky | Material production (uranium-235) | Active (commercial use) |
| Portsmouth Plant | Near Portsmouth, Ohio | Material fabrication (uranium-235) | Active (centrifuge), but not for weapons production |
| Kansas City Plant | Kansas City, Missouri | Component production | Active |
| Mound Plant | Miamisburg, Ohio | Research, component production, tritium purification | Not active, in remediation |
| Pinellas Plant | Largo, Florida | Manufacture of electrical components | Active, but not for weapons production |
| Savannah River Site | Near Aiken, South Carolina | Material production (plutonium, tritium) | Active (limited operation), in remediation |

Proliferation

Early in the development of its nuclear weapons, the United States relied in part on information-sharing with the United Kingdom and Canada. In the Quebec Agreement of 1943, the United States and the United Kingdom agreed not to share nuclear weapons information with other countries without each other's consent, an early attempt at nonproliferation. After the first nuclear weapons were developed during World War II, however, U.S. political circles and the wider public debated three options. The country could try to keep a monopoly on nuclear technology. It could share information with other nations, especially its former ally and likely competitor, the Soviet Union. Or it could hand control of its weapons to an international organization, such as the United Nations, that would use them to try to maintain world peace. Fear of a nuclear arms race led many politicians and scientists to advocate some degree of international control or sharing of nuclear weapons and information. Many politicians and members of the military, however, believed it was better in the short term to maintain strict nuclear secrecy and to forestall a Soviet bomb as long as possible. They also doubted that the USSR would submit to international controls in good faith.

Having chosen this path, the United States in its early years was essentially an advocate of nuclear nonproliferation, though primarily out of self-preservation. A few years after the USSR detonated its first weapon in 1949, however, the U.S. under President Dwight D. Eisenhower sought to encourage a program of sharing nuclear information related to civilian nuclear power and nuclear physics in general. The Atoms for Peace program, begun in 1953, was also partly political. The U.S. was better positioned than the Soviet Union to commit scarce resources, such as enriched uranium, to this peaceful effort, and it could then ask the Soviets for a similar contribution they were far less able to make. Internal memos later revealed that the program had this strategic justification as well. The overall goal was to promote civilian use of nuclear energy in other countries while preventing the spread of weapons. Many critics have called this goal contradictory. They argue that it led to decades of lax standards that allowed other nations, such as China and India, to profit from dual-use technology purchased from nations other than the U.S.

The Cooperative Threat Reduction program of the Defense Threat Reduction Agency was established after the breakup of the Soviet Union in 1991 to aid former Soviet bloc countries in the inventory and destruction of their sites for developing nuclear, chemical, and biological weapons, and their methods of delivering them (ICBM silos, long-range bombers, etc.). Over $4.4 billion has been spent on this endeavor to prevent purposeful or accidental proliferation of weapons from the former Soviet arsenal.

After India and Pakistan tested nuclear weapons in 1998, President Bill Clinton imposed economic sanctions on both countries. In 1999, however, the sanctions against India were lifted, while those against Pakistan were kept in place because a military government had taken power there. Shortly after the September 11 attacks in 2001, President George W. Bush lifted the sanctions against Pakistan as well. The aim was to secure the Pakistani government's help as a conduit for US and NATO forces operating in Afghanistan.

The U.S. government has been vocal in opposing the proliferation of such weapons to Iran and North Korea. The U.S. invasion of Iraq in 2003 was justified in part by indications that weapons of mass destruction were being stockpiled there. Stocks of previously undeclared nerve agent and mustard gas shells were later located in Iraq. The Bush administration also said that its proliferation policies were responsible for the Libyan government's agreement to abandon its nuclear ambitions. However, the Senate's report on pre-war intelligence on Iraq, released about a year after the war began, stated that no stockpiles of weapons of mass destruction or active weapons programs had been found in Iraq.

Nuclear disarmament in international law

The United States is one of the five nuclear weapons states with a declared nuclear arsenal under the Treaty on the Non-Proliferation of Nuclear Weapons (NPT), of which it was an original drafter and signatory on 1 July 1968 (ratified 5 March 1970). All signatories of the NPT agreed to refrain from aiding in nuclear weapons proliferation to other states.

Furthermore, under Article VI of the NPT, all signatories, including the US, agreed to negotiate in good faith to end the nuclear arms race and to work toward the complete elimination of nuclear weapons. The article reads: "Each of the Parties to the Treaty undertakes to pursue negotiations in good faith on effective measures relating to cessation of the nuclear arms race at an early date and to nuclear disarmament, and on a treaty on general and complete disarmament under strict and effective international control." The International Court of Justice (ICJ) is the preeminent judicial tribunal of international law. In its advisory opinion on the Legality of the Threat or Use of Nuclear Weapons, issued 8 July 1996, it unanimously interpreted the text of Article VI as implying that:

There exists an obligation to pursue in good faith and bring to a conclusion negotiations leading to nuclear disarmament in all its aspects under strict and effective international control.

In 2005, the International Atomic Energy Agency (IAEA) proposed a comprehensive ban on fissile material that would greatly limit the production of weapons of mass destruction. One hundred forty-seven countries voted for this proposal, but the United States voted against it. The US government has also opposed the Treaty on the Prohibition of Nuclear Weapons, which more than 120 nations supported. The treaty is a legally binding agreement that prohibits nuclear weapons and aims at their total elimination.

International relations and nuclear weapons

In 1958, the United States Air Force considered a plan to drop nuclear bombs on China during a confrontation over Taiwan, but the plan was overruled. This became known from previously secret documents that were declassified under the Freedom of Information Act in April 2008. The plan called for initially dropping 10–15 kiloton bombs on airfields in Amoy (now called Xiamen) if China blockaded Taiwan's offshore islands.

Occupational illness

The Energy Employees Occupational Illness Compensation Program (EEOICP) began on 31 July 2001. The program provides compensation and health benefits to Department of Energy nuclear weapons workers (employees, former employees, contractors, and subcontractors). It also compensates certain survivors if the worker has died. By 14 August 2010, the program had identified 45,799 civilians whose health had been harmed, including 18,942 who developed cancer. These workers were exposed to radiation and toxic substances while producing nuclear weapons for the United States.

Current status

The United States is one of the five nuclear-weapon states recognized under the Treaty on the Non-Proliferation of Nuclear Weapons (NPT). As of 2017, the US had an estimated 4,018 nuclear weapons, either deployed or in storage. This compares with a peak of 31,255 total warheads in 1967 and 22,217 in 1989. The figure does not include "several thousand" warheads that have been retired and are scheduled for dismantlement. The Pantex Plant near Amarillo, Texas, is the only location in the United States where weapons from the aging nuclear arsenal can be refurbished or dismantled.

In 2009 and 2010, the Obama administration announced policies that reversed the Bush-era policy on the use of nuclear weapons and its moves to develop new ones. First, in a prominent 2009 speech, President Barack Obama outlined a goal of "a world without nuclear weapons". Toward that goal, Obama and Russian President Dmitry Medvedev signed the New START treaty on 8 April 2010. The treaty lowered the limit on deployed strategic nuclear warheads from 2,200 to 1,550. That same week, Obama also revised U.S. policy on the use of nuclear weapons in the Nuclear Posture Review required of all presidents. It declared for the first time that the U.S. would not use nuclear weapons against non-nuclear, NPT-compliant states. The policy also renounced the development of any new nuclear weapons. However, the same April 2010 Nuclear Posture Review stated a need to develop new "low-yield" nuclear weapons, which resulted in the development of the B61 Mod 12. Obama sought a nuclear-free world and reversed former President Bush's nuclear policies. Even so, his presidency cut fewer warheads from the stockpile than any previous post-Cold War presidency.

Following renewed tensions after the Russo-Ukrainian War began in 2014, the Obama administration announced plans to keep renovating US nuclear weapons facilities and platforms. The projected cost was about a trillion dollars over 30 years. Under these new plans, the US government would fund research and development of new nuclear cruise missiles. The Trump and Biden administrations continued these plans.

As of 2021, American nuclear forces on land consist of 400 Minuteman III ICBMs spread among 450 operational launchers and operated by Air Force Global Strike Command. Those at sea consist of 14 nuclear-capable Ohio-class Trident submarines, nine in the Pacific and five in the Atlantic. Nuclear capabilities in the air are provided by 60 nuclear-capable heavy bombers: 20 B-2 bombers and 40 B-52s.

The Air Force has modernized its Minuteman III missiles to last through 2030, and the Ground Based Strategic Deterrent (GBSD) is set to begin replacing them in 2029. The Navy has worked to extend the operational lives of its missiles and warheads past 2020. It is also producing new Columbia-class submarines to replace the Ohio fleet beginning in 2031. The Air Force is also retiring the nuclear cruise missiles of its B-52s, leaving only half of them nuclear-capable. It intends to procure a new long-range bomber, the B-21, and a new long-range standoff (LRSO) cruise missile in the 2020s.

Nuclear disarmament movement

In the early 1980s, the revival of the nuclear arms race triggered large protests about nuclear weapons. On 12 June 1982, one million people demonstrated in New York City's Central Park against nuclear weapons and for an end to the Cold War arms race. It was the largest anti-nuclear protest and the largest political demonstration in American history. International Day of Nuclear Disarmament protests were held on 20 June 1983 at 50 sites across the United States. During the 1980s and 1990s, there were many Nevada Desert Experience protests and peace camps at the Nevada Test Site.

Anti-nuclear groups have also protested at the Y-12 Nuclear Weapons Plant, the Idaho National Laboratory, the proposed Yucca Mountain nuclear waste repository, the Hanford Site, the Nevada Test Site, and Lawrence Livermore National Laboratory. They have also protested the transportation of nuclear waste from Los Alamos National Laboratory.

On 1 May 2005, 40,000 anti-nuclear/anti-war protesters marched past the United Nations in New York, 60 years after the atomic bombings of Hiroshima and Nagasaki. This was the largest anti-nuclear rally in the U.S. for several decades. In May 2010, some 25,000 people, including members of peace organizations and 1945 atomic bomb survivors, marched from downtown New York to the United Nations headquarters, calling for the elimination of nuclear weapons.

Some scientists and engineers have opposed nuclear weapons, including Paul M. Doty, Hermann Joseph Muller, Linus Pauling, Eugene Rabinowitch, M.V. Ramana, and Frank N. von Hippel. In recent years, many elder statesmen have also advocated nuclear disarmament. Four of them, Sam Nunn, William Perry, Henry Kissinger, and George Shultz, have called upon governments to embrace the vision of a world free of nuclear weapons. In various op-ed columns, they have proposed an ambitious program of urgent steps to that end. The four created the Nuclear Security Project to advance this agenda. Other organizations have also been established, such as Global Zero, an international non-partisan group of 300 world leaders dedicated to achieving nuclear disarmament.

United States strategic nuclear weapons arsenal

New START Treaty Aggregate Numbers of Strategic Offensive Arms, 14 June 2023

| Category of data | United States of America |
|---|---|
| Deployed ICBMs, deployed SLBMs, and deployed heavy bombers | 665 |
| Warheads on deployed ICBMs, on deployed SLBMs, and nuclear warheads counted for deployed heavy bombers | 1,389 |
| Deployed and non-deployed launchers of ICBMs, deployed and non-deployed launchers of SLBMs, and deployed and non-deployed heavy bombers | 800 |
| Total | 2,854 |

Notes:

- Under the New START Treaty, each deployed heavy bomber is counted as one warhead.

- The nuclear weapon delivery capability has been removed from B-1 heavy bombers.