In navigation, dead reckoning is the process of calculating the current position of a moving object by using a previously determined position, or fix, and incorporating estimates of speed, heading (or direction or course), and elapsed time. The corresponding term in biology, to describe the processes by which animals update their estimates of position or heading, is path integration.

Advances in navigational aids that give accurate information on position, in particular satellite navigation using the Global Positioning System, have made simple dead reckoning by humans obsolete for most purposes. However, inertial navigation systems, which provide very accurate directional information, use dead reckoning and are very widely applied.

Etymology

Contrary to myth, the term "dead reckoning" was not originally used to abbreviate "deduced reckoning", nor is it a misspelling of the term "ded reckoning". The use of "ded" or "deduced reckoning" is not known to have appeared earlier than 1931, much later than "dead reckoning", which the Oxford English Dictionary attests as early as 1613. The original intention of "dead" in the term is generally assumed to be the use of a stationary object that is "dead in the water" as a basis for calculations. Additionally, at the time of the first appearance of "dead reckoning", "ded" was considered a common spelling of "dead". This potentially led to later confusion about the origin of the term.

By analogy with their navigational use, the words dead reckoning are also used to mean the process of estimating the value of any variable quantity by using an earlier value and adding whatever changes have occurred in the meantime. Often, this usage implies that the changes are not known accurately. The earlier value and the changes may be measured or calculated quantities.

Errors

While dead reckoning can give the best available information on the present position with little math or analysis, it is subject to significant errors of approximation. For precise positional information, both speed and direction must be accurately known at all times during travel. Most notably, dead reckoning does not account for directional drift during travel through a fluid medium. These errors tend to compound themselves over greater distances, making dead reckoning a difficult method of navigation for longer journeys.

For example, if displacement is measured by the number of rotations of a wheel, any discrepancy between the actual and assumed traveled distance per rotation, due perhaps to slippage or surface irregularities, will be a source of error. As each estimate of position is relative to the previous one, errors are cumulative, or compounding, over time.
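The wheel example can be made concrete with a short sketch. The two circumference values below are hypothetical and chosen only to illustrate how a small per-rotation discrepancy grows with distance; they do not come from any particular vehicle.

```python
def odometry_distance(rotations: int, wheel_circumference_m: float) -> float:
    """Distance inferred from a wheel-rotation count."""
    return rotations * wheel_circumference_m

# Hypothetical values: the circumference the navigator assumes, and the
# effective circumference once slippage is taken into account.
ASSUMED_CIRCUMFERENCE_M = 2.00
ACTUAL_CIRCUMFERENCE_M = 1.98

def accumulated_error(rotations: int) -> float:
    """Gap between the estimated and the true distance traveled."""
    estimated = odometry_distance(rotations, ASSUMED_CIRCUMFERENCE_M)
    actual = odometry_distance(rotations, ACTUAL_CIRCUMFERENCE_M)
    return estimated - actual

# The error grows in proportion to the number of rotations.
for n in (100, 1_000, 10_000):
    print(n, "rotations:", round(accumulated_error(n), 2), "m of error")
```

Under these assumptions a 1% error in the assumed circumference produces a 1% error in distance, so the absolute error keeps growing until a new fix resets it.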

The accuracy of dead reckoning can be increased significantly by using other, more reliable methods to get a new fix part way through the journey. For example, if one were navigating on land in poor visibility, dead reckoning could be used to get close enough to the known position of a landmark to be able to see it. Walking to the landmark itself then gives a precisely known starting point from which to set off again.

Localization of mobile sensor nodes

Localizing a static sensor node is not difficult, because attaching a Global Positioning System (GPS) device is sufficient. A mobile sensor node, which continuously changes its geographical location, is harder to localize. Mobile sensor nodes are mostly used for data collection within a particular domain, e.g. a sensor node attached to an animal in a grazing field or to a soldier on a battlefield. In these scenarios a GPS device for each sensor node cannot be afforded, for reasons that include the cost, size, and battery drain of constrained sensor nodes. To overcome this problem, a limited number of reference nodes (with GPS) are deployed in the field. These nodes continuously broadcast their locations, and other nodes in proximity receive these locations and calculate their own position using a mathematical technique such as trilateration. At least three known reference locations are needed to localize a node. Several localization algorithms based on the Sequential Monte Carlo (SMC) method have been proposed in the literature.

Sometimes a node receives only two known locations, and localization becomes impossible. To overcome this problem, the dead reckoning technique is used: a sensor node uses its previously calculated location for localization at later time intervals. For example, if at time instant 1 node A calculates its position as loca_1 with the help of three known reference locations, then at time instant 2 it uses loca_1 along with two reference locations received from two other reference nodes. This not only localizes a node in less time but also localizes it in positions where it is difficult to obtain three reference locations.
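The idea can be sketched with two-dimensional trilateration. The sketch below is illustrative only: the function name `trilaterate`, the coordinates, and the ranges are hypothetical, and it assumes the node can estimate how far it has moved since its previous position (for instance from speed and elapsed time), which is what allows the previous position to stand in for a third reference node.

```python
import math

def trilaterate(p1, r1, p2, r2, p3, r3):
    """Solve for (x, y) given three reference points and distances to them.

    Subtracting the first circle equation from the other two leaves a
    2x2 linear system, solved here with Cramer's rule.
    """
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = r1**2 - r2**2 - x1**2 + x2**2 - y1**2 + y2**2
    b2 = r1**2 - r3**2 - x1**2 + x3**2 - y1**2 + y3**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("reference points are collinear")
    return ((b1 * a22 - a12 * b2) / det, (a11 * b2 - a21 * b1) / det)

# Time instant 1: three reference nodes are heard (hypothetical layout).
ref_a, ref_b, ref_c = (0.0, 0.0), (10.0, 0.0), (0.0, 10.0)
loc1 = trilaterate(ref_a, 5.0, ref_b, math.sqrt(65), ref_c, math.sqrt(45))

# Time instant 2: only ref_b and ref_c are heard. The previous position
# loc1 is used as the third reference; the distance moved since then
# (3.0 here) is an assumed estimate, e.g. speed multiplied by time.
loc2 = trilaterate(loc1, 3.0, ref_b, math.sqrt(32), ref_c, math.sqrt(72))
print(loc1, loc2)
```

Any error in `loc1` or in the assumed distance moved carries over into `loc2`, which is the usual cumulative behavior of dead reckoning.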

Animal navigation

In studies of animal navigation, dead reckoning is more commonly (though not exclusively) known as path integration. Animals use it to estimate their current location based on their movements from their last known location. Animals such as ants, rodents, and geese have been shown to track their locations continuously relative to a starting point and to return to it, an important skill for foragers with a fixed home.

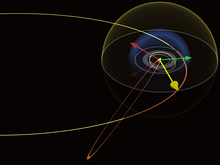

Vehicular navigation

Marine

In marine navigation a "dead" reckoning plot generally does not take into account the effect of currents or wind. Aboard ship a dead reckoning plot is considered important in evaluating position information and planning the movement of the vessel.

Dead reckoning begins with a known position, or fix, which is then advanced, mathematically or directly on the chart, by means of recorded heading, speed, and time. Speed can be determined by many methods. Before modern instrumentation, it was determined aboard ship using a chip log. More modern methods include the pit log, referencing engine speed (e.g. in rpm) against a table of total displacement (for ships), or referencing indicated airspeed fed by the pressure from a pitot tube (for aircraft). The indicated airspeed is converted to an equivalent airspeed based upon known atmospheric conditions and measured errors in the indicated airspeed system. A naval vessel uses a device called a pit sword (rodmeter), which uses two sensors on a metal rod to measure the electromagnetic variance caused by the ship moving through water. This change is then converted to the ship's speed. Distance is determined by multiplying the speed and the time. This initial dead reckoning position can then be adjusted, by taking into account the current (known as set and drift in marine navigation), to give an estimated position. If there is no positional information available, a new dead reckoning plot may start from an estimated position. In this case subsequent dead reckoning positions will have taken into account estimated set and drift.
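Advancing a fix can be sketched numerically. The following is a minimal illustration that assumes a flat chart with positions in nautical miles east and north of the fix, and headings measured clockwise from north; the function name `advance` and all numeric values are hypothetical.

```python
import math

def advance(x_nm, y_nm, heading_deg, speed_kn, hours):
    """Advance a position (east, north in nautical miles) along a heading.

    Distance is speed multiplied by time; heading_deg is measured
    clockwise from north. A flat chart is assumed.
    """
    distance_nm = speed_kn * hours
    theta = math.radians(heading_deg)
    return (x_nm + distance_nm * math.sin(theta),
            y_nm + distance_nm * math.cos(theta))

# Dead reckoning position: from the fix, heading 090 at 12 knots for 2 hours.
dr_position = advance(0.0, 0.0, 90.0, 12.0, 2.0)

# Estimated position: apply an assumed current with set 180 (flowing
# toward the south) and drift 1.5 knots over the same 2 hours.
estimated_position = advance(dr_position[0], dr_position[1], 180.0, 1.5, 2.0)
print(dr_position, estimated_position)
```

Here the dead reckoning position ignores the current, while the estimated position applies set and drift as a second displacement over the same interval.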

Dead reckoning positions are calculated at predetermined intervals, and are maintained between fixes. The duration of the interval varies. Factors including one's speed made good, the nature of heading and other course changes, and the navigator's judgment determine when dead reckoning positions are calculated.

Before the 18th-century development of the marine chronometer by John Harrison and the lunar distance method, dead reckoning was the primary method of determining longitude available to mariners such as Christopher Columbus and John Cabot on their trans-Atlantic voyages. Tools such as the traverse board were developed to enable even illiterate crew members to collect the data needed for dead reckoning. Polynesian navigation, however, uses different wayfinding techniques.

Air

On 14 June 1919, John Alcock and Arthur Brown took off from Lester's Field in St. John's, Newfoundland, in a Vickers Vimy. They navigated across the Atlantic Ocean by dead reckoning and landed in County Galway, Ireland, at 8:40 a.m. on 15 June, completing the first non-stop transatlantic flight.

On 21 May 1927 Charles Lindbergh landed in Paris, France after a successful non-stop flight from the United States in the single-engined Spirit of St. Louis. As the aircraft was equipped with very basic instruments, Lindbergh used dead reckoning to navigate.

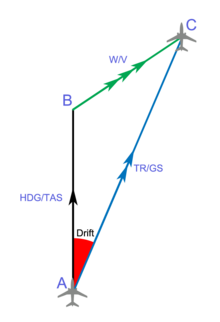

Dead reckoning in the air is similar to dead reckoning on the sea, but slightly more complicated. The aircraft's performance is affected by the density of the air it moves through, as well as by winds, weight, and power settings.

The basic formula for DR is Distance = Speed × Time. An aircraft flying at 250 knots airspeed for 2 hours has flown 500 nautical miles through the air. The wind triangle is used to calculate the effects of wind on heading and airspeed to obtain a magnetic heading to steer and the speed over the ground (groundspeed). Printed tables, formulae, or an E6B flight computer are used to calculate the effects of air density on aircraft rate of climb, rate of fuel burn, and airspeed.
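The wind triangle can be solved with basic trigonometry. The sketch below is one common formulation, not a replacement for an E6B or published tables: it works in true directions (converting to a magnetic heading would additionally require the local magnetic variation, which is omitted), and the function name and sample values are hypothetical.

```python
import math

def wind_triangle(tas_kn, course_deg, wind_from_deg, wind_kn):
    """Return (heading_deg, groundspeed_kn) needed to track a course.

    tas_kn        true airspeed in knots
    course_deg    desired track over the ground, degrees true
    wind_from_deg direction the wind blows from, degrees true
    wind_kn       wind speed in knots
    """
    rel = math.radians(wind_from_deg - course_deg)
    ratio = wind_kn / tas_kn * math.sin(rel)
    if abs(ratio) > 1.0:
        raise ValueError("crosswind too strong to hold this course")
    wca = math.asin(ratio)  # wind correction angle, radians
    heading_deg = (course_deg + math.degrees(wca)) % 360.0
    groundspeed_kn = tas_kn * math.cos(wca) - wind_kn * math.cos(rel)
    return heading_deg, groundspeed_kn

# Distance through the air: 250 knots for 2 hours.
air_distance_nm = 250 * 2

# Hypothetical case: course 000, wind from 090 at 20 knots, 100 knots TAS.
heading, groundspeed = wind_triangle(100.0, 0.0, 90.0, 20.0)
print(air_distance_nm, round(heading, 1), round(groundspeed, 1))
```

In this case the aircraft must turn about 11.5 degrees toward the wind, and the groundspeed falls slightly below the airspeed because part of the airspeed is spent countering the crosswind.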

A course line is drawn on the aeronautical chart along with estimated positions at fixed intervals (say every half hour). Visual observations of ground features are used to obtain fixes. By comparing the fix and the estimated position, corrections are made to the aircraft's heading and groundspeed.

Dead reckoning is on the curriculum for VFR (visual flight rules, or basic level) pilots worldwide. It is an ICAO requirement and is taught regardless of whether the aircraft has navigation aids such as GPS, ADF, and VOR. Many flying training schools will prevent students from using electronic aids until they have mastered dead reckoning.

Inertial navigation systems (INSes), which are nearly universal on more advanced aircraft, use dead reckoning internally. The INS provides reliable navigation capability under virtually any conditions, without the need for external navigation references, although it is still prone to slight errors.

Automotive

Dead reckoning is today implemented in some high-end automotive navigation systems in order to overcome the limitations of GPS/GNSS technology alone. Satellite microwave signals are unavailable in parking garages and tunnels, and often severely degraded in urban canyons and near trees due to blocked lines of sight to the satellites or multipath propagation. In a dead-reckoning navigation system, the car is equipped with sensors that record wheel rotations and steering direction; together with the known wheel circumference, these give the distance traveled and the changes in heading. These sensors are often already present in cars for other purposes (anti-lock braking system, electronic stability control) and can be read by the navigation system from the controller-area network bus. The navigation system then uses a Kalman filter to integrate the always-available sensor data with the accurate but occasionally unavailable position information from the satellite data into a combined position fix.
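The fusion step can be illustrated with a deliberately simplified Kalman filter. The sketch below tracks only a single coordinate (distance along a road), whereas a production system would track a multi-dimensional state; the function name `kalman_step` and the noise variances are assumptions made for this illustration.

```python
def kalman_step(x, p, odometry_delta, q, gps=None, r=None):
    """One predict/update cycle of a scalar Kalman filter.

    x, p            current position estimate and its variance
    odometry_delta  displacement from wheel sensors since the last step
    q               variance added by each odometry step (assumed)
    gps, r          optional satellite position and its variance (assumed)
    """
    # Predict: dead reckoning from wheel sensors; uncertainty grows.
    x = x + odometry_delta
    p = p + q
    # Update: blend in the satellite position only when one is available.
    if gps is not None:
        k = p / (p + r)          # Kalman gain
        x = x + k * (gps - x)
        p = (1.0 - k) * p
    return x, p

x, p = 0.0, 1.0
# Two steps inside a tunnel: no satellite data, odometry only.
x, p = kalman_step(x, p, 10.0, q=0.25)
x, p = kalman_step(x, p, 10.0, q=0.25)
p_in_tunnel = p
# Leaving the tunnel: a satellite position becomes available again.
x, p = kalman_step(x, p, 10.0, q=0.25, gps=31.0, r=4.0)
print(round(x, 3), round(p, 3), round(p_in_tunnel, 3))
```

The variance rises while only odometry is available and drops once a satellite position is blended in, which mirrors the behavior described above.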

Autonomous navigation in robotics

Dead reckoning is utilized in some robotic applications. It is usually used to reduce the need for sensing technology, such as ultrasonic sensors, GPS, or placement of some linear and rotary encoders, in an autonomous robot, thus greatly reducing cost and complexity at the expense of performance and repeatability. The proper utilization of dead reckoning in this sense would be to supply a known percentage of electrical power or hydraulic pressure to the robot's drive motors over a given amount of time from a general starting point. Dead reckoning is not totally accurate, which can lead to errors in distance estimates ranging from a few millimeters (in CNC machining) to kilometers (in UAVs), based upon the duration of the run, the speed of the robot, the length of the run, and several other factors.

Pedestrian dead reckoning

With the increased sensor offering in smartphones, the built-in accelerometer can be used as a pedometer and the built-in magnetometer as a compass heading provider. Pedestrian dead reckoning (PDR) can be used to supplement other navigation methods in a similar way to automotive navigation, or to extend navigation into areas where other navigation systems are unavailable.

In a simple implementation, the user holds their phone in front of them and each step causes position to move forward a fixed distance in the direction measured by the compass. Accuracy is limited by the sensor precision, magnetic disturbances inside structures, and unknown variables such as carrying position and stride length. Another challenge is differentiating walking from running, and recognizing movements like bicycling, climbing stairs, or riding an elevator.
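The simple implementation described above can be sketched as follows. Step detection is assumed to have already happened, so the input is just one compass heading per detected step; the function name `pdr_track` and the fixed stride of 0.7 m are hypothetical.

```python
import math

def pdr_track(start, headings_deg, stride_m=0.7):
    """Advance a position by one fixed-length stride per detected step.

    start         (east, north) starting position in metres
    headings_deg  compass heading at each step, clockwise from north
    stride_m      assumed stride length (a real system must estimate it)
    """
    x, y = start
    track = [(x, y)]
    for heading in headings_deg:
        theta = math.radians(heading)
        x += stride_m * math.sin(theta)
        y += stride_m * math.cos(theta)
        track.append((x, y))
    return track

# Ten steps heading north, then ten steps heading east.
track = pdr_track((0.0, 0.0), [0.0] * 10 + [90.0] * 10)
print(track[-1])
```

Any bias in the compass heading or in the assumed stride length is applied at every step, so the position error grows with the number of steps taken.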

Many custom PDR systems predate phone-based ones. While a pedometer can only be used to measure linear distance traveled, PDR systems have an embedded magnetometer for heading measurement. Custom PDR systems can take many forms including special boots, belts, and watches, where the variability of carrying position has been minimized to better utilize magnetometer heading. True dead reckoning is fairly complicated, as it is not only important to minimize basic drift, but also to handle different carrying scenarios and movements, as well as hardware differences across phone models.

Directional dead reckoning

The south-pointing chariot was an ancient Chinese device consisting of a two-wheeled horse-drawn vehicle which carried a pointer that was intended always to aim to the south, no matter how the chariot turned. The chariot pre-dated the navigational use of the magnetic compass, and could not detect the direction that was south. Instead it used a kind of directional dead reckoning: at the start of a journey, the pointer was aimed southward by hand, using local knowledge or astronomical observations, e.g. of the Pole Star. Then, as it traveled, a mechanism possibly containing differential gears used the different rotational speeds of the two wheels to turn the pointer relative to the body of the chariot by the angle of turns made (subject to available mechanical accuracy), keeping the pointer aiming in its original direction, to the south. Errors, as always with dead reckoning, would accumulate as distance traveled increased.
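The geometry behind this can be sketched in a few lines. For a two-wheeled vehicle, the change in heading equals the difference in distance traveled by the two wheels divided by the distance between them. The sketch below uses that relation to show how a small mismatch between the true wheel separation and the one the mechanism is built for turns into a pointer error; all names and dimensions are hypothetical.

```python
import math

def heading_change_rad(left_m, right_m, track_width_m):
    """Clockwise turn of the body when the left wheel travels further."""
    return (left_m - right_m) / track_width_m

def pointer_error_deg(left_m, right_m, actual_width_m, assumed_width_m):
    """Pointer drift when the gearing assumes a slightly wrong wheel separation.

    The body really turns according to actual_width_m, while the
    mechanism counter-rotates the pointer as if it were assumed_width_m.
    """
    actual_turn = heading_change_rad(left_m, right_m, actual_width_m)
    compensation = heading_change_rad(left_m, right_m, assumed_width_m)
    return math.degrees(actual_turn - compensation)

# Hypothetical chariot: wheels really 1.5 m apart, gearing built for 1.515 m
# (a 1% mismatch). The right wheel stays still while the left wheel travels
# far enough to turn the body through one full circle.
actual_width = 1.5
left_travel = 2 * math.pi * actual_width
error = pointer_error_deg(left_travel, 0.0, actual_width, 1.515)
print(round(error, 2), "degrees of pointer error per full turn")
```

With a perfectly matched mechanism the error would be zero; with the 1% mismatch assumed here the pointer drifts by a few degrees for every full circle turned, and the drift adds up over a journey.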

For networked games

Networked games and simulation tools routinely use dead reckoning to predict where an actor should be right now, using its last known kinematic state (position, velocity, acceleration, orientation, and angular velocity). This is primarily needed because it is impractical to send network updates at the rate at which most games run, 60 Hz. The basic solution starts by projecting into the future using linear physics, where $P_0$, $V_0$, and $A_0$ are the last known position, velocity, and acceleration, and $T_t$ is the time elapsed since that state was received:

$$P_t = P_0 + V_0 T_t + \tfrac{1}{2} A_0 T_t^2$$

This formula is used to move the object until a new update is received over the network. At that point, the problem is that there are now two kinematic states: the currently estimated position and the just received, actual position. Resolving these two states in a believable way can be quite complex. One approach is to create a curve (e.g. cubic Bézier splines, centripetal Catmull–Rom splines, and Hermite curves) between the two states while still projecting into the future. Another technique is to use projective velocity blending, which is the blending of two projections (last known and current) where the current projection uses a blending between the last known and current velocity over a set time.

In the following equations, primed symbols denote the last known server-side values and unprimed symbols denote client-side values:

$$V_b = V_0 + (V'_0 - V_0)\,\hat{T}$$

$$P_t = P_0 + V_b T_t + \tfrac{1}{2} A'_0 T_t^2$$

$$P'_t = P'_0 + V'_0 T_t + \tfrac{1}{2} A'_0 T_t^2$$

$$Pos = P_t + (P'_t - P_t)\,\hat{T}$$

The first equation calculates a blended velocity $V_b$ given the client-side velocity $V_0$ at the time of the last server update and the last known server-side velocity $V'_0$. This essentially blends from the client-side velocity towards the server-side velocity for a smooth transition. Note that $\hat{T}$ should go from zero (at the time of the server update) to one (at the time at which the next update should be arriving). A late server update is unproblematic as long as $\hat{T}$ remains at one.

Next, two positions are calculated. Firstly, the blended velocity $V_b$ and the last known server-side acceleration $A'_0$ are used to calculate $P_t$. This is a position which is projected from the client-side start position $P_0$ based on $T_t$, the time which has passed since the last server update. Secondly, the same equation is used with the last known server-side parameters to calculate the position projected from the last known server-side position $P'_0$ and velocity $V'_0$, resulting in $P'_t$.

Finally, the new position to display on the client, $Pos$, is the result of interpolating from the projected position based on client information, $P_t$, towards the projected position based on the last known server information, $P'_t$. The resulting movement smoothly resolves the discrepancy between client-side and server-side information, even if this server-side information arrives infrequently or inconsistently. It is also free of the oscillations from which spline-based interpolation may suffer.
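Projective velocity blending, as described in the preceding paragraphs, can be sketched as follows. This is an illustrative reading of the description rather than code from any particular engine: the function and parameter names are hypothetical, positions and velocities are plain tuples, and the normalized blend time is clamped to the range zero to one so that a late server update leaves it at one.

```python
def project(position, velocity, acceleration, t):
    """Linear-physics projection: p + v*t + 0.5*a*t^2, per component."""
    return tuple(p + v * t + 0.5 * a * t * t
                 for p, v, a in zip(position, velocity, acceleration))

def lerp(a, b, s):
    """Interpolate from a (s = 0) towards b (s = 1), per component."""
    return tuple(x + (y - x) * s for x, y in zip(a, b))

def blended_position(client_pos, client_vel, server_pos, server_vel,
                     server_acc, t, update_interval):
    """Position to display t seconds after the last server update."""
    # Normalized time: 0 at the update, 1 when the next one is due.
    t_hat = min(max(t / update_interval, 0.0), 1.0)
    # Blend the client velocity towards the server velocity.
    blended_vel = lerp(client_vel, server_vel, t_hat)
    # Project from the client start position and from the server state.
    from_client = project(client_pos, blended_vel, server_acc, t)
    from_server = project(server_pos, server_vel, server_acc, t)
    # Interpolate from the client projection towards the server projection.
    return lerp(from_client, from_server, t_hat)

# Hypothetical update: the client believed (0, 0) moving at 1 unit/s, while
# the server reports (1, 0) moving at 2 units/s; updates arrive every 0.1 s.
args = ((0.0, 0.0), (1.0, 0.0), (1.0, 0.0), (2.0, 0.0), (0.0, 0.0))
at_update = blended_position(*args, t=0.0, update_interval=0.1)
when_next_due = blended_position(*args, t=0.1, update_interval=0.1)
print(at_update, when_next_due)
```

At the moment of the update the displayed position is still the client's own, so nothing jumps; by the time the next update is due it has converged on the server-side projection.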

Computer science

In computer science, dead-reckoning refers to navigating an array data structure using indexes. Since every array element has the same size, it is possible to directly access one array element by knowing any position in the array.

Given the following array:

| A | B | C | D | E |

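knowing the memory address where the array starts, it is easy to compute the memory address of D:

address of D = address of start of array + (size of array element × array index of D)

Likewise, knowing D's memory address, it is easy to compute the memory address of B:

address of B = address of D − (size of array element × (array index of D − array index of B))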

This property is particularly important for performance when used in conjunction with arrays of structures because data can be directly accessed, without going through a pointer dereference.
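The address arithmetic can be tried out with Python's `ctypes` module. The sketch below is only an illustration: it stores the letters as 32-bit integers so that the element size is larger than one byte, and it uses zero-based indexes (A is 0, B is 1, D is 3).

```python
import ctypes

ELEMENT = ctypes.c_int32                        # every element has the same size
letters = "ABCDE"
array = (ELEMENT * len(letters))(*[ord(c) for c in letters])

start_address = ctypes.addressof(array)         # where the array starts
element_size = ctypes.sizeof(ELEMENT)

INDEX_B, INDEX_D = 1, 3                         # zero-based indexes

# From the start of the array to D.
address_of_d = start_address + element_size * INDEX_D
# From D back to B, without going via the start of the array.
address_of_b = address_of_d - element_size * (INDEX_D - INDEX_B)

# Read the values stored at the computed addresses.
value_at_d = chr(ELEMENT.from_address(address_of_d).value)
value_at_b = chr(ELEMENT.from_address(address_of_b).value)
print(value_at_d, value_at_b)
```

Because the offsets depend only on the element size and the index difference, either address can be computed without inspecting any of the elements in between.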