| Positron emission tomography | |

|---|---|

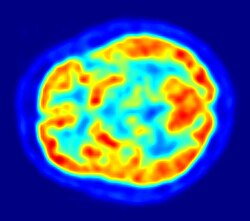

Cardiac PET scanner Positron emission tomography (PET) is a functional imaging technique that uses radioactive substances known as radiotracers to visualize and measure changes in metabolic processes, and in other physiological activities including blood flow, regional chemical composition, and absorption. Different tracers are used for various imaging purposes, depending on the target process within the body. |

For example:

- Fluorodeoxyglucose ([18F]FDG or FDG) is commonly used to detect cancer;

- [18F]Sodium fluoride (Na18F) is widely used for detecting bone formation;

- Oxygen-15 (15O) is sometimes used to measure blood flow.

PET is a common medical scintigraphy technique used in nuclear medicine. A radiopharmaceutical (a radioisotope attached to a drug) is injected into the body as a tracer. When the radiopharmaceutical undergoes beta plus decay, a positron is emitted, and when the positron interacts with an ordinary electron, the two particles annihilate and two gamma rays are emitted in opposite directions. These gamma rays are detected by two gamma cameras to form a three-dimensional image.

PET scanners can incorporate a computed tomography scanner (CT) and are known as PET–CT scanners. PET scan images can be reconstructed using a CT scan performed using one scanner during the same session.

One of the disadvantages of a PET scanner is its high initial cost and ongoing operating costs.

Uses

PET is both a medical and research tool used in pre-clinical and clinical settings. It is used heavily in the imaging of tumors and the search for metastases within the field of clinical oncology, and for the clinical diagnosis of certain diffuse brain diseases such as those causing various types of dementias. PET is a valuable research tool for enhancing our knowledge of the normal human brain and heart function, and for supporting drug development. PET is also used in pre-clinical studies using animals. It allows repeated investigations into the same subjects over time, so that subjects can act as their own control, which substantially reduces the number of animals required for a given study. This approach allows research studies to reduce the sample size needed while increasing the statistical quality of their results.

Physiological processes lead to anatomical changes in the body. Since PET is capable of detecting biochemical processes as well as expression of some proteins, PET can provide molecular-level information well before any anatomic changes are visible. PET scanning does this by using radiolabelled molecular probes that have different rates of uptake depending on the type and function of tissue involved. Regional tracer uptake in various anatomic structures can be visualized and relatively quantified in terms of injected positron emitter within a PET scan.

PET imaging is best performed using a dedicated PET scanner. It is also possible to acquire PET images using a conventional dual-head gamma camera fitted with a coincidence detector. The quality of gamma-camera PET imaging is lower, and the scans take longer to acquire. However, this method offers a low-cost on-site solution for institutions with low PET scanning demand. An alternative would be to refer these patients to another center or to rely on a visit by a mobile scanner.

Alternative methods of medical imaging include single-photon emission computed tomography (SPECT), computed tomography (CT), magnetic resonance imaging (MRI) and functional magnetic resonance imaging (fMRI), and ultrasound. SPECT is an imaging technique similar to PET that uses radioligands to detect molecules in the body. SPECT is less expensive than PET but provides inferior image quality.

Oncology

PET scanning with the radiotracer [18F]fluorodeoxyglucose (FDG) is widely used in clinical oncology. FDG is a glucose analog that is taken up by glucose-using cells and phosphorylated by hexokinase (whose mitochondrial form is significantly elevated in rapidly growing malignant tumors). Metabolic trapping of the radioactive glucose molecule allows the PET scan to be utilized. The concentrations of imaged FDG tracer indicate tissue metabolic activity as it corresponds to the regional glucose uptake. FDG is used to explore the possibility of cancer spreading to other body sites (cancer metastasis). These FDG PET scans for detecting cancer metastasis are the most common in standard medical care (representing 90% of current scans). The same tracer may also be used for the diagnosis of types of dementia. Less often, other radioactive tracers, usually but not always labelled with fluorine-18 (18F), are used to image the tissue concentration of different kinds of molecules of interest inside the body.

A typical dose of FDG used in an oncological scan has an effective radiation dose of 7.6 mSv. Because the hydroxy group that is replaced by fluorine-18 to generate FDG is required for the next step in glucose metabolism in all cells, no further reactions occur in FDG. Furthermore, most tissues (with the notable exception of liver and kidneys) cannot remove the phosphate added by hexokinase. This means that FDG is trapped in any cell that takes it up until it decays, since phosphorylated sugars, due to their ionic charge, cannot exit from the cell. This results in intense radiolabeling of tissues with high glucose uptake, such as the normal brain, liver, kidneys, and most cancers, which have a higher glucose uptake than most normal tissue due to the Warburg effect. As a result, FDG-PET can be used for diagnosis, staging, and monitoring treatment of cancers, particularly in Hodgkin lymphoma, non-Hodgkin lymphoma, and lung cancer.

A 2020 review of research on the use of PET for Hodgkin lymphoma found evidence that negative findings in interim PET scans are linked to higher overall survival and progression-free survival; however, the certainty of the available evidence was moderate for survival, and very low for progression-free survival.

A few other isotopes and radiotracers are slowly being introduced into oncology for specific purposes. For example, 11C-labelled metomidate (11C-metomidate) has been used to detect tumors of adrenocortical origin. Also, fluorodopa (FDOPA) PET/CT (also called F-18-DOPA PET/CT) has proven to be a more sensitive alternative than the iobenguane (MIBG) scan for finding and localizing pheochromocytoma.

Neuroimaging

Neurology

PET imaging with oxygen-15 indirectly measures blood flow to the brain. In this method, increased radioactivity signal indicates increased blood flow which is assumed to correlate with increased brain activity. Because of its two-minute half-life, oxygen-15 must be piped directly from a medical cyclotron for such uses, which is difficult.

PET imaging with FDG takes advantage of the fact that the brain is normally a rapid user of glucose. Standard FDG PET of the brain measures regional glucose use and can be used in neuropathological diagnosis.

Brain pathologies such as Alzheimer's disease (AD) greatly decrease brain metabolism of both glucose and oxygen in tandem. Therefore FDG PET of the brain may also be used to successfully differentiate Alzheimer's disease from other dementing processes, and also to make early diagnoses of Alzheimer's disease. The advantage of FDG PET for these uses is its much wider availability. Radioactive tracers used for Alzheimer's include the fluorine-18 based florbetapir, flutemetamol and florbetaben, as well as the carbon-11 based Pittsburgh compound B (PiB); all are used to detect amyloid-beta plaques, a potential biomarker for Alzheimer's in the brain.

PET imaging with FDG can also be used for localization of "seizure focus". A seizure focus will appear as hypometabolic during an interictal scan. Several radiotracers (i.e. radioligands) have been developed for PET that are ligands for specific neuroreceptor subtypes such as [11C]raclopride, [18F]fallypride and [18F]desmethoxyfallypride for dopamine D2/D3 receptors; [11C]McN5652 and [11C]DASB for serotonin transporters; [18F]mefway for serotonin 5HT1A receptors; and [18F]nifene for nicotinic acetylcholine receptors or enzyme substrates (e.g. 6-FDOPA for the AADC enzyme). These agents permit the visualization of neuroreceptor pools in the context of a plurality of neuropsychiatric and neurologic illnesses.

PET may also be used for the diagnosis of hippocampal sclerosis, which causes epilepsy. FDG, and the less common tracers flumazenil and MPPF have been explored for this purpose. If the sclerosis is unilateral (right hippocampus or left hippocampus), FDG uptake can be compared with the healthy side. Even if the diagnosis is difficult with MRI, it may be diagnosed with PET.

The development of a number of novel probes for non-invasive, in-vivo PET imaging of neuroaggregate in human brain has brought amyloid imaging close to clinical use. The earliest amyloid imaging probes included [18F]FDDNP, developed at the University of California, Los Angeles, and Pittsburgh compound B (PiB), developed at the University of Pittsburgh. These probes permit the visualization of amyloid plaques in the brains of Alzheimer's patients and could assist clinicians in making a positive clinical diagnosis of AD pre-mortem and aid in the development of novel anti-amyloid therapies. [11C]PMP (N-[11C]methylpiperidin-4-yl propionate) is a novel radiopharmaceutical used in PET imaging to determine the activity of the acetylcholinergic neurotransmitter system by acting as a substrate for acetylcholinesterase. Post-mortem examination of AD patients has shown decreased levels of acetylcholinesterase. [11C]PMP is used to map the acetylcholinesterase activity in the brain, which could allow for premortem diagnoses of AD and help to monitor AD treatments. Avid Radiopharmaceuticals has developed and commercialized a compound called florbetapir that uses the longer-lasting radionuclide fluorine-18 to detect amyloid plaques using PET scans.

Neuropsychology or cognitive neuroscience

PET is used to examine links between specific psychological processes or disorders and brain activity.

Psychiatry

Numerous compounds that bind selectively to neuroreceptors of interest in biological psychiatry have been radiolabeled with C-11 or F-18. Radioligands that bind to dopamine receptors (D1, D2, reuptake transporter), serotonin receptors (5HT1A, 5HT2A, reuptake transporter), opioid receptors (mu and kappa), cholinergic receptors (nicotinic and muscarinic) and other sites have been used successfully in studies with human subjects. Studies have been performed examining the state of these receptors in patients compared to healthy controls in schizophrenia, substance abuse, mood disorders and other psychiatric conditions.

Stereotactic surgery and radiosurgery

PET can also be used in image guided surgery for the treatment of intracranial tumors, arteriovenous malformations and other surgically treatable conditions.

Cardiology

In cardiology and in the study of atherosclerosis and vascular disease, FDG PET can help in identifying hibernating myocardium. However, the cost-effectiveness of PET for this role versus SPECT is unclear. FDG PET imaging of atherosclerosis to detect patients at risk of stroke is also feasible, and it can help test the efficacy of novel anti-atherosclerosis therapies.

Infectious diseases

Imaging infections with molecular imaging technologies can improve diagnosis and treatment follow-up. Clinically, PET has been widely used to image bacterial infections using FDG to identify the infection-associated inflammatory response. Three different PET contrast agents have been developed to image bacterial infections in vivo: [18F]maltose, [18F]maltohexaose, and [18F]2-fluorodeoxysorbitol (FDS). FDS has the added benefit of being able to target only Enterobacteriaceae.

Bio-distribution studies

In pre-clinical trials, a new drug can be radiolabeled and injected into animals. Such scans are referred to as biodistribution studies. The information regarding drug uptake, retention and elimination over time can be obtained quickly and cost-effectively compared to the older technique of killing and dissecting the animals. Commonly, drug occupancy at a purported site of action can be inferred indirectly by competition studies between unlabeled drug and radiolabeled compounds to bind with specificity to the site. A single radioligand can be used this way to test many potential drug candidates for the same target. A related technique involves scanning with radioligands that compete with an endogenous (naturally occurring) substance at a given receptor to demonstrate that a drug causes the release of the natural substance.

Small animal imaging

A miniature animal PET has been constructed that is small enough for a fully conscious rat to be scanned. This RatCAP (rat conscious animal PET) allows animals to be scanned without the confounding effects of anesthesia. PET scanners designed specifically for imaging rodents, often referred to as microPET, as well as scanners for small primates, are marketed for academic and pharmaceutical research. The scanners are based on microminiature scintillators and amplified avalanche photodiodes (APDs) through a system that uses single-chip silicon photomultipliers.

In 2018 the UC Davis School of Veterinary Medicine became the first veterinary center to employ a small clinical PET scanner for clinical (rather than research) animal diagnosis. Because of cost as well as the marginal utility of detecting cancer metastases in companion animals (the primary use of this modality), veterinary PET scanning is expected to be rarely available in the immediate future.

Musculo-skeletal imaging

PET imaging has been used for imaging muscles and bones. FDG is the most commonly used tracer for imaging muscles, and NaF-F18 is the most widely used tracer for imaging bones.

Muscles

PET is a feasible technique for studying skeletal muscles during exercise. Also, unlike techniques such as electromyography, which can be used only on superficial muscles directly under the skin, PET can provide muscle activation data about deep-lying muscles (such as the vastus intermedius and the gluteus minimus). However, a disadvantage is that PET provides no timing information about muscle activation because it has to be measured after the exercise is completed. This is due to the time it takes for FDG to accumulate in the activated muscles.

Bones

Together with [18F]sodium fluoride, PET for bone imaging has been in use for 60 years for measuring regional bone metabolism and blood flow using static and dynamic scans. Researchers have recently started using [18F]sodium fluoride to study bone metastasis as well.

Safety

PET scanning is non-invasive, but it does involve exposure to ionizing radiation. FDG, which is now the standard radiotracer used for PET neuroimaging and cancer patient management, has an effective radiation dose of 14 mSv.

The amount of radiation in FDG is similar to the effective dose of spending one year in the American city of Denver, Colorado (12.4 mSv/year). For comparison, radiation dosage for other medical procedures ranges from 0.02 mSv for a chest X-ray to 6.5–8 mSv for a CT scan of the chest. Average civil aircrews are exposed to 3 mSv/year, and the whole body occupational dose limit for nuclear energy workers in the US is 50 mSv/year. For scale, see Orders of magnitude (radiation).

For PET–CT scanning, the radiation exposure may be substantial—around 23–26 mSv (for a 70 kg person—dose is likely to be higher for higher body weights).

Operation

Radionuclides and radiotracers

| Isotope | 11C | 13N | 15O | 18F | 68Ga | 64Cu | 52Mn | 55Co | 89Zr | 82Rb |
|---|---|---|---|---|---|---|---|---|---|---|
| Half-life | 20 min | 10 min | 2 min | 110 min | 67.81 min | 12.7 h | 5.6 d | 17.5 h | 78.4 h | 1.3 min |

Radionuclides are incorporated either into compounds normally used by the body such as glucose (or glucose analogues), water, or ammonia, or into molecules that bind to receptors or other sites of drug action. Such labelled compounds are known as radiotracers. PET technology can be used to trace the biologic pathway of any compound in living humans (and many other species as well), provided it can be radiolabeled with a PET isotope. Thus, the specific processes that can be probed with PET are virtually limitless, and radiotracers for new target molecules and processes are continuing to be synthesized. As of this writing there are already dozens in clinical use and hundreds applied in research. As of 2020, by far the most commonly used radiotracer in clinical PET scanning was the carbohydrate derivative FDG. This radiotracer is used in essentially all scans for oncology and most scans in neurology, and thus makes up the large majority of radiotracer (>95%) used in PET and PET–CT scanning.

Due to the short half-lives of most positron-emitting radioisotopes, the radiotracers have traditionally been produced using a cyclotron in close proximity to the PET imaging facility. The half-life of fluorine-18 is long enough that radiotracers labeled with fluorine-18 can be manufactured commercially at offsite locations and shipped to imaging centers. Recently rubidium-82 generators have become commercially available. These contain strontium-82, which decays by electron capture to produce positron-emitting rubidium-82.

The use of positron-emitting isotopes of metals in PET scans has been reviewed, including elements not listed above, such as lanthanides.

Immuno-PET

The isotope 89Zr has been applied to the tracking and quantification of molecular antibodies with PET cameras (a method called "immuno-PET").

The biological half-life of antibodies is typically on the order of days (daclizumab and erenumab are examples). To visualize and quantify the distribution of such antibodies in the body, the PET isotope 89Zr is well suited because its physical half-life (78.4 h, per the table above) matches the typical biological half-life of antibodies.

Emission

To conduct the scan, a short-lived radioactive tracer isotope is injected into the living subject (usually into blood circulation). Each tracer atom has been chemically incorporated into a biologically active molecule. There is a waiting period while the active molecule becomes concentrated in tissues of interest. Then the subject is placed in the imaging scanner. The molecule most commonly used for this purpose is FDG, a sugar, for which the waiting period is typically an hour. During the scan, a record of tissue concentration is made as the tracer decays.

As the radioisotope undergoes positron emission decay (also known as positive beta decay), it emits a positron, an antiparticle of the electron with opposite charge. The emitted positron travels in tissue for a short distance (typically less than 1 mm, but dependent on the isotope), during which time it loses kinetic energy, until it decelerates to a point where it can interact with an electron. The encounter annihilates both electron and positron, producing a pair of annihilation (gamma) photons moving in approximately opposite directions. These are detected when they reach a scintillator in the scanning device, creating a burst of light which is detected by photomultiplier tubes or silicon avalanche photodiodes (Si APD). The technique depends on simultaneous or coincident detection of the pair of photons moving in approximately opposite directions (they would be exactly opposite in their center of mass frame, but the scanner has no way to know this, and so has a built-in slight direction-error tolerance). Photons that do not arrive in temporal "pairs" (i.e. within a timing-window of a few nanoseconds) are ignored.
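The coincidence logic described above can be illustrated with a minimal sketch. The event layout (`(time_ns, detector_id)` tuples), the function name `find_coincidences` and the 6 ns default window are assumptions chosen for illustration; a real scanner does this in hardware or firmware and treats multiple hits within one window in more elaborate ways.

```python
def find_coincidences(singles, window_ns=6.0):
    """Pair single-photon detections that arrive within `window_ns` of each other.

    `singles` is a list of (time_ns, detector_id) tuples. The data layout and
    the default window are illustrative assumptions, not a scanner specification.
    """
    events = sorted(singles)  # order by arrival time
    pairs = []
    i = 0
    while i < len(events) - 1:
        t0, d0 = events[i]
        t1, d1 = events[i + 1]
        if t1 - t0 <= window_ns and d0 != d1:
            pairs.append((d0, d1, t1 - t0))  # accepted coincidence
            i += 2  # both photons are consumed
        else:
            i += 1  # unpaired photon is ignored
    return pairs


# Hypothetical singles stream: two true pairs and two unpaired photons.
singles = [(0.0, 3), (1.2, 17), (50.0, 5), (120.0, 8), (123.5, 22), (300.0, 1)]
coincidences = find_coincidences(singles)
```

Each returned tuple names the two detectors that define a line of response, plus the arrival-time difference that a time-of-flight system would go on to use.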

Localization of the positron annihilation event

The most significant fraction of electron–positron annihilations results in two 511 keV gamma photons being emitted at almost 180 degrees to each other. Hence, it is possible to localize their source along a straight line of coincidence (also called the line of response, or LOR). In practice, the LOR has a non-zero width as the emitted photons are not exactly 180 degrees apart. If the resolving time of the detectors is less than 500 picoseconds rather than about 10 nanoseconds, it is possible to localize the event to a segment of a chord, whose length is determined by the detector timing resolution. As the timing resolution improves, the signal-to-noise ratio (SNR) of the image will improve, requiring fewer events to achieve the same image quality. This technology is not yet common, but it is available on some new systems.
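The link between timing resolution and the length of the localized segment follows from the speed of light: a time difference Δt between the two detections places the event at a distance c·Δt/2 from the midpoint of the LOR. The sketch below works this out; the function names are hypothetical and the calculation ignores detector geometry and other sources of uncertainty.

```python
C_CM_PER_NS = 29.9792458  # speed of light in cm per nanosecond


def tof_segment_length_cm(timing_resolution_ns):
    """Length of the LOR segment to which an event can be localized."""
    return C_CM_PER_NS * timing_resolution_ns / 2.0


def tof_offset_cm(t1_ns, t2_ns):
    """Offset of the annihilation point from the LOR midpoint.

    Positive values lie toward detector 1 (the photon there arrived first).
    """
    return C_CM_PER_NS * (t2_ns - t1_ns) / 2.0


segment_500ps = tof_segment_length_cm(0.5)   # about 7.5 cm
segment_10ns = tof_segment_length_cm(10.0)   # about 150 cm, wider than a patient
```

At roughly 10 ns the segment is longer than any chord through the body, which is why such timing only identifies the LOR, whereas at 500 ps the segment is short enough to constrain the reconstruction.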

Image reconstruction

The raw data collected by a PET scanner are a list of 'coincidence events' representing near-simultaneous detection (typically, within a window of 6 to 12 nanoseconds of each other) of annihilation photons by a pair of detectors. Each coincidence event represents a line in space connecting the two detectors along which the positron emission occurred (i.e., the line of response (LOR)).

Analytical techniques, much like the reconstruction of computed tomography (CT) and single-photon emission computed tomography (SPECT) data, are commonly used, although the data set collected in PET is much poorer than CT, so reconstruction techniques are more difficult. Coincidence events can be grouped into projection images, called sinograms. The sinograms are sorted by the angle of each view and tilt (for 3D images). The sinogram images are analogous to the projections captured by CT scanners, and can be reconstructed in a similar way. The statistics of data thereby obtained are much worse than those obtained through transmission tomography. A normal PET data set has millions of counts for the whole acquisition, while the CT can reach a few billion counts. This contributes to PET images appearing "noisier" than CT. Two major sources of noise in PET are scatter (a detected pair of photons, at least one of which was deflected from its original path by interaction with matter in the field of view, leading to the pair being assigned to an incorrect LOR) and random events (photons originating from two different annihilation events but incorrectly recorded as a coincidence pair because their arrival at their respective detectors occurred within a coincidence timing window).
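As a rough illustration of how coincidence events are grouped into a sinogram, the sketch below assumes an idealized single ring of equally spaced point detectors. Each detector pair is mapped to the angle φ of the LOR's normal and its signed distance s from the ring centre, and events are counted per (φ, s) bin. The ring geometry, function names and bin counts are assumptions for illustration only.

```python
import math


def lor_to_sinogram(det_a, det_b, n_detectors, radius_cm):
    """Map a detector pair on an ideal ring to sinogram coordinates (s, phi)."""
    theta_a = 2.0 * math.pi * det_a / n_detectors
    theta_b = 2.0 * math.pi * det_b / n_detectors
    phi = 0.5 * (theta_a + theta_b)                        # angle of the LOR normal
    s = radius_cm * math.cos(0.5 * (theta_b - theta_a))   # signed distance from centre
    if phi >= math.pi:                                     # fold phi into [0, pi)
        phi -= math.pi
        s = -s
    return s, phi


def bin_sinogram(pairs, n_detectors, radius_cm, n_s_bins, n_phi_bins):
    """Histogram detector pairs into a sinogram indexed as [phi_bin][s_bin]."""
    sino = [[0] * n_s_bins for _ in range(n_phi_bins)]
    for a, b in pairs:
        s, phi = lor_to_sinogram(a, b, n_detectors, radius_cm)
        i_phi = min(int(phi / math.pi * n_phi_bins), n_phi_bins - 1)
        i_s = min(int((s + radius_cm) / (2.0 * radius_cm) * n_s_bins), n_s_bins - 1)
        sino[i_phi][i_s] += 1
    return sino


# Hypothetical 8-detector ring of radius 10 cm with three recorded coincidences.
pairs = [(0, 4), (0, 4), (1, 3)]
sino = bin_sinogram(pairs, n_detectors=8, radius_cm=10.0, n_s_bins=4, n_phi_bins=4)
```

Every row of the resulting array is one projection angle, which is what makes the data comparable to the projections acquired by a CT scanner.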

In practice, considerable pre-processing of the data is required – correction for random coincidences, estimation and subtraction of scattered photons, detector dead-time correction (after the detection of a photon, the detector must "cool down" again) and detector-sensitivity correction (for both inherent detector sensitivity and changes in sensitivity due to angle of incidence).
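One of these corrections, the estimate of random coincidences, is often approximated from the singles rates of the two detectors. The sketch below uses the standard relation for uncorrelated singles, in which the randoms rate equals the full coincidence window width times the product of the two singles rates. The function names and the numbers are hypothetical, and clipping at zero is a simplification.

```python
def randoms_rate_per_s(singles_a_per_s, singles_b_per_s, window_ns):
    """Expected accidental-coincidence rate for one detector pair.

    Assumes uncorrelated singles and that `window_ns` is the FULL width of
    the coincidence window (often written 2*tau in the literature).
    """
    return window_ns * 1e-9 * singles_a_per_s * singles_b_per_s


def subtract_randoms(prompt_counts, randoms_estimate):
    """Randoms-corrected counts, clipped at zero (a simplifying assumption)."""
    return max(prompt_counts - randoms_estimate, 0.0)


# Hypothetical detector pair: 100,000 singles/s each, 12 ns window, 60 s scan.
rate = randoms_rate_per_s(1e5, 1e5, 12.0)     # about 120 randoms per second
corrected = subtract_randoms(50_000, rate * 60.0)
```

Because the randoms rate grows with the product of the singles rates, it rises faster than the true coincidence rate as injected activity increases.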

Filtered back projection (FBP) has been frequently used to reconstruct images from the projections. This algorithm has the advantage of being simple while having a low requirement for computing resources. Disadvantages are that shot noise in the raw data is prominent in the reconstructed images, and areas of high tracer uptake tend to form streaks across the image. Also, FBP treats the data deterministically – it does not account for the inherent randomness associated with PET data, thus requiring all the pre-reconstruction corrections described above.

Statistical, likelihood-based approaches: Statistical, likelihood-based iterative expectation-maximization algorithms such as the Shepp–Vardi algorithm are now the preferred method of reconstruction. These algorithms compute an estimate of the likely distribution of annihilation events that led to the measured data, based on statistical principles. The advantage is a better noise profile and resistance to the streak artifacts common with FBP, but the disadvantage is greater computer resource requirements. A further advantage of statistical image reconstruction techniques is that the physical effects that would need to be pre-corrected for when using an analytical reconstruction algorithm, such as scattered photons, random coincidences, attenuation and detector dead-time, can be incorporated into the likelihood model being used in the reconstruction, allowing for additional noise reduction. Iterative reconstruction has also been shown to result in improvements in the resolution of the reconstructed images, since more sophisticated models of the scanner physics can be incorporated into the likelihood model than those used by analytical reconstruction methods, allowing for improved quantification of the radioactivity distribution.
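The core of the expectation-maximization update can be sketched in a few lines. This is a minimal, unregularized version for a tiny hypothetical system matrix; it leaves out the scatter, randoms and attenuation terms that the text says can be folded into the likelihood model, and it is not meant to represent any particular scanner's implementation.

```python
def mlem(system_matrix, counts, n_iter=50):
    """Maximum-likelihood EM reconstruction for Poisson count data.

    system_matrix[i][j] is the assumed probability that an emission in
    voxel j is detected on LOR i; counts[i] is the measured count on LOR i.
    """
    n_lors = len(system_matrix)
    n_vox = len(system_matrix[0])
    # Sensitivity of each voxel: total detection probability over all LORs.
    sens = [sum(system_matrix[i][j] for i in range(n_lors)) for j in range(n_vox)]
    x = [1.0] * n_vox  # uniform, strictly positive starting image
    for _ in range(n_iter):
        # Forward-project the current estimate.
        proj = [sum(system_matrix[i][j] * x[j] for j in range(n_vox))
                for i in range(n_lors)]
        # Compare measured and predicted counts on each LOR.
        ratio = [counts[i] / proj[i] if proj[i] > 0 else 0.0 for i in range(n_lors)]
        # Back-project the ratios and apply the multiplicative update.
        x = [x[j] / sens[j] * sum(system_matrix[i][j] * ratio[i] for i in range(n_lors))
             for j in range(n_vox)]
    return x


# Hypothetical 3-LOR, 2-voxel system with noiseless data from activity [4, 2].
A = [[0.5, 0.0], [0.0, 0.5], [0.25, 0.25]]
y = [2.0, 1.0, 1.5]
estimate = mlem(A, y)
```

The update is multiplicative, so a positive starting image stays non-negative, and each iteration costs one forward projection and one back projection, which is where the greater computing requirement comes from.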

Research has shown that Bayesian methods that involve a Poisson likelihood function and an appropriate prior probability (e.g., a smoothing prior leading to total variation regularization or a Laplacian distribution leading to $\ell_1$-based regularization in a wavelet or other domain), such as via Ulf Grenander's Sieve estimator or via Bayes penalty methods or via I.J. Good's roughness method, may yield superior performance to expectation-maximization-based methods which involve a Poisson likelihood function but do not involve such a prior.

Attenuation correction: Quantitative PET imaging requires attenuation correction. In systems without integrated CT, attenuation correction is based on a transmission scan using a rotating 68Ge rod source.

Transmission scans directly measure attenuation values at 511 keV. Attenuation occurs when photons emitted by the radiotracer inside the body are absorbed by intervening tissue between the detector and the emission of the photon. As different LORs must traverse different thicknesses of tissue, the photons are attenuated differentially. The result is that structures deep in the body are reconstructed as having falsely low tracer uptake. Contemporary scanners can estimate attenuation using integrated x-ray CT equipment, in place of earlier equipment that offered a crude form of CT using a gamma ray (positron emitting) source and the PET detectors.
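A useful property behind this correction is that, for a coincidence pair, the combined survival probability of the two photons depends only on the total attenuating path along the LOR, not on where along the line the annihilation occurred. The sketch below illustrates this with a uniform medium; the attenuation coefficient of about 0.096 per cm for water at 511 keV is an approximate value assumed here, and the function names are hypothetical.

```python
import math

MU_WATER_511_PER_CM = 0.096  # approximate value for water at 511 keV (assumed)


def pair_survival(mu_per_cm, depth_a_cm, depth_b_cm):
    """Probability that both photons escape a uniform medium unattenuated."""
    return math.exp(-mu_per_cm * depth_a_cm) * math.exp(-mu_per_cm * depth_b_cm)


def attenuation_correction_factor(segments):
    """Correction factor for one LOR.

    `segments` is a list of (mu_per_cm, length_cm) pieces along the whole
    chord, for example taken from a transmission or CT-derived map.
    """
    line_integral = sum(mu * length for mu, length in segments)
    return math.exp(line_integral)


# A 20 cm chord through water: emission at the centre versus near one edge.
central = pair_survival(MU_WATER_511_PER_CM, 10.0, 10.0)
off_centre = pair_survival(MU_WATER_511_PER_CM, 2.0, 18.0)
factor = attenuation_correction_factor([(MU_WATER_511_PER_CM, 20.0)])
```

Under these assumptions only about 15% of pairs survive a 20 cm chord, so the measured counts on that LOR would be multiplied by a factor of roughly 6.8. LORs through the middle of the body have the longest chords, which is why deep structures appear falsely low without correction.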

While attenuation-corrected images are generally more faithful representations, the correction process is itself susceptible to significant artifacts. As a result, both corrected and uncorrected images are always reconstructed and read together.

2D/3D reconstruction: Early PET scanners had only a single ring of detectors, hence the acquisition of data and subsequent reconstruction was restricted to a single transverse plane. More modern scanners now include multiple rings, essentially forming a cylinder of detectors.

There are two approaches to reconstructing data from such a scanner:

- Treat each ring as a separate entity, so that only coincidences within a ring are detected, the image from each ring can then be reconstructed individually (2D reconstruction), or

- Allow coincidences to be detected between rings as well as within rings, then reconstruct the entire volume together (3D).

3D techniques have better sensitivity (because more coincidences are detected and used) hence less noise, but are more sensitive to the effects of scatter and random coincidences, as well as requiring greater computer resources. The advent of sub-nanosecond timing resolution detectors affords better random coincidence rejection, thus favoring 3D image reconstruction.

Time-of-flight (TOF) PET: For modern systems with a higher time resolution (roughly 3 nanoseconds) a technique called "time-of-flight" is used to improve the overall performance. Time-of-flight PET makes use of very fast gamma-ray detectors and a data processing system that can more precisely determine the difference in time between the detection of the two photons. Because the point of origin of the annihilation event cannot be localized exactly (currently only to within 10 cm), image reconstruction is still needed. The TOF technique gives a remarkable improvement in image quality, especially signal-to-noise ratio.

Combination of PET with CT or MRI

PET scans are increasingly read alongside CT or MRI scans, with the combination (co-registration) giving both anatomic and metabolic information (i.e., what the structure is, and what it is doing biochemically). Because PET imaging is most useful in combination with anatomical imaging, such as CT, modern PET scanners are now available with integrated high-end multi-detector-row CT scanners (PET–CT). Because the two scans can be performed in immediate sequence during the same session, with the patient not changing position between the two types of scans, the two sets of images are more precisely registered, so that areas of abnormality on the PET imaging can be more perfectly correlated with anatomy on the CT images. This is very useful in showing detailed views of moving organs or structures with higher anatomical variation, which is more common outside the brain.

At the Jülich Institute of Neurosciences and Biophysics, the world's largest PET–MRI device began operation in April 2009: a 9.4-tesla magnetic resonance tomograph (MRT) combined with a PET scanner. Presently, only the head and brain can be imaged at these high magnetic field strengths.

For brain imaging, registration of CT, MRI and PET scans may be accomplished without the need for an integrated PET–CT or PET–MRI scanner by using a device known as the N-localizer.

Limitations

The minimization of radiation dose to the subject is an attractive feature of the use of short-lived radionuclides. Besides its established role as a diagnostic technique, PET has an expanding role as a method to assess the response to therapy, in particular, cancer therapy, where the risk to the patient from lack of knowledge about disease progress is much greater than the risk from the test radiation. Since the tracers are radioactive, the elderly and pregnant are unable to use it due to risks posed by radiation.

Limitations to the widespread use of PET arise from the high costs of cyclotrons needed to produce the short-lived radionuclides for PET scanning and the need for specially adapted on-site chemical synthesis apparatus to produce the radiopharmaceuticals after radioisotope preparation. Organic radiotracer molecules that will contain a positron-emitting radioisotope cannot be synthesized first and then the radioisotope prepared within them, because bombardment with a cyclotron to prepare the radioisotope destroys any organic carrier for it. Instead, the isotope must be prepared first, then the chemistry to prepare any organic radiotracer (such as FDG) accomplished very quickly, in the short time before the isotope decays. Few hospitals and universities are capable of maintaining such systems, and most clinical PET is supported by third-party suppliers of radiotracers that can supply many sites simultaneously. This limitation restricts clinical PET primarily to the use of tracers labelled with fluorine-18, which has a half-life of 110 minutes and can be transported a reasonable distance before use, or to rubidium-82 (used as rubidium-82 chloride) with a half-life of 1.27 minutes, which is created in a portable generator and is used for myocardial perfusion studies. In recent years a few on-site cyclotrons with integrated shielding and "hot labs" (automated chemistry labs that are able to work with radioisotopes) have begun to accompany PET units to remote hospitals. The presence of the small on-site cyclotron promises to expand in the future as the cyclotrons shrink in response to the high cost of isotope transportation to remote PET machines. In recent years the shortage of PET scans has been alleviated in the US, as rollout of radiopharmacies to supply radioisotopes has grown 30%/year.

Because the half-life of fluorine-18 is about two hours, the prepared dose of a radiopharmaceutical bearing this radionuclide will undergo multiple half-lives of decay during the working day. This necessitates frequent recalibration of the remaining dose (determination of activity per unit volume) and careful planning with respect to patient scheduling.
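The recalibration described above is a direct application of the exponential decay law. The sketch below uses the 110-minute half-life of fluorine-18 given in this article; the batch activity, volume and target dose are hypothetical numbers chosen only to make the arithmetic easy to follow.

```python
F18_HALF_LIFE_MIN = 110.0  # half-life of fluorine-18 as given in this article


def remaining_activity(initial_mbq, elapsed_min, half_life_min=F18_HALF_LIFE_MIN):
    """Activity left after `elapsed_min` minutes of radioactive decay."""
    return initial_mbq * 0.5 ** (elapsed_min / half_life_min)


def volume_to_draw_ml(target_mbq, initial_mbq, batch_volume_ml, elapsed_min,
                      half_life_min=F18_HALF_LIFE_MIN):
    """Volume containing `target_mbq` now, given the batch's calibration values."""
    concentration_now = (
        remaining_activity(initial_mbq, elapsed_min, half_life_min) / batch_volume_ml
    )
    return target_mbq / concentration_now


# Hypothetical batch: 4000 MBq in 10 mL at calibration, used 220 minutes later.
left = remaining_activity(4000.0, 220.0)               # two half-lives have passed
volume = volume_to_draw_ml(370.0, 4000.0, 10.0, 220.0)
```

After two half-lives only a quarter of the activity remains, so the volume needed for the same dose is four times what it was at calibration, which is why patient scheduling has to be planned around the delivery time.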

History

The concept of emission and transmission tomography was introduced by David E. Kuhl, Luke Chapman and Roy Edwards in the late 1950s. Their work would lead to the design and construction of several tomographic instruments at Washington University School of Medicine and later at the University of Pennsylvania. In the 1960s and 70s tomographic imaging instruments and techniques were further developed by Michel Ter-Pogossian, Michael E. Phelps, Edward J. Hoffman and others at Washington University School of Medicine.

Work by Gordon Brownell, Charles Burnham and their associates at the Massachusetts General Hospital beginning in the 1950s contributed significantly to the development of PET technology and included the first demonstration of annihilation radiation for medical imaging. Their innovations, including the use of light pipes and volumetric analysis, have been important in the deployment of PET imaging. In 1961, James Robertson and his associates at Brookhaven National Laboratory built the first single-plane PET scanner, nicknamed the "head-shrinker."

One of the factors most responsible for the acceptance of positron imaging was the development of radiopharmaceuticals. In particular, the development of labeled 2-fluorodeoxy-D-glucose (FDG, first synthesized and described by two Czech scientists from Charles University in Prague in 1968) by the Brookhaven group under the direction of Al Wolf and Joanna Fowler was a major factor in expanding the scope of PET imaging. The compound was first administered to two normal human volunteers by Abass Alavi in August 1976 at the University of Pennsylvania. Brain images obtained with an ordinary (non-PET) nuclear scanner demonstrated the concentration of FDG in that organ. Later, the substance was used in dedicated positron tomographic scanners, to yield the modern procedure.

The logical extension of positron instrumentation was a design using two 2-dimensional arrays. PC-I was the first instrument using this concept and was designed in 1968, completed in 1969 and reported in 1972. The first applications of PC-I in tomographic mode as distinguished from the computed tomographic mode were reported in 1970. It soon became clear to many of those involved in PET development that a circular or cylindrical array of detectors was the logical next step in PET instrumentation. Although many investigators took this approach, James Robertson and Zang-Hee Cho were the first to propose a ring system that has become the prototype of the current shape of PET. The first multislice cylindrical array PET scanner was completed in 1974 at the Mallinckrodt Institute of Radiology by the group led by Ter-Pogossian.

The PET–CT scanner, attributed to David Townsend and Ronald Nutt, was named by Time as the medical invention of the year in 2000.

Cost

As of August 2008, Cancer Care Ontario reports that the current average incremental cost to perform a PET scan in the province is CA$1,000–1,200 per scan. This includes the cost of the radiopharmaceutical and a stipend for the physician reading the scan.

In the United States, a PET scan is estimated to cost US$1,500–5,000.

In England, the National Health Service reference cost (2015–2016) for an adult outpatient PET scan is £798.

In Australia, as of July 2018, the Medicare Benefits Schedule Fee for whole body FDG PET ranges from A$953 to A$999, depending on the indication for the scan.